>- **🍨 本文为[🔗365天深度学习训练营](https://mp.weixin.qq.com/s/0dvHCaOoFnW8SCp3JpzKxg) 中的学习记录博客**

>- **🍖 原作者:[K同学啊](https://mtyjkh.blog.csdn.net/)**一、模型跑通

1.1环境配置

编译器:pycharm community

语言环境:Python 3.9.19

深度学习环境:TensorFlow 2.9.0

1.2模型搭建步骤

- GPU 设置:检查是否有 GPU 可用,并设置显存按需增长。

- 数据目录设置:设置数据目录,统计图片总数。

- 数据集参数设置:设置批次大小、图像高度和宽度。

- 加载数据集:使用

tf.keras.preprocessing.image_dataset_from_directory加载训练和验证数据集。 - 数据集缓存和预取:缓存和预取数据,以提高训练效率。

- 构建模型:定义神经网络模型,包括卷积层、池化层和全连接层。

- 编译模型:指定优化器、损失函数和评估指标。

- 训练模型:使用训练数据集训练模型,并使用验证数据集评估模型性能。

- 可视化:绘制训练和验证的准确率与损失曲线,帮助分析模型的表现。

1.3 完整代码

import os

import tensorflow as tf

import pathlib

import PIL.Image

import matplotlib.pyplot as plt

from tensorflow.keras import layers, models

# 设置环境变量以避免OpenMP错误

os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

# GPU配置

gpus = tf.config.list_physical_devices('GPU')

if gpus:

gpu0 = gpus[0]

tf.config.experimental.set_memory_growth(gpu0, True) # 设置显存按需增长

tf.config.set_visible_devices([gpu0], "GPU")

print("GPU available.")

else:

print("GPU cannot be found, using CPU instead.")

# 数据目录

data_dir = "D:/others/pycharm/pythonProject/T4"

data_dir = pathlib.Path(data_dir)

# 图片总数

image_count = len(list(data_dir.glob('*/*.jpg')))

print("图片总数为:", image_count)

# 显示“Monkeypox”类别中的一个示例图像

Monkeypox = list(data_dir.glob('Monkeypox/*.jpg'))

if Monkeypox:

PIL.Image.open(str(Monkeypox[0])).show()

else:

print("在 'Monkeypox' 目录中未找到图片。")

# 数据集参数

batch_size = 32

img_height = 224

img_width = 224

# 创建训练数据集

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

data_dir,

validation_split=0.2,

subset="training",

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

# 创建验证数据集

val_ds = tf.keras.preprocessing.image_dataset_from_directory(

data_dir,

validation_split=0.2,

subset="validation",

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

# 获取类别名称

class_names = train_ds.class_names

print(class_names)

# 显示样本图像

plt.figure(figsize=(20, 10))

for images, labels in train_ds.take(1):

for i in range(20):

ax = plt.subplot(5, 10, i + 1)

plt.imshow(images[i].numpy().astype("uint8"))

plt.title(class_names[labels[i]])

plt.axis("off")

plt.show()

# 打印训练数据集的形状

for image_batch, labels_batch in train_ds:

print(image_batch.shape)

print(labels_batch.shape)

break

# 数据集预处理

AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

num_classes = 2

# 定义模型

model = models.Sequential([

layers.experimental.preprocessing.Rescaling(1. / 255, input_shape=(img_height, img_width, 3)),

layers.Conv2D(16, (3, 3), activation='relu', input_shape=(img_height, img_width, 3)), # 卷积层1,卷积核3*3

layers.AveragePooling2D((2, 2)), # 池化层1,2*2采样

layers.Conv2D(32, (3, 3), activation='relu'), # 卷积层2,卷积核3*3

layers.AveragePooling2D((2, 2)), # 池化层2,2*2采样

layers.Dropout(0.3),

layers.Conv2D(64, (3, 3), activation='relu'), # 卷积层3,卷积核3*3

layers.Dropout(0.3),

layers.Flatten(), # Flatten层,连接卷积层与全连接层

layers.Dense(128, activation='relu'), # 全连接层,特征进一步提取

layers.Dense(num_classes) # 输出层,输出预期结果

])

model.summary() # 打印网络结构

# 设置优化器

opt = tf.keras.optimizers.Adam(learning_rate=1e-4)

model.compile(optimizer=opt,

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

from tensorflow.keras.callbacks import ModelCheckpoint

epochs = 50

checkpointer = ModelCheckpoint('best_model.h5',

monitor='val_accuracy',

verbose=1,

save_best_only=True,

save_weights_only=True)

history = model.fit(train_ds,

validation_data=val_ds,

epochs=epochs,

callbacks=[checkpointer])

# 绘制训练和验证的准确率和损失曲线

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(epochs)

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

输出全部内容:

D:\others\anaconda\envs\deep_learning_env\python.exe D:\others\pycharm\pythonProject\T4_Monkeypox_Identification.py

GPU cannot be found, using CPU instead.

图片总数为: 2142

Found 2142 files belonging to 2 classes.

Using 1714 files for training.

2024-06-16 16:13:40.484425: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2024-06-16 16:13:40.485345: I tensorflow/core/common_runtime/process_util.cc:146] Creating new thread pool with default inter op setting: 2. Tune using inter_op_parallelism_threads for best performance.

Found 2142 files belonging to 2 classes.

Using 428 files for validation.

['Monkeypox', 'Others']

(32, 224, 224, 3)

(32,)

Model: "sequential"

Layer (type) Output Shape Param #

rescaling (Rescaling) (None, 224, 224, 3) 0

conv2d (Conv2D) (None, 222, 222, 16) 448

average_pooling2d (AverageP (None, 111, 111, 16) 0

ooling2D)

conv2d_1 (Conv2D) (None, 109, 109, 32) 4640

average_pooling2d_1 (Averag (None, 54, 54, 32) 0

ePooling2D)

dropout (Dropout) (None, 54, 54, 32) 0

conv2d_2 (Conv2D) (None, 52, 52, 64) 18496

dropout_1 (Dropout) (None, 52, 52, 64) 0

flatten (Flatten) (None, 173056) 0

dense (Dense) (None, 128) 22151296

dense_1 (Dense) (None, 2) 258

=================================================================

Total params: 22,175,138

Trainable params: 22,175,138

Non-trainable params: 0

Epoch 1/50

54/54 [==============================] - ETA: 0s - loss: 0.7373 - accuracy: 0.5239

Epoch 1: val_accuracy improved from -inf to 0.53505, saving model to best_model.h5

54/54 [==============================] - 16s 267ms/step - loss: 0.7373 - accuracy: 0.5239 - val_loss: 0.6834 - val_accuracy: 0.5350

Epoch 2/50

54/54 [==============================] - ETA: 0s - loss: 0.6814 - accuracy: 0.5449

Epoch 2: val_accuracy did not improve from 0.53505

54/54 [==============================] - 14s 256ms/step - loss: 0.6814 - accuracy: 0.5449 - val_loss: 0.6805 - val_accuracy: 0.5350

Epoch 3/50

54/54 [==============================] - ETA: 0s - loss: 0.6764 - accuracy: 0.5484

Epoch 3: val_accuracy did not improve from 0.53505

54/54 [==============================] - 14s 264ms/step - loss: 0.6764 - accuracy: 0.5484 - val_loss: 0.6791 - val_accuracy: 0.5350

Epoch 4/50

54/54 [==============================] - ETA: 0s - loss: 0.6732 - accuracy: 0.5589

Epoch 4: val_accuracy improved from 0.53505 to 0.53738, saving model to best_model.h5

54/54 [==============================] - 14s 266ms/step - loss: 0.6732 - accuracy: 0.5589 - val_loss: 0.6724 - val_accuracy: 0.5374

Epoch 5/50

54/54 [==============================] - ETA: 0s - loss: 0.6662 - accuracy: 0.6009

Epoch 5: val_accuracy improved from 0.53738 to 0.56308, saving model to best_model.h5

54/54 [==============================] - 14s 266ms/step - loss: 0.6662 - accuracy: 0.6009 - val_loss: 0.6734 - val_accuracy: 0.5631

Epoch 6/50

54/54 [==============================] - ETA: 0s - loss: 0.6630 - accuracy: 0.6243

Epoch 6: val_accuracy improved from 0.56308 to 0.62850, saving model to best_model.h5

54/54 [==============================] - 14s 258ms/step - loss: 0.6630 - accuracy: 0.6243 - val_loss: 0.6562 - val_accuracy: 0.6285

Epoch 7/50

54/54 [==============================] - ETA: 0s - loss: 0.6540 - accuracy: 0.6295

Epoch 7: val_accuracy improved from 0.62850 to 0.65421, saving model to best_model.h5

54/54 [==============================] - 14s 263ms/step - loss: 0.6540 - accuracy: 0.6295 - val_loss: 0.6237 - val_accuracy: 0.6542

Epoch 8/50

54/54 [==============================] - ETA: 0s - loss: 0.6255 - accuracy: 0.6424

Epoch 8: val_accuracy did not improve from 0.65421

54/54 [==============================] - 14s 256ms/step - loss: 0.6255 - accuracy: 0.6424 - val_loss: 0.6196 - val_accuracy: 0.6425

Epoch 9/50

54/54 [==============================] - ETA: 0s - loss: 0.5943 - accuracy: 0.6873

Epoch 9: val_accuracy did not improve from 0.65421

54/54 [==============================] - 14s 258ms/step - loss: 0.5943 - accuracy: 0.6873 - val_loss: 0.6232 - val_accuracy: 0.6308

Epoch 10/50

54/54 [==============================] - ETA: 0s - loss: 0.5757 - accuracy: 0.7071

Epoch 10: val_accuracy improved from 0.65421 to 0.70327, saving model to best_model.h5

54/54 [==============================] - 14s 263ms/step - loss: 0.5757 - accuracy: 0.7071 - val_loss: 0.5815 - val_accuracy: 0.7033

Epoch 11/50

54/54 [==============================] - ETA: 0s - loss: 0.5461 - accuracy: 0.7334

Epoch 11: val_accuracy improved from 0.70327 to 0.72664, saving model to best_model.h5

54/54 [==============================] - 14s 255ms/step - loss: 0.5461 - accuracy: 0.7334 - val_loss: 0.5499 - val_accuracy: 0.7266

Epoch 12/50

54/54 [==============================] - ETA: 0s - loss: 0.5282 - accuracy: 0.7398

Epoch 12: val_accuracy improved from 0.72664 to 0.74533, saving model to best_model.h5

54/54 [==============================] - 14s 257ms/step - loss: 0.5282 - accuracy: 0.7398 - val_loss: 0.5327 - val_accuracy: 0.7453

Epoch 13/50

54/54 [==============================] - ETA: 0s - loss: 0.5179 - accuracy: 0.7526

Epoch 13: val_accuracy did not improve from 0.74533

54/54 [==============================] - 14s 258ms/step - loss: 0.5179 - accuracy: 0.7526 - val_loss: 0.5860 - val_accuracy: 0.6402

Epoch 14/50

54/54 [==============================] - ETA: 0s - loss: 0.4868 - accuracy: 0.7806

Epoch 14: val_accuracy improved from 0.74533 to 0.78037, saving model to best_model.h5

54/54 [==============================] - 14s 267ms/step - loss: 0.4868 - accuracy: 0.7806 - val_loss: 0.4927 - val_accuracy: 0.7804

Epoch 15/50

54/54 [==============================] - ETA: 0s - loss: 0.4567 - accuracy: 0.7841

Epoch 15: val_accuracy did not improve from 0.78037

54/54 [==============================] - 14s 257ms/step - loss: 0.4567 - accuracy: 0.7841 - val_loss: 0.4941 - val_accuracy: 0.7617

Epoch 16/50

54/54 [==============================] - ETA: 0s - loss: 0.4413 - accuracy: 0.7935

Epoch 16: val_accuracy did not improve from 0.78037

54/54 [==============================] - 14s 253ms/step - loss: 0.4413 - accuracy: 0.7935 - val_loss: 0.4773 - val_accuracy: 0.7687

Epoch 17/50

54/54 [==============================] - ETA: 0s - loss: 0.4218 - accuracy: 0.8162

Epoch 17: val_accuracy did not improve from 0.78037

54/54 [==============================] - 14s 253ms/step - loss: 0.4218 - accuracy: 0.8162 - val_loss: 0.4723 - val_accuracy: 0.7780

Epoch 18/50

54/54 [==============================] - ETA: 0s - loss: 0.4116 - accuracy: 0.8145

Epoch 18: val_accuracy improved from 0.78037 to 0.78505, saving model to best_model.h5

54/54 [==============================] - 14s 264ms/step - loss: 0.4116 - accuracy: 0.8145 - val_loss: 0.4518 - val_accuracy: 0.7850

Epoch 19/50

54/54 [==============================] - ETA: 0s - loss: 0.3887 - accuracy: 0.8186

Epoch 19: val_accuracy did not improve from 0.78505

54/54 [==============================] - 14s 259ms/step - loss: 0.3887 - accuracy: 0.8186 - val_loss: 0.4550 - val_accuracy: 0.7710

Epoch 20/50

54/54 [==============================] - ETA: 0s - loss: 0.3755 - accuracy: 0.8361

Epoch 20: val_accuracy improved from 0.78505 to 0.78972, saving model to best_model.h5

54/54 [==============================] - 14s 262ms/step - loss: 0.3755 - accuracy: 0.8361 - val_loss: 0.4299 - val_accuracy: 0.7897

Epoch 21/50

54/54 [==============================] - ETA: 0s - loss: 0.3684 - accuracy: 0.8291

Epoch 21: val_accuracy improved from 0.78972 to 0.81075, saving model to best_model.h5

54/54 [==============================] - 14s 258ms/step - loss: 0.3684 - accuracy: 0.8291 - val_loss: 0.4272 - val_accuracy: 0.8107

Epoch 22/50

54/54 [==============================] - ETA: 0s - loss: 0.3545 - accuracy: 0.8419

Epoch 22: val_accuracy improved from 0.81075 to 0.82710, saving model to best_model.h5

54/54 [==============================] - 14s 259ms/step - loss: 0.3545 - accuracy: 0.8419 - val_loss: 0.4261 - val_accuracy: 0.8271

Epoch 23/50

54/54 [==============================] - ETA: 0s - loss: 0.3380 - accuracy: 0.8582

Epoch 23: val_accuracy did not improve from 0.82710

54/54 [==============================] - 14s 260ms/step - loss: 0.3380 - accuracy: 0.8582 - val_loss: 0.4460 - val_accuracy: 0.7874

Epoch 24/50

54/54 [==============================] - ETA: 0s - loss: 0.3503 - accuracy: 0.8366

Epoch 24: val_accuracy improved from 0.82710 to 0.83645, saving model to best_model.h5

54/54 [==============================] - 14s 260ms/step - loss: 0.3503 - accuracy: 0.8366 - val_loss: 0.4269 - val_accuracy: 0.8364

Epoch 25/50

54/54 [==============================] - ETA: 0s - loss: 0.3369 - accuracy: 0.8530

Epoch 25: val_accuracy did not improve from 0.83645

54/54 [==============================] - 14s 258ms/step - loss: 0.3369 - accuracy: 0.8530 - val_loss: 0.4089 - val_accuracy: 0.8318

Epoch 26/50

54/54 [==============================] - ETA: 0s - loss: 0.3057 - accuracy: 0.8699

Epoch 26: val_accuracy did not improve from 0.83645

54/54 [==============================] - 14s 259ms/step - loss: 0.3057 - accuracy: 0.8699 - val_loss: 0.4184 - val_accuracy: 0.8131

Epoch 27/50

54/54 [==============================] - ETA: 0s - loss: 0.3140 - accuracy: 0.8728

Epoch 27: val_accuracy did not improve from 0.83645

54/54 [==============================] - 14s 256ms/step - loss: 0.3140 - accuracy: 0.8728 - val_loss: 0.4118 - val_accuracy: 0.8224

Epoch 28/50

54/54 [==============================] - ETA: 0s - loss: 0.2888 - accuracy: 0.8856

Epoch 28: val_accuracy did not improve from 0.83645

54/54 [==============================] - 14s 255ms/step - loss: 0.2888 - accuracy: 0.8856 - val_loss: 0.4663 - val_accuracy: 0.7897

Epoch 29/50

54/54 [==============================] - ETA: 0s - loss: 0.2876 - accuracy: 0.8775

Epoch 29: val_accuracy did not improve from 0.83645

54/54 [==============================] - 14s 255ms/step - loss: 0.2876 - accuracy: 0.8775 - val_loss: 0.4428 - val_accuracy: 0.8271

Epoch 30/50

54/54 [==============================] - ETA: 0s - loss: 0.2994 - accuracy: 0.8804

Epoch 30: val_accuracy did not improve from 0.83645

54/54 [==============================] - 14s 266ms/step - loss: 0.2994 - accuracy: 0.8804 - val_loss: 0.4233 - val_accuracy: 0.8341

Epoch 31/50

54/54 [==============================] - ETA: 0s - loss: 0.3112 - accuracy: 0.8693

Epoch 31: val_accuracy improved from 0.83645 to 0.84579, saving model to best_model.h5

54/54 [==============================] - 15s 269ms/step - loss: 0.3112 - accuracy: 0.8693 - val_loss: 0.4222 - val_accuracy: 0.8458

Epoch 32/50

54/54 [==============================] - ETA: 0s - loss: 0.2743 - accuracy: 0.9002

Epoch 32: val_accuracy did not improve from 0.84579

54/54 [==============================] - 14s 259ms/step - loss: 0.2743 - accuracy: 0.9002 - val_loss: 0.4125 - val_accuracy: 0.8411

Epoch 33/50

54/54 [==============================] - ETA: 0s - loss: 0.2685 - accuracy: 0.8950

Epoch 33: val_accuracy did not improve from 0.84579

54/54 [==============================] - 14s 258ms/step - loss: 0.2685 - accuracy: 0.8950 - val_loss: 0.4494 - val_accuracy: 0.8201

Epoch 34/50

54/54 [==============================] - ETA: 0s - loss: 0.2707 - accuracy: 0.8880

Epoch 34: val_accuracy did not improve from 0.84579

54/54 [==============================] - 14s 263ms/step - loss: 0.2707 - accuracy: 0.8880 - val_loss: 0.4340 - val_accuracy: 0.8388

Epoch 35/50

54/54 [==============================] - ETA: 0s - loss: 0.2513 - accuracy: 0.9032

Epoch 35: val_accuracy did not improve from 0.84579

54/54 [==============================] - 14s 255ms/step - loss: 0.2513 - accuracy: 0.9032 - val_loss: 0.4157 - val_accuracy: 0.8458

Epoch 36/50

54/54 [==============================] - ETA: 0s - loss: 0.2559 - accuracy: 0.8985

Epoch 36: val_accuracy improved from 0.84579 to 0.86449, saving model to best_model.h5

54/54 [==============================] - 14s 268ms/step - loss: 0.2559 - accuracy: 0.8985 - val_loss: 0.4156 - val_accuracy: 0.8645

Epoch 37/50

54/54 [==============================] - ETA: 0s - loss: 0.2540 - accuracy: 0.8979

Epoch 37: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 256ms/step - loss: 0.2540 - accuracy: 0.8979 - val_loss: 0.4351 - val_accuracy: 0.8528

Epoch 38/50

54/54 [==============================] - ETA: 0s - loss: 0.2494 - accuracy: 0.9002

Epoch 38: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 268ms/step - loss: 0.2494 - accuracy: 0.9002 - val_loss: 0.4853 - val_accuracy: 0.8014

Epoch 39/50

54/54 [==============================] - ETA: 0s - loss: 0.2409 - accuracy: 0.9102

Epoch 39: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 268ms/step - loss: 0.2409 - accuracy: 0.9102 - val_loss: 0.4283 - val_accuracy: 0.8481

Epoch 40/50

54/54 [==============================] - ETA: 0s - loss: 0.2248 - accuracy: 0.9195

Epoch 40: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 260ms/step - loss: 0.2248 - accuracy: 0.9195 - val_loss: 0.4533 - val_accuracy: 0.8458

Epoch 41/50

54/54 [==============================] - ETA: 0s - loss: 0.2210 - accuracy: 0.9259

Epoch 41: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 256ms/step - loss: 0.2210 - accuracy: 0.9259 - val_loss: 0.4639 - val_accuracy: 0.8458

Epoch 42/50

54/54 [==============================] - ETA: 0s - loss: 0.2172 - accuracy: 0.9288

Epoch 42: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 262ms/step - loss: 0.2172 - accuracy: 0.9288 - val_loss: 0.4669 - val_accuracy: 0.8411

Epoch 43/50

54/54 [==============================] - ETA: 0s - loss: 0.2090 - accuracy: 0.9277

Epoch 43: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 259ms/step - loss: 0.2090 - accuracy: 0.9277 - val_loss: 0.4404 - val_accuracy: 0.8505

Epoch 44/50

54/54 [==============================] - ETA: 0s - loss: 0.2328 - accuracy: 0.9236

Epoch 44: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 256ms/step - loss: 0.2328 - accuracy: 0.9236 - val_loss: 0.4917 - val_accuracy: 0.8458

Epoch 45/50

54/54 [==============================] - ETA: 0s - loss: 0.2134 - accuracy: 0.9172

Epoch 45: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 268ms/step - loss: 0.2134 - accuracy: 0.9172 - val_loss: 0.4667 - val_accuracy: 0.8551

Epoch 46/50

54/54 [==============================] - ETA: 0s - loss: 0.2053 - accuracy: 0.9352

Epoch 46: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 260ms/step - loss: 0.2053 - accuracy: 0.9352 - val_loss: 0.4301 - val_accuracy: 0.8575

Epoch 47/50

54/54 [==============================] - ETA: 0s - loss: 0.2031 - accuracy: 0.9306

Epoch 47: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 262ms/step - loss: 0.2031 - accuracy: 0.9306 - val_loss: 0.4869 - val_accuracy: 0.8248

Epoch 48/50

54/54 [==============================] - ETA: 0s - loss: 0.2021 - accuracy: 0.9259

Epoch 48: val_accuracy did not improve from 0.86449

54/54 [==============================] - 15s 270ms/step - loss: 0.2021 - accuracy: 0.9259 - val_loss: 0.4501 - val_accuracy: 0.8505

Epoch 49/50

54/54 [==============================] - ETA: 0s - loss: 0.2119 - accuracy: 0.9207

Epoch 49: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 255ms/step - loss: 0.2119 - accuracy: 0.9207 - val_loss: 0.4755 - val_accuracy: 0.8458

Epoch 50/50

54/54 [==============================] - ETA: 0s - loss: 0.1854 - accuracy: 0.9405

Epoch 50: val_accuracy did not improve from 0.86449

54/54 [==============================] - 14s 260ms/step - loss: 0.1854 - accuracy: 0.9405 - val_loss: 0.4722 - val_accuracy: 0.8435

进程已结束,退出代码为 0二、学习积累

本模型相比T3(天气识别)有很多的相同点,二者有着基本相同的框架

但是也有区别。

首先是对数据图像的预处理。T3使用的图像尺寸是180x180,图像尺寸较小,可以加快训练速度,对于天气分类,足够用了。T4使用的是224x224,图像尺寸较大,可以捕捉更多细节,需要高分辨率的图片来执行特征识别的任务。

T3模型结构:

- Rescaling 层:将输入图像从 [0, 255] 缩放到 [0, 1]。

- Conv2D 层:16 个过滤器(每个过滤器提取一张特征图,16个过滤器提取16个特征图),3x3 卷积核,ReLU 激活函数。

- AveragePooling2D 层:2x2 池化大小。

- Conv2D 层:32 个过滤器,3x3 卷积核,ReLU 激活函数。

- AveragePooling2D 层:2x2 池化大小。

- Conv2D 层:64 个过滤器,3x3 卷积核,ReLU 激活函数。

- Dropout 层:30% 的 dropout 率。

- Flatten 层:将输入展平。

- Dense 层:128 个神经元,ReLU 激活函数。

- Dense 层:输出层,神经元数量等于类别数。

T4模型结构:

- Rescaling 层:将输入图像从 [0, 255] 缩放到 [0, 1]。

- Conv2D 层:16 个过滤器(每个过滤器提取一张特征图,16个过滤器提取16个特征图),3x3 卷积核,ReLU 激活函数。

- AveragePooling2D 层:2x2 池化大小。

- Conv2D 层:32 个过滤器,3x3 卷积核,ReLU 激活函数。

- AveragePooling2D 层:2x2 池化大小。

- Dropout 层:30% 的 dropout 率。

- Conv2D 层:64 个过滤器,3x3 卷积核,ReLU 激活函数。

- Dropout 层:30% 的 dropout 率。

- Flatten 层:将输入展平。

- Dense 层:128 个神经元,ReLU 激活函数。

- Dense 层:输出层,神经元数量等于类别数。

学习笔记:

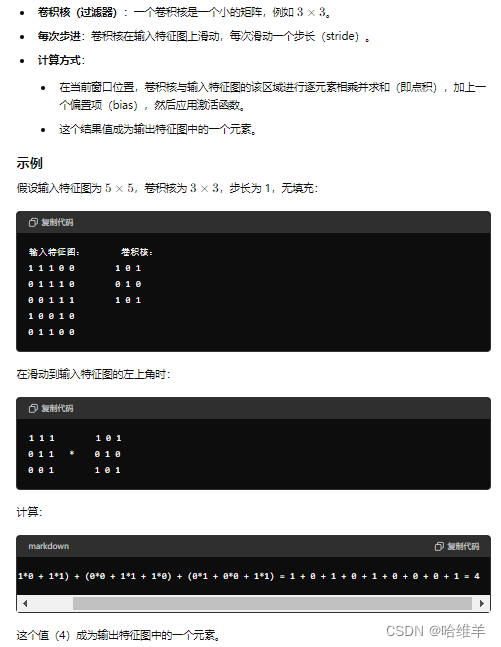

1.关于卷积层

在设计卷积神经网络时,我们不需要手动考虑每个过滤器应该学习哪些特征。相反,我们依赖于卷积神经网络通过训练自动学习到适合的特征表示。过滤器数量的选择依赖于经验法则和实际应用中的验证和调整,而具体特征的学习则是通过反向传播和梯度下降自动完成的。

通常,我们会使用16、32、64、128等常见的过滤器数量作为卷积层的初始设置。这些值是基于大量实际应用和研究中的经验总结得出的。

如果在训练过程中发现模型的性能不佳,可以通过调整过滤器数量、学习率、层数等参数进行优化。

卷积层包括两个主要部分:卷积运算和激活函数。严格来说,卷积运算是线性的,需要引入激活函数来进行非线性的运算。经过激活函数的张量维度和之前的卷积运算输出的张量维度是一致的。激活函数逐元素应用于特征图中的每一个值,因此不会改变特征图的尺寸或数量。

卷积计算

无论是卷积核还是池化窗口,在每次步进时都会从当前窗口覆盖的区域内提取出一个值,这个值会成为输出特征图中的一个元素。具体过程如下:

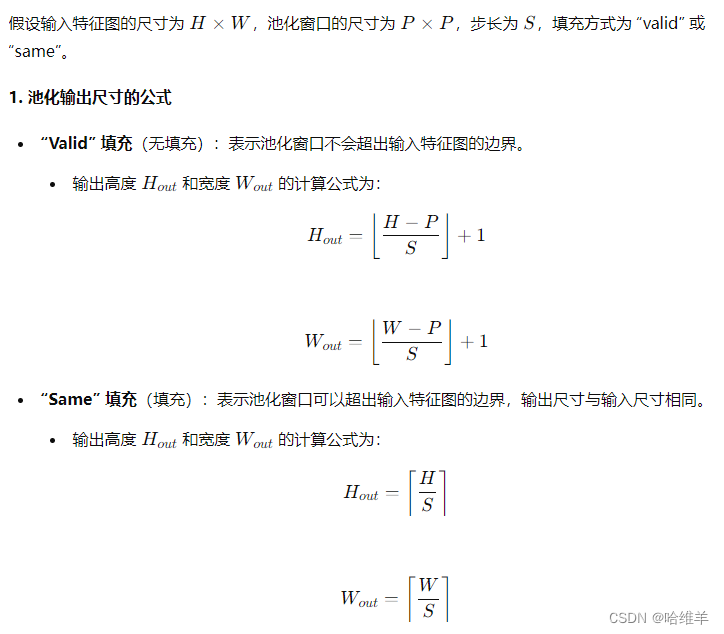

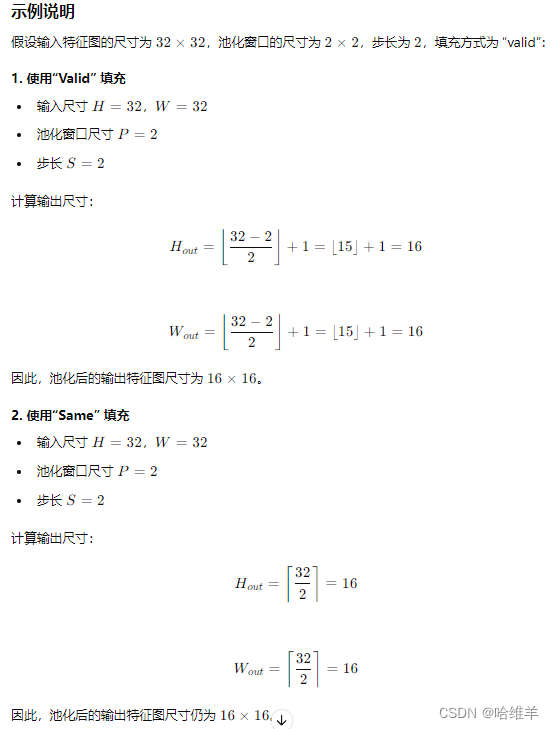

2.关于池化层

池化层的主要目的是通过降采样操作来减小特征图的尺寸,同时保留重要的特征。这样可以减少计算量,提高模型的训练速度,并减少过拟合。

池化层输出的尺寸与输入的尺寸以及池化窗口的尺寸之间有明确的关系。池化层的输出尺寸取决于输入特征图的尺寸、池化窗口的尺寸、步长(stride)和填充(padding)方式。

什么是same和valid填充?

"valid" 和 "same" 填充实际上是指卷积和池化操作中的填充策略(padding)。它们控制着如何在进行卷积或池化操作时处理输入特征图的边界。

1. Valid 填充(无填充)

- 定义:在卷积或池化操作中不添加任何额外的像素,这意味着卷积或池化窗口不会超出输入特征图的边界。

- 结果:输出特征图的尺寸通常小于输入特征图的尺寸。

2. Same 填充(填充)

- 定义:在输入特征图的边缘添加适量的零像素,以确保输出特征图的尺寸与输入特征图的尺寸相同。

- 结果:输出特征图的尺寸与输入特征图的尺寸相同。

平均池化和最大池化

1.最大池化(Max Pooling)

特点

- 提取显著特征:在每个池化窗口内选择最大值,保留局部区域内最显著的特征。

- 对噪声鲁棒:较为鲁棒于噪声,因为它选择的是最大值,而不是所有值的平均。

- 非线性引入:通过选择最大值,最大池化引入了一种非线性变化。

适用场景

- 边缘检测和物体识别:保留边缘、角点等显著特征,对于需要检测局部显著特征的任务非常有效。

- 自然图像处理:在处理包含大量边缘和细节的自然图像时,最大池化通常表现更好。

2.平均池化(Average Pooling)

特点

- 平滑特征图:在每个池化窗口内计算平均值,起到平滑特征图的作用。

- 信息保留:保留更多的整体信息,相比于最大池化,它不会只关注局部最大值。

- 减少过拟合:通过平滑操作,平均池化有助于减少过拟合。

适用场景

- 图像去噪和平滑:适用于需要平滑图像或去噪的任务,因为它可以有效地减少噪声的影响。

- 特征表示的平滑:在某些任务中,保留整体特征的平滑表示可能更有助于分类或回归。

3.逐层增加过滤器数量的原因

在cnn模型中,逐层增加卷积层的过滤器数量是一个很有效的策略,有助于逐步提取更复杂和更高级的特征。

- 低级特征:在网络的初始层,卷积核提取的是输入图像的低级特征,如边缘、角点等。这些特征相对简单,因此过滤器数量可以较少,如16个。

- 中级特征:随着网络的深入,卷积核需要提取的特征变得更加复杂,如纹理和局部模式。此时,增加过滤器数量(如32个)可以捕捉到更多类型的中级特征。

- 高级特征:在更深层次,卷积核提取的是更加抽象和高级的特征,如物体的一部分或整体形状。需要更多的过滤器(如64个)来捕捉这些复杂特征。

4.flatten层

flatten层将输入的多维特征图展平为一个一维向量,方便后续全连接层的处理(全连接层只接受一维向量的导入)。

5.全连接层

在功能上,卷积层的目的是为了提取特征,而全连接的目的分类。全连接层将特征提取得到的特征图非线性地映射成一维特征向量,该特征向量包含所有特征信息,可以转化为分类成各个类别的概率。

比如我画的这个草图,flatten层展开为576个数(576维),连接至128个神经元(激活函数为relu),最后输出4维向量(激活函数为softmax),表示4个类别的概率。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?