Installing SkyWalking with Binaries and Docker Compose

Deploy SkyWalking from binaries

Deploy Elasticsearch

# Adjust kernel parameters; otherwise Elasticsearch fails to start (it requires vm.max_map_count of at least 262144)

vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

vm.max_map_count=262144

sysctl -p

# Install

dpkg -i elasticsearch-8.5.1-amd64.deb

# Edit the configuration file

vim /etc/elasticsearch/elasticsearch.yml

root@ubuntu:/usr/local/src# grep -Ev '^($|#)' /etc/elasticsearch/elasticsearch.yml
cluster.name: es1
node.name: node1
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: 0.0.0.0
http.port: 9200
xpack.security.enabled: false
xpack.security.enrollment.enabled: false
xpack.security.http.ssl:
  enabled: false
  keystore.path: certs/http.p12
xpack.security.transport.ssl:
  enabled: false
  verification_mode: certificate
  keystore.path: certs/transport.p12
  truststore.path: certs/transport.p12
cluster.initial_master_nodes: ["node1"]
http.host: 0.0.0.0

Note: cluster.initial_master_nodes must contain the node.name value (node1 here). If it lists a name that matches no node, such as the hostname ubuntu added by the package, a fresh node cannot bootstrap the cluster.

# Restart

systemctl restart elasticsearch

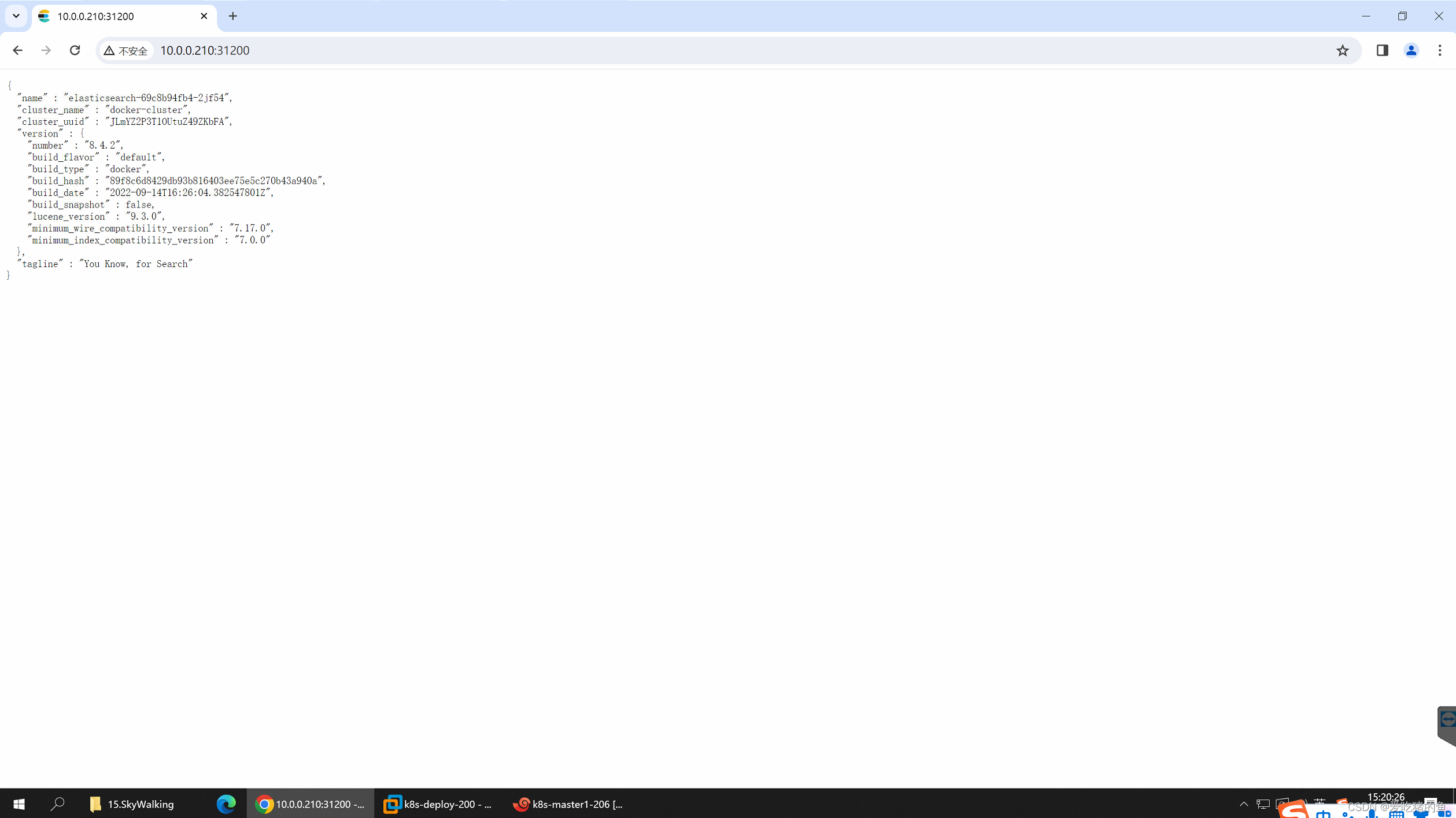

Web access:

Port 9200 is the HTTP port used by clients. Port 9300 is the transport port used for node-to-node cluster traffic.

10.0.0.160:9200
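You can also check the cluster state from a script before pointing SkyWalking at it. The minimal sketch below uses only the Python standard library. It assumes the `_cluster/health` API reports `status` as green, yellow or red. On a single node, yellow is expected once indices with replicas exist, so yellow counts as usable here. `is_cluster_usable` and `fetch_health` are hypothetical helper names.

```python
import json
import urllib.request

# A lone node usually reports "yellow": replica shards have no second node to live on,
# so both green and yellow are accepted for this single-node setup.
USABLE_STATUSES = {"green", "yellow"}


def is_cluster_usable(health: dict) -> bool:
    """Decide from a parsed /_cluster/health response whether the cluster can be used."""
    return health.get("status") in USABLE_STATUSES and not health.get("timed_out", False)


def fetch_health(base_url: str, timeout: float = 5.0) -> dict:
    """GET <base_url>/_cluster/health and return the parsed JSON body."""
    url = base_url.rstrip("/") + "/_cluster/health"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

Usage: `is_cluster_usable(fetch_health("http://10.0.0.160:9200"))`.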

Deploy SkyWalking

Download URL: https://archive.apache.org/dist/skywalking

# Install a Java runtime

apt install openjdk-11-jdk -y

# Download the SkyWalking binary package

mkdir /apps

cd /apps

wget https://archive.apache.org/dist/skywalking/9.3.0/apache-skywalking-apm-9.3.0.tar.gz

tar xvf apache-skywalking-apm-9.3.0.tar.gz

ln -sv /apps/apache-skywalking-apm-bin /apps/skywalking

# Edit the configuration file
vim /apps/skywalking/config/application.yml

storage:
  selector: ${SW_STORAGE:elasticsearch}  # use Elasticsearch as the storage backend
  elasticsearch:
    namespace: ${SW_NAMESPACE:""}
    clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:10.0.0.160:9200}  # Elasticsearch address
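In application.yml, `${NAME:default}` means: use the environment variable `NAME` if it is set, otherwise use the text after the colon. This is why the Docker Compose and Kubernetes deployments below can configure the OAP entirely through `SW_STORAGE` and `SW_STORAGE_ES_CLUSTER_NODES`. Below is a Python sketch of that substitution rule. It splits on the first colon so that defaults like `10.0.0.160:9200` stay intact. It approximates the behaviour and is not SkyWalking's own (Java) code; `resolve` is a hypothetical name.

```python
import os
import re
from typing import Mapping, Optional

# ${NAME} or ${NAME:default}; the default is everything after the FIRST colon,
# so a value such as "10.0.0.160:9200" survives intact as a default.
_PLACEHOLDER = re.compile(r"\$\{([^}:]+)(?::([^}]*))?\}")


def resolve(value: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Expand ${NAME:default} placeholders using env (defaults to os.environ)."""
    source = os.environ if env is None else env

    def replace(match):
        name, default = match.group(1), match.group(2)
        if name in source:
            return source[name]
        if default is not None:
            return default
        return match.group(0)  # no value and no default: leave the placeholder untouched

    return _PLACEHOLDER.sub(replace, value)
```

Note that `${SW_NAMESPACE:""}` yields the two characters `""`, which YAML then reads as an empty string.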

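# Create a systemd unit file
vim /etc/systemd/system/skywalking.service

[Unit]
Description=Apache Skywalking
After=network.target

[Service]
# startup.sh launches the OAP and the UI in the background and then exits, hence oneshot + RemainAfterExit
Type=oneshot
User=root
WorkingDirectory=/apps/skywalking/bin/
ExecStart=/bin/bash /apps/skywalking/bin/startup.sh
RemainAfterExit=yes
# RestartSec only takes effect together with a Restart= setting, which this unit does not use
RestartSec=5

[Install]
WantedBy=multi-user.target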

# Reload systemd, start SkyWalking, and enable it at boot

systemctl daemon-reload && systemctl restart skywalking && systemctl enable skywalking

Web UI access:

The frontend (UI) listens on port 8080.

10.0.0.161:8080

Deploy SkyWalking with Docker Compose

# Create the Elasticsearch data directory (the official image runs as UID/GID 1000)

mkdir -pv /data/elasticsearch/

chown -R 1000:1000 /data/elasticsearch

mkdir /data/skywalking-9.3-docker-composefile -pv

cd /data/skywalking-9.3-docker-composefile

vim docker-compose.yml

version: "3"
services:
  elasticsearch:
    image: elasticsearch:8.4.2
    container_name: elasticsearch
    ports:
      - "9200:9200"
    healthcheck:
      test: ["CMD-SHELL", "curl -sf http://localhost:9200/_cluster/health || exit 1"] # health probe; "exit 1" runs only when curl fails
      interval: 60s # time between probes
      timeout: 10s
      retries: 3
      start_period: 60s # grace period after startup; failures during it are not counted
    environment:
      discovery.type: single-node # single-node mode
      ingest.geoip.downloader.enabled: "false"
      bootstrap.memory_lock: "true"
      ES_JAVA_OPTS: "-Xms512m -Xmx512m"
      TZ: "Asia/Shanghai"
      xpack.security.enabled: "false" # security disabled for this single-node setup
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /data/elasticsearch:/usr/share/elasticsearch/data # persist data in the directory prepared above
  skywalking-oap:
    image: apache/skywalking-oap-server:9.3.0
    container_name: skywalking-oap
    depends_on:
      elasticsearch:
        condition: service_healthy
    links:
      - elasticsearch
    environment:
      SW_HEALTH_CHECKER: default
      SW_STORAGE: elasticsearch
      SW_STORAGE_ES_CLUSTER_NODES: elasticsearch:9200
      JAVA_OPTS: "-Xms2048m -Xmx2048m"
      TZ: Asia/Shanghai
      SW_TELEMETRY: prometheus
    healthcheck:
      test: ["CMD-SHELL", "/skywalking/bin/swctl ch"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 10s
    restart: on-failure
    ports:
      - "11800:11800"
      - "12800:12800"
  skywalking-ui:
    image: apache/skywalking-ui:9.3.0
    depends_on:
      skywalking-oap:
        condition: service_healthy
    links:
      - skywalking-oap
    ports:
      - "8080:8080"
    environment:
      SW_OAP_ADDRESS: http://skywalking-oap:12800
      SW_HEALTH_CHECKER: default
      TZ: Asia/Shanghai
    healthcheck:
      test: ["CMD-SHELL", "curl -sf http://localhost:8080 || exit 1"] # health probe; "exit 1" runs only when curl fails
      interval: 60s # time between probes
      timeout: 10s
      retries: 3
      start_period: 60s # grace period after startup; failures during it are not counted
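Each healthcheck above probes a URL at a fixed `interval` and gives up after `retries` failures. The same idea is useful outside Compose, for example in a script that must wait for the OAP before starting agents. The Python sketch below shows it. `http_ok` and `wait_until_healthy` are hypothetical helpers, and the sketch does not reproduce Docker's exact timing (`start_period` is not modelled).

```python
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 10.0) -> bool:
    """Rough equivalent of `curl -sf URL`: True on a 2xx/3xx response, False on errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except OSError:  # URLError/HTTPError (4xx, 5xx), refused connections and timeouts
        return False


def wait_until_healthy(check: Callable[[], bool], interval: float, retries: int,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Run `check` up to `retries` times, sleeping `interval` seconds between attempts."""
    for attempt in range(retries):
        if check():
            return True
        if attempt < retries - 1:
            sleep(interval)
    return False
```

Usage: `wait_until_healthy(lambda: http_ok("http://localhost:12800"), interval=30, retries=3)`. The `sleep` parameter exists so the loop can be exercised without real waiting.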

Test page:

Deploy SkyWalking in Kubernetes

Deploy Elasticsearch

# Prepare the Elasticsearch data directory on the NFS server (10.0.0.200)

mkdir -p /data/k8sdata/myserver/esdata

vim /etc/exports

/data/k8sdata/myserver/esdata *(rw,no_root_squash,insecure)

systemctl restart nfs-server.service

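# Confirm the UID that the official Elasticsearch image runs as (1000)

# On the NFS server, give that UID/GID ownership of the data directory

chown -R 1000:1000 /data/k8sdata/myserver/esdata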
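Elasticsearch fails at startup if it cannot write to its data directory. The `chown` above grants that access to UID/GID 1000, the account the official image runs as. The sketch below is meant to run on the NFS server and lists anything under the export that is not owned by the expected IDs. `owned_by` and `wrong_owners` are hypothetical helpers.

```python
import os
import stat
from typing import List


def owned_by(path: str, uid: int, gid: int) -> bool:
    """True if `path` is a directory owned by uid:gid."""
    st = os.stat(path)
    return stat.S_ISDIR(st.st_mode) and st.st_uid == uid and st.st_gid == gid


def wrong_owners(root: str, uid: int, gid: int) -> List[str]:
    """Paths under `root` (root included) not owned by uid:gid; symlinks are not followed."""
    bad = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for path in [dirpath] + [os.path.join(dirpath, f) for f in filenames]:
            st = os.lstat(path)
            if (st.st_uid, st.st_gid) != (uid, gid):
                bad.append(path)
    return bad
```

Usage: `wrong_owners("/data/k8sdata/myserver/esdata", 1000, 1000)` should return an empty list after the `chown -R`.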

# Deploy Elasticsearch: create the 1.es.yaml manifest

vim 1.es.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: db
    nodePort: 31200
    port: 9200
    protocol: TCP
    targetPort: 9200
  - name: transport
    nodePort: 31300
    port: 9300
    targetPort: 9300
    protocol: TCP
  selector:
    app: elasticsearch
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/elasticsearch:8.4.2
        env:
        - name: discovery.type
          value: single-node
        - name: xpack.security.enabled
          value: "false"
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        resources:
          limits:
            cpu: 500m
            memory: 1Gi
          requests:
            cpu: 500m
            memory: 1Gi
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: es-storage-volume
      volumes:
      - name: es-storage-volume
        nfs:
          server: 10.0.0.200
          path: /data/k8sdata/myserver/esdata
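This deployment uses NodePorts 31200 and 31300, and the OAP and UI below use 31088, 31089 and 31808. Every NodePort must fall in the API server's NodePort range, which defaults to 30000-32767. Each one can also be used by only one Service in the cluster. The sketch below is a quick pre-flight check; the port-name keys in the usage are made up for illustration, and `check_node_ports` is a hypothetical helper.

```python
from typing import Dict, List

# Default Kubernetes NodePort range; a cluster can change it with the
# kube-apiserver flag --service-node-port-range.
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def check_node_ports(ports: Dict[str, int]) -> List[str]:
    """Report nodePorts outside the range or used twice (they are unique cluster-wide)."""
    problems = []
    owner_by_port: Dict[int, str] = {}
    for name, node_port in ports.items():
        if not NODE_PORT_MIN <= node_port <= NODE_PORT_MAX:
            problems.append(f"{name}: {node_port} is outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
        if node_port in owner_by_port:
            problems.append(f"{name}: {node_port} is already used by {owner_by_port[node_port]}")
        else:
            owner_by_port[node_port] = name
    return problems
```

Usage: `check_node_ports({"es-db": 31200, "es-transport": 31300, "oap-http": 31088, "oap-grpc": 31089, "ui-page": 31808})` returns an empty list.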

# Deploy

kubectl apply -f 1.es.yaml

# Check the pod

kubectl get pod -n myserver

NAME READY STATUS RESTARTS AGE

elasticsearch-69c8b94fb4-2jf54 1/1 Running 0 4m54s

Deploy skywalking-oap

# Create the YAML manifest

vim 2.skywalking-oap.yaml

apiVersion: v1
kind: Service
metadata:
  name: skywalking-oap
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: db
    nodePort: 31088
    port: 12800  # HTTP: UI queries and HTTP-based reporting
  - name: transport
    nodePort: 31089
    port: 11800  # gRPC: agent reporting
  selector:
    app: skywalking-oap
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: skywalking-oap
  name: skywalking-oap
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: skywalking-oap
  template:
    metadata:
      labels:
        app: skywalking-oap
    spec:
      containers:
      - name: skywalking-oap
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/skywalking-oap-server:9.3.0
        imagePullPolicy: IfNotPresent
        env:
        - name: SW_STORAGE
          value: elasticsearch
        - name: SW_STORAGE_ES_CLUSTER_NODES
          value: elasticsearch.myserver:9200
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 11800
          name: grpc
        - containerPort: 12800
          name: http
        resources:
          limits:
            cpu: 1
            memory: 2Gi
          requests:
            cpu: 1
            memory: 2Gi
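`SW_STORAGE_ES_CLUSTER_NODES: elasticsearch.myserver:9200` here, and the UI's `http://skywalking-oap.myserver:12800` below, both rely on Kubernetes Service DNS. From any pod, `<service>.<namespace>` resolves through the DNS search path. The fully qualified form is `<service>.<namespace>.svc.<cluster domain>`. The helper below builds these addresses, assuming the default cluster domain `cluster.local` (a cluster can be configured differently). `service_dns` is a hypothetical name.

```python
def service_dns(service: str, namespace: str, port: int,
                fqdn: bool = False, cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster host:port of a Service.

    The short form <service>.<namespace> works from pods via the DNS search path;
    the fully qualified form also works from pods in any namespace.
    """
    host = f"{service}.{namespace}"
    if fqdn:
        host += f".svc.{cluster_domain}"
    return f"{host}:{port}"
```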

# Deploy

kubectl apply -f 2.skywalking-oap.yaml

# Check the pods

kubectl get pod -n myserver

NAME READY STATUS RESTARTS AGE

elasticsearch-69c8b94fb4-2jf54 1/1 Running 0 27m

kafka-consumer 0/1 Unknown 0 11d

skywalking-oap-57f5cb8854-plb9n 1/1 Running 0 5m37s

strimzi-cluster-operator-95d88f6b5-kd5jb 1/1 Running 3 (101m ago) 11d

Deploy skywalking-ui

# Create the YAML manifest

vim 3.skywalking-ui.yaml

apiVersion: v1
kind: Service
metadata:
  name: skywalking-ui
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: page
    nodePort: 31808
    port: 8080
  selector:
    app: skywalking-ui
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: skywalking-ui
  name: skywalking-ui
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: skywalking-ui
  template:
    metadata:
      labels:
        app: skywalking-ui
    spec:
      containers:
      - name: skywalking-ui
        image: apache/skywalking-ui:9.3.0
        env:
        - name: SW_OAP_ADDRESS
          value: http://skywalking-oap.myserver:12800
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 8080
          name: page

# Deploy

kubectl apply -f 3.skywalking-ui.yaml

# Check the pods

kubectl get pod -n myserver

NAME READY STATUS RESTARTS AGE

elasticsearch-69c8b94fb4-2jf54 1/1 Running 0 38m

kafka-consumer 0/1 Unknown 0 11d

skywalking-oap-57f5cb8854-plb9n 1/1 Running 0 16m

skywalking-ui-b9c46dc69-kvkn2 1/1 Running 0 2m49s

strimzi-cluster-operator-95d88f6b5-kd5jb 1/1 Running 3 (112m ago) 11d

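Verify the skywalking-ui web interface

Request tracing for monolithic services: the Halo blog and Jenkins

SkyWalking Java example: tracing a blog

Deploy the SkyWalking Java agent

A JDK is required; JDK 8 and JDK 11 are the most common versions.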

mkdir /data && cd /data

tar xvf apache-skywalking-java-agent-8.13.0.tgz

# Edit the configuration file
vim /data/skywalking-agent/config/agent.config

# Service name
agent.service_name=${SW_AGENT_NAME:myserver-halo}
# The agent namespace (project name)
agent.namespace=${SW_AGENT_NAMESPACE:myserver}
# Backend service addresses: the SkyWalking OAP gRPC address
collector.backend_service=${SW_AGENT_COLLECTOR_BACKEND_SERVICES:10.0.0.160:11800}

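Deploy the Java service: the Halo blog

Halo is a modern, self-hosted personal blogging system written in Java. Starting with version 1.4.3 it requires Java 11 or later; versions before 1.4.3 require Java 1.8 or later.

Requirements: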

https://docs.halo.run/getting-started/prepare

apt install openjdk-11-jdk

# Create the service directory

mkdir /apps && cd /apps/

# Download the application JAR

wget https://dl.halo.run/release/halo-1.6.1.jar

Start the Java service with the SkyWalking Java agent

java -javaagent:/data/skywalking-agent/skywalking-agent.jar -jar /apps/halo-1.6.1.jar

Typical production launch:

java -javaagent:/data/skywalking-agent/skywalking-agent.jar \
  -DSW_AGENT_NAMESPACE=xyz \
  -DSW_AGENT_NAME=abc-application \
  -Dskywalking.collector.backend_service=skywalking.abc.xyz.com:11800 \
  -jar abc-xyz-1.0-SNAPSHOT.jar

# -D passes settings on the command line; they take precedence over agent.config. skywalking.abc.xyz.com is the SkyWalking OAP server address
# SW_AGENT_NAMESPACE: project name
# SW_AGENT_NAME: service name
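The launch line above is easy to get wrong. JVM options (`-javaagent`, `-D...`) only reach the JVM when they come before `-jar`; anything after the JAR path is passed to the application as program arguments. The minimal sketch below assembles the argument list in that order. It assumes the agent resolves the `${SW_AGENT_NAME}`/`${SW_AGENT_NAMESPACE}` placeholders from `-D` system properties, which is what the example above relies on. `agent_command` is a hypothetical helper, not part of SkyWalking.

```python
from typing import List, Optional


def agent_command(jar: str, agent_jar: str, service_name: str,
                  namespace: Optional[str] = None, backend: Optional[str] = None,
                  extra_jvm_args: Optional[List[str]] = None) -> List[str]:
    """Build `java -javaagent:... -D... -jar app.jar` with every JVM option before -jar."""
    cmd = ["java", f"-javaagent:{agent_jar}", f"-DSW_AGENT_NAME={service_name}"]
    if namespace:
        cmd.append(f"-DSW_AGENT_NAMESPACE={namespace}")
    if backend:
        cmd.append(f"-Dskywalking.collector.backend_service={backend}")
    cmd.extend(extra_jvm_args or [])
    cmd.extend(["-jar", jar])  # must come last: later arguments would go to the application
    return cmd
```

`subprocess.run(cmd)` would start the service, and `shlex.join(cmd)` (Python 3.8+) prints a copy-pasteable shell line.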

Log in to the blog

http://10.0.0.167:8090

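Check the SkyWalking UI

Java service example: tracing Jenkins running on Tomcat

Prepare the base environment:

https://skywalking.apache.org/docs/skywalking-java/latest/en/setup/service-agent/java-agent/readme/

Install the JDK

Pay attention to the JDK version: both Tomcat 8.5 and the Jenkins release being deployed must support it.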

apt install openjdk-8-jdk -y

Deploy the SkyWalking Java agent

mkdir /data && cd /data

tar xvf apache-skywalking-java-agent-8.13.0.tgz

vim skywalking-agent/config/agent.config

# The group name is optional only.

agent.service_name=${SW_AGENT_NAME:myserver-jenkins}

# The agent namespace

agent.namespace=${SW_AGENT_NAMESPACE:myserver}

# Backend service addresses.

collector.backend_service=${SW_AGENT_COLLECTOR_BACKEND_SERVICES:10.0.0.161:11800}

Deploy Tomcat

https://tomcat.apache.org/

root@ubuntu:/apps# pwd

/apps

root@ubuntu:/apps# wget https://archive.apache.org/dist/tomcat/tomcat-8/v8.5.84/bin/apache-tomcat-8.5.84.tar.gz

tar xvf apache-tomcat-8.5.84.tar.gz

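# Edit the Tomcat startup script

vim /apps/apache-tomcat-8.5.84/bin/catalina.sh

# Add this line near the top of catalina.sh (putting it in bin/setenv.sh, which catalina.sh sources, also works)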

CATALINA_OPTS="$CATALINA_OPTS -javaagent:/data/skywalking-agent/skywalking-agent.jar"; export CATALINA_OPTS

Upload the Jenkins WAR and start Tomcat

# Upload jenkins.war into this directory

cd /apps/apache-tomcat-8.5.84/webapps

root@ubuntu:/apps/apache-tomcat-8.5.84/webapps# ll

total 89068

drwxr-x--- 2 root root 4096 Nov 3 06:43 ./

drwxr-xr-x 9 root root 4096 Nov 3 06:35 ../

-rw-r--r-- 1 root root 91196986 Nov 1 05:30 jenkins.war

# Start Tomcat in the foreground

/apps/apache-tomcat-8.5.84/bin/catalina.sh run

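Verify

Open the Jenkins page

http://10.0.0.166:8080/jenkins

SkyWalking web UI

Tracing Dubbo microservices with SkyWalking on virtual machines

Deploy the ZooKeeper registry

Servers: 10.0.0.162, 10.0.0.163, 10.0.0.164

# Install a JDK on every server

apt install openjdk-8-jdk -y

# Create the install directory on every server

mkdir /apps && cd /apps

# Create the ZooKeeper data directory on every server (it must match dataDir in zoo.cfg)

mkdir -p /data/zookeeper

# Download the ZooKeeper binary package on every server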

wget https://archive.apache.org/dist/zookeeper/zookeeper-3.7.1/apache-zookeeper-3.7.1-bin.tar.gz

tar xvf apache-zookeeper-3.7.1-bin.tar.gz

ln -sv /apps/apache-zookeeper-3.7.1-bin /apps/zookeeper

# Edit the ZooKeeper configuration; it is identical on all three servers

cp /apps/zookeeper/conf/zoo_sample.cfg /apps/zookeeper/conf/zoo.cfg

vim /apps/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper

clientPort=2181

server.1=10.0.0.162:2888:3888

server.2=10.0.0.163:2888:3888

server.3=10.0.0.164:2888:3888

# Create the myid file; each server's ID must match its server.N line in zoo.cfg (server.1=10.0.0.162, server.2=10.0.0.163, server.3=10.0.0.164)

# On 10.0.0.162:

echo 1 > /data/zookeeper/myid

# On 10.0.0.163:

echo 2 > /data/zookeeper/myid

# On 10.0.0.164:

echo 3 > /data/zookeeper/myid
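Both the `myid` values and the timing limits follow from zoo.cfg. Each host's `myid` is the `N` of its `server.N` line. `initLimit` and `syncLimit` are counted in ticks of `tickTime` milliseconds. The sketch below derives both from the configuration above, assuming every `server.N` entry is a voting member (no observers). `parse_zoo_cfg`, `myid_by_host`, `timing_ms` and `quorum_size` are hypothetical helpers.

```python
from typing import Dict


def parse_zoo_cfg(text: str) -> Dict[str, str]:
    """Parse key=value lines, skipping blank lines and # comments."""
    cfg = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        cfg[key.strip()] = value.strip()
    return cfg


def myid_by_host(cfg: Dict[str, str]) -> Dict[str, int]:
    """Map each server.N host to N, the value its /data/zookeeper/myid must contain."""
    return {value.split(":", 1)[0]: int(key.split(".", 1)[1])
            for key, value in cfg.items() if key.startswith("server.")}


def timing_ms(cfg: Dict[str, str]) -> Dict[str, int]:
    """initLimit/syncLimit are in ticks; convert them to milliseconds."""
    tick = int(cfg["tickTime"])
    return {"initLimit_ms": int(cfg["initLimit"]) * tick,
            "syncLimit_ms": int(cfg["syncLimit"]) * tick}


def quorum_size(cfg: Dict[str, str]) -> int:
    """Majority of voting servers required before the ensemble serves clients."""
    voters = sum(1 for key in cfg if key.startswith("server."))
    return voters // 2 + 1
```

With the zoo.cfg above, this gives 20000 ms for initLimit, 10000 ms for syncLimit, and a quorum of 2 out of 3 servers.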

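# Start ZooKeeper on every server, close together. tickTime=2000 is in milliseconds (2 s), so initLimit=10 gives a follower 10 x 2 s = 20 s to connect to and sync with the leader. The ensemble serves clients only once a majority (2 of 3) is running.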

/apps/zookeeper/bin/zkServer.sh start

# Check the ZooKeeper status on each server

root@ubuntu:/apps# /apps/zookeeper/bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

root@ubuntu:/apps# /apps/zookeeper/bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

root@ubuntu:/apps# /apps/zookeeper/bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

Deploy the provider

10.0.0.160

apt install openjdk-8-jdk -y

# Deploy the SkyWalking Java agent

mkdir /data && cd /data

tar xvf apache-skywalking-java-agent-8.13.0.tgz

vim /data/skywalking-agent/config/agent.config

# The group name is optional only.

agent.service_name=${SW_AGENT_NAME:dubbo-server1}

# The agent namespace

agent.namespace=${SW_AGENT_NAMESPACE:myserver}

# Backend service addresses.

collector.backend_service=${SW_AGENT_COLLECTOR_BACKEND_SERVICES:10.0.0.161:11800}

# Add an environment variable: the Dubbo code reads the ZooKeeper address from the variable ZK_SERVER1

vim /etc/profile

export ZK_SERVER1=10.0.0.162

source /etc/profile

# Create the provider service directory

mkdir -pv /apps/dubbo/provider

# Upload the Dubbo provider JAR to this directory

cd /apps/dubbo/provider

# Start

java -javaagent:/data/skywalking-agent/skywalking-agent.jar -jar /apps/dubbo/provider/dubbo-server.jar

Check the SkyWalking UI

Deploy the consumer

10.0.0.166

apt install openjdk-8-jdk -y

# Deploy the SkyWalking Java agent

mkdir /data && cd /data

tar xvf apache-skywalking-java-agent-8.13.0.tgz

vim /data/skywalking-agent/config/agent.config

# The group name is optional only.

agent.service_name=${SW_AGENT_NAME:dubbo-consumer1}

# The agent namespace

agent.namespace=${SW_AGENT_NAMESPACE:myserver}

# Backend service addresses.

collector.backend_service=${SW_AGENT_COLLECTOR_BACKEND_SERVICES:10.0.0.161:11800}

# Add an environment variable: the Dubbo code reads the ZooKeeper address from the variable ZK_SERVER1

vim /etc/profile

export ZK_SERVER1=10.0.0.162

source /etc/profile

# Create the consumer service directory

mkdir -pv /apps/dubbo/consumer

# Upload the Dubbo consumer JAR to this directory

cd /apps/dubbo/consumer

# Start

java -javaagent:/data/skywalking-agent/skywalking-agent.jar -jar /apps/dubbo/consumer/dubbo-client.jar

Access the consumer:

http://10.0.0.166:8080/hello?name=wang (passes a query parameter)
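One request produces one trace. To populate the topology map and the metrics, it helps to send a batch of requests through the consumer. Below is a minimal traffic-generator sketch that uses only the standard library and the consumer address above. `hello_url` and `generate_traffic` are hypothetical helpers.

```python
import time
import urllib.parse
import urllib.request
from typing import Iterable, List


def hello_url(base: str, name: str) -> str:
    """Build the consumer URL, percent-encoding the query parameter."""
    return f"{base.rstrip('/')}/hello?{urllib.parse.urlencode({'name': name})}"


def generate_traffic(base: str, names: Iterable[str], pause: float = 0.5) -> List[int]:
    """Send one request per name (consumer -> provider) and return the HTTP status codes."""
    statuses = []
    for name in names:
        with urllib.request.urlopen(hello_url(base, name), timeout=5) as resp:
            statuses.append(resp.status)
        time.sleep(pause)
    return statuses
```

Usage: `generate_traffic("http://10.0.0.166:8080", [f"user{i}" for i in range(20)])`.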

Verify the data in SkyWalking

SkyWalking alerting via DingTalk

Configure DingTalk alerts in Kubernetes

# Create a ConfigMap that holds the alarm-settings.yml alert rules
cat skywalking-cfg.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: skywalking-cfg
  namespace: myserver
data:
  alarm-settings: |
    rules:
      service_cpm_rule:
        # no include-names is set, so this rule checks every service
        metrics-name: service_cpm
        op: ">"
        threshold: 1
        period: 1
        count: 1
        silence-period: 0
        message: myserver-openresty service_cpm is greater than 1
    dingtalkHooks:
      textTemplate: |-
        {
          "msgtype": "text",
          "text": {
            "content": "Apache SkyWalking alertname : \n %s."
          }
        }
      webhooks:
        - url: https://oapi.dingtalk.com/robot/send?access_token=652aa58c664ff935e75b4f7f1b61d9d96e98da813d040424b2d027277946b518

# Create it
kubectl apply -f skywalking-cfg.yaml
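The rule above fires when `service_cpm` (calls per minute) exceeds 1. According to the SkyWalking alarm documentation, `period` is the window in minutes that is checked. An alarm is sent once the number of threshold breaches inside that window reaches `count`, and `silence-period` then suppresses repeats. The sketch below models only the window/count check and the way the `%s` in `textTemplate` is filled before the JSON is posted to the DingTalk webhook. It is a simplified illustration, not the OAP's implementation. `should_alarm` and `dingtalk_payload` are hypothetical names, and JSON-escaping the message is this sketch's own choice.

```python
import json
import operator
from typing import List

OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le, "==": operator.eq}

# Same template as in the ConfigMap; \n is a JSON escape inside the string.
TEXT_TEMPLATE = r'''{
  "msgtype": "text",
  "text": {
    "content": "Apache SkyWalking alertname : \n %s."
  }
}'''


def should_alarm(per_minute: List[float], op: str, threshold: float, period: int, count: int) -> bool:
    """Look at the last `period` one-minute values; fire if at least `count` breach the threshold."""
    window = per_minute[-period:] if period > 0 else []
    breaches = sum(1 for value in window if OPS[op](value, threshold))
    return breaches >= count


def dingtalk_payload(text_template: str, message: str) -> dict:
    """Fill %s with the JSON-escaped message and parse the body sent to the robot."""
    escaped = json.dumps(message)[1:-1]  # strip the surrounding quotes, keep the escapes
    return json.loads(text_template % escaped)
```

Silence handling is left out. With `period: 1` and `count: 1`, a single minute above the threshold is enough to trigger the rule.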

cat 1.es.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: db
    nodePort: 31200
    port: 9200
    protocol: TCP
    targetPort: 9200
  - name: transport
    nodePort: 31300
    port: 9300
    targetPort: 9300
    protocol: TCP
  selector:
    app: elasticsearch
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/elasticsearch:8.4.2
        env:
        - name: discovery.type
          value: single-node
        - name: xpack.security.enabled
          value: "false"
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        resources:
          limits:
            cpu: 500m
            memory: 1Gi
          requests:
            cpu: 500m
            memory: 1Gi
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: es-storage-volume
      volumes:
      - name: es-storage-volume
        nfs:
          server: 10.0.0.200
          path: /data/k8sdata/myserver/esdata

cat 2.skywalking-oap.yaml

apiVersion: v1
kind: Service
metadata:
  name: skywalking-oap
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: db
    nodePort: 31088
    port: 12800
  - name: transport
    nodePort: 31089
    port: 11800
  selector:
    app: skywalking-oap
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: skywalking-oap
  name: skywalking-oap
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: skywalking-oap
  template:
    metadata:
      labels:
        app: skywalking-oap
    spec:
      containers:
      - name: skywalking-oap
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/skywalking-oap-server:9.3.0
        imagePullPolicy: IfNotPresent
        env:
        - name: SW_STORAGE
          value: elasticsearch
        - name: SW_STORAGE_ES_CLUSTER_NODES
          value: elasticsearch.myserver:9200
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 11800
          name: grpc
        - containerPort: 12800
          name: http
        volumeMounts:
        # subPath mounts are not refreshed when the ConfigMap changes; restart the pod after editing the rules
        - name: skywalking-cfg-vl
          mountPath: /skywalking/config/alarm-settings.yml
          subPath: alarm-settings.yml
        resources:
          limits:
            cpu: 1
            memory: 2Gi
          requests:
            cpu: 1
            memory: 2Gi
      volumes:
      - name: skywalking-cfg-vl
        configMap:
          name: skywalking-cfg
          items:
          - key: alarm-settings
            path: alarm-settings.yml

cat 3.skywalking-ui.yaml

apiVersion: v1
kind: Service
metadata:
  name: skywalking-ui
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: page
    nodePort: 31808
    port: 8080
  selector:
    app: skywalking-ui
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: skywalking-ui
  name: skywalking-ui
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: skywalking-ui
  template:
    metadata:
      labels:
        app: skywalking-ui
    spec:
      containers:
      - name: skywalking-ui
        image: apache/skywalking-ui:9.3.0
        env:
        - name: SW_OAP_ADDRESS
          value: http://skywalking-oap.myserver:12800
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 8080
          name: page

Test in the web UI

Request tracing for a Python Django project

Django project (Ubuntu 20.04.x / Python 3.8)

Install the SkyWalking Python agent

apt install python3-pip

pip3 install "apache-skywalking"

# Test registering with SkyWalking (the keyword names below are those of the 1.x agent; 0.x releases used collector_address and service_name)
root@ubuntu:~# python3
Python 3.10.12 (main, Jun 11 2023, 05:26:28) [GCC 11.4.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> from skywalking import agent, config
>>> config.init(agent_collector_backend_services='10.0.0.161:11800', agent_name='python-app')
>>> agent.start()

# Upload the Django project

mkdir /apps

cd /apps/

tar xvf django-test.tgz

cd django-test/

# Install dependencies

pip3 install -r requirements.txt

# Create the Django project mysite

django-admin startproject mysite

# Create an app

cd mysite

python3 manage.py startapp myapp

# Initialize the database

python3 manage.py makemigrations

python3 manage.py migrate

# Create an admin user for logging in to the web admin console

python3 manage.py createsuperuser

# SkyWalking environment variables:

export SW_AGENT_NAME='python-app1'

export SW_AGENT_NAMESPACE='python-project'

export SW_AGENT_COLLECTOR_BACKEND_SERVICES='10.0.0.161:11800'
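`sw-python` reads these `SW_AGENT_*` variables itself. If the agent is started from code instead, the same values go to `config.init` under the agent's option names. The mapping below assumes the 1.x naming (option `agent_name` corresponds to variable `SW_AGENT_NAME`, and so on) and fails fast if a variable is missing. `agent_options` and `start_agent` are hypothetical helpers. `skywalking` is imported lazily, so the mapping can be checked without the package installed.

```python
import os
from typing import Dict, Mapping, Optional

# Assumed correspondence between the exported variables and 1.x config.init option names.
ENV_TO_OPTION = {
    "SW_AGENT_NAME": "agent_name",
    "SW_AGENT_NAMESPACE": "agent_namespace",
    "SW_AGENT_COLLECTOR_BACKEND_SERVICES": "agent_collector_backend_services",
}


def agent_options(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Translate SW_AGENT_* variables into config.init keyword arguments."""
    source = os.environ if env is None else env
    missing = [var for var in ENV_TO_OPTION if not source.get(var)]
    if missing:
        raise ValueError(f"missing environment variables: {', '.join(missing)}")
    return {option: source[var] for var, option in ENV_TO_OPTION.items()}


def start_agent(env: Optional[Mapping[str, str]] = None) -> None:
    """Start the agent in-process, as an alternative to wrapping the command with sw-python."""
    from skywalking import agent, config  # lazy import: only needed when actually starting
    config.init(**agent_options(env))
    agent.start()
```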

# Configure which hosts may access the site; "*" allows all hosts

vim mysite/settings.py

ALLOWED_HOSTS = ["*",]

# Start the service:

sw-python -d run python3 manage.py runserver 10.0.0.160:80

# Access

http://10.0.0.160/admin/

End-to-end request metrics with OpenResty serving static content and proxying dynamic requests

Build and install OpenResty from source

apt install iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev ntpdate tcpdump telnet traceroute gcc openssh-server lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev ntpdate tcpdump telnet traceroute iotop unzip zip

wget https://openresty.org/download/openresty-1.21.4.1.tar.gz

tar xvf openresty-1.21.4.1.tar.gz

cd openresty-1.21.4.1

./configure --prefix=/apps/openresty \
  --with-luajit \
  --with-pcre \
  --with-http_iconv_module \
  --with-http_realip_module \
  --with-http_sub_module \
  --with-http_stub_status_module \
  --with-stream \
  --with-stream_ssl_module

make && make install

# Test the configuration and start

root@ubuntu:/opt/openresty-1.21.4.1# /apps/openresty/nginx/sbin/nginx -t

nginx: the configuration file /apps/openresty/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /apps/openresty/nginx/conf/nginx.conf test is successful

root@ubuntu:/opt/openresty-1.21.4.1# /apps/openresty/nginx/sbin/nginx

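Verify the Lua environment

vim /apps/openresty/nginx/conf/nginx.conf

server {
    listen 80;
    server_name localhost;

    # add this location to the existing default server block
    location /hello {
        default_type text/html;
        content_by_lua_block {
            ngx.say("Hello Lua!")
        }
    }

    # ... the other directives of the default server block stay unchanged
}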

/apps/openresty/nginx/sbin/nginx -s reload

Configure the SkyWalking Nginx Lua agent

https://github.com/apache/skywalking-nginx-lua

mkdir /data

cd /data

wget https://github.com/apache/skywalking-nginx-lua/archive/refs/tags/v0.6.0.tar.gz

tar xvf v0.6.0.tar.gz

cd skywalking-nginx-lua-0.6.0/

cd /apps/openresty/nginx/conf/

vim nginx.conf

# Add this line just above the last closing } (that is, inside the http block)

include /apps/openresty/nginx/conf/conf.d/*.conf;

mkdir conf.d

vim conf.d/myserver.conf

# Load the skywalking-nginx-lua library
lua_package_path "/data/skywalking-nginx-lua-0.6.0/lib/?.lua;;";

# Buffer represents the register inform and the queue of the finished segment
lua_shared_dict tracing_buffer 100m;

# Init is the timer setter and keeper
# Setup an infinite loop timer to do register and trace report.
init_worker_by_lua_block {
    local metadata_buffer = ngx.shared.tracing_buffer

    -- Service name shown in SkyWalking; tells which service produced the events
    metadata_buffer:set('serviceName', 'myserver-openresty')
    -- Instance means the number of Nginx deployment, does not mean the worker instances
    -- Instance name; tells which server produced the events
    metadata_buffer:set('serviceInstanceName', 'myserver-openresty-node1')
    -- type 'boolean', mark the entrySpan include host/domain
    -- Set to true to include host information in the entry span
    metadata_buffer:set('includeHostInEntrySpan', false)

    -- set randomseed
    require("skywalking.util").set_randomseed()

    -- Report to the OAP over HTTP on port 12800
    require("skywalking.client"):startBackendTimer("http://10.0.0.161:12800")

    -- Any time you want to stop reporting metrics, call `destroyBackendTimer`
    -- require("skywalking.client"):destroyBackendTimer()

    -- If there is a bug of this `tablepool` implementation, we can
    -- disable it in this way
    -- require("skywalking.util").disable_tablepool()

    skywalking_tracer = require("skywalking.tracer")
}

server {
    listen 80;
    server_name www.wang.com;

    location / {
        root html;
        index index.html index.htm;

        # Start a trace for requests to this server (www.wang.com). The argument to start()
        # is a manually chosen upstream service name or DNS name, which SkyWalking displays.
        rewrite_by_lua_block {
            ------------------------------------------------------
            -- NOTICE, this should be changed manually
            -- This variable represents the upstream logic address
            -- Please set them as service logic name or DNS name
            --
            -- Currently, we can not have the upstream real network address
            ------------------------------------------------------
            skywalking_tracer:start("www.wang.com")
            -- If you want correlation custom data to the downstream service
            -- skywalking_tracer:start("upstream service", {custom = "custom_value"})
        }

        # body_filter runs once per response chunk; ngx.arg[2] is true on the last chunk,
        # which is where the span is finished
        body_filter_by_lua_block {
            if ngx.arg[2] then
                skywalking_tracer:finish()
            end
        }

        # log phase: hand the finished segment over for reporting
        log_by_lua_block {
            skywalking_tracer:prepareForReport()
        }
    }

    location /myserver {
        default_type text/html;

        rewrite_by_lua_block {
            ------------------------------------------------------
            -- NOTICE, this should be changed manually
            -- This variable represents the upstream logic address
            -- Please set them as service logic name or DNS name
            --
            -- Currently, we can not have the upstream real network address
            ------------------------------------------------------
            skywalking_tracer:start("www.wang.com")
            -- If you want correlation custom data to the downstream service
            -- skywalking_tracer:start("upstream service", {custom = "custom_value"})
        }

        proxy_pass http://172.31.2.165;

        body_filter_by_lua_block {
            if ngx.arg[2] then
                skywalking_tracer:finish()
            end
        }

        log_by_lua_block {
            skywalking_tracer:prepareForReport()
        }
    }

    location /hello {
        default_type text/html;

        rewrite_by_lua_block {
            ------------------------------------------------------
            -- NOTICE, this should be changed manually
            -- This variable represents the upstream logic address
            -- Please set them as service logic name or DNS name
            --
            -- Currently, we can not have the upstream real network address
            ------------------------------------------------------
            skywalking_tracer:start("www.wang.com")
            -- If you want correlation custom data to the downstream service
            -- skywalking_tracer:start("upstream service", {custom = "custom_value"})
        }

        proxy_pass http://10.0.0.210:30001;

        body_filter_by_lua_block {
            if ngx.arg[2] then
                skywalking_tracer:finish()
            end
        }

        log_by_lua_block {
            skywalking_tracer:prepareForReport()
        }
    }
}

# Test the configuration and reload

/apps/openresty/nginx/sbin/nginx -t

/apps/openresty/nginx/sbin/nginx -s reload
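With `skywalking_tracer:start()` in the proxied `/myserver` and `/hello` locations, the Lua agent passes the trace context to the upstream in an `sw8` HTTP header. That header is how an instrumented backend joins the same trace. SkyWalking's Cross Process Propagation Headers Protocol v3 defines eight dash-separated fields:

- sample flag
- trace ID
- parent segment ID
- parent span ID
- parent service
- parent service instance
- parent endpoint
- target address

The string fields are Base64-encoded. The sketch below decodes such a header when debugging, for example after logging `$http_sw8` on a backend. `encode_sw8` and `decode_sw8` are hypothetical helpers, and all sample values are made up.

```python
import base64
from typing import Dict

SW8_FIELDS = ("sample", "trace_id", "parent_segment_id", "parent_span_id",
              "parent_service", "parent_service_instance", "parent_endpoint", "target_address")
PLAIN_FIELDS = {"sample", "parent_span_id"}  # sent as-is; every other field is Base64


def encode_sw8(ctx: Dict[str, str]) -> str:
    """Join the eight fields with '-', Base64-encoding the string fields."""
    parts = []
    for field in SW8_FIELDS:
        value = str(ctx[field])
        parts.append(value if field in PLAIN_FIELDS else base64.b64encode(value.encode()).decode())
    return "-".join(parts)


def decode_sw8(header: str) -> Dict[str, str]:
    """Split an sw8 header into named fields. '-' is safe as a separator
    because the standard Base64 alphabet never contains it."""
    parts = header.split("-")
    if len(parts) != len(SW8_FIELDS):
        raise ValueError(f"expected {len(SW8_FIELDS)} fields, got {len(parts)}")
    return {field: raw if field in PLAIN_FIELDS else base64.b64decode(raw).decode()
            for field, raw in zip(SW8_FIELDS, parts)}
```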

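Test requests to the static (front-end) and proxied (back-end) locations

Verify in the SkyWalking UI

Tracing Dubbo microservices running in Kubernetes with SkyWalking

Build the OS base image

cat Dockerfile

# Custom CentOS base image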

FROM centos:7.9.2009

#FROM centos:7.9.2009

MAINTAINER 2973707860@qq.com

ADD filebeat-7.12.1-x86_64.rpm /tmp

RUN yum makecache fast

RUN yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && rm -rf /etc/localtime /tmp/filebeat-7.12.1-x86_64.rpm && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && useradd nginx -u 2022

cat build-command.sh

#!/bin/bash

#docker build -t harbor.linuxarchitect.io/baseimages/centos-base:7.9.2009 .

#docker push harbor.linuxarchitect.io/baseimages/centos-base:7.9.2009

nerdctl build -t harbor.canghailyt.com/sw/centos-base:7.9.2009 .

nerdctl push harbor.canghailyt.com/sw/centos-base:7.9.2009

ll

total 31856

drwxr-xr-x 2 root root 4096 Nov 6 12:30 ./

drwxr-xr-x 3 root root 4096 Jun 23 18:44 ../

-rw-r--r-- 1 root root 482 Nov 6 12:30 Dockerfile

-rw-r--r-- 1 root root 289 Nov 6 12:30 build-command.sh

-rw-r--r-- 1 root root 32600353 Jun 23 18:44 filebeat-7.12.1-x86_64.rpm

bash build-command.sh

Build the JDK base image

ll

total 190468

drwxr-xr-x 2 root root 4096 Oct 26 23:24 ./

drwxr-xr-x 4 root root 4096 Oct 26 23:23 ../

-rw-r--r-- 1 root root 385 Jun 23 18:45 Dockerfile

-rw-r--r-- 1 root root 306 Jun 23 18:45 build-command.sh

-rw-r--r-- 1 root root 195013152 Jun 23 18:45 jdk-8u212-linux-x64.tar.gz

-rw-r--r-- 1 root root 2105 Jun 23 18:45 profile

cat Dockerfile

#JDK Base Image

FROM harbor.canghailyt.com/sw/centos-base:7.9.2009

MAINTAINER zhangshijie "zhangshijie53@jd.com"

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

cat build-command.sh

#!/bin/bash

#docker build -t harbor.linuxarchitect.io/baseimages/jdk-base:v8.212 .

#sleep 1

#docker push harbor.linuxarchitect.io/baseimages/jdk-base:v8.212

nerdctl build -t harbor.canghailyt.com/sw/jdk-base:v8.212 .

sleep 1

nerdctl push harbor.canghailyt.com/sw/jdk-base:v8.212

cat profile

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;

*)

if [ "$2" = "after" ] ; then

PATH=$PATH:$1

else

PATH=$1:$PATH

fi

esac

}

if [ -x /usr/bin/id ]; then

if [ -z "$EUID" ]; then

# ksh workaround

EUID=`/usr/bin/id -u`

UID=`/usr/bin/id -ru`

fi

USER="`/usr/bin/id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

fi

# Path manipulation

if [ "$EUID" = "0" ]; then

pathmunge /usr/sbin

pathmunge /usr/local/sbin

else

pathmunge /usr/local/sbin after

pathmunge /usr/sbin after

fi

HOSTNAME=`/usr/bin/hostname 2>/dev/null`

HISTSIZE=1000

if [ "$HISTCONTROL" = "ignorespace" ] ; then

export HISTCONTROL=ignoreboth

else

export HISTCONTROL=ignoredups

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell

# Current threshold for system reserved uid/gids is 200

# You could check uidgid reservation validity in

# /usr/share/doc/setup-*/uidgid file

if [ $UID -gt 199 ] && [ "`/usr/bin/id -gn`" = "`/usr/bin/id -un`" ]; then

umask 002

else

umask 022

fi

for i in /etc/profile.d/*.sh /etc/profile.d/sh.local ; do

if [ -r "$i" ]; then

if [ "${-#*i}" != "$-" ]; then

. "$i"

else

. "$i" >/dev/null

fi

fi

done

unset i

unset -f pathmunge

export LANG=en_US.UTF-8

export HISTTIMEFORMAT="%F %T `whoami` "

export JAVA_HOME=/usr/local/jdk

export TOMCAT_HOME=/apps/tomcat

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$TOMCAT_HOME/bin:$PATH

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar

bash build-command.sh

Build the Tomcat base image

# ll

total 9508

drwxr-xr-x 2 root root 4096 Oct 26 23:26 ./

drwxr-xr-x 4 root root 4096 Oct 26 23:23 ../

-rw-r--r-- 1 root root 352 Oct 26 23:24 Dockerfile

-rw-r--r-- 1 root root 9717059 Jun 22 2021 apache-tomcat-8.5.43.tar.gz

-rw-r--r-- 1 root root 316 May 11 14:17 build-command.sh

cat Dockerfile

# Tomcat 8.5.43 base image
FROM harbor.canghailyt.com/sw/jdk-base:v8.212
MAINTAINER zhangshijie "zhangshijie@magedu.net"
RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv
ADD apache-tomcat-8.5.43.tar.gz /apps
RUN useradd tomcat -u 2050 && ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat && chown -R tomcat:tomcat /apps /data

cat build-command.sh

#!/bin/bash

#docker build -t harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43 .

#sleep 3

#docker push harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43

nerdctl build -t harbor.canghailyt.com/sw/tomcat-base:v8.5.43 .

nerdctl push harbor.canghailyt.com/sw/tomcat-base:v8.5.43

bash build-command.sh

Build the ZooKeeper base image

# ll

total 36900

drwxr-xr-x 4 root root 4096 Oct 26 23:14 ./

drwxr-xr-x 4 root root 4096 Jun 23 18:45 ../

-rw-r--r-- 1 root root 1739 Jun 23 18:45 Dockerfile

-rw-r--r-- 1 root root 63587 Jun 23 18:45 KEYS

-rw-r--r-- 1 root root 0 Jun 23 18:45 Using

drwxr-xr-x 2 root root 4096 Jun 23 18:45 bin/

-rwxr-xr-x 1 root root 358 Jun 23 18:45 build-command.sh*

drwxr-xr-x 2 root root 4096 Jun 23 18:45 conf/

-rwxr-xr-x 1 root root 278 Jun 23 18:45 entrypoint.sh*

-rw-r--r-- 1 root root 91 Jun 23 18:45 repositories

-rw-r--r-- 1 root root 2270 Jun 23 18:45 zookeeper-3.12-Dockerfile.tar.gz

-rw-r--r-- 1 root root 37676320 Jun 23 18:45 zookeeper-3.4.14.tar.gz

-rw-r--r-- 1 root root 836 Jun 23 18:45 zookeeper-3.4.14.tar.gz.asc

cat Dockerfile

#FROM elevy/slim_java:8
FROM registry.cn-hangzhou.aliyuncs.com/zhangshijie/slim_java:8
ENV ZK_VERSION 3.4.14
ADD repositories /etc/apk/repositories
# Download Zookeeper
COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz
COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc
COPY KEYS /tmp/KEYS
RUN apk add --no-cache --virtual .build-deps \
        ca-certificates \
        gnupg \
        tar \
        wget && \
#
# Install dependencies
    apk add --no-cache \
        bash && \
#
# Verify the signature
    export GNUPGHOME="$(mktemp -d)" && \
    gpg -q --batch --import /tmp/KEYS && \
    gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \
#
# Set up directories
    mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \
#
# Install
    tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \
#
# Slim down
    cd /zookeeper && \
    cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \
    rm -rf \
        *.txt \
        *.xml \
        bin/README.txt \
        bin/*.cmd \
        conf/* \
        contrib \
        dist-maven \
        docs \
        lib/*.txt \
        lib/cobertura \
        lib/jdiff \
        recipes \
        src \
        zookeeper-*.asc \
        zookeeper-*.md5 \
        zookeeper-*.sha1 && \
#
# Clean up
    apk del .build-deps && \
    rm -rf /tmp/* "$GNUPGHOME"
COPY conf /zookeeper/conf/
COPY bin/zkReady.sh /zookeeper/bin/
COPY entrypoint.sh /
ENV PATH=/zookeeper/bin:${PATH} \
    ZOO_LOG_DIR=/zookeeper/log \
    ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \
    JMXPORT=9010
ENTRYPOINT [ "/entrypoint.sh" ]
CMD [ "zkServer.sh", "start-foreground" ]
EXPOSE 2181 2888 3888 9010

cat repositories

http://mirrors.aliyun.com/alpine/v3.6/main
http://mirrors.aliyun.com/alpine/v3.6/community

cat bin/zkReady.sh

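#!/bin/bash
# zkServer.sh status prints "Mode: leader", "Mode: follower", "Mode: observer" or "Mode: standalone"
/zookeeper/bin/zkServer.sh status | grep -E 'Mode: (standalone|leader|follower|observer)'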
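zkReady.sh should succeed only after the server reports a serving role. The `zkServer.sh status` output shown later in this article reports that role as `Mode: follower` or `Mode: leader`. ZooKeeper also reports `observer` and `standalone`, so the pattern must match those exact words. Below is a minimal sketch of the same test as a function that reads status text on stdin. `zk_mode_ok` is a hypothetical name, and the sample status text is illustrative.

```shell
#!/bin/bash
# Readiness test: succeed only if `zkServer.sh status` reports a serving role.
# zk_mode_ok is a hypothetical helper name; it reads the status text on stdin.
zk_mode_ok() {
  grep -Eq '^Mode: (standalone|leader|follower|observer)$'
}

# Example with captured text instead of a live zkServer.sh call.
status=$'Using config: /zookeeper/bin/../conf/zoo.cfg\nMode: follower'
if printf '%s\n' "$status" | zk_mode_ok; then
  echo ready
else
  echo "not ready"
fi
```

A Kubernetes `readinessProbe` of type `exec` that runs /zookeeper/bin/zkReady.sh could rely on the same exit code. The Deployments in this article do not define a probe.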

cat conf/log4j.properties

# Define some default values that can be overridden by system properties
zookeeper.root.logger=INFO, CONSOLE, ROLLINGFILE
zookeeper.console.threshold=INFO
zookeeper.log.dir=/zookeeper/log
zookeeper.log.file=zookeeper.log
zookeeper.log.threshold=INFO
zookeeper.tracelog.dir=/zookeeper/log
zookeeper.tracelog.file=zookeeper_trace.log

#
# ZooKeeper Logging Configuration
#

# Format is "<default threshold> (, <appender>)+"
# Default here: INFO to both the console and the rolling log file
log4j.rootLogger=${zookeeper.root.logger}
# Example with rolling log file
#log4j.rootLogger=DEBUG, CONSOLE, ROLLINGFILE
# Example with rolling log file and tracing
#log4j.rootLogger=TRACE, CONSOLE, ROLLINGFILE, TRACEFILE

#
# Log INFO level and above messages to the console
#
log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender
log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}
log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout
log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#
# Add ROLLINGFILE to rootLogger to get log file output
# Log messages at zookeeper.log.threshold (INFO) and above to a log file
log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender
log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}
log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}
# Max log file size of 10MB
log4j.appender.ROLLINGFILE.MaxFileSize=10MB
# Keep at most 5 rotated backup files
log4j.appender.ROLLINGFILE.MaxBackupIndex=5
log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout
log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#
# Add TRACEFILE to rootLogger to get log file output
# Log TRACE level and above messages to a log file
log4j.appender.TRACEFILE=org.apache.log4j.FileAppender
log4j.appender.TRACEFILE.Threshold=TRACE
log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}
log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout
### Notice we are including log4j's NDC here (%x)
log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

cat conf/zoo.cfg

tickTime=2000
initLimit=10
syncLimit=5
dataDir=/zookeeper/data
dataLogDir=/zookeeper/wal
#snapCount=100000
autopurge.purgeInterval=1
clientPort=2181

cat entrypoint.sh

#!/bin/bash

echo ${MYID:-1} > /zookeeper/data/myid

if [ -n "$SERVERS" ]; then
    IFS=\, read -a servers <<<"$SERVERS"
    for i in "${!servers[@]}"; do
        printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg
    done
fi

cd /zookeeper
exec "$@"
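The loop in entrypoint.sh turns the comma-separated SERVERS variable into `server.N=host:2888:3888` entries. It numbers hosts from 1 so that they line up with each pod's MYID. The sketch below reproduces only that mapping, so the generated list can be checked without starting a container. `render_zk_servers` is a hypothetical name, and it prints to stdout instead of appending to zoo.cfg.

```shell
#!/bin/bash
# Sketch: reproduce the server.N lines that entrypoint.sh appends to zoo.cfg.
# render_zk_servers is a hypothetical helper name, not part of the image.
render_zk_servers() {
  local servers_csv="$1" i
  local -a servers
  # Same splitting as entrypoint.sh: comma-separated host names.
  IFS=, read -r -a servers <<<"$servers_csv"
  for i in "${!servers[@]}"; do
    # Server IDs are 1-based, matching MYID=1..3 in the Deployments.
    printf 'server.%d=%s:2888:3888\n' "$((i + 1))" "${servers[$i]}"
  done
}

# Example: the SERVERS value used by all three ZooKeeper Deployments.
render_zk_servers "zookeeper1,zookeeper2,zookeeper3"
```

With that SERVERS value, the sketch prints `server.1=zookeeper1:2888:3888` through `server.3=zookeeper3:2888:3888`. Those host names resolve to the zookeeper1 to zookeeper3 Services in the myserver namespace.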

cat build-command.sh

#!/bin/bash
TAG=$1
nerdctl build -t harbor.canghailyt.com/sw/zookeeper:${TAG} .
#nerdctl tag harbor.linuxarchitect.io/magedu/zookeeper:${TAG} registry.cn-hangzhou.aliyuncs.com/zhangshijie/zookeeper:${TAG}
sleep 1
nerdctl push harbor.canghailyt.com/sw/zookeeper:${TAG}
#nerdctl push registry.cn-hangzhou.aliyuncs.com/zhangshijie/zookeeper:${TAG}

bash build-command.sh v1
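build-command.sh passes `$1` straight into the image reference. Running it without an argument therefore builds the invalid reference `harbor.canghailyt.com/sw/zookeeper:`. Below is a hedged sketch of a stricter variant that validates the tag first. `valid_tag` and `build_and_push` are hypothetical helpers. The tag rule encoded here is the Docker/OCI one: 1 to 128 characters from `[A-Za-z0-9_.-]`, not starting with `.` or `-`.

```shell
#!/bin/bash
# Sketch of a stricter build-command.sh. Helper names are hypothetical;
# the registry path is the one used in this article.
REGISTRY="harbor.canghailyt.com/sw"

# Docker/OCI tag grammar: [A-Za-z0-9_][A-Za-z0-9_.-]{0,127}
valid_tag() {
  [[ "$1" =~ ^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$ ]]
}

# Build and push REGISTRY/<name>:<tag>, refusing a missing or malformed tag.
build_and_push() {
  local name="$1" tag="$2"
  if ! valid_tag "$tag"; then
    echo "usage: bash build-command.sh <tag>   (got: '${tag}')" >&2
    return 1
  fi
  nerdctl build -t "${REGISTRY}/${name}:${tag}" . &&
    nerdctl push "${REGISTRY}/${name}:${tag}"
}

# Usage inside build-command.sh:
#   build_and_push zookeeper "$1"
```

The same helper fits the consumer, provider and dubboadmin scripts by changing only the image name.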

Build the consumer image

# ll
total 70672
drwxr-xr-x  3 root root     4096 Oct 26 23:19 ./
drwxr-xr-x  5 root root     4096 Jun 23 18:45 ../
-rw-r--r--  1 root root      466 Jun 23 18:44 Dockerfile
-rw-r--r--  1 root root 28982315 Jun 23 18:44 apache-skywalking-java-agent-8.8.0.tgz
-rwxr-xr-x  1 root root      318 Oct 26 23:19 build-command.sh*
-rw-r--r--  1 root root 21677237 Jun 23 18:44 dubbo-client.jar
-rw-r--r--  1 root root 21678246 Jun 23 18:44 dubbo-client.jar.bak
-rwxr-xr-x  1 root root      442 Jun 23 18:44 run_java.sh*
drwxr-xr-x 10 root root     4096 Jun 23 18:44 skywalking-agent/

cat Dockerfile

#Dubbo consumer
FROM harbor.canghailyt.com/sw/jdk-base:v8.212
LABEL maintainer="zhangshijie"
RUN yum install file -y
RUN mkdir -p /apps/dubbo/consumer && useradd user1 -u 2000
ADD run_java.sh /apps/dubbo/consumer/bin/
ADD skywalking-agent/ /skywalking-agent/
ADD dubbo-client.jar /apps/dubbo/consumer/dubbo-client.jar
RUN chown user1:user1 /apps /skywalking-agent -R
RUN chmod a+x /apps/dubbo/consumer/bin/*.sh
CMD ["/apps/dubbo/consumer/bin/run_java.sh"]

cat run_java.sh

#!/bin/bash
#echo "nameserver 223.6.6.6" > /etc/resolv.conf
#/usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &
#su - user1 -c "java -jar /apps/dubbo/consumer/dubbo-client.jar"
su - user1 -c "java -javaagent:/skywalking-agent/skywalking-agent.jar -jar /apps/dubbo/consumer/dubbo-client.jar"
#tail -f /etc/hosts
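run_java.sh starts Java through `su - user1 -c`. `su -` opens a login shell that clears most of the container's environment, so agent settings passed as Kubernetes env vars would not reach the JVM. The SkyWalking Java agent also accepts any agent.config key as a `-Dskywalking.<key>` system property. Such properties survive `su` because they are part of the command line. Below is a minimal sketch. It assumes the OAP server from the binary install at 10.0.0.161 listens on the default gRPC port 11800. `agent_opts`, `SW_OAP_ADDR` and `SW_SERVICE_NAME` are hypothetical names.

```shell
#!/bin/bash
# Sketch: compute SkyWalking agent options in the root shell, before `su -`
# resets the environment. SW_OAP_ADDR and SW_SERVICE_NAME are hypothetical
# variable names; the defaults are assumptions for this article's lab.
agent_opts() {
  local oap="${SW_OAP_ADDR:-10.0.0.161:11800}"    # OAP gRPC receiver (assumed)
  local name="${SW_SERVICE_NAME:-dubbo-consumer}" # service name in the UI
  # Any agent.config key can be overridden as -Dskywalking.<key>=<value>.
  printf '%s' "-javaagent:/skywalking-agent/skywalking-agent.jar" \
    " -Dskywalking.agent.service_name=${name}" \
    " -Dskywalking.collector.backend_service=${oap}"
}

# The java line in run_java.sh would then read:
#   su - user1 -c "java $(agent_opts) -jar /apps/dubbo/consumer/dubbo-client.jar"
agent_opts; echo
```

For the provider, the same function applies with SW_SERVICE_NAME=dubbo-provider. If these variables are set in a Deployment's env section, the root shell expands them before su runs.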

cat build-command.sh

#!/bin/bash
TAG=$1
#docker build -t harbor.linuxarchitect.io/myserver/dubbo-consumer:${TAG} .
#sleep 1
#docker push harbor.linuxarchitect.io/myserver/dubbo-consumer:${TAG}
nerdctl build -t harbor.canghailyt.com/sw/dubbo-consumer:${TAG} .
nerdctl push harbor.canghailyt.com/sw/dubbo-consumer:${TAG}

bash build-command.sh v1

Build the provider image

# ll
total 56272
drwxr-xr-x  3 root root     4096 Oct 26 23:18 ./
drwxr-xr-x  5 root root     4096 Jun 23 18:45 ../
-rw-r--r--  1 root root     6148 Jun 23 18:45 .DS_Store
-rw-r--r--  1 root root      450 Jun 23 18:45 Dockerfile
-rw-r--r--  1 root root 28982315 Jun 23 18:45 apache-skywalking-java-agent-8.8.0.tgz
-rwxr-xr-x  1 root root      303 Oct 26 23:18 build-command.sh*
-rw-r--r--  1 root root 14301410 Jun 23 18:45 dubbo-server.jar
-rw-r--r--  1 root root 14302411 Jun 23 18:45 dubbo-server.jar.bak
-rwxr-xr-x  1 root root      396 Jun 23 18:45 run_java.sh*
drwxr-xr-x 10 root root     4096 Jun 23 18:45 skywalking-agent/

cat Dockerfile

#Dubbo provider
FROM harbor.canghailyt.com/sw/jdk-base:v8.212
LABEL maintainer="zhangshijie"
RUN yum install file nc -y && useradd user1 -u 2000
RUN mkdir -p /apps/dubbo/provider
ADD dubbo-server.jar /apps/dubbo/provider/
ADD run_java.sh /apps/dubbo/provider/bin/
ADD skywalking-agent/ /skywalking-agent/
RUN chown user1:user1 /apps /skywalking-agent -R
RUN chmod a+x /apps/dubbo/provider/bin/*.sh
CMD ["/apps/dubbo/provider/bin/run_java.sh"]

cat build-command.sh

#!/bin/bash
TAG=$1
#docker build -t harbor.linuxarchitect.io/myserver/dubbo-provider:${TAG} .
#sleep 1
#docker push harbor.linuxarchitect.io/myserver/dubbo-provider:${TAG}
nerdctl build -t harbor.canghailyt.com/sw/dubbo-provider:${TAG} .
sleep 1
nerdctl push harbor.canghailyt.com/sw/dubbo-provider:${TAG}

cat run_java.sh

#!/bin/bash
#/usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &
#su - user1 -c "java -jar /apps/dubbo/provider/dubbo-server.jar"
su - user1 -c "java -javaagent:/skywalking-agent/skywalking-agent.jar -jar /apps/dubbo/provider/dubbo-server.jar"
#tail -f /etc/hosts
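The provider image installs `nc`, but run_java.sh starts Java immediately. This sketch assumes the provider registers with ZooKeeper at startup, the usual Dubbo setup. Under that assumption, starting before the ensemble answers may make the first start fail. Below is a hedged sketch of a wait loop that uses bash's `/dev/tcp` redirection and coreutils `timeout`. `wait_for_port` is a hypothetical helper. `zookeeper:2181` assumes the client Service defined below is the registry address configured in dubbo-server.jar.

```shell
#!/bin/bash
# Sketch: block until HOST:PORT accepts TCP connections, or give up.
# wait_for_port is hypothetical; "zookeeper 2181" below is an assumed registry address.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i
  for (( i = 1; i <= tries; i++ )); do
    # Opening /dev/tcp/HOST/PORT succeeds only if the connection is accepted.
    if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    (( i < tries )) && sleep 2
  done
  echo "timed out waiting for ${host}:${port}" >&2
  return 1
}

# In run_java.sh, before the su line:
#   wait_for_port zookeeper 2181 || exit 1
```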

bash build-command.sh v1

Build the dubboadmin image

# ll
total 54316
drwxr-xr-x 3 root root     4096 Oct 26 23:21 ./
drwxr-xr-x 5 root root     4096 Jun 23 18:45 ../
-rw-r--r-- 1 root root      656 Jun 23 18:45 Dockerfile
-rwxr-xr-x 1 root root      295 Oct 26 23:21 build-command.sh*
-rwxr-xr-x 1 root root    22201 Jun 23 18:44 catalina.sh*
drwxr-xr-x 8 root root     4096 Jun 23 18:44 dubboadmin/
-rw-r--r-- 1 root root 27777987 Jun 23 18:45 dubboadmin.war
-rw-r--r-- 1 root root 27777984 Jun 23 18:45 dubboadmin.war.bak
-rw-r--r-- 1 root root     3436 Jun 23 18:45 logging.properties
-rwxr-xr-x 1 root root       99 Jun 23 18:45 run_tomcat.sh*
-rw-r--r-- 1 root root     6427 Jun 23 18:45 server.xml

cat Dockerfile

#Dubbo dubboadmin
#FROM harbor.magedu.local/pub-images/tomcat-base:v8.5.43
FROM harbor.canghailyt.com/sw/tomcat-base:v8.5.43
LABEL maintainer="zhangshijie <zhangshijie@magedu.net>"
RUN yum install unzip -y
ADD server.xml /apps/tomcat/conf/server.xml
ADD logging.properties /apps/tomcat/conf/logging.properties
ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
ADD dubboadmin.war /data/tomcat/webapps/dubboadmin.war
RUN useradd user1 && \
    cd /data/tomcat/webapps && \
    unzip dubboadmin.war && \
    rm -rf dubboadmin.war && \
    chown -R user1:user1 /data /apps
EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]

cat run_tomcat.sh

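#!/bin/bash
# "catalina.sh start" runs Tomcat in the background; the tail keeps a
# foreground process alive so the container does not exit
su - user1 -c "/apps/tomcat/bin/catalina.sh start"
su - user1 -c "tail -f /etc/hosts"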

cat build-command.sh

#!/bin/bash
TAG=$1
#docker build -t harbor.linuxarchitect.io/myserver/dubboadmin:${TAG} .
#docker push harbor.linuxarchitect.io/myserver/dubboadmin:${TAG}
nerdctl build -t harbor.canghailyt.com/sw/dubboadmin:${TAG} .
nerdctl push harbor.canghailyt.com/sw/dubboadmin:${TAG}

bash build-command.sh v1

Create the ZooKeeper cluster in k8s

cat zookeeper-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-1
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 10.0.0.200
    path: /data/k8sdata/magedu/zookeeper-datadir-1
  mountOptions:
    - nfsvers=3
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-2
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 10.0.0.200
    path: /data/k8sdata/magedu/zookeeper-datadir-2
  mountOptions:
    - nfsvers=3
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-3
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 10.0.0.200
    path: /data/k8sdata/magedu/zookeeper-datadir-3
  mountOptions:
    - nfsvers=3

#create

kubectl apply -f zookeeper-persistentvolume.yaml
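The three PersistentVolumes differ only in their index. Below is a sketch that generates them from one template, so the copies cannot drift apart. `zk_pv` is a hypothetical helper; the NFS server and export paths are the ones used above. An NFS mount fails when the exported directory is missing, so the zookeeper-datadir-1 to zookeeper-datadir-3 directories must exist on 10.0.0.200 before the pods start.

```shell
#!/bin/bash
# Sketch: generate the zookeeper-datadir-pv-N PersistentVolumes from one template.
# zk_pv is a hypothetical helper; NFS_SERVER/NFS_BASE are the values used above.
NFS_SERVER="10.0.0.200"
NFS_BASE="/data/k8sdata/magedu"

zk_pv() {
  local n="$1"
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-${n}
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: ${NFS_SERVER}
    path: ${NFS_BASE}/zookeeper-datadir-${n}
  mountOptions:
    - nfsvers=3
EOF
}

# Usage (first run "mkdir -p /data/k8sdata/magedu/zookeeper-datadir-{1..3}"
# on the NFS server):
#   for i in 1 2 3; do zk_pv "$i"; done | kubectl apply -f -
```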

cat zookeeper-persistentvolumeclaim.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-1
  namespace: myserver
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-1
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-2
  namespace: myserver
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-2
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-3
  namespace: myserver
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-3
  resources:
    requests:
      storage: 10Gi

#create

kubectl apply -f zookeeper-persistentvolumeclaim.yaml

kubectl get pvc -n myserver
NAME                      STATUS   VOLUME                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
zookeeper-datadir-pvc-1   Bound    zookeeper-datadir-pv-1   10Gi       RWO                           10s
zookeeper-datadir-pvc-2   Bound    zookeeper-datadir-pv-2   10Gi       RWO                           10s
zookeeper-datadir-pvc-3   Bound    zookeeper-datadir-pv-3   10Gi       RWO                           10s
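Each claim names its volume through `volumeName`, so it binds to that exact PV and shows Bound above. When the manifests are reapplied, a claim stuck in Pending is easy to miss in a longer listing. Below is a sketch that filters the default `kubectl get pvc --no-headers` columns. `pvc_not_bound` is a hypothetical helper that prints the offending names and exits non-zero if there are any.

```shell
#!/bin/bash
# Sketch: print PVCs that are not Bound, reading the output of
#   kubectl get pvc -n myserver --no-headers
# on stdin. Exits 1 if any claim is not Bound. pvc_not_bound is hypothetical.
pvc_not_bound() {
  # Column 1 is NAME and column 2 is STATUS in the default output.
  awk '$2 != "Bound" { print $1; bad = 1 } END { exit (bad ? 1 : 0) }'
}

# Usage:
#   kubectl get pvc -n myserver --no-headers | pvc_not_bound && echo "all claims Bound"
```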

cat zookeeper.yaml

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: myserver
spec:
  ports:
    - name: client
      port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32181
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32182
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32183
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "3"

---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
      server-id: "1"
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      #imagePullSecrets:
      #- name: jcr-pull-secret
      volumes:
        - name: data
          emptyDir: {}
        - name: wal
          emptyDir: {}
          #emptyDir:
          #  medium: Memory
        - name: zookeeper-datadir-pvc-1
          persistentVolumeClaim:
            claimName: zookeeper-datadir-pvc-1
      containers:
        - name: server
          image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/zookeeper:v3.4.14
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "1"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-datadir-pvc-1

---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
      server-id: "2"
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      #imagePullSecrets:
      #- name: jcr-pull-secret
      volumes:
        - name: data
          emptyDir: {}
        - name: wal
          emptyDir: {}
          #emptyDir:
          #  medium: Memory
        - name: zookeeper-datadir-pvc-2
          persistentVolumeClaim:
            claimName: zookeeper-datadir-pvc-2
      containers:
        - name: server
          image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/zookeeper:v3.4.14
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "2"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-datadir-pvc-2

---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
      server-id: "3"
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      #imagePullSecrets:
      #- name: jcr-pull-secret
      volumes:
        - name: data
          emptyDir: {}
        - name: wal
          emptyDir: {}
          #emptyDir:
          #  medium: Memory
        - name: zookeeper-datadir-pvc-3
          persistentVolumeClaim:
            claimName: zookeeper-datadir-pvc-3
      containers:
        - name: server
          image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/zookeeper:v3.4.14
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "3"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-datadir-pvc-3

#apply

kubectl apply -f zookeeper.yaml
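Each of the three Deployments runs one ensemble member. ZooKeeper keeps serving only while a strict majority of members is up. An ensemble of n servers therefore needs floor(n/2)+1 of them and tolerates the remaining n minus that majority failing. Below is a small sketch of that arithmetic; `zk_quorum` is a hypothetical name.

```shell
#!/bin/bash
# Sketch: majority size and tolerated failures for an ensemble of n servers.
# zk_quorum is a hypothetical helper name.
zk_quorum() {
  local n="$1"
  local majority=$(( n / 2 + 1 ))   # strict majority of n
  printf 'ensemble=%d majority=%d tolerated_failures=%d\n' \
    "$n" "$majority" "$(( n - majority ))"
}

for n in 1 3 4 5; do
  zk_quorum "$n"
done
```

The 4-member row shows why ensembles are usually sized odd: the extra server raises the majority without tolerating any more failures than 3 members do.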

#check the pods

# kubectl get pod -n myserver
NAME                                       READY   STATUS    RESTARTS      AGE
kafka-consumer                             0/1     Unknown   0             9d
strimzi-cluster-operator-95d88f6b5-kd5jb   1/1     Running   2 (80m ago)   9d
zookeeper1-667b589dd-f7xwz                 1/1     Running   0             4m35s
zookeeper2-77b696dc65-qbmv2                1/1     Running   0             4m35s
zookeeper3-6f75b9c87d-ccn67                1/1     Running   0             4m35s

#check the services

# kubectl get svc -n myserver
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                                        AGE
zookeeper    ClusterIP   10.10.3.152     <none>        2181/TCP                                       4m57s
zookeeper1   NodePort    10.10.114.158   <none>        2181:32181/TCP,2888:31872/TCP,3888:31417/TCP   4m57s
zookeeper2   NodePort    10.10.74.210    <none>        2181:32182/TCP,2888:32426/TCP,3888:31732/TCP   4m57s
zookeeper3   NodePort    10.10.31.214    <none>        2181:31183/TCP,2888:31390/TCP,3888:30089/TCP   4m57s

#verify the ZooKeeper cluster state

# kubectl exec -it -n myserver zookeeper2-77b696dc65-qbmv2 -- /zookeeper/bin/zkServer.sh status
ZooKeeper JMX enabled by default
ZooKeeper remote JMX Port set to 9010
ZooKeeper remote JMX authenticate set to false
ZooKeeper remote JMX ssl set to false
ZooKeeper remote JMX log4j set to true
Using config: /zookeeper/bin/../conf/zoo.cfg
Mode: follower

# kubectl exec -it -n myserver zookeeper3-6f75b9c87d-ccn67 -- /zookeeper/bin/zkServer.sh status
ZooKeeper JMX enabled by default
ZooKeeper remote JMX Port set to 9010
ZooKeeper remote JMX authenticate set to false
ZooKeeper remote JMX ssl set to false
ZooKeeper remote JMX log4j set to true
Using config: /zookeeper/bin/../conf/zoo.cfg
Mode: leader
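The checks above cover zookeeper2 and zookeeper3 by pod name. Below is a sketch that queries all three members through their Deployments and then checks for exactly one leader and two followers. `zk_modes` and `check_quorum` are hypothetical helpers. `kubectl exec deploy/<name>` runs the command in one pod of that Deployment.

```shell
#!/bin/bash
# Sketch: print "<deployment> Mode: <role>" for each ZooKeeper member.
# zk_modes is a hypothetical helper; it needs kubectl access to namespace myserver.
zk_modes() {
  local deploy
  for deploy in zookeeper1 zookeeper2 zookeeper3; do
    printf '%s ' "$deploy"
    kubectl exec -n myserver "deploy/${deploy}" -- /zookeeper/bin/zkServer.sh status 2>/dev/null \
      | grep '^Mode:' || echo "Mode: unknown"
  done
}

# Succeed only for a healthy 3-member ensemble: one leader and two followers.
# check_quorum is a hypothetical helper reading zk_modes output on stdin.
check_quorum() {
  awk '$3 == "leader" { l++ } $3 == "follower" { f++ }
       END { exit !(l == 1 && l + f == 3) }'
}

# Usage against the cluster:
#   zk_modes | check_quorum && echo "ensemble healthy"
```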

Deploy the consumer in k8s

cat consumer.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: myserver-consumer
  name: myserver-consumer-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-consumer
  template:
    metadata:
      labels:
        app: myserver-consumer
    spec:
      #imagePullSecrets:
      containers:
        - name: myserver-consumer-container
          #image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/dubbo-consumer:v2.5.3-2022092301-zookeeper1
          image: harbor.canghailyt.com/sw/dubbo-consumer:v1
          #imagePullPolicy: IfNotPresent
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              protocol: TCP
              name: http

cat service.yaml

---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: myserver-consumer
  name: myserver-consumer-server
  namespace: myserver
spec:
  #type: LoadBalancer
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
      nodePort: 30001
  selector:
    app: myserver-consumer

kubectl apply -f consumer.yaml -f service.yaml

Create the provider in k8s

cat provider.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: myserver-provider
  name: myserver-provider-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-provider
  template:
    metadata:
      labels:
        app: myserver-provider
    spec:
      containers:
        - name: myserver-provider-container
          #image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/dubboadmin:v2.5.3-2022092301-zookeeper1
          image: harbor.canghailyt.com/sw/dubbo-provider:v1
          #imagePullPolicy: IfNotPresent
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              protocol: TCP
              name: http
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: myserver-provider
  name: myserver-provider-spec
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: http
      port: 8080
      protocol: TCP
      targetPort: 8080
      nodePort: 30800
  selector:
    app: myserver-provider

#create

kubectl apply -f provider.yaml

Deploy dubboadmin in k8s

cat dubboadmin.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: myserver-dubboadmin
  name: myserver-dubboadmin-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-dubboadmin
  template:
    metadata:
      labels:
        app: myserver-dubboadmin
    spec:
      containers:
        - name: myserver-dubboadmin-container
          #image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/dubboadmin:v2.5.3-2022092301-zookeeper1
          image: harbor.canghailyt.com/sw/dubboadmin:v1
          #imagePullPolicy: IfNotPresent
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              protocol: TCP
              name: http
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: myserver-dubboadmin
  name: myserver-dubboadmin-service
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
      nodePort: 30080
  selector:
    app: myserver-dubboadmin

#deploy

kubectl apply -f dubboadmin.yaml
