Brief introduction (Chinese version):

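In machine learning, the (Gaussian) radial basis function kernel, or RBF kernel, is a popular kernel function. It is the most commonly used kernel in support vector machine classification.[1]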

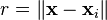

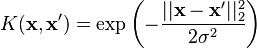

关于两个样本x和x'的RBF核可表示为某个“输入空间”(input space)的特征向量,它的定义如下所示:[2]

可以看做两个特征向量之间的平方欧几里得距离。

可以看做两个特征向量之间的平方欧几里得距离。 是一个自由参数。一种等价但更为简单的定义是设一个新的参数

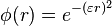

是一个自由参数。一种等价但更为简单的定义是设一个新的参数 ,其表达式为

,其表达式为 :

:
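As a quick numerical check of the two equivalent forms, $K(x, x') = \exp(-\|x - x'\|^2 / (2\sigma^2))$ and $\exp(-\gamma \|x - x'\|^2)$ with $\gamma = 1/(2\sigma^2)$, here is a minimal NumPy sketch. The function names `rbf_kernel_sigma` and `rbf_kernel_gamma` are illustrative and do not come from any library. The check also confirms that identical inputs reach the maximum value of 1.

```python
import numpy as np


def rbf_kernel_sigma(x, x_prime, sigma):
    """RBF kernel in the sigma form: exp(-||x - x'||^2 / (2 * sigma^2))."""
    diff = np.asarray(x, dtype=float) - np.asarray(x_prime, dtype=float)
    sq_dist = np.dot(diff, diff)  # squared Euclidean distance
    return np.exp(-sq_dist / (2.0 * sigma ** 2))


def rbf_kernel_gamma(x, x_prime, gamma):
    """Equivalent gamma form: exp(-gamma * ||x - x'||^2)."""
    diff = np.asarray(x, dtype=float) - np.asarray(x_prime, dtype=float)
    return np.exp(-gamma * np.dot(diff, diff))


x = np.array([1.0, 2.0])
x_prime = np.array([2.0, 0.0])
sigma = 1.5
gamma = 1.0 / (2.0 * sigma ** 2)  # the substitution gamma = 1 / (2 sigma^2)

k_sigma = rbf_kernel_sigma(x, x_prime, sigma)
k_gamma = rbf_kernel_gamma(x, x_prime, gamma)
print(k_sigma, k_gamma)  # the two forms agree
print(rbf_kernel_sigma(x, x, sigma))  # identical inputs give the maximum value, 1.0
```

For reference, scikit-learn's `sklearn.metrics.pairwise.rbf_kernel` is parametrised by `gamma`, that is, it uses the second form.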

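Because the value of the RBF kernel decreases with distance and ranges between zero (in the limit) and one (when x = x'), it has a ready interpretation as a similarity measure.[2] The feature space of the kernel has an infinite number of dimensions. For $\sigma = 1$, its expansion is:[3]

$$\exp\left(-\tfrac{1}{2}\|x - x'\|^2\right) = \sum_{j=0}^{\infty} \frac{(x^\top x')^j}{j!} \exp\left(-\tfrac{1}{2}\|x\|^2\right) \exp\left(-\tfrac{1}{2}\|x'\|^2\right)$$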
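The $\sigma = 1$ expansion can be checked numerically. It uses $\exp(-\tfrac12\|x-x'\|^2) = \exp(-\tfrac12\|x\|^2)\exp(-\tfrac12\|x'\|^2)\sum_j (x^\top x')^j / j!$, where each power $(x^\top x')^j$ corresponds to polynomial features of degree $j$. Truncating the sum after n terms keeps only degrees below n, and the error falls as n grows. This is a sketch assuming NumPy, and `rbf_sigma1` and `rbf_sigma1_series` are illustrative names.

```python
import math

import numpy as np


def rbf_sigma1(x, y):
    """Exact RBF kernel with sigma = 1: exp(-||x - y||^2 / 2)."""
    diff = x - y
    return math.exp(-0.5 * float(np.dot(diff, diff)))


def rbf_sigma1_series(x, y, n_terms):
    """Expansion truncated after n_terms: sum_{j<n} (x.y)^j / j! * exp(-||x||^2/2) * exp(-||y||^2/2)."""
    dot = float(np.dot(x, y))
    series = sum(dot ** j / math.factorial(j) for j in range(n_terms))
    return series * math.exp(-0.5 * float(np.dot(x, x))) * math.exp(-0.5 * float(np.dot(y, y)))


x = np.array([0.3, -0.7, 1.1])
y = np.array([0.5, 0.2, 0.9])
exact = rbf_sigma1(x, y)
errors = [abs(exact - rbf_sigma1_series(x, y, n)) for n in (1, 2, 4, 8, 16)]
print(errors)  # shrinks rapidly as more polynomial degrees are kept
```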

Approximations

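Support vector machines and other models that employ the kernel trick scale poorly to large numbers of training samples, or to samples with large numbers of features in the input space. For this reason, a number of approximations to the RBF kernel (and similar kernels) have been devised.[4] Typically, these take the form of a function z(x) that transforms a single vector on its own, independently of any other vectors (such as the support vectors in an SVM), so that

$$z(x)^\top z(x') \approx \varphi(x)^\top \varphi(x') = K(x, x')$$

where $\varphi$ is the implicit mapping embedded in the RBF kernel.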

One way to construct such a z is to randomly sample from the Fourier transform of the kernel.[5]
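The following minimal sketch shows the Fourier-sampling construction, commonly known as random Fourier features (Rahimi and Recht). It assumes NumPy and the $\exp(-\gamma\|x - x'\|^2)$ form of the kernel. The Fourier transform of this kernel is a Gaussian, so the frequencies are drawn from $N(0, 2\gamma I)$ and the phases from a uniform distribution on $[0, 2\pi)$. The helper `make_rff_map`, the number of features and the test data are illustrative choices. Each row of `Z` depends only on its own input vector, as the text requires.

```python
import numpy as np


def make_rff_map(dim, n_features, gamma, rng):
    """Return z(.) such that z(x) @ z(x') approximates exp(-gamma * ||x - x'||^2).

    Frequencies come from the kernel's Fourier transform, N(0, 2 * gamma * I);
    phase offsets are uniform on [0, 2*pi).
    """
    W = rng.normal(loc=0.0, scale=np.sqrt(2.0 * gamma), size=(dim, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)

    def z(X):
        # X has shape (n_samples, dim); the result has shape (n_samples, n_features)
        return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)

    return z


def exact_rbf_gram(X, gamma):
    """Exact kernel matrix K[i, j] = exp(-gamma * ||X[i] - X[j]||^2)."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-gamma * sq_dists)


rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
gamma = 0.5
z = make_rff_map(dim=3, n_features=20000, gamma=gamma, rng=rng)

Z = z(X)  # each row is computed from its own input vector only
K_approx = Z @ Z.T
K_exact = exact_rbf_gram(X, gamma)
print(np.max(np.abs(K_approx - K_exact)))  # small; shrinks roughly like 1/sqrt(n_features)
```

The same map `z` must be reused for every input, because sampling fresh frequencies per call would break the inner-product approximation. scikit-learn's `sklearn.kernel_approximation.RBFSampler` implements this idea.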

Detailed explanation (Wikipedia):

A radial basis function (RBF) is a real-valued function $\varphi$ whose value depends only on the distance from the origin, so that $\varphi(x) = \varphi(\|x\|)$. Alternatively, its value can depend on the distance from some other point $c$, called a center, so that $\varphi(x, c) = \varphi(\|x - c\|)$. Any function $\varphi$ that satisfies the property $\varphi(x) = \varphi(\|x\|)$ is a radial function. The norm is usually Euclidean distance, although other distance functions are also possible. For example, the Łukaszyk–Karmowski metric lets some radial functions avoid ill conditioning of the matrix solved to determine the coefficients $w_i$ (see below), because under this metric $\|x - x_i\|$ is always greater than zero.[1]

Sums of radial basis functions are typically used to approximate given functions. This approximation process can also be interpreted as a simple kind of neural network; this was the context in which they originally surfaced, in work by David Broomhead and David Lowe in 1988,[2][3] which stemmed from Michael J. D. Powell's seminal research from 1977.[4][5][6] RBFs are also used as a kernel in support vector classification.[7]

Types

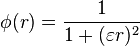

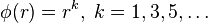

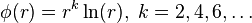

Commonly used types of radial basis functions include (writing  ):

):

The first term, that is used for normalisation of the Gaussian, is missing, because in our sum every Gaussian has a weight, so the normalisation is not necessary.

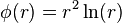

- Thin plate spline (a special polyharmonic spline):
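The two radial functions listed above can be written directly, as sketched here with NumPy. The names `gaussian`, `thin_plate_spline` and `radial` are illustrative. Defining the thin plate spline as 0 at $r = 0$ is an assumption based on the limit $r^2 \ln r \to 0$. The `radial` helper evaluates $\varphi(\|x - c\|)$ with the Euclidean norm, so points at equal distance from the center get equal values.

```python
import numpy as np


def gaussian(r, eps):
    """Gaussian RBF, phi(r) = exp(-(eps * r)^2), without a normalisation factor."""
    return np.exp(-(eps * np.asarray(r, dtype=float)) ** 2)


def thin_plate_spline(r):
    """Thin plate spline, phi(r) = r^2 * ln(r), with phi(0) = 0 (its limit as r -> 0)."""
    r = np.asarray(r, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        values = r ** 2 * np.log(r)  # nan at r = 0, replaced below
    return np.where(r > 0, values, 0.0)


def radial(phi, x, center):
    """Evaluate phi(||x - c||) with the Euclidean norm; x may hold one point per row."""
    diff = np.asarray(x, dtype=float) - np.asarray(center, dtype=float)
    return phi(np.linalg.norm(diff, axis=-1))


# Three points at the same distance (1.0) from the origin get the same value.
points = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
print(radial(lambda r: gaussian(r, eps=1.0), points, center=[0.0, 0.0]))
print(thin_plate_spline([0.0, 0.5, 1.0, 2.0]))
```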

Approximation

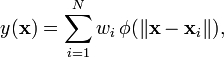

Radial basis functions are typically used to build up function approximations of the form

$$y(x) = \sum_{i=1}^{N} w_i \, \varphi(\|x - x_i\|)$$

where the approximating function y(x) is represented as a sum of N radial basis functions, each associated with a different center $x_i$ and weighted by an appropriate coefficient $w_i$. The weights $w_i$ can be estimated using the matrix methods of linear least squares, because the approximating function is linear in the weights.
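Here is a sketch of the least-squares fit. It assumes 1-D inputs, Gaussian basis functions with shape parameter `eps`, and centers placed on a regular grid. The text does not say how the centers are chosen, so the grid, the target function and all helper names are illustrative. Because y(x) is linear in the weights, fitting reduces to a single `numpy.linalg.lstsq` call on the design matrix whose entries are $\varphi(|x - x_i|)$, evaluated at each training input.

```python
import numpy as np


def gaussian_rbf(r, eps):
    """Gaussian basis function phi(r) = exp(-(eps * r)^2)."""
    return np.exp(-(eps * r) ** 2)


def design_matrix(x, centers, eps):
    """Phi[j, i] = phi(|x_j - c_i|) for 1-D training inputs x_j and centers c_i."""
    r = np.abs(x[:, None] - centers[None, :])
    return gaussian_rbf(r, eps)


def fit_weights(x, y, centers, eps):
    """Linear least squares for the weights w_i of y(x) = sum_i w_i phi(|x - c_i|)."""
    Phi = design_matrix(x, centers, eps)
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return w


def predict(x, centers, w, eps):
    return design_matrix(x, centers, eps) @ w


x_train = np.linspace(0.0, 2.0 * np.pi, 60)
y_train = np.sin(x_train)  # illustrative target function
centers = np.linspace(0.0, 2.0 * np.pi, 15)
eps = 1.0

w = fit_weights(x_train, y_train, centers, eps)
y_hat = predict(x_train, centers, w, eps)
print(np.max(np.abs(y_hat - y_train)))  # small residual on the training points
```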

Approximation schemes of this kind have been used particularly[citation needed] in time series prediction, in the control of nonlinear systems exhibiting sufficiently simple chaotic behaviour, and in 3D reconstruction in computer graphics (for example, hierarchical RBF and Pose Space Deformation).

RBF Network

The sum

$$y(x) = \sum_{i=1}^{N} w_i \, \varphi(\|x - x_i\|)$$

can also be interpreted as a rather simple single-layer type of artificial neural network called a radial basis function network, with the radial basis functions taking on the role of the activation functions of the network. It can be shown that any continuous function on a compact interval can in principle be interpolated with arbitrary accuracy by a sum of this form, if a sufficiently large number N of radial basis functions is used.

The approximant y(x) is differentiable with respect to the weights $w_i$. The weights could thus be learned using any of the standard iterative methods for neural networks.
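Because $\partial y / \partial w_i = \varphi(\|x - x_i\|)$, the gradient of a squared-error loss has a simple closed form. The sketch below therefore uses plain full-batch gradient descent, one of many possible iterative methods. It assumes 1-D inputs, fixed Gaussian centers, and an illustrative learning rate and iteration count, and all names are hypothetical. The step size 0.05 is below 2 divided by the largest eigenvalue of $\Phi^\top\Phi / n$, because that eigenvalue is at most the number of centers (15) when every $\varphi \le 1$. With that step size the loss decreases at every iteration.

```python
import numpy as np


def design_matrix(x, centers, eps):
    """Phi[j, i] = exp(-(eps * |x_j - c_i|)^2) for 1-D inputs (Gaussian basis assumed)."""
    r = np.abs(x[:, None] - centers[None, :])
    return np.exp(-(eps * r) ** 2)


def train_weights_gd(x, y, centers, eps, lr=0.05, n_iters=5000):
    """Full-batch gradient descent on the loss 0.5 * mean((Phi @ w - y)^2)."""
    Phi = design_matrix(x, centers, eps)
    w = np.zeros(len(centers))
    losses = []
    for _ in range(n_iters):
        residual = Phi @ w - y
        losses.append(0.5 * np.mean(residual ** 2))
        # dy/dw_i = phi(|x - c_i|), so the gradient is Phi^T residual / n
        grad = Phi.T @ residual / len(x)
        w -= lr * grad
    return w, losses


x = np.linspace(0.0, 2.0 * np.pi, 60)
y = np.sin(x)
centers = np.linspace(0.0, 2.0 * np.pi, 15)
w, losses = train_weights_gd(x, y, centers, eps=1.0)
print(losses[0], losses[-1])
```

In practice, stochastic or mini-batch variants are also common, and the centers and widths can be trained as well. This sketch keeps them fixed.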

Using radial basis functions in this manner yields a reasonable interpolation approach provided that the fitting set has been chosen such that it covers the entire range systematically (equidistant data points are ideal). However, without a polynomial term that is orthogonal to the radial basis functions, estimates outside the fitting set tend to perform poorly.
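The sketch below illustrates the extrapolation point. It reads "a polynomial term that is orthogonal to the radial basis functions" as the usual side condition $P^\top w = 0$ in RBF interpolation with polynomial augmentation. The Gaussian basis, the linear polynomial $a + bx$, the data and all helper names are assumptions made for illustration. On data with a linear trend, the augmented interpolant keeps following the trend outside the fitting set. A pure Gaussian interpolant decays towards 0 away from the data.

```python
import numpy as np


def gaussian(r, eps=1.0):
    return np.exp(-(eps * r) ** 2)


def fit_with_linear_term(x, y, eps=1.0):
    """Interpolate 1-D data with Gaussian RBFs centred at the data points plus a
    linear polynomial a + b*x, imposing the orthogonality condition P^T w = 0."""
    n = len(x)
    Phi = gaussian(np.abs(x[:, None] - x[None, :]), eps)
    P = np.column_stack([np.ones(n), x])  # polynomial basis: 1, x
    A = np.block([[Phi, P], [P.T, np.zeros((2, 2))]])
    rhs = np.concatenate([y, np.zeros(2)])
    sol = np.linalg.solve(A, rhs)
    return sol[:n], sol[n:]  # RBF weights w, polynomial coefficients (a, b)


def evaluate(x_new, centers, w, coef, eps=1.0):
    Phi = gaussian(np.abs(x_new[:, None] - centers[None, :]), eps)
    return Phi @ w + coef[0] + coef[1] * x_new


x = np.linspace(0.0, 10.0, 11)
y = 2.0 * x + 1.0  # linear trend in the data
x_out = np.array([15.0])  # a point outside the fitting set

w, coef = fit_with_linear_term(x, y)
with_poly = evaluate(x_out, x, w, coef)

# Pure RBF interpolation without the polynomial term, for comparison
w_pure = np.linalg.solve(gaussian(np.abs(x[:, None] - x[None, :])), y)
pure = gaussian(np.abs(x_out[:, None] - x[None, :])) @ w_pure

print(with_poly, pure)  # augmented fit follows the trend; pure fit decays towards 0
```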


References

- [1] Łukaszyk, S. (2004). "A new concept of probability metric and its applications in approximation of scattered data sets". Computational Mechanics, 33, 299–304.

- [2] Radial Basis Function networks.

- [3] Broomhead, David H.; Lowe, David (1988). "Multivariable Functional Interpolation and Adaptive Networks" (PDF). Complex Systems, 2: 321–355. Archived from the original (PDF) on 2014-07-14.

- [4] Powell, Michael J. D. (1977). "Restart procedures for the conjugate gradient method" (PDF). Mathematical Programming (Springer), 12 (1): 241–254.

- [5] Sahin, Ferat (1997). A Radial Basis Function Approach to a Color Image Classification Problem in a Real Time Industrial Application (PDF) (M.Sc.). Virginia Tech. p. 26: "Radial basis functions were first introduced by Powell to solve the real multivariate interpolation problem."

- [6] Broomhead & Lowe 1988, p. 347: "We would like to thank Professor M.J.D. Powell at the Department of Applied Mathematics and Theoretical Physics at Cambridge University for providing the initial stimulus for this work."

- [7] VanderPlas, Jake (6 May 2015). "Introduction to Support Vector Machines". O'Reilly. Retrieved 14 May 2015.

Further reading


- Buhmann, Martin D. (2003), Radial Basis Functions: Theory and Implementations, Cambridge University Press, ISBN 978-0-521-63338-3.

- Hardy, R.L., Multiquadric equations of topography and other irregular surfaces. Journal of Geophysical Research, 76(8):1905–1915, 1971.

- Hardy, R.L., 1990, Theory and applications of the multiquadric-biharmonic method: 20 years of discovery, 1968–1988, Comp. Math. Applic., Vol. 19, no. 8/9, pp. 163–208.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007), "Section 3.7.1. Radial Basis Function Interpolation", Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8.

- Sirayanone, S., 1988, Comparative studies of kriging, multiquadric-biharmonic, and other methods for solving mineral resource problems, PhD dissertation, Dept. of Earth Sciences, Iowa State University, Ames, Iowa.

- Sirayanone S. and Hardy, R.L., "The Multiquadric-biharmonic Method as Used for Mineral Resources, Meteorological, and Other Applications," Journal of Applied Sciences and Computations Vol. 1, pp. 437–475, 1995.
