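Overview

The unified monitoring platform is built on Prometheus, Grafana, and Alertmanager.

Each monitored system runs its own Prometheus node, which scrapes that system's metrics. The unified monitoring platform then pulls data from each system's Prometheus through Prometheus federation (the /federate endpoint). Grafana provides the unified dashboards, and Alertmanager handles alerting centrally. Each system's Prometheus keeps 24 hours of data, while the unified platform retains data long term. The downstream Prometheus instances are told apart by the env label.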
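To make the federation step concrete, the following is a minimal sketch of the URL the main Prometheus requests from a downstream instance. The function name `federate_url` is hypothetical; the host and env values are the ones used in the scrape configuration later in this document.

```python
from urllib.parse import urlencode


def federate_url(host: str, env: str, scheme: str = "http") -> str:
    """Build the /federate URL that selects every series carrying env=<env>."""
    selector = f'{{env="{env}"}}'                 # PromQL series selector
    query = urlencode({"match[]": selector})      # percent-encodes braces and quotes
    return f"{scheme}://{host}/federate?{query}"


if __name__ == "__main__":
    print(federate_url("12.33.105.164:9090", "prod-system2"))
```

Opening the printed URL against a downstream Prometheus is a quick way to confirm that it exposes series with the expected env label before wiring it into the main instance.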

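Resource planning

| No. | Service | Server resources | Description |
|---|---|---|---|
| 1 | Main Prometheus | 4C/16G/1T | Main Prometheus service; stores the metrics of every system. An env label is added at scrape time to tell the systems apart |
| 2 | alertmanager | | Alert delivery service |
| 3 | grafana | | Unified dashboard service |
| 4 | dingtalk | | DingTalk alert webhook service |
| 5 | Per-system Prometheus process | Memory < 200M, disk < 300M | Deployed on each system's machines, one instance per business system |
| 6 | Per-host exporter processes | Memory < 50M | Deployed on each system's machines |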

Prometheus node

Each system's Prometheus storage node keeps 24 hours of Prometheus data.

Installation

- Download Prometheus

wget https://github.com/prometheus/prometheus/releases/download/v2.38.0/prometheus-2.38.0.linux-amd64.tar.gz

# Upload to /root and extract

tar -zxvf prometheus-2.38.0.linux-amd64.tar.gz

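- Register the systemd service (Prometheus without authentication; enabling authentication and TLS is described later)

## --web.enable-lifecycle allows hot reloading: curl -X POST 10.127.130.189:9090/-/reload

## --web.listen-address=0.0.0.0:9090 sets the listen address and port of the web UI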

vim /usr/lib/systemd/system/prometheus.service

[Unit]

Description=Prometheus Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/root/prometheus-2.38.0

ExecStart=/root/prometheus-2.38.0/prometheus --storage.tsdb.path=/data/prometheus --config.file=/root/prometheus-2.38.0/prometheus.yml --storage.tsdb.retention.time=24h --log.format=json --log.level=info --web.enable-lifecycle --web.listen-address=0.0.0.0:9090

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target
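With --web.enable-lifecycle set in the unit above, a configuration change can be applied without restarting the service. The sketch below is a Python equivalent of the curl command in the comment; the helper names are hypothetical, and the address is the one from that comment.

```python
import urllib.request


def build_reload_request(base_url: str) -> urllib.request.Request:
    """Return a POST request for the /-/reload lifecycle endpoint."""
    url = base_url.rstrip("/") + "/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")


def reload_prometheus(base_url: str, timeout: float = 5.0) -> int:
    """Send the reload request and return the HTTP status code."""
    with urllib.request.urlopen(build_reload_request(base_url), timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    # Only builds and prints the request; call reload_prometheus() to send it.
    req = build_reload_request("http://10.127.130.189:9090")
    print(req.get_method(), req.full_url)
```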

Configuration

- The following is part of the main Prometheus scrape configuration, as an example

- job_name: 'prod-system1-os'
  scrape_interval: 15s
  static_configs:
    - targets:
        - 124.170.14.51:9200
        - 124.170.14.51:9100
      labels:
        env: "prod-system1"
  relabel_configs:
    # The relabel rules below rewrite the instance label
    - source_labels: ['__address__']
      regex: '(.*):9200'
      replacement: '10.117.22.164'
      target_label: 'instance'
      action: replace
    - source_labels: ['__address__']
      regex: '(.*):9100'
      replacement: '10.117.22.71'
      target_label: 'instance'
      action: replace

## system2 production

# system2 demonstrates Prometheus federation: the main Prometheus scrapes the data of another system's Prometheus. This is a real advantage when monitoring network-isolated systems, because it avoids opening scrape access to every exporter and improves security.
- job_name: 'prod-system2'
  scrape_interval: 15s
  honor_labels: true
  metrics_path: '/federate'
  scheme: http
  params:
    'match[]':
      - '{env="prod-system2"}'
  static_configs:
    - targets:
        - '12.33.105.164:9090'
      labels:
        env: "prod-system2"
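The relabel rules in the prod-system1-os job can be reasoned about with a small simulation. This is a simplified sketch of the `replace` action only, assuming that Prometheus anchors the regex against the joined source label values; Python's `re` is used in place of Go's RE2, and the function names are hypothetical.

```python
import re


def relabel_replace(labels, source_labels, regex, replacement, target_label, separator=";"):
    """Apply one relabel rule with action=replace and return a new label dict."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)            # the regex must match the whole value
    if match is None:
        return dict(labels)                       # rule does not apply
    # Translate $1 / ${1} references into Python's \g<1> syntax.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


# The two rules from the prod-system1-os job above.
RULES = [
    (["__address__"], r"(.*):9200", "10.117.22.164", "instance"),
    (["__address__"], r"(.*):9100", "10.117.22.71", "instance"),
]


def apply_rules(labels, rules=RULES):
    for source_labels, regex, replacement, target in rules:
        labels = relabel_replace(labels, source_labels, regex, replacement, target)
    return labels


if __name__ == "__main__":
    print(apply_rules({"__address__": "124.170.14.51:9200"}))
```

Each target is still scraped at its `__address__`; only the `instance` label shown in dashboards and alerts changes.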

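Authentication and encryption

Prometheus has no username/password login by default, which is unsafe in many deployments. This section describes how to enable authentication and TLS encryption for Prometheus: httpd-tools is used to generate the password hash, and openssl issues a private (self-signed) certificate.

Add basic auth and TLS encryption

#### Create the password hash

# If httpd-tools is not installed: yum install -y httpd-tools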

[root@VM_2-44 ~]# rpm -qa|grep httpd-tools

httpd-tools-2.4.6-97.el7.centos.x86_64

[root@VM_2-44 ~]# htpasswd -nBC 12 '' | tr -d ':\n'

New password: # the password is set to 123456 here

Re-type new password:

$2y$12$6yR84yKSqoYv3B2D70QAOuqggT0QvdpMp1wUNfLwBo63oLYWc1AYy

#### Generate the SSL certificate and private key

openssl req -new -newkey rsa:2048 -days 36500 -nodes -x509 -keyout prometheus.key -out prometheus.crt

## Create the config file config.yml in WorkingDirectory=/root/prometheus-2.38.0

basic_auth_users:
  admin: $2y$12$Sk0Jpm9wBNC/z1VEN4MHkuIeeYSsJaujXuQfTwnpyDeHM53VZ9t9K
tls_server_config:
  cert_file: prometheus.crt
  key_file: prometheus.key

## Edit /usr/lib/systemd/system/prometheus.service

Append --web.config.file=/root/prometheus-2.38.0/config.yml to the ExecStart line

## Update the scrape configuration to add authentication and TLS

scrape_configs:
  - job_name: "prometheus"
    basic_auth:
      username: admin
      # Must be the plaintext password whose bcrypt hash is stored in config.yml
      password: sdadmin_110
    scheme: https
    tls_config:
      ca_file: prometheus.crt
      insecure_skip_verify: true
    static_configs:
      - targets: ["localhost:9090"]
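After enabling basic auth and TLS it helps to check the endpoint by hand. The sketch below shows what a client has to send, assuming the self-signed certificate generated above; the helper names and the example password are hypothetical, and `tls_context()` without a CA file mirrors `insecure_skip_verify: true`.

```python
import base64
import ssl
import urllib.request
from typing import Optional


def basic_auth_header(username: str, password: str) -> str:
    """Return the value of the HTTP Authorization header for basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_metrics_request(url: str, username: str, password: str) -> urllib.request.Request:
    req = urllib.request.Request(url)
    req.add_header("Authorization", basic_auth_header(username, password))
    return req


def tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Verify against ca_file when given; otherwise skip verification."""
    if ca_file:
        return ssl.create_default_context(cafile=ca_file)
    ctx = ssl.create_default_context()
    ctx.check_hostname = False        # must be disabled before verify_mode is relaxed
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch_metrics(url: str, username: str, password: str, ca_file: Optional[str] = None) -> str:
    req = build_metrics_request(url, username, password)
    with urllib.request.urlopen(req, context=tls_context(ca_file), timeout=5) as resp:
        return resp.read().decode("utf-8")
```

Calling `fetch_metrics("https://localhost:9090/metrics", "admin", "<password>")` should return the metrics text, while a wrong password should fail with HTTP 401.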

The exporters also support TLS and basic_auth, configured the same way.

# Scrape configuration example

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: "prometheus"

basic_auth:

username: admin

password: sdadmin_110

scheme: https

tls_config:

ca_file: prometheus.crt

insecure_skip_verify: true

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ["localhost:9090"]

- job_name: "prod-mh-nodes"

static_configs:

- targets:

- 10.88.10.21:9100

- 10.88.10.22:9100

- 10.88.10.23:9100

- 10.88.10.27:9100

- 10.88.10.28:9100

- 10.88.10.29:9100

- 10.88.10.30:9100

labels:

env: "prod-mh"

- job_name: "prod-mh-mysql"

static_configs:

- targets:

- 10.88.10.23:9104

- 10.88.10.23:9105

labels:

env: "prod-mh"

relabel_configs:

- source_labels: ['__address__']

regex: '(.*):9104'

replacement: '10.88.10.27'

target_label: 'instance'

action: replace

- source_labels: ['__address__']

regex: '(.*):9105'

replacement: '10.88.10.28'

target_label: 'instance'

action: replace

- job_name: 'prod-mh-redis'

static_configs:

- targets:

- redis://10.88.10.29:6379

- redis://10.88.10.30:6379

labels:

env: "prod-mh"

# Process monitoring

- job_name: "prod-mh-process"

static_configs:

- targets:

- 10.88.10.21:9256

- 10.88.10.22:9256

labels:

env: "prod-mh"

Grafana configuration

https://dl.grafana.com/enterprise/release/grafana-enterprise-8.1.5-1.x86_64.rpm

yum install grafana-enterprise-8.1.5-1.x86_64.rpm

Start the service: systemctl start grafana-server

Configure the ClickHouse data source in Grafana

https://grafana.com/grafana/plugins/grafana-clickhouse-datasource/?tab=installation

wget https://storage.googleapis.com/integration-artifacts/grafana-clickhouse-datasource/release/2.0.4/linux/grafana-clickhouse-datasource-2.0.4.linux_amd64.zip

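Check the plugin path, unzip the downloaded plugin into the plugin directory, and restart Grafana

The Grafana configuration file is /etc/grafana/grafana.ini

To migrate, copy out the data file; it is a single sqlite3 file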

Alerting service

Installation

Alertmanager installation

Download

https://github.com/prometheus/alertmanager/releases/download/v0.24.0/alertmanager-0.24.0.linux-amd64.tar.gz

## Register the systemd service

Start the service: systemctl start alertmanager

vim /usr/lib/systemd/system/alertmanager.service

# /usr/lib/systemd/system/alertmanager.service

[Unit]

Description=alertmanager Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/usr/local/prometheus/

ExecStart=/usr/local/prometheus/alertmanager --config.file=/usr/local/prometheus/alertmanager.yaml --cluster.listen-address=0.0.0.0:9094 --storage.path=/data/alertmanager --data.retention=24h --web.listen-addr

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

Configuration

global:
  # After this time, an alert that has not been updated is declared resolved (that is, Prometheus has stopped sending it to Alertmanager)
  resolve_timeout: 5m
  # Email settings
  smtp_smarthost: 'smtp.qq.com:465'
  smtp_from: '1111@qq.com'
  smtp_auth_username: '1111@qq.com'
  smtp_auth_password: 'password'
  smtp_require_tls: false

# Every alert enters the root route; the sub-routes under it decide how alerts are distributed
route:
  # Grouping aggregates several alerts into one notification, so that you do not receive a stream of consecutive messages.
  # Incoming alerts are grouped by label (labels are defined in the Prometheus rules). For example,
  # alerts that all carry cluster=A and alertname=LatencyHigh end up in one group.
  #
  # To disable grouping, write group_by: ['...']
  group_by: ['alertname', 'cluster', 'service']
  # How long to wait before sending the first notification for a new group, so that more alerts of the same group can arrive and be sent together.
  group_wait: 30s
  # After the first notification, how long to wait before sending a notification about new alerts added to the group.
  group_interval: 1m
  # How long to wait before re-sending a notification for a group whose alerts are unchanged and still firing. With 3h, for example, the same alerts are sent again only after 3 hours. It is set to 1m here.
  repeat_interval: 1m
  # Notifications need a receiver. Receivers are defined under receivers and can be email, webhook, pagerduty, wechat, and so on. One receiver can use several delivery methods.
  # Default receiver
  receiver: dingding-alarm
  # Sub-routes inherit the properties of the root route (the settings above) and may override them
  routes:
    # Match alert labels with regular expressions
    - match_re:
        env: prod-prometheus
      # Use the dingding-prod-prometheus receiver
      receiver: dingding-prod-prometheus
    # One sub-route per system follows
    - match_re:
        env: prod-system
      receiver: dingding-prod-system
    - match_re:
        env: prod-system1
      receiver: dingding-prod-system1
    - match_re:
        env: prod-system2
      receiver: dingding-prod-system2
    - match_re:
        env: prod-system3
      receiver: dingding-prod-system3
    - match_re:
        env: prod-system4
      receiver: dingding-prod-system4
    - match_re:
        # Matches alerts whose env label starts with test
        env: ^(test).*$
      # Use the dingding-alarm receiver
      receiver: dingding-alarm
      # Nested sub-route: when the parent route matches, this route further selects alerts with severity=critical and sends them to its own receiver (also dingding-alarm here); alerts it does not match are handled by the parent route.
      routes:
        - match:
            severity: critical
          receiver: dingding-alarm

# Inhibition lets one firing alert mute a set of other alerts; it can be thought of as an alert dependency. For example, when a database server loses power, the database alerts, network alerts, and so on all fire. An inhibit rule can ensure that
# once the server itself is down, the other alerts are not sent.
#inhibit_rules:
## Meaning of the rule below: when several alerts share the same alertname, cluster and service labels and an alert with severity: 'critical' fires, the alerts with severity: 'warning' are suppressed.
#- source_match: # source alert (as I understand it, this alert suppresses the alerts matched by target_match)
#    severity: 'critical' # alerts with severity=critical act as the source
#  target_match: # target alert (the alert being suppressed)
#    severity: 'warning' # only alerts with severity=warning are suppressed
#  equal: ['alertname', 'cluster', 'service'] # these labels must have equal values in the source and target alerts for the inhibition to apply

# Receivers
receivers:
  # Receiver name. Alerts here go to DingTalk instead of email; each system has its own DingTalk robot.
  - name: 'dingding-alarm'
    webhook_configs:
      - url: 'http://127.0.0.1:8060/dingtalk/webhook/send'
        send_resolved: true
  - name: 'dingding-prod-prometheus'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/prod-prometheus/send'
        send_resolved: true
  - name: 'dingding-prod-system'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/prod-system/send'
        send_resolved: true
  - name: 'dingding-prod-system1'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/prod-system1/send'
        send_resolved: true
  - name: 'dingding-prod-system2'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/prod-system2/send'
        send_resolved: true
  - name: 'dingding-prod-system3'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/prod-system3/send'
        send_resolved: true
  - name: 'dingding-prod-system4'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/prod-system4/send'
        send_resolved: true
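How an alert travels through the routing tree above can be sketched in a few lines. This is a simplified model, not Alertmanager's implementation: it assumes regex matchers are anchored, that the first matching child wins, and it ignores `continue`, grouping, and timing. The `ROUTE` dict is a trimmed copy of the configuration above and `pick_receiver` is a hypothetical helper.

```python
import re


def _matches(route, labels):
    """True when every match / match_re entry of the route fits the alert labels."""
    for name, value in route.get("match", {}).items():
        if labels.get(name, "") != value:
            return False
    for name, pattern in route.get("match_re", {}).items():
        if re.fullmatch(pattern, labels.get(name, "")) is None:
            return False
    return True


def pick_receiver(route, labels, inherited=None):
    """Return the receiver of the deepest first-matching route."""
    receiver = route.get("receiver", inherited)
    for child in route.get("routes", []):
        if _matches(child, labels):
            return pick_receiver(child, labels, receiver)
    return receiver


ROUTE = {
    "receiver": "dingding-alarm",
    "routes": [
        {"match_re": {"env": "prod-prometheus"}, "receiver": "dingding-prod-prometheus"},
        {"match_re": {"env": "prod-system"}, "receiver": "dingding-prod-system"},
        {"match_re": {"env": "prod-system1"}, "receiver": "dingding-prod-system1"},
        {
            "match_re": {"env": "^(test).*$"},
            "receiver": "dingding-alarm",
            "routes": [{"match": {"severity": "critical"}, "receiver": "dingding-alarm"}],
        },
    ],
}

if __name__ == "__main__":
    print(pick_receiver(ROUTE, {"env": "prod-system1", "alertname": "NodeDown"}))
```

Because the match is anchored, `env: prod-system` does not capture alerts labelled `prod-system1`, which is why each system needs its own sub-route.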

DingTalk webhook service installation

Installation

Download URL

https://github.com/timonwong/prometheus-webhook-dingtalk/releases

## Register the systemd service

Start the service: systemctl start prometheus-webhook-dingtalk

vim /usr/lib/systemd/system/prometheus-webhook-dingtalk.service

# /usr/lib/systemd/system/prometheus-webhook-dingtalk.service

[Unit]

Description=prometheus-webhook-dingtalk

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/usr/local/prometheus/

ExecStart=/usr/local/prometheus/prometheus-webhook-dingtalk --web.enable-ui --web.enable-lifecycle --config.file=/usr/local/prometheus/prometheus-webhook-dingtalk.yml

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

Configuration

- webhook.yml

## Request timeout
timeout: 5s

## Customizable templates path
templates:
  - /usr/local/prometheus/notification.tmpl
  - /usr/local/prometheus/test.tmpl
  - /usr/local/prometheus/prod-system.tmpl
  - /usr/local/prometheus/prod-system1.tmpl
  - /usr/local/prometheus/prod-system2.tmpl
  - /usr/local/prometheus/prod-system3.tmpl
  - /usr/local/prometheus/prod-system4.tmpl
  - /usr/local/prometheus/prod-prometheus.tmpl

## You can also override default template using `default_message`
## The following example to use the 'legacy' template from v0.3.0
default_message:
  title: '{{ template "webhook_configs.default.subject" . }}'
  text: '{{ template "webhook_configs.default.html" . }}'

# Target names must correspond to the tmpl file names above
targets:
  prod-prometheus:
    url: https://oapi.dingtalk.com/robot/send?access_token=xx
    secret: xx
    message:
      title: '{{ template "ding.link.title" . }}'
      text: '{{ template "ding.link.content-prod-prometheus" . }}'
  prod-system:
    url: https://oapi.dingtalk.com/robot/send?access_token=xx
    secret: xx
    message:
      # The template names below must match the ones defined in the alert tmpl file; they are substituted when the message is rendered
      title: '{{ template "ding.link.title" . }}'
      text: '{{ template "ding.link.content-prod-system" . }}'

# One template per system
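Each Alertmanager receiver URL has the form /dingtalk/<target>/send, and <target> has to exist under `targets` in this file. A small consistency check along these lines can catch a receiver that points at a missing target; the helper names are hypothetical and the sample values are taken from the two configurations in this document.

```python
import re


def target_from_url(url):
    """Extract <target> from http://host:port/dingtalk/<target>/send, or None."""
    m = re.search(r"/dingtalk/([^/]+)/send$", url)
    return m.group(1) if m else None


def missing_targets(receiver_urls, configured_targets):
    """Return the targets referenced by receivers but absent from the webhook config."""
    wanted = {target_from_url(u) for u in receiver_urls}
    wanted.discard(None)
    return sorted(wanted - set(configured_targets))


if __name__ == "__main__":
    urls = [
        "http://127.0.0.1:8060/dingtalk/webhook/send",
        "http://localhost:8060/dingtalk/prod-prometheus/send",
        "http://localhost:8060/dingtalk/prod-system/send",
        "http://localhost:8060/dingtalk/prod-system1/send",
    ]
    print(missing_targets(urls, ["prod-prometheus", "prod-system"]))
```

With the excerpt shown here, the check reports the targets that the truncated example omits, which is a reminder to add one target block per system.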

- Example alert template /usr/local/prometheus/prod-prometheus.tmpl

{{ define "__subject" }}[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] {{ .GroupLabels.SortedPairs.Values | join " " }} {{ if gt (len .CommonLabels) (len .GroupLabels) }}({{ with .CommonLabels.Remove .GroupLabels.Names }}{{ .Values | join " " }}{{ end }}){{ end }}{{ end }}

{{ define "__alertmanagerURL" }}https://grafana.cn/ {{ end }}

{{ define "__text_alert_list" }}{{ range . }}

**Labels** {{ range .Labels.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}

**Annotations** {{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}

**Source:** [{{ .GeneratorURL }}]({{ .GeneratorURL }}) {{ end }}{{ end }}

{{ define "default.__text_alert_list1" }}{{ range . }}

---

**Severity:** {{ .Labels.severity | upper }}

**Triggered at:** {{ dateInZone "2006.01.02 15:04:05" (.StartsAt) "Asia/Shanghai" }}

**System:** xx production environment

**Event details:** {{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}{{ end }}

{{ end }}

{{ define "default.__text_alertresolve_list1" }}{{ range . }}

---

**Severity:** {{ .Labels.severity | upper }}

**Triggered at:** {{ dateInZone "2006.01.02 15:04:05" (.StartsAt) "Asia/Shanghai" }}

**Resolved at:** {{ dateInZone "2006.01.02 15:04:05" (.EndsAt) "Asia/Shanghai" }}

**Event details:** {{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}{{ end }}

{{ end }}

{{/* Default */}}

{{ define "default.title" }}{{ template "__subject" . }}{{ end }}

{{ define "default.content1" }}#### \[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}\] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**

{{ if gt (len .Alerts.Firing) 0 -}}

**=====xx production environment alert=====** {{ template "default.__text_alert_list1" .Alerts.Firing }}

{{- end }}

{{ if gt (len .Alerts.Resolved) 0 -}}

**=====Resolved=====** {{ template "default.__text_alertresolve_list1" .Alerts.Resolved }}

{{- end }}

{{- end }}

{{/* Legacy */}}

{{ define "legacy.title" }}{{ template "__subject" . }}{{ end }}

{{ define "legacy.content" }}#### \[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}\] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**

{{ template "__text_alert_list" .Alerts.Firing }}

{{- end }}

{{/* Following names for compatibility */}}

{{ define "ding.link.title" }}{{ template "default.title" . }}{{ end }}

{{ define "ding.link.content-prod-prometheus" }}{{ template "default.content1" . }}{{ end }}

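Monitoring services

Node monitoring

Download node_exporter and deploy it, exposing port 9100 to the Prometheus node (the unit file below overrides the port with --web.listen-address)

https://github.com/prometheus/node_exporter/releases/download/v1.4.0-rc.0/node_exporter-1.4.0-rc.0.linux-amd64.tar.gz

Extract the package and rename the directory to /root/node_exporter (the unit file below uses /usr/local/node_exporter, so keep the two paths consistent)

## Register the systemd service

Start the service with systemctl start node-exporter, then check it with systemctl status node-exporter

vim /usr/lib/systemd/system/node-exporter.service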

# /lib/systemd/system/node-exporter.service

[Unit]

Description=Node exporter

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/usr/local/node_exporter

# Custom port (node_exporter defaults to 9100)

ExecStart=/usr/local/node_exporter/node_exporter --web.listen-address=:9101

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

MySQL monitoring

Download mysqld_exporter and deploy it, exposing port 9104 to the Prometheus node

https://github.com/prometheus/mysqld_exporter/releases/download/v0.14.0/mysqld_exporter-0.14.0.linux-amd64.tar.gz

Upload, extract, and rename the directory to /root/mysqld_exporter

Create a monitoring account in MySQL

[root@localhost ~]# mysql -u root -p***********

CREATE USER 'exporter'@'10.10.0.%' IDENTIFIED BY '***' WITH MAX_USER_CONNECTIONS 3;

GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'10.10.0.%';

FLUSH PRIVILEGES;

## Register the systemd service

Start the service with systemctl start mysqld-exporter, then check it with systemctl status mysqld-exporter

vim /usr/lib/systemd/system/mysqld-exporter.service

[Unit]

Description=mysqld exporter

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/root/mysqld_exporter

Environment="DATA_SOURCE_NAME=exporter:Godlike_110@(10.10.0.27:3306)/mysql"

ExecStart=/root/mysqld_exporter/mysqld_exporter

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target
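The DATA_SOURCE_NAME value follows the Go MySQL driver's DSN layout, user:password@(host:port)/database, where the address must be closed by `)` directly before the slash. The sketch below builds that string and the matching systemd line; the function names are hypothetical, and doubling `%` reflects systemd's specifier escaping in unit files.

```python
def mysqld_exporter_dsn(user, password, host, port=3306, database=""):
    """Build a DSN of the form user:password@(host:port)/database."""
    return f"{user}:{password}@({host}:{port})/{database}"


def environment_line(dsn):
    """Render the systemd Environment= line; a literal % must be written as %%."""
    return 'Environment="DATA_SOURCE_NAME=' + dsn.replace("%", "%%") + '"'


if __name__ == "__main__":
    dsn = mysqld_exporter_dsn("exporter", "secret", "10.10.0.27")
    print(environment_line(dsn))
```

Leaving `database` empty yields a DSN ending in `)/`, which is enough for the exporter because the granted privileges are global.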

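Redis monitoring

# Download redis_exporter and deploy it, exposing port 9121 to the Prometheus node

https://github.com/oliver006/redis_exporter/releases/download/v1.42.0/redis_exporter-v1.42.0.linux-amd64.tar.gz

# Upload, extract, and rename the directory to /root/redis_exporter

## Register the systemd service

Start the service with systemctl start redis-exporter, then check it with systemctl status redis-exporter

vim /usr/lib/systemd/system/redis-exporter.service

## There are two ways to write this unit, each with a matching Prometheus scrape configuration

## Option 1: Environment only sets the password, and Prometheus passes the Redis target to scrape through relabeling; all Redis instances must share the same password. ExecStart=/root/redis_exporter/redis_exporte

## Option 2: ExecStart=/data/redisexport-hy/redis_exporter -redis.addr 10.10.10.1:6379 -redis.password xxx -web.listen-address :3389

## Option 2 is easier to understand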

[Unit]

Description=redis exporter

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/root/redis_exporter

Environment="REDIS_PASSWORD=xxxx"

#/data/redisexport-hy/redis_exporter -redis.addr 10.10.10.1:6379 -redis.password xxx -web.listen-address :3389

ExecStart=/root/redis_exporter/redis_exporter

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

- Scrape configuration

## Prometheus configuration for option 1 (if the primary and standby clusters share one password, relabeling can change the target passed to the exporter's API, so a single exporter monitors several Redis instances)
- job_name: 'prod-xxx-redis'
  static_configs:
    - targets:
        - redis://10.10.0.219:6379 # redis1
        - redis://10.10.0.130:6379 # redis2
      labels:
        env: "prod-xxx"
  params:
    check-keys: ["metrics:*"]
  metrics_path: /scrape
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: 10.10.0.123:9121 # address of the exporter API

## Option 2
- job_name: "prod-xxxx-redis"
  static_configs:
    - targets:
        - 10.10.10.1:9121 # scrape the exporter API directly
      labels:
        env: "prod-xxx"
  relabel_configs:
    - source_labels: ['__address__']
      regex: '(.*)'
      replacement: '10.10.10.12' # relabel: replace the exporter address with the Redis address so dashboards are easier to read
      target_label: 'instance'
      action: replace
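The three relabel rules of option 1 are easier to follow when traced step by step. The sketch below mimics them for one target and prints the URL Prometheus ends up requesting; the function names are hypothetical and the addresses are the ones from the configuration above.

```python
from urllib.parse import urlencode


def multi_target_relabel(address, exporter_address):
    """Trace the three relabel rules of option 1 for a single target."""
    labels = {"__address__": address}
    labels["__param_target"] = labels["__address__"]   # rule 1: target becomes ?target=...
    labels["instance"] = labels["__param_target"]      # rule 2: instance shows the Redis address
    labels["__address__"] = exporter_address           # rule 3: the scrape goes to the exporter
    return labels


def scrape_url(labels, metrics_path="/scrape", scheme="http"):
    """Build the URL that is actually requested for the relabelled target."""
    query = urlencode({"target": labels["__param_target"]})
    return f"{scheme}://{labels['__address__']}{metrics_path}?{query}"


if __name__ == "__main__":
    relabelled = multi_target_relabel("redis://10.10.0.219:6379", "10.10.0.123:9121")
    print(relabelled["instance"])
    print(scrape_url(relabelled))
```

The request always goes to the exporter, while the `instance` label keeps the Redis address, so one exporter can serve every Redis instance that shares the password.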

Process monitoring

# Download process-exporter and deploy it, exposing port 9256 to the Prometheus node

https://github.com/ncabatoff/process-exporter/releases/download/v0.7.10/process-exporter-0.7.10.linux-amd64.tar.gz

# Upload, extract, and rename the directory to /root/process-exporter

# Add the process configuration file /root/process-exporter/process.yml

# The command below lists the processes to monitor and formats them as config entries that can be pasted into the yml

#ps -ef|grep java|grep -v grep |awk '{print $10}'|awk -F "-1" '{print $1}'|awk -F "/" '{print " - name: \"{{.Matches}}\"\n cmdline:\n - "$NF""}'

process_names:
  - name: "{{.Matches}}"
    cmdline:
      - 'processkey'
  - name: "{{.Matches}}"
    cmdline:
      - 'processkey'
  - name: "{{.Matches}}"
    cmdline:
      - 'processkey'
  - name: "{{.Matches}}"
    cmdline:
      - 'processkey'
  - name: "{{.Matches}}"
    cmdline:
      - 'processkey'
  - name: "{{.Matches}}"
    cmdline:
      - 'processkey'
  - name: "{{.Matches}}"
    cmdline:
      - 'java'
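As an alternative to the awk pipeline, the process.yml entries can be generated from a list of keywords. This is a minimal sketch; `render_process_names` is a hypothetical helper, and it assumes the keywords contain no single quotes.

```python
def render_process_names(keywords):
    """Render a process.yml body with one cmdline matcher per keyword."""
    lines = ["process_names:"]
    for keyword in keywords:
        lines.append('  - name: "{{.Matches}}"')   # group name template used in this document
        lines.append("    cmdline:")
        lines.append(f"      - '{keyword}'")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_process_names(["processkey", "java"]), end="")
```

Order matters in the generated file: process-exporter assigns a process to the first entry that matches, so the generic `java` entry belongs last.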

## Register the systemd service

Start the service with systemctl start process-exporter, then check it with systemctl status process-exporter

vim /usr/lib/systemd/system/process-exporter.service

[Unit]

Description=process exporter

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/root/process-exporter

ExecStart=/root/process-exporter/process-exporter -config.path /root/process-exporter/process.yml -web.listen-address :9256

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

----------------------------------

Prometheus scrape configuration

- job_name: "prod-xx-process"
  static_configs:
    - targets:
        - 10.10.10.10:9256
      labels:
        env: "prod-xx"

Grafana dashboard ID: 249

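Kafka

1. Using kafka-eagle-bin

1. Download: https://github.com/smartloli/kafka-eagle-bin/archive/refs/heads/master.zip

It is also available from the official site: http://www.kafka-eagle.org/index.html

2. Enable JMX in Kafka; adding it to kafka-server-start.sh is recommended

# Below the line export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G", add the line export JMX_PORT="9999", then restart Kafka

Or edit kafka-run-class.sh: vim kafka-run-class.sh

# Add the port variable

JMX_PORT=9999

3. Edit the kafka-eagle configuration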

vim system-config.properties

############################################################################
# ZooKeeper ensemble used by the Kafka cluster
############################################################################
kafka.eagle.zk.cluster.alias=cluster1
cluster1.zk.list=xxxxxx:2181,xxxxxx:2181,xxxxxx:2181
############################################################################
# Number of ZooKeeper client threads, 25 by default
############################################################################
kafka.zk.limit.size=25
############################################################################
# Browser access port of Kafka Eagle (port 80);
# change it to another port to avoid conflicts with other services
############################################################################
kafka.eagle.webui.port=18048
############################################################################
# Where consumer offsets are stored. Before version 0.9, consumer information
# was stored in ZooKeeper by default, so the storage type is zookeeper; from
# 0.10 on it is stored in Kafka by default, so the storage type must be kafka.
# Note: this setting names the default storage location.
# Our offsets are stored in zk; if this is set to zk, the dashboard shows no consumer information
############################################################################
cluster1.kafka.eagle.offset.storage=kafka
############################################################################
# Enable performance monitoring; data is kept for 30 days by default
############################################################################
kafka.eagle.metrics.charts=true
kafka.eagle.metrics.retain=30
############################################################################
# If KSQL topic queries fail, try enabling this property; it is off by default
############################################################################
kafka.eagle.sql.fix.error=true
############################################################################
# Token the super administrator uses to delete topics
############################################################################
kafka.eagle.topic.token=keadmin
############################################################################
# Enable this if Kafka uses SASL. SASL is an authentication protocol that
# protects the credentials sent when a client logs in to the server
############################################################################
kafka.eagle.sasl.enable=true
kafka.eagle.sasl.protocol=SASL_PLAINTEXT
kafka.eagle.sasl.mechanism=PLAIN
############################################################################
# Kafka Eagle stores its data in SQLite by default; MySQL is used here
############################################################################
#kafka.eagle.driver=org.sqlite.JDBC
#kafka.eagle.url=jdbc:sqlite:/Users/dengjie/workspace/kafka-egale/db/ke.db
#kafka.eagle.username=root
#kafka.eagle.password=root
kafka.eagle.driver=com.mysql.jdbc.Driver
kafka.eagle.url=jdbc:mysql://xxxxxx:3306/kafka_eagle?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull
kafka.eagle.username=root
kafka.eagle.password=xxxxxx

4. Set the environment variable

export KE_HOME=/data/kafka-eagle-bin-master/efak-web-3.0.2

5. Start: bin/ke.sh start; stop: bin/ke.sh stop

* EFAK Service has started success.

* Welcome, Now you can visit 'http://10.100.13.3:8048'

* Account:admin ,Password:123456

2. kafka_exporter

Download kafka_exporter and deploy it

https://github.com/danielqsj/kafka_exporter/releases/download/v1.6.0/kafka_exporter-1.6.0.linux-amd64.tar.gz

## Register the systemd service

Start the service with systemctl start kafka-exporter, then check it with systemctl status kafka-exporter

vim /usr/lib/systemd/system/kafka-exporter.service

# /lib/systemd/system/kafka-exporter.service

[Unit]

Description=kafka exporter

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/data/prometheus/kafka_exporter-1.6.0.linux-amd64

# Custom port

ExecStart=/data/prometheus/kafka_exporter-1.6.0.linux-amd64/kafka_exporter --web.listen-address=:9308 --kafka.server=10.100.13.3:9092 --zookeeper.server=localhost:2181

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

Grafana dashboard ID: 7589

- job_name: "prod-kafka"
  static_configs:
    - targets: ['10.100.13.3:9308']

3. kminion

1. Download

https://github.com/redpanda-data/kminion/releases/download/v2.2.4/kminion_2.2.4_linux_amd64.tar.gz

#https://github.com/redpanda-data/kminion

2. Set the environment variable (export CONFIG_FILEPATH=); here it is set in the systemd unit

vim /usr/lib/systemd/system/kminion.service

[Unit]

Description=kminion exporter

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=simple

WorkingDirectory=/data/prometheus/kminion_2.2.4

# Path to the kminion config file

Environment="CONFIG_FILEPATH=/data/prometheus/kminion_2.2.4/config.yaml"

ExecStart=/data/prometheus/kminion_2.2.4/kminion

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

Restart=no

TimeoutSec=1min

LimitNOFILE=65536

[Install]

WantedBy=default.target

3. Edit the configuration; a reference config is available on GitHub

[root@zq-fmsp-app2 kminion_2.2.4]# cat config.yaml

logger:

# Valid values are: debug, info, warn, error, fatal, panic

level: info

kafka:

brokers: [10.100.13.3:9092,10.100.13.5:9092,10.100.13.6:9092 ]

clientId: "kminion"

rackId: ""

tls:

enabled: false

caFilepath: ""

certFilepath: ""

keyFilepath: ""

# base64 encoded tls CA, cannot be set if 'caFilepath' is set

ca: ""

# base64 encoded tls cert, cannot be set if 'certFilepath' is set

cert: ""

# base64 encoded tls key, cannot be set if 'keyFilepath' is set

key: ""

passphrase: ""

insecureSkipTlsVerify: false

sasl:

# Whether or not SASL authentication will be used for authentication

enabled: false

# Username to use for PLAIN or SCRAM mechanism

username: ""

# Password to use for PLAIN or SCRAM mechanism

password: ""

# Mechanism to use for SASL Authentication. Valid values are PLAIN, SCRAM-SHA-256, SCRAM-SHA-512, GSSAPI

mechanism: "PLAIN"

# GSSAPI / Kerberos config properties

gssapi:

authType: ""

keyTabPath: ""

kerberosConfigPath: ""

serviceName: ""

username: ""

password: ""

realm: ""

enableFast: true

minion:

consumerGroups:

# Enabled specifies whether consumer groups shall be scraped and exported or not.

enabled: true

# Mode specifies whether we export consumer group offsets using the Admin API or by consuming the internal

# __consumer_offsets topic. Both modes have their advantages and disadvantages.

# * adminApi:

# - Useful for managed kafka clusters that do not provide access to the offsets topic.

# * offsetsTopic

# - Enables kminion_kafka_consumer_group_offset_commits_total metrics.

# - Processing the offsetsTopic requires slightly more memory and cpu than using the adminApi. The amount depends on the

# size and throughput of the offsets topic.

scrapeMode: adminApi # Valid values: adminApi, offsetsTopic

# Granularity can be per topic or per partition. If you want to reduce the number of exported metric series and

# you aren't interested in per partition lags you could choose "topic" where all partition lags will be summed

# and only topic lags will be exported.

granularity: partition

# AllowedGroups are regex strings of group ids that shall be exported

# You can specify allowed groups by providing literals like "my-consumergroup-name" or by providing regex expressions

# like "/internal-.*/".

allowedGroups: [ ".*" ]

# IgnoredGroups are regex strings of group ids that shall be ignored/skipped when exporting metrics. Ignored groups

# take precedence over allowed groups.

ignoredGroups: [ ]

topics:

# Granularity can be per topic or per partition. If you want to reduce the number of exported metric series and

# you aren't interested in per partition metrics you could choose "topic".

granularity: partition

# AllowedTopics are regex strings of topic names whose topic metrics that shall be exported.

# You can specify allowed topics by providing literals like "my-topic-name" or by providing regex expressions

# like "/internal-.*/".

allowedTopics: [ ".*" ]

# IgnoredTopics are regex strings of topic names that shall be ignored/skipped when exporting metrics. Ignored topics

# take precedence over allowed topics.

ignoredTopics: [ ]

# infoMetric is a configuration object for the kminion_kafka_topic_info metric

infoMetric:

# ConfigKeys are set of strings of Topic configs that you want to have exported as part of the metric

configKeys: [ "cleanup.policy" ]

logDirs:

# Enabled specifies whether log dirs shall be scraped and exported or not. This should be disabled for clusters prior

# to version 1.0.0 as describing log dirs was not supported back then.

enabled: true

# EndToEnd Metrics

# When enabled, kminion creates a topic which it produces to and consumes from, to measure various advanced metrics. See docs for more info

endToEnd:

enabled: false

# How often to send end-to-end test messages

probeInterval: 100ms

topicManagement:

# You can disable topic management, without disabling the testing feature.

# Only makes sense if you have multiple kminion instances, and for some reason only want one of them to create/configure the topic

enabled: true

# Name of the topic kminion uses to send its test messages

# You do *not* need to change this if you are running multiple kminion instances on the same cluster.

# Different instances are perfectly fine with sharing the same topic!

name: kminion-end-to-end

# How often kminion checks its topic to validate configuration, partition count, and partition assignments

reconciliationInterval: 10m

# Depending on the desired monitoring (e.g. you want to alert on broker failure vs. cluster that is not writable)

# you may choose replication factor 1 or 3 most commonly.

replicationFactor: 1

# Rarely makes sense to change this, but maybe if you want some sort of cheap load test?

# By default (1) every broker gets one partition

partitionsPerBroker: 1

producer:

# This defines:

# - Maximum time to wait for an ack response after producing a message

# - Upper bound for histogram buckets in "produce_latency_seconds"

ackSla: 5s

# Can be to "all" (default) so kafka only reports an end-to-end test message as acknowledged if

# the message was written to all in-sync replicas of the partition.

# Or can be set to "leader" to only require to have written the message to its log.

requiredAcks: all

consumer:

# Prefix kminion uses when creating its consumer groups. Current kminion instance id will be appended automatically

groupIdPrefix: kminion-end-to-end

# Whether KMinion should try to delete empty consumer groups with the same prefix. This can be used if you want

# KMinion to cleanup it's old consumer groups. It should only be used if you use a unique prefix for KMinion.

deleteStaleConsumerGroups: false

# This defines:

# - Upper bound for histogram buckets in "roundtrip_latency"

# - Time limit beyond which a message is considered "lost" (failed the roundtrip)

roundtripSla: 20s

# - Upper bound for histogram buckets in "commit_latency_seconds"

# - Maximum time an offset commit is allowed to take before considering it failed

commitSla: 10s

exporter:

namespace: "kminion"

host: ""

port: 9007

## Grafana dashboard IDs

Cluster Dashboard: https://grafana.com/grafana/dashboards/14012

Consumer Group Dashboard: https://grafana.com/grafana/dashboards/14014

Topic Dashboard: https://grafana.com/grafana/dashboards/14013

# Prometheus scrape configuration

- job_name: "prod-kminion"
  static_configs:
    - targets: ['10.100.13.3:9007']

RocketMQ monitoring

#https://github.com/apache/rocketmq-exporter

# Download rocketmq-exporter and deploy it, exposing port 5557 to the Prometheus node

https://github.com/apache/rocketmq-exporter/archive/refs/heads/master.zip

## Edit the configuration file

src/main/resources/application.yml

namesrvAddr: 10.10.10.1:8100;10.10.10.2:8100

# If you download the source from GitHub, it has to be built first; see the GitHub README

Build: mvn install

rocketmq-exporter-0.0.2-SNAPSHOT.jar

Start command

nohup java -jar rocketmq-exporter-0.0.2-SNAPSHOT-exec.jar &

Grafana dashboard IDs: 14612, 10477

Spring Boot monitoring

The Java developers need to enable admin monitoring in the application; this monitoring does not seem to add much value

Prometheus configuration

- job_name: "prod-xxx-SpringBoot"
  metrics_path: '/actuator/prometheus'
  scrape_interval: 60s
  static_configs:
    - targets: [10.10.10.1:9203, 10.10.10.2:9203, 10.10.10.3:9203]
      labels:
        appName: xxx1
        env: "prod-xxx"

Grafana dashboard ID: 6756

Spring Boot: https://grafana.com/grafana/dashboards/10280

JVM: https://grafana.com/grafana/dashboards/12856

Druid: https://grafana.com/grafana/dashboards/11157

Nginx monitoring

#nginx side: enable http_stub_status_module and nginx-module-vts-0.2.1

The output of nginx -V must include --with-http_stub_status_module

wget http://nginx.org/download/nginx-1.14.1.tar.gz

wget https://github.com/vozlt/nginx-module-vts/archive/refs/tags/v0.2.1.zip

yum -y install gcc zlib zlib-devel pcre-devel openssl openssl-devel libxslt-devel gd-devel perl-devel perl-ExtUtils-Embed

Compile nginx with --with-http_stub_status_module --add-module=nginx-module-vts-0.2.1 added

./configure --prefix=/usr/share/nginx --sbin-path=/usr/share/nginx/sbin/nginx --modules-path=/usr/lib64/nginx/modules --http-client-body-temp-path=/var/lib/nginx/tmp/client_body --http-proxy-temp-path=/var/lib/nginx/tmp/proxy --http-fastcgi-temp-path=/var/lib/nginx/tmp/fastcgi --http-uwsgi-temp-path=/var/lib/nginx/tmp/uwsgi --http-scgi-temp-path=/var/lib/nginx/tmp/scgi --lock-path=/run/lock/subsys/nginx --with-file-aio --with-ipv6 --with-http_ssl_module --with-http_v2_module --with-http_realip_module --with-http_addition_module --with-http_xslt_module=dynamic --with-http_image_filter_module=dynamic --with-http_sub_module --with-http_dav_module --with-http_flv_module --with-http_mp4_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_random_index_module --with-http_secure_link_module --with-http_degradation_module --with-http_slice_module --with-http_stub_status_module --with-http_perl_module=dynamic --with-http_auth_request_module --with-mail=dynamic --with-mail_ssl_module --with-pcre --with-pcre-jit --with-stream=dynamic --with-stream_ssl_module --with-debug --with-http_stub_status_module --add-module=nginx-module-vts-0.2.1

Building only the vts module as a dynamic module (--with-compat)

1. Configure

./configure <configure arguments from the nginx -V output> --add-dynamic-module=nginx-module-vts-0.2.1

2. Build

make -f objs/Makefile modules

3. After the build finishes, copy ngx_http_vhost_traffic_status_module.so from the objs directory into nginx's modules directory, then load it.

mkdir -p /usr/lib64/nginx/modules/

4. Add the following to the global section of nginx.conf

include /usr/local/nginx/modules/*.conf;

5. Add the load_module configuration

cat /usr/local/nginx/modules/mod-vhost-status.conf

load_module "/usr/lib64/nginx/modules/ngx_http_vhost_traffic_status_module.so";

Method 1: monitoring with the stub_status module

Download the exporter

wget https://github.com/nginxinc/nginx-prometheus-exporter/releases/download/v0.11.0/nginx-prometheus-exporter_0.11.0_linux_amd64.tar.gz

Start the exporter

nohup ./nginx-prometheus-exporter -nginx.scrape-uri http://127.0.0.1:2480/nginx_status &

server {

listen 2480;

#Any free port can be used; this server block must be included from the top-level nginx.conf

location /nginx_status {

stub_status on;

access_log off;

allow 127.0.0.1;

deny all;

}

}

Prometheus-side configuration

- job_name: "prod-xx-nginx"

static_configs:

- targets:

- 10.10.10.1:9113

labels:

env: "prod-xx"

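Active connections: 2 means nginx currently has 2 active connections.

The three numbers under "server accepts handled requests" are counters since nginx started:

accepts 2 means nginx has accepted 2 client connections in total

handled 2 means nginx has handled 2 connections in total (normally equal to accepts)

requests 1 means nginx has served 1 request in total

Reading: number of connections on which nginx is currently reading the request header

Writing: number of connections on which nginx is currently writing the response back to the client

Waiting: idle connections that have finished a request and are waiting for the next one (with keep-alive enabled, this equals Active - (Reading + Writing))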
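As a rough illustration of how these fields relate, the sketch below parses a stub_status response and checks the Waiting relation. The function name parseStubStatus and the sample text are assumptions made for this example; they are not part of nginx or of nginx-prometheus-exporter.

```javascript
// Parse the plain-text output of nginx's stub_status page.
// parseStubStatus is a hypothetical helper written for illustration only.
function parseStubStatus(text) {
  const active = /Active connections:\s*(\d+)/.exec(text);
  // The three counters sit on their own line: accepts, handled, requests.
  const totals = /\n\s*(\d+)\s+(\d+)\s+(\d+)/.exec(text);
  const states = /Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)/.exec(text);
  if (!active || !totals || !states) {
    throw new Error("unexpected stub_status format");
  }
  return {
    active: Number(active[1]),
    accepts: Number(totals[1]),
    handled: Number(totals[2]),
    requests: Number(totals[3]),
    reading: Number(states[1]),
    writing: Number(states[2]),
    waiting: Number(states[3]),
  };
}

// Illustrative sample, not captured from a real server.
const sample = [
  "Active connections: 2 ",
  "server accepts handled requests",
  " 2 2 1 ",
  "Reading: 0 Writing: 1 Waiting: 1 ",
].join("\n");

const s = parseStubStatus(sample);
// Check the relation Waiting = Active - (Reading + Writing).
console.log(s.waiting === s.active - (s.reading + s.writing));
```

In practice the exporter does this parsing itself; the sketch is only meant to make the meaning of each counter concrete.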

https://grafana.com/grafana/dashboards/12708

Method 2: monitoring with the vts module (recommended)

http://localhost/status

Add to the nginx configuration

location /status {

vhost_traffic_status_display;

vhost_traffic_status_display_format html;

}

#Add inside the http block:

vhost_traffic_status_zone;

vhost_traffic_status_filter_by_host on;

#Download the exporter

https://github.com/hnlq715/nginx-vts-exporter/releases/download/v0.10.3/nginx-vts-exporter-0.10.3.linux-amd64.tar.gz

vim /usr/lib/systemd/system/nginx_exporter.service

[Unit]

Description=nginx_vts_exporter

After=network.target

[Service]

Type=simple

ExecStart=/usr/local/nginx_exporter/bin/nginx-vts-exporter -nginx.scrape_uri http://127.0.0.1:2480/status/format/json

Restart=on-failure

[Install]

WantedBy=multi-user.target

Prometheus-side configuration

- job_name: "test-xxx-vts-nginx"

static_configs:

- targets:

- 10.88.4.35:9913

- 10.88.4.36:9913

labels:

env: "prod-xxx"

https://grafana.com/grafana/dashboards/2949

Combined nginx configuration for both methods

server {

listen 2480;

location /nginx_status {

stub_status on;

access_log off;

allow 127.0.0.1;

deny all;

}

location /status {

vhost_traffic_status_display;

vhost_traffic_status_display_format html;

}

}

vhost_traffic_status_zone;

vhost_traffic_status_filter_by_host on;

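Integrating the SkyWalking log page

Enabling HTML in the Text panel

To embed an HTML page, create a new Text panel in Grafana and write the HTML in it. Grafana must first be allowed to render raw HTML in Text panels; edit the Grafana configuration file: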

[panels]

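disable_sanitize_html = true #allow raw HTML in Text panels

Embedding the log viewer page

Change the var url variable in the JavaScript below to your own address, then copy the HTML below into the Text panel.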

<script src="https://apps.bdimg.com/libs/jquery/2.1.4/jquery.min.js"></script>

<style>

table {

border-collapse:collapse;

border:none;

width: 100%;

}

table td{

border:1px solid #000;

}

.dataDiv{

border-top: 1px solid #000;

margin-top: 10px;

padding-top: 10px;

}

.pageDiv{

margin-right: 10px;

margin-top:10px;

float: right;

}

#searchBtn{

float : right;

}

#keyword{

width: 500px;

}

#traceId{

width: 300px;

}

select {

width: 100px;

}

</style>

<html >

<head><meta http-equiv="content-type" content="text/html; charset=utf-8" /></head>

<body>

<div id="queryDiv" >

Service: <select id="services"></select>

Start date: <input type="date" id="startDate" />

End date: <input type="date" id="endDate" />

Sort by: <select id="queryOrder"><option value="BY_START_TIME">Start time</option><option value="BY_DURATION">Duration</option></select>

TraceId:<input type="text" id="traceId" /><br/>

Keywords: <input type="text" id="keyword" placeholder="Multiple keywords are supported: wrap each keyword in double quotes and separate them with spaces" />

<input type="button" value=" Search " onclick="doSearch()" id="searchBtn"/>

</div>

<div id="traceListDiv" class="dataDiv">

<div>

Trace list<br/>

<table id="traceListTable">

<thead><tr><td>Service</td><td>Endpoint</td><td>traceid</td><td>Error</td><td>Start time</td><td>Duration</td><td>Action</td></tr></thead>

<tbody></tbody>

</table>

</div>

<div class="pageDiv"><input type="button" value="Previous" onclick="traceListPrevPage()" /> <span id="traceListCurrentPage"></span> <input type="button" value="Next" onclick="traceListNextPage()" /></div>

</div>

<div id="traceSpanListDiv" class="dataDiv">

Span list <br/>

<table id="traceSpanListTable">

<thead><tr><td>Service</td><td>Endpoint</td><td>SpanId</td><td>Start time</td><td>End time</td><td>Duration</td><td>Error</td><td>tags</td><td><input type="button" value="Back" onclick="showTraceList()"></td></tr></thead>

<tbody></tbody>

</table>

</div>

<div id="logListDiv" class="dataDiv">

<div>

Log list<br/>

<table id="logListTable">

<thead><tr><td>Service</td><td>Endpoint</td><td>level</td><td>logger</td><td>thread</td><td>Content</td><td><input type="button" value="Back" onclick="backFromLogList()"></td></tr></thead>

<tbody></tbody>

</table>

</div>

<div class="pageDiv"><input type="button" value="Previous" onclick="logListPrevPage()" /> <span id="logListCurrentPage"></span><span id="logListTotalPages"></span> <input type="button" value="Next" onclick="logListNextPage()" /></div>

</div>

</body>

</html>

<script>

//var url = "https://prometheus.tongxin.cn/graphql";

var url = "http://10.117.130.196:18079/graphql"

//url is the GraphQL endpoint exposed by the SkyWalking UI

var queryServicesParam = {"query":"query queryServices($layer: String!) {\n services: listServices(layer: $layer) {\n id\n value: name\n label: name\n group\n layers\n normal\n }\n }","variables":{"layer":"GENERAL"}}

//9.0.0

//var queryTracesParam = {"query":"query queryTraces($condition: TraceQueryCondition) {\n data: queryBasicTraces(condition: $condition) {\n traces {\n key: segmentId\n endpointNames\n duration\n start\n isError\n traceIds\n }\n total\n }}","variables":{"condition":{"serviceId":"ZGVtby1hcHBsaWNhdGlvbg==.1","traceState":"ALL","queryDuration":{"start":"2022-05-20 1257","end":"2022-05-20 1327","step":"MINUTE"},"queryOrder":"BY_START_TIME","paging":{"pageNum":1,"pageSize":15,"needTotal":true}}}};

//9.1.0

var queryTracesParam = {"query":"query queryTraces($condition: TraceQueryCondition) {\n data: queryBasicTraces(condition: $condition) {\n traces {\n key: segmentId\n endpointNames\n duration\n start\n isError\n traceIds\n }\n }}","variables":{"condition":{"serviceId":"ZGVtby1hcHBsaWNhdGlvbg==.1","traceState":"ALL","queryDuration":{"start":"2022-06-18 0900","end":"2022-06-19 0900","step":"MINUTE"},"queryOrder":"BY_DURATION","paging":{"pageNum":1,"pageSize":20},"minTraceDuration":null,"maxTraceDuration":null}}}

var quertTraceSpansParam = {"query":"query queryTrace($traceId: ID!) {\n trace: queryTrace(traceId: $traceId) {\n spans {\n traceId\n segmentId\n spanId\n parentSpanId\n refs {\n traceId\n parentSegmentId\n parentSpanId\n type\n }\n serviceCode\n serviceInstanceName\n startTime\n endTime\n endpointName\n type\n peer\n component\n isError\n layer\n tags {\n key\n value\n }\n logs {\n time\n data {\n key\n value\n }\n }\n }\n }\n }","variables":{"traceId":"877c9053277f4de3b1f8b62be43ad2f2.46.16535425354160001"}};

//9.0.0

// var queryLogParam = {"query":"query queryLogs($condition: LogQueryCondition) {\n queryLogs(condition: $condition) {\n logs {\n serviceName\n serviceId\n serviceInstanceName\n serviceInstanceId\n endpointName\n endpointId\n traceId\n timestamp\n contentType\n content\n tags {\n key\n value\n }\n }\n total\n }}","variables":{"condition":{"relatedTrace":{"traceId":"877c9053277f4de3b1f8b62be43ad2f2.46.16535425354160001","segmentId":"877c9053277f4de3b1f8b62be43ad2f2.46.16535425354160000","spanId":0},"paging":{"pageNum":1,"pageSize":15,"needTotal":true}}}};

//9.1.0

var queryLogParam = {"query":"query queryLogs($condition: LogQueryCondition) {\n queryLogs(condition: $condition) {\n logs {\n serviceName\n serviceId\n serviceInstanceName\n serviceInstanceId\n endpointName\n endpointId\n traceId\n timestamp\n contentType\n content\n tags {\n key\n value\n }\n }\n }}","variables":{"condition":{"relatedTrace":{"traceId":"4a6873da633342dca40e06b5b47f7a5c.48.16555146660170001","segmentId":"4a6873da633342dca40e06b5b47f7a5c.48.16555146660170000","spanId":0},"paging":{"pageNum":1,"pageSize":10}}}}

// 9.1.0

var searchLogParam = {"query":"query queryLogs($condition: LogQueryCondition) {\n queryLogs(condition: $condition) {\n logs {\n serviceName\n serviceId\n serviceInstanceName\n serviceInstanceId\n endpointName\n endpointId\n traceId\n timestamp\n contentType\n content\n tags {\n key\n value\n }\n }\n }}", "variables":{"condition":{"relatedTrace":{"traceId":"4a6873da633342dca40e06b5b47f7a5c.48.16555146660170001"},"queryDuration":{"start":"2022-06-18 09","end":"2022-06-19 09","step":"HOUR"},"paging":{"pageNum":1,"pageSize":15},"serviceId":"ZGVtby1hcHBsaWNhdGlvbg==.1","keywordsOfContent":["日志"],"excludingKeywordsOfContent":[]}}}

var traceListPage = {pageNum:1, pageSize:15, total: 0, totalPages: 0 };

var logListPage = {pageNum:1, pageSize:15, total: 0, totalPages: 0, traceId:"", segmentId:"", spanId:"", rows:0, keyword:"", isSearch:false };

function getLocalTime(nS) {

return new Date(parseInt(nS)).toLocaleString();

}

$(function(){

showTraceList();

initSearchDate();

queryServices();

});

function showTraceList(){

$("#traceListDiv").show();

$("#traceSpanListDiv").hide();

$("#logListDiv").hide();

}

function showTraceSpans(){

$("#traceListDiv").hide();

$("#traceSpanListDiv").show();

$("#logListDiv").hide();

}

function showLogList(){

$("#traceListDiv").hide();

$("#traceSpanListDiv").hide();

$("#logListDiv").show();

}

function backFromLogList(){

var isSearch = logListPage.isSearch

if(isSearch){

showTraceList();

}else{

showTraceSpans();

}

}

function initSearchDate(){

var now = new Date();

var day = ("0" + now.getDate()).slice(-2);

var month = ("0" + (now.getMonth()+1)).slice(-2);

var year = now .getFullYear();

$("#startDate").val(year+"-" + month + "-" + day);

$("#endDate").val(year+"-" + month + "-" + day);

}

function queryServices(){

$.ajax({

url:url,

type: "POST",

dataType:"json",

contentType: "application/json",

data: JSON.stringify(queryServicesParam),

success: function(result){

if(result.data){

var data = result.data;

if(data.services && data.services.length > 0){

renderServices(data.services);

}else{

alert("No data found");

}

}else{

alert("No data found");

}

}

})

}

function renderServices(services){

for(var i=0; i<services.length;i++){

var service = services[i];

$("#services").append("<option value='"+service.id+"'>"+service.value+"</option>");

}

}

function doSearch(){

traceListPage.pageNum = 1;

logListPage.pageNum=1

var service = $("#services option:selected").text();

if(!service){

alert("Please select a service first");

return;

}

var keyword = $("#keyword").val();

var traceId = $("#traceId").val();

// keyword search

if(keyword){

searchLogs(keyword.trim(), traceId.trim());

}else{

// traceId search

if(traceId){

$("#traceId").val(traceId.trim());

queryTraceSpans(traceId.trim());

}else{

// trace list search

traceListPage.pageNum = 1;

_queryTraces();

}

}

}

//Separate multiple keywords with spaces and wrap each one in double quotes, e.g. "a" "b"

function splitKeywords(kv){

var vs ;

if(kv.indexOf("\"") < 0){

vs = kv.split(/\s+/);

}else{

vs = kv.split(/(?<=\")\s+(?=\")/);

for(var i=0; i<vs.length; i++){

vs[i] = vs[i].replace(/^\"/, "")

vs[i] = vs[i].replace(/\"$/, "")

}

}

for(var i=0; i<vs.length; i++){

vs[i] = vs[i].trim();

}

return vs;

}

function searchLogs(keyword, traceId){

var startDate = $("#startDate").val() +" 00";

var endDate = $("#endDate").val() + " 23";

var serviceId = $("#services").val();

logListPage.isSearch = true;

var condition = searchLogParam.variables.condition;

condition.queryDuration.start = startDate;

condition.queryDuration.end = endDate;

condition.paging.pageNum = logListPage.pageNum;

condition.paging.pageSize = logListPage.pageSize;

condition.serviceId = serviceId;

condition.keywordsOfContent = splitKeywords(keyword);

if(traceId){

condition.relatedTrace={};

condition.relatedTrace.traceId = traceId

}else{

delete condition.relatedTrace;

}

$.ajax({

url:url,

type: "POST",

dataType:"json",

contentType: "application/json",

data: JSON.stringify(searchLogParam),

success: function(result){

if(result.data){

if(result.data.queryLogs && result.data.queryLogs.logs){

logListPage.traceId = traceId

logListPage.keyword = keyword;

logListPage.rows = result.data.queryLogs.logs.length

renderLogList(result.data.queryLogs.logs);

}else{

alert("No data found");

}

}else{

alert("No data found");

}

}

})

}

function _queryTraces(){

var startDate = $("#startDate").val() +" 0000";

var endDate = $("#endDate").val() + " 2359";

var serviceId = $("#services").val();

var queryOrder = $("#queryOrder").val();

var condition = queryTracesParam.variables.condition;

condition.serviceId = serviceId;

condition.queryDuration.start = startDate;

condition.queryDuration.end = endDate;

condition.paging.pageNum = traceListPage.pageNum;

condition.paging.pageSize = traceListPage.pageSize;

condition.queryOrder = queryOrder;

$.ajax({

url:url,

type: "POST",

dataType:"json",

contentType: "application/json",

data: JSON.stringify(queryTracesParam),

success: function(result){

if(result.data){

if(result.data.data && result.data.data.traces && result.data.data.traces.length > 0){

traceListPage.rows = result.data.data.traces.length

renderTraces(result.data.data.traces);

}else{

alert("No data found");

}

}else{

alert("No data found");

}

}

})

}

function renderTraces(traces){

showTraceList();

var service = $("#services option:selected").text();

var tbody = $("#traceListTable>tbody");

tbody.empty();

for(var i=0; i<traces.length;i++){

var trace = traces[i];

var row = "<tr><td>"+service+"</td><td>"+trace.endpointNames+"</td><td>"+trace.traceIds[0]+"</td><td>"+trace.isError+"</td><td>"+getLocalTime(trace.start)+"</td><td>"+trace.duration+"</td><td><input type='button' value='Spans' onclick=queryTraceSpans('"+trace.traceIds[0]+"') /></td></tr>"

tbody.append(row)

}

$("#traceListCurrentPage").html(traceListPage.pageNum);

}

function traceListPrevPage(){

if(traceListPage.pageNum <= 1){

alert("Already on the first page");

return;

}

traceListPage.pageNum = traceListPage.pageNum - 1;

_queryTraces();

}

function traceListNextPage(){

if(traceListPage.rows < traceListPage.pageSize){

alert("Already on the last page");

return;

}

traceListPage.pageNum = traceListPage.pageNum + 1;

_queryTraces();

}

function queryTraceSpans(traceId){

quertTraceSpansParam.variables.traceId = traceId;

$.ajax({

url:url,

type: "POST",

dataType:"json",

contentType: "application/json",

data: JSON.stringify(quertTraceSpansParam),

success: function(result){

if(result.data){

var data = result.data;

if(data.trace && data.trace.spans && data.trace.spans.length > 0){

renderTraceSpans(data.trace.spans);

}else{

alert("No data found");

}

}else{

alert("No data found");

}

}

})

}

function renderTraceSpans(spans){

showTraceSpans();

var tbody = $("#traceSpanListTable>tbody");

tbody.empty();

for(var i=0; i<spans.length;i++){

var span = spans[i];

var row = "<tr><td>"+span.serviceCode+"</td><td>"+span.endpointName+"</td><td>"+span.spanId+"</td><td>"+getLocalTime(span.startTime)+"</td><td>"+getLocalTime(span.endTime)+"</td><td>"+(span.endTime-span.startTime)+"</td><td>"+span.isError+"</td><td>"+JSON.stringify(span.tags)+"</td><td><input type='button' value='Logs' onclick=queryLogs('"+span.traceId+"','"+span.segmentId+"','"+span.spanId+"')></td></tr>";

tbody.append(row)

}

}

function queryLogs(traceId, segmentId, spanId){

logListPage.isSearch = false;

var condition = queryLogParam.variables.condition;

condition.relatedTrace.traceId = traceId;

condition.relatedTrace.segmentId = segmentId;

condition.relatedTrace.spanId = spanId;

condition.paging.pageNum = logListPage.pageNum

condition.paging.pageSize = logListPage.pageSize

$.ajax({

url:url,

type: "POST",

dataType:"json",

contentType: "application/json",

data: JSON.stringify(queryLogParam),

success: function(result){

if(result.data){

var data = result.data;

if(data.queryLogs && data.queryLogs.logs && data.queryLogs.logs.length > 0){

logListPage.traceId = traceId;

logListPage.segmentId = segmentId;

logListPage.spanId = spanId;

logListPage.rows = data.queryLogs.logs.length

renderLogList(data.queryLogs.logs);

}else{

alert("No data found");

}

}else{

alert("No data found");

}

}

})

}

function renderLogList(logs){

showLogList();

var tbody = $("#logListTable>tbody");

tbody.empty();

for(var i=0; i<logs.length;i++){

var log = logs[i];

var row = "<tr><td>"+log.serviceName+"</td><td>"+log.endpointName+"</td><td>"+log.tags[0].value+"</td><td>"+log.tags[1].value+"</td><td>"+log.tags[2].value+"</td><td colspan=2>"+log.content+"</td></tr>";

tbody.append(row)

}

$("#logListCurrentPage").html(logListPage.pageNum);

}

function logListPrevPage(){

if(logListPage.pageNum <= 1){

alert("Already on the first page");

return;

}

logListPage.pageNum = logListPage.pageNum - 1;

if(logListPage.isSearch){

searchLogs(logListPage.keyword, logListPage.traceId);

}else{

queryLogs(logListPage.traceId, logListPage.segmentId, logListPage.spanId);

}

}

function logListNextPage(){

if(logListPage.rows < logListPage.pageSize){

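alert("Already on the last page");

return;

}

logListPage.pageNum = logListPage.pageNum + 1;

// Page with the same query type that produced the current list, as logListPrevPage does

if(logListPage.isSearch){

searchLogs(logListPage.keyword, logListPage.traceId);

}else{

queryLogs(logListPage.traceId, logListPage.segmentId, logListPage.spanId);

}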

}

</script>
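The keyword rule used by the search box can be tried outside the browser. The sketch below is a standalone copy of the page's splitKeywords logic, rewritten slightly so that it runs in Node.js without jQuery. The sample inputs are made up for illustration.

```javascript
// Standalone copy of the page's splitKeywords logic, runnable in Node.js.
function splitKeywords(kv) {
  let vs;
  if (kv.indexOf('"') < 0) {
    // No quotes: every whitespace-separated word is a keyword.
    vs = kv.split(/\s+/);
  } else {
    // Quotes present: split only on whitespace that sits between two quotes,
    // then strip the surrounding quote from each piece.
    vs = kv
      .split(/(?<=")\s+(?=")/)
      .map((v) => v.replace(/^"/, "").replace(/"$/, ""));
  }
  return vs.map((v) => v.trim());
}

// Illustrative inputs.
const plain = splitKeywords("error timeout");
const quoted = splitKeywords('"out of memory" "timeout"');
console.log(plain, quoted);
```

Quoting is what lets a keyword contain spaces: without quotes, "out of memory" would be sent as three separate keywords. Note that the regular expression uses a lookbehind, which older browsers may not support.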

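The page above runs into cross-origin (CORS) problems when it calls the SkyWalking API. I solved this with an nginx reverse proxy; if you know a better approach, please leave a comment.

Cross-origin (CORS) issue

#The url in the JavaScript is the nginx address, 10.117.130.196:18079

#SkyWalking and nginx run on the same server

#Grafana runs on 10.117.130.239. With this setup, Grafana must be opened through the nginx address rather than its original address; only then is the cross-origin problem avoided. Opened through the original Grafana address, the SkyWalking log page does not load.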

upstream grafana {

ip_hash;

server 10.117.130.239:3000;

}

upstream skywalking {

ip_hash;

server 10.117.130.196:18081;

}

server {

listen 18079;

##Grafana is accessed through this location

location / {

add_header 'Access-Control-Allow-Origin' $http_origin;

add_header 'Access-Control-Allow-Credentials' 'true';

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS';

add_header 'Access-Control-Allow-Headers' 'DNT,web-token,app-token,Authorization,Accept,Origin,Keep-Alive,User-Agent,X-Mx-ReqToken,X-Data-Type,X-Auth-Token,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Range';

add_header 'Access-Control-Expose-Headers' 'Content-Length,Content-Range';

if ($request_method = 'OPTIONS') {

add_header 'Access-Control-Max-Age' 1728000;

add_header 'Content-Type' 'text/plain; charset=utf-8';

add_header 'Content-Length' 0;

return 204;

}

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_pass http://grafana/;

}

##This location serves the url variable used by the JavaScript in the HTML page

location /graphql {

add_header 'Access-Control-Allow-Origin' $http_origin;

add_header 'Access-Control-Allow-Credentials' 'true';

add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS';

add_header 'Access-Control-Allow-Headers' '*';

add_header 'Access-Control-Expose-Headers' 'Content-Length,Content-Range';

if ($request_method = 'OPTIONS') {

add_header 'Access-Control-Max-Age' 1728000;

add_header 'Content-Type' 'text/plain; charset=utf-8';

add_header 'Content-Length' 0;

return 204;

}

proxy_pass http://skywalking/graphql;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

}
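To check the proxy independently of Grafana, it can help to build the same kind of request the page sends to /graphql. The sketch below only assembles the request; buildServicesRequest is a hypothetical helper for this example, the base address is the nginx address used in this article, and the query text is a shortened form of the page's queryServicesParam.

```javascript
// Assemble the "list services" request that the log page sends through nginx.
// buildServicesRequest is a hypothetical helper, not part of SkyWalking.
function buildServicesRequest(baseUrl) {
  return {
    url: baseUrl + "/graphql",
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        query:
          "query queryServices($layer: String!) {\n" +
          " services: listServices(layer: $layer) {\n id\n value: name\n label: name\n }\n }",
        variables: { layer: "GENERAL" },
      }),
    },
  };
}

const req = buildServicesRequest("http://10.117.130.196:18079");
console.log(req.url);
console.log(req.options.body);
// To actually send it (browser or Node 18+), something like:
//   fetch(req.url, req.options).then((r) => r.json()).then(console.log);
```

If the request succeeds when the page is opened through the nginx address but fails from the original Grafana address, the browser's same-origin policy is the likely cause rather than SkyWalking itself.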

URL

https://github.com/prometheus/blackbox_exporter

wget https://github.com/prometheus/blackbox_exporter/releases/download/v0.23.0/blackbox_exporter-0.23.0.linux-amd64.tar.gz

vim start.sh

#!/bin/bash

nohup ./blackbox_exporter \

--config.file=./blackbox.yml \

--web.listen-address=":9098" \

--log.level=debug \

>> /blackbox.out 2>&1 &

Prometheus configuration

- job_name: 'blackbox_http_2xx' # HTTP GET probe

scrape_interval: 30s

metrics_path: /probe

params:

module: [http_2xx]

static_configs:

- targets: # URLs to probe for reachability

- http://www.baidu.com

- https://www.sina.com.cn

relabel_configs:

- source_labels: [__address__]

target_label: __param_target

- source_labels: [__param_target]

target_label: instance

- target_label: __address__

replacement: 127.0.0.1:9098 # host and port of the blackbox-exporter service

- job_name: 'blackbox_tcp_connect' # checks whether the given ports are reachable

scrape_interval: 30s

metrics_path: /probe

params:

module: [tcp_connect]

static_configs:

- targets:

- 127.0.0.1:10006

- 127.0.0.1:10005

relabel_configs:

- source_labels: [__address__]

target_label: __param_target

- source_labels: [__param_target]

target_label: instance

- target_label: __address__

replacement: 127.0.0.1:9098
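The three relabel rules in each job are easy to misread, so here is a small model of what they do to a single target. It is a simplification written for this article: relabelForBlackbox and probeUrl are hypothetical names, and the model assumes every rule uses Prometheus's default action (replace) with the default regex, as the configuration above does.

```javascript
// Model the three relabel_configs rules of the blackbox jobs for one target.
// Each rule copies a value into target_label (default action "replace").
function relabelForBlackbox(target, exporterAddress) {
  const labels = { __address__: target };
  labels.__param_target = labels.__address__; // rule 1: target becomes ?target=
  labels.instance = labels.__param_target;    // rule 2: keep the probed target as instance
  labels.__address__ = exporterAddress;       // rule 3: scrape the exporter instead
  return labels;
}

// Build the URL Prometheus would request, given metrics_path /probe.
function probeUrl(labels, moduleName) {
  return (
    "http://" + labels.__address__ + "/probe" +
    "?module=" + encodeURIComponent(moduleName) +
    "&target=" + encodeURIComponent(labels.__param_target)
  );
}

const labels = relabelForBlackbox("http://www.baidu.com", "127.0.0.1:9098");
console.log(labels.instance);
console.log(probeUrl(labels, "http_2xx"));
```

The point of the design is that Prometheus always scrapes the blackbox exporter, while the instance label still shows the address that was probed, which is what dashboards and alerts should display.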

Grafana dashboard IDs: 13659, 7587

K8S monitoring

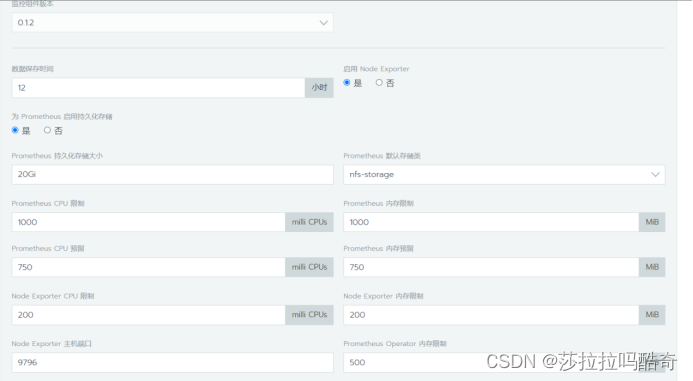

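I recommend using Rancher's built-in monitoring: it needs no manual configuration and also gives developers a convenient set of tools for operating the k8s cluster. The main Prometheus then only has to scrape the Prometheus instance inside the k8s cluster.

The following covers the monitoring setup in Rancher, which mainly comes down to configuring an NFS StorageClass.

Install Rancher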

1.docker pull rancher/rancher:v2.4.8

2.docker run -d --network host --privileged --restart=unless-stopped rancher/rancher:v2.4.8

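Access

https://192.168.223.10/update-password

Configure the cluster

Rancher's monitoring needs a StorageClass, so create an NFS StorageClass first. Once created, it shows up on the global page. The benefit of a StorageClass is that creating a PVC automatically provisions the matching PV. Prepare the following three manifests: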

- nfs-provisioner-deploy.yaml

- nfs-rbac.yaml

- nfs-sc.yaml

nfs-provisioner-deploy.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

# namespace: kube-system

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

spec:

replicas: 1

strategy:

type: Recreate #---upgrade strategy: delete, then recreate (the default is a rolling update)

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

#---quay.io is not reachable from mainland China, so a different registry is used (the Qiniu mirror below was an earlier choice)

# image: quay-mirror.qiniu.com/external_storage/nfs-client-provisioner:latest

image: vbouchaud/nfs-client-provisioner:latest

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: nfs-client #---name of the NFS provisioner; the StorageClass created later must use the same value

- name: NFS_SERVER

value: 192.168.223.10 #---NFS server address; must match the volumes section

- name: NFS_PATH

value: /root/nfs #---NFS export directory; must match the volumes section

volumes:

- name: nfs-client-root

nfs:

server: 192.168.223.10 #---NFS server address

path: /root/nfs #---NFS export directory

nfs-rbac.yaml

kind: ServiceAccount

apiVersion: v1

metadata:

name: nfs-client-provisioner

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

namespace: kube-system # installed into Rancher's system namespace; can be changed

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

rules:

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

namespace: kube-system # installed into Rancher's system namespace; can be changed

roleRef:

kind: Role

name: leader-locking-nfs-client-provisioner

apiGroup: rbac.authorization.k8s.io

nfs-sc.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-storage

annotations:

storageclass.kubernetes.io/is-default-class: "true" #---make this the default StorageClass

provisioner: nfs-client #---provisioner name; must equal the PROVISIONER_NAME value set in the deployment above

#provisioner: nfs-client-provisioner #---provisioner name; must equal the PROVISIONER_NAME value set in the deployment above

parameters:

archiveOnDelete: "true" #---"false" discards the data when the PVC is deleted; "true" keeps it

mountOptions:

- hard #use a hard mount

- nfsvers=4 #NFS protocol version; set this according to the NFS server version

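kubectl apply -f nfs-rbac.yaml -f nfs-provisioner-deploy.yaml -f nfs-sc.yaml -n kube-system

After that, the configuration in the Rancher UI is straightforward.

Monitoring K8S without Rancher

To be written later.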
