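Why use a cluster

The main reasons to use a cluster are better scalability, availability, and fault tolerance.

- Scalability: when a single node can no longer handle a growing load, you can add more nodes to the cluster to increase processing capacity. This lets the system handle larger data volumes and heavier query loads.

- Availability: if one node in the cluster fails, other nodes can take over its work, so the system keeps running. This matters a lot for systems that must run 24/7.

- Fault tolerance: a cluster improves fault tolerance through data replication and failover. If a node fails, the system can read the data from other nodes, which avoids data loss.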

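Why use distributed storage

The main reasons to use distributed storage are:

- Scalability: a distributed storage system can grow its storage capacity and processing power by adding nodes, which lets it handle data at large scale.

- High availability: data is usually replicated across several nodes, so if one node fails, other nodes can still serve the data and the system stays available.

- Fault tolerance: replication and failover improve fault tolerance. If a node fails, the system can read the data from other nodes, which avoids data loss.

- Performance: a distributed storage system can process data on several nodes in parallel, which improves performance.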
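To make the replication and failover points above concrete, here is a minimal Python sketch that is not tied to any real storage system. The `Node` class and the `read_with_failover` function are hypothetical names invented for this illustration; the sketch simply tries each replica in turn and returns the first successful read.

```python
class Node:
    """A toy storage node holding a full copy (replica) of the data."""

    def __init__(self, name, data, alive=True):
        self.name = name
        self.data = dict(data)
        self.alive = alive

    def get(self, key):
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        return self.data[key]


def read_with_failover(replicas, key):
    """Return (node_name, value) from the first replica that answers."""
    last_error = None
    for node in replicas:
        try:
            return node.name, node.get(key)
        except ConnectionError as exc:
            last_error = exc  # remember the failure and try the next replica
    raise RuntimeError("all replicas are unavailable") from last_error


rows = {"req-1": "GET /index"}
replicas = [Node("node1", rows, alive=False), Node("node2", rows), Node("node3", rows)]
served_by, value = read_with_failover(replicas, "req-1")
print(served_by, value)
```

Because every replica holds the same rows, the read still succeeds although the first node is down.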

So today we will use Docker to set up a ClickHouse cluster that we can learn with.

Docker Compose file

docker-compose.yml

version: "3.7"

services:
  ck1:
    image: yandex/clickhouse-server
    ulimits:
      nofile:
        soft: 300001
        hard: 300002
    ports:
      - 9001:9000
    volumes:
      - ./conf/config.xml:/etc/clickhouse-server/config.xml
      - ./conf/users.xml:/etc/clickhouse-server/users.xml
      - ./conf/metrika1.xml:/etc/metrika.xml
    links:
      - "zk1"
    depends_on:
      - zk1

  ck2:
    image: yandex/clickhouse-server
    ulimits:
      nofile:
        soft: 300001
        hard: 300002
    volumes:
      - ./conf/metrika2.xml:/etc/metrika.xml
      - ./conf/config.xml:/etc/clickhouse-server/config.xml
      - ./conf/users.xml:/etc/clickhouse-server/users.xml
    ports:
      - 9002:9000
    depends_on:
      - zk1

  ck3:
    image: yandex/clickhouse-server
    ulimits:
      nofile:
        soft: 300001
        hard: 300002
    volumes:
      - ./conf/metrika3.xml:/etc/metrika.xml
      - ./conf/config.xml:/etc/clickhouse-server/config.xml
      - ./conf/users.xml:/etc/clickhouse-server/users.xml
    ports:
      - 9003:9000
    depends_on:
      - zk1

  zk1:
    image: zookeeper:3.5
    restart: always
    hostname: zk1
    expose:
      - "2181"
    ports:
      - 2181:2181

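Note: the ClickHouse version and the ZooKeeper version have to be compatible with each other, otherwise you will run into compatibility problems later. My ClickHouse version is 22.1.3, so I use ZooKeeper 3.5.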

Configuration

- users.xml

<?xml version="1.0"?>
<clickhouse>
    <!-- Profiles of settings. -->
    <profiles>
        <!-- Default settings. -->
        <default>
            <!-- Maximum memory usage for processing single query, in bytes. -->
            <max_memory_usage>10000000000</max_memory_usage>
            <!-- How to choose between replicas during distributed query processing.
                 random - choose random replica from set of replicas with minimum number of errors
                 nearest_hostname - from set of replicas with minimum number of errors, choose replica
                  with minimum number of different symbols between replica's hostname and local hostname
                  (Hamming distance).
                 in_order - first live replica is chosen in specified order.
                 first_or_random - if first replica one has higher number of errors, pick a random one from replicas with minimum number of errors.
            -->
            <load_balancing>random</load_balancing>
            <allow_ddl>1</allow_ddl>
            <readonly>0</readonly>
        </default>
        <!-- Profile that allows only read queries. -->
        <readonly>
            <readonly>1</readonly>
        </readonly>
    </profiles>
    <!-- Users and ACL. -->
    <users>
        <!-- If user name was not specified, 'default' user is used. -->
        <default>
            <access_management>1</access_management>
            <password></password>
            <networks>
                <ip>::/0</ip>
            </networks>
            <!-- Settings profile for user. -->
            <profile>default</profile>
            <!-- Quota for user. -->
            <quota>default</quota>
            <!-- User can create other users and grant rights to them. -->
            <!-- <access_management>1</access_management> -->
        </default>
        <test>
            <password></password>
            <quota>default</quota>
            <profile>default</profile>
            <allow_databases>
                <database>default</database>
                <database>test_dictionaries</database>
            </allow_databases>
            <allow_dictionaries>
                <dictionary>replicaTest_all</dictionary>
            </allow_dictionaries>
        </test>
    </users>
    <!-- Quotas. -->
    <quotas>
        <!-- Name of quota. -->
        <default>
            <!-- Limits for time interval. You could specify many intervals with different limits. -->
            <interval>
                <!-- Length of interval. -->
                <duration>3600</duration>
                <!-- No limits. Just calculate resource usage for time interval. -->
                <queries>0</queries>
                <errors>0</errors>
                <result_rows>0</result_rows>
                <read_rows>0</read_rows>
                <execution_time>0</execution_time>
            </interval>
        </default>
    </quotas>
</clickhouse>
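The load_balancing comment in users.xml describes nearest_hostname as choosing the replica whose hostname differs from the local hostname in the fewest characters. The following Python sketch illustrates that idea only; it is not ClickHouse's implementation. The function names and hostnames are made up, the error counts mentioned in the comment are ignored, and comparing only the overlapping prefix of names with different lengths is an assumption.

```python
def hostname_difference(local, replica):
    """Count positions at which two hostnames differ (Hamming-style distance).

    Assumption: only the overlapping prefix is compared when lengths differ.
    """
    return sum(1 for a, b in zip(local, replica) if a != b)


def pick_nearest(local, replicas):
    """Pick the replica whose hostname differs least from the local one.

    On a tie, the replica listed first wins.
    """
    return min(replicas, key=lambda host: hostname_difference(local, host))


candidates = ["ck2-dc1", "ck3-dc2", "ck4-dc2"]
nearest = pick_nearest("ck1-dc2", candidates)
print(nearest)
```

With a naming scheme that encodes the data center in the hostname, this kind of rule tends to prefer replicas in the same data center. The configuration above uses random instead, which is enough for a local test cluster.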

- config.xml

<?xml version="1.0"?>
<!--
  NOTE: User and query level settings are set up in "users.xml" file.
  If you have accidentally specified user-level settings here, server won't start.
  You can either move the settings to the right place inside "users.xml" file
  or add <skip_check_for_incorrect_settings>1</skip_check_for_incorrect_settings> here.
-->
<clickhouse>
    <logger>
        <level>trace</level>
        <log>/var/log/clickhouse-server/clickhouse-server.log</log>
        <errorlog>/var/log/clickhouse-server/clickhouse-server.err.log</errorlog>
        <size>1000M</size>
        <count>10</count>
    </logger>
    <http_port>8123</http_port>
    <tcp_port>9000</tcp_port>
    <mysql_port>9004</mysql_port>
    <postgresql_port>9005</postgresql_port>
    <interserver_http_port>9009</interserver_http_port>
    <max_connections>4096</max_connections>
    <keep_alive_timeout>3</keep_alive_timeout>
    <grpc>
        <enable_ssl>false</enable_ssl>
        <ssl_cert_file>/path/to/ssl_cert_file</ssl_cert_file>
        <ssl_key_file>/path/to/ssl_key_file</ssl_key_file>
        <ssl_require_client_auth>false</ssl_require_client_auth>
        <ssl_ca_cert_file>/path/to/ssl_ca_cert_file</ssl_ca_cert_file>
        <compression>deflate</compression>
        <compression_level>medium</compression_level>
        <max_send_message_size>-1</max_send_message_size>
        <max_receive_message_size>-1</max_receive_message_size>
        <verbose_logs>false</verbose_logs>
    </grpc>

    <openSSL>
        <server>
            <certificateFile>/etc/clickhouse-server/server.crt</certificateFile>
            <privateKeyFile>/etc/clickhouse-server/server.key</privateKeyFile>
            <dhParamsFile>/etc/clickhouse-server/dhparam.pem</dhParamsFile>
            <verificationMode>none</verificationMode>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
        </server>
        <client>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
            <invalidCertificateHandler>
                <name>RejectCertificateHandler</name>
            </invalidCertificateHandler>
        </client>
    </openSSL>
    <max_concurrent_queries>100</max_concurrent_queries>
    <max_server_memory_usage>0</max_server_memory_usage>
    <max_thread_pool_size>10000</max_thread_pool_size>
    <max_server_memory_usage_to_ram_ratio>0.9</max_server_memory_usage_to_ram_ratio>
    <total_memory_profiler_step>4194304</total_memory_profiler_step>
    <total_memory_tracker_sample_probability>0</total_memory_tracker_sample_probability>
    <uncompressed_cache_size>8589934592</uncompressed_cache_size>
    <mark_cache_size>5368709120</mark_cache_size>
    <mmap_cache_size>1000</mmap_cache_size>
    <compiled_expression_cache_size>134217728</compiled_expression_cache_size>
    <compiled_expression_cache_elements_size>10000</compiled_expression_cache_elements_size>
    <path>/var/lib/clickhouse/</path>
    <tmp_path>/var/lib/clickhouse/tmp/</tmp_path>
    <user_files_path>/var/lib/clickhouse/user_files/</user_files_path>
    <ldap_servers>
    </ldap_servers>
    <user_directories>
        <users_xml>
            <path>users.xml</path>
        </users_xml>
        <local_directory>
            <path>/var/lib/clickhouse/access/</path>
        </local_directory>
    </user_directories>
    <default_profile>default</default_profile>
    <custom_settings_prefixes></custom_settings_prefixes>
    <default_database>default</default_database>
    <mlock_executable>true</mlock_executable>
    <remap_executable>false</remap_executable>

    <![CDATA[
      Uncomment below in order to use JDBC table engine and function.
      To install and run JDBC bridge in background:
      * [Debian/Ubuntu]
        export MVN_URL=https://repo1.maven.org/maven2/ru/yandex/clickhouse/clickhouse-jdbc-bridge
        export PKG_VER=$(curl -sL $MVN_URL/maven-metadata.xml | grep '<release>' | sed -e 's|.*>\(.*\)<.*|\1|')
        wget https://github.com/ClickHouse/clickhouse-jdbc-bridge/releases/download/v$PKG_VER/clickhouse-jdbc-bridge_$PKG_VER-1_all.deb
        apt install --no-install-recommends -f ./clickhouse-jdbc-bridge_$PKG_VER-1_all.deb
        clickhouse-jdbc-bridge &
      * [CentOS/RHEL]
        export MVN_URL=https://repo1.maven.org/maven2/ru/yandex/clickhouse/clickhouse-jdbc-bridge
        export PKG_VER=$(curl -sL $MVN_URL/maven-metadata.xml | grep '<release>' | sed -e 's|.*>\(.*\)<.*|\1|')
        wget https://github.com/ClickHouse/clickhouse-jdbc-bridge/releases/download/v$PKG_VER/clickhouse-jdbc-bridge-$PKG_VER-1.noarch.rpm
        yum localinstall -y clickhouse-jdbc-bridge-$PKG_VER-1.noarch.rpm
        clickhouse-jdbc-bridge &
      Please refer to https://github.com/ClickHouse/clickhouse-jdbc-bridge#usage for more information.
    ]]>

    <remote_servers incl="clickhouse_remote_servers">
        <test_unavailable_shard>
            <shard>
                <replica>
                    <host>localhost</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <replica>
                    <host>localhost</host>
                    <port>1</port>
                </replica>
            </shard>
        </test_unavailable_shard>
    </remote_servers>
    <zookeeper incl="zookeeper-servers">
    </zookeeper>

    <builtin_dictionaries_reload_interval>3600</builtin_dictionaries_reload_interval>
    <max_session_timeout>3600</max_session_timeout>
    <default_session_timeout>60</default_session_timeout>
    <query_log>
        <database>system</database>
        <table>query_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </query_log>
    <trace_log>
        <database>system</database>
        <table>trace_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </trace_log>
    <query_thread_log>
        <database>system</database>
        <table>query_thread_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </query_thread_log>
    <query_views_log>
        <database>system</database>
        <table>query_views_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </query_views_log>
    <part_log>
        <database>system</database>
        <table>part_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </part_log>
    <metric_log>
        <database>system</database>
        <table>metric_log</table>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
        <collect_interval_milliseconds>1000</collect_interval_milliseconds>
    </metric_log>
    <asynchronous_metric_log>
        <database>system</database>
        <table>asynchronous_metric_log</table>
        <flush_interval_milliseconds>7000</flush_interval_milliseconds>
    </asynchronous_metric_log>
    <opentelemetry_span_log>
        <engine>
            engine MergeTree
            partition by toYYYYMM(finish_date)
            order by (finish_date, finish_time_us, trace_id)
        </engine>
        <database>system</database>
        <table>opentelemetry_span_log</table>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </opentelemetry_span_log>
    <crash_log>
        <database>system</database>
        <table>crash_log</table>
        <partition_by />
        <flush_interval_milliseconds>1000</flush_interval_milliseconds>
    </crash_log>
    <session_log>
        <database>system</database>
        <table>session_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </session_log>

    <top_level_domains_lists>
    </top_level_domains_lists>
    <dictionaries_config>*_dictionary.xml</dictionaries_config>
    <user_defined_executable_functions_config>*_function.xml</user_defined_executable_functions_config>
    <encryption_codecs>
    </encryption_codecs>
    <distributed_ddl>
        <path>/clickhouse/task_queue/ddl</path>
    </distributed_ddl>
    <graphite_rollup_example>
        <pattern>
            <regexp>click_cost</regexp>
            <function>any</function>
            <retention>
                <age>0</age>
                <precision>3600</precision>
            </retention>
            <retention>
                <age>86400</age>
                <precision>60</precision>
            </retention>
        </pattern>
        <default>
            <function>max</function>
            <retention>
                <age>0</age>
                <precision>60</precision>
            </retention>
            <retention>
                <age>3600</age>
                <precision>300</precision>
            </retention>
            <retention>
                <age>86400</age>
                <precision>3600</precision>
            </retention>
        </default>
    </graphite_rollup_example>
    <format_schema_path>/var/lib/clickhouse/format_schemas/</format_schema_path>
    <query_masking_rules>
        <rule>
            <name>hide encrypt/decrypt arguments</name>
            <regexp>((?:aes_)?(?:encrypt|decrypt)(?:_mysql)?)\s*\(\s*(?:'(?:\\'|.)+'|.*?)\s*\)</regexp>
            <replace>\1(???)</replace>
        </rule>
    </query_masking_rules>
    <send_crash_reports>
        <enabled>false</enabled>
        <anonymize>false</anonymize>
        <endpoint>https://6f33034cfe684dd7a3ab9875e57b1c8d@o388870.ingest.sentry.io/5226277</endpoint>
    </send_crash_reports>
    <include_from>/etc/metrika.xml</include_from>
</clickhouse>

- metrika.xml

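All three metrika files (metrika1.xml, metrika2.xml and metrika3.xml, each mounted into its container as /etc/metrika.xml) have the same content: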

<yandex>
    <clickhouse_remote_servers>
        <test_cluster>
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck1</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck2</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck3</host>
                    <port>9000</port>
                </replica>
            </shard>
        </test_cluster>
    </clickhouse_remote_servers>
    <zookeeper-servers>
        <node index="1">
            <host>zk1</host>
            <port>2181</port>
        </node>
    </zookeeper-servers>
</yandex>
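A quick way to double-check the cluster layout before starting the containers is to parse the file and list the replicas of each shard. The sketch below uses only Python's standard library; the `cluster_layout` helper is a hypothetical name, and the XML is a trimmed copy of the file above (the internal_replication and ZooKeeper entries are left out for brevity).

```python
import xml.etree.ElementTree as ET

METRIKA_XML = """
<yandex>
  <clickhouse_remote_servers>
    <test_cluster>
      <shard><replica><host>ck1</host><port>9000</port></replica></shard>
      <shard><replica><host>ck2</host><port>9000</port></replica></shard>
      <shard><replica><host>ck3</host><port>9000</port></replica></shard>
    </test_cluster>
  </clickhouse_remote_servers>
</yandex>
"""


def cluster_layout(xml_text, cluster):
    """Return one list of "host:port" strings per shard of the given cluster."""
    root = ET.fromstring(xml_text)
    cluster_node = root.find(f"clickhouse_remote_servers/{cluster}")
    if cluster_node is None:
        raise KeyError(f"cluster {cluster!r} not found")
    layout = []
    for shard in cluster_node.findall("shard"):
        layout.append([
            f"{replica.findtext('host')}:{replica.findtext('port')}"
            for replica in shard.findall("replica")
        ])
    return layout


layout = cluster_layout(METRIKA_XML, "test_cluster")
print(layout)
```

The result shows three shards with one replica each, so in this layout the data is split across ck1, ck2 and ck3 but not replicated between them.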

Once the configuration files have been created in the conf folder, run docker-compose up -d to start the cluster.
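After the containers are up, it can help to confirm that the published native ports actually accept connections before opening a client. This is a small sketch using only Python's standard library; `port_is_open` and `cluster_status` are hypothetical helper names, and it only checks TCP reachability, not that ClickHouse has finished starting.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def cluster_status(ports, host="127.0.0.1"):
    """Map each port to whether it currently accepts TCP connections."""
    return {port: port_is_open(host, port) for port in ports}


if __name__ == "__main__":
    # 9001-9003 are the host ports published for ck1-ck3 in docker-compose.yml.
    for port, ok in cluster_status([9001, 9002, 9003]).items():
        print(port, "open" if ok else "closed")
```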

创建表

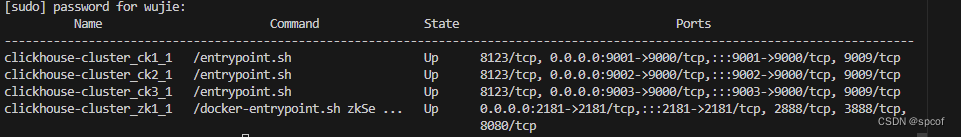

通过docker-compose ps 查看端口号

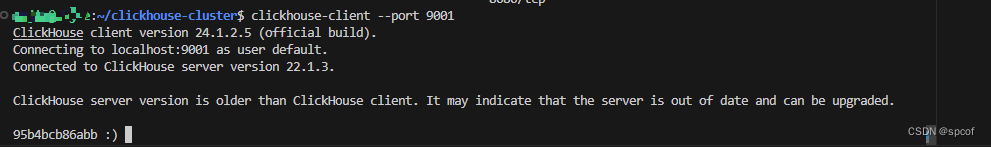

通过clickhouse-client --port 9001 连接数据库

创建数据表

分表在3台节点啥

CREATE TABLE access_logs
(
    `create_time` DateTime,
    `status` String,
    `runtime` String,
    `client_ip` String,
    `method` String,
    `url` String,
    `body` String,
    `response` String,
    `hander` String,
    `request_id` String
)
ENGINE = ReplacingMergeTree
PARTITION BY toYYYYMM(create_time)
PRIMARY KEY request_id
ORDER BY (request_id, create_time)
TTL create_time + toIntervalHour(48)
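The table uses ReplacingMergeTree, which collapses rows that share the same sorting key, here (request_id, create_time). The Python sketch below mimics only the end result of such a merge for a table without a version column, where the most recently inserted row wins. The `replacing_merge` function and the sample rows are made up for illustration. In ClickHouse the collapsing happens during background merges, so duplicates can remain visible for a while, and rows are only merged within the same partition on the same node.

```python
def replacing_merge(rows, sorting_key):
    """Collapse rows that share a sorting key, keeping the last one inserted.

    `rows` is a list of dicts in insertion order; the result is ordered by key.
    """
    latest = {}
    for row in rows:
        key = tuple(row[col] for col in sorting_key)
        latest[key] = row  # a later row replaces an earlier one with the same key
    return [latest[key] for key in sorted(latest)]


rows = [
    {"request_id": "a", "create_time": "2024-01-01 00:00:00", "status": "500"},
    {"request_id": "b", "create_time": "2024-01-01 00:00:05", "status": "200"},
    {"request_id": "a", "create_time": "2024-01-01 00:00:00", "status": "200"},
]
merged = replacing_merge(rows, ("request_id", "create_time"))
print(merged)
```

Two rows remain after the merge: the second insert for request "a" has replaced the first one.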

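Then run the following SQL on any one node:

CREATE TABLE access_logs_all ON CLUSTER test_cluster AS access_logs ENGINE = Distributed(test_cluster, big_data_all, access_logs, rand());

This second statement uses ON CLUSTER to create a Distributed table on every node. The table points at the access_logs table we already created. The arguments of the Distributed engine are:

test_cluster: the name of the cluster

big_data_all: the name of the remote database

access_logs: the name of the remote table

rand(): the sharding key. Here rows are assigned to shards at random; a hash expression such as hiveHash can be used instead.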
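To illustrate how a sharding key turns into a shard choice, here is a Python sketch of the rule described in the ClickHouse documentation: the key value is taken modulo the total weight of the shards, and the remainder selects a shard by its weight range. The function names are hypothetical, equal weights of 1 are assumed because metrika.xml sets no weight, and `zlib.crc32` is only a stand-in for a hash function, not what hiveHash computes.

```python
import random
import zlib


def shard_for(key_value, shard_weights):
    """Map a non-negative integer sharding-key value to a shard index.

    The value is reduced modulo the total weight, then matched against
    consecutive weight ranges; equal weights give a plain modulo.
    """
    remainder = key_value % sum(shard_weights)
    upper = 0
    for index, weight in enumerate(shard_weights):
        upper += weight
        if remainder < upper:
            return index
    raise AssertionError("unreachable: remainder is below the total weight")


WEIGHTS = [1, 1, 1]  # three shards (ck1, ck2, ck3), assumed equal weight


def shard_by_request_id(request_id):
    """Deterministic key: the same request_id always maps to the same shard."""
    return shard_for(zlib.crc32(request_id.encode()), WEIGHTS)


# rand()-style key: the same row could land on a different shard on each insert.
random_shard = shard_for(random.getrandbits(32), WEIGHTS)

print(random_shard, shard_by_request_id("req-42"))
```

One consequence worth keeping in mind: with a random key, two rows with the same request_id can end up on different shards, where ReplacingMergeTree cannot collapse them. A deterministic key based on request_id keeps such rows on one shard.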

Distributed Engine

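ClickHouse's Distributed engine does not store any data itself. Instead, it lets you query tables that are physically spread across multiple nodes. When a query is sent to a Distributed table, the engine forwards it to the local tables on the shards and then combines the results.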

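- When a query is sent to a Distributed table, ClickHouse first splits it into two parts: a main query and a subquery.

- The subquery is sent to each shard of the cluster, which in this setup means every node. Each node runs the subquery against its local table and returns the result.

- The main query runs on the node that hosts the Distributed table. It collects the results of all the subqueries and combines them.

- If the query contains aggregate functions such as SUM() or COUNT(), ClickHouse performs a partial aggregation on each node and the final aggregation on the node that hosts the Distributed table.

- If the query contains an ORDER BY clause, each node sorts its own rows and the final sort is done on the node that hosts the Distributed table.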
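The two-stage execution described in the list can be sketched in plain Python. This is a conceptual model only, not ClickHouse's actual execution engine: the `SHARDS` data and all function names are invented, each inner list stands for the rows of one node's local table, and the "initiator" is the node that received the query on the Distributed table.

```python
import heapq

# Hypothetical per-shard data: (url, runtime_ms) rows held by each local table.
SHARDS = [
    [("/a", 10), ("/b", 30)],
    [("/a", 20)],
    [("/b", 5), ("/c", 50)],
]


def partial_aggregate(rows):
    """Run on each shard: per-URL partial state as (sum, count)."""
    state = {}
    for url, runtime in rows:
        total, count = state.get(url, (0, 0))
        state[url] = (total + runtime, count + 1)
    return state


def final_aggregate(partials):
    """Run on the initiator: merge the partial states from all shards."""
    merged = {}
    for state in partials:
        for url, (total, count) in state.items():
            prev_total, prev_count = merged.get(url, (0, 0))
            merged[url] = (prev_total + total, prev_count + count)
    return merged


def distributed_order_by(shards):
    """Each shard sorts locally; the initiator merges the sorted streams."""
    sorted_streams = [sorted(rows, key=lambda r: r[1]) for rows in shards]
    return list(heapq.merge(*sorted_streams, key=lambda r: r[1]))


totals = final_aggregate(partial_aggregate(rows) for rows in SHARDS)
ordered = distributed_order_by(SHARDS)
print(totals)
print(ordered)
```

The initiator only ever sees small partial states or already sorted streams, which is why the expensive part of the work can run on the shards in parallel.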

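In this way, the Distributed engine can run a query on several nodes in parallel, which improves query performance. And because the data is stored across several nodes, the cluster also offers more storage capacity.

In ClickHouse you can also insert data directly into a Distributed table. When you do, ClickHouse uses the sharding key to route each row to the corresponding shard.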
