LD is tigger forever, CG are not brothers forever, throw the pot and shine.

Efficient work is better than attitude. All right, hide it. Advantages should be hidden.

Talk is selected, show others the code. Keep progress, make a better result.


Overview

A source-code walkthrough of how the Hadoop NameNode starts up.

Main text:

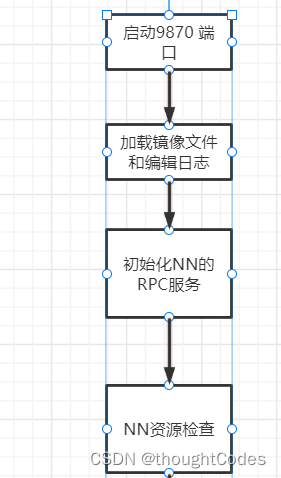

1. Starting the NameNode (entry point: NameNode.main):

```java
public static void main(String argv[]) throws Exception {
  if (DFSUtil.parseHelpArgument(argv, NameNode.USAGE, System.out, true)) {
    System.exit(0);
  }

  try {
    StringUtils.startupShutdownMessage(NameNode.class, argv, LOG);
    // Create the NameNode
    NameNode namenode = createNameNode(argv, null);
    if (namenode != null) {
      namenode.join();
    }
  } catch (Throwable e) {
    LOG.error("Failed to start namenode.", e);
    terminate(1, e);
  }
}
```

Step into createNameNode:

```java
public static NameNode createNameNode(String argv[], Configuration conf)
    throws IOException {
  … …
  StartupOption startOpt = parseArguments(argv);
  if (startOpt == null) {
    printUsage(System.err);
    return null;
  }
  setStartupOption(conf, startOpt);

  boolean aborted = false;
  switch (startOpt) {
  case FORMAT:
    aborted = format(conf, startOpt.getForceFormat(),
        startOpt.getInteractiveFormat());
    terminate(aborted ? 1 : 0);
    return null; // avoid javac warning
  case GENCLUSTERID:
    … …
  default:
    DefaultMetricsSystem.initialize("NameNode");
    // Create the NameNode object
    return new NameNode(conf);
  }
}
```
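The `switch` above dispatches on the parsed startup option. `FORMAT` runs `format()` and then terminates the process. Anything that reaches `default`, including a normal start, constructs a `NameNode`. The toy model below sketches only that control flow. `StartupDispatchDemo` and its nested `SimpleStartupOption` are hypothetical names. Only two options are modeled, while Hadoop's real `StartupOption` and `parseArguments()` handle many more flags.

```java
// Hypothetical, simplified model of the createNameNode() dispatch.
// Only two startup options are modeled; Hadoop's StartupOption has many more.
class StartupDispatchDemo {
    enum SimpleStartupOption {
        FORMAT("-format"),
        REGULAR("-regular");

        final String flag;

        SimpleStartupOption(String flag) {
            this.flag = flag;
        }
    }

    // Returns null for an unrecognized flag, mirroring how createNameNode()
    // prints usage and returns null when parseArguments() yields null.
    static SimpleStartupOption parse(String[] args) {
        if (args.length == 0) {
            return SimpleStartupOption.REGULAR; // assumption: no flags means a normal start
        }
        for (SimpleStartupOption opt : SimpleStartupOption.values()) {
            if (opt.flag.equalsIgnoreCase(args[0])) {
                return opt;
            }
        }
        return null;
    }

    // Describes which branch createNameNode() would take for these arguments.
    static String dispatch(String[] args) {
        SimpleStartupOption opt = parse(args);
        if (opt == null) {
            return "print-usage";
        }
        switch (opt) {
            case FORMAT:
                return "format-then-terminate";
            default:
                return "new-NameNode";
        }
    }

    public static void main(String[] args) {
        System.out.println(dispatch(new String[] {}));          // new-NameNode
        System.out.println(dispatch(new String[] {"-format"})); // format-then-terminate
        System.out.println(dispatch(new String[] {"-bogus"}));  // print-usage
    }
}
```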

Step into the NameNode constructor:

```java
public NameNode(Configuration conf) throws IOException {
  this(conf, NamenodeRole.NAMENODE);
}

protected NameNode(Configuration conf, NamenodeRole role)
    throws IOException {
  … …
  try {
    initializeGenericKeys(conf, nsId, namenodeId);
    initialize(getConf());
    … …
  } catch (IOException e) {
    this.stopAtException(e);
    throw e;
  } catch (HadoopIllegalArgumentException e) {
    this.stopAtException(e);
    throw e;
  }
  this.started.set(true);
}
```

Step into initialize:

```java
protected void initialize(Configuration conf) throws IOException {
  … …
  if (NamenodeRole.NAMENODE == role) {
    // Start the HTTP server (port 9870 by default)
    startHttpServer(conf);
  }

  // Load the fsimage and edit log into memory
  loadNamesystem(conf);
  startAliasMapServerIfNecessary(conf);

  // Create the NameNode's RPC server
  rpcServer = createRpcServer(conf);

  initReconfigurableBackoffKey();

  if (clientNamenodeAddress == null) {
    // This is expected for MiniDFSCluster. Set it now using
    // the RPC server's bind address.
    clientNamenodeAddress =
        NetUtils.getHostPortString(getNameNodeAddress());
    LOG.info("Clients are to use " + clientNamenodeAddress + " to access"
        + " this namenode/service.");
  }
  if (NamenodeRole.NAMENODE == role) {
    httpServer.setNameNodeAddress(getNameNodeAddress());
    httpServer.setFSImage(getFSImage());
  }

  // NameNode startup resource checks (and common services)
  startCommonServices(conf);
  startMetricsLogger(conf);
}
```

1.1 Starting the HTTP service on port 9870

1) Step into startHttpServer

NameNode.java

```java
private void startHttpServer(final Configuration conf) throws IOException {
  httpServer = new NameNodeHttpServer(conf, this, getHttpServerBindAddress(conf));
  httpServer.start();
  httpServer.setStartupProgress(startupProgress);
}

protected InetSocketAddress getHttpServerBindAddress(Configuration conf) {
  InetSocketAddress bindAddress = getHttpServerAddress(conf);
  … …
  return bindAddress;
}

protected InetSocketAddress getHttpServerAddress(Configuration conf) {
  return getHttpAddress(conf);
}

public static InetSocketAddress getHttpAddress(Configuration conf) {
  return NetUtils.createSocketAddr(
      conf.getTrimmed(DFS_NAMENODE_HTTP_ADDRESS_KEY,
          DFS_NAMENODE_HTTP_ADDRESS_DEFAULT));
}

// DFSConfigKeys.java
public static final String DFS_NAMENODE_HTTP_ADDRESS_DEFAULT = "0.0.0.0:" +
    DFS_NAMENODE_HTTP_PORT_DEFAULT;
public static final int DFS_NAMENODE_HTTP_PORT_DEFAULT =
    HdfsClientConfigKeys.DFS_NAMENODE_HTTP_PORT_DEFAULT;

// HdfsClientConfigKeys.java
int DFS_NAMENODE_HTTP_PORT_DEFAULT = 9870;
```
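Putting the constants together: unless `dfs.namenode.http-address` is set, the bind address string is `"0.0.0.0:" + 9870`, which is then split into a host and a port. The sketch below reproduces that fallback in plain Java. It makes two assumptions: a `Map` stands in for Hadoop's `Configuration`, and `parseHostPort()` is a hypothetical, much simpler stand-in for `NetUtils.createSocketAddr()`. It does no validation, no IPv6 handling and no DNS resolution.

```java
import java.net.InetSocketAddress;
import java.util.Collections;
import java.util.Map;

// Sketch of how the NameNode's HTTP bind address falls back to 0.0.0.0:9870.
// Assumptions: a Map replaces Hadoop's Configuration, and parseHostPort() is a
// simplified, hypothetical substitute for NetUtils.createSocketAddr().
class HttpAddressDemo {
    static final int DFS_NAMENODE_HTTP_PORT_DEFAULT = 9870;
    static final String DFS_NAMENODE_HTTP_ADDRESS_DEFAULT =
        "0.0.0.0:" + DFS_NAMENODE_HTTP_PORT_DEFAULT;
    static final String DFS_NAMENODE_HTTP_ADDRESS_KEY = "dfs.namenode.http-address";

    // Splits "host:port"; createUnresolved() avoids any DNS lookup.
    static InetSocketAddress parseHostPort(String hostPort) {
        int colon = hostPort.lastIndexOf(':');
        String host = hostPort.substring(0, colon);
        int port = Integer.parseInt(hostPort.substring(colon + 1));
        return InetSocketAddress.createUnresolved(host, port);
    }

    // Mirrors conf.getTrimmed(KEY, DEFAULT) followed by address parsing.
    static InetSocketAddress getHttpAddress(Map<String, String> conf) {
        String value = conf.getOrDefault(DFS_NAMENODE_HTTP_ADDRESS_KEY,
            DFS_NAMENODE_HTTP_ADDRESS_DEFAULT).trim();
        return parseHostPort(value);
    }

    public static void main(String[] args) {
        InetSocketAddress addr = getHttpAddress(Collections.emptyMap());
        System.out.println(addr.getHostString() + ":" + addr.getPort()); // 0.0.0.0:9870
    }
}
```

Binding to `0.0.0.0` means the web UI listens on all interfaces, which is why `http://<namenode-host>:9870` is reachable from other machines by default.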

2) Step into httpServer.start(), called from startHttpServer (NameNodeHttpServer.java):

```java
void start() throws IOException {
  … …
  // Hadoop wraps its own HTTP server implementation, HttpServer2
  HttpServer2.Builder builder = DFSUtil.httpServerTemplateForNNAndJN(conf,
      httpAddr, httpsAddr, "hdfs",
      DFSConfigKeys.DFS_NAMENODE_KERBEROS_INTERNAL_SPNEGO_PRINCIPAL_KEY,
      DFSConfigKeys.DFS_NAMENODE_KEYTAB_FILE_KEY);
  … …
  httpServer = builder.build();
  … …
  httpServer.setAttribute(NAMENODE_ATTRIBUTE_KEY, nn);
  httpServer.setAttribute(JspHelper.CURRENT_CONF, conf);
  setupServlets(httpServer, conf);
  httpServer.start();
  … …
}
```

Step into setupServlets:

```java
private static void setupServlets(HttpServer2 httpServer, Configuration conf) {
  httpServer.addInternalServlet("startupProgress",
      StartupProgressServlet.PATH_SPEC, StartupProgressServlet.class);
  httpServer.addInternalServlet("fsck", "/fsck", FsckServlet.class,
      true);
  httpServer.addInternalServlet("imagetransfer", ImageServlet.PATH_SPEC,
      ImageServlet.class, true);
}
```

1.2 Loading the fsimage and edit log

1) Step into loadNamesystem

```java
protected void loadNamesystem(Configuration conf) throws IOException {
  this.namesystem = FSNamesystem.loadFromDisk(conf);
}

// FSNamesystem.java
static FSNamesystem loadFromDisk(Configuration conf) throws IOException {
  checkConfiguration(conf);
  FSImage fsImage = new FSImage(conf,
      FSNamesystem.getNamespaceDirs(conf),
      FSNamesystem.getNamespaceEditsDirs(conf));
  FSNamesystem namesystem = new FSNamesystem(conf, fsImage, false);
  StartupOption startOpt = NameNode.getStartupOption(conf);
  if (startOpt == StartupOption.RECOVER) {
    namesystem.setSafeMode(SafeModeAction.SAFEMODE_ENTER);
  }

  long loadStart = monotonicNow();
  try {
    namesystem.loadFSImage(startOpt);
  } catch (IOException ioe) {
    LOG.warn("Encountered exception loading fsimage", ioe);
    fsImage.close();
    throw ioe;
  }
  long timeTakenToLoadFSImage = monotonicNow() - loadStart;
  LOG.info("Finished loading FSImage in " + timeTakenToLoadFSImage + " msecs");
  NameNodeMetrics nnMetrics = NameNode.getNameNodeMetrics();
  if (nnMetrics != null) {
    nnMetrics.setFsImageLoadTime((int) timeTakenToLoadFSImage);
  }
  namesystem.getFSDirectory().createReservedStatuses(namesystem.getCTime());
  return namesystem;
}
```
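`loadFromDisk()` times the fsimage load with `monotonicNow()` rather than the wall clock. Because of that, a clock adjustment during a long load (for example by NTP) cannot produce a negative or inflated duration. Hadoop's `Time.monotonicNow()` is derived from `System.nanoTime()`. The sketch below reproduces the same timing pattern. `LoadTimerDemo` and `timeMs()` are hypothetical, and a short sleep stands in for `loadFSImage()`.

```java
import java.util.concurrent.TimeUnit;

// Sketch of the timing pattern used in loadFromDisk().
// Assumption: monotonicNowMs() mirrors Hadoop's Time.monotonicNow(),
// which is based on System.nanoTime() rather than the wall clock.
class LoadTimerDemo {
    static long monotonicNowMs() {
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime());
    }

    // Runs the task and returns its duration in milliseconds.
    static long timeMs(Runnable task) {
        long start = monotonicNowMs();
        task.run();
        return monotonicNowMs() - start;
    }

    public static void main(String[] args) {
        long elapsed = timeMs(() -> {
            try {
                Thread.sleep(50); // stand-in for namesystem.loadFSImage(startOpt)
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        System.out.println("Finished loading FSImage in " + elapsed + " msecs");
    }
}
```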

1.3 Initializing the NameNode RPC server

```java
protected NameNodeRpcServer createRpcServer(Configuration conf)
    throws IOException {
  return new NameNodeRpcServer(conf, this);
}
```

NameNodeRpcServer.java

```java
public NameNodeRpcServer(Configuration conf, NameNode nn)
    throws IOException {
  … …
  serviceRpcServer = new RPC.Builder(conf)
      .setProtocol(
          org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB.class)
      .setInstance(clientNNPbService)
      .setBindAddress(bindHost)
      .setPort(serviceRpcAddr.getPort())
      .setNumHandlers(serviceHandlerCount)
      .setVerbose(false)
      .setSecretManager(namesystem.getDelegationTokenSecretManager())
      .build();
  … …
}
```
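`RPC.Builder` is a fluent builder. Every setter returns the builder itself, and `build()` creates the server from the accumulated settings. The `RpcServerSpec` class below is hypothetical and only illustrates the pattern. It is not Hadoop's API and starts no server, and its setters merely mirror the names used above.

```java
// Hypothetical, minimal fluent builder illustrating the RPC.Builder pattern.
// RpcServerSpec and its fields are invented for this sketch; they are not Hadoop classes.
final class RpcServerSpec {
    final String protocol;
    final String bindHost;
    final int port;
    final int numHandlers;
    final boolean verbose;

    private RpcServerSpec(Builder b) {
        this.protocol = b.protocol;
        this.bindHost = b.bindHost;
        this.port = b.port;
        this.numHandlers = b.numHandlers;
        this.verbose = b.verbose;
    }

    static final class Builder {
        private String protocol;
        private String bindHost = "0.0.0.0";
        private int port;
        private int numHandlers = 1;
        private boolean verbose;

        // Each setter returns this so calls can be chained.
        Builder setProtocol(String protocol) { this.protocol = protocol; return this; }
        Builder setBindAddress(String host) { this.bindHost = host; return this; }
        Builder setPort(int port) { this.port = port; return this; }
        Builder setNumHandlers(int n) { this.numHandlers = n; return this; }
        Builder setVerbose(boolean verbose) { this.verbose = verbose; return this; }

        RpcServerSpec build() {
            // Validate required settings once, at build time
            if (protocol == null) {
                throw new IllegalStateException("protocol is required");
            }
            return new RpcServerSpec(this);
        }
    }

    public static void main(String[] args) {
        RpcServerSpec spec = new Builder()
            .setProtocol("ClientNamenodeProtocolPB")
            .setBindAddress("0.0.0.0")
            .setPort(8020) // example port
            .setNumHandlers(10)
            .setVerbose(false)
            .build();
        System.out.println(spec.protocol + " on " + spec.bindHost + ":" + spec.port);
    }
}
```

The builder keeps the constructor private, so a half-configured server object can never be observed. Validation happens in one place, `build()`.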

1.4 NameNode startup resource check

1) Step into startCommonServices (NameNode.java)

```java
private void startCommonServices(Configuration conf) throws IOException {
  namesystem.startCommonServices(conf, haContext);
  registerNNSMXBean();
  if (NamenodeRole.NAMENODE != role) {
    startHttpServer(conf);
    httpServer.setNameNodeAddress(getNameNodeAddress());
    httpServer.setFSImage(getFSImage());
  }
  rpcServer.start();
  try {
    plugins = conf.getInstances(DFS_NAMENODE_PLUGINS_KEY,
        ServicePlugin.class);
  } catch (RuntimeException e) {
    String pluginsValue = conf.get(DFS_NAMENODE_PLUGINS_KEY);
    LOG.error("Unable to load NameNode plugins. Specified list of plugins: " +
        pluginsValue, e);
    throw e;
  }
  … …
}
```

2) Step into namesystem.startCommonServices (FSNamesystem.java)

```java
void startCommonServices(Configuration conf, HAContext haContext) throws IOException {
  this.registerMBean(); // register the MBean for the FSNamesystemState
  writeLock();
  this.haContext = haContext;
  try {
    nnResourceChecker = new NameNodeResourceChecker(conf);
    // Check that the volumes holding the metadata (fsimage, edit log)
    // have enough free disk space; 100 MB must stay free by default
    checkAvailableResources();
    assert !blockManager.isPopulatingReplQueues();
    StartupProgress prog = NameNode.getStartupProgress();
    prog.beginPhase(Phase.SAFEMODE);
    long completeBlocksTotal = getCompleteBlocksTotal();
    // Safe mode
    prog.setTotal(Phase.SAFEMODE, STEP_AWAITING_REPORTED_BLOCKS,
        completeBlocksTotal);
    // Start the block services
    blockManager.activate(conf, completeBlocksTotal);
  } finally {
    writeUnlock("startCommonServices");
  }

  registerMXBean();
  DefaultMetricsSystem.instance().register(this);
  if (inodeAttributeProvider != null) {
    inodeAttributeProvider.start();
    dir.setINodeAttributeProvider(inodeAttributeProvider);
  }
  snapshotManager.registerMXBean();
  InetSocketAddress serviceAddress = NameNode.getServiceAddress(conf, true);
  this.nameNodeHostName = (serviceAddress != null) ?
      serviceAddress.getHostName() : "";
}
```


Step into NameNodeResourceChecker

NameNodeResourceChecker.java

```java
public NameNodeResourceChecker(Configuration conf) throws IOException {
  this.conf = conf;
  volumes = new HashMap<String, CheckedVolume>();

  // dfs.namenode.resource.du.reserved defaults to 1024 * 1024 * 100 bytes = 100 MB
  duReserved = conf.getLong(DFSConfigKeys.DFS_NAMENODE_DU_RESERVED_KEY,
      DFSConfigKeys.DFS_NAMENODE_DU_RESERVED_DEFAULT);

  Collection<URI> extraCheckedVolumes = Util.stringCollectionAsURIs(conf
      .getTrimmedStringCollection(DFSConfigKeys.DFS_NAMENODE_CHECKED_VOLUMES_KEY));

  Collection<URI> localEditDirs = Collections2.filter(
      FSNamesystem.getNamespaceEditsDirs(conf),
      new Predicate<URI>() {
        @Override
        public boolean apply(URI input) {
          if (input.getScheme().equals(NNStorage.LOCAL_URI_SCHEME)) {
            return true;
          }
          return false;
        }
      });

  // Register every local edits directory for the resource check
  for (URI editsDirToCheck : localEditDirs) {
    addDirToCheck(editsDirToCheck,
        FSNamesystem.getRequiredNamespaceEditsDirs(conf).contains(
            editsDirToCheck));
  }

  // All extra checked volumes are marked "required"
  for (URI extraDirToCheck : extraCheckedVolumes) {
    addDirToCheck(extraDirToCheck, true);
  }

  minimumRedundantVolumes = conf.getInt(
      DFSConfigKeys.DFS_NAMENODE_CHECKED_VOLUMES_MINIMUM_KEY,
      DFSConfigKeys.DFS_NAMENODE_CHECKED_VOLUMES_MINIMUM_DEFAULT);
}
```
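The anonymous `Predicate` keeps only edits directories whose URI scheme is `file`. Non-local edits directories are therefore skipped, such as a `qjournal://` URI pointing at JournalNodes. The sketch below expresses the same filter with `java.util.stream` instead of Guava's `Collections2.filter()`. `LocalDirFilterDemo` is a hypothetical name, and the example paths are made up.

```java
import java.net.URI;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Sketch of the "local edits directories only" filter in NameNodeResourceChecker,
// written with java.util.stream instead of Guava's Collections2.filter().
// Assumption: LOCAL_URI_SCHEME mirrors NNStorage.LOCAL_URI_SCHEME ("file").
class LocalDirFilterDemo {
    static final String LOCAL_URI_SCHEME = "file";

    static List<URI> localOnly(List<URI> editsDirs) {
        return editsDirs.stream()
            // Constant-first equals() also tolerates URIs without a scheme
            .filter(uri -> LOCAL_URI_SCHEME.equals(uri.getScheme()))
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<URI> editsDirs = Arrays.asList(
            URI.create("file:///data/hadoop/name"),                   // local directory
            URI.create("qjournal://jn1:8485;jn2:8485;jn3:8485/ns1")); // shared JournalNode edits
        System.out.println(localOnly(editsDirs)); // [file:///data/hadoop/name]
    }
}
```

One small design difference: the original calls `input.getScheme().equals(...)`, which would throw on a scheme-less URI. Putting the constant first avoids that.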

Step into checkAvailableResources (FSNamesystem.java)

```java
void checkAvailableResources() {
  long resourceCheckTime = monotonicNow();
  Preconditions.checkState(nnResourceChecker != null,
      "nnResourceChecker not initialized");
  // Check whether resources are sufficient; false if they are not
  hasResourcesAvailable = nnResourceChecker.hasAvailableDiskSpace();
  resourceCheckTime = monotonicNow() - resourceCheckTime;
  NameNode.getNameNodeMetrics().addResourceCheckTime(resourceCheckTime);
}
```

NameNodeResourceChecker.java

```java
public boolean hasAvailableDiskSpace() {
  return NameNodeResourcePolicy.areResourcesAvailable(volumes.values(),
      minimumRedundantVolumes);
}
```

NameNodeResourcePolicy.java

```java
static boolean areResourcesAvailable(
    Collection<? extends CheckableNameNodeResource> resources,
    int minimumRedundantResources) {

  // TODO: workaround:
  // - during startup, if there are no edits dirs on disk, then there is
  // a call to areResourcesAvailable() with no dirs at all, which was
  // previously causing the NN to enter safemode
  if (resources.isEmpty()) {
    return true;
  }

  int requiredResourceCount = 0;
  int redundantResourceCount = 0;
  int disabledRedundantResourceCount = 0;
  // Check whether the resources are sufficient
  for (CheckableNameNodeResource resource : resources) {
    if (!resource.isRequired()) {
      redundantResourceCount++;
      if (!resource.isResourceAvailable()) {
        disabledRedundantResourceCount++;
      }
    } else {
      requiredResourceCount++;
      if (!resource.isResourceAvailable()) {
        // Short circuit - a required resource is not available, so return false
        return false;
      }
    }
  }

  if (redundantResourceCount == 0) {
    // If there are no redundant resources, return true if there are any
    // required resources available.
    return requiredResourceCount > 0;
  } else {
    return redundantResourceCount - disabledRedundantResourceCount >=
        minimumRedundantResources;
  }
}

// CheckableNameNodeResource.java
interface CheckableNameNodeResource {
  public boolean isResourceAvailable();
  public boolean isRequired();
}
```

```java
// NameNodeResourceChecker.CheckedVolume
public boolean isResourceAvailable() {
  // Free space currently available on this volume
  long availableSpace = df.getAvailable();
  if (LOG.isDebugEnabled()) {
    LOG.debug("Space available on volume '" + volume + "' is "
        + availableSpace);
  }
  // If the available space is below the reserved amount (100 MB by default), return false
  if (availableSpace < duReserved) {
    LOG.warn("Space available on volume '" + volume + "' is " + availableSpace +
        ", which is below the configured reserved amount " + duReserved);
    return false;
  } else {
    return true;
  }
}
```
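Taken together, the policy reduces to three rules:

- An unavailable required volume fails the check immediately.
- With no redundant volumes, the check passes as long as every required volume has space.
- Otherwise, at least `minimumRedundantResources` redundant volumes must still have free space at or above `duReserved`.

The plain-Java sketch below re-implements that logic for experimentation. `Volume` is a hypothetical stand-in for `CheckedVolume`, and a fixed `availableBytes` value replaces the real `df.getAvailable()` call.

```java
import java.util.Arrays;
import java.util.Collection;

// Plain-Java re-implementation of NameNodeResourcePolicy.areResourcesAvailable()
// plus the CheckedVolume reserved-space rule, for experimentation only.
// Volume is hypothetical: availableBytes replaces the real df.getAvailable() call.
class ResourcePolicyDemo {
    static final long MB = 1024L * 1024;
    static final long DU_RESERVED_DEFAULT = 100 * MB; // dfs.namenode.resource.du.reserved

    static final class Volume {
        final boolean required;
        final long availableBytes;
        final long duReserved;

        Volume(boolean required, long availableBytes, long duReserved) {
            this.required = required;
            this.availableBytes = availableBytes;
            this.duReserved = duReserved;
        }

        // Same rule as CheckedVolume.isResourceAvailable(): below reserved => unavailable
        boolean isResourceAvailable() {
            return availableBytes >= duReserved;
        }
    }

    static boolean areResourcesAvailable(Collection<Volume> resources,
                                         int minimumRedundantResources) {
        if (resources.isEmpty()) {
            return true; // same startup workaround as the original
        }
        int requiredResourceCount = 0;
        int redundantResourceCount = 0;
        int disabledRedundantResourceCount = 0;
        for (Volume resource : resources) {
            if (!resource.required) {
                redundantResourceCount++;
                if (!resource.isResourceAvailable()) {
                    disabledRedundantResourceCount++;
                }
            } else {
                requiredResourceCount++;
                if (!resource.isResourceAvailable()) {
                    return false; // a required volume is short on space
                }
            }
        }
        if (redundantResourceCount == 0) {
            return requiredResourceCount > 0;
        }
        return redundantResourceCount - disabledRedundantResourceCount
            >= minimumRedundantResources;
    }

    public static void main(String[] args) {
        Volume okRequired = new Volume(true, 500 * MB, DU_RESERVED_DEFAULT);
        Volume lowRequired = new Volume(true, 50 * MB, DU_RESERVED_DEFAULT);
        Volume okRedundant = new Volume(false, 500 * MB, DU_RESERVED_DEFAULT);
        Volume lowRedundant = new Volume(false, 50 * MB, DU_RESERVED_DEFAULT);

        System.out.println(areResourcesAvailable(Arrays.asList(okRequired), 1));                // true
        System.out.println(areResourcesAvailable(Arrays.asList(okRequired, lowRequired), 1));   // false
        System.out.println(areResourcesAvailable(Arrays.asList(okRedundant, lowRedundant), 1)); // true
        System.out.println(areResourcesAvailable(Arrays.asList(lowRedundant), 1));              // false
    }
}
```

In the real NameNode, this result ends up in `hasResourcesAvailable`. As the TODO comment above hints, running out of resources is what pushes the NameNode into safe mode.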

1.5 How the NameNode detects DataNode heartbeat timeouts

Press Ctrl + N to search for the NameNode class, then Ctrl + F to search for startCommonServices.

Step into namesystem.startCommonServices(conf, haContext);

Step into blockManager.activate(conf, completeBlocksTotal);

Step into datanodeManager.activate(conf);

DatanodeManager.java

```java
void activate(final Configuration conf) {
  datanodeAdminManager.activate(conf);
  heartbeatManager.activate();
}
```

HeartbeatManager.java

```java
void activate() {
  // Starts the heartbeat thread; look at its run() method
  heartbeatThread.start();
}
```

```java
// HeartbeatManager.Monitor
public void run() {
  while(namesystem.isRunning()) {
    restartHeartbeatStopWatch();
    try {
      final long now = Time.monotonicNow();
      if (lastHeartbeatCheck + heartbeatRecheckInterval < now) {
        // Heartbeat check
        heartbeatCheck();
        lastHeartbeatCheck = now;
      }
      if (blockManager.shouldUpdateBlockKey(now - lastBlockKeyUpdate)) {
        synchronized(HeartbeatManager.this) {
          for(DatanodeDescriptor d : datanodes) {
            d.setNeedKeyUpdate(true);
          }
        }
        lastBlockKeyUpdate = now;
      }
    } catch (Exception e) {
      LOG.error("Exception while checking heartbeat", e);
    }
    try {
      Thread.sleep(5000);  // 5 seconds
    } catch (InterruptedException ignored) {
    }
    // avoid declaring nodes dead for another cycle if a GC pause lasts
    // longer than the node recheck interval
    if (shouldAbortHeartbeatCheck(-5000)) {
      LOG.warn("Skipping next heartbeat scan due to excessive pause");
      lastHeartbeatCheck = Time.monotonicNow();
    }
  }
}
```
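The monitor thread wakes up roughly every 5 seconds. However, `heartbeatCheck()` only runs once more than `heartbeatRecheckInterval` has passed since the previous check. The simulation below replays that condition with a fake clock instead of real threads. `countChecks()` is a hypothetical helper. The fake clock starts at 0, and the recheck interval is set to its 5-minute default.

```java
// Simulation of the HeartbeatManager monitor loop's timing with a fake clock.
// Assumptions: the loop polls every sleepMs (5 s above) and the clock starts at 0;
// no real threads or sleeping are involved.
class HeartbeatLoopDemo {
    // Returns how many times heartbeatCheck() would fire within durationMs.
    static int countChecks(long durationMs, long sleepMs, long recheckIntervalMs) {
        long lastHeartbeatCheck = 0;
        int checks = 0;
        for (long now = 0; now <= durationMs; now += sleepMs) {
            // Same condition as Monitor.run()
            if (lastHeartbeatCheck + recheckIntervalMs < now) {
                checks++; // heartbeatCheck() would run here
                lastHeartbeatCheck = now;
            }
        }
        return checks;
    }

    public static void main(String[] args) {
        // 30 minutes of simulated time, 5 s polling, 5 min recheck interval
        System.out.println(countChecks(30 * 60 * 1000L, 5000L, 5 * 60 * 1000L)); // 5
    }
}
```

The comparison is a strict `<` and the thread only polls every 5 s. As a result, in this model the checks land 305 s apart (at 305 s, 610 s, and so on) rather than exactly every 300 s.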

```java
void heartbeatCheck() {
  final DatanodeManager dm = blockManager.getDatanodeManager();
  boolean allAlive = false;
  while (!allAlive) {
    // locate the first dead node.
    DatanodeDescriptor dead = null;
    // locate the first failed storage that isn't on a dead node.
    DatanodeStorageInfo failedStorage = null;
    // check the number of stale nodes
    int numOfStaleNodes = 0;
    int numOfStaleStorages = 0;
    synchronized(this) {
      for (DatanodeDescriptor d : datanodes) {
        // check if an excessive GC pause has occurred
        if (shouldAbortHeartbeatCheck(0)) {
          return;
        }
        // Check whether this DataNode is dead (its heartbeat has expired)
        if (dead == null && dm.isDatanodeDead(d)) {
          stats.incrExpiredHeartbeats();
          dead = d;
        }
        if (d.isStale(dm.getStaleInterval())) {
          numOfStaleNodes++;
        }
        DatanodeStorageInfo[] storageInfos = d.getStorageInfos();
        for(DatanodeStorageInfo storageInfo : storageInfos) {
          if (storageInfo.areBlockContentsStale()) {
            numOfStaleStorages++;
          }
          if (failedStorage == null &&
              storageInfo.areBlocksOnFailedStorage() &&
              d != dead) {
            failedStorage = storageInfo;
          }
        }
      }
      // Set the number of stale nodes in the DatanodeManager
      dm.setNumStaleNodes(numOfStaleNodes);
      dm.setNumStaleStorages(numOfStaleStorages);
    }
    … …
  }
}
```
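Stripped of locking and storage bookkeeping, each pass of the loop above does two things. It finds at most one dead DataNode (the first whose last heartbeat is older than `heartbeatExpireInterval`), and it counts stale nodes. The outer `while (!allAlive)` loop then repeats the scan. The sketch below models a single pass. `Node`, `ScanResult` and `scan()` are hypothetical. The 630 000 ms expiry corresponds to the "10 minutes + 30 seconds" default, and the 30 s stale interval is just an example value.

```java
import java.util.Arrays;
import java.util.List;

// Simplified model of one pass of HeartbeatManager.heartbeatCheck():
// locate the first dead node and count stale nodes. Node is a hypothetical
// stand-in for DatanodeDescriptor; storages, locking and the GC-pause abort are omitted.
class HeartbeatScanDemo {
    static final class Node {
        final String name;
        final long lastUpdateMs; // monotonic time of the node's last heartbeat

        Node(String name, long lastUpdateMs) {
            this.name = name;
            this.lastUpdateMs = lastUpdateMs;
        }
    }

    static final class ScanResult {
        final Node firstDead; // null when every node is alive
        final int staleCount;

        ScanResult(Node firstDead, int staleCount) {
            this.firstDead = firstDead;
            this.staleCount = staleCount;
        }
    }

    static ScanResult scan(List<Node> nodes, long nowMs,
                           long expireIntervalMs, long staleIntervalMs) {
        Node dead = null;
        int stale = 0;
        for (Node d : nodes) {
            // Same comparison as DatanodeManager.isDatanodeDead()
            if (dead == null && d.lastUpdateMs < nowMs - expireIntervalMs) {
                dead = d;
            }
            // Assumption: stale means the last heartbeat is at least staleIntervalMs old
            if (nowMs - d.lastUpdateMs >= staleIntervalMs) {
                stale++;
            }
        }
        return new ScanResult(dead, stale);
    }

    public static void main(String[] args) {
        long now = 1_000_000L;
        List<Node> nodes = Arrays.asList(
            new Node("dn1", now - 10_000),   // 10 s ago: healthy
            new Node("dn2", now - 100_000),  // 100 s ago: stale but alive
            new Node("dn3", now - 700_000)); // 700 s ago: dead (and stale)
        ScanResult r = scan(nodes, now, 630_000L, 30_000L);
        System.out.println("dead=" + r.firstDead.name + " stale=" + r.staleCount); // dead=dn3 stale=2
    }
}
```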

```java
// DatanodeManager.java
boolean isDatanodeDead(DatanodeDescriptor node) {
  return (node.getLastUpdateMonotonic() <
      (monotonicNow() - heartbeatExpireInterval));
}

private long heartbeatExpireInterval;
// 10 minutes + 30 seconds with the default settings
this.heartbeatExpireInterval = 2 * heartbeatRecheckInterval + 10 * 1000 *
    heartbeatIntervalSeconds;

private volatile int heartbeatRecheckInterval;
// Defaults to 5 minutes
heartbeatRecheckInterval = conf.getInt(
    DFSConfigKeys.DFS_NAMENODE_HEARTBEAT_RECHECK_INTERVAL_KEY,
    DFSConfigKeys.DFS_NAMENODE_HEARTBEAT_RECHECK_INTERVAL_DEFAULT);

private volatile long heartbeatIntervalSeconds;
heartbeatIntervalSeconds = conf.getTimeDuration(
    DFSConfigKeys.DFS_HEARTBEAT_INTERVAL_KEY,
    DFSConfigKeys.DFS_HEARTBEAT_INTERVAL_DEFAULT,
    TimeUnit.SECONDS);

// DFSConfigKeys.java
public static final long DFS_HEARTBEAT_INTERVAL_DEFAULT = 3; // 3 seconds
```
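With the defaults above, the expiry interval works out to 2 × 300 000 ms + 10 × 1000 × 3 = 630 000 ms, i.e. 10 minutes 30 seconds. A DataNode that has not sent a heartbeat for longer than that is declared dead. The sketch below replays that arithmetic and the `isDatanodeDead()` comparison. The helper names are hypothetical; only the formulas come from the source.

```java
import java.util.concurrent.TimeUnit;

// Worked example of DatanodeManager's heartbeatExpireInterval with the default
// settings: recheck interval 5 minutes, heartbeat interval 3 seconds.
// The helper names are hypothetical; only the formulas come from the source.
class ExpireIntervalDemo {
    static long heartbeatExpireInterval(long recheckIntervalMs, long heartbeatIntervalSeconds) {
        return 2 * recheckIntervalMs + 10 * 1000 * heartbeatIntervalSeconds;
    }

    // Same comparison as DatanodeManager.isDatanodeDead()
    static boolean isDatanodeDead(long lastUpdateMs, long nowMs, long expireMs) {
        return lastUpdateMs < nowMs - expireMs;
    }

    public static void main(String[] args) {
        long expire = heartbeatExpireInterval(TimeUnit.MINUTES.toMillis(5), 3);
        System.out.println(expire + " ms = " + TimeUnit.MILLISECONDS.toSeconds(expire) + " s"); // 630000 ms = 630 s

        long now = TimeUnit.HOURS.toMillis(1);
        System.out.println(isDatanodeDead(now - 600_000, now, expire)); // false: silent for 10 min
        System.out.println(isDatanodeDead(now - 631_000, now, expire)); // true: silent for 10 min 31 s
    }
}
```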

1.6 Safe mode

```java
void startCommonServices(Configuration conf, HAContext haContext) throws IOException {
  this.registerMBean(); // register the MBean for the FSNamesystemState
  writeLock();
  this.haContext = haContext;
  try {
    nnResourceChecker = new NameNodeResourceChecker(conf);
    // Check that the volumes holding the metadata (fsimage, edit log)
    // have enough free disk space; 100 MB must stay free by default
    checkAvailableResources();
    assert !blockManager.isPopulatingReplQueues();
    StartupProgress prog = NameNode.getStartupProgress();
    // Begin the safe-mode phase
    prog.beginPhase(Phase.SAFEMODE);
    // Number of complete (usable) blocks
    long completeBlocksTotal = getCompleteBlocksTotal();
    prog.setTotal(Phase.SAFEMODE, STEP_AWAITING_REPORTED_BLOCKS,
        completeBlocksTotal);
    // Start the block services
    blockManager.activate(conf, completeBlocksTotal);
  } finally {
    writeUnlock("startCommonServices");
  }

  registerMXBean();
  DefaultMetricsSystem.instance().register(this);
  if (inodeAttributeProvider != null) {
    inodeAttributeProvider.start();
    dir.setINodeAttributeProvider(inodeAttributeProvider);
  }
  snapshotManager.registerMXBean();
  InetSocketAddress serviceAddress = NameNode.getServiceAddress(conf, true);
  this.nameNodeHostName = (serviceAddress != null) ?
      serviceAddress.getHostName() : "";
}
```

Step into getCompleteBlocksTotal

```java
public long getCompleteBlocksTotal() {
  // Calculate number of blocks under construction
  long numUCBlocks = 0;
  readLock();
  try {
    // Number of blocks still under construction
    numUCBlocks = leaseManager.getNumUnderConstructionBlocks();
    // All blocks - blocks under construction = complete (usable) blocks
    return getBlocksTotal() - numUCBlocks;
  } finally {
    readUnlock("getCompleteBlocksTotal");
  }
}
```

Step into activate (BlockManager.java)

```java
public void activate(Configuration conf, long blockTotal) {
  pendingReconstruction.start();
  datanodeManager.activate(conf);
  this.redundancyThread.setName("RedundancyMonitor");
  this.redundancyThread.start();
  storageInfoDefragmenterThread.setName("StorageInfoMonitor");
  storageInfoDefragmenterThread.start();
  this.blockReportThread.start();
  mxBeanName = MBeans.register("NameNode", "BlockStats", this);
  bmSafeMode.activate(blockTotal);
}
```

Step into bmSafeMode.activate (BlockManagerSafeMode.java)

```java
void activate(long total) {
  assert namesystem.hasWriteLock();
  assert status == BMSafeModeStatus.OFF;

  startTime = monotonicNow();
  // Compute the block-count thresholds
  setBlockTotal(total);
  // Check whether the DataNode count and reported blocks meet the criteria for leaving safe mode
  if (areThresholdsMet()) {
    boolean exitResult = leaveSafeMode(false);
    Preconditions.checkState(exitResult, "Failed to leave safe mode.");
  } else {
    // enter safe mode
    status = BMSafeModeStatus.PENDING_THRESHOLD;
    initializeReplQueuesIfNecessary();
    reportStatus("STATE* Safe mode ON.", true);
    lastStatusReport = monotonicNow();
  }
}
```

Step into setBlockTotal

```java
void setBlockTotal(long total) {
  assert namesystem.hasWriteLock();
  synchronized (this) {
    this.blockTotal = total;
    // Compute the threshold, e.g. 1000 complete blocks * 0.999 = 999
    this.blockThreshold = (long) (total * threshold);
  }
  this.blockReplQueueThreshold = (long) (total * replQueueThreshold);
}

// BlockManagerSafeMode constructor
this.threshold = conf.getFloat(DFS_NAMENODE_SAFEMODE_THRESHOLD_PCT_KEY,
    DFS_NAMENODE_SAFEMODE_THRESHOLD_PCT_DEFAULT);

// DFSConfigKeys.java
public static final float
    DFS_NAMENODE_SAFEMODE_THRESHOLD_PCT_DEFAULT = 0.999f;
```
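`threshold` is a `float` and the `(long)` cast truncates, so the block threshold is always rounded down. The sketch below uses a hypothetical helper that reproduces only this arithmetic. It shows the 1000-block example from the comment, plus a small cluster where 10 × 0.999 truncates to 9. In that case the block condition is already met with one block still unreported.

```java
// Sketch of BlockManagerSafeMode.setBlockTotal()'s threshold arithmetic.
// threshold is a float, like dfs.namenode.safemode.threshold-pct (default 0.999f).
// blockThreshold() is a hypothetical helper; only the expression comes from the source.
class SafeModeThresholdDemo {
    static long blockThreshold(long total, float threshold) {
        // long * float is evaluated in float, then truncated toward zero
        return (long) (total * threshold);
    }

    public static void main(String[] args) {
        System.out.println(blockThreshold(1000, 0.999f)); // 999
        System.out.println(blockThreshold(10, 0.999f));   // 9
        System.out.println(blockThreshold(0, 0.999f));    // 0
    }
}
```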

Step into areThresholdsMet

```java
private boolean areThresholdsMet() {
  assert namesystem.hasWriteLock();
  // Calculating the number of live datanodes is time-consuming
  // in large clusters. Skip it when datanodeThreshold is zero.
  int datanodeNum = 0;
  if (datanodeThreshold > 0) {
    datanodeNum = blockManager.getDatanodeManager().getNumLiveDataNodes();
  }
  synchronized (this) {
    // Reported safe blocks >= block threshold, and live DataNodes >= minimum DataNode threshold
    return blockSafe >= blockThreshold && datanodeNum >= datanodeThreshold;
  }
}
```
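In words, the NameNode can leave safe mode at activation time only if both conditions hold:

- Enough blocks have been reported: `blockSafe >= blockThreshold`.
- Enough DataNodes are live: `datanodeNum >= datanodeThreshold`.

The minimum DataNode count is configured by `dfs.namenode.safemode.min.datanodes`. The sketch below models just this predicate. `LiveNodeCounter` is a hypothetical interface standing in for `getNumLiveDataNodes()`, which makes it visible that the live-node count is never requested when `datanodeThreshold` is 0.

```java
// Minimal model of BlockManagerSafeMode.areThresholdsMet().
// LiveNodeCounter is a hypothetical stand-in for getNumLiveDataNodes(); as in the
// source above, it is only consulted when datanodeThreshold > 0.
class ThresholdsMetDemo {
    interface LiveNodeCounter {
        int count();
    }

    static boolean areThresholdsMet(long blockSafe, long blockThreshold,
                                    int datanodeThreshold, LiveNodeCounter counter) {
        int datanodeNum = 0;
        if (datanodeThreshold > 0) {
            datanodeNum = counter.count(); // potentially expensive in large clusters
        }
        return blockSafe >= blockThreshold && datanodeNum >= datanodeThreshold;
    }

    public static void main(String[] args) {
        LiveNodeCounter threeNodes = () -> 3;
        System.out.println(areThresholdsMet(999, 999, 0, threeNodes)); // true
        System.out.println(areThresholdsMet(998, 999, 0, threeNodes)); // false: too few blocks
        System.out.println(areThresholdsMet(999, 999, 5, threeNodes)); // false: only 3 live nodes
    }
}
```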


Full version download: hadoopNameNode analysis

Summary:

This post mainly records my own understanding. It surely has shortcomings, so corrections are welcome~


Thanks for reading, everyone! If this helped you, please give it a like and a follow!~
