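A live video stream is usually pushed by a security device (such as an IP camera), parsed and forwarded by a server, and played by a mobile app.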

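While developing the server side and the mobile app, we needed an easy way to debug the stream and track down related bugs. On Windows we therefore use ffmpeg to capture a live video stream, encode it as H.264 and push it to the video streaming server.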

Before going through the code in detail, here is what it looks like when running:

First, the main function:

```cpp
int _tmain(int argc, _TCHAR* argv[])
{
    init_ffmpeg();
    CCameraVideo* cVideo = new CCameraVideo(true);
    //rtmp://192.168.3.94:1935/live/home
    int frame_index = 0;
    int64_t start_time = 0;

    //SDL----------------------------
    SDL_Window* screen = NULL;
    SDL_Renderer* sdlRenderer = NULL;
    SDL_Texture* sdlTexture = NULL;
    SDL_Thread* video_tid = NULL;
    SDL_Event event;
    unsigned int sdlFlag = SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER;
    if (SDL_Init(sdlFlag))
    {
        printf("Could not initialize SDL - %s\n", SDL_GetError());
        return -1;
    }
    int screen_w = 1280;
    int screen_h = 780;
    //SDL 2.0 Support for multiple windows
    AVCodecContext* pCodecCtx = cVideo->getInputCodecContext();
    if (pCodecCtx)
    {
        screen_w = pCodecCtx->width / VIDEO_SCALE;
        screen_h = pCodecCtx->height / VIDEO_SCALE;
    }
    screen = SDL_CreateWindow("Simplest ffmpeg player's Window", SDL_WINDOWPOS_UNDEFINED,
        SDL_WINDOWPOS_UNDEFINED, screen_w, screen_h, SDL_WINDOW_OPENGL);
    if (screen != NULL)
    {
        sdlRenderer = SDL_CreateRenderer(screen, -1, 0);
        //IYUV: Y + U + V  (3 planes)
        //YV12: Y + V + U  (3 planes)
        sdlTexture = SDL_CreateTexture(sdlRenderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING,
            screen_w, screen_h);
        // Show the preview 1280 pixels wide, keeping the aspect ratio
        SDL_SetWindowSize(screen, 1280, (int)(1280.0 * screen_h / screen_w));
        SDL_SetWindowPosition(screen, 300, 100);
    }
    SDL_Rect sdlRectS1;
    SDL_Rect rect;
    rect.x = 0;
    rect.y = 0;
    rect.w = screen_w;
    rect.h = screen_h;
    SDL_Rect sdlRectS2;
    SDL_Rect sdlRectD1;
    SDL_Rect sdlRectD2;
    SDL_Texture* myTexture;
    thread_exit = 0;
    thread_pause = 0;

    // Capture/encode threads: one for video, one for audio
    unsigned int threadID = 0;
    HANDLE aThread[2];
    aThread[0] = (HANDLE)_beginthreadex(NULL, 0, &video_thread, cVideo, 0, &threadID);
    aThread[1] = (HANDLE)_beginthreadex(NULL, 0, &audio_thread, cVideo->getAudio(), 0, &threadID);
    //------------SDL End------------
#if OUTPUT_YUV420P
    FILE* fp_yuv = fopen("output.yuv", "wb+");
#endif
    //------------------------------
    start_time = av_gettime();

    // live555 scheduler, environment and RTSP server
    g_scheduler = TaskScheduler::createNew();
    g_env = UsageEnvironment::createNew(g_scheduler);
    int serviceport = 554;
    setup();
    rtspServer = intvideo_server(g_env, serviceport, (IH264LiveVideo*)cVideo, cVideo->getAudio());
    if (single_run)
        cVideo->Start();

    //Event Loop
    int framecnt = 0;
    //strcpy(myConfig.serviceAddress, "192.168.3.166");
    char szMyIP[128];
    //myConfig.ourAddress = ourIPAddress(); // hack
    myConfig.ourAddress.tostring(szMyIP, sizeof(szMyIP));
    logstr("my ip is %s", szMyIP);
#ifdef WIN32
    //========================p2p===============
    p2p_init();
    //-------------------------------------------
#endif
    fileuploader.init();

    // Draw one frame in the preview window.
    // Assumes pFrame is YUV420P with size screen_w x screen_h.
    auto fun = [&](AVFrame* pFrame)
    {
        //SDL---------------------------
        // IYUV has three separate planes, so upload each one with its own pitch
        SDL_UpdateYUVTexture(sdlTexture, NULL,
            pFrame->data[0], pFrame->linesize[0],
            pFrame->data[1], pFrame->linesize[1],
            pFrame->data[2], pFrame->linesize[2]);
        SDL_RenderClear(sdlRenderer);
        // Rectangles for an optional split-screen layout (currently unused)
        sdlRectS1.x = screen_w / 2;
        sdlRectS1.y = screen_h / 2;
        sdlRectS1.w = sdlRectS2.w = screen_w / 2;
        sdlRectS1.h = sdlRectS2.h = screen_h / 2;
        sdlRectS2.x = 0;
        sdlRectS2.y = 0;
        sdlRectD2.x = 0;
        sdlRectD2.y = 0;
        sdlRectD2.h = sdlRectD1.h = screen_h / 2;
        sdlRectD2.w = sdlRectD1.w = screen_w / 2;
        sdlRectD1.x = screen_w / 2;
        sdlRectD1.y = screen_h / 2;
        //SDL_RenderCopy(sdlRenderer, sdlTexture, &sdlRectS1, &sdlRectD1);
        //SDL_RenderCopy(sdlRenderer, sdlTexture, &sdlRectS2, &sdlRectD2);
        SDL_RenderCopy(sdlRenderer, sdlTexture, NULL, NULL);
        SDL_RenderPresent(sdlRenderer);
        // Returns the current render target (NULL = the window), not sdlTexture
        myTexture = SDL_GetRenderTarget(sdlRenderer);
    };

    int64_t last_update_time = av_gettime();
    int64_t last_framenum_update = last_update_time;
    int64_t nFrameNum = 0;
    _EventTime timeNow;
    bool bRun = true;
    while (thread_exit == 0)
    {
        //p2p_engine_update(); // only needed in single-threaded mode
        loop();
        nFrameNum++;
        if (screen != NULL)
        {
            // Non-blocking check; only read event.type if an event was returned
            if (SDL_WaitEventTimeout(&event, 0) && event.type == SDL_QUIT)
            {
                thread_exit = 1;
                break;
            }
        }
        fileuploader.update(cVideo->getCurrentFileID());
        int64_t now_time = av_gettime();  // microseconds
        timeNow = TimeNow();
        rtspServer->Update(&timeNow);
        // Every 5 s: reset the loop counter and open a new file if the time-based file ID changed
        if (now_time - last_framenum_update >= 5 * AV_TIME_BASE)
        {
            int64_t second = (now_time - last_framenum_update) / AV_TIME_BASE;
            //printf("frame rate=%lld\n", nFrameNum / second);
            last_framenum_update = now_time;
            nFrameNum = 0;
            unsigned int nMaxFileID = currentTimefileID();
            unsigned int nowFileID = cVideo->getCurrentFileID();
            if (nMaxFileID != nowFileID)
            {
                cVideo->openNewFile();
            }
        }
        // About every 30 ms (30*1000 us): redraw the latest frame
        if (last_update_time + 30 * 1000 < now_time)
        {
            last_update_time = now_time;
            AVFrame* pFrame = cVideo->GetLastFrame();
            if (pFrame && sdlRenderer != NULL)
                fun(pFrame);
        }
        av_usleep(10 * 1000);
    }

    if (sdlTexture)
        SDL_DestroyTexture(sdlTexture);
    if (sdlRenderer)
        SDL_DestroyRenderer(sdlRenderer);
    if (screen)
        SDL_DestroyWindow(screen);
    WaitForMultipleObjects(2, aThread, TRUE, INFINITE);
    CloseHandle(aThread[0]);
    CloseHandle(aThread[1]);
    if (single_run)
        cVideo->Close();
    ASSERT(rtspServer->Count() == 1);
    rtspServer->Release();
#ifdef WIN32
    p2p_engine_destroy();
#endif
#if OUTPUT_YUV420P
    if (fp_yuv)
        fclose(fp_yuv);
#endif
    cfgData.close();
    delete cVideo;
    delete[] mNumArrays;
    //av_free(out_buffer);
    //av_free(pFrameYUV);
    //lib_deinit();
    avformat_network_deinit();
    SDL_QuitSubSystem(sdlFlag);
    SDL_Quit();
    return 0;
}
```
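The main loop polls several jobs at different rates from one microsecond clock (`av_gettime()`). It redraws about every 30 ms (`30*1000` µs). Every 5 s (`5*AV_TIME_BASE`, where `AV_TIME_BASE` is 1000000) it resets the counter and checks the file ID. The sketch below shows that pattern in standard C++. It assumes timestamps in microseconds, like `av_gettime()`. `PeriodicTask` and `ratePerSecond` are hypothetical helpers and are not part of the original code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not in the original program): fires at most once per
// period, driven by microsecond timestamps such as those from av_gettime().
class PeriodicTask {
public:
    PeriodicTask(std::int64_t period_us, std::int64_t start_us)
        : period_us_(period_us), last_us_(start_us) {
        assert(period_us > 0);
    }

    // True if at least period_us has passed since the last firing;
    // firing restarts the period from now_us.
    bool due(std::int64_t now_us) {
        if (now_us - last_us_ >= period_us_) {
            last_us_ = now_us;
            return true;
        }
        return false;
    }

    std::int64_t lastFired() const { return last_us_; }

private:
    std::int64_t period_us_;
    std::int64_t last_us_;
};

// Events per second over an interval given in microseconds: the same idea as
// the commented-out "frame rate" printf in main() (which counts loop iterations).
inline double ratePerSecond(std::int64_t count, std::int64_t elapsed_us) {
    return elapsed_us > 0
        ? static_cast<double>(count) * 1000000.0 / static_cast<double>(elapsed_us)
        : 0.0;
}
```

In the loop you would then create, for example, `PeriodicTask redraw(30 * 1000, av_gettime());` and test `redraw.due(now_time)`, instead of comparing `last_update_time` by hand. Keeping the "last fired" timestamp inside the object stops the various periodic checks from sharing or overwriting each other's state.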

The program first calls init_ffmpeg to initialize ffmpeg:

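```cpp
void init_ffmpeg()
{
#if LIBAVFORMAT_VERSION_INT < AV_VERSION_INT(58, 9, 100)
    av_register_all();        // register muxers/demuxers/codecs (automatic since FFmpeg 4.0)
#endif
    avformat_network_init();  // initialize networking
    //Register Device
    avdevice_register_all();  // register capture devices (e.g. dshow, gdigrab on Windows)
}
```

Next, a CCameraVideo object is created. This class implements the live video stream and live audio stream interfaces: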

```cpp
interface IH264LiveVideo
{
    virtual bool Start() = 0;
    virtual bool isH265() = 0;
    virtual unsigned int GetNextFrame(unsigned char* to, unsigned int & maxSize) = 0;
    virtual unsigned int GetDurationInMicroseconds() = 0;
    virtual bool getVPSandSPSandPPS(CShareBuffer** vps, CShareBuffer** sps, CShareBuffer** pps) = 0;
    virtual int64_t GetCurrentFrameTime() = 0;
};
```
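The article does not show how CCameraVideo implements `getVPSandSPSandPPS`. One common approach is sketched below. It assumes the encoder outputs an Annex B byte stream, in which NAL units are separated by `00 00 01` or `00 00 00 01` start codes. The idea is to split the stream at the start codes and read each NAL unit type:

- **H.264:** the type is the low 5 bits of the first byte (7 = SPS, 8 = PPS, 5 = IDR slice).
- **H.265:** the type is bits 1 to 6 of the first byte (32 = VPS, 33 = SPS, 34 = PPS).

`NalUnit`, `splitAnnexB`, `h264NalType`, `h265NalType` and `findH264SpsPps` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A NAL unit inside an Annex B buffer (start code excluded). Hypothetical type.
struct NalUnit {
    const std::uint8_t* data;
    std::size_t size;
};

// Split an Annex B byte stream at 3- or 4-byte start codes.
std::vector<NalUnit> splitAnnexB(const std::uint8_t* buf, std::size_t len) {
    assert(buf != nullptr || len == 0);
    std::vector<NalUnit> nals;
    std::size_t start = SIZE_MAX;  // payload start of the current NAL, if any
    std::size_t i = 0;
    while (i + 3 <= len) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            if (start != SIZE_MAX) {
                std::size_t end = i;
                // A NAL never ends in 0x00, so trailing zeros belong to the
                // next (4-byte) start code or to padding.
                while (end > start && buf[end - 1] == 0) --end;
                if (end > start) nals.push_back({buf + start, end - start});
            }
            i += 3;
            start = i;
        } else {
            ++i;
        }
    }
    if (start != SIZE_MAX && start < len)
        nals.push_back({buf + start, len - start});
    return nals;
}

// H.264: nal_unit_type is the low 5 bits of the header byte.
inline int h264NalType(const NalUnit& n) { return n.size ? (n.data[0] & 0x1F) : -1; }
// H.265: nal_unit_type is bits 1..6 of the first header byte.
inline int h265NalType(const NalUnit& n) { return n.size ? ((n.data[0] >> 1) & 0x3F) : -1; }

// Copy out the first SPS (type 7) and PPS (type 8) of an H.264 stream.
bool findH264SpsPps(const std::uint8_t* buf, std::size_t len,
                    std::vector<std::uint8_t>& sps, std::vector<std::uint8_t>& pps) {
    sps.clear();
    pps.clear();
    for (const NalUnit& n : splitAnnexB(buf, len)) {
        int type = h264NalType(n);
        if (type == 7 && sps.empty()) sps.assign(n.data, n.data + n.size);
        else if (type == 8 && pps.empty()) pps.assign(n.data, n.data + n.size);
    }
    return !sps.empty() && !pps.empty();
}
```

An H.265 version would work the same way, using `h265NalType` and types 32, 33 and 34. The RTSP side needs these parameter sets to describe the stream. For H.264 they end up in the SDP `sprop-parameter-sets` attribute (RFC 6184).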

The audio interface:

```cpp
interface IH264LiveAudio
{
    virtual bool Start() = 0;
    virtual unsigned int GetNextFrame(unsigned char* to, unsigned int maxSize) = 0;
    virtual bool GetAudioParam(int & objecttype, int & sample_rate_index, int & channel_conf, int & framesize) = 0;
};
```
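`GetAudioParam` returns the same fields that appear in an AAC ADTS header. To describe an AAC stream over RTSP, these fields are usually packed into the 2-byte MPEG-4 AudioSpecificConfig and written as hex in the SDP `config=` parameter. live555's `MPEG4GenericRTPSink` accepts such a config string.

The sketch below makes three assumptions:

- `objecttype` is the MPEG-4 audio object type (2 = AAC LC). The ADTS `profile` field is the object type minus 1, so check which one CCameraVideo actually returns.
- A `framesize` of 960 means the 960-sample frame length.
- `makeAacConfig` and `aacSampleRate` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Build the 2-byte AudioSpecificConfig (audioObjectType:5, samplingFrequencyIndex:4,
// channelConfiguration:4, frameLengthFlag:1, dependsOnCoreCoder:1, extensionFlag:1)
// and return it as an uppercase hex string for an SDP "config=" parameter.
// Does not handle escape values (object type 31, frequency index 15).
std::string makeAacConfig(int object_type, int sample_rate_index,
                          int channel_conf, int frame_size) {
    assert(object_type > 0 && object_type < 31);
    assert(sample_rate_index >= 0 && sample_rate_index < 15);
    assert(channel_conf >= 0 && channel_conf < 16);
    const unsigned frame_length_flag = (frame_size == 960) ? 1u : 0u;
    const std::uint16_t asc = static_cast<std::uint16_t>(
        (static_cast<unsigned>(object_type) << 11) |
        (static_cast<unsigned>(sample_rate_index) << 7) |
        (static_cast<unsigned>(channel_conf) << 3) |
        (frame_length_flag << 2));  // dependsOnCoreCoder = extensionFlag = 0
    char hex[5];
    std::snprintf(hex, sizeof(hex), "%02X%02X",
                  static_cast<unsigned>(asc >> 8), static_cast<unsigned>(asc & 0xFF));
    return std::string(hex);
}

// Sampling frequency for a samplingFrequencyIndex, or -1 if unknown.
// Useful as the RTP timestamp frequency of an AAC stream.
inline int aacSampleRate(int index) {
    static const int kRates[13] = {96000, 88200, 64000, 48000, 44100, 32000,
                                   24000, 22050, 16000, 12000, 11025, 8000, 7350};
    return (index >= 0 && index < 13) ? kRates[index] : -1;
}
```

For example, AAC LC at 44.1 kHz in stereo (object type 2, index 4, 2 channels) gives the familiar config `1210`.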

The CCameraVideo object is later passed to the intvideo_server function, whose code is shown below:

```cpp
RTSPServer* intvideo_server(UsageEnvironment* env, int & rtspServerPortNum,
    IH264LiveVideo* pIH264LiveVideo, IH264LiveAudio* pLiveAudio)
{
    // Optional client access control (enabled by defining ACCESS_CONTROL):
    UserAuthenticationDatabase* authDB = NULL;
#ifdef ACCESS_CONTROL
    // To implement client access control to the RTSP server, do the following:
    authDB = new UserAuthenticationDatabase;
    authDB->addUserRecord("username1", "password1"); // replace these with real strings
    // Repeat the above with each <username>, <password> that you wish to allow
    // access to the server.
#endif
    // Create the RTSP server socket. Start with the default RTSP port (554);
    // if it cannot be bound, try 1554, 2554, ... until one succeeds.
    // Note: there is no upper limit, so this loops forever if no port can be bound.
    SOCKET nSocket = INVALID_SOCKET;
    rtspServerPortNum = 554;
    do
    {
        nSocket = setUpOurSocket(env, rtspServerPortNum);
        if (nSocket == INVALID_SOCKET)
        {
            rtspServerPortNum += 1000;
        }
    } while (nSocket == INVALID_SOCKET);
    RTSPServer* rtspServer = new RTSPServerSupportingHTTPStreaming(env, nSocket, rtspServerPortNum, authDB);
    if (rtspServer == NULL)
    {
        // Fallback kept from the original; a plain `new` throws instead of returning NULL
        rtspServerPortNum = 8554;
        rtspServer = DynamicRTSPServer::createNew(env, nSocket, rtspServerPortNum, authDB);
    }
    if (rtspServer == NULL) {
        *env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";
        exit(1);
    }
    Boolean reuseFirstSource = False;
    char const* descriptionString = "Session streamed by \"testOnDemandRTSPServer\"";
    // One session "h264video" with a live video (H.264 or H.265) and a live audio subsession:
    char const* streamName = "h264video";
    char const* inputFileName = "test.264";
    sms = ServerMediaSession::createNew(env, streamName, streamName, descriptionString);
    if (pIH264LiveVideo->isH265())
        subsession = H265LiveVideoServerMediaSubssion::createNew(env, pIH264LiveVideo, reuseFirstSource);
    else
        subsession = H264LiveVideoServerMediaSubssion::createNew(env, pIH264LiveVideo, reuseFirstSource);
    sms->addSubsession(subsession);
    subsession = LiveAudioServerMediaSubssion::createNew(env, pLiveAudio, reuseFirstSource);
    sms->addSubsession(subsession);
    rtspServer->addServerMediaSession(sms);
    announceStream(rtspServer, sms, streamName, inputFileName);
    strcpy(myConfig.streamName, streamName);
    return rtspServer;
}
```

intvideo_server creates an RTSPServer whose session contains one live video stream (H.264 or H.265, depending on isH265()) and one live audio stream.
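The port loop in intvideo_server starts at 554 and adds 1000 after each failed bind (554, 1554, 2554, ...). It has no upper limit. The sketch below is a bounded version of the same search. It takes a caller-supplied `try_bind` callback that stands in for the author's modified `setUpOurSocket`. `findServerPort`, the callback and the attempt limit are hypothetical and not part of the original code.

```cpp
#include <cassert>
#include <functional>

// Hypothetical sketch: try start, start+step, ... for at most max_attempts
// ports (and never past 65535). try_bind stands in for setUpOurSocket() and
// returns true when the port could be bound.
// Returns the bound port, or -1 if every attempt failed.
int findServerPort(int start, int step, int max_attempts,
                   const std::function<bool(int)>& try_bind) {
    assert(step > 0);
    int port = start;
    for (int i = 0; i < max_attempts && port <= 65535; ++i, port += step) {
        if (try_bind(port)) return port;
    }
    return -1;
}
```

With real sockets, the callback would also need to pass the opened socket back to the caller, for example through a variable captured by reference. The caller can then report failure instead of spinning forever.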

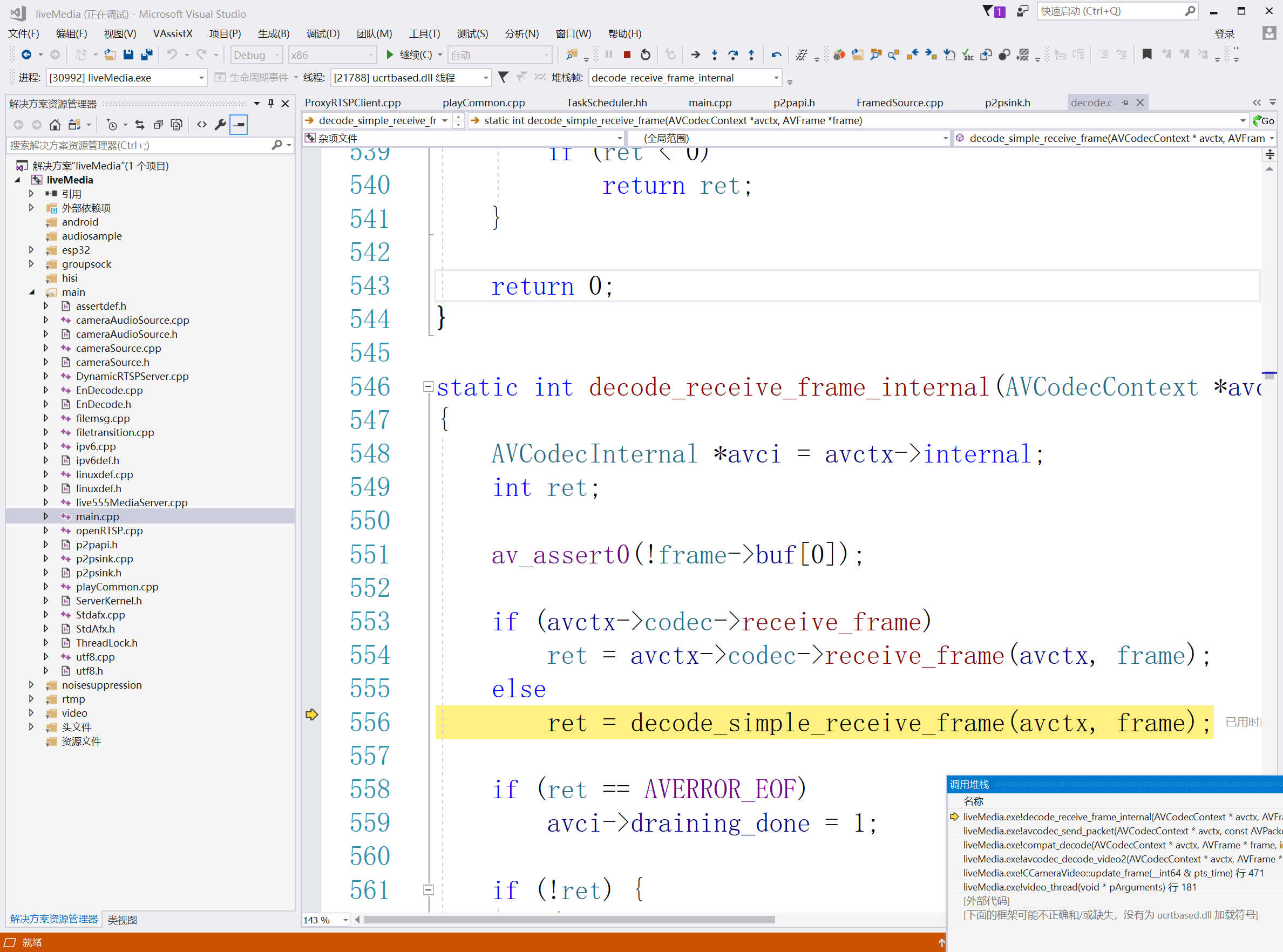

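Once the program enters its main loop, the live stream can be played with ffplay or VLC. The live555 and ffmpeg sources are fairly complex, and configuring and building ffmpeg on Windows is not simple either, so the full source can be downloaded from QQ group 384170753. Below is a screenshot of a debug run.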
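To play the stream, a player needs a URL built from three parts:

- the server address (the one the program logs as `my ip is ...`);
- the port chosen in intvideo_server;
- the session name `h264video`.

The small sketch below follows live555's convention of leaving out the port when it is the default 554. `makeRtspUrl` is a hypothetical helper. In live555's own testOnDemandRTSPServer, `announceStream` prints a URL of this form to the console.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: build the URL a player such as ffplay or VLC opens.
// ":port" is omitted for the default RTSP port 554, as live555 does.
std::string makeRtspUrl(const std::string& host, int port,
                        const std::string& stream_name) {
    assert(port > 0 && port <= 65535);
    std::string url = "rtsp://" + host;
    if (port != 554) url += ":" + std::to_string(port);
    url += "/" + stream_name;
    return url;
}
```

For example, if the server had to fall back to port 1554, you would run `ffplay -rtsp_transport tcp rtsp://<server-ip>:1554/h264video`. The `-rtsp_transport tcp` option makes ffplay use RTP over the RTSP TCP connection, which helps when UDP is blocked. In VLC, the same URL can be opened via Media > Open Network Stream.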
