LSTMP structure

A conventional LSTM layer computes the following, where $\delta$ is the gate (sigmoid) activation, $g$ and $h$ are the cell input and cell output activations, $\phi$ is the network output activation, and $\odot$ is element-wise multiplication:

$$i_t=\delta(W_{ix}x_t+W_{im}m_{t-1}+W_{ic}c_{t-1}+b_i)$$

$$f_t=\delta(W_{fx}x_t+W_{fm}m_{t-1}+W_{fc}c_{t-1}+b_f)$$

$$c_t=f_t\odot c_{t-1}+i_t\odot g(W_{cx}x_t+W_{cm}m_{t-1}+b_c)$$

$$o_t=\delta(W_{ox}x_t+W_{om}m_{t-1}+W_{oc}c_{t}+b_o)$$

$$m_t=o_t\odot h(c_t)$$

$$y_t=\phi(W_{ym}m_t+b_y)$$
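To make the data flow of these equations concrete, here is a minimal NumPy sketch of a single time step. It is an illustration under assumptions, not a reference implementation: $g$ and $h$ are taken to be tanh, $\phi$ is taken to be softmax, the peephole matrices $W_{ic}$, $W_{fc}$, $W_{oc}$ are assumed diagonal and stored as vectors, and the names `init_params`, `lstm_step`, and the dictionary layout are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def init_params(n_i, n_c, n_o, seed=0):
    """Random weights in a hypothetical dict layout (illustration only)."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in "ifco":
        p[f"W_{gate}x"] = rng.normal(scale=0.1, size=(n_c, n_i))  # input weights
        p[f"W_{gate}m"] = rng.normal(scale=0.1, size=(n_c, n_c))  # recurrent weights
        p[f"b_{gate}"] = np.zeros(n_c)
    for gate in "ifo":
        # Assumption: diagonal peephole matrices, stored as vectors.
        p[f"w_{gate}c"] = rng.normal(scale=0.1, size=n_c)
    p["W_ym"] = rng.normal(scale=0.1, size=(n_o, n_c))
    p["b_y"] = np.zeros(n_o)
    return p

def lstm_step(x_t, m_prev, c_prev, p):
    """One time step of the peephole LSTM (g = h = tanh, phi = softmax assumed)."""
    i_t = sigmoid(p["W_ix"] @ x_t + p["W_im"] @ m_prev + p["w_ic"] * c_prev + p["b_i"])
    f_t = sigmoid(p["W_fx"] @ x_t + p["W_fm"] @ m_prev + p["w_fc"] * c_prev + p["b_f"])
    c_t = f_t * c_prev + i_t * np.tanh(p["W_cx"] @ x_t + p["W_cm"] @ m_prev + p["b_c"])
    # The output gate peeks at the *updated* cell state c_t.
    o_t = sigmoid(p["W_ox"] @ x_t + p["W_om"] @ m_prev + p["w_oc"] * c_t + p["b_o"])
    m_t = o_t * np.tanh(c_t)
    y_t = softmax(p["W_ym"] @ m_t + p["b_y"])
    return y_t, m_t, c_t

n_i, n_c, n_o = 3, 5, 2
p = init_params(n_i, n_c, n_o)
y, m, c = lstm_step(np.ones(n_i), np.zeros(n_c), np.zeros(n_c), p)
print(y.shape, m.shape, c.shape)
```

Note that the recurrent input `m_prev` has the same dimension as the cell state, which is why each recurrent matrix is $n_c \times n_c$.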

Suppose a layer has $n_c$ cells, the input dimension is $n_i$, and the output dimension is $n_o$. The corresponding number of parameters (biases not counted) is:

$$W=n_c\times n_c\times 4+n_i\times n_c\times 4+n_c\times n_o+n_c\times 3$$
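As a worked example of this count, take the hypothetical sizes $n_c=1024$, $n_i=40$, $n_o=8000$ (chosen only for illustration; they do not come from the original post):

$$
\begin{aligned}
W &= 1024\times 1024\times 4+40\times 1024\times 4+1024\times 8000+1024\times 3\\
  &= 4194304+163840+8192000+3072\\
  &= 12553216
\end{aligned}
$$

With sizes like these, the recurrent term $n_c\times n_c\times 4$ and the output term $n_c\times n_o$ account for almost all of the parameters, and both scale with $n_c$.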

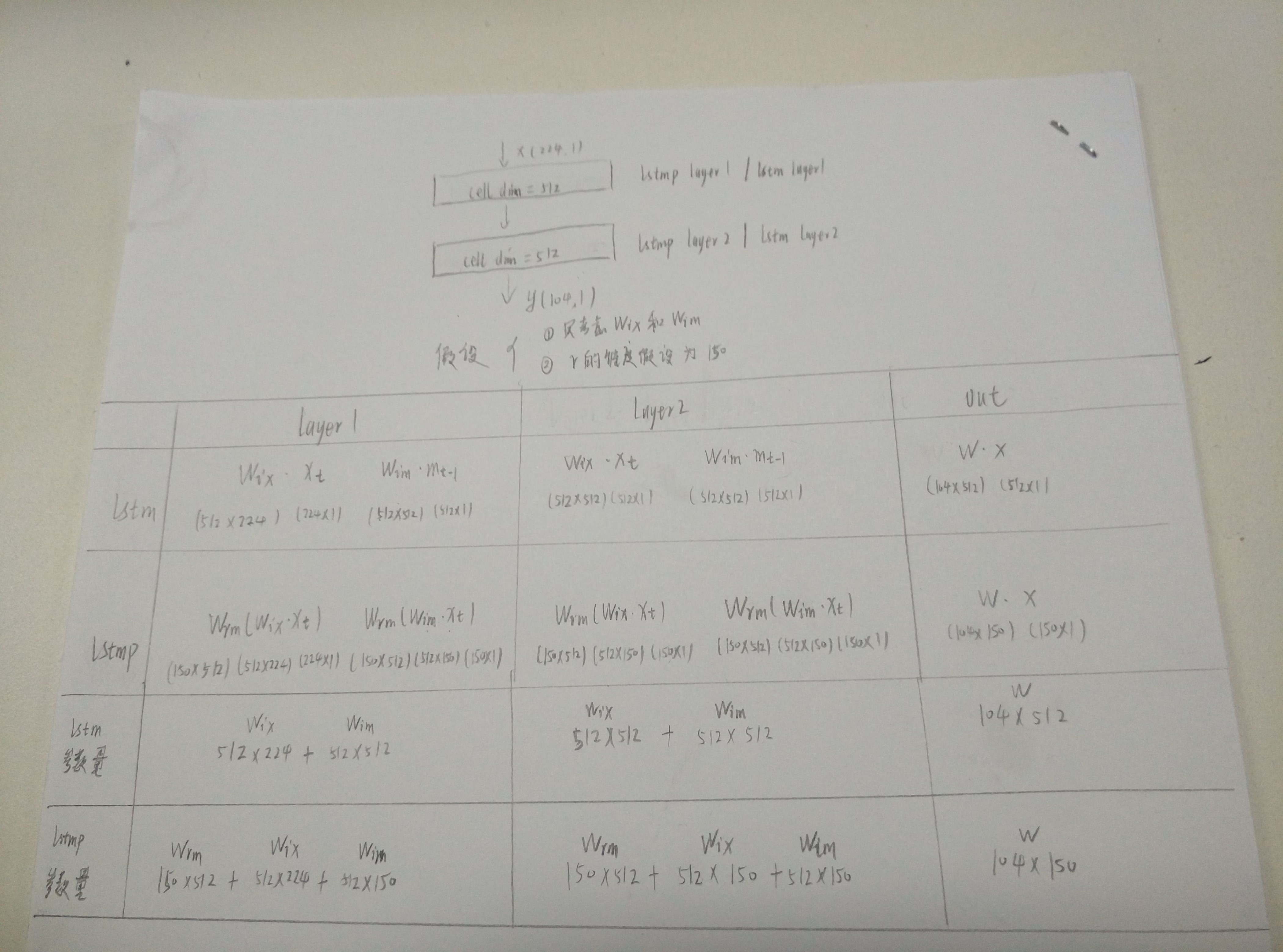


LSTMP is short for "LSTM with recurrent projection layer". It adds a projection layer on top of the original LSTM and feeds the output of that layer back into the LSTM as its recurrent input. The network then becomes:

$$i_t=\delta(W_{ix}x_t+W_{ir}r_{t-1}+W_{ic}c_{t-1}+b_i)$$

$$f_t=\delta(W_{fx}x_t+W_{fr}r_{t-1}+W_{fc}c_{t-1}+b_f)$$

$$c_t=f_t\odot c_{t-1}+i_t\odot g(W_{cx}x_t+W_{cr}r_{t-1}+b_c)$$

$$o_t=\delta(W_{ox}x_t+W_{or}r_{t-1}+W_{oc}c_{t}+b_o)$$

$$m_t=o_t\odot h(c_t)$$

$$r_t=W_{rm}m_t$$

$$y_t=\phi(W_{yr}r_t+b_y)$$
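The LSTMP equations can be sketched in NumPy as follows. The point of the example is the shape change: the recurrent input is the projected vector $r_{t-1}$ of size $n_r$ rather than $m_{t-1}$ of size $n_c$. As assumptions for illustration, $g$ and $h$ are tanh, $\phi$ is softmax, the peephole weights are diagonal (stored as vectors), and the names `init_lstmp` and `lstmp_step` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def init_lstmp(n_i, n_c, n_r, n_o, seed=0):
    """Random weights in a hypothetical dict layout (illustration only)."""
    rng = np.random.default_rng(seed)
    shapes = {"W_rm": (n_r, n_c), "W_yr": (n_o, n_r), "b_y": (n_o,)}
    for gate in "ifco":
        shapes[f"W_{gate}x"] = (n_c, n_i)
        shapes[f"W_{gate}r"] = (n_c, n_r)  # recurrent weights now act on r, not m
        shapes[f"b_{gate}"] = (n_c,)
    for gate in "ifo":
        shapes[f"w_{gate}c"] = (n_c,)      # assumed diagonal peepholes
    return {name: rng.normal(scale=0.1, size=s) for name, s in shapes.items()}

def lstmp_step(x_t, r_prev, c_prev, p):
    """One LSTMP time step (g = h = tanh, phi = softmax assumed)."""
    i_t = sigmoid(p["W_ix"] @ x_t + p["W_ir"] @ r_prev + p["w_ic"] * c_prev + p["b_i"])
    f_t = sigmoid(p["W_fx"] @ x_t + p["W_fr"] @ r_prev + p["w_fc"] * c_prev + p["b_f"])
    c_t = f_t * c_prev + i_t * np.tanh(p["W_cx"] @ x_t + p["W_cr"] @ r_prev + p["b_c"])
    o_t = sigmoid(p["W_ox"] @ x_t + p["W_or"] @ r_prev + p["w_oc"] * c_t + p["b_o"])
    m_t = o_t * np.tanh(c_t)
    r_t = p["W_rm"] @ m_t                  # linear projection from n_c down to n_r
    y_t = softmax(p["W_yr"] @ r_t + p["b_y"])
    return y_t, r_t, c_t

n_i, n_c, n_r, n_o = 3, 8, 4, 2
p = init_lstmp(n_i, n_c, n_r, n_o)
r, c = np.zeros(n_r), np.zeros(n_c)
for x in np.ones((4, n_i)):                # run four dummy input frames
    y, r, c = lstmp_step(x, r, c, p)
print(y.shape, r.shape, c.shape)
```

Only `r` and `c` are carried between time steps; `m_t` is an intermediate value inside the step.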

Let the dimension of the projection layer be $n_r$. The total number of parameters then becomes:

$$W=n_c\times n_r\times 4+n_i\times n_c\times 4+n_r\times n_o+n_c\times n_r+n_c\times 3$$

By choosing a sufficiently small $n_r$ relative to $n_c$, the total number of parameters can be reduced.
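The two parameter-count formulas can be compared directly in code. The sketch below evaluates both for hypothetical layer sizes (the values of `n_c`, `n_i`, `n_o`, and the list of `n_r` values are assumptions chosen for illustration) and prints the LSTMP count relative to the plain LSTM count.

```python
def lstm_param_count(n_c, n_i, n_o):
    """Parameter count of the plain LSTM formula (biases not counted)."""
    return n_c * n_c * 4 + n_i * n_c * 4 + n_c * n_o + n_c * 3

def lstmp_param_count(n_c, n_i, n_o, n_r):
    """Parameter count of the LSTMP formula (biases not counted)."""
    return n_c * n_r * 4 + n_i * n_c * 4 + n_r * n_o + n_c * n_r + n_c * 3

# Hypothetical sizes, for illustration only.
n_c, n_i, n_o = 1024, 40, 8000
base = lstm_param_count(n_c, n_i, n_o)
print(f"LSTM   params={base:>10,d}")
for n_r in (128, 256, 512, 1024):
    n = lstmp_param_count(n_c, n_i, n_o, n_r)
    print(f"n_r={n_r:4d} params={n:>10,d} ratio={n / base:.2f}")
```

The last row shows why $n_r$ has to be small: with $n_r=n_c$ the projection matrix $W_{rm}$ is pure overhead, so the LSTMP ends up with $n_c\times n_c$ more parameters than the plain LSTM.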

LSTM compression

Training an LSTMP network directly

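The main goal here is to reduce the number of matrix parameters. Taking $W_{ix}$ and $W_{im}$ as examples, and following the parameter counts above: $W_{ix}$ keeps its $n_c\times n_i$ shape, while the recurrent matrix $W_{im}$ ($n_c\times n_c$) is replaced by $W_{ir}$ ($n_c\times n_r$).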

SVD compression of LSTM parameters

Following [3], the parameters of an already trained model are compressed. Two matrices are the main targets: the inter-layer matrix $[W_{ix},W_{fx},W_{ox},W_{cx}]^T$ and the recurrent matrix $[W_{im},W_{fm},W_{om},W_{cm}]^T$.

By choosing how many singular values to keep, the two matrices are converted into three smaller matrices, one of which serves as the parameters of the projection layer in the LSTMP.
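The basic building block, a truncated SVD, can be sketched with NumPy as below. This is a simplified illustration and not the procedure of [3]: it factorizes only a single stacked recurrent matrix, uses random weights in place of a trained model, and the function name `truncated_svd` and the sizes are hypothetical. The factor `P` has shape $n_r\times n_c$, so it plays the role of the projection matrix $W_{rm}$, while `Z` stacks the four $n_c\times n_r$ recurrent matrices.

```python
import numpy as np

def truncated_svd(W, rank):
    """Return Z, P with W ~= Z @ P, keeping the `rank` largest singular values."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    Z = U[:, :rank] * s[:rank]  # fold the singular values into the left factor
    P = Vt[:rank, :]            # maps an n_c vector down to `rank` dimensions
    return Z, P

rng = np.random.default_rng(0)
n_c, n_r = 64, 16               # hypothetical sizes
# Stand-in for the stacked recurrent matrix [W_im; W_fm; W_om; W_cm].
W_rec = rng.normal(size=(4 * n_c, n_c))

Z, P = truncated_svd(W_rec, n_r)
rel_err = np.linalg.norm(W_rec - Z @ P) / np.linalg.norm(W_rec)
print("Z:", Z.shape, "P:", P.shape)
print("params before:", W_rec.size, "after:", Z.size + P.size)
print("relative reconstruction error:", rel_err)
```

A random matrix has a nearly flat singular-value spectrum, so the reconstruction error printed here is large. Trained weight matrices are typically closer to low rank, which is what makes this kind of compression practical. After such a factorization the model is usually fine-tuned to recover accuracy.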

References

[1] Long Short-Term Memory Based Recurrent Neural Network Architectures for Large Vocabulary Speech Recognition

[2] Long Short-Term Memory Recurrent Neural Network Architectures for Large Scale Acoustic Modeling

[3] On the Compression of Recurrent Neural Networks with an Application to LVCSR Acoustic Modeling for Embedded Speech Recognition
