1. Prepare the nginx log. I used a sample of the data, saved as E:\log\1.log:

42.156.138.7 - - [26/Apr/2017:00:00:08 +0800] "GET /checkpop?v=20170425135147 HTTP/1.1" 200 934 "http://www.baidu.com/shop/22265.html" "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) Gecko/20110307 Firefox/4.0b13pre"

42.156.138.7 - - [26/Apr/2017:00:00:08 +0800] "GET /static/js/stat.js?v=20170425135147 HTTP/1.1" 200 598 "http://www.baidu.com/shop/22265.html" "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) Gecko/20110307 Firefox/4.0b13pre"

111.205.96.2 - - [26/Apr/2017:00:00:08 +0800] "GET /monitor/sm_check/sms_allowance HTTP/1.1" 200 20 "-" "curl/7.19.7 (i686-pc-linux-gnu) libcurl/7.19.7 NSS/3.12.6.2 zlib/1.2.3 libidn/1.18 libssh2/1.2.2"

103.21.119.132 - - [26/Apr/2017:00:00:08 +0800] "POST /ajax/checkPhone HTTP/1.1" 200 22 "http://passport.baidu.com/signup" "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)"

42.156.138.7 - - [26/Apr/2017:00:00:08 +0800] "GET /api/pv?id=22265&type=shop&t=93504578558ff728767a82 HTTP/1.1" 200 31 "http://www.baidu.com/shop/22265.html" "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) Gecko/20110307 Firefox/4.0b13pre"

111.205.96.2 - - [26/Apr/2017:00:00:08 +0800] "GET /monitor/sm_check/order_num HTTP/1.1" 200 15 "-" "curl/7.19.7 (i686-pc-linux-gnu) libcurl/7.19.7 NSS/3.12.6.2 zlib/1.2.3 libidn/1.18 libssh2/1.2.2"

input {

file {

path => "E:/log/1.log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{IP:client} - - \[%{HTTPDATE:logdate}\] \"%{WORD:verb} %{URIPATHPARAM:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:http_status_code} %{NUMBER:bytes} \"%{NOTSPACE:ref}\" \"%{DATA:user_agent}\"" }

}

date {

match => ["logdate", "dd/MMM/yyyy:HH:mm:ss Z"]

target => "@timestamp"

}

kv {

source => "request"

field_split => "&?"

value_split => "="

}

urldecode {

all_fields => true

}

}

output {

elasticsearch {

hosts => "localhost:9200"

}

stdout {

codec => json_lines

}

}
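To make it easier to see which fields the filter section should produce, here is a rough Python sketch that mimics the grok, date, kv and urldecode steps on one of the sample lines. It is only an approximation: the regular expression stands in for the grok patterns (it is not their exact definition), and `LINE_RE` and `parse_line` are hypothetical names made up for this illustration.

```python
import re
from datetime import datetime
from urllib.parse import parse_qsl, urlsplit

# Approximate regex stand-in for the grok pattern in the config above.
LINE_RE = re.compile(
    r'(?P<client>\d{1,3}(?:\.\d{1,3}){3}) - - '
    r'\[(?P<logdate>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<http_status_code>\d+) (?P<bytes>\d+) '
    r'"(?P<ref>\S+)" "(?P<user_agent>.*?)"$'
)


def parse_line(line):
    """Return a dict of extracted fields, or None if the line does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    event = m.groupdict()
    # date step: same layout as "dd/MMM/yyyy:HH:mm:ss Z"
    # (%b assumes English month names, i.e. the default C locale).
    event["@timestamp"] = datetime.strptime(
        event["logdate"], "%d/%b/%Y:%H:%M:%S %z"
    )
    # kv + urldecode steps: split the query string on "&" and "=",
    # decoding percent-escapes along the way.
    for key, value in parse_qsl(urlsplit(event["request"]).query):
        event[key] = value
    return event


sample = (
    '42.156.138.7 - - [26/Apr/2017:00:00:08 +0800] '
    '"GET /api/pv?id=22265&type=shop&t=93504578558ff728767a82 HTTP/1.1" '
    '200 31 "http://www.baidu.com/shop/22265.html" '
    '"Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) '
    'Gecko/20110307 Firefox/4.0b13pre"'
)
event = parse_line(sample)
for name in ("client", "verb", "request", "http_status_code", "id", "type", "t"):
    print(name, "=", event[name])
```

For this request, the kv step adds `id`, `type` and `t` as separate fields next to the ones extracted by grok. If the fields printed by the stdout output look different, the grok pattern is the first thing to check.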

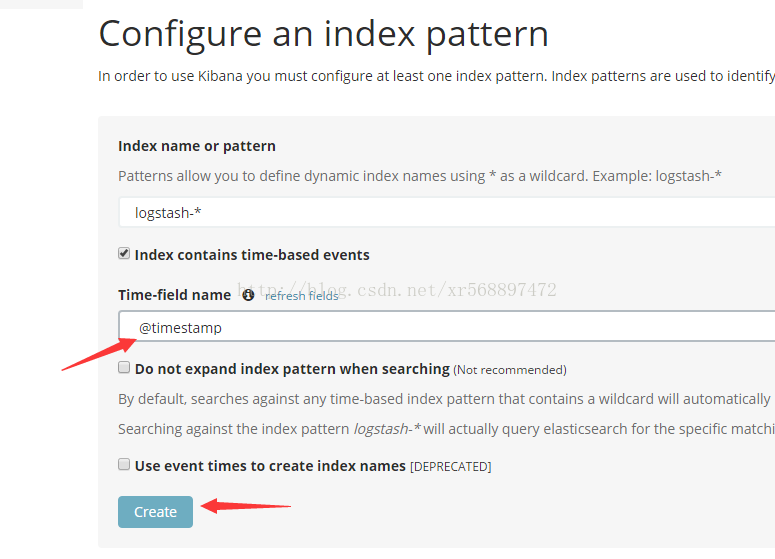

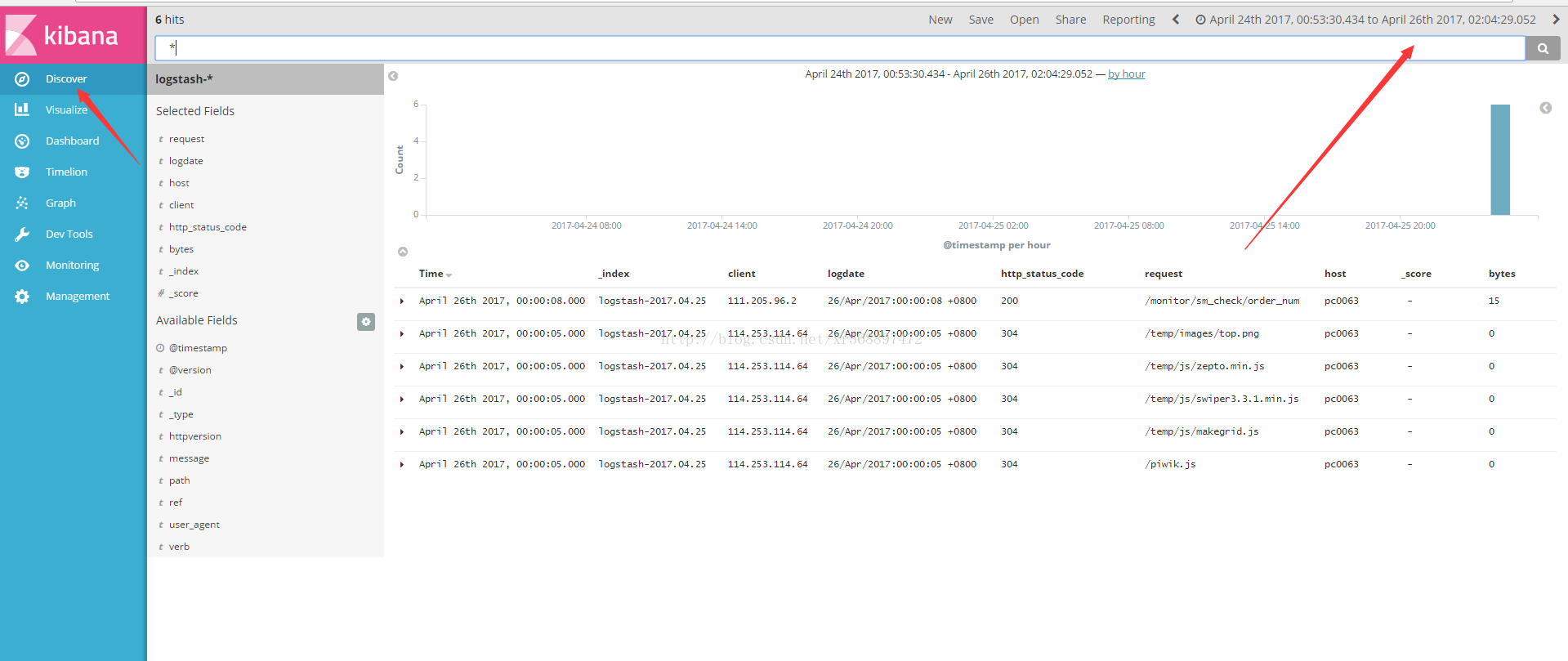

4. View the logs. Start the Kibana 5.3 service, log in, and in the left-hand menu go to Management -> Index Patterns -> Add New.

Then remember to select the time.
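The time setting matters because the sample log entries are from April 2017, so a short recent time range in Kibana will show nothing. The config above does not set an index name, so the sketch below assumes the elasticsearch output falls back to its default daily index, `logstash-%{+YYYY.MM.dd}`, with the date taken from `@timestamp` in UTC. Under that assumption, an index pattern such as `logstash-*` should match. `default_index_name` is a hypothetical helper written only for this illustration.

```python
from datetime import datetime, timezone


def default_index_name(logdate):
    """Guess the daily index for a log timestamp.

    Assumes the default index name logstash-%{+YYYY.MM.dd}, with the
    date computed from @timestamp in UTC.
    """
    local = datetime.strptime(logdate, "%d/%b/%Y:%H:%M:%S %z")
    utc = local.astimezone(timezone.utc)
    return utc.strftime("logstash-%Y.%m.%d")


index_name = default_index_name("26/Apr/2017:00:00:08 +0800")
print(index_name)
```

Because 00:00:08 at +0800 is still 25 April in UTC, the first sample lines would land in the index for the previous day, which is worth keeping in mind when choosing the time range.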
