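I. Deploying ZooKeeper (standalone)

Note: ZooKeeper needs a working Java environment, so install and configure Java before deploying it.

Server environment: CentOS 7

ZooKeeper version: zookeeper-3.4.6

ZooKeeper package: zookeeper-3.4.6.tar.gz

1. Download ZooKeeper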

cd /home/root

wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.6/zookeeper-3.4.6.tar.gz

2. Extract, configure, and start ZooKeeper

# Extract the archive

cd /home/root

tar -xf zookeeper-3.4.6.tar.gz

mv zookeeper-3.4.6 zookeeper

# Go to the conf directory to edit the ZooKeeper configuration

cd /home/root/zookeeper/conf

# Rename zoo_sample.cfg to zoo.cfg

# The startup script zkServer.sh runs zkEnv.sh, which loads zoo.cfg from the conf directory as the configuration file

mv zoo_sample.cfg zoo.cfg

# Set dataDir to /home/root/zookeeper/zookeeper/data

# Add dataLogDir=/home/root/zookeeper/zookeeper/logs

vim zoo.cfg

# Configure the ZooKeeper environment variables

vim /etc/profile

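# Add the following lines

# ZK_HOME must point at the directory created by the mv above, so that $ZK_HOME/bin contains zkServer.sh

export ZK_HOME=/home/root/zookeeper

export PATH=.:$ZK_HOME/bin:$PATH

# Apply the environment variables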

source /etc/profile

# Start the ZooKeeper service

zkServer.sh start

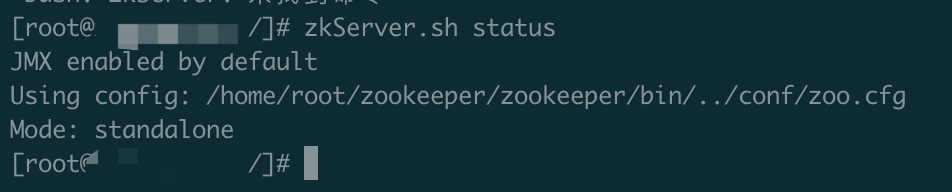

3. Check the ZooKeeper service status

zkServer.sh status

II. Deploying Kafka (standalone)

Kafka version: kafka_2.12-2.3.1

Kafka package: kafka_2.12-2.3.1.tgz

1. Download Kafka

cd /home/root

wget https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.3.1/kafka_2.12-2.3.1.tgz

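2. Extract, configure, and start Kafka

# Extract the archive and rename the directory to kafka

cd /home/root

tar -xf kafka_2.12-2.3.1.tgz

mv kafka_2.12-2.3.1 kafka

cd /home/root/kafka/config

# Edit server.properties

# Set advertised.listeners to the server's IP address; otherwise the Java project reports connection errors at startup

log.dirs=/home/root/kafka/logs

advertised.listeners=PLAINTEXT://<server-ip>:9092

# Start Kafka in the background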

cd /home/root/kafka

nohup ./bin/kafka-server-start.sh config/server.properties &

3. Create a Kafka topic

Create a topic for the Java project below to use.

# Create a topic named hello

# replication-factor: number of replicas

# partitions: number of partitions

# topic: topic name

cd /home/root/kafka

./bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic hello

Kafka is now deployed. Next, we use Kafka from Java.

III. Setting up the Spring Boot project

1. Add the Maven dependency

<!-- https://mvnrepository.com/artifact/org.springframework.kafka/spring-kafka -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

<version>2.5.2.RELEASE</version>

</dependency>

2. Configuration

server.port=8989

###########[Kafka cluster]###########

spring.kafka.bootstrap-servers=192.168.10.8:9092

###########[Producer configuration]###########

# Number of retries

spring.kafka.producer.retries=0

# Acknowledgement level: how many replicas must have written the record before the broker acknowledges the producer (0, 1, or all/-1)

spring.kafka.producer.acks=1

# Batch size in bytes

spring.kafka.producer.batch-size=16384

# Linger time

spring.kafka.producer.properties.linger.ms=0

# The producer sends a batch once it reaches batch-size bytes or once linger.ms has elapsed, whichever comes first

# With linger.ms=0 records are sent as soon as possible, so batch-size only matters when records arrive faster than they can be sent

# Producer buffer size in bytes

spring.kafka.producer.buffer-memory=33554432

# Serializer classes provided by Kafka

spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer

spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

###########[Consumer configuration]###########

# Default consumer group ID

spring.kafka.consumer.properties.group.id=defaultConsumerGroup

# Whether to commit offsets automatically

spring.kafka.consumer.enable-auto-commit=true

# Auto-commit interval in milliseconds: how often offsets are committed when auto-commit is enabled

spring.kafka.consumer.auto-commit-interval=0

# What to do when Kafka has no initial offset or the offset is out of range

# earliest: reset to the smallest offset in the partition

# latest: reset to the latest offset in the partition (consume only newly produced data)

# none: throw an exception if any partition has no committed offset

spring.kafka.consumer.auto-offset-reset=latest

# Consumer session timeout (if the consumer sends no heartbeat within this time, a rebalance is triggered)

spring.kafka.consumer.properties.session.timeout.ms=120000

# Consumer request timeout

spring.kafka.consumer.properties.request.timeout.ms=180000

# Deserializer classes provided by Kafka

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

# Do not fail application startup when a listened-to topic does not exist

spring.kafka.listener.missing-topics-fatal=false
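
For readers who configure the Kafka client directly, the Spring Boot keys above correspond to native Kafka client property names. The following is a minimal sketch that uses only `java.util.Properties`; the class name `NativeKafkaProps` is made up for illustration, the keys are the standard Kafka client ones, and the values are copied from the configuration above.

```java
import java.util.Properties;

final class NativeKafkaProps {

    // Producer settings under their native Kafka client names.
    static Properties producerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", "192.168.10.8:9092");
        p.setProperty("retries", "0");
        p.setProperty("acks", "1");
        p.setProperty("batch.size", "16384");
        p.setProperty("linger.ms", "0");
        p.setProperty("buffer.memory", "33554432");
        p.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return p;
    }

    // Consumer settings under their native Kafka client names.
    static Properties consumerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", "192.168.10.8:9092");
        p.setProperty("group.id", "defaultConsumerGroup");
        p.setProperty("enable.auto.commit", "true");
        p.setProperty("auto.commit.interval.ms", "0");
        p.setProperty("auto.offset.reset", "latest");
        p.setProperty("session.timeout.ms", "120000");
        p.setProperty("request.timeout.ms", "180000");
        p.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        p.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        return p;
    }

    public static void main(String[] args) {
        // Print both property sets; iteration order is not guaranteed.
        producerProps().forEach((k, v) -> System.out.println("producer " + k + "=" + v));
        consumerProps().forEach((k, v) -> System.out.println("consumer " + k + "=" + v));
    }
}
```

Spring Boot builds equivalent maps internally from the `spring.kafka.*` keys, so this sketch is only useful as a reference when reading the Kafka documentation, which uses the native names.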

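Preparation is complete.

Next we use a Controller to simulate incoming messages that trigger the Kafka producer, and the @KafkaListener annotation to start a Kafka consumer that listens for messages.

The examples below print messages through a logger, so add this Maven dependency to the project: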

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

3. Create KafkaController to simulate a message producer

package com.example.kafkademo;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.*;

/**

 * @Description : Simulates incoming messages and creates Kafka messages (producer)

 * @Author : xu_teng_chao@163.com

 * @CreateTime : 2020/7/7 12:39 PM

 */

@Slf4j

@RestController

public class KafkaController {

    @Autowired

    private KafkaTemplate<String, Object> kafkaTemplate;

    // Send a message asynchronously (no callback)

    @PostMapping("/sendMsg")

    public void sendMessage1(@RequestParam(value = "msg") String normalMessage) {

        String topic = "hello"; // Kafka topic

        // Send the request parameter rather than a hard-coded string

        kafkaTemplate.send(topic, normalMessage);

    }

}

4. Create KafkaConsumer to listen for Kafka messages

package com.example.kafkademo;

import lombok.extern.slf4j.Slf4j;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Component;

/**

 * @Description : Kafka consumer

 * @Author : xu_teng_chao@163.com

 * @CreateTime : 2020/7/7 12:40 PM

 */

@Slf4j

@Component

public class KafkaConsumer {

    // Listener; topics lists the topic we created

    @KafkaListener(topics = {"hello"})

    public void listen1(ConsumerRecord<String, String> record) {

        String msg = record.value(); // message content

        int partition = record.partition(); // partition

        long timestamp = record.timestamp(); // timestamp (epoch milliseconds)

        long offset = record.offset(); // offset

        log.info("kafka consumer message:{}; partition:{}; offset:{}; timestamp:{}", msg, partition, offset, timestamp);

    }

}

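5. Start the project and test

①. Send a request with curl:

curl -XPOST http://localhost:8989/sendMsg -d 'msg=你好'

②. Send a request with Postman: POST to the same URL with the form parameter msg.

Result received by the listener: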

2020-07-08 18:31:42.411 INFO 6085 --- [ntainer#0-0-C-1] com.example.kafkademo.KafkaConsumer : kafka consumer message:你好; partition:0; offset:385; timestamp:1594204302340
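
The `timestamp` value in this log line is the record timestamp in epoch milliseconds. As a small sketch, it can be converted to a readable date-time with `java.time`; the class name `RecordTimestampDemo` is hypothetical, and the UTC+8 offset is an assumption inferred from the local time in the log prefix.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;

final class RecordTimestampDemo {

    // Converts a Kafka record timestamp (epoch milliseconds) to a zoned date-time.
    static ZonedDateTime toZoned(long epochMillis, ZoneId zone) {
        return Instant.ofEpochMilli(epochMillis).atZone(zone);
    }

    public static void main(String[] args) {
        // 1594204302340 is the timestamp from the log line above.
        // UTC+8 is assumed because the log prefix shows 18:31:42 local time.
        ZonedDateTime t = toZoned(1594204302340L, ZoneOffset.ofHours(8));
        System.out.println(t.toLocalDateTime()); // prints 2020-07-08T18:31:42.340
    }
}
```

The converted time falls about 70 ms before the log line's own timestamp (18:31:42.411), which is the delay between producing the record and the listener logging it.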

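IV. Asynchronous callbacks with KafkaTemplate

By default KafkaTemplate sends messages asynchronously; the example above is one form of asynchronous sending.

Next we demonstrate asynchronous sending with a callback. The benefit of a callback is that you learn whether the send succeeded, so you can compensate promptly when it fails.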

package com.example.kafkademo;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.*;

/**

 * @Description : Simulates incoming messages and creates Kafka messages (producer)

 * @Author : xu_teng_chao@163.com

 * @CreateTime : 2020/7/7 12:39 PM

 */

@Slf4j

@RestController

public class KafkaController {

    @Autowired

    private KafkaTemplate<String, Object> kafkaTemplate;

    // Send a message asynchronously (with callbacks)

    @PostMapping("/sendMsg2")

    public void sendMessage2(@RequestParam(value = "msg") String normalMessage) {

        String topic = "hello";

        kafkaTemplate.send(topic, normalMessage).addCallback(success -> {

            // Success callback: the broker acknowledged the record

            String topicStr = success.getRecordMetadata().topic();

            int partition = success.getRecordMetadata().partition();

            long offset = success.getRecordMetadata().offset();

            log.info("send msg success!; topic = {}, partition = {}, offset = {}", topicStr, partition, offset);

        }, failed -> {

            // Failure callback: the send failed

            log.error("send msg failed! errMsg : {}", failed.getMessage());

        });

    }

}
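
The "compensate on failure" idea can be sketched without a broker. In the example below, `CompletableFuture` stands in for the future returned by `kafkaTemplate.send(...)`, and the `sender` function and `retryQueue` are hypothetical names introduced only for illustration; this is a sketch of the pattern under those assumptions, not how spring-kafka handles failures itself.

```java
import java.util.Queue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.function.Function;

final class CallbackCompensationDemo {

    // Messages whose send failed, kept for a later retry.
    static final Queue<String> retryQueue = new ConcurrentLinkedQueue<>();

    // 'sender' is a hypothetical stand-in for kafkaTemplate.send(topic, msg);
    // it returns a future that completes with the record offset.
    static CompletableFuture<Long> sendWithCompensation(
            String msg, Function<String, CompletableFuture<Long>> sender) {
        return sender.apply(msg).whenComplete((offset, error) -> {
            if (error != null) {
                retryQueue.add(msg); // compensate: remember the message for a retry
            }
        });
    }

    public static void main(String[] args) {
        // Simulated success: nothing is queued.
        sendWithCompensation("ok", m -> CompletableFuture.completedFuture(42L));

        // Simulated failure: the message lands in the retry queue.
        CompletableFuture<Long> failed = new CompletableFuture<>();
        failed.completeExceptionally(new IllegalStateException("broker unavailable"));
        sendWithCompensation("lost", m -> failed);

        System.out.println("pending retries: " + retryQueue); // prints pending retries: [lost]
    }
}
```

In a real application the failure callback might write the message to a database table or a dead-letter topic instead of an in-memory queue, so that pending retries survive a restart.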

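V. Synchronous sending with KafkaTemplate (no time limit)

Calling get() on the future returned by kafkaTemplate.send() makes the send synchronous: the calling thread blocks until the send completes.

get() returns the send result and throws an exception if the send fails.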

package com.example.kafkademo;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.kafka.support.SendResult;

import org.springframework.web.bind.annotation.*;

import java.util.concurrent.ExecutionException;

/**

 * @Description : Simulates incoming messages and creates Kafka messages (producer)

 * @Author : xu_teng_chao@163.com

 * @CreateTime : 2020/7/7 12:39 PM

 */

@Slf4j

@RestController

public class KafkaController {

    @Autowired

    private KafkaTemplate<String, Object> kafkaTemplate;

    // Send a message synchronously

    @PostMapping("/sendMsg3")

    public void sendMessage3(@RequestParam(value = "msg") String normalMessage) {

        String topic = "hello";

        try {

            SendResult<String, Object> result = kafkaTemplate.send(topic, normalMessage).get();

            String topic1 = result.getRecordMetadata().topic(); // topic the message was sent to

            int partition = result.getRecordMetadata().partition(); // partition the message was sent to

            long offset = result.getRecordMetadata().offset(); // offset of the message within the partition

            log.info("send msg done. topic = {}, partition = {}, offset = {}", topic1, partition, offset);

        } catch (InterruptedException e) {

            Thread.currentThread().interrupt(); // preserve the interrupt status

            e.printStackTrace();

        } catch (ExecutionException e) {

            e.printStackTrace();

        }

    }

}
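
When a blocking `get()` fails, the original error arrives wrapped in an `ExecutionException`. The sketch below shows that unwrapping with a plain `CompletableFuture` standing in for the future returned by `kafkaTemplate.send(...)`; the class name `BlockingGetDemo` and the sample messages are illustrative assumptions.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

final class BlockingGetDemo {

    // Blocks until the future completes and reports either the result or the failure cause.
    static String await(CompletableFuture<String> future) {
        try {
            return "ok: " + future.get();
        } catch (ExecutionException e) {
            // get() wraps the original failure; getCause() recovers it.
            return "failed: " + e.getCause().getMessage();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        // A future that already holds a result.
        System.out.println(await(CompletableFuture.completedFuture("offset 385")));

        // A future that already holds a failure.
        CompletableFuture<String> failed = new CompletableFuture<>();
        failed.completeExceptionally(new IllegalStateException("broker unavailable"));
        System.out.println(await(failed));
    }
}
```

Run as written, this prints `ok: offset 385` followed by `failed: broker unavailable`.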

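VI. Synchronous sending with KafkaTemplate (with a time limit)

Call the overload get(long timeout, TimeUnit unit) to limit how long the caller waits for the send result.

Note what the limit covers: the future returned by send() completes when the broker acknowledges the record (according to the acks setting). It does not wait for any consumer, so the timeout bounds the wait for that acknowledgement, not the time until the message is consumed.

The clock starts when get() is called.

If the acknowledgement takes longer than timeout, get() throws a TimeoutException. The timeout does not cancel the send, so the record can still reach the broker and be consumed normally. In other words, the producer stops waiting once the time is up, whether or not the message was delivered.

We set the timeout to 1 millisecond to demonstrate the failure deliberately: get(1, TimeUnit.MILLISECONDS)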

package com.example.kafkademo;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.kafka.support.SendResult;

import org.springframework.web.bind.annotation.*;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.TimeoutException;

/**

 * @Description : Simulates incoming messages and creates Kafka messages (producer)

 * @Author : xu_teng_chao@163.com

 * @CreateTime : 2020/7/7 12:39 PM

 */

@Slf4j

@RestController

public class KafkaController {

    @Autowired

    private KafkaTemplate<String, Object> kafkaTemplate;

    // Send a message synchronously (with a timeout)

    // Uses the overload get(long timeout, TimeUnit unit)

    @PostMapping("/sendMsg4")

    public void sendMessage4(@RequestParam(value = "msg") String normalMessage) {

        String topic = "hello";

        try {

            SendResult<String, Object> result = kafkaTemplate.send(topic, normalMessage).get(1, TimeUnit.MILLISECONDS);

            String topic1 = result.getRecordMetadata().topic(); // topic the message was sent to

            int partition = result.getRecordMetadata().partition(); // partition the message was sent to

            long offset = result.getRecordMetadata().offset(); // offset of the message within the partition

            log.info("send msg done. topic = {}, partition = {}, offset = {}", topic1, partition, offset);

        } catch (InterruptedException e) {

            Thread.currentThread().interrupt(); // preserve the interrupt status

            e.printStackTrace();

        } catch (ExecutionException e) {

            e.printStackTrace();

        } catch (TimeoutException e) {

            e.printStackTrace();

        }

    }

}

Start the test by sending a POST request:

curl -XPOST http://localhost:8989/sendMsg4 -d 'msg=测试同步发送消息的时间限制1ms'

The result:

The program throws java.util.concurrent.TimeoutException, yet the consumer still consumes the message normally.

java.util.concurrent.TimeoutException

at java.util.concurrent.FutureTask.get(FutureTask.java:205)

at org.springframework.util.concurrent.SettableListenableFuture.get(SettableListenableFuture.java:134)

at com.example.kafkademo.KafkaController.sendMessage4(KafkaController.java:79)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.web.method.support.InvocableHandlerMethod.doInvoke(InvocableHandlerMethod.java:190)

at org.springframework.web.method.support.InvocableHandlerMethod.invokeForRequest(InvocableHandlerMethod.java:138)

at org.springframework.web.servlet.mvc.method.annotation.ServletInvocableHandlerMethod.invokeAndHandle(ServletInvocableHandlerMethod.java:105)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.invokeHandlerMethod(RequestMappingHandlerAdapter.java:879)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.handleInternal(RequestMappingHandlerAdapter.java:793)

at org.springframework.web.servlet.mvc.method.AbstractHandlerMethodAdapter.handle(AbstractHandlerMethodAdapter.java:87)

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:1040)

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:943)

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:1006)

at org.springframework.web.servlet.FrameworkServlet.doPost(FrameworkServlet.java:909)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:660)

at org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:883)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:741)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:231)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:53)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.RequestContextFilter.doFilterInternal(RequestContextFilter.java:100)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:119)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.FormContentFilter.doFilterInternal(FormContentFilter.java:93)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:119)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:201)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:119)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:202)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:96)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:541)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:139)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:92)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:74)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:343)

at org.apache.coyote.http11.Http11Processor.service(Http11Processor.java:373)

at org.apache.coyote.AbstractProcessorLight.process(AbstractProcessorLight.java:65)

at org.apache.coyote.AbstractProtocol$ConnectionHandler.process(AbstractProtocol.java:868)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1590)

at org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61)

at java.lang.Thread.run(Thread.java:748)

2020-07-09 11:09:12.963 INFO 8665 --- [ntainer#0-0-C-1] com.example.kafkademo.KafkaConsumer : kafka consumer message:测试同步发送消息的时间限制1ms; partition:0; offset:387; timestamp:1594264152891
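
The same effect can be reproduced without a broker, because it follows from how `Future.get(timeout, unit)` works: the timeout only ends the caller's wait and does not cancel the operation. In the sketch below, `slowSend` is a hypothetical stand-in for a send whose acknowledgement arrives late, and the class name and delays are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class GetTimeoutDemo {

    // Simulates a send whose acknowledgement arrives after ackDelayMs.
    static CompletableFuture<String> slowSend(String msg, long ackDelayMs) {
        return CompletableFuture.supplyAsync(() -> {
            try {
                Thread.sleep(ackDelayMs);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "acked: " + msg;
        });
    }

    // Waits at most timeoutMs, then waits without a limit, recording what happened.
    static List<String> run(long ackDelayMs, long timeoutMs) {
        List<String> events = new ArrayList<>();
        CompletableFuture<String> future = slowSend("hello", ackDelayMs);
        try {
            events.add(future.get(timeoutMs, TimeUnit.MILLISECONDS));
        } catch (TimeoutException e) {
            events.add("timeout"); // the caller gave up waiting
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
        // The timeout did not cancel the send; join() waits for it to finish.
        events.add(future.join());
        return events;
    }

    public static void main(String[] args) {
        System.out.println(run(200, 1)); // prints [timeout, acked: hello]
    }
}
```

If the caller needs to know the final outcome after a timeout, one option is to keep the future and attach a callback to it instead of discarding it.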

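VII. Enabling Kafka transactions

Like a database, Kafka supports transactions: when the program throws an exception or hits a specific condition, the transaction can be rolled back so that listeners do not receive erroneous or unwanted messages. Note that the consumer default is isolation.level=read_uncommitted; set it to read_committed if consumers must never see records from aborted transactions.

There are two ways to enable Kafka transactions: annotate the method with @Transactional, or run a local transaction with kafkaTemplate.executeInTransaction.

1. Start a transaction with executeInTransaction

Enabling Kafka transactions requires these configuration changes:

1. Set the number of retries to a value greater than 0

2. Set the acknowledgement level to all

3. Add the Kafka transaction configuration

# Number of retries

spring.kafka.producer.retries=1

# Acknowledgement level: how many replicas must have written the record before the broker acknowledges the producer (0, 1, or all/-1)

spring.kafka.producer.acks=all

# Enable Kafka transactions

spring.kafka.producer.transaction-id-prefix=kafka-transacation

Write a Controller to test the transaction: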

package com.example.kafkademo;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.*;

/**

 * @Description : Simulates incoming messages and creates Kafka messages (producer)

 * @Author : xu_teng_chao@163.com

 * @CreateTime : 2020/7/7 12:39 PM

 */

@Slf4j

@RestController

public class KafkaController {

    @Autowired

    private KafkaTemplate<String, Object> kafkaTemplate;

    // Send a message inside a transaction

    @PostMapping("/trans")

    public void sendMessageByTrans(@RequestParam(value = "msg") String normalMessage) {

        String topic = "hello";

        kafkaTemplate.executeInTransaction(operations -> {

            operations.send(topic, normalMessage);

            // Simulated failure ("模拟异常" means "simulated exception"); it aborts the transaction

            throw new RuntimeException("模拟异常");

        });

    }

}

Send a request:

curl -XPOST http://localhost:8989/trans -d 'msg=测试事务'

Test result:

2020-07-09 11:56:53.249 ERROR 9149 --- [a-transacation0] o.s.k.support.LoggingProducerListener : Exception thrown when sending a message with key='null' and payload='测试事务' to topic hello:

org.apache.kafka.common.KafkaException: Failing batch since transaction was aborted

at org.apache.kafka.clients.producer.internals.Sender.maybeSendAndPollTransactionalRequest(Sender.java:422) [kafka-clients-2.5.0.jar:na]

at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:312) [kafka-clients-2.5.0.jar:na]

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:239) [kafka-clients-2.5.0.jar:na]

at java.lang.Thread.run(Thread.java:748) ~[na:1.8.0_221]

2020-07-09 11:56:53.294 ERROR 9149 --- [nio-8989-exec-1] o.a.c.c.C.[.[.[/].[dispatcherServlet] : Servlet.service() for servlet [dispatcherServlet] in context with path [] threw exception [Request processing failed; nested exception is java.lang.RuntimeException: 模拟异常] with root cause

java.lang.RuntimeException: 模拟异常

at com.example.kafkademo.KafkaController.lambda$sendMessageByTrans$2(KafkaController.java:101) ~[classes/:na]

at org.springframework.kafka.core.KafkaTemplate.executeInTransaction(KafkaTemplate.java:463) ~[spring-kafka-2.5.2.RELEASE.jar:2.5.2.RELEASE]

at com.example.kafkademo.KafkaController.sendMessageByTrans(KafkaController.java:99) ~[classes/:na]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_221]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_221]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_221]

at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_221]

at org.springframework.web.method.support.InvocableHandlerMethod.doInvoke(InvocableHandlerMethod.java:190) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.method.support.InvocableHandlerMethod.invokeForRequest(InvocableHandlerMethod.java:138) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.mvc.method.annotation.ServletInvocableHandlerMethod.invokeAndHandle(ServletInvocableHandlerMethod.java:105) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.invokeHandlerMethod(RequestMappingHandlerAdapter.java:879) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.handleInternal(RequestMappingHandlerAdapter.java:793) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.mvc.method.AbstractHandlerMethodAdapter.handle(AbstractHandlerMethodAdapter.java:87) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:1040) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:943) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:1006) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.doPost(FrameworkServlet.java:909) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at javax.servlet.http.HttpServlet.service(HttpServlet.java:660) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:883) ~[spring-webmvc-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at javax.servlet.http.HttpServlet.service(HttpServlet.java:741) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:231) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:53) ~[tomcat-embed-websocket-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.springframework.web.filter.RequestContextFilter.doFilterInternal(RequestContextFilter.java:100) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:119) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.springframework.web.filter.FormContentFilter.doFilterInternal(FormContentFilter.java:93) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:119) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:201) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:119) ~[spring-web-5.2.7.RELEASE.jar:5.2.7.RELEASE]

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:202) ~[tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:96) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:541) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:139) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:92) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:74) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:343) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.coyote.http11.Http11Processor.service(Http11Processor.java:373) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.coyote.AbstractProcessorLight.process(AbstractProcessorLight.java:65) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.coyote.AbstractProtocol$ConnectionHandler.process(AbstractProtocol.java:868) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1590) [tomcat-embed-core-9.0.36.jar:9.0.36]

at org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49) [tomcat-embed-core-9.0.36.jar:9.0.36]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [na:1.8.0_221]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [na:1.8.0_221]

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61) [tomcat-embed-core-9.0.36.jar:9.0.36]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_221]

As the log shows, the transaction was rolled back and the consumer did not consume the message.
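
The commit-or-discard contract of a local transaction can be illustrated with a toy model. This is not how Kafka implements transactions (the real mechanism involves a transaction coordinator and commit/abort markers in the log); the class `ToyTransactionDemo`, its `committed` list, and its `executeInTransaction` method are made up here purely to show the contract.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

final class ToyTransactionDemo {

    // Records visible to consumers in this toy model.
    static final List<String> committed = new ArrayList<>();

    // Runs 'work' against a private buffer and publishes the buffered
    // records only if 'work' returns normally.
    static void executeInTransaction(Consumer<List<String>> work) {
        List<String> pending = new ArrayList<>();
        work.accept(pending);      // an exception here skips the commit below
        committed.addAll(pending); // commit
    }

    public static void main(String[] args) {
        // Normal completion: the record is committed.
        executeInTransaction(tx -> tx.add("first"));

        // Exception inside the callback: the buffered record is discarded.
        try {
            executeInTransaction(tx -> {
                tx.add("second");
                throw new RuntimeException("simulated failure");
            });
        } catch (RuntimeException e) {
            System.out.println("rolled back: " + e.getMessage());
        }

        System.out.println(committed); // prints [first]
    }
}
```

As in the controller above, the exception propagates to the caller after the rollback, so the caller still has to handle it.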
