Installing common services with Docker (to be extended when I get the chance)

Configuration and mounted host directories for common services

To get Tab completion for docker commands, install bash-completion:

yum install -y bash-completion.noarch

1. mysql

docker run -d -p 3306:3306 --name mysql --privileged=true \
-v /opt/serverData/mysql/conf:/etc/mysql \
-v /opt/serverData/mysql/logs:/var/log/mysql \
-v /opt/serverData/mysql/data:/var/lib/mysql \
-e MYSQL_ROOT_PASSWORD=123456 mysql

If the command above fails with an error about /var/lib/mysql-files, use this version instead (it also mounts that directory):

docker run -d -p 3306:3306 --name mysql --privileged=true \
-v /opt/serverData/mysql/conf/:/etc/mysql/ \
-v /opt/serverData/mysql/logs/:/var/log/mysql/ \
-v /opt/serverData/mysql/data/:/var/lib/mysql/ \
-v /opt/serverData/mysql/mysql-files:/var/lib/mysql-files/ \
-e MYSQL_ROOT_PASSWORD=123456 mysql
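You can also fix the /var/lib/mysql-files error through configuration instead, using the secure_file_priv setting from the notes below. In that case, edit the host-side my.cnf before it is mounted. Here is a minimal sketch of a helper that sets one option inside [mysqld], either replacing an existing value or appending it. The function name ensure_mysqld_option is hypothetical. The path /opt/serverData/mysql/conf/my.cnf assumes the conf mount used above. Depending on the image variant, mysqld may read /etc/my.cnf instead, so check inside the container which file is actually loaded.

```shell
# Hypothetical helper: ensure_mysqld_option FILE KEY VALUE
# Sets KEY=VALUE inside the [mysqld] section of FILE:
#   - an existing KEY line in [mysqld] is replaced (duplicates are dropped),
#   - otherwise the line is added at the end of [mysqld],
#   - if FILE has no [mysqld] section at all, one is appended.
ensure_mysqld_option() {
  local file="$1" key="$2" value="$3"
  mkdir -p "$(dirname "$file")"
  touch "$file"
  awk -v key="$key" -v val="$value" '
    /^\[.*\]/ {
      # Leaving [mysqld] without having seen KEY: insert it before the next section.
      if (in_sec && !done) { print key "=" val; done = 1 }
      in_sec = ($0 == "[mysqld]")
      if (in_sec) seen_sec = 1
      print
      next
    }
    in_sec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
      # Replace the first KEY line in [mysqld] and skip any further ones.
      if (!done) { print key "=" val; done = 1 }
      next
    }
    { print }
    END {
      if (!done) {
        if (!seen_sec) print "[mysqld]"
        print key "=" val
      }
    }' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Example: `ensure_mysqld_option /opt/serverData/mysql/conf/my.cnf secure_file_priv /var/lib/mysql`, followed by `docker restart mysql`.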

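Notes:

- On Linux: edit my.cnf and add secure_file_priv=/var/lib/mysql inside the [mysqld] section.

- Development only: allow remote login

update mysql.user set host='%' where user='test';

grant all privileges on test.* to 'test'@'%';   -- failed the first time, succeeded when run a second time

alter user 'test'@'%' identified with mysql_native_password by 'xxx';   -- switch the auth plugin so Navicat can log in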
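The "run it twice" behaviour above is most likely caused by grant-table caching. A direct UPDATE of mysql.user does not take effect until the grant tables are reloaded, and FLUSH PRIVILEGES does that explicitly. The sketch below prints the statements in that order so they can be piped into the container. remote_login_sql is a hypothetical helper name. Also note that mysql_native_password is deprecated, and recent MySQL releases disable or remove it, so the last statement may fail there.

```shell
# Hypothetical helper: remote_login_sql USER DB PASSWORD
# Prints SQL that moves USER to host '%', reloads the grant tables,
# grants all privileges on DB, and switches USER to mysql_native_password.
remote_login_sql() {
  local user="$1" db="$2" pass="$3"
  cat <<EOF
UPDATE mysql.user SET host = '%' WHERE user = '${user}';
FLUSH PRIVILEGES;
GRANT ALL PRIVILEGES ON \`${db}\`.* TO '${user}'@'%';
ALTER USER '${user}'@'%' IDENTIFIED WITH mysql_native_password BY '${pass}';
EOF
}
```

Usage, assuming the container name and root password from the docker run command above: `remote_login_sql test test 'xxx' | docker exec -i mysql mysql -uroot -p123456`.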

2. redis

docker run -d --privileged=true \
-p 6379:6379 \
-v /opt/serverData/redis/conf/redis.conf:/etc/redis/redis.conf \
-v /opt/serverData/redis/data:/data \
--name redis \
redis redis-server /etc/redis/redis.conf --appendonly yes
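The redis command mounts a single file, redis.conf. If that file does not exist on the host, `docker run -v` creates a directory with that name instead, and redis-server cannot read it as a config file. Below is a minimal sketch that prepares the host directories and a starter config. prepare_redis is a hypothetical name. The settings assume a development box: no password, listening on all interfaces.

```shell
# Hypothetical helper: prepare_redis [BASE_DIR]
# Creates BASE_DIR/conf and BASE_DIR/data and writes a starter redis.conf,
# but only if none exists yet, so a hand-edited file is never overwritten.
prepare_redis() {
  local base="${1:-/opt/serverData/redis}"
  mkdir -p "$base/conf" "$base/data"
  if [ ! -f "$base/conf/redis.conf" ]; then
    cat > "$base/conf/redis.conf" <<'EOF'
# Accept connections on all interfaces inside the container (Docker publishes 6379).
bind 0.0.0.0
# Development assumption: no password. Set requirepass before exposing this.
protected-mode no
# Stay in the foreground; a daemonized redis-server would let the container exit.
daemonize no
# Write RDB/AOF files to /data, which is mounted from the host.
dir /data
appendonly yes
EOF
  fi
}
```

Run `prepare_redis` once on the host, then start the container with the command above.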

3. rabbitmq


With all ports published:

docker run -d -p 1883:1883 -p 4369:4369 -p 5671:5671 -p 5672:5672 --privileged=true \
-p 15672:15672 -p 25672:25672 -p 61613:61613 -p 61614:61614 -p 8883:8883 \
-v /opt/serverData/rabbitmq/etc:/etc/rabbitmq -v /opt/serverData/rabbitmq/lib:/var/lib/rabbitmq \
-e RABBITMQ_DEFAULT_USER=admin -e RABBITMQ_DEFAULT_PASS=admin \
-v /opt/serverData/rabbitmq/log:/var/log/rabbitmq --name rabbitmq rabbitmq:3.7.27-rc.1-management

With only the ports you actually need (5672 for AMQP clients, 15672 for the management UI):

docker run -d -p 15672:15672 -p 5672:5672 \
--name rabbitmq --privileged=true \
-v /opt/serverData/rabbitmq/conf:/etc/rabbitmq \
-v /opt/serverData/rabbitmq/data:/var/lib/rabbitmq \
-e RABBITMQ_DEFAULT_USER=admin -e RABBITMQ_DEFAULT_PASS=admin \
-v /opt/serverData/rabbitmq/log:/var/log/rabbitmq rabbitmq:3.7.27-rc.1-management

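Enable the web management UI. The -management image tags already ship with it enabled, so this is only needed for plain images. Run it inside the container:

docker exec rabbitmq rabbitmq-plugins enable rabbitmq_management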
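RabbitMQ, like most services here, needs some time after `docker run` returns before it accepts connections. The small bash-only sketch below polls a TCP port through bash's /dev/tcp pseudo-device. wait_for_port is a hypothetical helper. A single connect attempt has no timeout of its own, so the helper is meant for local or LAN hosts.

```shell
# Hypothetical helper (bash only): wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Tries a TCP connect once per second; returns 0 on the first success,
# 1 if the port is still closed after TIMEOUT_SECONDS attempts (default 60).
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-60}" i=0
  while [ "$i" -lt "$timeout" ]; do
    # bash opens a TCP socket for /dev/tcp/HOST/PORT; the subshell closes it again.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

Example: `wait_for_port 127.0.0.1 15672 120 && echo "management UI is up"`.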

4. clickhouse

docker run -d --name clickhouse-server --privileged=true \
--ulimit nofile=262144:262144 \
-v /opt/clickhouse-server/lib/:/var/lib/clickhouse/ \
-v /opt/clickhouse-server/config/:/etc/clickhouse-server/ \
-v /opt/clickhouse-server/log/:/var/log/clickhouse-server/ \
-p 9009:9009 -p 8123:8123 -p 9000:9000 yandex/clickhouse-server

5. Elasticsearch (single node and multi-node)

Single node:

docker run -d --name elasticsearch --privileged=true -e "discovery.type=single-node" \
-v /opt/elasticsearch/config:/usr/share/elasticsearch/config \
-v /opt/elasticsearch/logs:/usr/share/elasticsearch/logs \
-v /opt/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
-v /opt/elasticsearch/data:/usr/share/elasticsearch/data \
-p 9200:9200 -p 9300:9300 docker.io/elasticsearch:7.8.0

Elasticsearch runs as user ID 1000 inside the container, so recursively change the owner of all mounted host directories to 1000:0:

chown 1000:0 /opt/elasticsearch -R

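Multi-node:

If you start just one node of the cluster on its own, it reports that there is no cluster_uuid and that it cannot find a master node.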

docker run -d --name elasticsearch --privileged=true \
-v /opt/elasticsearch/config:/usr/share/elasticsearch/config \
-v /opt/elasticsearch/logs:/usr/share/elasticsearch/logs \
-v /opt/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
-v /opt/elasticsearch/data:/usr/share/elasticsearch/data \
-p 9200:9200 -p 9300:9300 docker.io/elasticsearch:7.8.0

Config file (elasticsearch.yml):

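cluster.name: "ES"
node.master: true
node.data: true
node.name: node100
network.host: 0.0.0.0
network.publish_host: 192.168.60.100
# On every node, list the other nodes' IP addresses (for convenience you can list all of them)
discovery.seed_hosts: ["192.168.60.100","192.168.60.101","192.168.60.102"]
cluster.initial_master_nodes: ["192.168.60.100","192.168.60.101","192.168.60.102"] # the port is optional
discovery.zen.minimum_master_nodes: 1 # Without tuning the timeout for discovering other machines, keep this at the minimum, otherwise a 30 s timeout easily splits the nodes into several clusters (note: Elasticsearch 7.x ignores this setting)
# Enable monitoring data collection
xpack.monitoring.collection.enabled: true
# Required by the elasticsearch-head plugin
http.cors.enabled: true
http.cors.allow-origin: "*"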
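Because this config binds to a non-loopback address (network.host: 0.0.0.0), Elasticsearch enforces its bootstrap checks. One of them requires the kernel setting vm.max_map_count to be at least 262144. Containers share the host kernel, so the value has to be raised on the Docker host itself. The sketch below checks the current value. check_max_map_count is a hypothetical helper, and the optional FILE argument exists only to make it testable.

```shell
# Hypothetical helper: check_max_map_count [FILE]
# Compares vm.max_map_count (read from FILE, default: the live kernel value)
# with the minimum Elasticsearch requires; prints a fix hint and returns 1 if too low.
check_max_map_count() {
  local file="${1:-/proc/sys/vm/max_map_count}" required=262144 current
  current=$(cat "$file")
  if [ "$current" -ge "$required" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need >= $required)" >&2
    echo "fix: sysctl -w vm.max_map_count=$required (persist it in /etc/sysctl.conf)" >&2
    return 1
  fi
}
```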

6. zookeeper

Single node:

docker run --name zkserver --restart always -d --privileged=true \
-v /opt/serverData/zookeeper/conf/zoo.cfg:/conf/zoo.cfg \
-v /opt/serverData/zookeeper/data/:/data \
-v /opt/serverData/zookeeper/datalog/:/datalog \
-p 2888:2888 -p 2181:2181 -p 3888:3888 \
zookeeper:3.6.1

Config file (zoo.cfg):

dataDir=/data
dataLogDir=/datalog
tickTime=2000
initLimit=5
syncLimit=2
autopurge.snapRetainCount=3
autopurge.purgeInterval=0
maxClientCnxns=60
standaloneEnabled=true
admin.enableServer=true
server.1=192.168.11.11:2888:3888;2181

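Cluster:

The docker command stays the same; change the config file as follows.

For the details of each setting, refer to the official documentation.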

dataDir=/data
dataLogDir=/datalog
tickTime=2000
initLimit=5
syncLimit=2
autopurge.snapRetainCount=3
autopurge.purgeInterval=0
maxClientCnxns=60
# delete the following line in cluster mode
standaloneEnabled=true
admin.enableServer=true
server.1=192.168.11.11:2888:3888;2181
# add one server.N line for each additional node below
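In the cluster config, each node lists one `server.N=host:2888:3888;2181` line per member. Each node's dataDir also needs a myid file containing that node's own N. As far as I know, the official image's entrypoint writes ZOO_MY_ID (default 1) into myid only when the file is missing. If the docker command really stays unchanged, every node would therefore end up as id 1. The sketch below defines two hypothetical helpers for this. In the usage line, 192.168.11.12 and 192.168.11.13 are placeholder addresses.

```shell
# Hypothetical helper: zk_server_lines IP...
# Prints one server.N line per IP, numbering from 1 in argument order.
zk_server_lines() {
  local id=0 ip
  for ip in "$@"; do
    id=$((id + 1))
    echo "server.${id}=${ip}:2888:3888;2181"
  done
}

# Hypothetical helper: write_myid DATA_DIR ID
# Writes the node id that must match this node's server.N line.
write_myid() {
  mkdir -p "$1"
  echo "$2" > "$1/myid"
}
```

Example: remove the existing server.1 line, then run `zk_server_lines 192.168.11.11 192.168.11.12 192.168.11.13 >> /opt/serverData/zookeeper/conf/zoo.cfg` on every node, plus `write_myid /opt/serverData/zookeeper/data 2` on the second node (and so on). Passing `-e ZOO_MY_ID=2` to docker run should have the same effect on a fresh data directory.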

7. rpcx-ui

This is an image I rebuilt and pushed myself, with a changed directory layout and the ZooKeeper dependency package added.

docker run --name rpcx-ui --restart always -d -p 8972:8972 xyjwork/rpcx-ui:1.0.0

8. nsq

Run several nodes on a single machine with docker-compose (docker-compose.yml):

version: '2'
services:
  nsqlookupd:
    image: nsqio/nsq
    command: /nsqlookupd
    networks:
      - nsq-network
    hostname: nsqlookupd
    ports:
      - "4161:4161"
      - "4160:4160"
  nsqd:
    image: nsqio/nsq
    # 192.168.60.100 is the VM's address; replace it with your own host's IP
    command: /nsqd --lookupd-tcp-address=nsqlookupd:4160 --broadcast-address=192.168.60.100
    depends_on:
      - nsqlookupd
    hostname: nsqd
    networks:
      - nsq-network
    ports:
      - "4151:4151"
      - "4150:4150"
  nsqadmin:
    image: nsqio/nsq
    command: /nsqadmin --lookupd-http-address=nsqlookupd:4161
    depends_on:
      - nsqlookupd
    hostname: nsqadmin
    ports:
      - "4171:4171"
    networks:
      - nsq-network
networks:
  nsq-network:
    driver: bridge

9. etcd: a single-machine multi-node cluster plus web UI with docker-compose

version: '3'
networks:
  byfn:
    driver: bridge
services:
  etcd1:
    image: quay.io/coreos/etcd
    container_name: etcd1
    command: etcd -name etcd1 -data-dir /opt/etcds/etcd1/data -advertise-client-urls http://etcd1:2379 -listen-client-urls http://0.0.0.0:2379 -initial-advertise-peer-urls http://etcd1:2380 -listen-peer-urls http://0.0.0.0:2380 -initial-cluster-token etcd-cluster -initial-cluster "etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380" -initial-cluster-state new
    volumes:
      - "/var/docker/etcd/etcd1/data:/opt/etcds/etcd1/data"
    ports:
      - "23791:2379"
      - "23801:2380"
    networks:
      - byfn
  etcd2:
    image: quay.io/coreos/etcd
    container_name: etcd2
    command: etcd -name etcd2 -data-dir /opt/etcds/etcd2/data -advertise-client-urls http://etcd2:2379 -listen-client-urls http://0.0.0.0:2379 -initial-advertise-peer-urls http://etcd2:2380 -listen-peer-urls http://0.0.0.0:2380 -initial-cluster-token etcd-cluster -initial-cluster "etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380" -initial-cluster-state new
    volumes:
      - "/var/docker/etcd/etcd2/data:/opt/etcds/etcd2/data"
    ports:
      - "23792:2379"
      - "23802:2380"
    networks:
      - byfn
  etcd3:
    image: quay.io/coreos/etcd
    container_name: etcd3
    command: etcd -name etcd3 -data-dir /opt/etcds/etcd3/data -advertise-client-urls http://etcd3:2379 -listen-client-urls http://0.0.0.0:2379 -initial-advertise-peer-urls http://etcd3:2380 -listen-peer-urls http://0.0.0.0:2380 -initial-cluster-token etcd-cluster -initial-cluster "etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380" -initial-cluster-state new
    volumes:
      - "/var/docker/etcd/etcd3/data:/opt/etcds/etcd3/data"
    ports:
      - "23793:2379"
      - "23803:2380"
    networks:
      - byfn
  etcdkeeper:
    container_name: etcdkeeper
    image: deltaprojects/etcdkeeper
    ports:
      - "8080:8080"
    networks:
      - byfn
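Every etcd member repeats the same -initial-cluster string. Each member's own entry in that string must agree with its -name and -initial-advertise-peer-urls, otherwise bootstrapping fails. The sketch below builds both from the member names, so a fourth node cannot drift out of sync. The helpers are hypothetical and assume each member is reachable under its compose service name on the default ports 2379/2380.

```shell
# Hypothetical helper: etcd_initial_cluster NAME...
# Builds "n1=http://n1:2380,n2=http://n2:2380,..." for -initial-cluster.
etcd_initial_cluster() {
  local out="" name
  for name in "$@"; do
    out="${out}${out:+,}${name}=http://${name}:2380"
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: etcd_member_flags NAME
# Per-member flags whose URLs match that member's entry in -initial-cluster.
etcd_member_flags() {
  printf '%s\n' "-name $1 -initial-advertise-peer-urls http://$1:2380 -advertise-client-urls http://$1:2379"
}
```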

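Notes (NSQ):

- Ports 4151 and 4150 must be opened as TCP ports; 4171 does not need to be.

I'm not pasting the config files here; they are on the official website.

Open whichever ports you need. In my setup all three images run on the same server.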

10. easy-mock setup

Note: redis and mongo are not set up together with easy-mock here; easy-mock connects to them on external servers.

docker run -d -p 7300:7300 --name easy-mock --privileged=true -v /opt/serverData/easy-mock/production.json:/home/easy-mock/easy-mock/config/production.json -v /opt/serverData/easy-mock/logs/:/home/easy-mock/easy-mock/logs docker.io/easymock/easymock:1.6.0 /bin/bash -c "npm start"

11. mongo

Without authentication:

docker run -d --name mongo -v /opt/mongo/data:/data/db -v /opt/mongo/logs/:/data/mongodb/logs -v /opt/mongo/conf:/data/configdb -p 27017:27017 mongo

With authentication (afterwards, enter the container and create users through the admin database):

docker run -d --name mongo -v /opt/mongo/data:/data/db -v /opt/mongo/logs/:/data/mongodb/logs -v /opt/mongo/conf:/data/configdb -p 27017:27017 mongo --auth
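With --auth, the server starts with no users. MongoDB's localhost exception then allows the first user to be created from inside the container. The sketch below assumes the container is named mongo, as above, and that the image ships mongosh (older images only have the legacy mongo shell). create_mongo_admin is a hypothetical helper. Alternatively, the official image can create a root user on first start from the MONGO_INITDB_ROOT_USERNAME and MONGO_INITDB_ROOT_PASSWORD environment variables.

```shell
# Hypothetical helper: create_mongo_admin CONTAINER USER PASSWORD
# Runs mongosh inside the container against the admin database and creates
# a user with the root role (allowed while no users exist yet).
create_mongo_admin() {
  local container="$1" user="$2" pass="$3"
  docker exec -i "$container" mongosh admin --quiet --eval \
    "db.createUser({user: '$user', pwd: '$pass', roles: [{role: 'root', db: 'admin'}]})"
}
```

Example: `create_mongo_admin mongo admin 'change-me'`, then log in with `mongosh -u admin -p --authenticationDatabase admin`.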

12. prometheus

If you don't know how to write the config file, run a demo container first and then copy the file out of it:

docker cp demo:/etc/prometheus/prometheus.yml /data/prometheus

Demo config file:

# my global config

global:

scrape_interval: 1m # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 1m # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

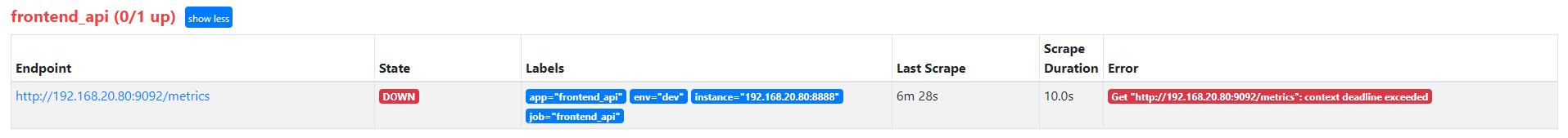

- job_name: 'frontend_api' # 以下是 自定义配置

static_configs:

- targets: ['192.168.20.80:9092']

labels:

job: frontend_api

app: frontend_api

env: dev

instance: 192.168.60.80:8888

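Once the custom job is configured, its target may show an error. That happens when the target service is down: Prometheus actively pulls (scrapes) metrics from the target, so the scrape fails.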

Start with configuration hot reloading enabled (--web.enable-lifecycle):

docker run -d --name=prometheus -p 9090:9090 \
-v /data/prometheus/conf/prometheus.yml:/etc/prometheus/prometheus.yml \
-v /data/prometheus/prometheus:/prometheus prom/prometheus \
--config.file=/etc/prometheus/prometheus.yml \
--storage.tsdb.path=/prometheus \
--web.console.libraries=/usr/share/prometheus/console_libraries \
--web.console.templates=/usr/share/prometheus/consoles \
--web.enable-lifecycle
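With --web.enable-lifecycle, Prometheus reloads its configuration when it receives an HTTP POST to /-/reload. Edits to the mounted prometheus.yml can therefore be applied without a restart. The sketch below defines two hypothetical helpers: one validates the file with promtool, which ships in the prom/prometheus image, and the other triggers the reload. The URL and path defaults are assumptions matching the command above. Be aware that editors which save by replacing the file can leave a single-file bind mount showing the old content; mounting the directory instead avoids that.

```shell
# Hypothetical helper: check_prometheus_config [HOST_CONFIG]
# Validates the config with promtool from the prom/prometheus image.
check_prometheus_config() {
  local cfg="${1:-/data/prometheus/conf/prometheus.yml}"
  docker run --rm -v "$cfg:/etc/prometheus/prometheus.yml:ro" \
    --entrypoint promtool prom/prometheus check config /etc/prometheus/prometheus.yml
}

# Hypothetical helper: reload_prometheus [BASE_URL]
# Asks a running Prometheus (started with --web.enable-lifecycle) to re-read its config.
reload_prometheus() {
  local base="${1:-http://localhost:9090}"
  curl -fsS -X POST "$base/-/reload"
}
```

Example: `check_prometheus_config && reload_prometheus`.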

Basic start command (config file only, no hot reload):

docker run -d -p 9090:9090 --name=prometheus -v /data/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus

13. Starting jaeger with Docker

docker run -d --name jaeger \
-e COLLECTOR_ZIPKIN_HTTP_PORT=9411 \
-p 5775:5775/udp \
-p 6831:6831/udp \
-p 6832:6832/udp \
-p 5778:5778 \
-p 16686:16686 \
-p 14268:14268 \
-p 9411:9411 \
jaegertracing/all-in-one:1.12

Once it is running, the UI is available on port 16686.

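When you don't know which directories to mount or what a config file should look like, use the following approach first:

1. Start a throwaway container from the image you need:

docker run -d --name [container name] --rm [image]

2. Enter the container and find where its config, data, and log directories are:

docker exec -it [container name] bash   # or /bin/bash

Avoid alpine variants for this step: they don't include bash (use sh instead).

3. Copy the directories and config files you want to mount out to the host:

docker cp [container name]:/etc/mysql.conf /opt/data/mysql/conf/mysql.conf   # copy a single file

docker cp [container name]:/data /opt/data/.../data   # copy a directory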
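Step 3 can also be done without starting the service at all. `docker create` makes a container from an image without running it, and `docker cp` can copy files out of such a stopped container. Since nothing has to run inside the container, this also works for alpine images. The sketch below wraps the three commands. copy_defaults and the temporary container name defaults-<pid> are hypothetical.

```shell
# Hypothetical helper: copy_defaults IMAGE CONTAINER_DIR HOST_DIR
# Creates (but does not start) a container from IMAGE, copies the contents
# of CONTAINER_DIR into HOST_DIR, and removes the container again.
copy_defaults() {
  local image="$1" src="$2" dest="$3" tmp="defaults-$$" rc=0
  mkdir -p "$dest"
  docker create --name "$tmp" "$image" >/dev/null
  # "SRC/." copies the directory's contents instead of the directory itself.
  docker cp "$tmp:$src/." "$dest" || rc=$?
  docker rm "$tmp" >/dev/null
  return "$rc"
}
```

Example: `copy_defaults mysql /etc/mysql /opt/serverData/mysql/conf` fills the host conf directory before the first real `docker run`.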
