Faster R-CNN Code Walkthrough (1): Network Architecture (RPN Layer)

This walkthrough is based on a TensorFlow implementation of Faster R-CNN. GitHub repository:

https://github.com/endernewton/tf-faster-rcnn

For the theory behind the algorithm, see this Zhihu article:

https://zhuanlan.zhihu.com/p/24916624?refer=xiaoleimlnote

This article also draws on:

https://blog.csdn.net/u013010889/article/details/78574879

ROI handling and the loss functions are covered in part two:

https://blog.csdn.net/yangchengtest/article/details/80642949

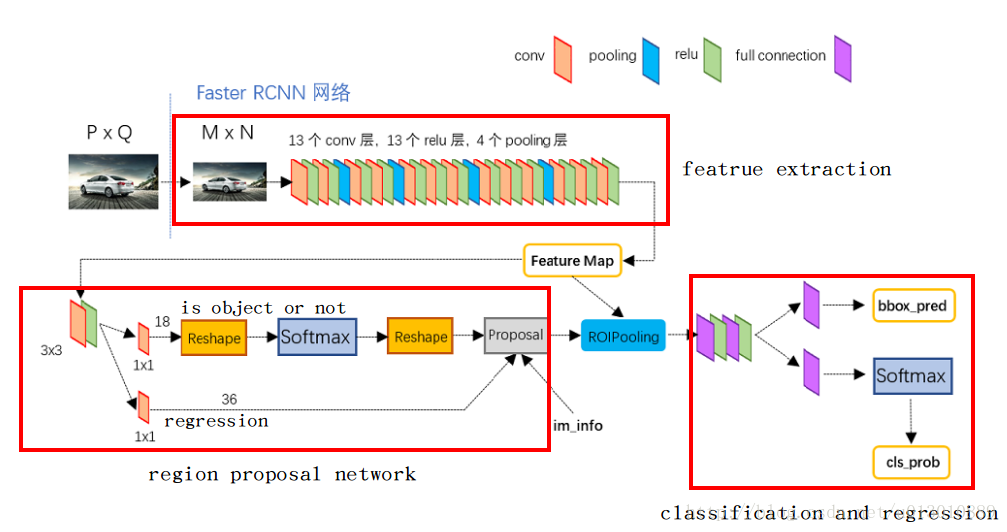

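Overall framework:

Call chain for the network code:

trainval_net(main.train_val)->train_val(train_model.construct_graph)->network(create_architecture)

Convolutional layers

The currently supported backbones are VGG16 and ResNet.

Each backbone builds its convolutional layers by overriding the parent class's _image_to_head method.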

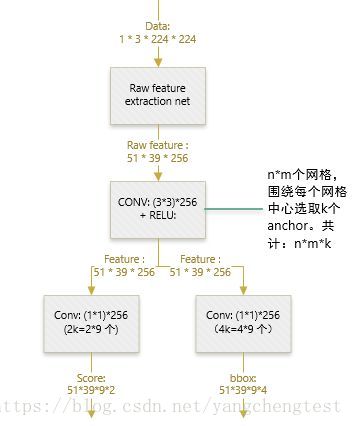

RPN implementation

ANCHOR:

network(_build_network)->network(_anchor_component)->snippets(generate_anchors_pre)

generate_anchors(generate_anchors) produces the 9 base anchors.

The whole procedure for building the base anchors lives in that file; the key part is:

    def _ratio_enum(anchor, ratios):
        """
        Enumerate a set of anchors for each aspect ratio wrt an anchor.
        """
        w, h, x_ctr, y_ctr = _whctrs(anchor)
        size = w * h
        size_ratios = size / ratios
        ws = np.round(np.sqrt(size_ratios))
        hs = np.round(ws * ratios)
        anchors = _mkanchors(ws, hs, x_ctr, y_ctr)
        return anchors

Once the base anchors exist, the feature-map height and width are derived from the image size and the feature stride:

    height = tf.to_int32(tf.ceil(self._im_info[0] / np.float32(self._feat_stride[0])))
    width = tf.to_int32(tf.ceil(self._im_info[1] / np.float32(self._feat_stride[0])))

snippets(generate_anchors_pre) then builds an H*W grid of shifts:

    shift_x = np.arange(0, width) * feat_stride
    shift_y = np.arange(0, height) * feat_stride
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)

These shifts are combined with the 9 base anchors:

    anchors = anchors.reshape((1, A, 4)) + shifts.reshape((1, K, 4)).transpose((1, 0, 2))
    anchors = anchors.reshape((K * A, 4)).astype(np.float32, copy=False)
    length = np.int32(anchors.shape[0])

The result is an (H*W*9, 4) array of anchors, which is stored in attributes of the network object:

    self._anchors = anchors
    self._anchor_length = anchor_length
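The anchor pipeline described above can be condensed into a short NumPy sketch. This is not the repository's code: `make_base_anchors` and `tile_anchors` are hypothetical helper names, and the defaults (base size 16, ratios 0.5/1/2, scales 8/16/32, stride 16) are assumed values chosen to give the 9 base anchors mentioned in the text.

```python
import numpy as np

def make_base_anchors(base_size=16, ratios=(0.5, 1.0, 2.0), scales=(8, 16, 32)):
    """Return len(ratios) * len(scales) anchors as (x1, y1, x2, y2) around one cell."""
    ctr = (base_size - 1) / 2.0            # centre of the reference cell
    area = float(base_size * base_size)
    anchors = []
    for r in ratios:
        # Same rounding as _ratio_enum: keep the area, change the aspect ratio.
        w = np.round(np.sqrt(area / r))
        h = np.round(w * r)
        for s in scales:
            ws, hs = w * s, h * s
            anchors.append([ctr - 0.5 * (ws - 1), ctr - 0.5 * (hs - 1),
                            ctr + 0.5 * (ws - 1), ctr + 0.5 * (hs - 1)])
    return np.array(anchors, dtype=np.float32)

def tile_anchors(base_anchors, height, width, feat_stride=16):
    """Shift the base anchors to every cell of a height x width feature map."""
    shift_x, shift_y = np.meshgrid(np.arange(width) * feat_stride,
                                   np.arange(height) * feat_stride)
    shifts = np.stack([shift_x.ravel(), shift_y.ravel(),
                       shift_x.ravel(), shift_y.ravel()], axis=1)   # (K, 4)
    A, K = base_anchors.shape[0], shifts.shape[0]
    # Broadcast (1, A, 4) + (K, 1, 4) -> (K, A, 4), then flatten to (K * A, 4).
    all_anchors = base_anchors.reshape(1, A, 4) + shifts.reshape(K, 1, 4)
    return all_anchors.reshape(K * A, 4).astype(np.float32)

base = make_base_anchors()
anchors = tile_anchors(base, height=38, width=50)
print(base.shape, anchors.shape)
```

With a 38x50 feature map this gives 38 * 50 * 9 = 17100 anchors, which matches the (H*W*9, 4) shape described above.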

The network structure itself is implemented in network(_region_proposal):

    rpn = slim.conv2d(net_conv, cfg.RPN_CHANNELS, [3, 3], trainable=is_training, weights_initializer=initializer,
                      scope="rpn_conv/3x3")
    self._act_summaries.append(rpn)
    rpn_cls_score = slim.conv2d(rpn, self._num_anchors * 2, [1, 1], trainable=is_training,
                                weights_initializer=initializer,
                                padding='VALID', activation_fn=None, scope='rpn_cls_score')
    # change it so that the score has 2 as its channel size
    rpn_cls_score_reshape = self._reshape_layer(rpn_cls_score, 2, 'rpn_cls_score_reshape')
    rpn_cls_prob_reshape = self._softmax_layer(rpn_cls_score_reshape, "rpn_cls_prob_reshape")
    rpn_cls_pred = tf.argmax(tf.reshape(rpn_cls_score_reshape, [-1, 2]), axis=1, name="rpn_cls_pred")
    rpn_cls_prob = self._reshape_layer(rpn_cls_prob_reshape, self._num_anchors * 2, "rpn_cls_prob")
    rpn_bbox_pred = slim.conv2d(rpn, self._num_anchors * 4, [1, 1], trainable=is_training,
                                weights_initializer=initializer,
                                padding='VALID', activation_fn=None, scope='rpn_bbox_pred')

anchor_target_layer

    rpn_labels, rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights = tf.py_func(
        anchor_target_layer,
        [rpn_cls_score, self._gt_boxes, self._im_info, self._feat_stride, self._anchors, self._num_anchors],
        [tf.float32, tf.float32, tf.float32, tf.float32],
        name="anchor_target")

This function takes the ground-truth boxes and the RPN scores as input and converts them into training labels and regression targets for the RPN.

The code is in anchor_target_layer(anchor_target_layer).

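Sample labeling:

a. For each annotated ground-truth box, the anchor with the highest overlap is marked as a positive sample (this guarantees that every ground-truth box has at least one positive anchor).

b. Among the anchors left over from (a), an anchor whose overlap with some ground-truth box is greater than 0.7 is marked positive (one ground-truth box may have several positive anchors, but each positive anchor corresponds to only one ground-truth box); an anchor whose overlap with every ground-truth box is below 0.3 is marked negative.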
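To make the two rules concrete, here is a minimal NumPy sketch of the overlap matrix and the labeling. The helper names `iou_matrix` and `label_anchors` are hypothetical (the repository uses a compiled bbox_overlaps routine), boxes are assumed to be inclusive pixel coordinates (x1, y1, x2, y2), and the 0.7 and 0.3 thresholds are passed in as plain arguments.

```python
import numpy as np

def iou_matrix(anchors, gt_boxes):
    """IoU between every anchor (N, 4) and every ground-truth box (M, >=4)."""
    a = anchors[:, None, :]        # (N, 1, 4)
    g = gt_boxes[None, :, :4]      # (1, M, 4), drops a class column if present
    iw = np.minimum(a[..., 2], g[..., 2]) - np.maximum(a[..., 0], g[..., 0]) + 1
    ih = np.minimum(a[..., 3], g[..., 3]) - np.maximum(a[..., 1], g[..., 1]) + 1
    inter = np.clip(iw, 0, None) * np.clip(ih, 0, None)
    area_a = (a[..., 2] - a[..., 0] + 1) * (a[..., 3] - a[..., 1] + 1)
    area_g = (g[..., 2] - g[..., 0] + 1) * (g[..., 3] - g[..., 1] + 1)
    return inter / (area_a + area_g - inter)

def label_anchors(overlaps, pos_thresh=0.7, neg_thresh=0.3):
    """Return 1 (positive), 0 (negative) or -1 (ignored) for each anchor."""
    labels = np.full(overlaps.shape[0], -1, dtype=np.float32)
    max_overlaps = overlaps.max(axis=1)          # best IoU per anchor
    labels[max_overlaps < neg_thresh] = 0        # negatives first
    gt_max = overlaps.max(axis=0)                # best IoU per ground-truth box
    labels[np.where(overlaps == gt_max)[0]] = 1  # rule (a)
    labels[max_overlaps >= pos_thresh] = 1       # rule (b)
    return labels

example_anchors = np.array([[0, 0, 9, 9], [0, 0, 9, 4], [20, 20, 29, 29],
                            [5, 0, 14, 9], [40, 40, 49, 49]], dtype=np.float64)
example_gt = np.array([[0, 0, 9, 9, 1], [20, 20, 24, 24, 2]], dtype=np.float64)
overlaps = iou_matrix(example_anchors, example_gt)
labels = label_anchors(overlaps)
print(labels)
```

The third anchor only reaches an IoU of 0.25 with the second ground-truth box, so it would be a negative under rule (b) alone; rule (a) turns it into a positive because no other anchor matches that box better.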

The main code is:

    # label: 1 is positive, 0 is negative, -1 is dont care
    labels = np.empty((len(inds_inside),), dtype=np.float32)
    labels.fill(-1)

    # overlaps between the anchors and the gt boxes
    # overlaps (ex, gt)
    overlaps = bbox_overlaps(
        np.ascontiguousarray(anchors, dtype=np.float),
        np.ascontiguousarray(gt_boxes, dtype=np.float))
    argmax_overlaps = overlaps.argmax(axis=1)
    max_overlaps = overlaps[np.arange(len(inds_inside)), argmax_overlaps]
    gt_argmax_overlaps = overlaps.argmax(axis=0)
    gt_max_overlaps = overlaps[gt_argmax_overlaps,
                               np.arange(overlaps.shape[1])]
    gt_argmax_overlaps = np.where(overlaps == gt_max_overlaps)[0]

    if not cfg.TRAIN.RPN_CLOBBER_POSITIVES:
        # assign bg labels first so that positive labels can clobber them
        # first set the negatives
        labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

    # fg label: for each gt, anchor with highest overlap
    labels[gt_argmax_overlaps] = 1

    # fg label: above threshold IOU
    labels[max_overlaps >= cfg.TRAIN.RPN_POSITIVE_OVERLAP] = 1

    if cfg.TRAIN.RPN_CLOBBER_POSITIVES:
        # assign bg labels last so that negative labels can clobber positives
        labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

In addition, at most 256 anchors are sampled, and the numbers of positive and negative samples are capped:

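    # Max number of foreground examples
    __C.TRAIN.RPN_FG_FRACTION = 0.5
    # Total number of examples
    __C.TRAIN.RPN_BATCHSIZE = 256

The regression targets are then computed for each anchor against the ground-truth box it overlaps most:

    bbox_targets = _compute_targets(anchors, gt_boxes[argmax_overlaps, :])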

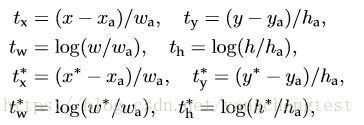

Faster R-CNN bounding-box transform formula:

"For bounding box regression, we adopt the parameterizations of the 4 coordinates following"

The regression offsets are computed with the formula above.
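For reference, the parameterization from the Faster R-CNN paper that the quote introduces can be written out in LaTeX as below. Here x, y are the box center and w, h its width and height; the plain, a-subscripted and starred symbols stand for the predicted box, the anchor and the ground-truth box respectively.

```latex
\begin{aligned}
t_x &= (x - x_a)/w_a,       & t_y &= (y - y_a)/h_a, \\
t_w &= \log(w/w_a),         & t_h &= \log(h/h_a), \\
t_x^* &= (x^* - x_a)/w_a,   & t_y^* &= (y^* - y_a)/h_a, \\
t_w^* &= \log(w^*/w_a),     & t_h^* &= \log(h^*/h_a)
\end{aligned}
```

The starred offsets are the regression targets built from the ground truth; the unstarred ones are what the network predicts.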

    # only the positive ones have regression targets
    bbox_inside_weights[labels == 1, :] = np.array(cfg.TRAIN.RPN_BBOX_INSIDE_WEIGHTS)

    # (config default)
    __C.TRAIN.RPN_BBOX_INSIDE_WEIGHTS = (1.0, 1.0, 1.0, 1.0)

    if cfg.TRAIN.RPN_POSITIVE_WEIGHT < 0:
        # uniform weighting of examples (given non-uniform sampling)
        num_examples = np.sum(labels >= 0)
        positive_weights = np.ones((1, 4)) * 1.0 / num_examples
        negative_weights = np.ones((1, 4)) * 1.0 / num_examples
    else:
        assert ((cfg.TRAIN.RPN_POSITIVE_WEIGHT > 0) &
                (cfg.TRAIN.RPN_POSITIVE_WEIGHT < 1))
        positive_weights = (cfg.TRAIN.RPN_POSITIVE_WEIGHT /
                            np.sum(labels == 1))
        negative_weights = ((1.0 - cfg.TRAIN.RPN_POSITIVE_WEIGHT) /
                            np.sum(labels == 0))
    bbox_outside_weights[labels == 1, :] = positive_weights
    bbox_outside_weights[labels == 0, :] = negative_weights

Default value:

    __C.TRAIN.RPN_POSITIVE_WEIGHT = -1.0
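As a small worked example of the weighting logic, the sketch below reproduces both branches in a standalone function. `rpn_outside_weights` is a hypothetical name, and the label vector is made-up illustration data; with the default of -1.0, every sampled anchor simply gets a weight of 1 / (number of sampled anchors).

```python
import numpy as np

def rpn_outside_weights(labels, positive_weight=-1.0):
    """Per-anchor outside weights, shape (N, 4); ignored anchors (-1) stay at 0."""
    weights = np.zeros((labels.shape[0], 4), dtype=np.float32)
    num_pos = np.sum(labels == 1)
    num_neg = np.sum(labels == 0)
    if positive_weight < 0:
        # Uniform weighting over all sampled anchors.
        pos_w = neg_w = 1.0 / (num_pos + num_neg)
    else:
        # Fixed share of the total weight for positives vs. negatives.
        pos_w = positive_weight / num_pos
        neg_w = (1.0 - positive_weight) / num_neg
    weights[labels == 1, :] = pos_w
    weights[labels == 0, :] = neg_w
    return weights

example_labels = np.array([1, 1, 0, 0, 0, 0, 0, 0, -1, -1])
weights = rpn_outside_weights(example_labels)
print(weights[0], weights[2], weights[8])
```

Here 2 positives and 6 negatives are sampled, so each of them is weighted 1/8 and the two ignored anchors contribute nothing.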

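_proposal_layer

Next, the bounding-box prediction:

    rois, roi_scores = self._proposal_layer(rpn_cls_prob, rpn_bbox_pred, "rois")

Bounding-box transform formula:

In the formulas, x is the predicted coordinate, x_a is the anchor coordinate (a preset, fixed value), and x* is the ground-truth coordinate (from the annotations); y, w and h follow the same pattern, and the t variables are the offsets. Rearranging the first two formulas gives the expressions needed here:

x = t_x * w_a + x_a

y = t_y * h_a + y_a

Likewise:

w = exp(t_w) * w_a

h = exp(t_h) * h_a

These formulas convert the predicted bounding-box offsets into actual coordinates.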

    proposals = bbox_transform_inv_tf(anchors, rpn_bbox_pred)

The actual code is implemented in bbox_transform(bbox_transform_inv_tf).
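The encode and decode directions of these formulas can be checked against each other with a small NumPy round trip. This is a sketch, not the repository's bbox_transform code: `encode` and `decode` are hypothetical names, and for simplicity the width is assumed to be x2 - x1 (no "+1" pixel convention), which may differ from the repository.

```python
import numpy as np

def to_center(boxes):
    """Convert (x1, y1, x2, y2) boxes to centre x, centre y, width, height."""
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]
    return boxes[:, 0] + 0.5 * w, boxes[:, 1] + 0.5 * h, w, h

def encode(anchors, boxes):
    """Offsets (t_x, t_y, t_w, t_h) of boxes relative to anchors."""
    xa, ya, wa, ha = to_center(anchors)
    x, y, w, h = to_center(boxes)
    return np.stack([(x - xa) / wa, (y - ya) / ha,
                     np.log(w / wa), np.log(h / ha)], axis=1)

def decode(anchors, deltas):
    """Inverse of encode: apply offsets to anchors and return corner boxes."""
    xa, ya, wa, ha = to_center(anchors)
    x = deltas[:, 0] * wa + xa
    y = deltas[:, 1] * ha + ya
    w = np.exp(deltas[:, 2]) * wa
    h = np.exp(deltas[:, 3]) * ha
    return np.stack([x - 0.5 * w, y - 0.5 * h, x + 0.5 * w, y + 0.5 * h], axis=1)

example_anchors = np.array([[0.0, 0.0, 15.0, 15.0], [8.0, 8.0, 40.0, 24.0]])
example_gt = np.array([[2.0, 3.0, 20.0, 18.0], [10.0, 4.0, 30.0, 30.0]])
deltas = encode(example_anchors, example_gt)
restored = decode(example_anchors, deltas)
print(np.allclose(restored, example_gt))
```

Decoding all-zero offsets returns the anchors unchanged, which is a quick sanity check when debugging a regression head.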

Next, TensorFlow's tf.image.non_max_suppression applies NMS to the proposals based on their scores.

    indices = tf.image.non_max_suppression(proposals, scores, max_output_size=post_nms_topN, iou_threshold=nms_thresh)
    boxes = tf.gather(proposals, indices)
    boxes = tf.to_float(boxes)
    scores = tf.gather(scores, indices)
    scores = tf.reshape(scores, shape=(-1, 1))

Default parameters:

    __C.TRAIN.RPN_NMS_THRESH = 0.7
    __C.TRAIN.RPN_POST_NMS_TOP_N = 2000
    __C.TEST.RPN_NMS_THRESH = 0.7
    __C.TEST.RPN_POST_NMS_TOP_N = 300
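To show what the NMS step does with an IoU threshold and a top-N cap like the ones above, here is a greedy NMS sketch in plain NumPy. It is an illustration of the general technique under the assumption that boxes with IoU strictly above the threshold are suppressed; `greedy_nms` is a hypothetical name and this is not TensorFlow's implementation.

```python
import numpy as np

def greedy_nms(boxes, scores, iou_threshold=0.7, max_output_size=300):
    """Return indices of kept boxes, highest score first."""
    x1, y1, x2, y2 = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(-scores)          # candidates sorted by descending score
    keep = []
    while order.size > 0 and len(keep) < max_output_size:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # IoU of the selected box with every remaining candidate.
        iw = np.clip(np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]), 0, None)
        ih = np.clip(np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]), 0, None)
        inter = iw * ih
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= iou_threshold]   # drop heavily overlapping boxes
    return keep

example_boxes = np.array([[0, 0, 10, 10], [1, 0, 11, 10],
                          [20, 20, 30, 30], [0, 0, 10, 5]], dtype=np.float64)
example_scores = np.array([0.9, 0.8, 0.7, 0.6])
kept = greedy_nms(example_boxes, example_scores)
print(kept)
```

The second box overlaps the first with an IoU of about 0.82, so it is dropped; the fourth box overlaps it with an IoU of 0.5 and survives.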

    # Only support single image as input
    batch_inds = tf.zeros((tf.shape(indices)[0], 1), dtype=tf.float32)
    blob = tf.concat([batch_inds, boxes], 1)

_proposal_target_layer

proposal_layer yields the scores and actual coordinates of the proposals that survive NMS.

    rois, roi_scores = self._proposal_layer(rpn_cls_prob, rpn_bbox_pred, "rois")
    rois, _ = self._proposal_target_layer(rois, roi_scores, "rpn_rois")

    rois, roi_scores, labels, bbox_targets, bbox_inside_weights, bbox_outside_weights = tf.py_func(
        proposal_target_layer,
        [rois, roi_scores, self._gt_boxes, self._num_classes],
        [tf.float32, tf.float32, tf.float32, tf.float32, tf.float32, tf.float32],
        name="proposal_target")

Now for what proposal_target_layer does:

    # Minibatch size (number of regions of interest [ROIs])
    __C.TRAIN.BATCH_SIZE = 128
    # Fraction of minibatch that is labeled foreground (i.e. class > 0)
    __C.TRAIN.FG_FRACTION = 0.25

    rois_per_image = cfg.TRAIN.BATCH_SIZE / num_images
    fg_rois_per_image = np.round(cfg.TRAIN.FG_FRACTION * rois_per_image)

    # Sample rois with classification labels and bounding box regression
    # targets
    labels, rois, roi_scores, bbox_targets, bbox_inside_weights = _sample_rois(
        all_rois, all_scores, gt_boxes, fg_rois_per_image,
        rois_per_image, _num_classes)

    # Overlap threshold for a ROI to be considered foreground (if >= FG_THRESH)
    __C.TRAIN.FG_THRESH = 0.5
    # Overlap threshold for a ROI to be considered background (class = 0 if
    # overlap in [LO, HI))
    __C.TRAIN.BG_THRESH_HI = 0.5
    __C.TRAIN.BG_THRESH_LO = 0.1

    # Select foreground RoIs as those with >= FG_THRESH overlap
    fg_inds = np.where(max_overlaps >= cfg.TRAIN.FG_THRESH)[0]
    # Guard against the case when an image has fewer than fg_rois_per_image
    # Select background RoIs as those within [BG_THRESH_LO, BG_THRESH_HI)
    bg_inds = np.where((max_overlaps < cfg.TRAIN.BG_THRESH_HI) &
                       (max_overlaps >= cfg.TRAIN.BG_THRESH_LO))[0]

Sampling then proceeds according to these thresholds.

PS: That is an awful lot of thresholds.
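How the thresholds interact is easier to see in a simplified sampling sketch. `sample_roi_indices` is a hypothetical helper, not the repository's _sample_rois: it assumes sampling without replacement and does not pad when too few candidates exist, which the real code may handle differently.

```python
import numpy as np

def sample_roi_indices(max_overlaps, rois_per_image=128, fg_fraction=0.25,
                       fg_thresh=0.5, bg_thresh_hi=0.5, bg_thresh_lo=0.1, rng=None):
    """Pick foreground and background RoI indices from per-RoI best IoU values."""
    rng = np.random.default_rng() if rng is None else rng
    fg_inds = np.where(max_overlaps >= fg_thresh)[0]
    bg_inds = np.where((max_overlaps < bg_thresh_hi) &
                       (max_overlaps >= bg_thresh_lo))[0]
    # At most fg_fraction of the minibatch is foreground; the rest is background.
    fg_target = int(round(fg_fraction * rois_per_image))
    n_fg = min(fg_target, fg_inds.size)
    n_bg = min(rois_per_image - n_fg, bg_inds.size)
    fg_keep = rng.choice(fg_inds, size=n_fg, replace=False)
    bg_keep = rng.choice(bg_inds, size=n_bg, replace=False)
    return fg_keep, bg_keep

example_overlaps = np.linspace(0.0, 0.999, 1000)   # made-up best-IoU values
fg_keep, bg_keep = sample_roi_indices(example_overlaps, rng=np.random.default_rng(0))
print(len(fg_keep), len(bg_keep))
```

With the defaults shown above (128 RoIs, 25% foreground) this selects 32 foreground and 96 background RoIs whenever enough candidates are available.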

    bbox_target_data = _compute_targets(
        rois[:, 1:5], gt_boxes[gt_assignment[keep_inds], :4], labels)

    # Normalize the targets using "precomputed" (or made up) means and stdevs
    # (BBOX_NORMALIZE_TARGETS must also be True)
    __C.TRAIN.BBOX_NORMALIZE_TARGETS_PRECOMPUTED = True
    __C.TRAIN.BBOX_NORMALIZE_MEANS = (0.0, 0.0, 0.0, 0.0)
    __C.TRAIN.BBOX_NORMALIZE_STDS = (0.1, 0.1, 0.2, 0.2)

    targets = ((targets - np.array(cfg.TRAIN.BBOX_NORMALIZE_MEANS))
               / np.array(cfg.TRAIN.BBOX_NORMALIZE_STDS))

Unlike anchor_target, the targets are also normalized here.

    bbox_targets, bbox_inside_weights = \
        _get_bbox_regression_labels(bbox_target_data, num_classes)

    def _get_bbox_regression_labels(bbox_target_data, num_classes):
        """Bounding-box regression targets (bbox_target_data) are stored in a
        compact form N x (class, tx, ty, tw, th)

        This function expands those targets into the 4-of-4*K representation used
        by the network (i.e. only one class has non-zero targets).

        Returns:
            bbox_target (ndarray): N x 4K blob of regression targets
            bbox_inside_weights (ndarray): N x 4K blob of loss weights
        """

As in anchor_target, the inside_weights are [1.0, 1.0, 1.0, 1.0].

The RPN layer finally returns:

    rois = self._region_proposal(net_conv, is_training, initializer)

rois are the proposals that remain after proposal_layer and the sampling in proposal_target_layer.
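Looking back at _get_bbox_regression_labels, the expansion from the compact N x (class, tx, ty, tw, th) form to the N x 4K form described in its docstring can be sketched as follows. `expand_bbox_targets` is a hypothetical name, and the body is an assumption reconstructed from the docstring, not the repository's implementation.

```python
import numpy as np

def expand_bbox_targets(bbox_target_data, num_classes,
                        inside_weights=(1.0, 1.0, 1.0, 1.0)):
    """Expand N x 5 compact targets into N x 4K targets and inside weights."""
    n = bbox_target_data.shape[0]
    bbox_targets = np.zeros((n, 4 * num_classes), dtype=np.float32)
    bbox_inside_weights = np.zeros_like(bbox_targets)
    classes = bbox_target_data[:, 0].astype(int)
    # Only foreground rows (class > 0) get non-zero targets, in their class slot.
    for i in np.where(classes > 0)[0]:
        start = 4 * classes[i]
        bbox_targets[i, start:start + 4] = bbox_target_data[i, 1:]
        bbox_inside_weights[i, start:start + 4] = inside_weights
    return bbox_targets, bbox_inside_weights

compact = np.array([[2, 0.1, 0.2, 0.3, 0.4],    # foreground RoI of class 2
                    [0, 0.0, 0.0, 0.0, 0.0]])   # background RoI
expanded_targets, expanded_weights = expand_bbox_targets(compact, num_classes=3)
print(expanded_targets.shape)
```

For the class-2 row only columns 8 to 11 are filled, so a per-class regression loss multiplied by the inside weights ignores every other class and every background RoI.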
