1. Environment preparation

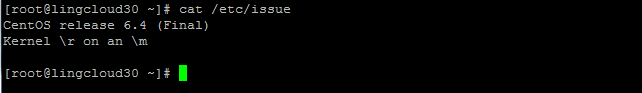

Linux: CentOS release 6.4 (Final). Download: http://www.centos.org/

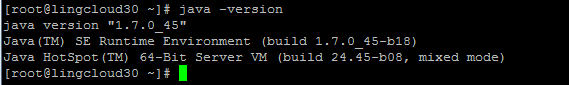

JDK: 1.7.0_45. Download: http://www.oracle.com/technetwork/java/javase/downloads/index.html

HBase: hbase-0.98.8-hadoop2. Download: http://mirrors.gigenet.com/apache/hbase/stable/

2. Configuration steps:

2.1 Install the JDK, and do not forget to update /etc/profile (vim /etc/profile).
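As a rough sketch, the lines appended to /etc/profile might look like the following. The JDK path is the one this post uses later in hbase-env.sh; if your JDK lives elsewhere, adjust it. Run `source /etc/profile` afterwards so the current shell picks up the change.

```shell
# Lines to append to /etc/profile.
# Assumption: the JDK is unpacked at the path used in hbase-env.sh below.
export JAVA_HOME=/usr/lib/jdk/jdk1.7.0_45
export PATH="$JAVA_HOME/bin:$PATH"
```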

2.2 Passwordless SSH login
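For readers who want a starting point, here is a minimal sketch of key-based login from the master to the worker nodes. The helper names (`run`, `setup_passwordless_ssh`) are made up for illustration, and logging in as `root` is an assumption based on the `hadoop.proxyuser.root` settings later in this post. By default the script only prints the commands it would run.

```shell
# Hypothetical helper: print (or run) the commands that set up key-based SSH
# from the master to every worker node. Host names are the ones used in this post.
NODES="lingcloud29 lingcloud31 lingcloud32"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_passwordless_ssh() {
    # Generate an RSA key pair with an empty passphrase if none exists yet.
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
    fi
    # Append the public key to authorized_keys on each node (asks for the password once).
    for node in $NODES; do
        run ssh-copy-id "root@$node"   # assumption: the cluster runs as root
    done
}

setup_passwordless_ssh
```

Set `DRY_RUN=0` to actually execute the commands; afterwards `ssh root@lingcloud29` should log in without a password prompt.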

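Note: configuring the JDK environment and passwordless SSH login rarely causes problems and is well documented online, so I will not go into detail here.

2.3 Hadoop environment configuration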

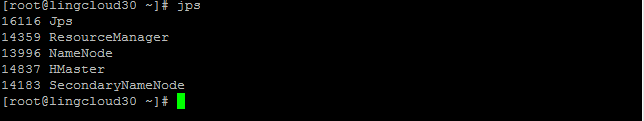

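The servers in my distributed setup:

ip30: lingcloud30 (this server has relatively low specs, so it acts as the NameNode and also the SecondaryNameNode, and as the HMaster in the HBase configuration below)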

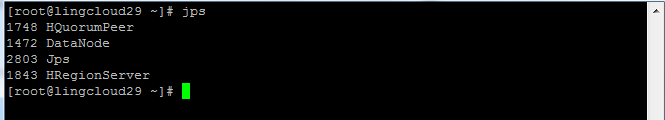

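ip29: lingcloud29 (this machine and the two below act as DataNodes, and as the HRegionServers in the HBase configuration below; lingcloud31, listed in the slaves file in 2.3.5, is the third)

ip32: lingcloud32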

2.3.1 {hadoop}/etc/hadoop/core-site.xml configuration

Below is the configuration on my lingcloud30. Some entries carry comments to make them easier to understand.

<code=xml>
<configuration>
  <!-- This value must use the same host and port as the "hbase.rootdir" property in {hbase}/conf/hbase-site.xml -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://lingcloud30:9000</value>
  </property>
  <!-- If the HMaster starts, runs for several seconds and then aborts, remove the hadooptmp directory and restart HBase -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/qhl/hadoopWorkspace/hadooptmp</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <!-- Options added for HttpFS -->
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>lingcloud30</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>
</code>

2.3.2 {hadoop}/etc/hadoop/hdfs-site.xml configuration

<code=xml>
<configuration>
  <property>
    <name>dfs.datanode.handler.count</name>
    <value>5</value>
    <description>The number of server threads for the datanode.</description>
  </property>
  <property>
    <name>dfs.namenode.handler.count</name>
    <value>5</value>
    <description>The number of server threads for the namenode.</description>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/qhl/hadoopWorkspace/hdfs/name</value>
    <final>true</final>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.federation.nameservice.id</name>
    <value>ns1</value>
  </property>
  <property>
    <name>dfs.namenode.backup.address.ns1</name>
    <value>lingcloud30:50100</value>
  </property>
  <property>
    <name>dfs.namenode.backup.http-address.ns1</name>
    <value>lingcloud30:50105</value>
  </property>
  <property>
    <name>dfs.federation.nameservices</name>
    <value>ns1</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns1</name>
    <value>lingcloud30:9000</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns2</name>
    <value>lingcloud30:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns1</name>
    <!-- Web UI port: browsing to http://lingcloud30:23001 shows cluster information -->
    <value>lingcloud30:23001</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns2</name>
    <value>lingcloud30:13001</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/qhl/hadoopWorkspace/hdfs/data</value>
    <final>true</final>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address.ns1</name>
    <value>lingcloud30:23002</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address.ns2</name>
    <value>lingcloud30:23002</value>
  </property>
  <!-- The next two properties repeat the two names above with a different port.
       When a name is repeated in one file, the later value (23003) takes effect. -->
  <property>
    <name>dfs.namenode.secondary.http-address.ns1</name>
    <value>lingcloud30:23003</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address.ns2</name>
    <value>lingcloud30:23003</value>
  </property>
  <property>
    <name>dfs.datanode.max.xcievers</name>
    <value>8192</value>
  </property>
</configuration>
</code>
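Because `dfs.namenode.secondary.http-address.ns1` and `dfs.namenode.secondary.http-address.ns2` each appear twice in the listing above, a quick check for repeated property names can be handy. The function below is only a sketch: `duplicate_properties` is a made-up name, and it assumes every `<name>…</name>` element sits on one line. It also counts names inside XML comments, since it does not parse the XML.

```shell
# Print every property name that occurs more than once in a Hadoop *-site.xml file.
# Assumption: each <name>...</name> element is on a single line.
# Limitation: names inside <!-- comments --> are counted too (no real XML parsing).
duplicate_properties() {
    sed -n 's:.*<name>\(.*\)</name>.*:\1:p' "$1" | sort | uniq -d
}
```

Usage: `duplicate_properties {hadoop}/etc/hadoop/hdfs-site.xml`, with `{hadoop}` replaced by your installation directory. An empty result means no name is repeated.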

2.3.3 {hadoop}/etc/hadoop/yarn-site.xml configuration

<code=xml>
<configuration>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>lingcloud30:18040</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>lingcloud30:18030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <!-- Web UI port: browsing to http://lingcloud30:18088 shows cluster information -->
    <value>lingcloud30:18088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>lingcloud30:18025</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>lingcloud30:18141</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <!-- Hadoop 2.2.0 and later only accept letters, digits and underscores here and expect
         mapreduce_shuffle; mapreduce.shuffle is the name used by earlier 2.0.x releases -->
    <value>mapreduce.shuffle</value>
  </property>
</configuration>
</code>

2.3.4 {hadoop}/etc/hadoop/mapred-env.sh configuration

Add the following line:

<code=xml>
# Directory where the pid files are stored (/tmp by default).
export HADOOP_MAPRED_PID_DIR=/usr/qhl/hadoopWorkspace/haddopMapredPidDir
</code>

2.3.5 {hadoop}/etc/hadoop/slaves configuration

<code=xml>
lingcloud32
lingcloud31
lingcloud29
</code>
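The hosts in the slaves file are also the machines that need a copy of this configuration. One way to push it out is sketched below. The names `run` and `distribute_conf` are made up, `/path/to/hadoop` is a placeholder for the `{hadoop}` directory (which this post does not spell out), and copying as `root` is an assumption. By default the script only prints the `scp` commands.

```shell
# Placeholder: set HADOOP_HOME to your real {hadoop} directory.
HADOOP_HOME="${HADOOP_HOME:-/path/to/hadoop}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Read host names from a slaves-style file (one per line, blank lines skipped)
# and copy the local etc/hadoop directory to the same location on each host.
distribute_conf() {
    slaves_file="$1"
    while IFS= read -r host || [ -n "$host" ]; do
        [ -n "$host" ] || continue
        # </dev/null keeps the command from consuming the rest of the host list.
        run scp -r "$HADOOP_HOME/etc/hadoop" "root@$host:$HADOOP_HOME/etc/" < /dev/null
    done < "$slaves_file"
}
```

Usage: `distribute_conf "$HADOOP_HOME/etc/hadoop/slaves"` prints one `scp` command per host; with `DRY_RUN=0` the copies are actually performed.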

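2.3.6 Summary

Copy the configuration above to the other nodes; every node uses the same settings.

3. HBase configuration

3.1 hbase-env.sh environment configuration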

<code=xml>
export JAVA_HOME=/usr/lib/jdk/jdk1.7.0_45/ # your JDK installation directory
export HBASE_PID_DIR=/usr/qhl/hbaseWorkspace/pids # The directory where pid files are stored. /tmp by default.
export HBASE_MANAGES_ZK=true # use the ZooKeeper bundled with HBase
</code>

3.2 hbase-site.xml configuration

<code=xml>
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://lingcloud30:9000/hbase</value>
    <!--
      The host and port must be the same as in {hadoop}/etc/hadoop/core-site.xml:
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://lingcloud30:9000</value>
      </property>
    -->
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/usr/qhl/zookeeper</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
    <description>Directs HBase to run in distributed mode, with one JVM instance per daemon.</description>
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <value>/usr/qhl/hbaseWorkspace/hbasetmp</value>
    <!-- If the HMaster starts, runs for several seconds and then aborts, remove the hadooptmp directory and restart HBase -->
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>lingcloud29,lingcloud31,lingcloud32</value>
  </property>
  <property>
    <name>hbase.master</name>
    <value>lingcloud30:60000</value>
  </property>
  <property>
    <name>hbase.master.port</name>
    <value>60000</value>
    <description>The port the master should bind to.</description>
  </property>
  <property>
    <name>hbase.master.maxclockskew</name>
    <value>200000</value>
    <description>Maximum time difference (in milliseconds) allowed between a regionserver and the master.</description>
  </property>
</configuration>
</code>
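Since `hbase.rootdir` has to point at the same NameNode address as `fs.defaultFS`, a small check can catch a mismatch before HBase is started. This is a sketch under simple assumptions: `get_property` and `check_rootdir` are made-up names, each `<name>` and `<value>` element is expected on a single line, and XML comments are not parsed (the first matching name wins).

```shell
# Print the value of the first property whose <name> equals $1 in file $2.
# Assumption: <name>...</name> and <value>...</value> each fit on one line.
get_property() {
    awk -v want="$1" '
        /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$2"
}

# Succeed only if hbase.rootdir starts with the fs.defaultFS URI followed by "/".
# $1 = core-site.xml, $2 = hbase-site.xml
check_rootdir() {
    fs="$(get_property fs.defaultFS "$1")"
    root="$(get_property hbase.rootdir "$2")"
    case "$root" in
        "$fs"/*) echo "OK: $root is under $fs" ;;
        *)       echo "MISMATCH: hbase.rootdir=$root fs.defaultFS=$fs"; return 1 ;;
    esac
}
```

Usage: `check_rootdir {hadoop}/etc/hadoop/core-site.xml {hbase}/conf/hbase-site.xml`, with both placeholders replaced by your installation directories.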

3.3 regionservers configuration

<code=xml>
lingcloud29
lingcloud31
lingcloud32
</code>
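With all files in place, the daemons are typically started on the master in the order HDFS, YARN, HBase, because HBase needs HDFS to be up first. The sketch below only prints the commands by default. `run` and `start_cluster` are made-up names, and both install directories are placeholders, since this post does not give the real paths.

```shell
# Placeholder install locations; replace with your real {hadoop} and HBase directories.
HADOOP_HOME="${HADOOP_HOME:-/path/to/hadoop}"
HBASE_HOME="${HBASE_HOME:-/path/to/hbase}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

start_cluster() {
    run "$HADOOP_HOME/sbin/start-dfs.sh"    # NameNode, SecondaryNameNode, DataNodes
    run "$HADOOP_HOME/sbin/start-yarn.sh"   # ResourceManager, NodeManagers
    run "$HBASE_HOME/bin/start-hbase.sh"    # ZooKeeper (HBASE_MANAGES_ZK=true), HMaster, HRegionServers
    run jps                                 # list the Java daemons running on this host
}

start_cluster
```

On a brand-new cluster the NameNode has to be formatted once (`hdfs namenode -format`) before the first `start-dfs.sh`. That step is left out of the function on purpose because it wipes existing HDFS metadata.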

4. Browser screenshots after successful configuration

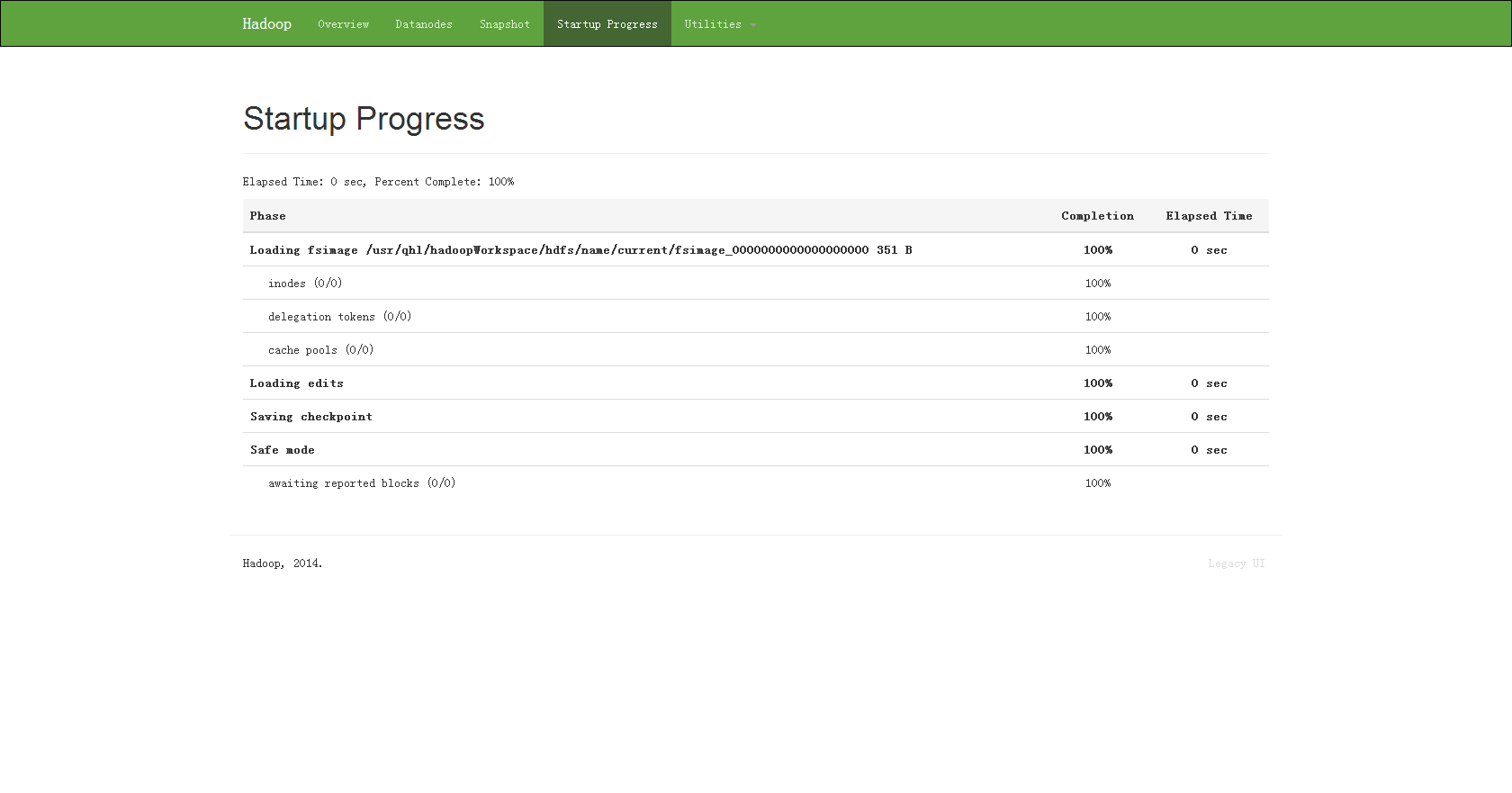

In hdfs-site.xml under {hadoop}/etc/hadoop I set the port to 23001; the configuration property is dfs.namenode.http-address.ns1.

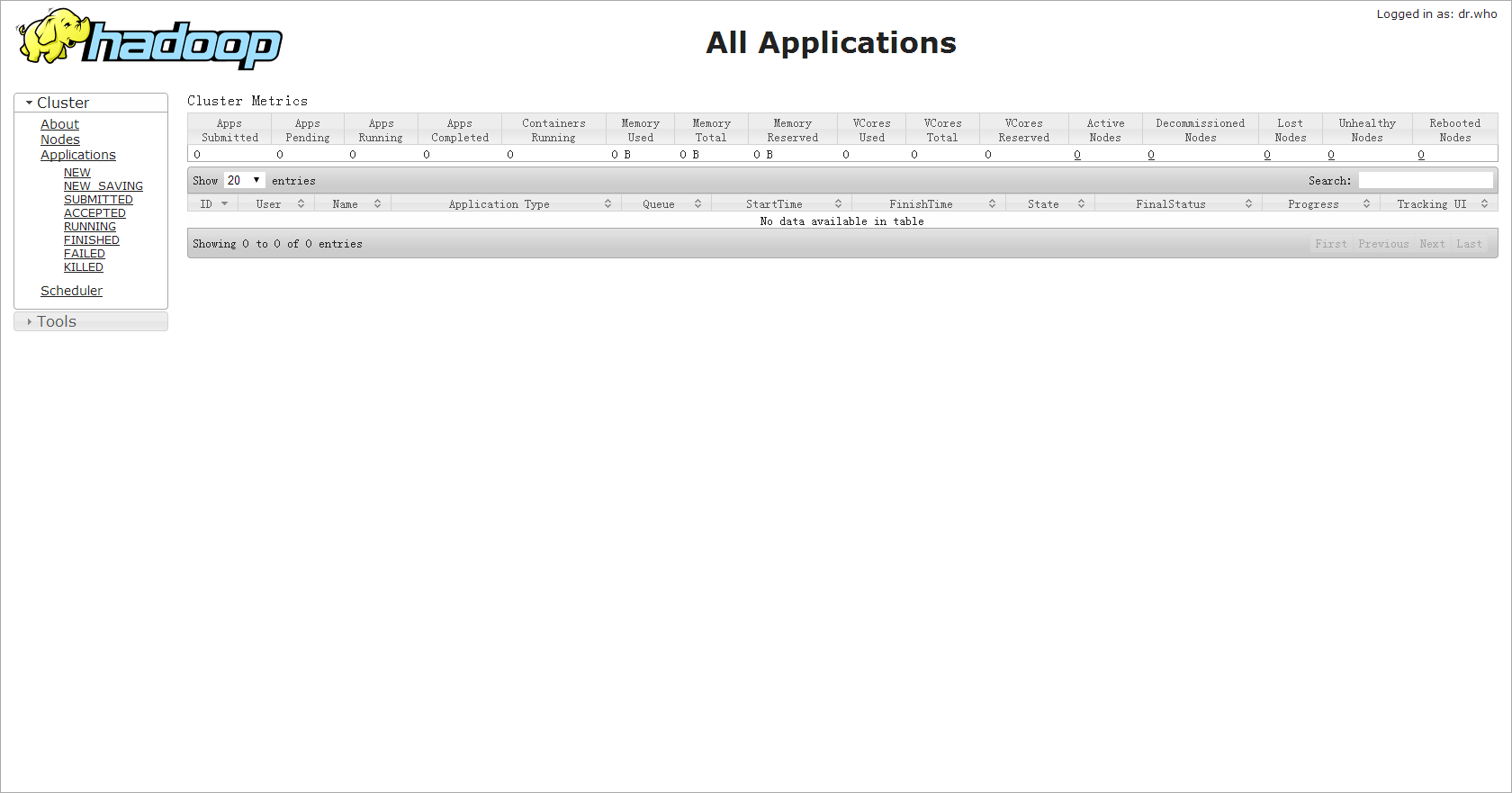

Visiting port 18088 shows the following page:

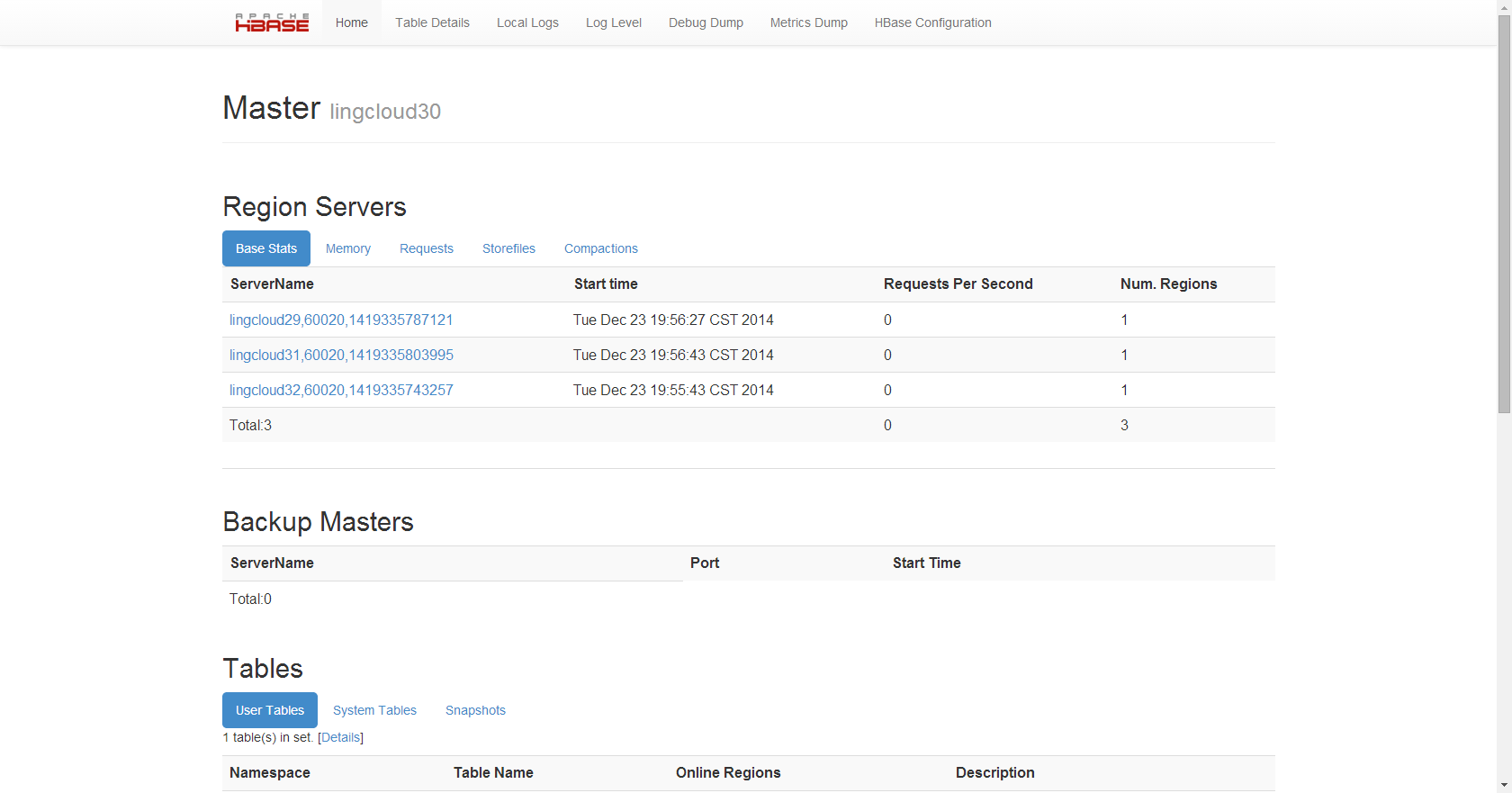

The HBase web UI uses port 60010 by default.
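The three web pages above can also be probed from the command line. The following is a small sketch: `check_web_uis` is a made-up name, the ports are the ones configured in this post, and all three UIs are assumed to run on lingcloud30. By default it only prints the URLs; with `DRY_RUN=0` it asks `curl` for the HTTP status code of each page.

```shell
MASTER_HOST="${MASTER_HOST:-lingcloud30}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the URLs, 0 = query them with curl

check_web_uis() {
    # name:port pairs taken from hdfs-site.xml, yarn-site.xml and the HBase default.
    for entry in "NameNode:23001" "ResourceManager:18088" "HMaster:60010"; do
        name="${entry%%:*}"
        port="${entry##*:}"
        url="http://$MASTER_HOST:$port/"
        if [ "$DRY_RUN" = "1" ]; then
            echo "$name $url"
        else
            code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")"
            echo "$name $url -> HTTP $code"
        fi
    done
}

check_web_uis
```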

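5. Summary and references

I must admit that I learned much of this from the web. I consulted many blog posts and ran into many errors along the way, but I did not save the logs, so the errors are not reproduced here. Because I drew on so many posts, I cannot list them all either. This is my first blog post; if anything is wrong, please point it out so that we can learn together.

References:

1. The Hadoop API; I personally find this material quite good:

http://hadoop.apache.org/docs/current/api/overview-summary.html#overview_description

2. Official HBase configuration guide.

http://hbase.apache.org/book/configuration.html

3. Official Hadoop configuration guide.

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/SingleCluster.html

9. Chinese translation of the official HBase documentation.

http://abloz.com/hbase/book.html#hbase_default_configurations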
