1. Environment

1. Install Python 3.6 or later.

2. Install scrapy and image (Scrapy's ImagesPipeline needs Pillow for image handling).

Install commands:

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple pip -U

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple scrapy

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple image
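Before going further, it can help to confirm that the interpreter meets the Python 3.6+ requirement stated above. The following is a minimal sketch; the helper name `python_is_supported` is made up for this illustration and is not part of Scrapy.

```python
import sys

# Minimum version stated in this tutorial
MIN_PYTHON = (3, 6)


def python_is_supported(version_info=None):
    """Return True if the given (or current) interpreter version is >= 3.6."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) >= MIN_PYTHON


if __name__ == "__main__":
    print("Python version OK:", python_is_supported())
```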

2. Create the CrawlSpider project

scrapy startproject biquge

cd biquge

scrapy genspider -t crawl biquge98 biquge98.com

Once the project has been created, its directory layout looks like the figure below:
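For reference, the two commands above normally generate the files listed in the sketch below. The helper `missing_project_files` is a hypothetical name used only here; it reports which of the expected files are absent, assuming it is run from the outer `biquge` directory (the one containing `scrapy.cfg`).

```python
from pathlib import Path

# Files normally created by `scrapy startproject biquge` followed by
# `scrapy genspider -t crawl biquge98 biquge98.com`.
EXPECTED_FILES = [
    "scrapy.cfg",
    "biquge/__init__.py",
    "biquge/items.py",
    "biquge/middlewares.py",
    "biquge/pipelines.py",
    "biquge/settings.py",
    "biquge/spiders/__init__.py",
    "biquge/spiders/biquge98.py",
]


def missing_project_files(project_root):
    """Return the expected files that do not exist under project_root."""
    root = Path(project_root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_project_files(".")
    print(missing if missing else "all expected files present")
```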

3. Define the item container in items.py

- items.py

import scrapy


class BiqugeItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    name = scrapy.Field()
    image_urls = scrapy.Field()
    detail_url = scrapy.Field()
    author = scrapy.Field()
    image_name = scrapy.Field()
    image_path = scrapy.Field()

4. Edit the pipeline file pipelines.py

- pipelines.py

We can clear the contents of pipelines.py and replace them with the following code:

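import os

from scrapy import Request
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline


class ImageDownloadPipeline(ImagesPipeline):

    def get_media_requests(self, item, info):
        # image_urls holds a single cover URL (a string), not a list
        url = item['image_urls']
        if url:
            # Pass the item along so file_path() can build the save path from it
            yield Request(url, meta={'item': item})

    def file_path(self, request, response=None, info=None, *, item=None):
        # Custom grouped storage: each cover goes into a folder named after the novel
        item = request.meta['item']
        # The path is relative to IMAGES_STORE; os.path.join picks the OS separator
        return os.path.join(item['name'], item['image_name'])

    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples, one per requested image
        image_paths = [x for ok, x in results if ok]
        if not image_paths:
            raise DropItem('Item contains no images')
        # image_path must be declared in items.py beforehand; the field name is up to you
        item['image_path'] = image_paths
        return item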
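To make the `item_completed` step more concrete, here is a small sketch of the filtering it performs, run on hand-written sample data rather than inside Scrapy. The sample list is hypothetical: in a real crawl the failed entry would carry a Twisted `Failure` object, for which a plain `RuntimeError` stands in here.

```python
def successful_downloads(results):
    """Keep only the info dicts of downloads whose success flag is True."""
    return [info for ok, info in results if ok]


# Hypothetical sample: one successful download and one failed download
sample_results = [
    (True, {
        "url": "https://www.biquge98.com/image/115/115505/115505s.jpg",
        "path": "氪金成仙/氪金成仙115505s.jpg",
        "checksum": "add916ff353a3de8096ed7a14343bf70",
        "status": "downloaded",
    }),
    (False, RuntimeError("download failed")),  # stand-in for a Failure
]

image_paths = successful_downloads(sample_results)

if __name__ == "__main__":
    # An empty list here is the case where the pipeline raises DropItem
    print(image_paths)
```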

4. Modify the project configuration file settings.py

- settings.py

Only the parts that need editing are shown here; you can also paste this block straight into settings.py.

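LOG_LEVEL = 'INFO'  # only log messages at INFO level or above
ROBOTSTXT_OBEY = False  # do not obey robots.txt
DOWNLOAD_DELAY = 0.2  # delay in seconds between page/image downloads, to reduce load on the site
ITEM_PIPELINES = {
    'biquge.pipelines.ImageDownloadPipeline': 300,  # the custom pipeline; 300 is its order value, any other number also works
}
IMAGES_STORE = 'IMAGES'  # root directory for saved images
IMAGES_EXPIRES = 5  # do not re-download images fetched within the last 5 days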
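A note on `DOWNLOAD_DELAY`: with Scrapy's default `RANDOMIZE_DOWNLOAD_DELAY = True`, the actual wait between requests to the same site is drawn at random from 0.5x to 1.5x the configured value. The sketch below only reproduces that arithmetic for the 0.2 s used here; `delay_bounds` and `sample_delay` are illustrative names, not Scrapy functions.

```python
import random

DOWNLOAD_DELAY = 0.2  # value from the settings above, in seconds


def delay_bounds(download_delay):
    """Return the (low, high) range used when the delay is randomized."""
    return 0.5 * download_delay, 1.5 * download_delay


def sample_delay(download_delay, rng=random):
    """Draw one randomized delay from that range."""
    low, high = delay_bounds(download_delay)
    return rng.uniform(low, high)


if __name__ == "__main__":
    low, high = delay_bounds(DOWNLOAD_DELAY)
    print(f"each wait falls between {low:.2f}s and {high:.2f}s")
```

So a setting of 0.2 means roughly 0.1 to 0.3 seconds between consecutive downloads from the site, not a fixed 0.2.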

5. Write the spider file biquge98.py

- biquge98.py

import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from biquge.items import BiqugeItem


class Biquge98Spider(CrawlSpider):
    name = 'biquge98'
    allowed_domains = ['biquge98.com']
    # Start from the site's xiuzhen (cultivation) novel category
    start_urls = ['https://www.biquge98.com/xiuzhenxiaoshuo/2_1.html']

    rules = (
        # Follow the "next page" link to paginate through the listing
        Rule(LinkExtractor(restrict_xpaths="//a[@class='next']")),
        # Extract the novel detail-page links and parse each one with parse_item
        Rule(LinkExtractor(restrict_xpaths="//div[@class='l']/ul/li/span[1]"), callback='parse_item'),
    )

    def parse_item(self, response):
        item = BiqugeItem()
        item['detail_url'] = response.url
        item['name'] = response.xpath("//h1/text()").extract_first()
        item['image_urls'] = response.xpath('//div[@id="fmimg"]/img/@src').extract_first()
        item['author'] = response.xpath('//div[@id="info"]/p[1]/a/text()').extract_first()
        if item['image_urls']:
            # Image file name = novel name + last segment of the cover URL
            item['image_name'] = item['name'] + item['image_urls'].split('/')[-1]
        else:
            # No cover URL: skip the item, since the pipeline drops items without images
            self.logger.warning('No cover image url: %s', response.url)
            return
        print(item)
        yield item

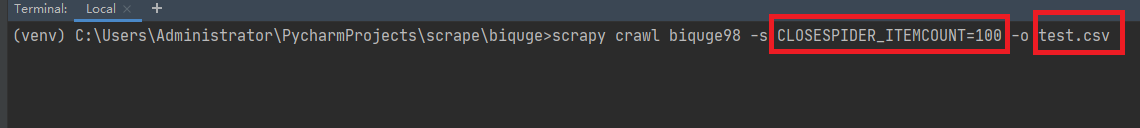
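The `image_name` rule used in `parse_item` is simple string handling, so it can be tried outside Scrapy. This sketch applies it to a name and cover URL taken from the crawl output shown later in this post; `build_image_name` is a helper name introduced only for this example.

```python
def build_image_name(name, image_url):
    """Novel name followed by the last path segment of the cover URL."""
    return name + image_url.split("/")[-1]


if __name__ == "__main__":
    print(build_image_name(
        "氪金成仙",
        "https://www.biquge98.com/image/115/115505/115505s.jpg",
    ))
```

Prefixing the novel name keeps the file names readable, while the numeric part from the URL keeps them unique per novel.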

6. Run the spider

scrapy crawl biquge98 -s CLOSESPIDER_ITEMCOUNT=100 -o test.csv

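What the options mean:

CLOSESPIDER_ITEMCOUNT=100: close the spider early once 100 items have been scraped (because Scrapy works asynchronously, requests already in progress still complete, so the final count is usually somewhat higher than 100)

-o test.csv: save the scraped items to test.csv; the file extension can also be json, jl, or xml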
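If you would rather not pass these options on every run, the same behaviour can be approximated in settings.py. This is a sketch that assumes Scrapy 2.1 or later, where the `FEEDS` setting is available; older versions use `FEED_URI` and `FEED_FORMAT` instead.

```python
# settings.py fragment (assumes Scrapy >= 2.1 for the FEEDS setting)

# Same effect as `-s CLOSESPIDER_ITEMCOUNT=100`
CLOSESPIDER_ITEMCOUNT = 100

# Roughly the same effect as `-o test.csv`
FEEDS = {
    "test.csv": {"format": "csv"},
}
```

With this in place, `scrapy crawl biquge98` on its own should stop after about 100 items and write them to test.csv.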

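7. Check the results

Crawling in progress…

8. Crawl finished…

- Check the log

Here is part of the log from the end of the crawl. Let's look at a few of the stats; the notes are on the corresponding lines.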

2020-07-21 20:00:40 [scrapy.extensions.feedexport] INFO: Stored csv feed (116 items) in: test.csv  # 116 items were stored in test.csv
2020-07-21 20:00:40 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 63344,
 'downloader/request_count': 237,  # number of requests sent
 'downloader/request_method_count/GET': 237,
 'downloader/response_bytes': 5526869,
 'downloader/response_count': 237,  # number of responses received, normally equal to the request count
 'downloader/response_status_count/200': 237,
 'dupefilter/filtered': 7,  # duplicate requests that were filtered out
 'elapsed_time_seconds': 59.590408,
 'file_count': 116,
 'file_status_count/downloaded': 116,
 'finish_reason': 'closespider_itemcount',
 'finish_time': datetime.datetime(2020, 7, 21, 12, 0, 40, 284851),
 'item_scraped_count': 116,  # 116 items scraped in total; these are the items saved when the spider closed early, to be verified against test.csv below
 'log_count/INFO': 11,
 'request_depth_max': 5,
 'response_received_count': 237,
 'scheduler/dequeued': 121,
 'scheduler/dequeued/memory': 121,
 'scheduler/enqueued': 149,
 'scheduler/enqueued/memory': 149,
 'start_time': datetime.datetime(2020, 7, 21, 11, 59, 40, 694443)}
2020-07-21 20:00:40 [scrapy.core.engine] INFO: Spider closed (closespider_itemcount)
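The numbers in this log can be cross-checked with a little arithmetic. The sketch below copies a few values from the stats above. One assumption is mine and not stated in the log: image downloads issued by the images pipeline go straight to the downloader without passing through the scheduler, which would explain why the request count equals scheduler dequeues plus downloaded files.

```python
from datetime import datetime

# Values copied from the stats dump above
stats = {
    "downloader/request_count": 237,
    "file_count": 116,
    "item_scraped_count": 116,
    "scheduler/dequeued": 121,
    "scheduler/enqueued": 149,
    "elapsed_time_seconds": 59.590408,
    "start_time": datetime(2020, 7, 21, 11, 59, 40, 694443),
    "finish_time": datetime(2020, 7, 21, 12, 0, 40, 284851),
}

# Elapsed time recomputed from the two timestamps
elapsed = (stats["finish_time"] - stats["start_time"]).total_seconds()

# Page requests (via the scheduler) plus image downloads (assumed to bypass it)
pages_plus_images = stats["scheduler/dequeued"] + stats["file_count"]

# Requests still queued when the spider was closed early
left_in_queue = stats["scheduler/enqueued"] - stats["scheduler/dequeued"]

if __name__ == "__main__":
    print("elapsed seconds:", elapsed)
    print("pages + images:", pages_plus_images)
    print("left in queue:", left_in_queue)
```

The requests left in the queue are the pages that were never fetched because `CLOSESPIDER_ITEMCOUNT` stopped the crawl first.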

- Check the data saved in test.csv

There is a lot of data, so only a few rows are shown here.

author,detail_url,image_name,image_path,image_urls,name

五志,https://www.biquge98.com/biquge_115505/,氪金成仙115505s.jpg,"[{'url': 'https://www.biquge98.com/image/115/115505/115505s.jpg', 'path': '氪金成仙\\氪金成仙115505s.jpg', 'checksum': 'add916ff353a3de8096ed7a14343bf70', 'status': 'downloaded'}]",https://www.biquge98.com/image/115/115505/115505s.jpg,氪金成仙

暗黑茄子,https://www.biquge98.com/biquge_119752/,猛兽博物馆119752s.jpg,"[{'url': 'https://www.biquge98.com/image/119/119752/119752s.jpg', 'path': '猛兽博物馆\\猛兽博物馆119752s.jpg', 'checksum': '7bb5fcdcd1c5027c55fc1d86c11007e2', 'status': 'downloaded'}]",https://www.biquge98.com/image/119/119752/119752s.jpg,猛兽博物馆

玄远一吹,https://www.biquge98.com/biquge_97494/,全能神医97494s.jpg,"[{'url': 'https://www.biquge98.com/image/97/97494/97494s.jpg', 'path': '全能神医\\全能神医97494s.jpg', 'checksum': '9098df5d868dc33aa66ab580f93390e5', 'status': 'downloaded'}]",https://www.biquge98.com/image/97/97494/97494s.jpg,全能神医

岐峰,https://www.biquge98.com/biquge_114792/,江湖枭雄114792s.jpg,"[{'url': 'https://www.biquge98.com/image/114/114792/114792s.jpg', 'path': '江湖枭雄\\江湖枭雄114792s.jpg', 'checksum': 'f036889f8d5275f2e75763e249b92419', 'status': 'downloaded'}]",https://www.biquge98.com/image/114/114792/114792s.jpg,江湖枭雄

神出古异,https://www.biquge98.com/biquge_108807/,十方乾坤108807s.jpg,"[{'url': 'https://www.biquge98.com/image/108/108807/108807s.jpg', 'path': '十方乾坤\\十方乾坤108807s.jpg', 'checksum': '243bf37c93293037cd04941a9b9db082', 'status': 'downloaded'}]",https://www.biquge98.com/image/108/108807/108807s.jpg,十方乾坤

...

...

Excluding the header row, there are 116 rows of data in total.
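Note that the `image_path` column is stored as the text form of a Python list of dicts, so it needs one more parsing step after the CSV is read. Here is a minimal sketch using only the standard library, run on the first data row shown above; `load_rows` is a name introduced for this example.

```python
import ast
import csv
import io

# Header and first data row copied from test.csv (raw string keeps the backslashes)
SAMPLE_CSV = r"""author,detail_url,image_name,image_path,image_urls,name
五志,https://www.biquge98.com/biquge_115505/,氪金成仙115505s.jpg,"[{'url': 'https://www.biquge98.com/image/115/115505/115505s.jpg', 'path': '氪金成仙\\氪金成仙115505s.jpg', 'checksum': 'add916ff353a3de8096ed7a14343bf70', 'status': 'downloaded'}]",https://www.biquge98.com/image/115/115505/115505s.jpg,氪金成仙
"""


def load_rows(text):
    """Parse the CSV text and turn image_path back into a list of dicts."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        # literal_eval safely evaluates the list-of-dicts literal
        row["image_path"] = ast.literal_eval(row["image_path"])
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in load_rows(SAMPLE_CSV):
        print(row["name"], row["author"], row["image_path"][0]["status"])
```

To process the real file, read it with `open("test.csv", newline="", encoding="utf-8")` and pass the text to `load_rows`; counting the returned rows is a quick way to confirm the 116 figure.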

- Check the images saved by group

Verify that the images are correct.
