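A data quality module is an essential component of any big data platform. Apache Griffin (hereafter Griffin) is an open-source data quality solution for big data. It supports data quality checks in both batch and streaming mode. It can measure data assets along several dimensions, for example checking after an offline job finishes whether the row counts at the source and the target match, or counting the null values in a source table. This improves the accuracy and trustworthiness of the data.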

一、环境

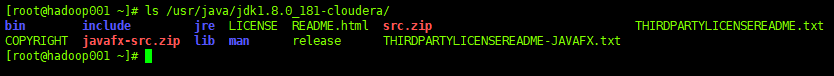

现有的Java环境:

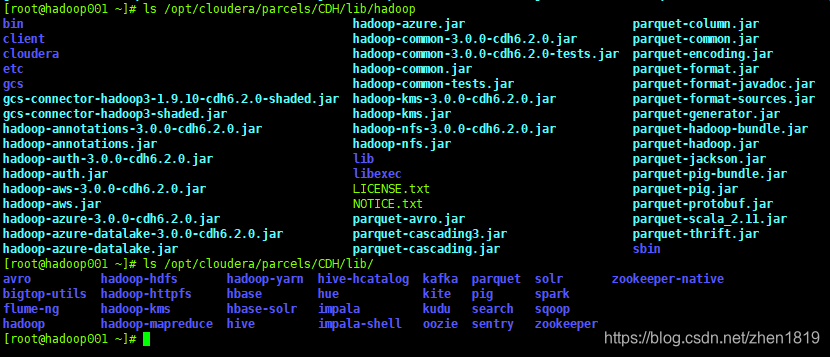

现有的Hadoop环境:

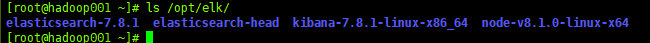

现有的Elasticsearch:

二、需要安装的软件

CDH:版本6.2.0

Hadoop:版本Hadoop3.0

Elasticsearch:版本:7.8.1

Maven:版本3.6.3

Scala:版本2.11.12

Livy:版本0.5.0

Griffin:版本0.5.0

3. Install Maven

Download page: http://maven.apache.org/download.cgi

Package name: apache-maven-3.6.3-bin.tar.gz

Upload the package to the server.

[root@hadoop001 ~]# mkdir -p /opt/software/griffin/

[root@hadoop001 ~]# cd /opt/software/griffin/

[root@hadoop001 griffin]# ls apache-maven-3.6.3-bin.tar.gz

apache-maven-3.6.3-bin.tar.gz

[root@hadoop001 griffin]# tar -zxf apache-maven-3.6.3-bin.tar.gz

[root@hadoop001 griffin]# ln -s /opt/software/griffin/apache-maven-3.6.3/bin/mvn /bin/mvn

[root@hadoop001 griffin]# which mvn

/bin/mvn
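Besides the symlink, Maven can also be put on the PATH through a profile script. Below is a minimal bash sketch. The function name write_maven_profile and the target file /etc/profile.d/maven.sh are illustrative assumptions, not part of the original setup:

```shell
#!/usr/bin/env bash
# Write a profile snippet that exports MAVEN_HOME and puts its bin/ directory first on PATH.
# $1 = Maven install directory, $2 = profile file to (over)write.
write_maven_profile() {
  local maven_home="$1" profile_file="$2"
  if [ ! -x "$maven_home/bin/mvn" ]; then
    echo "mvn not found under $maven_home/bin" >&2
    return 1
  fi
  # Unquoted EOF: $maven_home is expanded now, \$MAVEN_HOME and \$PATH stay literal.
  cat > "$profile_file" <<EOF
export MAVEN_HOME=$maven_home
export PATH=\$MAVEN_HOME/bin:\$PATH
EOF
}
```

Example: `write_maven_profile /opt/software/griffin/apache-maven-3.6.3 /etc/profile.d/maven.sh && . /etc/profile.d/maven.sh && mvn -v`.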

4. Install Scala

Download page: https://www.scala-lang.org/download/all.html

Page for version 2.11.12: https://www.scala-lang.org/download/2.11.12.html

Package name: scala-2.11.12.rpm (URL: https://downloads.lightbend.com/scala/2.11.12/scala-2.11.12.rpm)

[root@hadoop001 griffin]# ls scala-2.11.12.rpm

scala-2.11.12.rpm

[root@hadoop001 griffin]# yum -y install scala-2.11.12.rpm
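To confirm that the expected version is installed, you can check the banner printed by `scala -version`. This sketch assumes the banner reads like `Scala code runner version 2.11.12 -- ...`. Scala 2.11 may print it on stderr, hence the `2>&1`. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Extract X.Y.Z from a "Scala code runner version X.Y.Z ..." banner read on stdin.
scala_version_from_banner() {
  sed -n 's/.*Scala code runner version \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Compare the installed Scala with the expected version; non-zero on mismatch.
check_scala_version() {
  local expected="$1" actual
  actual=$(scala -version 2>&1 | scala_version_from_banner)
  if [ "$actual" != "$expected" ]; then
    echo "expected Scala $expected, found '${actual:-none}'" >&2
    return 1
  fi
}
```

Example: `check_scala_version 2.11.12`.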

5. Install Livy

Download page: http://archive.apache.org/dist/incubator/livy/

Version 0.5.0: http://archive.apache.org/dist/incubator/livy/0.5.0-incubating/

Package name: livy-0.5.0-incubating-bin.zip

[root@hadoop001 griffin]# ls livy-0.5.0-incubating-bin.zip

livy-0.5.0-incubating-bin.zip

[root@hadoop001 griffin]# unzip livy-0.5.0-incubating-bin.zip

[root@hadoop001 griffin]# cd livy-0.5.0-incubating-bin/

[root@hadoop001 livy-0.5.0-incubating-bin]# cp conf/livy.conf.template conf/livy.conf

[root@hadoop001 livy-0.5.0-incubating-bin]# cp conf/livy-env.sh.template conf/livy-env.sh

[root@hadoop001 livy-0.5.0-incubating-bin]# cp conf/log4j.properties.template conf/log4j.properties

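5.1 Configure environment variables

Add the following to livy-env.sh, adjusting the paths to your own environment:

[root@hadoop001 livy-0.5.0-incubating-bin]# vim conf/livy-env.sh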

export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark

export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
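Before starting Livy, it may be worth confirming that these variables point at existing directories. Below is a small sketch. check_home_dirs is a hypothetical helper that only inspects variables already set in the current shell, so source livy-env.sh first:

```shell
#!/usr/bin/env bash
# For each variable name given, report it if it is unset or not an existing directory.
# Returns non-zero if at least one variable is bad.
check_home_dirs() {
  local name value status=0
  for name in "$@"; do
    value="${!name:-}"          # indirect expansion: value of the variable called $name
    if [ -z "$value" ] || [ ! -d "$value" ]; then
      echo "$name: '$value' is not an existing directory" >&2
      status=1
    fi
  done
  return "$status"
}
```

Example: `. conf/livy-env.sh && check_home_dirs SPARK_HOME HADOOP_HOME HADOOP_CONF_DIR JAVA_HOME`.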

5.2 Edit the Livy configuration

Set the following entries in the Livy configuration file:

[root@hadoop001 livy-0.5.0-incubating-bin]# vim conf/livy.conf

livy.server.port = 8998

livy.spark.master = yarn

livy.spark.deploy-mode = client

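The two Spark-related entries should match the configuration of your installed Spark, which you can check in spark-defaults.conf: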

[root@hadoop001 ~]# cat /opt/cloudera/parcels/CDH/lib/spark/conf/spark-defaults.conf | grep -E -i '(master|deploymode)'

spark.master=yarn

spark.submit.deployMode=client
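These entries can also be set without an editor. The sketch below uses a hypothetical set_conf helper. It replaces the first uncommented `key = value` line for a key, or appends one if none exists. The commented lines copied from the template are left untouched:

```shell
#!/usr/bin/env bash
# set_conf FILE KEY VALUE: set "KEY = VALUE" in a Livy-style config file.
set_conf() {
  local file="$1" key="$2" value="$3" tmp status
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)                      # ignore leading whitespace
      # Match "KEY =" exactly (not "# KEY", not "KEY.suffix").
      if (!done && index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*=/) {
        print k " = " v
        done = 1
        next
      }
      print
    }
    END { if (!done) print k " = " v }              # key not present: append it
  ' "$file" > "$tmp" && cat "$tmp" > "$file"        # cat keeps the original file permissions
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Example: `set_conf conf/livy.conf livy.spark.master yarn && set_conf conf/livy.conf livy.spark.deploy-mode client`.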

5.3 Start Livy

Once started, Livy listens on port 8998:

[root@hadoop001 livy-0.5.0-incubating-bin]# pwd

/opt/software/griffin/livy-0.5.0-incubating-bin

[root@hadoop001 livy-0.5.0-incubating-bin]# ./bin/livy-server start

[root@hadoop001 livy-0.5.0-incubating-bin]# ./bin/livy-server status

livy-server is running (pid: 32232)
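`livy-server status` only shows that the process is running. To check that the REST API answers, query `GET /batches`, which returns a JSON object containing a `sessions` array. In this sketch, the default URL http://hadoop001:8998 and the function names are assumptions:

```shell
#!/usr/bin/env bash
# Succeeds if a GET /batches response body contains a "sessions" array.
livy_batches_ok() {
  printf '%s' "$1" | grep -q '"sessions"[[:space:]]*:[[:space:]]*\['
}

# Query the Livy server and check its answer.
check_livy() {
  local base="${1:-http://hadoop001:8998}" body
  body=$(curl -sf --max-time 5 "$base/batches") || {
    echo "Livy not reachable at $base" >&2
    return 1
  }
  livy_batches_ok "$body"
}
```

Example: `check_livy http://hadoop001:8998 && echo "Livy REST API OK"`.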

5.4 Livy logs

Livy writes all of its logs to the following directory:

[root@hadoop001 logs]# pwd

/opt/software/griffin/livy-0.5.0-incubating-bin/logs

[root@hadoop001 logs]# ls

2020_08_20.request.log livy-root-server.out livy-root-server.out.1 livy-root-server.out.2 livy-root-server.out.3

6. Install Griffin

6.1 Download the Griffin source code from GitHub

[root@hadoop001 griffin-griffin-0.5.0]# pwd

/opt/software/griffin/griffin-griffin-0.5.0

[root@hadoop001 griffin-griffin-0.5.0]# git clone https://github.com/apache/griffin.git

[root@hadoop001 griffin-griffin-0.5.0]# cd griffin/

[root@hadoop001 griffin]# git tag

[root@hadoop001 griffin]# git checkout tags/griffin-0.5.0

[root@hadoop001 griffin]# git branch

* (detached from griffin-0.5.0)

master

6.2 Import the SQL file

First create the quartz database in MySQL and grant a quartz user access to it:

[root@hadoop001 griffin]# mysql -u root -h 127.0.0.1 -p

mysql> create database quartz;

mysql> grant all privileges on quartz.* to 'quartz'@'%' identified by '123456';

Locate the SQL file to import:

[root@hadoop001 griffin]# ls service/src/main/resources/Init_quartz_mysql_innodb.sql

service/src/main/resources/Init_quartz_mysql_innodb.sql

Import this SQL file into the quartz database:

[root@hadoop001 griffin]# mysql -u root -h 127.0.0.1 -p quartz < service/src/main/resources/Init_quartz_mysql_innodb.sql
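To confirm the import, list the tables of the quartz database and count those with the QRTZ_ prefix, which is the prefix that quartz.properties uses. The sketch below uses hypothetical function names, and the connection parameters are the example ones from this section. The stock Quartz MySQL InnoDB script creates eleven such tables, but treat that number as a reference rather than a guarantee for this file:

```shell
#!/usr/bin/env bash
# Count table names starting with QRTZ_ in a list read from stdin (one per line).
count_qrtz_tables() {
  grep -c '^QRTZ_' || true     # grep -c prints 0 but exits 1 when nothing matches
}

# Run SHOW TABLES on the quartz database (mysql prompts for the password) and
# fail if no QRTZ_ tables are found.
check_quartz_schema() {
  local n
  n=$(mysql -N -u quartz -p -h 127.0.0.1 -e 'SHOW TABLES' quartz | count_qrtz_tables)
  echo "QRTZ_ tables found: ${n:-0}"
  [ "${n:-0}" -gt 0 ]
}
```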

6.3 Set environment variables

There are quite a few environment variables; configure them according to your own environment:

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_COMMON_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native

export HADOOP_HDFS_HOME=/opt/cloudera/parcels/CDH/lib/hadoop-hdfs

export HADOOP_INSTALL=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_MAPRED_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_USER_CLASSPATH_FIRST=true

export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark

export LIVY_HOME=/opt/software/griffin/livy-0.5.0-incubating-bin

export HIVE_HOME=/opt/cloudera/parcels/CDH/lib/hive/

export YARN_HOME=/opt/cloudera/parcels/CDH/lib/hadoop-yarn/

export SCALA_HOME=/usr/share/scala

export PATH=$PATH:$HIVE_HOME/bin:$HADOOP_HOME/bin:$SPARK_HOME/bin:$LIVY_HOME/bin:$SCALA_HOME/bin
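After exporting or sourcing these variables, you can quickly check that the tools they are meant to expose are on the PATH. missing_cmds is a hypothetical helper, and the command list in the example is only an illustration:

```shell
#!/usr/bin/env bash
# Print every command name from the arguments that is not found on PATH.
# Returns non-zero if at least one is missing.
missing_cmds() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      status=1
    fi
  done
  return "$status"
}
```

Example: `missing_cmds hadoop hive spark-submit livy-server scala mvn || echo "some commands are missing"`.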

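6.4 Configure Hive

hive-site.xml is in the conf directory of the Hive installation. Upload it to HDFS so that the Spark jobs submitted by Griffin can use it:

[root@hadoop001 griffin]# hadoop fs -mkdir -p /home/spark_conf # create the /home/spark_conf directory

[root@hadoop001 griffin]# hadoop fs -put $HIVE_HOME/conf/hive-site.xml /home/spark_conf/ # upload hive-site.xml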

[root@hadoop001 griffin]# hadoop fs -ls /home/spark_conf

Found 1 items

-rw-r--r-- 3 root supergroup 6743 2020-08-18 11:25 /home/spark_conf/hive-site.xml
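The value needed for hive.metastore.uris in application.properties can be read from the same hive-site.xml. The following is a rough awk sketch, not an XML parser. It assumes each property's `<value>` appears on the same line as its `<name>` or on a following line, as in typical Hadoop-style configuration files. hive_prop is a hypothetical name:

```shell
#!/usr/bin/env bash
# hive_prop NAME FILE: print the <value> of the Hadoop-style XML property NAME.
hive_prop() {
  awk -v key="$1" '
    index($0, "<name>" key "</name>") { found = 1 }     # exact name match
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-char "<value>" and 8-char "</value>" around the match.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$2"
}
```

Example: `hive_prop hive.metastore.uris $HIVE_HOME/conf/hive-site.xml`.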

6.5 Configure Griffin

The configuration files are located in service/src/main/resources/:

[root@hadoop001 griffin]# ls service/src/main/resources/

6.5.1 Edit application.properties

[root@hadoop001 griffin]# vim service/src/main/resources/application.properties

# Port the Griffin service listens on

server.port = 8090

# Application name

spring.application.name=griffin_service

# JDBC URL of the quartz database in MySQL

spring.datasource.url=jdbc:mysql://hadoop001:3306/quartz?autoReconnect=true&useSSL=false

# User name for the quartz database

spring.datasource.username=quartz

# Password of that user

spring.datasource.password=123456

spring.jpa.generate-ddl=true

# JDBC driver class for MySQL

spring.datasource.driver-class-name=com.mysql.jdbc.Driver

spring.jpa.show-sql=true

# Hive metastore URI; take it from hive.metastore.uris in $HIVE_HOME/conf/hive-site.xml

hive.metastore.uris=thrift://hadoop001:9083

# Database that stores the Hive metastore (here the hive database in MySQL)

hive.metastore.dbname=hive

hive.hmshandler.retry.attempts=15

hive.hmshandler.retry.interval=2000ms

cache.evict.hive.fixedRate.in.milliseconds=900000

# Kafka schema registry URL (Griffin's default is http://localhost:8081)

kafka.schema.registry.url=http://hadoop002:9092

jobInstance.fixedDelay.in.milliseconds=60000

jobInstance.expired.milliseconds=604800000

predicate.job.interval=5m

predicate.job.repeat.count=12

external.config.location=

external.env.location=

login.strategy=default

ldap.url=ldap://hostname:port

ldap.email=@example.com

ldap.searchBase=DC=org,DC=example

ldap.searchPattern=(sAMAccountName={0})

# HDFS address

fs.defaultFS=hdfs://hadoop001:8020

# Elasticsearch host, port and scheme

elasticsearch.host=hadoop001

elasticsearch.port=9200

elasticsearch.scheme=http

# Livy batches endpoint

livy.uri=http://hadoop001:8998/batches

livy.need.queue=false

livy.task.max.concurrent.count=20

livy.task.submit.interval.second=3

livy.task.appId.retry.count=3

# YARN ResourceManager address

yarn.uri=http://hadoop001:8088

internal.event.listeners=GriffinJobEventHook
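In Java .properties files, `#` starts a comment only at the beginning of a line. This holds for java.util.Properties and also for Spring Boot's loader. Trailing text such as `8090 #port` therefore becomes part of the value. Below is a sketch of a hypothetical check that lists such lines. It follows grep's convention and exits non-zero when none are found:

```shell
#!/usr/bin/env bash
# List (with line numbers) non-comment lines that contain whitespace followed by "#".
find_inline_comments() {
  grep -nE '^[[:space:]]*[^#![:space:]].*[[:space:]]#' "$1"
}
```

Example: `find_inline_comments service/src/main/resources/application.properties`. If it prints nothing, the file is clean.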

6.5.2 Edit quartz.properties

[root@hadoop001 griffin]# vim service/src/main/resources/quartz.properties

org.quartz.scheduler.instanceName=spring-boot-quartz

org.quartz.scheduler.instanceId=AUTO

org.quartz.threadPool.threadCount=5

org.quartz.jobStore.class=org.quartz.impl.jdbcjobstore.JobStoreTX

# JDBC delegate used with MySQL (StdJDBCDelegate is Quartz's standard delegate)

org.quartz.jobStore.driverDelegateClass=org.quartz.impl.jdbcjobstore.StdJDBCDelegate

org.quartz.jobStore.useProperties=true

org.quartz.jobStore.misfireThreshold=60000

org.quartz.jobStore.tablePrefix=QRTZ_

org.quartz.jobStore.isClustered=true

org.quartz.jobStore.clusterCheckinInterval=20000
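The tablePrefix must match the names of the tables imported in section 6.2. The sketch below defines a hypothetical prop_get helper for that comparison. It reads one key from a .properties file, skipping comment lines and trimming whitespace around `=` or `:`:

```shell
#!/usr/bin/env bash
# prop_get KEY FILE: print the value of the first uncommented KEY entry.
prop_get() {
  awk -v k="$1" '
    /^[ \t]*[#!]/ { next }                       # comment lines
    {
      line = $0
      sub(/^[ \t]+/, "", line)
      if (index(line, k) != 1) next
      rest = substr(line, length(k) + 1)
      if (rest !~ /^[ \t]*[=:]/) next            # a longer key that merely starts with KEY
      sub(/^[ \t]*[=:][ \t]*/, "", rest)         # drop the separator
      sub(/[ \t]+$/, "", rest)                   # drop trailing whitespace
      print rest
      exit
    }
  ' "$2"
}
```

For example, `prop_get org.quartz.jobStore.tablePrefix service/src/main/resources/quartz.properties` should print QRTZ_.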

6.5.3 Edit sparkProperties.json

In this file, "file" is the location in HDFS of the measure jar (uploaded in section 7). "spark.yarn.dist.files" is the location in HDFS of the hive-site.xml uploaded in section 6.4. JSON has no comment syntax, so these notes must not be written into the file itself:

[root@hadoop001 griffin]# vim service/src/main/resources/sparkProperties.json

{

"file": "hdfs://hadoop001:8020/griffin/griffin-measure.jar",

"className": "org.apache.griffin.measure.Application",

"name": "griffin",

"queue": "default",

"numExecutors": 2,

"executorCores": 1,

"driverMemory": "1g",

"executorMemory": "1g",

"conf": {

"spark.yarn.dist.files": "hdfs://hadoop001:8020/home/spark_conf/hive-site.xml"

},

"files": [

]

}

6.5.4 Edit env/env_batch.json

The "path" of the HDFS sink is where Griffin stores its results. The "api" of the Elasticsearch sink points to the griffin index (type accuracy) in ES:

[root@hadoop001 griffin]# vim service/src/main/resources/env/env_batch.json

{

"spark": {

"log.level": "WARN"

},

"sinks": [

{

"type": "CONSOLE",

"config": {

"max.log.lines": 10

}

},

{

"type": "HDFS",

"config": {

"path": "hdfs://hadoop001:8020/griffin/persist",

"max.persist.lines": 10000,

"max.lines.per.file": 10000

}

},

{

"type": "ELASTICSEARCH",

"config": {

"method": "post",

"api": "http://hadoop001:9200/griffin/accuracy",

"connection.timeout": "1m",

"retry": 10

}

}

],

"griffin.checkpoint": []

}

6.5.5 Edit env/env_streaming.json

The entries in this file are as follows:

- "checkpoint.dir" is Griffin's checkpoint directory in HDFS.
- The "path" of the HDFS sink is where results are stored.
- The "api" of the Elasticsearch sink points to the same griffin index as in env_batch.json.
- "hosts" under griffin.checkpoint is the ZooKeeper quorum.

[root@hadoop001 griffin]# vim service/src/main/resources/env/env_streaming.json

{

"spark": {

"log.level": "WARN",

"checkpoint.dir": "hdfs://hadoop001:8020/griffin/checkpoint/${JOB_NAME}",

"init.clear": true,

"batch.interval": "1m",

"process.interval": "5m",

"config": {

"spark.default.parallelism": 4,

"spark.task.maxFailures": 5,

"spark.streaming.kafkaMaxRatePerPartition": 1000,

"spark.streaming.concurrentJobs": 4,

"spark.yarn.maxAppAttempts": 5,

"spark.yarn.am.attemptFailuresValidityInterval": "1h",

"spark.yarn.max.executor.failures": 120,

"spark.yarn.executor.failuresValidityInterval": "1h",

"spark.hadoop.fs.hdfs.impl.disable.cache": true

}

},

"sinks": [

{

"type": "CONSOLE",

"config": {

"max.log.lines": 100

}

},

{

"type": "HDFS",

"config": {

"path": "hdfs://hadoop001:8020/griffin/persist",

"max.persist.lines": 10000,

"max.lines.per.file": 10000

}

},

{

"type": "ELASTICSEARCH",

"config": {

"method": "post",

"api": "http://hadoop001:9200/griffin/accuracy"

}

}

],

"griffin.checkpoint": [

{

"type": "zk",

"config": {

"hosts": "hadoop002:2181,hadoop003:2181,hadoop004:2181",

"namespace": "griffin/infocache",

"lock.path": "lock",

"mode": "persist",

"init.clear": true,

"close.clear": false

}

}

]

}
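JSON has no comment syntax, so a stray annotation or comma can easily break the three JSON files edited above. The sketch below validates them with Python's json.tool module. It assumes python3 is installed, and validate_json_files is a hypothetical name:

```shell
#!/usr/bin/env bash
# Check that every file given is syntactically valid JSON; report the bad ones.
validate_json_files() {
  local f status=0
  for f in "$@"; do
    if ! python3 -m json.tool "$f" >/dev/null 2>&1; then
      echo "invalid JSON: $f" >&2
      status=1
    fi
  done
  return "$status"
}
```

Example: `(cd service/src/main/resources && validate_json_files sparkProperties.json env/env_batch.json env/env_streaming.json)`.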

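6.5.6 Create the directories and the index

The configuration above uses two HDFS paths (/griffin/persist and /griffin/checkpoint) and one ES index (griffin).

Create the HDFS paths: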

[root@hadoop001 griffin]# hadoop fs -mkdir -p /griffin/persist

[root@hadoop001 griffin]# hadoop fs -mkdir /griffin/checkpoint

Create the ES index:

curl -k -H "Content-Type: application/json" -X PUT http://hadoop001:9200/griffin?include_type_name=true -d '

{

"aliases": {},

"mappings": {

"accuracy": {

"properties": {

"name": {

"fields": {

"keyword": {

"ignore_above": 256,

"type": "keyword"

}

},

"type": "text"

},

"tmst": {

"type": "date"

}

}

}

},

"settings": {

"index": {

"number_of_replicas": "2",

"number_of_shards": "5"

}

}

}'
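To confirm that the index was created with the accuracy mapping type, read its mapping back. In this sketch, the ES URL defaults to http://hadoop001:9200 as an assumption, and the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Succeeds if an Elasticsearch _mapping response contains the mapping type $2.
es_mapping_has_type() {
  printf '%s' "$1" | grep -q "\"$2\"[[:space:]]*:"
}

# Fetch the mapping of the griffin index (with type names) and look for "accuracy".
check_griffin_index() {
  local es="${1:-http://hadoop001:9200}" body
  body=$(curl -sf --max-time 5 "$es/griffin/_mapping?include_type_name=true") || {
    echo "cannot read the mapping of index griffin from $es" >&2
    return 1
  }
  es_mapping_has_type "$body" accuracy
}
```

Example: `check_griffin_index http://hadoop001:9200 && echo "index griffin OK"`.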

6.6 Build Griffin

Two problems have to be fixed before the build succeeds.

6.6.1 Problem 1: MySQL driver dependency

The following error occurs during the build:

Caused by: org.springframework.beans.BeanInstantiationException: Failed to instantiate [org.apache.tomcat.jdbc.pool.DataSource]: Factory method 'dataSource' threw exception; nested exception is java.lang.IllegalStateException: Cannot load driver class: com.mysql.jdbc.Driver

……

... 36 more

Caused by: java.lang.IllegalStateException: Cannot load driver class: com.mysql.jdbc.Driver

……

... 36 more

Solution:

In service/pom.xml, uncomment the following dependency:

[root@hadoop001 griffin]# vim service/pom.xml

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>${mysql.java.version}</version>

</dependency>

6.6.2 Problem 2: TypeScript version

The following error occurs during the build:

[ERROR] ERROR in /opt/software/griffin/griffin-griffin-0.5.0/griffin/ui/angular/node_modules/@types/jquery/JQuery.d.ts (4137,26): Cannot find name 'SVGElementTagNameMap'.

Solution:

The source uses TypeScript 2.3.3; change it to version 2.7.2 or later:

[root@hadoop001 griffin]# vim ui/angular/package.json

"typescript": "~2.7.2",

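The same change can be made non-interactively. This sketch assumes the entry in ui/angular/package.json has the form `"typescript": "~2.3.3"`, with or without the `~` or `^` prefix. bump_typescript is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Rewrite a "typescript": "2.3.3" entry (optionally ~ or ^ prefixed) to "~2.7.2".
# Goes through a temp file so it behaves the same with GNU and BSD sed.
bump_typescript() {
  local file="$1" tmp status
  tmp=$(mktemp) || return 1
  sed -E 's/("typescript"[[:space:]]*:[[:space:]]*)"[~^]?2\.3\.3"/\1"~2.7.2"/' "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Example: `bump_typescript ui/angular/package.json && grep '"typescript"' ui/angular/package.json`.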
6.6.3 Build

Run the following command to start the build (tests are skipped):

[root@hadoop001 griffin]# mvn -Dmaven.test.skip=true clean install
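Before copying the jars, it can help to confirm that the build actually produced them. In this sketch, the jar names assume version 0.5.0, matching the copy commands in section 7. check_griffin_artifacts is hypothetical:

```shell
#!/usr/bin/env bash
# check_griffin_artifacts SRC_DIR: verify the service and measure jars exist and are non-empty.
check_griffin_artifacts() {
  local src="$1" jar status=0
  for jar in service/target/service-0.5.0.jar measure/target/measure-0.5.0.jar; do
    if [ -s "$src/$jar" ]; then
      echo "ok: $jar"
    else
      echo "missing or empty: $src/$jar" >&2
      status=1
    fi
  done
  return "$status"
}
```

Example: `check_griffin_artifacts /opt/software/griffin/griffin-griffin-0.5.0/griffin`.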

7. Start Griffin

Create a directory for the jars produced by the build:

[root@hadoop001 griffin]# mkdir /opt/griffin

[root@hadoop001 griffin]# cd /opt/griffin

[root@hadoop001 griffin]# cp /opt/software/griffin/griffin-griffin-0.5.0/griffin/service/target/service-0.5.0.jar /opt/griffin/griffin-service.jar # note: the jar is renamed while copying

[root@hadoop001 griffin]# cp /opt/software/griffin/griffin-griffin-0.5.0/griffin/measure/target/measure-0.5.0.jar /opt/griffin/griffin-measure.jar # note: the jar is renamed while copying

Upload the measure jar to HDFS:

[root@hadoop001 griffin]# hadoop fs -put /opt/griffin/griffin-measure.jar /griffin

[root@hadoop001 griffin]# hadoop fs -ls /griffin

Found 3 items

drwxr-xr-x - root supergroup 0 2020-08-18 15:16 /griffin/checkpoint

-rw-r--r-- 3 root supergroup 30720153 2020-08-20 18:55 /griffin/griffin-measure.jar

drwxr-xr-x - root supergroup 0 2020-08-20 19:00 /griffin/persist
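The "file" entry of sparkProperties.json must point at this jar. The sketch below extracts that URI from the file and tests it with `hadoop fs -test -e`. The line-based sed match assumes that "file" and its value sit on one line. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Print the "file" URI from a sparkProperties.json ("files" is not matched).
spark_props_file_uri() {
  sed -n 's/.*"file"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Check that the jar referenced by sparkProperties.json exists in HDFS.
check_measure_jar() {
  local uri
  uri=$(spark_props_file_uri "$1")
  [ -n "$uri" ] || { echo "no \"file\" entry in $1" >&2; return 1; }
  hadoop fs -test -e "$uri" && echo "found: $uri"
}
```

Example: `check_measure_jar /opt/software/griffin/griffin-griffin-0.5.0/griffin/service/src/main/resources/sparkProperties.json`.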

Start Griffin:

[root@hadoop001 griffin]# nohup /usr/java/jdk1.8.0_181-cloudera/bin/java -jar /opt/griffin/griffin-service.jar>/opt/griffin/service.out 2>&1 &

This command creates service.out in /opt/griffin, which holds the Griffin log.
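Startup takes a while, so rather than checking once, you can poll the service until it answers. This sketch assumes the service responds with HTTP 200 on its root URL, using port 8090 from application.properties. wait_for_http is a hypothetical helper:

```shell
#!/usr/bin/env bash
# wait_for_http URL [ATTEMPTS] [INTERVAL]: poll URL until it returns HTTP 200.
wait_for_http() {
  local url="$1" attempts="${2:-30}" interval="${3:-5}" i code
  for ((i = 1; i <= attempts; i++)); do
    # curl prints 000 as the status code when the connection fails.
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    if [ "$code" = "200" ]; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    if (( i < attempts )); then sleep "$interval"; fi
  done
  echo "no HTTP 200 from $url after $attempts attempt(s)" >&2
  return 1
}
```

Example: `wait_for_http http://hadoop001:8090/ 30 5 || tail -n 50 /opt/griffin/service.out`.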
