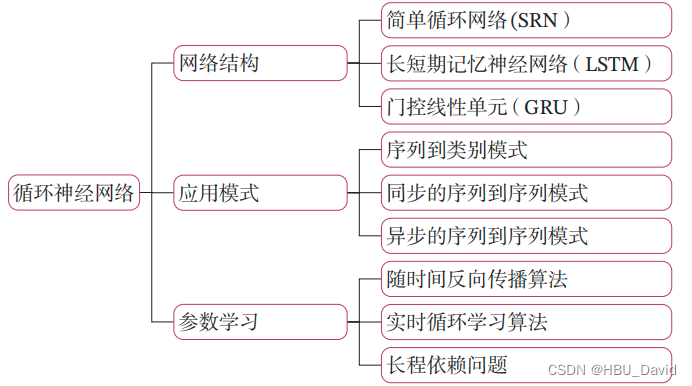


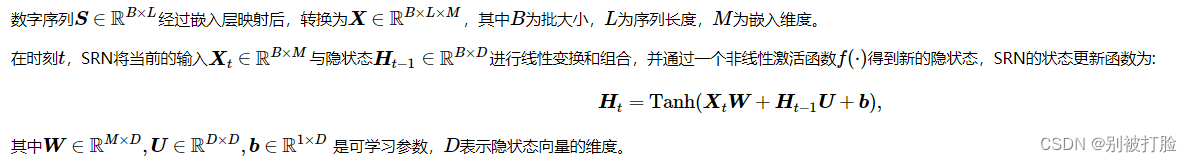


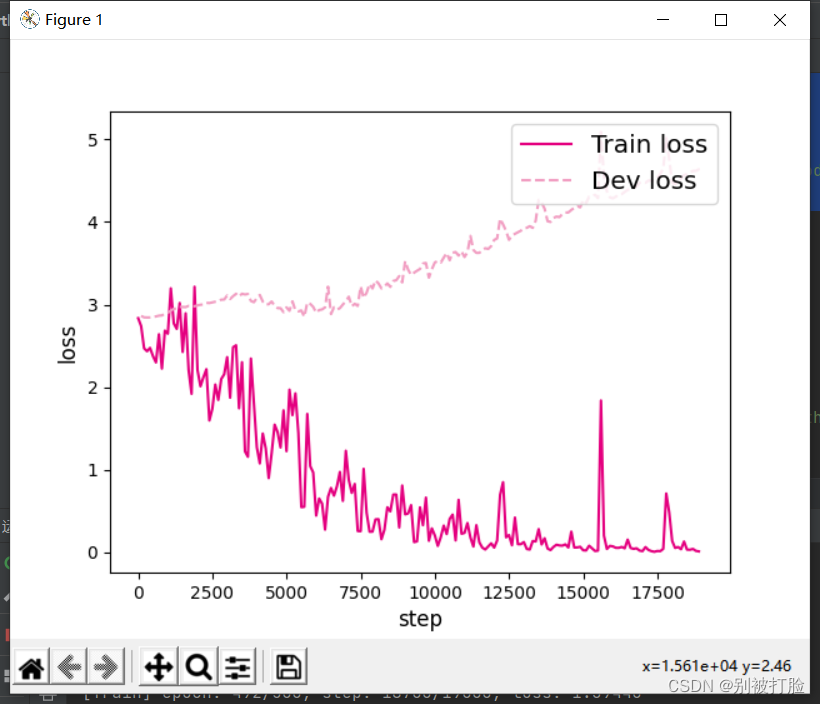

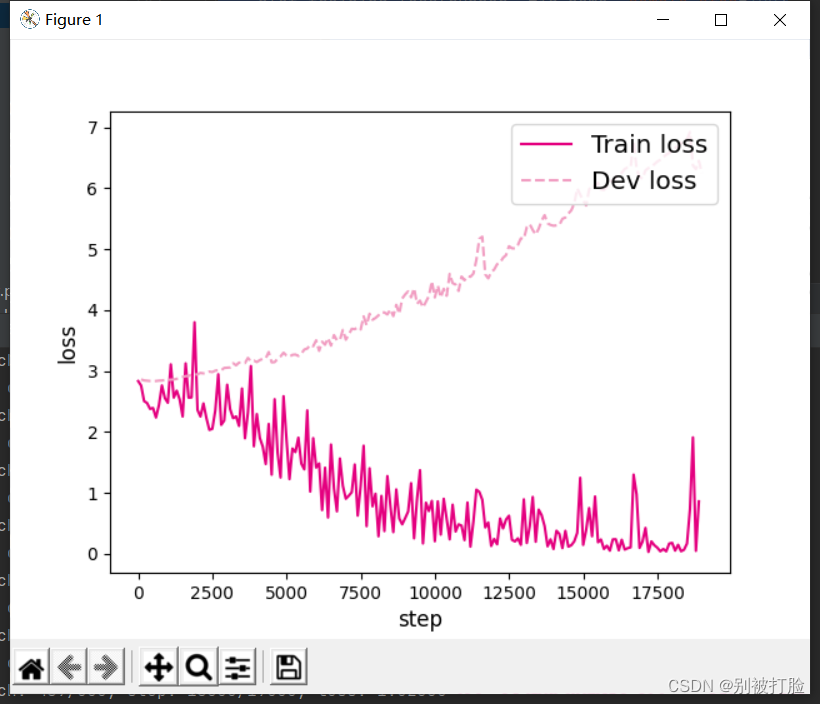

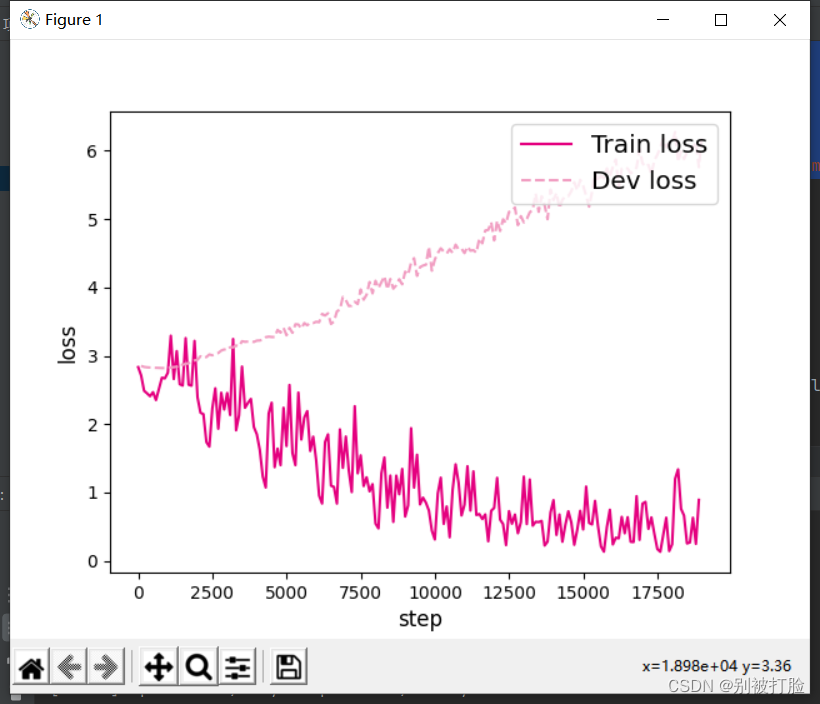

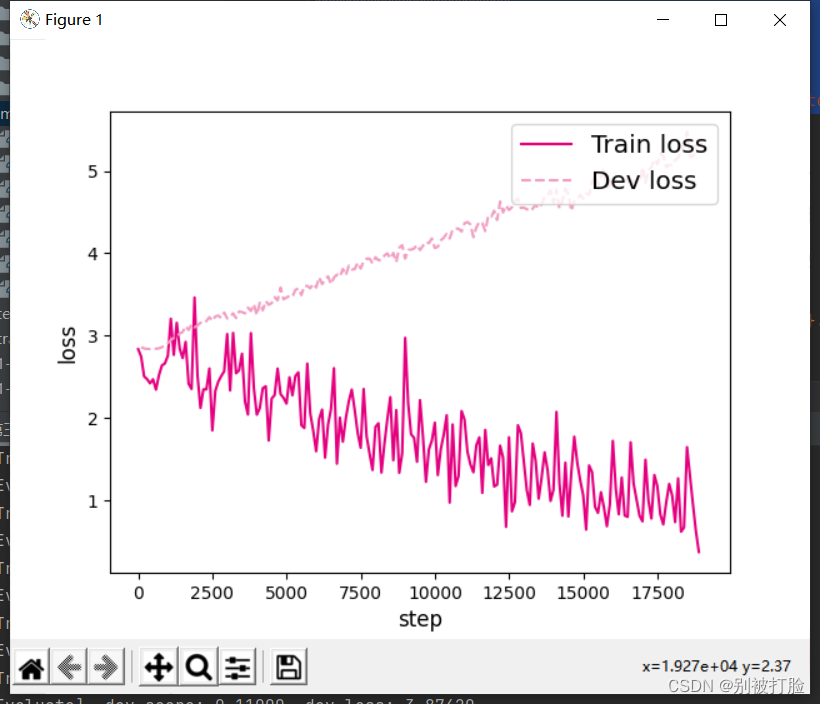

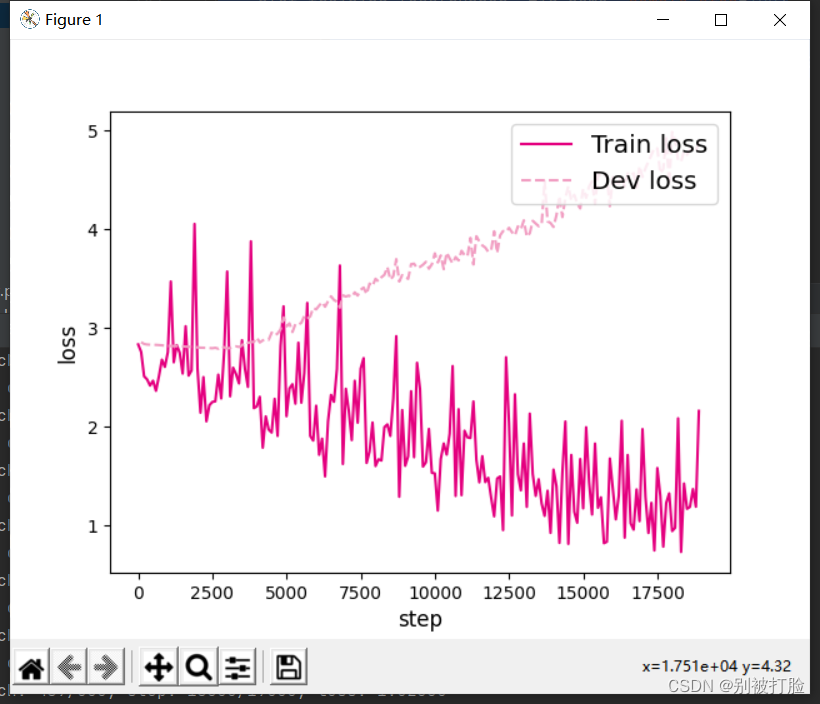

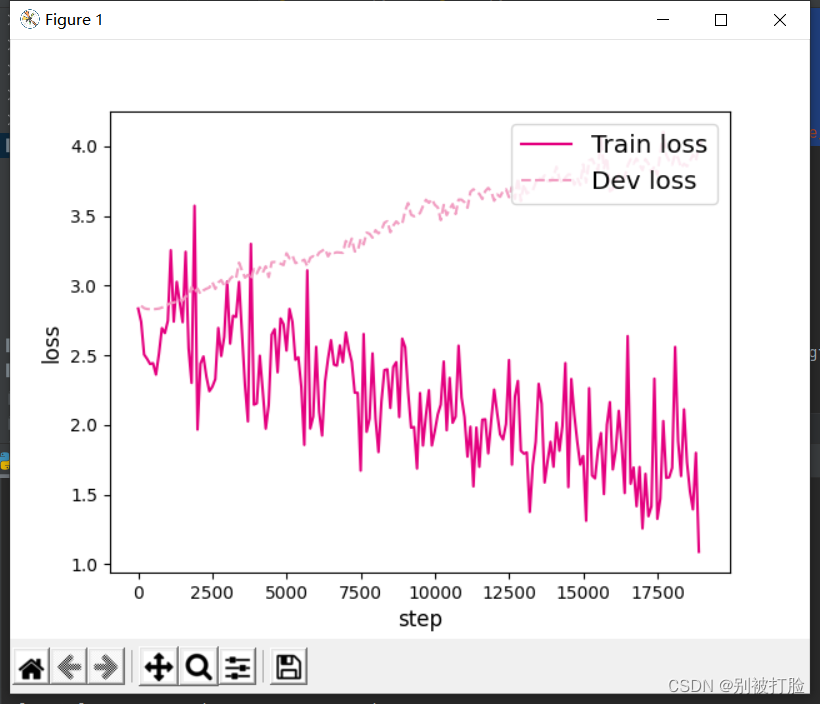

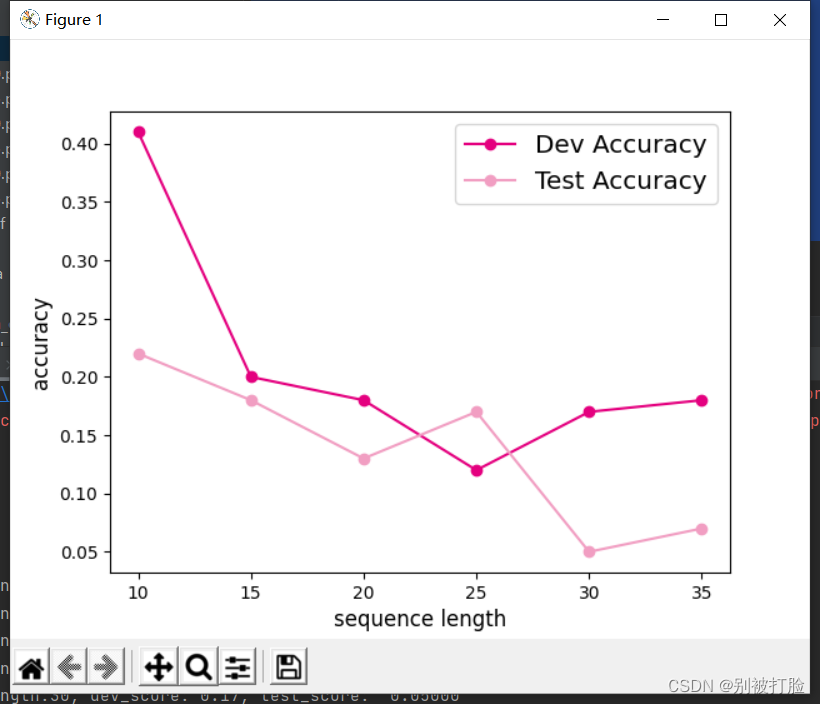


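Preface

I went into a lot of detail this time, especially in the residual-connection part, where I explain why each change was made. Working through it, I finally understood that a residual connection is a general idea rather than one specific module.

If anything here is poorly written, I would be grateful for corrections from my teacher and from more experienced readers.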

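A recurrent neural network (RNN) is a class of neural networks with short-term memory.

In an RNN, a neuron can receive information not only from other neurons but also from itself, which gives the network a looped structure.

Compared with feedforward networks, RNNs are closer to the structure of biological neural networks.

RNNs are now widely used in tasks such as speech recognition, language modelling and natural language generation.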


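This chapter has two main parts:

- Model walkthrough: introduces the principles of the classic recurrent neural network. To better understand the long-range dependency problem, we design a simple digit-sum task to test the memory of the simple recurrent network. The long-range dependency problem splits into two cases, exploding gradients and vanishing gradients. For exploding gradients, we reproduce the phenomenon in a simple recurrent network and try to fix it. For vanishing gradients, one effective remedy is to change the model, so we also implement a long short-term memory (LSTM) network and check whether it eases the long-range dependency problem.

- Case study: text classification with a bidirectional LSTM, including practical points such as how to pad sequence data, how to turn text into vector representations, and how to mask the padded positions.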

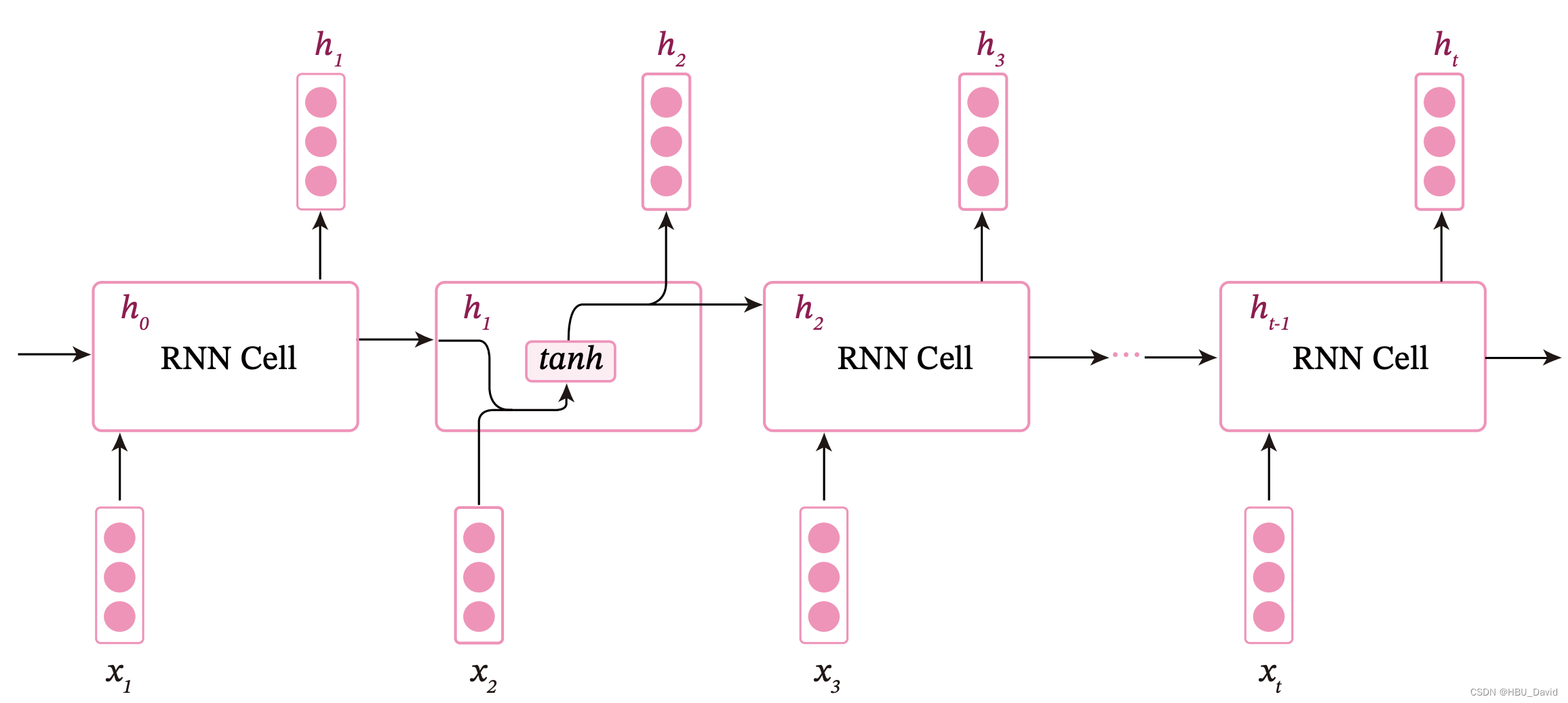

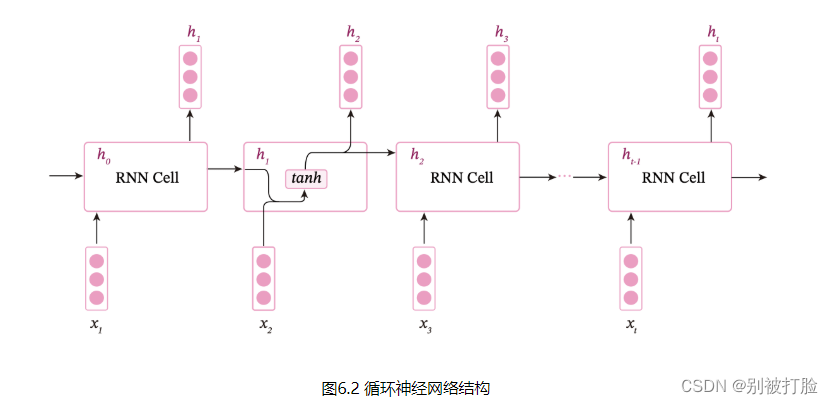

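Recurrent neural networks are well suited to sequence data: by using neurons with self-feedback, they can process sequences of arbitrary length. Given an input sequence $[x_0, x_1, x_2, \dots]$, an RNN scans it from left to right and repeatedly applies the same composition function $f(\cdot)$ to process the temporal information. This function is also called the RNN cell. At each time step $t$, the network takes the input $x_t \in \mathbb{R}^M$ together with the previous hidden state $h_{t-1} \in \mathbb{R}^D$ and computes the new hidden state $h_t$:

$$h_t = f(h_{t-1}, x_t)$$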


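where $h_0 = 0$ and $f(\cdot)$ is a nonlinear function.

The parameters of an RNN can be learned by gradient descent. As with feedforward networks, we can either compute the gradients by hand efficiently with the backpropagation through time (BPTT) algorithm, or use automatic differentiation and let the computation graph produce them.

RNNs are considered Turing complete: a fully connected RNN can approximately solve any computable problem. In theory an RNN can capture dependencies between states that are far apart in time. In practice, however, the way the network is implemented and trained leads to exploding or vanishing gradients, so an RNN usually learns only short-term dependencies and struggles to model long-distance ones. This is known as the long-term dependencies problem.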
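To make the vanishing and exploding cases concrete, the sketch below uses a one-dimensional recurrence $h_t = \tanh(u h_{t-1} + x_t)$. This is an illustrative simplification, not the model used in the experiments, and the function name `grad_through_time` is made up for this example. It multiplies the per-step derivatives $u(1 - h_t^2)$ to obtain $\partial h_T / \partial h_0$.

```python
import math

def grad_through_time(u, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(u * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(u * h + x)
        # Chain rule: d h_t / d h_{t-1} = u * (1 - h_t^2)
        grad *= u * (1.0 - h * h)
    return grad

for T in (5, 15, 30):
    zeros = [0.0] * T  # with zero inputs h stays at 0, so the gradient is u**T
    print(T, grad_through_time(0.5, zeros), grad_through_time(1.5, zeros))
```

With $|u| < 1$ the product shrinks geometrically as the sequence gets longer (vanishing gradient); with $|u| > 1$ and unsaturated units it grows instead (exploding gradient).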

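6.1 Memory Experiment for Recurrent Neural Networks

A simple implementation of the recurrent neural network is the simple recurrent network (SRN).

Let the vector $x_t \in \mathbb{R}^M$ be the network input at time $t$ and $h_t \in \mathbb{R}^D$ the hidden-layer state (the activations of the hidden neurons). Then $h_t$ depends not only on the current input $x_t$ but also on the previous hidden state $h_{t-1}$. The SRN update at time $t$ is

$$h_t = f(W x_t + U h_{t-1} + b),$$

where $h_t$ is the hidden state vector, $U \in \mathbb{R}^{D \times D}$ is the state-state weight matrix, $W \in \mathbb{R}^{D \times M}$ is the state-input weight matrix, and $b \in \mathbb{R}^D$ is the bias vector.

Figure 6.2 shows a recurrent neural network unrolled over time.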
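As a small worked instance of the update rule, the sketch below applies $h_t = \tanh(W x_t + U h_{t-1} + b)$ for two time steps in plain Python. The helper names (`matvec`, `srn_step`) and the tiny two-dimensional parameter values are chosen only for illustration.

```python
import math

def matvec(A, v):
    """Multiply matrix A (a list of rows) by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def srn_step(W, U, b, x_t, h_prev):
    """One SRN update: h_t = tanh(W x_t + U h_{t-1} + b)."""
    Wx, Uh = matvec(W, x_t), matvec(U, h_prev)
    return [math.tanh(wx + uh + bi) for wx, uh, bi in zip(Wx, Uh, b)]

# Illustrative parameters with M = D = 2
W = [[0.1, 0.1], [0.2, 0.2]]   # state-input weights, D x M
U = [[0.0, 0.1], [0.1, 0.0]]   # state-state weights, D x D
b = [0.1, 0.1]

h = [0.0, 0.0]                 # h_0 = 0
for x_t in ([1.0, 0.0], [0.0, 2.0]):
    h = srn_step(W, U, b, x_t, h)
print([round(v, 4) for v in h])
```

Only the final `h` is kept here, which mirrors using the last hidden state as the summary of the whole sequence.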

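The simple recurrent network suffers from the long-range dependency problem during parameter learning: it has difficulty modelling dependencies between states separated by long intervals. To test its memory, this section builds a digit-sum task.

The input of the digit-sum task is a string of digits. The first two positions hold digits from 0 to 9 and the remaining positions are filled randomly (mostly with 0). The prediction target is the sum of the first two digits. Figure 6.3 shows digit sequences of length 10.

The higher the accuracy a network keeps as the sequences get longer, the better its memory. We can therefore build datasets of different lengths and compare the SRN's performance across them to test how well it handles long-range dependencies.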

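6.1.1 Building the Dataset

We first build DigitSum, a digit-prediction dataset with several sequence lengths.

6.1.1.1 Dataset Generation Function

In this task the first two digits of every input sequence lie in the range 0 to 9, so the number of combinations is fixed. We can therefore enumerate all combinations of the first two digits and pad the rest with 0 up to a fixed length. To add diversity, we then randomly pick one of the zero positions in each generated sequence and replace it with a random digit from 0 to 9, which also increases the number of samples.

The value of k sets how many randomly augmented sequences are generated from each base sample. For a given length, the training, validation and test sets are generated together: k = 3 is used for the training set and k = 1 for the validation and test sets. The code is as follows: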

    # coding=gbk
    import os
    import random
    import time

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.nn.init import xavier_uniform
    from torch.utils.data import Dataset
    import matplotlib.pyplot as plt

    from nndl import Accuracy, RunnerV3

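The packages used are the ones imported above. The `os` module is the standard Python module for working with files and directories and provides a rich set of functions for them. Also pay attention to encodings: the script declares an encoding for the source file, and the data files are written and read as UTF-8.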

    # Fix the random seeds
    random.seed(0)
    np.random.seed(0)

    def generate_data(length, k, save_path):
        if length < 3:
            raise ValueError("The length of data should be greater than 2.")
        if k <= 0:
            raise ValueError("k should be greater than 0.")
        # Build 100 sequences of the given length; all positions after the first two are 0 for now
        base_examples = []
        for n1 in range(0, 10):
            for n2 in range(0, 10):
                seq = [n1, n2] + [0] * (length - 2)
                label = n1 + n2
                base_examples.append((seq, label))

        examples = []
        # Data augmentation: generate k samples from every base example
        for base_example in base_examples:
            for _ in range(k):
                # Randomly choose the position to replace and the replacement digit
                idx = np.random.randint(2, length)
                val = np.random.randint(0, 10)
                # Replace the zero at that position
                seq = base_example[0].copy()
                label = base_example[1]
                seq[idx] = val
                examples.append((seq, label))

        # Create the target directory if needed, then save the augmented data
        save_dir = os.path.dirname(save_path)
        if save_dir:
            os.makedirs(save_dir, exist_ok=True)
        with open(save_path, "w", encoding="utf-8") as f:
            for example in examples:
                # Convert the data to strings so they can be written out
                seq = [str(e) for e in example[0]]
                label = str(example[1])
                line = " ".join(seq) + "\t" + label + "\n"
                f.write(line)

        print(f"generate data to: {save_path}.")

    # Sequence lengths to generate
    lengths = [5, 10, 15, 20, 25, 30, 35]
    for length in lengths:
        # Training data of this length
        save_path = f"./datasets/{length}/train.txt"
        k = 3
        generate_data(length, k, save_path)
        # Validation data of this length
        save_path = f"./datasets/{length}/dev.txt"
        k = 1
        generate_data(length, k, save_path)
        # Test data of this length
        save_path = f"./datasets/{length}/test.txt"
        k = 1
        generate_data(length, k, save_path)

Output:

generate data to: ./datasets/5/train.txt.

generate data to: ./datasets/5/dev.txt.

generate data to: ./datasets/5/test.txt.

generate data to: ./datasets/10/train.txt.

generate data to: ./datasets/10/dev.txt.

generate data to: ./datasets/10/test.txt.

generate data to: ./datasets/15/train.txt.

generate data to: ./datasets/15/dev.txt.

generate data to: ./datasets/15/test.txt.

generate data to: ./datasets/20/train.txt.

generate data to: ./datasets/20/dev.txt.

generate data to: ./datasets/20/test.txt.

generate data to: ./datasets/25/train.txt.

generate data to: ./datasets/25/dev.txt.

generate data to: ./datasets/25/test.txt.

generate data to: ./datasets/30/train.txt.

generate data to: ./datasets/30/dev.txt.

generate data to: ./datasets/30/test.txt.

generate data to: ./datasets/35/train.txt.

generate data to: ./datasets/35/dev.txt.

generate data to: ./datasets/35/test.txt.

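One thing to watch out for: `open(..., "w")` does not create missing parent directories, so every `./datasets/<length>/` directory has to exist before its files are written, whether it is created by hand or by the script.

6.1.1.2 Loading and Splitting the Data

For convenience, this experiment generates seven datasets in advance, with lengths 5, 10, 15, 20, 25, 30 and 35, stored under the "./datasets" directory, so they can be loaded directly. The code is as follows: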

    # Load the data
    def load_data(data_path):
        def load_split(name):
            # Read one split (train, dev or test) from <data_path>/<name>.txt
            examples = []
            path = os.path.join(data_path, f"{name}.txt")
            with open(path, "r", encoding="utf-8") as f:
                for line in f:
                    # Parse one line into the digit sequence seq and its label
                    items = line.strip().split("\t")
                    seq = [int(i) for i in items[0].split(" ")]
                    label = int(items[1])
                    examples.append((seq, label))
            return examples

        return load_split("train"), load_split("dev"), load_split("test")

    # Length of the dataset to load
    length = 5
    # Directory that holds the dataset of this length
    data_path = f"./datasets/{length}"
    # Load the dataset
    train_examples, dev_examples, test_examples = load_data(data_path)
    print("dev example:", dev_examples[:2])
    print("Training set size:", len(train_examples))
    print("Validation set size:", len(dev_examples))
    print("Test set size:", len(test_examples))

Output:

dev example: [([0, 0, 6, 0, 0], 0), ([0, 1, 0, 0, 8], 1)]

Training set size: 300

Validation set size: 100

Test set size: 100
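The split sizes printed above follow directly from the construction: 10 × 10 base pairs, times k augmented copies each. The quick check below, in plain Python with illustrative variable names, also shows that the labels range over 0 to 18, which gives 19 distinct classes.

```python
# Enumerate every (n1, n2) pair of leading digits and its label n1 + n2
base_labels = [n1 + n2 for n1 in range(10) for n2 in range(10)]

num_base = len(base_labels)            # 100 base sequences
train_size = num_base * 3              # k = 3 for the training set
dev_size = num_base * 1                # k = 1 for the validation and test sets
num_classes = len(set(base_labels))    # distinct sums 0..18

print(train_size, dev_size, num_classes)
```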

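6.1.1.3 Building the Dataset Class

To make optimisation with gradient descent convenient, we wrap the DigitSum data in a Dataset class. The `__getitem__` method reads an example by index and converts it to tensors. The code is as follows: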

    class DigitSumDataset(Dataset):
        def __init__(self, data):
            self.data = data

        def __getitem__(self, idx):
            example = self.data[idx]
            seq = torch.tensor(example[0], dtype=torch.int64)
            label = torch.tensor(example[1], dtype=torch.int64)
            return seq, label

        def __len__(self):
            return len(self.data)

6.1.2 Model Construction

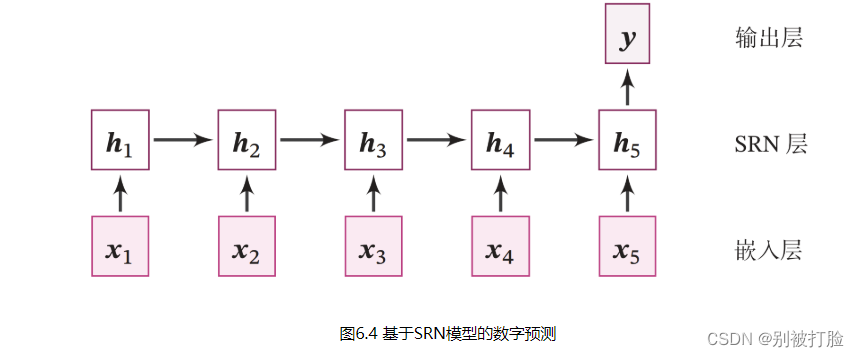

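Figure 6.4 shows the structure of the model used for the digit-sum task with an SRN.

The model consists of the following parts:

(1) Embedding layer: vectorises the input digit sequence, i.e. maps each digit to a vector;

(2) SRN layer: takes the vector sequence, updates the recurrent unit, and uses the hidden state at the last time step as the representation of the whole sequence;

(3) Output layer: a linear layer that outputs the classification result.

6.1.2.1 Embedding Layer

The input samples in this task are digit sequences. To represent the digits better, each digit is mapped to an embedding vector, in which every dimension can describe some property of that digit. Because a vector can carry more information about the digit than the raw value, using vectors gives the model more fitting capacity on the digit-sum task.

First we build an embedding matrix $W \in \mathbb{R}^{10 \times M}$, whose $i$-th row is the embedding vector of digit $i$ and whose embedding dimension is $M$, as shown in Figure 6.5.

Given a batch of digit sequences $S \in \mathbb{R}^{B \times L}$, where $B$ is the batch size and $L$ the sequence length, a table lookup maps it to the embedding representation $X \in \mathbb{R}^{B \times L \times M}$.

Note: to stay consistent with the code, a batch of samples is represented here as a tensor of shape (number of samples × sequence length × feature dimension).

Alternatively, each digit can be represented as a 10-dimensional one-hot vector and the embedding obtained with a matrix product:

$$X = S' W,$$

where $S' \in \mathbb{R}^{B \times L \times 10}$ is the one-hot representation of the sequence $S$.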
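The equivalence between table lookup and one-hot multiplication can be checked on a toy example. This sketch uses plain Python lists and a made-up 3 × 2 embedding matrix (three symbols instead of ten) purely to keep the numbers readable; `one_hot` and `matmul` are illustrative helpers.

```python
def one_hot(index, num_classes):
    """Return a one-hot row vector for the given index."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

# Toy embedding matrix: row i is the embedding of symbol i
W = [[0.1, 0.2],
     [0.3, 0.4],
     [0.5, 0.6]]
seq = [2, 0, 1]

by_lookup = [W[i] for i in seq]                            # index-based lookup
by_matmul = matmul([one_hot(i, len(W)) for i in seq], W)   # one-hot times W
print(by_lookup == by_matmul)
```

Both routes give the same rows; the lookup simply avoids building and multiplying the one-hot matrix.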

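Question: what goes wrong if we skip the embedding layer and feed the digits directly into the SRN layer?

The index-based embedding layer is implemented as follows: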

    class Embedding(nn.Module):
        def __init__(self, num_embeddings, embedding_dim,
                     para_attr=nn.init.xavier_uniform_):
            super(Embedding, self).__init__()
            # Define the embedding matrix and initialise it in place
            W = torch.zeros(size=[num_embeddings, embedding_dim], dtype=torch.float32)
            self.W = torch.nn.Parameter(W)
            para_attr(self.W)

        def forward(self, inputs):
            # Look up the embedding vector for each index
            embs = self.W[inputs]
            return embs

    emb_layer = Embedding(10, 5)
    inputs = torch.tensor([0, 1, 2, 3])
    emb_layer(inputs)

6.1.2.2 SRN Layer

The simple recurrent network is implemented as follows:

    torch.manual_seed(0)

    # SRN model
    class SRN(nn.Module):
        def __init__(self, input_size, hidden_size, W_attr=None, U_attr=None, b_attr=None):
            super(SRN, self).__init__()
            # Dimension of the embedding vectors
            self.input_size = input_size
            # Dimension of the hidden state
            self.hidden_size = hidden_size
            # Parameter W, shape input_size x hidden_size (zeros unless W_attr is given)
            if W_attr is None:
                W = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)
            else:
                W = torch.as_tensor(W_attr, dtype=torch.float32).clone().detach()
            self.W = torch.nn.Parameter(W)
            # Parameter U, shape hidden_size x hidden_size (zeros unless U_attr is given)
            if U_attr is None:
                U = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)
            else:
                U = torch.as_tensor(U_attr, dtype=torch.float32).clone().detach()
            self.U = torch.nn.Parameter(U)
            # Parameter b, shape 1 x hidden_size (zeros unless b_attr is given)
            if b_attr is None:
                b = torch.zeros(size=[1, hidden_size], dtype=torch.float32)
            else:
                b = torch.as_tensor(b_attr, dtype=torch.float32).clone().detach()
            self.b = torch.nn.Parameter(b)

        # Initial hidden state
        def init_state(self, batch_size):
            hidden_state = torch.zeros(size=[batch_size, self.hidden_size], dtype=torch.float32)
            return hidden_state

        # Forward computation
        def forward(self, inputs, hidden_state=None):
            # inputs: input data with shape batch_size x seq_len x input_size
            batch_size, seq_len, input_size = inputs.shape
            # Initial hidden state with shape batch_size x hidden_size
            if hidden_state is None:
                hidden_state = self.init_state(batch_size)
            # Run the recurrence over the time steps
            for step in range(seq_len):
                # Input at the current step, shape batch_size x input_size
                step_input = inputs[:, step, :]
                # New hidden state at the current step, shape batch_size x hidden_size
                hidden_state = torch.tanh(torch.matmul(step_input, self.W) + torch.matmul(hidden_state, self.U) + self.b)
            return hidden_state

Note: only the output vector at the last time step of the simple recurrent network is kept here.
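One consequence of the zero defaults is worth spelling out: with `W` and `U` both zero, the hidden state is $\tanh(b) = 0$ at every step for every input, so an untrained model produces the same output for all sequences. As a hedged alternative (an assumption, not what the code above does), the parameters could be drawn with Xavier-uniform initialisation. `make_srn_params` and `srn_forward` are hypothetical helpers written for this sketch.

```python
import torch
import torch.nn as nn

def make_srn_params(input_size, hidden_size, xavier=True):
    """Create W, U, b for an SRN; W and U stay zero when xavier=False."""
    W = nn.Parameter(torch.zeros(input_size, hidden_size))
    U = nn.Parameter(torch.zeros(hidden_size, hidden_size))
    b = nn.Parameter(torch.zeros(1, hidden_size))
    if xavier:
        nn.init.xavier_uniform_(W)
        nn.init.xavier_uniform_(U)
    return W, U, b

def srn_forward(inputs, W, U, b):
    """inputs: batch_size x seq_len x input_size; returns the last hidden state."""
    h = torch.zeros(inputs.shape[0], U.shape[0])
    for step in range(inputs.shape[1]):
        h = torch.tanh(inputs[:, step, :] @ W + h @ U + b)
    return h

torch.manual_seed(0)
x = torch.randn(2, 3, 4)  # two different random sequences
h_zero = srn_forward(x, *make_srn_params(4, 5, xavier=False))
h_xavier = srn_forward(x, *make_srn_params(4, 5, xavier=True))
print(torch.allclose(h_zero[0], h_zero[1]))      # zero init: both rows are identical
print(torch.allclose(h_xavier[0], h_xavier[1]))  # Xavier init: the rows differ
```

Whether this changes the final accuracy is an empirical question that the sketch does not answer; it only shows that the hidden state starts out input-dependent.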

    # Initialise the parameters and run
    U_attr = [[0.0, 0.1], [0.1, 0.0]]
    b_attr = [[0.1, 0.1]]
    W_attr = [[0.1, 0.2], [0.1, 0.2]]
    srn = SRN(2, 2, W_attr=W_attr, U_attr=U_attr, b_attr=b_attr)
    inputs = torch.tensor([[[1, 0], [0, 2]]], dtype=torch.float32)
    hidden_state = srn(inputs)
    print("hidden_state", hidden_state)

Output:

hidden_state tensor([[0.3177, 0.4775]], grad_fn=<TanhBackward0>)

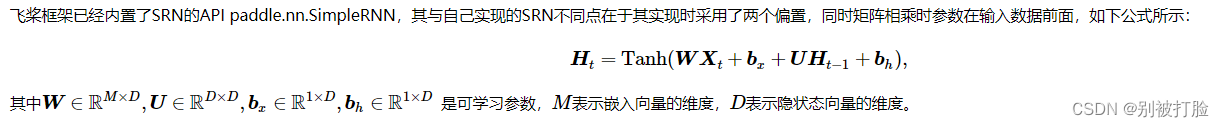

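In addition, the built-in SRN API (`torch.nn.RNN` in this PyTorch port) returns two values after the forward pass: the sequence of output vectors and the hidden state at the last time step. Because the built-in implementation supports bidirectional and multi-layer SRNs, the returned tensors carry that information.

The sequence output `outputs` is the output of the last SRN layer. Its shape is [batch_size, seq_len, num_directions * hidden_size] when the layer is created with `batch_first=True`, and [seq_len, batch_size, num_directions * hidden_size] with the default `batch_first=False`. The final hidden state has shape [num_layers * num_directions, batch_size, hidden_size].

Here we print the results returned by our own SRN and by the built-in one. The variable names `paddle_srn`, `paddle_outputs` and `paddle_hidden_state` are kept from the original Paddle version of the code. The code is as follows.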

    # Create a random array as test data, with shape batch_size x seq_len x input_size
    batch_size, seq_len, input_size = 8, 20, 32
    inputs = torch.randn(size=[batch_size, seq_len, input_size])
    # Set the model's hidden_size
    hidden_size = 32
    paddle_srn = nn.RNN(input_size, hidden_size)
    self_srn = SRN(input_size, hidden_size)
    self_hidden_state = self_srn(inputs)
    paddle_outputs, paddle_hidden_state = paddle_srn(inputs)
    print("self_srn hidden_state: ", self_hidden_state.shape)
    print("paddle_srn outpus:", paddle_outputs.shape)
    print("paddle_srn hidden_state:", paddle_hidden_state.shape)

Output:

self_srn hidden_state: torch.Size([8, 32])

paddle_srn outpus: torch.Size([8, 20, 32])

paddle_srn hidden_state: torch.Size([1, 20, 32])

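Our own SRN does not model multiple layers, so its output has no layer dimension and its shape is [8, 32]. The built-in `nn.RNN` was instantiated with its defaults, which give a single-layer unidirectional network that expects input laid out as [seq_len, batch_size, input_size] (`batch_first=False`). The [8, 20, 32] input is therefore read as 8 time steps of a batch of 20, which is why the output sequence has shape [8, 20, 32] and the final hidden state has shape [1, 20, 32] rather than [1, 8, 32].

Next we compare the output values of our own SRN with those of the built-in one. We first instantiate the built-in SRN (for the comparison only one bias is meant to be kept, with the input bias $b_x$ set to 0), then extract its parameters and use them to initialise our own SRN, so that both start from identical parameters.

In the experiment we first define the input data `inputs`, pass it to both the built-in SRN and our own SRN, and then compare the hidden state vectors they output. The code is as follows: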

    torch.manual_seed(0)
    # Create a random array as test data, with shape batch_size x seq_len x input_size
    batch_size, seq_len, input_size, hidden_size = 2, 5, 10, 10
    inputs = torch.randn(size=[batch_size, seq_len, input_size])
    # Zero tensor intended for the input bias b_x
    bx_attr = torch.nn.Parameter(torch.zeros([hidden_size, ]))
    paddle_srn = nn.RNN(input_size, hidden_size)
    # This registers an attribute named bias_ih_l; the RNN's own input bias is bias_ih_l0
    paddle_srn.bias_ih_l = bx_attr
    # Take the parameters of paddle_srn and use them to initialise our SRN
    W_attr = torch.nn.Parameter(paddle_srn.weight_ih_l0.T)
    U_attr = torch.nn.Parameter(paddle_srn.weight_hh_l0.T)
    b_attr = torch.nn.Parameter(paddle_srn.bias_hh_l0)
    self_srn = SRN(input_size, hidden_size, W_attr=W_attr, U_attr=U_attr, b_attr=b_attr)
    # Run the forward pass, get the hidden states and print them
    self_hidden_state = self_srn(inputs)
    paddle_outputs, paddle_hidden_state = paddle_srn(inputs)
    print("paddle SRN:\n", paddle_hidden_state.detach().numpy().squeeze(0))
    print("self SRN:\n", self_hidden_state.detach().numpy())

Output:

paddle SRN:

[[-0.7879561 0.30351916 -0.8180367 -0.6886782 -0.04480647 0.21398577

0.7151095 -0.6950615 0.15764976 0.44665754]

[ 0.09500529 -0.20703138 0.41871697 -0.96180415 -0.67065084 0.3635481

0.9330359 -0.6328799 0.29817006 0.45230556]

[ 0.37487072 0.50830954 -0.70687103 0.37766257 0.08774418 0.3540142

-0.3860005 0.4884959 0.440492 0.27764338]

[-0.4694892 0.03494496 -0.8948485 0.68928754 -0.06903987 -0.11051217

-0.23742953 -0.6187381 -0.15267557 0.5742124 ]

[-0.05243767 -0.00631889 0.15062073 -0.5604651 -0.01922686 0.20319594

0.7351512 -0.4121003 0.07413491 0.6317186 ]]

self SRN:

[[ 0.12710479 0.10965557 0.30339125 -0.8991724 0.17633936 -0.30394837

-0.49593294 0.84559697 0.8441264 0.06168123]

[ 0.01825176 -0.31220886 -0.09947876 -0.19136088 -0.10779942 -0.09303827

0.33987308 0.0207502 -0.7328755 0.4166944 ]]
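A like-for-like comparison needs `nn.RNN` to read the input batch-first and needs its input bias zeroed. The sketch below is one way to set that up under those two assumptions; `srn_forward` is an illustrative stand-in for the hand-written SRN, not part of the original code.

```python
import torch
import torch.nn as nn

def srn_forward(inputs, W, U, b):
    """Hand-written SRN: inputs is batch_size x seq_len x input_size."""
    h = torch.zeros(inputs.shape[0], U.shape[0])
    for step in range(inputs.shape[1]):
        h = torch.tanh(inputs[:, step, :] @ W + h @ U + b)
    return h

torch.manual_seed(0)
batch_size, seq_len, input_size, hidden_size = 2, 5, 10, 10
inputs = torch.randn(batch_size, seq_len, input_size)

# batch_first=True makes nn.RNN read the input as batch x seq_len x features
builtin = nn.RNN(input_size, hidden_size, batch_first=True)
with torch.no_grad():
    builtin.bias_ih_l0.zero_()  # keep a single bias, as the comparison intends

    # Reuse the built-in weights, transposed to the x @ W convention
    W = builtin.weight_ih_l0.T.clone()
    U = builtin.weight_hh_l0.T.clone()
    b = builtin.bias_hh_l0.clone()

    _, builtin_hidden = builtin(inputs)
    own_hidden = srn_forward(inputs, W, U, b)

max_diff = (builtin_hidden.squeeze(0) - own_hidden).abs().max().item()
print("max abs difference:", max_diff)
```

With the layout and bias aligned, both hidden states have shape 2 × 10 and any remaining difference should be at the level of floating-point rounding.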

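Note that the two arrays printed above do not actually match, and even their shapes differ (5 × 10 for the built-in SRN, 2 × 10 for our own). There are two reasons. `nn.RNN` defaults to `batch_first=False`, so the [2, 5, 10] input is read as 2 time steps of a batch of 5. And the assignment to `bias_ih_l` does not touch the RNN's real input bias, which is named `bias_ih_l0`, so that bias is never zeroed. Once both points are corrected, the two implementations should agree up to floating-point error. We can also compare the running speed of the two. The code is as follows: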

    # Create a random array as test data, with shape batch_size x seq_len x input_size
    batch_size, seq_len, input_size, hidden_size = 2, 5, 10, 10
    inputs = torch.randn(size=[batch_size, seq_len, input_size])

    # Instantiate the models
    self_srn = SRN(input_size, hidden_size)
    paddle_srn = nn.RNN(input_size, hidden_size)

    # Time our own SRN
    model_time = 0
    for i in range(100):
        start_time = time.time()
        out = self_srn(inputs)
        # The first 10 runs are warm-up and are excluded from the statistics
        if i < 10:
            continue
        end_time = time.time()
        model_time += (end_time - start_time)
    avg_model_time = model_time / 90
    print('self_srn speed:', avg_model_time, 's')

    # Time the built-in SRN
    model_time = 0
    for i in range(100):
        start_time = time.time()
        out = paddle_srn(inputs)
        # The first 10 runs are warm-up and are excluded from the statistics
        if i < 10:
            continue
        end_time = time.time()
        model_time += (end_time - start_time)
    avg_model_time = model_time / 90
    print('paddle_srn speed:', avg_model_time, 's')

Output:

self_srn speed: 0.0002438015407986111 s

paddle_srn speed: 0.0001108037100897895 s

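The built-in operators are implemented in C++, so the framework's SRN runs faster than our own: in this run about 0.11 ms versus 0.24 ms per forward pass, roughly a twofold difference.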

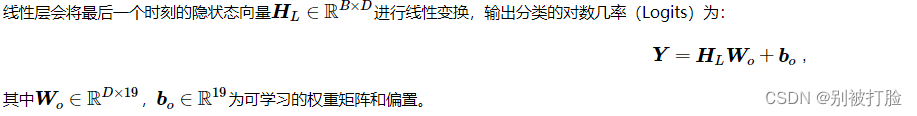

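6.1.2.3 Linear Layer

Note: in practical classification problems we usually only need the model to output the logits of each class, not the class probabilities. This requires a loss function that accepts logits directly.

The linear layer uses the `torch.nn.Linear` operator directly (`paddle.nn.Linear` in the original Paddle version).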
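To see why logits are enough, the sketch below computes the cross-entropy loss directly from logits with the log-sum-exp trick, without ever materialising the softmax probabilities. It is a plain-Python illustration with a hypothetical helper name, not the framework's implementation.

```python
import math

def cross_entropy_from_logits(logits, label):
    """Cross-entropy for one example: -log softmax(logits)[label]."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

print(cross_entropy_from_logits([0.0, 0.0], 0))          # equals log(2)
print(cross_entropy_from_logits([1000.0, 0.0, 0.0], 0))  # near 0, without overflow
```

Working in log space is also more robust than exponentiating large logits first, which is one reason loss functions are commonly designed to take logits.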

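6.1.2.4 Putting the Model Together

With the operator for each layer defined, we define a digit-sum model, Model_RNN4SeqClass, which combines the embedding layer, the SRN layer and the linear layer to perform the digit-sum task.

Specifically, Model_RNN4SeqClass receives an SRN layer instance that processes the digit sequence. In `__init__` it defines an Embedding layer, which takes the input digits as indices and outputs the corresponding vectors, and finally a linear layer defined with `nn.Linear`.

Note: to make comparison experiments easier, the SRN layer is instantiated outside the Model_RNN4SeqClass class. Normally, the operators inside a model are instantiated within the model.

The `forward` function calls the embedding layer, SRN layer and linear layer defined above to process the digit sequence, and returns the logits computed from the hidden state at the last position. The code is as follows: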

    # Digit prediction model based on an RNN
    class Model_RNN4SeqClass(nn.Module):
        def __init__(self, model, num_digits, input_size, hidden_size, num_classes):
            super(Model_RNN4SeqClass, self).__init__()
            # An instantiated RNN layer passed in from outside, e.g. SRN
            self.rnn_model = model
            # Vocabulary size
            self.num_digits = num_digits
            # Dimension of the embedding vectors
            self.input_size = input_size
            # Embedding layer
            self.embedding = Embedding(num_digits, input_size)
            # Linear layer
            self.linear = nn.Linear(hidden_size, num_classes)

        def forward(self, inputs):
            # Map the digit sequence to the corresponding vectors
            inputs_emb = self.embedding(inputs)
            # Call the RNN model
            hidden_state = self.rnn_model(inputs_emb)
            # Predict from the state at the last time step
            logits = self.linear(hidden_state)
            return logits

    # Instantiate an SRN with input_size 4 and hidden_size 5
    srn = SRN(4, 5)
    # Build a digit prediction model on top of srn
    model = Model_RNN4SeqClass(srn, 10, 4, 5, 19)
    # Create a batch of data with shape 2 x 3
    inputs = torch.tensor([[1, 2, 3], [2, 3, 4]])
    # Run the forward pass
    logits = model(inputs)
    print(logits)

Output:

tensor([[-0.2591, 0.0797, 0.0528, -0.2097, -0.1545, 0.1211, -0.3110, 0.0738,

0.1935, -0.1762, 0.3715, -0.0294, 0.2029, 0.4429, -0.1367, 0.2430,

-0.1279, -0.0653, -0.0758],

[-0.2591, 0.0797, 0.0528, -0.2097, -0.1545, 0.1211, -0.3110, 0.0738,

0.1935, -0.1762, 0.3715, -0.0294, 0.2029, 0.4429, -0.1367, 0.2430,

-0.1279, -0.0653, -0.0758]], grad_fn=<AddmmBackward0>)

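6.1.3 Model Training

6.1.3.1 Training a Digit Prediction Model for a Given Length

Training is based on the RunnerV3 class, and specifying `length` is enough to load the corresponding data. We set the hyperparameters, use the Adam optimizer with a learning rate of 0.001, instantiate the model, and compute accuracy with the Accuracy metric defined in Section 4.5.4. The Runner trains for 500 epochs. The code is as follows: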

    # Number of training epochs
    num_epochs = 500
    # Learning rate
    lr = 0.001
    # Number of distinct input digits
    num_digits = 10
    # Dimension of the digit embedding vectors
    input_size = 32
    # Dimension of the hidden state
    hidden_size = 32
    # Number of prediction classes
    num_classes = 19
    # Batch size
    batch_size = 8
    # Directory for saved models
    save_dir = "./checkpoints"

    # Run the experiment on data of the given length
    def train(length):
        print(f"\n====> Training SRN with data of length {length}.")
        # Fix the random seeds
        np.random.seed(0)
        random.seed(0)
        torch.manual_seed(0)

        # Load the data of this length
        data_path = f"./datasets/{length}"
        train_examples, dev_examples, test_examples = load_data(data_path)
        train_set, dev_set, test_set = DigitSumDataset(train_examples), DigitSumDataset(dev_examples), DigitSumDataset(test_examples)
        train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size)
        dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size)
        test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size)
        # Instantiate the model
        base_model = SRN(input_size, hidden_size)
        model = Model_RNN4SeqClass(base_model, num_digits, input_size, hidden_size, num_classes)
        # Optimizer
        optimizer = torch.optim.Adam(lr=lr, params=model.parameters())
        # Evaluation metric
        metric = Accuracy()
        # Loss function
        loss_fn = nn.CrossEntropyLoss()

        # Build the Runner from the components above
        runner = RunnerV3(model, optimizer, loss_fn, metric)

        # Train the model
        model_save_path = os.path.join(save_dir, f"best_srn_model_{length}.pdparams")
        runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=100, log_steps=100, save_path=model_save_path)

        return runner

6.1.3.2 Training on Multiple Lengths

Next, we train digit prediction models on data of length 10, 15, 20, 25, 30 and 35, and store each trained runner in the `srn_runners` dictionary.

    srn_runners = {}

    lengths = [10, 15, 20, 25, 30, 35]
    for length in lengths:
        runner = train(length)
        srn_runners[length] = runner

Output:

====> Training SRN with data of length 10.

[Train] epoch: 0/500, step: 0/19000, loss: 2.83505

C:\Users\LENOVO\PycharmProjects\pythonProject\深度学习\nndl.py:386: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

batch_correct = torch.sum(torch.tensor(preds == labels, dtype=torch.float32)).cpu().numpy()

[Train] epoch: 2/500, step: 100/19000, loss: 2.73908

[Evaluate] dev score: 0.09000, dev loss: 2.86240

[Evaluate] best accuracy performence has been updated: 0.00000 --> 0.09000

[Train] epoch: 5/500, step: 200/19000, loss: 2.47021

[Evaluate] dev score: 0.09000, dev loss: 2.84502

[Train] epoch: 7/500, step: 300/19000, loss: 2.43482

[Evaluate] dev score: 0.09000, dev loss: 2.84362

[Train] epoch: 10/500, step: 400/19000, loss: 2.47543

[Evaluate] dev score: 0.11000, dev loss: 2.84408

[Evaluate] best accuracy performence has been updated: 0.09000 --> 0.11000

[Train] epoch: 13/500, step: 500/19000, loss: 2.37544

[Evaluate] dev score: 0.10000, dev loss: 2.84784

[Train] epoch: 15/500, step: 600/19000, loss: 2.29882

[Evaluate] dev score: 0.10000, dev loss: 2.85641

[Train] epoch: 18/500, step: 700/19000, loss: 2.64025

[Evaluate] dev score: 0.10000, dev loss: 2.86549

[Train] epoch: 21/500, step: 800/19000, loss: 2.22483

[Evaluate] dev score: 0.08000, dev loss: 2.86844

[Train] epoch: 23/500, step: 900/19000, loss: 2.68438

[Evaluate] dev score: 0.08000, dev loss: 2.88898

[Train] epoch: 26/500, step: 1000/19000, loss: 2.64724

[Evaluate] dev score: 0.09000, dev loss: 2.90135

[Train] epoch: 28/500, step: 1100/19000, loss: 3.19629

[Evaluate] dev score: 0.09000, dev loss: 2.92629

[Train] epoch: 31/500, step: 1200/19000, loss: 2.77206

[Evaluate] dev score: 0.11000, dev loss: 2.94901

[Train] epoch: 34/500, step: 1300/19000, loss: 2.70552

[Evaluate] dev score: 0.13000, dev loss: 2.95129

[Evaluate] best accuracy performence has been updated: 0.11000 --> 0.13000

[Train] epoch: 36/500, step: 1400/19000, loss: 3.01894

[Evaluate] dev score: 0.11000, dev loss: 2.97218

[Train] epoch: 39/500, step: 1500/19000, loss: 2.42603

[Evaluate] dev score: 0.11000, dev loss: 2.97117

[Train] epoch: 42/500, step: 1600/19000, loss: 2.89388

[Evaluate] dev score: 0.10000, dev loss: 2.96882

[Train] epoch: 44/500, step: 1700/19000, loss: 2.20570

[Evaluate] dev score: 0.09000, dev loss: 2.98397

[Train] epoch: 47/500, step: 1800/19000, loss: 1.91950

[Evaluate] dev score: 0.12000, dev loss: 2.98254

[Train] epoch: 50/500, step: 1900/19000, loss: 3.21566

[Evaluate] dev score: 0.10000, dev loss: 2.97927

[Train] epoch: 52/500, step: 2000/19000, loss: 2.20726

[Evaluate] dev score: 0.11000, dev loss: 2.98990

[Train] epoch: 55/500, step: 2100/19000, loss: 2.01046

[Evaluate] dev score: 0.12000, dev loss: 2.99749

[Train] epoch: 57/500, step: 2200/19000, loss: 2.12048

[Evaluate] dev score: 0.10000, dev loss: 3.00084

[Train] epoch: 60/500, step: 2300/19000, loss: 2.21798

[Evaluate] dev score: 0.10000, dev loss: 3.02066

[Train] epoch: 63/500, step: 2400/19000, loss: 1.59704

[Evaluate] dev score: 0.09000, dev loss: 3.01792

[Train] epoch: 65/500, step: 2500/19000, loss: 1.73847

[Evaluate] dev score: 0.11000, dev loss: 3.02555

[Train] epoch: 68/500, step: 2600/19000, loss: 2.03223

[Evaluate] dev score: 0.10000, dev loss: 3.03289

[Train] epoch: 71/500, step: 2700/19000, loss: 1.84596

[Evaluate] dev score: 0.09000, dev loss: 3.04254

[Train] epoch: 73/500, step: 2800/19000, loss: 2.09853

[Evaluate] dev score: 0.12000, dev loss: 3.05910

[Train] epoch: 76/500, step: 2900/19000, loss: 2.15624

[Evaluate] dev score: 0.14000, dev loss: 3.06059

[Evaluate] best accuracy performence has been updated: 0.13000 --> 0.14000

[Train] epoch: 78/500, step: 3000/19000, loss: 2.36351

[Evaluate] dev score: 0.14000, dev loss: 3.10993

[Train] epoch: 81/500, step: 3100/19000, loss: 1.87227

[Evaluate] dev score: 0.14000, dev loss: 3.06657

[Train] epoch: 84/500, step: 3200/19000, loss: 2.48361

[Evaluate] dev score: 0.15000, dev loss: 3.10173

[Evaluate] best accuracy performence has been updated: 0.14000 --> 0.15000

[Train] epoch: 86/500, step: 3300/19000, loss: 2.50710

[Evaluate] dev score: 0.14000, dev loss: 3.13040

[Train] epoch: 89/500, step: 3400/19000, loss: 1.74455

[Evaluate] dev score: 0.15000, dev loss: 3.09751

[Train] epoch: 92/500, step: 3500/19000, loss: 2.30213

[Evaluate] dev score: 0.11000, dev loss: 3.13096

[Train] epoch: 94/500, step: 3600/19000, loss: 1.22322

[Evaluate] dev score: 0.13000, dev loss: 3.12189

[Train] epoch: 97/500, step: 3700/19000, loss: 1.15725

[Evaluate] dev score: 0.12000, dev loss: 3.12812

[Train] epoch: 100/500, step: 3800/19000, loss: 2.34680

[Evaluate] dev score: 0.16000, dev loss: 3.04341

[Evaluate] best accuracy performence has been updated: 0.15000 --> 0.16000

[Train] epoch: 102/500, step: 3900/19000, loss: 1.81340

[Evaluate] dev score: 0.14000, dev loss: 3.02824

[Train] epoch: 105/500, step: 4000/19000, loss: 1.27181

[Evaluate] dev score: 0.13000, dev loss: 3.07831

[Train] epoch: 107/500, step: 4100/19000, loss: 1.07729

[Evaluate] dev score: 0.15000, dev loss: 3.11609

[Train] epoch: 110/500, step: 4200/19000, loss: 1.43900

[Evaluate] dev score: 0.16000, dev loss: 3.03616

[Train] epoch: 113/500, step: 4300/19000, loss: 1.25830

[Evaluate] dev score: 0.17000, dev loss: 2.99934

[Evaluate] best accuracy performence has been updated: 0.16000 --> 0.17000

[Train] epoch: 115/500, step: 4400/19000, loss: 0.89935

[Evaluate] dev score: 0.14000, dev loss: 3.00428

[Train] epoch: 118/500, step: 4500/19000, loss: 1.20334

[Evaluate] dev score: 0.18000, dev loss: 3.03720

[Evaluate] best accuracy performence has been updated: 0.17000 --> 0.18000

[Train] epoch: 121/500, step: 4600/19000, loss: 1.54695

[Evaluate] dev score: 0.14000, dev loss: 2.98371

[Train] epoch: 123/500, step: 4700/19000, loss: 1.45645

[Evaluate] dev score: 0.17000, dev loss: 2.95671

[Train] epoch: 126/500, step: 4800/19000, loss: 1.27021

[Evaluate] dev score: 0.17000, dev loss: 2.95620

[Train] epoch: 128/500, step: 4900/19000, loss: 1.71935

[Evaluate] dev score: 0.19000, dev loss: 2.90806

[Evaluate] best accuracy performence has been updated: 0.18000 --> 0.19000

[Train] epoch: 131/500, step: 5000/19000, loss: 1.22521

[Evaluate] dev score: 0.23000, dev loss: 2.96573

[Evaluate] best accuracy performence has been updated: 0.19000 --> 0.23000

[Train] epoch: 134/500, step: 5100/19000, loss: 1.96995

[Evaluate] dev score: 0.21000, dev loss: 2.92426

[Train] epoch: 136/500, step: 5200/19000, loss: 1.66212

[Evaluate] dev score: 0.15000, dev loss: 3.03914

[Train] epoch: 139/500, step: 5300/19000, loss: 1.92507

[Evaluate] dev score: 0.22000, dev loss: 2.92877

[Train] epoch: 142/500, step: 5400/19000, loss: 1.44511

[Evaluate] dev score: 0.27000, dev loss: 2.91710

[Evaluate] best accuracy performence has been updated: 0.23000 --> 0.27000

[Train] epoch: 144/500, step: 5500/19000, loss: 0.54797

[Evaluate] dev score: 0.25000, dev loss: 2.92867

[Train] epoch: 147/500, step: 5600/19000, loss: 0.55282

[Evaluate] dev score: 0.26000, dev loss: 2.85153

[Train] epoch: 150/500, step: 5700/19000, loss: 1.67638

[Evaluate] dev score: 0.27000, dev loss: 3.02412

[Train] epoch: 152/500, step: 5800/19000, loss: 1.04362

[Evaluate] dev score: 0.25000, dev loss: 3.00833

[Train] epoch: 155/500, step: 5900/19000, loss: 0.96816

[Evaluate] dev score: 0.24000, dev loss: 2.97179

[Train] epoch: 157/500, step: 6000/19000, loss: 0.44596

[Evaluate] dev score: 0.30000, dev loss: 2.90125

[Evaluate] best accuracy performence has been updated: 0.27000 --> 0.30000

[Train] epoch: 160/500, step: 6100/19000, loss: 0.65244

[Evaluate] dev score: 0.29000, dev loss: 2.93372

[Train] epoch: 163/500, step: 6200/19000, loss: 0.59049

[Evaluate] dev score: 0.27000, dev loss: 2.94087

[Train] epoch: 165/500, step: 6300/19000, loss: 0.27649

[Evaluate] dev score: 0.29000, dev loss: 2.96596

[Train] epoch: 168/500, step: 6400/19000, loss: 0.67422

[Evaluate] dev score: 0.18000, dev loss: 3.21579

[Train] epoch: 171/500, step: 6500/19000, loss: 0.78047

[Evaluate] dev score: 0.32000, dev loss: 2.88458

[Evaluate] best accuracy performence has been updated: 0.30000 --> 0.32000

[Train] epoch: 173/500, step: 6600/19000, loss: 0.68724

[Evaluate] dev score: 0.30000, dev loss: 2.96731

[Train] epoch: 176/500, step: 6700/19000, loss: 0.80100

[Evaluate] dev score: 0.33000, dev loss: 2.93888

[Evaluate] best accuracy performence has been updated: 0.32000 --> 0.33000

[Train] epoch: 178/500, step: 6800/19000, loss: 0.97443

[Evaluate] dev score: 0.32000, dev loss: 2.95632

[Train] epoch: 181/500, step: 6900/19000, loss: 0.62359

[Evaluate] dev score: 0.32000, dev loss: 3.00066

[Train] epoch: 184/500, step: 7000/19000, loss: 1.23079

[Evaluate] dev score: 0.31000, dev loss: 3.04030

[Train] epoch: 186/500, step: 7100/19000, loss: 0.87591

[Evaluate] dev score: 0.30000, dev loss: 3.09521

[Train] epoch: 189/500, step: 7200/19000, loss: 0.71976

[Evaluate] dev score: 0.33000, dev loss: 2.97944

[Train] epoch: 192/500, step: 7300/19000, loss: 0.82965

[Evaluate] dev score: 0.33000, dev loss: 3.00946

[Train] epoch: 194/500, step: 7400/19000, loss: 0.25921

[Evaluate] dev score: 0.36000, dev loss: 2.98334

[Evaluate] best accuracy performence has been updated: 0.33000 --> 0.36000

[Train] epoch: 197/500, step: 7500/19000, loss: 0.25719

[Evaluate] dev score: 0.33000, dev loss: 3.22012

[Train] epoch: 200/500, step: 7600/19000, loss: 1.01207

[Evaluate] dev score: 0.34000, dev loss: 3.08092

[Train] epoch: 202/500, step: 7700/19000, loss: 0.48131

[Evaluate] dev score: 0.34000, dev loss: 3.14318

[Train] epoch: 205/500, step: 7800/19000, loss: 0.25067

[Evaluate] dev score: 0.36000, dev loss: 3.24914

[Train] epoch: 207/500, step: 7900/19000, loss: 0.25188

[Evaluate] dev score: 0.32000, dev loss: 3.19446

[Train] epoch: 210/500, step: 8000/19000, loss: 0.39977

[Evaluate] dev score: 0.26000, dev loss: 3.29251

[Train] epoch: 213/500, step: 8100/19000, loss: 0.40280

[Evaluate] dev score: 0.31000, dev loss: 3.25641

[Train] epoch: 215/500, step: 8200/19000, loss: 0.15974

[Evaluate] dev score: 0.32000, dev loss: 3.19523

[Train] epoch: 218/500, step: 8300/19000, loss: 0.27420

[Evaluate] dev score: 0.39000, dev loss: 3.21756

[Evaluate] best accuracy performence has been updated: 0.36000 --> 0.39000

[Train] epoch: 221/500, step: 8400/19000, loss: 0.54704

[Evaluate] dev score: 0.35000, dev loss: 3.25621

[Train] epoch: 223/500, step: 8500/19000, loss: 0.49683

[Evaluate] dev score: 0.34000, dev loss: 3.21325

[Train] epoch: 226/500, step: 8600/19000, loss: 0.70054

[Evaluate] dev score: 0.37000, dev loss: 3.28602

[Train] epoch: 228/500, step: 8700/19000, loss: 0.70102

[Evaluate] dev score: 0.36000, dev loss: 3.29414

[Train] epoch: 231/500, step: 8800/19000, loss: 0.30248

[Evaluate] dev score: 0.31000, dev loss: 3.34461

[Train] epoch: 234/500, step: 8900/19000, loss: 0.81018

[Evaluate] dev score: 0.35000, dev loss: 3.26771

[Train] epoch: 236/500, step: 9000/19000, loss: 0.46070

[Evaluate] dev score: 0.30000, dev loss: 3.52624

[Train] epoch: 239/500, step: 9100/19000, loss: 0.47189

[Evaluate] dev score: 0.35000, dev loss: 3.42182

[Train] epoch: 242/500, step: 9200/19000, loss: 0.57293

[Evaluate] dev score: 0.35000, dev loss: 3.35756

[Train] epoch: 244/500, step: 9300/19000, loss: 0.12502

[Evaluate] dev score: 0.32000, dev loss: 3.38542

[Train] epoch: 247/500, step: 9400/19000, loss: 0.13400

[Evaluate] dev score: 0.34000, dev loss: 3.40949

[Train] epoch: 250/500, step: 9500/19000, loss: 0.54604

[Evaluate] dev score: 0.38000, dev loss: 3.43062

[Train] epoch: 252/500, step: 9600/19000, loss: 0.33002

[Evaluate] dev score: 0.31000, dev loss: 3.49641

[Train] epoch: 255/500, step: 9700/19000, loss: 0.66392

[Evaluate] dev score: 0.32000, dev loss: 3.50343

[Train] epoch: 257/500, step: 9800/19000, loss: 0.14117

[Evaluate] dev score: 0.33000, dev loss: 3.32539

[Train] epoch: 260/500, step: 9900/19000, loss: 0.29120

[Evaluate] dev score: 0.32000, dev loss: 3.42756

[Train] epoch: 263/500, step: 10000/19000, loss: 0.20621

[Evaluate] dev score: 0.34000, dev loss: 3.50945

[Train] epoch: 265/500, step: 10100/19000, loss: 0.07904

[Evaluate] dev score: 0.34000, dev loss: 3.52622

[Train] epoch: 268/500, step: 10200/19000, loss: 0.18491

[Evaluate] dev score: 0.34000, dev loss: 3.50720

[Train] epoch: 271/500, step: 10300/19000, loss: 0.32531

[Evaluate] dev score: 0.33000, dev loss: 3.56791

[Train] epoch: 273/500, step: 10400/19000, loss: 0.22237

[Evaluate] dev score: 0.36000, dev loss: 3.62433

[Train] epoch: 276/500, step: 10500/19000, loss: 0.40556

[Evaluate] dev score: 0.35000, dev loss: 3.53585

[Train] epoch: 278/500, step: 10600/19000, loss: 0.45835

[Evaluate] dev score: 0.35000, dev loss: 3.62780

[Train] epoch: 281/500, step: 10700/19000, loss: 0.14548

[Evaluate] dev score: 0.37000, dev loss: 3.63532

[Train] epoch: 284/500, step: 10800/19000, loss: 0.63677

[Evaluate] dev score: 0.36000, dev loss: 3.59176

[Train] epoch: 286/500, step: 10900/19000, loss: 0.22609

[Evaluate] dev score: 0.35000, dev loss: 3.64333

[Train] epoch: 289/500, step: 11000/19000, loss: 0.23674

[Evaluate] dev score: 0.38000, dev loss: 3.57498

[Train] epoch: 292/500, step: 11100/19000, loss: 0.35633

[Evaluate] dev score: 0.36000, dev loss: 3.63296

[Train] epoch: 294/500, step: 11200/19000, loss: 0.18034

[Evaluate] dev score: 0.28000, dev loss: 3.83123

[Train] epoch: 297/500, step: 11300/19000, loss: 0.07131

[Evaluate] dev score: 0.34000, dev loss: 3.66045

[Train] epoch: 300/500, step: 11400/19000, loss: 0.32990

[Evaluate] dev score: 0.36000, dev loss: 3.62500

[Train] epoch: 302/500, step: 11500/19000, loss: 0.12269

[Evaluate] dev score: 0.35000, dev loss: 3.62534

[Train] epoch: 305/500, step: 11600/19000, loss: 0.05855

[Evaluate] dev score: 0.35000, dev loss: 3.65868

[Train] epoch: 307/500, step: 11700/19000, loss: 0.03570

[Evaluate] dev score: 0.37000, dev loss: 3.68163

[Train] epoch: 310/500, step: 11800/19000, loss: 0.07230

[Evaluate] dev score: 0.35000, dev loss: 3.67315

[Train] epoch: 313/500, step: 11900/19000, loss: 0.11095

[Evaluate] dev score: 0.38000, dev loss: 3.70780

[Train] epoch: 315/500, step: 12000/19000, loss: 0.05862

[Evaluate] dev score: 0.32000, dev loss: 3.78234

[Train] epoch: 318/500, step: 12100/19000, loss: 0.14433

[Evaluate] dev score: 0.30000, dev loss: 3.80039

[Train] epoch: 321/500, step: 12200/19000, loss: 0.69741

[Evaluate] dev score: 0.32000, dev loss: 4.03870

[Train] epoch: 323/500, step: 12300/19000, loss: 0.85015

[Evaluate] dev score: 0.32000, dev loss: 3.96965

[Train] epoch: 326/500, step: 12400/19000, loss: 0.18350

[Evaluate] dev score: 0.35000, dev loss: 3.89167

[Train] epoch: 328/500, step: 12500/19000, loss: 0.21078

[Evaluate] dev score: 0.39000, dev loss: 3.78287

[Train] epoch: 331/500, step: 12600/19000, loss: 0.08685

[Evaluate] dev score: 0.35000, dev loss: 3.83641

[Train] epoch: 334/500, step: 12700/19000, loss: 0.42216

[Evaluate] dev score: 0.36000, dev loss: 3.85590

[Train] epoch: 336/500, step: 12800/19000, loss: 0.10043

[Evaluate] dev score: 0.37000, dev loss: 3.87513

[Train] epoch: 339/500, step: 12900/19000, loss: 0.10008

[Evaluate] dev score: 0.37000, dev loss: 3.89377

[Train] epoch: 342/500, step: 13000/19000, loss: 0.12626

[Evaluate] dev score: 0.37000, dev loss: 3.92152

[Train] epoch: 344/500, step: 13100/19000, loss: 0.04189

[Evaluate] dev score: 0.39000, dev loss: 3.92864

[Train] epoch: 347/500, step: 13200/19000, loss: 0.03536

[Evaluate] dev score: 0.40000, dev loss: 3.94852

[Evaluate] best accuracy performence has been updated: 0.39000 --> 0.40000

[Train] epoch: 350/500, step: 13300/19000, loss: 0.13846

[Evaluate] dev score: 0.36000, dev loss: 3.92732

[Train] epoch: 352/500, step: 13400/19000, loss: 0.13291

[Evaluate] dev score: 0.39000, dev loss: 4.01619

[Train] epoch: 355/500, step: 13500/19000, loss: 0.28273

[Evaluate] dev score: 0.31000, dev loss: 4.26797

[Train] epoch: 357/500, step: 13600/19000, loss: 0.09641

[Evaluate] dev score: 0.33000, dev loss: 4.23432

[Train] epoch: 360/500, step: 13700/19000, loss: 0.17208

[Evaluate] dev score: 0.41000, dev loss: 4.15987

[Evaluate] best accuracy performence has been updated: 0.40000 --> 0.41000

[Train] epoch: 363/500, step: 13800/19000, loss: 0.04751

[Evaluate] dev score: 0.41000, dev loss: 4.00832

[Train] epoch: 365/500, step: 13900/19000, loss: 0.02865

[Evaluate] dev score: 0.38000, dev loss: 3.99966

[Train] epoch: 368/500, step: 14000/19000, loss: 0.06722

[Evaluate] dev score: 0.38000, dev loss: 4.04818

[Train] epoch: 371/500, step: 14100/19000, loss: 0.09373

[Evaluate] dev score: 0.38000, dev loss: 4.06414

[Train] epoch: 373/500, step: 14200/19000, loss: 0.08476

[Evaluate] dev score: 0.38000, dev loss: 4.05785

[Train] epoch: 376/500, step: 14300/19000, loss: 0.08240

[Evaluate] dev score: 0.38000, dev loss: 4.09540

[Train] epoch: 378/500, step: 14400/19000, loss: 0.09758

[Evaluate] dev score: 0.38000, dev loss: 4.11332

[Train] epoch: 381/500, step: 14500/19000, loss: 0.05998

[Evaluate] dev score: 0.38000, dev loss: 4.11433

[Train] epoch: 384/500, step: 14600/19000, loss: 0.25254

[Evaluate] dev score: 0.39000, dev loss: 4.14494

[Train] epoch: 386/500, step: 14700/19000, loss: 0.05989

[Evaluate] dev score: 0.38000, dev loss: 4.16993

[Train] epoch: 389/500, step: 14800/19000, loss: 0.06117

[Evaluate] dev score: 0.38000, dev loss: 4.19090

[Train] epoch: 392/500, step: 14900/19000, loss: 0.06835

[Evaluate] dev score: 0.38000, dev loss: 4.17660

[Train] epoch: 394/500, step: 15000/19000, loss: 0.02626

[Evaluate] dev score: 0.39000, dev loss: 4.26732

[Train] epoch: 397/500, step: 15100/19000, loss: 0.02413

[Evaluate] dev score: 0.39000, dev loss: 4.22565

[Train] epoch: 400/500, step: 15200/19000, loss: 0.08177

[Evaluate] dev score: 0.38000, dev loss: 4.30711

[Train] epoch: 402/500, step: 15300/19000, loss: 0.04853

[Evaluate] dev score: 0.40000, dev loss: 4.30910

[Train] epoch: 405/500, step: 15400/19000, loss: 0.01739

[Evaluate] dev score: 0.39000, dev loss: 4.32941

[Train] epoch: 407/500, step: 15500/19000, loss: 0.02223

[Evaluate] dev score: 0.34000, dev loss: 4.28811

[Train] epoch: 410/500, step: 15600/19000, loss: 1.83905

[Evaluate] dev score: 0.24000, dev loss: 5.08864

[Train] epoch: 413/500, step: 15700/19000, loss: 0.20384

[Evaluate] dev score: 0.31000, dev loss: 4.54524

[Train] epoch: 415/500, step: 15800/19000, loss: 0.04254

[Evaluate] dev score: 0.32000, dev loss: 4.37044

[Train] epoch: 418/500, step: 15900/19000, loss: 0.07964

[Evaluate] dev score: 0.34000, dev loss: 4.29761

[Train] epoch: 421/500, step: 16000/19000, loss: 0.07383

[Evaluate] dev score: 0.35000, dev loss: 4.28524

[Train] epoch: 423/500, step: 16100/19000, loss: 0.05622

[Evaluate] dev score: 0.35000, dev loss: 4.30486

[Train] epoch: 426/500, step: 16200/19000, loss: 0.05579

[Evaluate] dev score: 0.35000, dev loss: 4.33177

[Train] epoch: 428/500, step: 16300/19000, loss: 0.06673

[Evaluate] dev score: 0.36000, dev loss: 4.33678

[Train] epoch: 431/500, step: 16400/19000, loss: 0.04996

[Evaluate] dev score: 0.37000, dev loss: 4.36361

[Train] epoch: 434/500, step: 16500/19000, loss: 0.15554

[Evaluate] dev score: 0.37000, dev loss: 4.38859

[Train] epoch: 436/500, step: 16600/19000, loss: 0.05381

[Evaluate] dev score: 0.38000, dev loss: 4.39685

[Train] epoch: 439/500, step: 16700/19000, loss: 0.04363

[Evaluate] dev score: 0.38000, dev loss: 4.41487

[Train] epoch: 442/500, step: 16800/19000, loss: 0.05046

[Evaluate] dev score: 0.39000, dev loss: 4.43051

[Train] epoch: 444/500, step: 16900/19000, loss: 0.02290

[Evaluate] dev score: 0.37000, dev loss: 4.47321

[Train] epoch: 447/500, step: 17000/19000, loss: 0.01592

[Evaluate] dev score: 0.35000, dev loss: 4.47792

[Train] epoch: 450/500, step: 17100/19000, loss: 0.06534

[Evaluate] dev score: 0.33000, dev loss: 4.47302

[Train] epoch: 452/500, step: 17200/19000, loss: 0.03050

[Evaluate] dev score: 0.38000, dev loss: 4.50075

[Train] epoch: 455/500, step: 17300/19000, loss: 0.01585

[Evaluate] dev score: 0.37000, dev loss: 4.49926

[Train] epoch: 457/500, step: 17400/19000, loss: 0.00789

[Evaluate] dev score: 0.37000, dev loss: 4.50584

[Train] epoch: 460/500, step: 17500/19000, loss: 0.01774

[Evaluate] dev score: 0.37000, dev loss: 4.53489

[Train] epoch: 463/500, step: 17600/19000, loss: 0.01564

[Evaluate] dev score: 0.31000, dev loss: 4.58186

[Train] epoch: 465/500, step: 17700/19000, loss: 0.04362

[Evaluate] dev score: 0.32000, dev loss: 4.64814

[Train] epoch: 468/500, step: 17800/19000, loss: 0.71339

[Evaluate] dev score: 0.27000, dev loss: 5.01524

[Train] epoch: 471/500, step: 17900/19000, loss: 0.48517

[Evaluate] dev score: 0.27000, dev loss: 4.75857

[Train] epoch: 473/500, step: 18000/19000, loss: 0.13905

[Evaluate] dev score: 0.34000, dev loss: 4.47235

[Train] epoch: 476/500, step: 18100/19000, loss: 0.05430

[Evaluate] dev score: 0.37000, dev loss: 4.45793

[Train] epoch: 478/500, step: 18200/19000, loss: 0.06591

[Evaluate] dev score: 0.36000, dev loss: 4.48867

[Train] epoch: 481/500, step: 18300/19000, loss: 0.03872

[Evaluate] dev score: 0.37000, dev loss: 4.52743

[Train] epoch: 484/500, step: 18400/19000, loss: 0.13256

[Evaluate] dev score: 0.35000, dev loss: 4.56614

[Train] epoch: 486/500, step: 18500/19000, loss: 0.03442

[Evaluate] dev score: 0.35000, dev loss: 4.58014

[Train] epoch: 489/500, step: 18600/19000, loss: 0.03280

[Evaluate] dev score: 0.35000, dev loss: 4.60006

[Train] epoch: 492/500, step: 18700/19000, loss: 0.04295

[Evaluate] dev score: 0.35000, dev loss: 4.61778

[Train] epoch: 494/500, step: 18800/19000, loss: 0.01893

[Evaluate] dev score: 0.35000, dev loss: 4.61824

[Train] epoch: 497/500, step: 18900/19000, loss: 0.01292

[Evaluate] dev score: 0.35000, dev loss: 4.63739

[Evaluate] dev score: 0.34000, dev loss: 4.64156

[Train] Training done!

====> Training SRN with data of length 15.

[Train] epoch: 0/500, step: 0/19000, loss: 2.83505

[Train] epoch: 2/500, step: 100/19000, loss: 2.76077

[Evaluate] dev score: 0.09000, dev loss: 2.86277

[Evaluate] best accuracy performence has been updated: 0.00000 --> 0.09000

[Train] epoch: 5/500, step: 200/19000, loss: 2.50634

[Evaluate] dev score: 0.09000, dev loss: 2.84586

[Train] epoch: 7/500, step: 300/19000, loss: 2.47513

[Evaluate] dev score: 0.10000, dev loss: 2.84040

[Evaluate] best accuracy performence has been updated: 0.09000 --> 0.10000

[Train] epoch: 10/500, step: 400/19000, loss: 2.37930

[Evaluate] dev score: 0.10000, dev loss: 2.83651

[Train] epoch: 13/500, step: 500/19000, loss: 2.39413

[Evaluate] dev score: 0.09000, dev loss: 2.83416

[Train] epoch: 15/500, step: 600/19000, loss: 2.23714

[Evaluate] dev score: 0.08000, dev loss: 2.83630

[Train] epoch: 18/500, step: 700/19000, loss: 2.44058

[Evaluate] dev score: 0.10000, dev loss: 2.84270

[Train] epoch: 21/500, step: 800/19000, loss: 2.76401

[Evaluate] dev score: 0.10000, dev loss: 2.84442

[Train] epoch: 23/500, step: 900/19000, loss: 2.55657

[Evaluate] dev score: 0.10000, dev loss: 2.85249

[Train] epoch: 26/500, step: 1000/19000, loss: 2.47918

[Evaluate] dev score: 0.09000, dev loss: 2.85518

[Train] epoch: 28/500, step: 1100/19000, loss: 3.10911

[Evaluate] dev score: 0.08000, dev loss: 2.85641

[Train] epoch: 31/500, step: 1200/19000, loss: 2.56384

[Evaluate] dev score: 0.08000, dev loss: 2.86113

[Train] epoch: 34/500, step: 1300/19000, loss: 2.67793

[Evaluate] dev score: 0.07000, dev loss: 2.87115

[Train] epoch: 36/500, step: 1400/19000, loss: 2.53212

[Evaluate] dev score: 0.07000, dev loss: 2.88327

[Train] epoch: 39/500, step: 1500/19000, loss: 2.25400

[Evaluate] dev score: 0.10000, dev loss: 2.90202

[Train] epoch: 42/500, step: 1600/19000, loss: 3.12542

[Evaluate] dev score: 0.08000, dev loss: 2.91608

[Train] epoch: 44/500, step: 1700/19000, loss: 2.56346

[Evaluate] dev score: 0.11000, dev loss: 2.91974

[Evaluate] best accuracy performence has been updated: 0.10000 --> 0.11000

[Train] epoch: 47/500, step: 1800/19000, loss: 2.56737

[Evaluate] dev score: 0.13000, dev loss: 2.93953

[Evaluate] best accuracy performence has been updated: 0.11000 --> 0.13000

[Train] epoch: 50/500, step: 1900/19000, loss: 3.79985

[Evaluate] dev score: 0.10000, dev loss: 2.94002

[Train] epoch: 52/500, step: 2000/19000, loss: 2.36137

[Evaluate] dev score: 0.09000, dev loss: 2.94574

[Train] epoch: 55/500, step: 2100/19000, loss: 2.25356

[Evaluate] dev score: 0.11000, dev loss: 2.96908

[Train] epoch: 57/500, step: 2200/19000, loss: 2.46889

[Evaluate] dev score: 0.10000, dev loss: 2.96135

[Train] epoch: 60/500, step: 2300/19000, loss: 2.22854

[Evaluate] dev score: 0.11000, dev loss: 2.97149

[Train] epoch: 63/500, step: 2400/19000, loss: 2.03442

[Evaluate] dev score: 0.09000, dev loss: 2.99089

[Train] epoch: 65/500, step: 2500/19000, loss: 2.05247

[Evaluate] dev score: 0.08000, dev loss: 2.98253

[Train] epoch: 68/500, step: 2600/19000, loss: 2.35390

[Evaluate] dev score: 0.12000, dev loss: 3.00641

[Train] epoch: 71/500, step: 2700/19000, loss: 2.94749

[Evaluate] dev score: 0.08000, dev loss: 3.01382

[Train] epoch: 73/500, step: 2800/19000, loss: 2.11729

[Evaluate] dev score: 0.14000, dev loss: 3.01224

[Evaluate] best accuracy performence has been updated: 0.13000 --> 0.14000

[Train] epoch: 76/500, step: 2900/19000, loss: 2.18714

[Evaluate] dev score: 0.11000, dev loss: 3.05061

[Train] epoch: 78/500, step: 3000/19000, loss: 2.77573

[Evaluate] dev score: 0.10000, dev loss: 3.05423

[Train] epoch: 81/500, step: 3100/19000, loss: 2.37429

[Evaluate] dev score: 0.13000, dev loss: 3.05848

[Train] epoch: 84/500, step: 3200/19000, loss: 2.22667

[Evaluate] dev score: 0.10000, dev loss: 3.12824

[Train] epoch: 86/500, step: 3300/19000, loss: 2.25569

[Evaluate] dev score: 0.12000, dev loss: 3.09366

[Train] epoch: 89/500, step: 3400/19000, loss: 2.09917

[Evaluate] dev score: 0.09000, dev loss: 3.13784

[Train] epoch: 92/500, step: 3500/19000, loss: 2.71311

[Evaluate] dev score: 0.10000, dev loss: 3.13360

[Train] epoch: 94/500, step: 3600/19000, loss: 1.89698

[Evaluate] dev score: 0.12000, dev loss: 3.13037

[Train] epoch: 97/500, step: 3700/19000, loss: 2.32131

[Evaluate] dev score: 0.09000, dev loss: 3.21541

[Train] epoch: 100/500, step: 3800/19000, loss: 3.08196

[Evaluate] dev score: 0.13000, dev loss: 3.16011

[Train] epoch: 102/500, step: 3900/19000, loss: 1.76609

[Evaluate] dev score: 0.11000, dev loss: 3.17026

[Train] epoch: 105/500, step: 4000/19000, loss: 2.29387

[Evaluate] dev score: 0.12000, dev loss: 3.14705

[Train] epoch: 107/500, step: 4100/19000, loss: 1.90296

[Evaluate] dev score: 0.14000, dev loss: 3.17932

[Train] epoch: 110/500, step: 4200/19000, loss: 1.76077

[Evaluate] dev score: 0.11000, dev loss: 3.20040

[Train] epoch: 113/500, step: 4300/19000, loss: 1.47468

[Evaluate] dev score: 0.16000, dev loss: 3.20798

[Evaluate] best accuracy performence has been updated: 0.14000 --> 0.16000

[Train] epoch: 115/500, step: 4400/19000, loss: 2.13162

[Evaluate] dev score: 0.08000, dev loss: 3.31182

[Train] epoch: 118/500, step: 4500/19000, loss: 1.29976

[Evaluate] dev score: 0.14000, dev loss: 3.14220

[Train] epoch: 121/500, step: 4600/19000, loss: 2.53768

[Evaluate] dev score: 0.15000, dev loss: 3.14569

[Train] epoch: 123/500, step: 4700/19000, loss: 1.65875

[Evaluate] dev score: 0.16000, dev loss: 3.20079

[Train] epoch: 126/500, step: 4800/19000, loss: 1.25330

[Evaluate] dev score: 0.11000, dev loss: 3.23940

[Train] epoch: 128/500, step: 4900/19000, loss: 2.58738

[Evaluate] dev score: 0.13000, dev loss: 3.30051

[Train] epoch: 131/500, step: 5000/19000, loss: 1.87831

[Evaluate] dev score: 0.11000, dev loss: 3.25507

[Train] epoch: 134/500, step: 5100/19000, loss: 1.22737

[Evaluate] dev score: 0.11000, dev loss: 3.23486

[Train] epoch: 136/500, step: 5200/19000, loss: 1.72890

[Evaluate] dev score: 0.11000, dev loss: 3.26590

[Train] epoch: 139/500, step: 5300/19000, loss: 1.67032

[Evaluate] dev score: 0.11000, dev loss: 3.27094

[Train] epoch: 142/500, step: 5400/19000, loss: 1.90709

[Evaluate] dev score: 0.11000, dev loss: 3.24696

[Train] epoch: 144/500, step: 5500/19000, loss: 1.48135

[Evaluate] dev score: 0.12000, dev loss: 3.28293

[Train] epoch: 147/500, step: 5600/19000, loss: 1.38752

[Evaluate] dev score: 0.08000, dev loss: 3.35263

[Train] epoch: 150/500, step: 5700/19000, loss: 2.35441

[Evaluate] dev score: 0.13000, dev loss: 3.36897

[Train] epoch: 152/500, step: 5800/19000, loss: 1.02275

[Evaluate] dev score: 0.13000, dev loss: 3.42909

[Train] epoch: 155/500, step: 5900/19000, loss: 1.89935

[Evaluate] dev score: 0.11000, dev loss: 3.40721

[Train] epoch: 157/500, step: 6000/19000, loss: 1.41635

[Evaluate] dev score: 0.10000, dev loss: 3.50392

[Train] epoch: 160/500, step: 6100/19000, loss: 1.48398

[Evaluate] dev score: 0.15000, dev loss: 3.33068

[Train] epoch: 163/500, step: 6200/19000, loss: 0.71975

[Evaluate] dev score: 0.15000, dev loss: 3.48133

[Train] epoch: 165/500, step: 6300/19000, loss: 1.41459

[Evaluate] dev score: 0.14000, dev loss: 3.43130

[Train] epoch: 168/500, step: 6400/19000, loss: 0.59737

[Evaluate] dev score: 0.15000, dev loss: 3.54142

[Train] epoch: 171/500, step: 6500/19000, loss: 1.79419

[Evaluate] dev score: 0.15000, dev loss: 3.41640

[Train] epoch: 173/500, step: 6600/19000, loss: 1.07353

[Evaluate] dev score: 0.13000, dev loss: 3.58939

[Train] epoch: 176/500, step: 6700/19000, loss: 0.69818

[Evaluate] dev score: 0.12000, dev loss: 3.50400

[Train] epoch: 178/500, step: 6800/19000, loss: 1.56548

[Evaluate] dev score: 0.17000, dev loss: 3.51000

[Evaluate] best accuracy performence has been updated: 0.16000 --> 0.17000

[Train] epoch: 181/500, step: 6900/19000, loss: 1.11598

[Evaluate] dev score: 0.13000, dev loss: 3.67556

[Train] epoch: 184/500, step: 7000/19000, loss: 0.90516

[Evaluate] dev score: 0.18000, dev loss: 3.51424

[Evaluate] best accuracy performence has been updated: 0.17000 --> 0.18000

[Train] epoch: 186/500, step: 7100/19000, loss: 0.95492

[Evaluate] dev score: 0.14000, dev loss: 3.59719

[Train] epoch: 189/500, step: 7200/19000, loss: 1.01277

[Evaluate] dev score: 0.12000, dev loss: 3.69248

[Train] epoch: 192/500, step: 7300/19000, loss: 1.46716

[Evaluate] dev score: 0.14000, dev loss: 3.68647

[Train] epoch: 194/500, step: 7400/19000, loss: 0.62821

[Evaluate] dev score: 0.12000, dev loss: 3.71594

[Train] epoch: 197/500, step: 7500/19000, loss: 1.11528

[Evaluate] dev score: 0.15000, dev loss: 3.68221

[Train] epoch: 200/500, step: 7600/19000, loss: 1.77548

[Evaluate] dev score: 0.12000, dev loss: 3.89713

[Train] epoch: 202/500, step: 7700/19000, loss: 0.45550

[Evaluate] dev score: 0.15000, dev loss: 3.75124

[Train] epoch: 205/500, step: 7800/19000, loss: 1.40486

[Evaluate] dev score: 0.14000, dev loss: 3.93964

[Train] epoch: 207/500, step: 7900/19000, loss: 0.78133

[Evaluate] dev score: 0.14000, dev loss: 3.84000

[Train] epoch: 210/500, step: 8000/19000, loss: 0.98718

[Evaluate] dev score: 0.15000, dev loss: 3.87040

[Train] epoch: 213/500, step: 8100/19000, loss: 0.28901

[Evaluate] dev score: 0.10000, dev loss: 3.91328

[Train] epoch: 215/500, step: 8200/19000, loss: 0.95248

[Evaluate] dev score: 0.12000, dev loss: 3.96304

[Train] epoch: 218/500, step: 8300/19000, loss: 0.37438

[Evaluate] dev score: 0.12000, dev loss: 3.96020

[Train] epoch: 221/500, step: 8400/19000, loss: 1.27768

[Evaluate] dev score: 0.13000, dev loss: 3.92766

[Train] epoch: 223/500, step: 8500/19000, loss: 0.79618

[Evaluate] dev score: 0.13000, dev loss: 4.00972

[Train] epoch: 226/500, step: 8600/19000, loss: 0.35729

[Evaluate] dev score: 0.13000, dev loss: 3.90088

[Train] epoch: 228/500, step: 8700/19000, loss: 1.05786

[Evaluate] dev score: 0.13000, dev loss: 4.08597

[Train] epoch: 231/500, step: 8800/19000, loss: 0.58279

[Evaluate] dev score: 0.15000, dev loss: 3.93795

[Train] epoch: 234/500, step: 8900/19000, loss: 0.48594

[Evaluate] dev score: 0.15000, dev loss: 4.18960

[Train] epoch: 236/500, step: 9000/19000, loss: 0.58695

[Evaluate] dev score: 0.11000, dev loss: 4.25615

[Train] epoch: 239/500, step: 9100/19000, loss: 0.69577

[Evaluate] dev score: 0.15000, dev loss: 4.30632

[Train] epoch: 242/500, step: 9200/19000, loss: 1.16058

[Evaluate] dev score: 0.14000, dev loss: 4.20862

[Train] epoch: 244/500, step: 9300/19000, loss: 0.25570

[Evaluate] dev score: 0.16000, dev loss: 4.35800

[Train] epoch: 247/500, step: 9400/19000, loss: 0.90334

[Evaluate] dev score: 0.13000, dev loss: 4.10298

[Train] epoch: 250/500, step: 9500/19000, loss: 1.37386

[Evaluate] dev score: 0.14000, dev loss: 4.15488

[Train] epoch: 252/500, step: 9600/19000, loss: 0.17184

[Evaluate] dev score: 0.15000, dev loss: 4.04287

[Train] epoch: 255/500, step: 9700/19000, loss: 0.84131

[Evaluate] dev score: 0.15000, dev loss: 4.13378

[Train] epoch: 257/500, step: 9800/19000, loss: 0.70315

[Evaluate] dev score: 0.14000, dev loss: 4.22905

[Train] epoch: 260/500, step: 9900/19000, loss: 0.87080

[Evaluate] dev score: 0.16000, dev loss: 4.47199

[Train] epoch: 263/500, step: 10000/19000, loss: 0.20803

[Evaluate] dev score: 0.15000, dev loss: 4.20662

[Train] epoch: 265/500, step: 10100/19000, loss: 0.86276

[Evaluate] dev score: 0.14000, dev loss: 4.40363

[Train] epoch: 268/500, step: 10200/19000, loss: 0.31701

[Evaluate] dev score: 0.13000, dev loss: 4.20517

[Train] epoch: 271/500, step: 10300/19000, loss: 0.90453

[Evaluate] dev score: 0.17000, dev loss: 4.34871

[Train] epoch: 273/500, step: 10400/19000, loss: 0.56441

[Evaluate] dev score: 0.17000, dev loss: 4.23428

[Train] epoch: 276/500, step: 10500/19000, loss: 0.23933

[Evaluate] dev score: 0.14000, dev loss: 4.59385

[Train] epoch: 278/500, step: 10600/19000, loss: 0.80815

[Evaluate] dev score: 0.15000, dev loss: 4.44130

[Train] epoch: 281/500, step: 10700/19000, loss: 0.36672

[Evaluate] dev score: 0.16000, dev loss: 4.41915

[Train] epoch: 284/500, step: 10800/19000, loss: 0.48567

[Evaluate] dev score: 0.18000, dev loss: 4.31361

[Train] epoch: 286/500, step: 10900/19000, loss: 0.46437

[Evaluate] dev score: 0.20000, dev loss: 4.54948

[Evaluate] best accuracy performence has been updated: 0.18000 --> 0.20000

[Train] epoch: 289/500, step: 11000/19000, loss: 0.22686

[Evaluate] dev score: 0.18000, dev loss: 4.48803

[Train] epoch: 292/500, step: 11100/19000, loss: 0.84354

[Evaluate] dev score: 0.16000, dev loss: 4.55678

[Train] epoch: 294/500, step: 11200/19000, loss: 0.11938

[Evaluate] dev score: 0.19000, dev loss: 4.54568

[Train] epoch: 297/500, step: 11300/19000, loss: 0.52974

[Evaluate] dev score: 0.18000, dev loss: 4.59792

[Train] epoch: 300/500, step: 11400/19000, loss: 1.05438

[Evaluate] dev score: 0.14000, dev loss: 4.82386

[Train] epoch: 302/500, step: 11500/19000, loss: 1.01673

[Evaluate] dev score: 0.12000, dev loss: 5.16799

[Train] epoch: 305/500, step: 11600/19000, loss: 0.88860

[Evaluate] dev score: 0.14000, dev loss: 5.20298

[Train] epoch: 307/500, step: 11700/19000, loss: 0.43491

[Evaluate] dev score: 0.19000, dev loss: 4.58437

[Train] epoch: 310/500, step: 11800/19000, loss: 0.51632

[Evaluate] dev score: 0.16000, dev loss: 4.52022

[Train] epoch: 313/500, step: 11900/19000, loss: 0.13031

[Evaluate] dev score: 0.17000, dev loss: 4.62133

[Train] epoch: 315/500, step: 12000/19000, loss: 0.24569

[Evaluate] dev score: 0.19000, dev loss: 4.66481

[Train] epoch: 318/500, step: 12100/19000, loss: 0.15829

[Evaluate] dev score: 0.17000, dev loss: 4.75249

[Train] epoch: 321/500, step: 12200/19000, loss: 0.58232

[Evaluate] dev score: 0.14000, dev loss: 4.77793

[Train] epoch: 323/500, step: 12300/19000, loss: 0.41828

[Evaluate] dev score: 0.18000, dev loss: 4.85725

[Train] epoch: 326/500, step: 12400/19000, loss: 0.56408

[Evaluate] dev score: 0.14000, dev loss: 4.90120

[Train] epoch: 328/500, step: 12500/19000, loss: 0.62691

[Evaluate] dev score: 0.14000, dev loss: 5.04766

[Train] epoch: 331/500, step: 12600/19000, loss: 0.23173

[Evaluate] dev score: 0.13000, dev loss: 5.01345

[Train] epoch: 334/500, step: 12700/19000, loss: 0.20522

[Evaluate] dev score: 0.12000, dev loss: 5.01045

[Train] epoch: 336/500, step: 12800/19000, loss: 0.25562

[Evaluate] dev score: 0.12000, dev loss: 5.06854

[Train] epoch: 339/500, step: 12900/19000, loss: 0.14946

[Evaluate] dev score: 0.14000, dev loss: 5.17645

[Train] epoch: 342/500, step: 13000/19000, loss: 0.89509

[Evaluate] dev score: 0.18000, dev loss: 5.16726

[Train] epoch: 344/500, step: 13100/19000, loss: 0.17927

[Evaluate] dev score: 0.15000, dev loss: 5.35971

[Train] epoch: 347/500, step: 13200/19000, loss: 0.45494

[Evaluate] dev score: 0.12000, dev loss: 5.40754

[Train] epoch: 350/500, step: 13300/19000, loss: 0.93466

[Evaluate] dev score: 0.14000, dev loss: 5.31348

[Train] epoch: 352/500, step: 13400/19000, loss: 0.19944

[Evaluate] dev score: 0.17000, dev loss: 5.23825

[Train] epoch: 355/500, step: 13500/19000, loss: 0.72734

[Evaluate] dev score: 0.15000, dev loss: 5.33326

[Train] epoch: 357/500, step: 13600/19000, loss: 0.63585

[Evaluate] dev score: 0.15000, dev loss: 5.46755

[Train] epoch: 360/500, step: 13700/19000, loss: 0.45678

[Evaluate] dev score: 0.16000, dev loss: 5.55628

[Train] epoch: 363/500, step: 13800/19000, loss: 0.12481

[Evaluate] dev score: 0.15000, dev loss: 5.42307

[Train] epoch: 365/500, step: 13900/19000, loss: 0.23945

[Evaluate] dev score: 0.14000, dev loss: 5.39611

[Train] epoch: 368/500, step: 14000/19000, loss: 0.08080

[Evaluate] dev score: 0.13000, dev loss: 5.38298

[Train] epoch: 371/500, step: 14100/19000, loss: 0.38046

[Evaluate] dev score: 0.15000, dev loss: 5.38863

[Train] epoch: 373/500, step: 14200/19000, loss: 0.32601

[Evaluate] dev score: 0.14000, dev loss: 5.39702

[Train] epoch: 376/500, step: 14300/19000, loss: 0.09506

[Evaluate] dev score: 0.15000, dev loss: 5.49716

[Train] epoch: 378/500, step: 14400/19000, loss: 0.37944

[Evaluate] dev score: 0.13000, dev loss: 5.51715

[Train] epoch: 381/500, step: 14500/19000, loss: 0.11882

[Evaluate] dev score: 0.13000, dev loss: 5.58194

[Train] epoch: 384/500, step: 14600/19000, loss: 0.14278

[Evaluate] dev score: 0.14000, dev loss: 5.63580

[Train] epoch: 386/500, step: 14700/19000, loss: 0.20634

[Evaluate] dev score: 0.14000, dev loss: 5.74823

[Train] epoch: 389/500, step: 14800/19000, loss: 0.34671

[Evaluate] dev score: 0.13000, dev loss: 5.99626

[Train] epoch: 392/500, step: 14900/19000, loss: 1.24933

[Evaluate] dev score: 0.12000, dev loss: 5.89194

[Train] epoch: 394/500, step: 15000/19000, loss: 0.14842

[Evaluate] dev score: 0.13000, dev loss: 5.80228

[Train] epoch: 397/500, step: 15100/19000, loss: 0.38922

[Evaluate] dev score: 0.13000, dev loss: 5.71438

[Train] epoch: 400/500, step: 15200/19000, loss: 0.75233

[Evaluate] dev score: 0.16000, dev loss: 5.97573

[Train] epoch: 402/500, step: 15300/19000, loss: 0.29019

[Evaluate] dev score: 0.14000, dev loss: 6.04976

[Train] epoch: 405/500, step: 15400/19000, loss: 0.94234

[Evaluate] dev score: 0.15000, dev loss: 6.02583

[Train] epoch: 407/500, step: 15500/19000, loss: 0.19138

[Evaluate] dev score: 0.13000, dev loss: 6.08674

[Train] epoch: 410/500, step: 15600/19000, loss: 0.23659

[Evaluate] dev score: 0.11000, dev loss: 5.97806

[Train] epoch: 413/500, step: 15700/19000, loss: 0.08207

[Evaluate] dev score: 0.12000, dev loss: 5.96864

[Train] epoch: 415/500, step: 15800/19000, loss: 0.13543

[Evaluate] dev score: 0.13000, dev loss: 6.03814

[Train] epoch: 418/500, step: 15900/19000, loss: 0.05355

[Evaluate] dev score: 0.14000, dev loss: 6.10606

[Train] epoch: 421/500, step: 16000/19000, loss: 0.23925

[Evaluate] dev score: 0.13000, dev loss: 6.12558

[Train] epoch: 423/500, step: 16100/19000, loss: 0.24062

[Evaluate] dev score: 0.13000, dev loss: 6.18271

[Train] epoch: 426/500, step: 16200/19000, loss: 0.05899

[Evaluate] dev score: 0.13000, dev loss: 6.22154

[Train] epoch: 428/500, step: 16300/19000, loss: 0.23340

[Evaluate] dev score: 0.13000, dev loss: 6.24922

[Train] epoch: 431/500, step: 16400/19000, loss: 0.07173

[Evaluate] dev score: 0.12000, dev loss: 6.31383

[Train] epoch: 434/500, step: 16500/19000, loss: 0.09424

[Evaluate] dev score: 0.12000, dev loss: 6.33810

[Train] epoch: 436/500, step: 16600/19000, loss: 0.10809

[Evaluate] dev score: 0.12000, dev loss: 6.33994

[Train] epoch: 439/500, step: 16700/19000, loss: 1.30112

[Evaluate] dev score: 0.10000, dev loss: 6.77052

[Train] epoch: 442/500, step: 16800/19000, loss: 0.97690

[Evaluate] dev score: 0.12000, dev loss: 6.32190

[Train] epoch: 444/500, step: 16900/19000, loss: 0.09842

[Evaluate] dev score: 0.12000, dev loss: 6.18493

[Train] epoch: 447/500, step: 17000/19000, loss: 0.20143

[Evaluate] dev score: 0.12000, dev loss: 6.15504

[Train] epoch: 450/500, step: 17100/19000, loss: 0.42670

[Evaluate] dev score: 0.13000, dev loss: 6.29108

[Train] epoch: 452/500, step: 17200/19000, loss: 0.03189

[Evaluate] dev score: 0.14000, dev loss: 6.32809

[Train] epoch: 455/500, step: 17300/19000, loss: 0.20587

[Evaluate] dev score: 0.14000, dev loss: 6.35056

[Train] epoch: 457/500, step: 17400/19000, loss: 0.14237

[Evaluate] dev score: 0.15000, dev loss: 6.39186

[Train] epoch: 460/500, step: 17500/19000, loss: 0.10222

[Evaluate] dev score: 0.15000, dev loss: 6.43560

[Train] epoch: 463/500, step: 17600/19000, loss: 0.04327

[Evaluate] dev score: 0.15000, dev loss: 6.46536

[Train] epoch: 465/500, step: 17700/19000, loss: 0.08087

[Evaluate] dev score: 0.15000, dev loss: 6.50584

[Train] epoch: 468/500, step: 17800/19000, loss: 0.04277

[Evaluate] dev score: 0.15000, dev loss: 6.54748

[Train] epoch: 471/500, step: 17900/19000, loss: 0.16980

[Evaluate] dev score: 0.14000, dev loss: 6.57638

[Train] epoch: 473/500, step: 18000/19000, loss: 0.18143

[Evaluate] dev score: 0.14000, dev loss: 6.61910

[Train] epoch: 476/500, step: 18100/19000, loss: 0.05450

[Evaluate] dev score: 0.13000, dev loss: 6.65924

[Train] epoch: 478/500, step: 18200/19000, loss: 0.15045

[Evaluate] dev score: 0.13000, dev loss: 6.68594

[Train] epoch: 481/500, step: 18300/19000, loss: 0.04379

[Evaluate] dev score: 0.13000, dev loss: 6.73629

[Train] epoch: 484/500, step: 18400/19000, loss: 0.06892

[Evaluate] dev score: 0.13000, dev loss: 6.76578

[Train] epoch: 486/500, step: 18500/19000, loss: 0.17115

[Evaluate] dev score: 0.13000, dev loss: 6.79995

[Train] epoch: 489/500, step: 18600/19000, loss: 0.73521

[Evaluate] dev score: 0.12000, dev loss: 6.92051

[Train] epoch: 492/500, step: 18700/19000, loss: 1.91153

[Evaluate] dev score: 0.13000, dev loss: 6.37057

[Train] epoch: 494/500, step: 18800/19000, loss: 0.05048

[Evaluate] dev score: 0.14000, dev loss: 6.31246

[Train] epoch: 497/500, step: 18900/19000, loss: 0.86228

[Evaluate] dev score: 0.13000, dev loss: 6.44801

[Evaluate] dev score: 0.17000, dev loss: 6.24818

[Train] Training done!

====> Training SRN with data of length 20.

[Train] epoch: 0/500, step: 0/19000, loss: 2.83505

[Train] epoch: 2/500, step: 100/19000, loss: 2.71278

[Evaluate] dev score: 0.09000, dev loss: 2.85331

[Evaluate] best accuracy performence has been updated: 0.00000 --> 0.09000

[Train] epoch: 5/500, step: 200/19000, loss: 2.48851

[Evaluate] dev score: 0.11000, dev loss: 2.83772

[Evaluate] best accuracy performence has been updated: 0.09000 --> 0.11000

[Train] epoch: 7/500, step: 300/19000, loss: 2.45083

[Evaluate] dev score: 0.10000, dev loss: 2.83191

[Train] epoch: 10/500, step: 400/19000, loss: 2.40939

[Evaluate] dev score: 0.11000, dev loss: 2.82912

[Train] epoch: 13/500, step: 500/19000, loss: 2.46860

[Evaluate] dev score: 0.10000, dev loss: 2.82651

[Train] epoch: 15/500, step: 600/19000, loss: 2.35298

[Evaluate] dev score: 0.11000, dev loss: 2.82572

[Train] epoch: 18/500, step: 700/19000, loss: 2.51577

[Evaluate] dev score: 0.11000, dev loss: 2.82325

[Train] epoch: 21/500, step: 800/19000, loss: 2.67935

[Evaluate] dev score: 0.11000, dev loss: 2.82096

[Train] epoch: 23/500, step: 900/19000, loss: 2.67027

[Evaluate] dev score: 0.12000, dev loss: 2.82111

[Evaluate] best accuracy performence has been updated: 0.11000 --> 0.12000

[Train] epoch: 26/500, step: 1000/19000, loss: 2.75336

[Evaluate] dev score: 0.11000, dev loss: 2.82484

[Train] epoch: 28/500, step: 1100/19000, loss: 3.29467

[Evaluate] dev score: 0.14000, dev loss: 2.82974

[Evaluate] best accuracy performence has been updated: 0.12000 --> 0.14000

[Train] epoch: 31/500, step: 1200/19000, loss: 2.66103

[Evaluate] dev score: 0.11000, dev loss: 2.83553

[Train] epoch: 34/500, step: 1300/19000, loss: 3.07006

[Evaluate] dev score: 0.12000, dev loss: 2.84030

[Train] epoch: 36/500, step: 1400/19000, loss: 2.58561

[Evaluate] dev score: 0.12000, dev loss: 2.88344

[Train] epoch: 39/500, step: 1500/19000, loss: 2.56494

[Evaluate] dev score: 0.09000, dev loss: 2.86722

[Train] epoch: 42/500, step: 1600/19000, loss: 3.25947

[Evaluate] dev score: 0.09000, dev loss: 2.88478

[Train] epoch: 44/500, step: 1700/19000, loss: 2.57795

[Evaluate] dev score: 0.11000, dev loss: 2.89065

[Train] epoch: 47/500, step: 1800/19000, loss: 2.56337

[Evaluate] dev score: 0.10000, dev loss: 2.92017

[Train] epoch: 50/500, step: 1900/19000, loss: 3.21902

[Evaluate] dev score: 0.08000, dev loss: 2.93244

[Train] epoch: 52/500, step: 2000/19000, loss: 2.39413

[Evaluate] dev score: 0.09000, dev loss: 2.94157

[Train] epoch: 55/500, step: 2100/19000, loss: 2.16729

[Evaluate] dev score: 0.08000, dev loss: 2.99453

[Train] epoch: 57/500, step: 2200/19000, loss: 2.14643

[Evaluate] dev score: 0.08000, dev loss: 2.96920

[Train] epoch: 60/500, step: 2300/19000, loss: 1.73778

[Evaluate] dev score: 0.06000, dev loss: 2.98271

[Train] epoch: 63/500, step: 2400/19000, loss: 1.67158

[Evaluate] dev score: 0.09000, dev loss: 3.02036

[Train] epoch: 65/500, step: 2500/19000, loss: 2.23220

[Evaluate] dev score: 0.09000, dev loss: 3.00788

[Train] epoch: 68/500, step: 2600/19000, loss: 2.52511

[Evaluate] dev score: 0.12000, dev loss: 3.03022

[Train] epoch: 71/500, step: 2700/19000, loss: 1.93339

[Evaluate] dev score: 0.12000, dev loss: 3.04054

[Train] epoch: 73/500, step: 2800/19000, loss: 2.46017

[Evaluate] dev score: 0.09000, dev loss: 3.07718

[Train] epoch: 76/500, step: 2900/19000, loss: 2.21428

[Evaluate] dev score: 0.12000, dev loss: 3.09395

[Train] epoch: 78/500, step: 3000/19000, loss: 2.45600

[Evaluate] dev score: 0.11000, dev loss: 3.08172

[Train] epoch: 81/500, step: 3100/19000, loss: 2.13425

[Evaluate] dev score: 0.10000, dev loss: 3.12596

[Train] epoch: 84/500, step: 3200/19000, loss: 3.24660

[Evaluate] dev score: 0.14000, dev loss: 3.12423

[Train] epoch: 86/500, step: 3300/19000, loss: 1.91041

[Evaluate] dev score: 0.10000, dev loss: 3.15128

[Train] epoch: 89/500, step: 3400/19000, loss: 2.12914

[Evaluate] dev score: 0.15000, dev loss: 3.13862

[Evaluate] best accuracy performence has been updated: 0.14000 --> 0.15000

[Train] epoch: 92/500, step: 3500/19000, loss: 2.84311

[Evaluate] dev score: 0.13000, dev loss: 3.21457

[Train] epoch: 94/500, step: 3600/19000, loss: 2.23920

[Evaluate] dev score: 0.13000, dev loss: 3.20514

[Train] epoch: 97/500, step: 3700/19000, loss: 2.31073

[Evaluate] dev score: 0.14000, dev loss: 3.20779

[Train] epoch: 100/500, step: 3800/19000, loss: 2.36979

[Evaluate] dev score: 0.13000, dev loss: 3.21187

[Train] epoch: 102/500, step: 3900/19000, loss: 1.95807

[Evaluate] dev score: 0.16000, dev loss: 3.20338

[Evaluate] best accuracy performence has been updated: 0.15000 --> 0.16000

[Train] epoch: 105/500, step: 4000/19000, loss: 1.85178

[Evaluate] dev score: 0.13000, dev loss: 3.22291

[Train] epoch: 107/500, step: 4100/19000, loss: 1.62311

[Evaluate] dev score: 0.12000, dev loss: 3.22457

[Train] epoch: 110/500, step: 4200/19000, loss: 1.23523

[Evaluate] dev score: 0.15000, dev loss: 3.23794

[Train] epoch: 113/500, step: 4300/19000, loss: 1.07370

[Evaluate] dev score: 0.13000, dev loss: 3.27242

[Train] epoch: 115/500, step: 4400/19000, loss: 2.15857

[Evaluate] dev score: 0.14000, dev loss: 3.28070

[Train] epoch: 118/500, step: 4500/19000, loss: 2.31446

[Evaluate] dev score: 0.13000, dev loss: 3.27546

[Train] epoch: 121/500, step: 4600/19000, loss: 1.37069

[Evaluate] dev score: 0.14000, dev loss: 3.27446

[Train] epoch: 123/500, step: 4700/19000, loss: 1.64295

[Evaluate] dev score: 0.12000, dev loss: 3.37979

[Train] epoch: 126/500, step: 4800/19000, loss: 1.40054

[Evaluate] dev score: 0.12000, dev loss: 3.33888

[Train] epoch: 128/500, step: 4900/19000, loss: 2.24069

[Evaluate] dev score: 0.12000, dev loss: 3.41059

[Train] epoch: 131/500, step: 5000/19000, loss: 1.68053

[Evaluate] dev score: 0.12000, dev loss: 3.30032

[Train] epoch: 134/500, step: 5100/19000, loss: 2.57372

[Evaluate] dev score: 0.10000, dev loss: 3.40965

[Train] epoch: 136/500, step: 5200/19000, loss: 1.57735

[Evaluate] dev score: 0.13000, dev loss: 3.33615

[Train] epoch: 139/500, step: 5300/19000, loss: 1.40068

[Evaluate] dev score: 0.11000, dev loss: 3.46263

[Train] epoch: 142/500, step: 5400/19000, loss: 2.46281

[Evaluate] dev score: 0.14000, dev loss: 3.45822

[Train] epoch: 144/500, step: 5500/19000, loss: 1.77564

[Evaluate] dev score: 0.10000, dev loss: 3.39936

[Train] epoch: 147/500, step: 5600/19000, loss: 2.09715

[Evaluate] dev score: 0.12000, dev loss: 3.47987

[Train] epoch: 150/500, step: 5700/19000, loss: 2.19265

[Evaluate] dev score: 0.12000, dev loss: 3.44766

[Train] epoch: 152/500, step: 5800/19000, loss: 1.60817

[Evaluate] dev score: 0.11000, dev loss: 3.48296

[Train] epoch: 155/500, step: 5900/19000, loss: 1.81749

[Evaluate] dev score: 0.13000, dev loss: 3.47991

[Train] epoch: 157/500, step: 6000/19000, loss: 1.47164

[Evaluate] dev score: 0.14000, dev loss: 3.49852

[Train] epoch: 160/500, step: 6100/19000, loss: 0.95415

[Evaluate] dev score: 0.10000, dev loss: 3.49171

[Train] epoch: 163/500, step: 6200/19000, loss: 0.83845

[Evaluate] dev score: 0.08000, dev loss: 3.61435

[Train] epoch: 165/500, step: 6300/19000, loss: 1.73972

[Evaluate] dev score: 0.12000, dev loss: 3.58961

[Train] epoch: 168/500, step: 6400/19000, loss: 1.85176

[Evaluate] dev score: 0.11000, dev loss: 3.62294

[Train] epoch: 171/500, step: 6500/19000, loss: 1.10044

[Evaluate] dev score: 0.13000, dev loss: 3.46658

[Train] epoch: 173/500, step: 6600/19000, loss: 1.08198

[Evaluate] dev score: 0.12000, dev loss: 3.50201

[Train] epoch: 176/500, step: 6700/19000, loss: 0.83829

[Evaluate] dev score: 0.13000, dev loss: 3.65137

[Train] epoch: 178/500, step: 6800/19000, loss: 1.92407

[Evaluate] dev score: 0.13000, dev loss: 3.67594

[Train] epoch: 181/500, step: 6900/19000, loss: 1.36036

[Evaluate] dev score: 0.14000, dev loss: 3.86113

[Train] epoch: 184/500, step: 7000/19000, loss: 1.81957

[Evaluate] dev score: 0.13000, dev loss: 3.81595

[Train] epoch: 186/500, step: 7100/19000, loss: 1.32176

[Evaluate] dev score: 0.11000, dev loss: 3.72902

[Train] epoch: 189/500, step: 7200/19000, loss: 1.00638

[Evaluate] dev score: 0.11000, dev loss: 3.73342

[Train] epoch: 192/500, step: 7300/19000, loss: 2.26401

[Evaluate] dev score: 0.11000, dev loss: 3.79074

[Train] epoch: 194/500, step: 7400/19000, loss: 1.28095

[Evaluate] dev score: 0.14000, dev loss: 3.75769

[Train] epoch: 197/500, step: 7500/19000, loss: 1.54406

[Evaluate] dev score: 0.11000, dev loss: 3.97123

[Train] epoch: 200/500, step: 7600/19000, loss: 1.09457

[Evaluate] dev score: 0.13000, dev loss: 3.82094

[Train] epoch: 202/500, step: 7700/19000, loss: 1.22336

[Evaluate] dev score: 0.14000, dev loss: 3.89391

[Train] epoch: 205/500, step: 7800/19000, loss: 1.01692

[Evaluate] dev score: 0.12000, dev loss: 4.08029

[Train] epoch: 207/500, step: 7900/19000, loss: 1.12315

[Evaluate] dev score: 0.11000, dev loss: 3.91319

[Train] epoch: 210/500, step: 8000/19000, loss: 0.54665

[Evaluate] dev score: 0.13000, dev loss: 4.09880

[Train] epoch: 213/500, step: 8100/19000, loss: 0.47531

[Evaluate] dev score: 0.13000, dev loss: 3.99117

[Train] epoch: 215/500, step: 8200/19000, loss: 1.28285

[Evaluate] dev score: 0.12000, dev loss: 4.08057

[Train] epoch: 218/500, step: 8300/19000, loss: 1.51334

[Evaluate] dev score: 0.12000, dev loss: 4.16564

[Train] epoch: 221/500, step: 8400/19000, loss: 0.77826

[Evaluate] dev score: 0.10000, dev loss: 3.97465

[Train] epoch: 223/500, step: 8500/19000, loss: 1.24851

[Evaluate] dev score: 0.12000, dev loss: 4.12431

[Train] epoch: 226/500, step: 8600/19000, loss: 0.56998

[Evaluate] dev score: 0.14000, dev loss: 3.98289

[Train] epoch: 228/500, step: 8700/19000, loss: 1.24792

[Evaluate] dev score: 0.13000, dev loss: 4.03360

[Train] epoch: 231/500, step: 8800/19000, loss: 0.97499

[Evaluate] dev score: 0.14000, dev loss: 4.12086

[Train] epoch: 234/500, step: 8900/19000, loss: 1.34552

[Evaluate] dev score: 0.15000, dev loss: 4.04631

[Train] epoch: 236/500, step: 9000/19000, loss: 0.64902

[Evaluate] dev score: 0.13000, dev loss: 4.23150

[Train] epoch: 239/500, step: 9100/19000, loss: 0.81200

[Evaluate] dev score: 0.12000, dev loss: 4.18604

[Train] epoch: 242/500, step: 9200/19000, loss: 1.94176

[Evaluate] dev score: 0.13000, dev loss: 4.36409

[Train] epoch: 244/500, step: 9300/19000, loss: 1.07175

[Evaluate] dev score: 0.13000, dev loss: 4.42877

[Train] epoch: 247/500, step: 9400/19000, loss: 1.55306

[Evaluate] dev score: 0.17000, dev loss: 4.17006

[Evaluate] best accuracy performence has been updated: 0.16000 --> 0.17000

[Train] epoch: 250/500, step: 9500/19000, loss: 0.82572

[Evaluate] dev score: 0.13000, dev loss: 4.29692

[Train] epoch: 252/500, step: 9600/19000, loss: 0.92585

[Evaluate] dev score: 0.13000, dev loss: 4.32064

[Train] epoch: 255/500, step: 9700/19000, loss: 0.85117