Start Hadoop before running the tests

[hadoop@mini-yum ~]$ start-dfs.sh

[hadoop@mini-yum ~]$ start-yarn.sh

1. Count the total number of occurrences of each word across a set of given text files

Code

package cn.feizhou.wcdemo;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* Acts as a client of the YARN cluster.

* It packages the run parameters of our MapReduce program, specifies the jar,

* and finally submits the job to YARN.

* @author

*

*/

public class WordcountDriver {

public static void main(String[] args) throws Exception {

if (args == null || args.length == 0) {

args = new String[2];

args[0] = "hdfs://mini-yum:9000/wordcount/input/wordcount.txt";

args[1] = "hdfs://mini-yum:9000/wordcount/output8";

}

Configuration conf = new Configuration();

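// These settings had no effect (probably because HADOOP_USER_NAME is read from the environment or a JVM system property, not from Configuration)

// conf.set("HADOOP_USER_NAME", "hadoop");

// conf.set("dfs.permissions.enabled", "false");

/*conf.set("mapreduce.framework.name", "yarn");

conf.set("yarn.resourcemanager.hostname", "mini1");*/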

Job job = Job.getInstance(conf);

/*job.setJar("/home/hadoop/wc.jar");*/

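// Locate the jar that contains this program by looking up the driver class

job.setJarByClass(WordcountDriver.class);

// Specify the Mapper and Reducer classes this job uses

job.setMapperClass(WordcountMapper.class);

job.setReducerClass(WordcountReducer.class);

// Specify the key/value types of the mapper output

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

// Specify the key/value types of the final reducer output

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// Specify the path of the job's raw input files

FileInputFormat.setInputPaths(job, new Path(args[0]));

// Specify the directory for the job's output (it must not exist yet)

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// Submit the job's configuration and the jar containing its Java classes to YARN, then wait for it to finish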

/*job.submit();*/

boolean res = job.waitForCompletion(true);

System.exit(res?0:1);

}

}

--------------------------------------------------

package cn.feizhou.wcdemo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

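/**

* KEYIN: by default, the starting byte offset of the line of text read by the MapReduce framework. It is a long,

* but Hadoop has its own, more compact serialization interface, so LongWritable is used instead of Long.

*

* VALUEIN: by default, the content of the line of text read by the framework. It is a String; for the same reason, Text is used.

*

* KEYOUT: the key of the data emitted by the user-defined logic, here the word. It is a String, so Text is used.

* VALUEOUT: the value of the data emitted by the user-defined logic, here the word count. It is an Integer, so IntWritable is used.

*

* @author

*

*/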

public class WordcountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

/**

* The map-phase business logic goes in the overridden map() method. The map task calls map() once for each line of input.

*/

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

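// Convert the text handed over by the map task to a String

String line = value.toString();

// Split the line into words on spaces

String[] words = line.split(" ");

// Emit each word as <word, 1>

for (String word : words) {

// The word is the key and the count 1 is the value, so the shuffle can route records by word and identical words reach the same reduce task

context.write(new Text(word), new IntWritable(1));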

}

}

}

--------------------------------------------------

package cn.feizhou.wcdemo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

/**

* KEYIN, VALUEIN correspond to the mapper's KEYOUT, VALUEOUT types.

*

* KEYOUT, VALUEOUT are the output types of the user-defined reduce logic.

* KEYOUT is the word.

* VALUEOUT is the total count.

* @author

*

*/

public class WordcountReducer extends Reducer<Text, IntWritable, Text, IntWritable>{

/**

* <angelababy,1><angelababy,1><angelababy,1><angelababy,1><angelababy,1>

* <hello,1><hello,1><hello,1><hello,1><hello,1><hello,1>

* <banana,1><banana,1><banana,1><banana,1><banana,1><banana,1>

* The key parameter is the key shared by one group of kv pairs for the same word

*/

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int count=0;

/*Iterator<IntWritable> iterator = values.iterator();

while(iterator.hasNext()){

count += iterator.next().get();

}*/

for(IntWritable value:values){

count += value.get();

}

context.write(key, new IntWritable(count));

}

}
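To make the data flow easier to follow, here is a minimal plain-Java sketch of what the mapper and reducer above do together. It does not use any Hadoop classes; `WordCountSketch`, `mapLine` and `count` are names made up for this illustration. It also splits on `\\s+` instead of a single space, because `split(" ")` produces empty tokens when a line contains consecutive spaces, and those empty strings would otherwise be counted as a word.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch of the map -> shuffle -> reduce flow; no Hadoop classes involved.
class WordCountSketch {

    // "Map" step: return the words of one line.
    // Splitting on \\s+ after trim() avoids the empty tokens that split(" ") yields for repeated spaces.
    static List<String> mapLine(String line) {
        List<String> words = new ArrayList<>();
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return words; // a blank line contributes no words
        }
        for (String word : trimmed.split("\\s+")) {
            words.add(word);
        }
        return words;
    }

    // "Shuffle + reduce" step: group identical words and add up one count per occurrence.
    // A TreeMap keeps the keys sorted, similar to how reducer input arrives sorted by key.
    static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> totals = new TreeMap<>();
        for (String line : lines) {
            for (String word : mapLine(line)) {
                totals.merge(word, 1, Integer::sum);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello banana", "hello  hello", "");
        System.out.println(count(lines)); // prints {banana=1, hello=3}
    }
}
```

In the real job the grouping happens across machines during the shuffle, so this only mirrors the logic, not the distribution.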

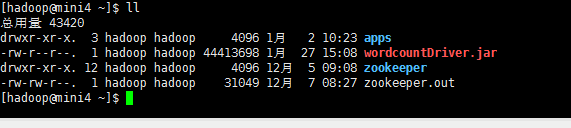

2. Package the project as wordcountDriver.jar

3. Upload the jar

4. Upload the files to be analyzed to HDFS

[hadoop@mini4 hadoop-2.6.4]$ hadoop fs -mkdir -p /wordcount/input

[hadoop@mini4 hadoop-2.6.4]$ hadoop fs -put LICENSE.txt NOTICE.txt README.txt /wordcount/input

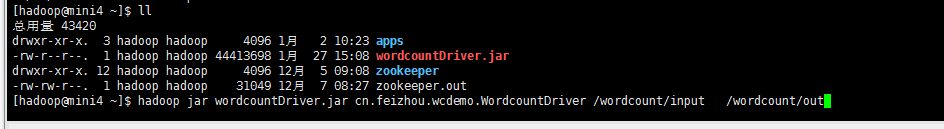

5. Run the test

[hadoop@mini4 ~]$ hadoop jar wordcountDriver.jar cn.feizhou.wcdemo.WordcountDriver /wordcount/input /wordcount/out

Test report

[hadoop@mini4 ~]$ hadoop jar wordcountDriver.jar cn.feizhou.wcdemo.WordcountDriver /wordcount/input /wordcount/out

19/01/27 16:01:56 INFO client.RMProxy: Connecting to ResourceManager at mini-yum/192.168.232.128:8032

19/01/27 16:01:56 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

19/01/27 16:01:58 INFO input.FileInputFormat: Total input paths to process : 3

19/01/27 16:01:58 INFO mapreduce.JobSubmitter: number of splits:3

19/01/27 16:01:58 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1548576099360_0001

19/01/27 16:01:59 INFO impl.YarnClientImpl: Submitted application application_1548576099360_0001

19/01/27 16:01:59 INFO mapreduce.Job: The url to track the job: http://mini-yum:8088/proxy/application_1548576099360_0001/

19/01/27 16:01:59 INFO mapreduce.Job: Running job: job_1548576099360_0001

19/01/27 16:02:11 INFO mapreduce.Job: Job job_1548576099360_0001 running in uber mode : false

19/01/27 16:02:11 INFO mapreduce.Job: map 0% reduce 0%

19/01/27 16:02:25 INFO mapreduce.Job: map 33% reduce 0%

19/01/27 16:02:30 INFO mapreduce.Job: map 67% reduce 0%

19/01/27 16:02:31 INFO mapreduce.Job: map 100% reduce 0%

19/01/27 16:02:43 INFO mapreduce.Job: map 100% reduce 100%

19/01/27 16:02:43 INFO mapreduce.Job: Job job_1548576099360_0001 completed successfully

19/01/27 16:02:43 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=38854

FILE: Number of bytes written=504645

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=17233

HDFS: Number of bytes written=8989

HDFS: Number of read operations=12

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=3

Launched reduce tasks=1

Data-local map tasks=3

Total time spent by all maps in occupied slots (ms)=47704

Total time spent by all reduces in occupied slots (ms)=13574

Total time spent by all map tasks (ms)=47704

Total time spent by all reduce tasks (ms)=13574

Total vcore-milliseconds taken by all map tasks=47704

Total vcore-milliseconds taken by all reduce tasks=13574

Total megabyte-milliseconds taken by all map tasks=48848896

Total megabyte-milliseconds taken by all reduce tasks=13899776

Map-Reduce Framework

Map input records=322

Map output records=3664

Map output bytes=31520

Map output materialized bytes=38866

Input split bytes=337

Combine input records=0

Combine output records=0

Reduce input groups=841

Reduce shuffle bytes=38866

Reduce input records=3664

Reduce output records=841

Spilled Records=7328

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=976

CPU time spent (ms)=9200

Physical memory (bytes) snapshot=735395840

Virtual memory (bytes) snapshot=3372982272

Total committed heap usage (bytes)=441135104

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=16896

File Output Format Counters

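Bytes Written=8989

Test result: the output is large, so only an excerpt is shown

2. Collect traffic statistics for each phone number

- 01 Compute each user's total upstream traffic, downstream traffic, and total traffic

- 02 Write the results to different files according to the phone number's home region

- 03 Sort the results by total traffic in descending order

Data source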

1363157985066 13726230503 00-FD-07-A4-72-B8:CMCC 120.196.100.82 i02.c.aliimg.com 24 27 2481 24681 200

1363157995052 13826544101 5C-0E-8B-C7-F1-E0:CMCC 120.197.40.4 4 0 264 0 200

1363157991076 13926435656 20-10-7A-28-CC-0A:CMCC 120.196.100.99 2 4 132 1512 200

1363154400022 13926251106 5C-0E-8B-8B-B1-50:CMCC 120.197.40.4 4 0 240 0 200

1363157993044 18211575961 94-71-AC-CD-E6-18:CMCC-EASY 120.196.100.99 iface.qiyi.com 视频网站 15 12 1527 2106 200

1363157995074 84138413 5C-0E-8B-8C-E8-20:7DaysInn 120.197.40.4 122.72.52.12 20 16 4116 1432 200

1363157993055 13560439658 C4-17-FE-BA-DE-D9:CMCC 120.196.100.99 18 15 1116 954 200

1363157995033 15920133257 5C-0E-8B-C7-BA-20:CMCC 120.197.40.4 sug.so.360.cn 信息安全 20 20 3156 2936 200

1363157983019 13719199419 68-A1-B7-03-07-B1:CMCC-EASY 120.196.100.82 4 0 240 0 200

1363157984041 13660577991 5C-0E-8B-92-5C-20:CMCC-EASY 120.197.40.4 s19.cnzz.com 站点统计 24 9 6960 690 200

1363157973098 15013685858 5C-0E-8B-C7-F7-90:CMCC 120.197.40.4 rank.ie.sogou.com 搜索引擎 28 27 3659 3538 200

1363157986029 15989002119 E8-99-C4-4E-93-E0:CMCC-EASY 120.196.100.99 www.umeng.com 站点统计 3 3 1938 180 200

1363157992093 13560439658 C4-17-FE-BA-DE-D9:CMCC 120.196.100.99 15 9 918 4938 200

1363157986041 13480253104 5C-0E-8B-C7-FC-80:CMCC-EASY 120.197.40.4 3 3 180 180 200

1363157984040 13602846565 5C-0E-8B-8B-B6-00:CMCC 120.197.40.4 2052.flash2-http.qq.com 综合门户 15 12 1938 2910 200

1363157995093 13922314466 00-FD-07-A2-EC-BA:CMCC 120.196.100.82 img.qfc.cn 12 12 3008 3720 200

1363157982040 13502468823 5C-0A-5B-6A-0B-D4:CMCC-EASY 120.196.100.99 y0.ifengimg.com 综合门户 57 102 7335 110349 200

1363157986072 18320173382 84-25-DB-4F-10-1A:CMCC-EASY 120.196.100.99 input.shouji.sogou.com 搜索引擎 21 18 9531 2412 200

1363157990043 13925057413 00-1F-64-E1-E6-9A:CMCC 120.196.100.55 t3.baidu.com 搜索引擎 69 63 11058 48243 200

1363157988072 13760778710 00-FD-07-A4-7B-08:CMCC 120.196.100.82 2 2 120 120 200

1363157985066 13726238888 00-FD-07-A4-72-B8:CMCC 120.196.100.82 i02.c.aliimg.com 24 27 2481 24681 200

1363157993055 13560436666 C4-17-FE-BA-DE-D9:CMCC 120.196.100.99 18 15 1116 954 200

First, handle tasks 01 and 02

Code

package cn.feizhou.provinceflow;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.Writable;

public class FlowBean implements Writable{

private long upFlow;

private long dFlow;

private long sumFlow;

// Deserialization instantiates the bean reflectively through the no-arg constructor, so one must be declared explicitly

public FlowBean(){}

public FlowBean(long upFlow, long dFlow) {

this.upFlow = upFlow;

this.dFlow = dFlow;

this.sumFlow = upFlow + dFlow;

}

public long getUpFlow() {

return upFlow;

}

public void setUpFlow(long upFlow) {

this.upFlow = upFlow;

}

public long getdFlow() {

return dFlow;

}

public void setdFlow(long dFlow) {

this.dFlow = dFlow;

}

public long getSumFlow() {

return sumFlow;

}

public void setSumFlow(long sumFlow) {

this.sumFlow = sumFlow;

}

/**

* Serialization method

*/

@Override

public void write(DataOutput out) throws IOException {

out.writeLong(upFlow);

out.writeLong(dFlow);

out.writeLong(sumFlow);

}

/**

* Deserialization method

* Note: fields must be read in exactly the same order in which they were written

*/

@Override

public void readFields(DataInput in) throws IOException {

upFlow = in.readLong();

dFlow = in.readLong();

sumFlow = in.readLong();

}

@Override

public String toString() {

return upFlow + "\t" + dFlow + "\t" + sumFlow;

}

}
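The ordering rule in `write()` and `readFields()` can be tried out without a cluster. Below is a minimal sketch that uses only the JDK's `DataOutputStream` and `DataInputStream`. `FlowRecordSketch` and `roundTrip` are hypothetical names for this illustration; the class is a stand-in for FlowBean and does not implement Hadoop's `Writable` interface.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for FlowBean that needs only the JDK.
class FlowRecordSketch {
    long upFlow;
    long dFlow;
    long sumFlow;

    // No-arg constructor, needed so an empty instance can be created before readFields() fills it.
    FlowRecordSketch() {}

    FlowRecordSketch(long upFlow, long dFlow) {
        this.upFlow = upFlow;
        this.dFlow = dFlow;
        this.sumFlow = upFlow + dFlow;
    }

    // Writes the three fields in a fixed order.
    void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(dFlow);
        out.writeLong(sumFlow);
    }

    // Reads the fields back in the same order; swapping two lines here would silently swap the values.
    void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        dFlow = in.readLong();
        sumFlow = in.readLong();
    }

    // Serializes to a byte array and deserializes into a fresh instance.
    static FlowRecordSketch roundTrip(FlowRecordSketch original) {
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            original.write(new DataOutputStream(buffer));
            FlowRecordSketch copy = new FlowRecordSketch();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(buffer.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        FlowRecordSketch copy = roundTrip(new FlowRecordSketch(2481, 24681));
        System.out.println(copy.upFlow + "\t" + copy.dFlow + "\t" + copy.sumFlow);
    }
}
```

The byte stream carries no field names, only three 8-byte values in a row, which is why the read order has to mirror the write order.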

--------------------------------------------------

package cn.feizhou.provinceflow;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowCount {

static class FlowCountMapper extends Mapper<LongWritable, Text, Text, FlowBean>{

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

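String line = value.toString(); // convert the line to a String

String[] fields = line.split("\t"); // split into fields

String phoneNbr = fields[1]; // the phone number

long upFlow = Long.parseLong(fields[fields.length-3]); // upstream and downstream traffic, indexed from the end because the number of middle fields varies

long dFlow = Long.parseLong(fields[fields.length-2]);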

context.write(new Text(phoneNbr), new FlowBean(upFlow, dFlow));

}

}

static class FlowCountReducer extends Reducer<Text, FlowBean, Text, FlowBean>{

//<183323,bean1><183323,bean2><183323,bean3><183323,bean4>.......

@Override

protected void reduce(Text key, Iterable<FlowBean> values, Context context) throws IOException, InterruptedException {

long sum_upFlow = 0;

long sum_dFlow = 0;

// Iterate over all beans, accumulating the upstream and downstream traffic separately

for(FlowBean bean: values){

sum_upFlow += bean.getUpFlow();

sum_dFlow += bean.getdFlow();

}

FlowBean resultBean = new FlowBean(sum_upFlow, sum_dFlow);

context.write(key, resultBean);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

/*conf.set("mapreduce.framework.name", "yarn");

conf.set("yarn.resourcemanager.hostname", "mini1");*/

Job job = Job.getInstance(conf);

/*job.setJar("/home/hadoop/wc.jar");*/

// Locate the jar that contains this program by looking up the driver class

job.setJarByClass(FlowCount.class);

// Specify the Mapper and Reducer classes this job uses

job.setMapperClass(FlowCountMapper.class);

job.setReducerClass(FlowCountReducer.class);

// Specify our custom partitioner

job.setPartitionerClass(ProvincePartitioner.class);

// Also set the number of reduce tasks to match the number of partitions

job.setNumReduceTasks(5);

// Specify the key/value types of the mapper output

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(FlowBean.class);

// Specify the key/value types of the final output

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(FlowBean.class);

// Specify the path of the job's raw input files

FileInputFormat.setInputPaths(job, new Path(args[0]));

// Specify the directory for the job's output (it must not exist yet)

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// Submit the job's configuration and the jar containing its Java classes to YARN, then wait for it to finish

/*job.submit();*/

boolean res = job.waitForCompletion(true);

System.exit(res?0:1);

}

}
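The parsing and summing done by FlowCountMapper and FlowCountReducer can be checked by hand with a small plain-Java sketch. `FlowAggregateSketch` and `aggregate` are hypothetical names, no Hadoop classes are used, and the two sample lines are the records for 13560439658 from the data source, written here with explicit tab separators to match `split("\t")`. The length check is an addition for this illustration; the original mapper does not validate its input.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch of the per-phone aggregation; not the Hadoop job itself.
class FlowAggregateSketch {

    // Returns {upFlow total, dFlow total} keyed by phone number.
    static Map<String, long[]> aggregate(List<String> lines) {
        Map<String, long[]> totals = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split("\t");
            // Need at least: timestamp, phone, upFlow, dFlow, status.
            if (fields.length < 5) {
                continue; // skip lines that are too short to parse (assumed policy)
            }
            String phone = fields[1];
            // Index from the end because the number of middle fields varies between lines.
            long up = Long.parseLong(fields[fields.length - 3]);
            long down = Long.parseLong(fields[fields.length - 2]);
            long[] sums = totals.computeIfAbsent(phone, k -> new long[2]);
            sums[0] += up;
            sums[1] += down;
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
            "1363157993055\t13560439658\tC4-17-FE-BA-DE-D9:CMCC\t120.196.100.99\t18\t15\t1116\t954\t200",
            "1363157992093\t13560439658\tC4-17-FE-BA-DE-D9:CMCC\t120.196.100.99\t15\t9\t918\t4938\t200");
        long[] sums = aggregate(lines).get("13560439658");
        // Prints 2034, 5892 and 7926 separated by tabs
        System.out.println(sums[0] + "\t" + sums[1] + "\t" + (sums[0] + sums[1]));
    }
}
```

Non-numeric traffic fields would still throw a `NumberFormatException` here, just as in the mapper above.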

-------------------------------------------------------

package cn.feizhou.provinceflow;

import java.util.HashMap;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Partitioner;

/**

* The type parameters K2 and V2 correspond to the key/value types of the map output

* @author

*

*/

public class ProvincePartitioner extends Partitioner<Text, FlowBean>{

public static HashMap<String, Integer> provinceDict = new HashMap<String, Integer>();

static{

provinceDict.put("136", 0);

provinceDict.put("137", 1);

provinceDict.put("138", 2);

provinceDict.put("139", 3);

}

@Override

public int getPartition(Text key, FlowBean value, int numPartitions) {

String prefix = key.toString().substring(0, 3);

Integer provinceId = provinceDict.get(prefix);

// Return the partition number; any prefix other than 136, 137, 138, 139 goes to partition 4

return provinceId==null?4:provinceId;

}

}
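The lookup in `getPartition` is easy to test on its own. The following is a minimal sketch in plain Java; `PrefixPartitionSketch` and `partitionFor` are hypothetical names, and the guard for keys shorter than three characters is an addition that the partitioner above does not have (there, `substring(0, 3)` would throw on such a key).

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java sketch of the prefix -> partition lookup; not a Hadoop Partitioner.
class PrefixPartitionSketch {
    private static final Map<String, Integer> PREFIX_TO_PARTITION = new HashMap<>();
    static final int DEFAULT_PARTITION = 4;

    static {
        PREFIX_TO_PARTITION.put("136", 0);
        PREFIX_TO_PARTITION.put("137", 1);
        PREFIX_TO_PARTITION.put("138", 2);
        PREFIX_TO_PARTITION.put("139", 3);
    }

    // Maps a phone number to a partition number in the range 0..4.
    static int partitionFor(String phone) {
        // Added guard: keys too short to have a 3-character prefix go to the default partition.
        if (phone == null || phone.length() < 3) {
            return DEFAULT_PARTITION;
        }
        Integer id = PREFIX_TO_PARTITION.get(phone.substring(0, 3));
        return id == null ? DEFAULT_PARTITION : id;
    }

    public static void main(String[] args) {
        System.out.println(partitionFor("13726230503")); // prints 1
        System.out.println(partitionFor("84138413"));    // prints 4
    }
}
```

Since the possible results are 0 through 4, the job needs five reduce tasks, which matches the `setNumReduceTasks(5)` call in the driver and explains why the output contains files part-r-00000 through part-r-00004.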

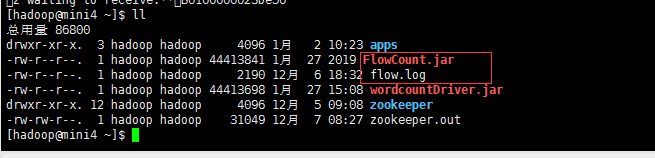

Upload the jar and the test data

Upload the file to be analyzed to HDFS

[hadoop@mini4 ~]$ hadoop fs -mkdir -p /FlowCount/input

[hadoop@mini4 ~]$ hadoop fs -put flow.log /FlowCount/input

Run the test

[hadoop@mini4 ~]$ hadoop jar FlowCount.jar cn.feizhou.provinceflow.FlowCount /FlowCount/input /FlowCount/out

Test report

19/01/27 19:17:08 INFO client.RMProxy: Connecting to ResourceManager at mini-yum/192.168.232.128:8032

19/01/27 19:17:10 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

19/01/27 19:17:22 INFO input.FileInputFormat: Total input paths to process : 1

19/01/27 19:17:22 INFO mapreduce.JobSubmitter: number of splits:1

19/01/27 19:17:23 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1548576099360_0002

19/01/27 19:17:23 INFO impl.YarnClientImpl: Submitted application application_1548576099360_0002

19/01/27 19:17:23 INFO mapreduce.Job: The url to track the job: http://mini-yum:8088/proxy/application_1548576099360_0002/

19/01/27 19:17:23 INFO mapreduce.Job: Running job: job_1548576099360_0002

19/01/27 19:17:45 INFO mapreduce.Job: Job job_1548576099360_0002 running in uber mode : false

19/01/27 19:17:45 INFO mapreduce.Job: map 0% reduce 0%

19/01/27 19:18:04 INFO mapreduce.Job: map 100% reduce 0%

19/01/27 19:18:21 INFO mapreduce.Job: map 100% reduce 20%

19/01/27 19:18:22 INFO mapreduce.Job: map 100% reduce 40%

19/01/27 19:18:23 INFO mapreduce.Job: map 100% reduce 60%

19/01/27 19:18:42 INFO mapreduce.Job: map 100% reduce 100%

19/01/27 19:18:45 INFO mapreduce.Job: Job job_1548576099360_0002 completed successfully

19/01/27 19:18:45 INFO mapreduce.Job: Counters: 50

File System Counters

FILE: Number of bytes read=863

FILE: Number of bytes written=643259

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2300

HDFS: Number of bytes written=551

HDFS: Number of read operations=18

HDFS: Number of large read operations=0

HDFS: Number of write operations=10

Job Counters

Killed reduce tasks=2

Launched map tasks=1

Launched reduce tasks=6

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=16543

Total time spent by all reduces in occupied slots (ms)=111014

Total time spent by all map tasks (ms)=16543

Total time spent by all reduce tasks (ms)=111014

Total vcore-milliseconds taken by all map tasks=16543

Total vcore-milliseconds taken by all reduce tasks=111014

Total megabyte-milliseconds taken by all map tasks=16940032

Total megabyte-milliseconds taken by all reduce tasks=113678336

Map-Reduce Framework

Map input records=22

Map output records=22

Map output bytes=789

Map output materialized bytes=863

Input split bytes=110

Combine input records=0

Combine output records=0

Reduce input groups=21

Reduce shuffle bytes=863

Reduce input records=22

Reduce output records=21

Spilled Records=44

Shuffled Maps =5

Failed Shuffles=0

Merged Map outputs=5

GC time elapsed (ms)=1466

CPU time spent (ms)=9710

Physical memory (bytes) snapshot=755515392

Virtual memory (bytes) snapshot=5083746304

Total committed heap usage (bytes)=294457344

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=2190

File Output Format Counters

Bytes Written=551

Result:

Read the contents of partition file part-r-00004

[hadoop@mini4 ~]$ hadoop fs -cat /FlowCount/out/part-r-00004

13480253104 180 180 360

13502468823 7335 110349 117684

13560436666 1116 954 2070

13560439658 2034 5892 7926

15013685858 3659 3538 7197

15920133257 3156 2936 6092

15989002119 1938 180 2118

18211575961 1527 2106 3633

18320173382 9531 2412 11943

84138413 4116 1432 5548

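Conclusion:

- 01 Compute each user's total upstream traffic, downstream traffic, and total traffic: OK

- 02 Write the results to different files according to the phone number's home region: OK

Next problem: 03, sort the results by total traffic in descending order

My approach is to feed each output file of the previous job back in as input and parse it again, so that every file gets sorted on its own.

Because the map output is sorted by key, the bean has to be used as the key.

Code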

package cn.feizhou.flowsum;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

/**

*

* Implements WritableComparable so the bean can be both serialized and sorted. If only serialization is needed, implementing Writable is enough.

*

*/

public class FlowBean implements WritableComparable<FlowBean>{

private long upFlow;

private long dFlow;

private long sumFlow;

// Deserialization instantiates the bean reflectively through the no-arg constructor, so one must be declared explicitly

public FlowBean(){}

public FlowBean(long upFlow, long dFlow) {

this.upFlow = upFlow;

this.dFlow = dFlow;

this.sumFlow = upFlow + dFlow;

}

public void set(long upFlow, long dFlow) {

this.upFlow = upFlow;

this.dFlow = dFlow;

this.sumFlow = upFlow + dFlow;

}

public long getUpFlow() {

return upFlow;

}

public void setUpFlow(long upFlow) {

this.upFlow = upFlow;

}

public long getdFlow() {

return dFlow;

}

public void setdFlow(long dFlow) {

this.dFlow = dFlow;

}

public long getSumFlow() {

return sumFlow;

}

public void setSumFlow(long sumFlow) {

this.sumFlow = sumFlow;

}

/**

* Serialization method

*/

@Override

public void write(DataOutput out) throws IOException {

out.writeLong(upFlow);

out.writeLong(dFlow);

out.writeLong(sumFlow);

}

/**

* Deserialization method

* Note: fields must be read in exactly the same order in which they were written

*/

@Override

public void readFields(DataInput in) throws IOException {

upFlow = in.readLong();

dFlow = in.readLong();

sumFlow = in.readLong();

}

@Override

public String toString() {

return upFlow + "\t" + dFlow + "\t" + sumFlow;

}

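/**

* Sort order

*/

@Override

public int compareTo(FlowBean o) {

return this.sumFlow>o.getSumFlow()?-1:1; // Descending by total traffic: returning -1 places the current object first when its total is larger. This never returns 0, so beans with equal totals are still treated as distinct keys.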

}

}
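The `compareTo` above works for this data set, but it never returns 0, which does not follow the usual `Comparable` contract. As an alternative, here is a minimal plain-Java sketch of a descending comparison built on `Long.compare`. `DescendingTotalSketch` and `phonesByTotalDescending` are hypothetical names, and the three records are taken from the part-r-00004 output shown earlier. One caveat if this were carried over to the Hadoop FlowBean: beans with equal totals would then likely be grouped into a single `reduce()` call, so the reducer would need to iterate over all values rather than take only the first one.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Plain-Java sketch of a descending sort by total traffic; not the Hadoop FlowBean.
class DescendingTotalSketch implements Comparable<DescendingTotalSketch> {
    final String phone;
    final long sumFlow;

    DescendingTotalSketch(String phone, long sumFlow) {
        this.phone = phone;
        this.sumFlow = sumFlow;
    }

    @Override
    public int compareTo(DescendingTotalSketch other) {
        // Arguments are swapped to get descending order; equal totals compare as 0.
        return Long.compare(other.sumFlow, this.sumFlow);
    }

    // Returns the phone numbers ordered from largest to smallest total.
    static List<String> phonesByTotalDescending(List<DescendingTotalSketch> records) {
        List<DescendingTotalSketch> sorted = new ArrayList<>(records);
        Collections.sort(sorted);
        List<String> phones = new ArrayList<>();
        for (DescendingTotalSketch record : sorted) {
            phones.add(record.phone);
        }
        return phones;
    }

    public static void main(String[] args) {
        List<DescendingTotalSketch> records = Arrays.asList(
            new DescendingTotalSketch("13480253104", 360),
            new DescendingTotalSketch("13502468823", 117684),
            new DescendingTotalSketch("13560436666", 2070));
        // Prints [13502468823, 13560436666, 13480253104]
        System.out.println(phonesByTotalDescending(records));
    }
}
```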

--------------------------------------------

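package cn.feizhou.flowsum;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* Input is the output of the FlowCount job, one record per line:

* 13480253104 180 180 360

* 13502468823 7335 110349 117684

* 13560436666 1116 954 2070

*

* @author

*

*/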

public class FlowCountSort {

static class FlowCountSortMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

FlowBean bean = new FlowBean();

Text v = new Text();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// Convert the line to a String

String line = value.toString();

// Split into fields

String[] fields = line.split("\t");

// The phone number

String phoneNbr = fields[0];

// Upstream and downstream traffic

long upFlow = Long.parseLong(fields[1]);

long dFlow = Long.parseLong(fields[2]);

bean.set(upFlow, dFlow);

v.set(phoneNbr);

// context.write() stores the serialized data, so reusing the same bean and Text objects across calls is safe

// key: FlowBean

// value: phone number

context.write(bean, v);

}

}

/**

* reduce() is called once per key. The keys here are FlowBean objects and no two of them compare as equal, so reduce() is called once for every record.

* @author: 张政

* @date: 2016-04-11 19:08:18

* @package_name: day07.sample

*/

static class FlowCountSortReducer extends Reducer<FlowBean, Text, Text, FlowBean> {

// <bean(),phonenbr>

@Override

protected void reduce(FlowBean bean, Iterable<Text> values, Context context) throws IOException, InterruptedException {

// values holds exactly one element: the phone number

context.write(values.iterator().next(), bean);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

/*conf.set("mapreduce.framework.name", "yarn");

conf.set("yarn.resourcemanager.hostname", "mini1");*/

Job job = Job.getInstance(conf);

/*job.setJar("/home/hadoop/wc.jar");*/

// Locate the jar that contains this program by looking up the driver class

job.setJarByClass(FlowCountSort.class);

// Specify the Mapper and Reducer classes this job uses

job.setMapperClass(FlowCountSortMapper.class);

job.setReducerClass(FlowCountSortReducer.class);

// Specify the key/value types of the mapper output

job.setMapOutputKeyClass(FlowBean.class);

job.setMapOutputValueClass(Text.class);

// Specify the key/value types of the final output

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(FlowBean.class);

// Specify the path of the job's raw input files

FileInputFormat.setInputPaths(job, new Path(args[0]));

// Specify the directory for the job's output

Path outPath = new Path(args[1]);

/*FileSystem fs = FileSystem.get(conf);

if(fs.exists(outPath)){

fs.delete(outPath, true);

}*/

FileOutputFormat.setOutputPath(job, outPath);

// Submit the job's configuration and the jar containing its Java classes to YARN, then wait for it to finish

/*job.submit();*/

boolean res = job.waitForCompletion(true);

System.exit(res?0:1);

}

}

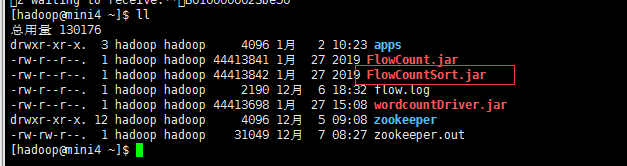

Upload the jar

Run the test

[hadoop@mini4 ~]$ hadoop jar FlowCountSort.jar cn.feizhou.flowsum.FlowCountSort /FlowCount/out/part-r-00004 /FlowCountSort/out/

Test report

19/01/27 19:34:59 INFO client.RMProxy: Connecting to ResourceManager at mini-yum/192.168.232.128:8032

19/01/27 19:35:00 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

19/01/27 19:35:03 INFO input.FileInputFormat: Total input paths to process : 1

19/01/27 19:35:03 INFO mapreduce.JobSubmitter: number of splits:1

19/01/27 19:35:03 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1548576099360_0003

19/01/27 19:35:03 INFO impl.YarnClientImpl: Submitted application application_1548576099360_0003

19/01/27 19:35:04 INFO mapreduce.Job: The url to track the job: http://mini-yum:8088/proxy/application_1548576099360_0003/

19/01/27 19:35:04 INFO mapreduce.Job: Running job: job_1548576099360_0003

19/01/27 19:35:15 INFO mapreduce.Job: Job job_1548576099360_0003 running in uber mode : false

19/01/27 19:35:15 INFO mapreduce.Job: map 0% reduce 0%

19/01/27 19:35:47 INFO mapreduce.Job: map 100% reduce 0%

19/01/27 19:35:59 INFO mapreduce.Job: map 100% reduce 100%

19/01/27 19:36:01 INFO mapreduce.Job: Job job_1548576099360_0003 completed successfully

19/01/27 19:36:04 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=383

FILE: Number of bytes written=214283

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=379

HDFS: Number of bytes written=267

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=9820

Total time spent by all reduces in occupied slots (ms)=9427

Total time spent by all map tasks (ms)=9820

Total time spent by all reduce tasks (ms)=9427

Total vcore-milliseconds taken by all map tasks=9820

Total vcore-milliseconds taken by all reduce tasks=9427

Total megabyte-milliseconds taken by all map tasks=10055680

Total megabyte-milliseconds taken by all reduce tasks=9653248

Map-Reduce Framework

Map input records=10

Map output records=10

Map output bytes=357

Map output materialized bytes=383

Input split bytes=112

Combine input records=0

Combine output records=0

Reduce input groups=10

Reduce shuffle bytes=383

Reduce input records=10

Reduce output records=10

Spilled Records=20

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=239

CPU time spent (ms)=2790

Physical memory (bytes) snapshot=314318848

Virtual memory (bytes) snapshot=1691697152

Total committed heap usage (bytes)=168103936

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=267

File Output Format Counters

Bytes Written=267

Result:

[hadoop@mini4 ~]$ hadoop fs -cat /FlowCountSort/out/part-r-00000

13502468823 7335 110349 117684

18320173382 9531 2412 11943

13560439658 2034 5892 7926

15013685858 3659 3538 7197

15920133257 3156 2936 6092

84138413 4116 1432 5548

18211575961 1527 2106 3633

15989002119 1938 180 2118

13560436666 1116 954 2070

13480253104 180 180 360

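Conclusion:

The file is now sorted by total traffic in descending order.

Note: the entry highlighted in red in the original post is invalid data. It should have been filtered out when the map phase parsed the input; it shows up here only because no filtering was done.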
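A filter of the kind the note describes could look roughly like the sketch below. The validity rule is an assumption made for this illustration (exactly 11 digits, starting with 1), and `PhoneFilterSketch` and `isValidPhone` are hypothetical names; the original code contains no such check. In the mapper, a record whose phone field fails the check would simply be skipped before `context.write()` is called.

```java
import java.util.regex.Pattern;

// Sketch of a phone-number sanity check that a mapper could apply before emitting a record.
class PhoneFilterSketch {
    // Assumed rule: exactly 11 digits, the first of which is 1.
    private static final Pattern MOBILE = Pattern.compile("1\\d{10}");

    static boolean isValidPhone(String phone) {
        return phone != null && MOBILE.matcher(phone).matches();
    }

    public static void main(String[] args) {
        System.out.println(isValidPhone("13726230503")); // prints true
        System.out.println(isValidPhone("84138413"));    // prints false
    }
}
```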

Full source code

https://download.csdn.net/download/zhou920786312/10940769
