Cloud Computing: Building a Single-Master, Multi-Node Kubernetes Cluster on Alibaba Cloud with kubeadm

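Creating the Alibaba Cloud machines
-----------------------------------

- Log in to Alibaba Cloud and select Elastic Compute Service (ECS).
- Click "Management Console".
- Choose to create an ECS instance.
- Start the creation wizard and select pay-as-you-go billing; the remaining options can be chosen freely.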

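Machine environment configuration
---------------------------------

This guide uses three machines: one master and two worker nodes. Unless a step says it applies only to the master, run it on all three machines. On Alibaba Cloud, use the private IPs for all cluster configuration; the public IPs are only needed when accessing services from a browser.

| Public IP | Private IP | Role | Hostname |
|---|---|---|---|
| 8.134.74.86 | 172.17.75.165 | master | kubeadm001 |
| 8.134.83.65 | 172.17.75.163 | node | kubeadm002 |
| 8.134.86.26 | 172.17.75.164 | node | kubeadm003 |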

### Install base packages that may be needed

```
yum install -y openssh-clients openssh-server ntpdate ntp yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release socat ipvsadm conntrack telnet
```

### Edit the hosts file

On every machine, add the following three lines to `/etc/hosts`, using the private IPs from the table above:

```
172.17.75.165 kubeadm001
172.17.75.163 kubeadm002
172.17.75.164 kubeadm003
```

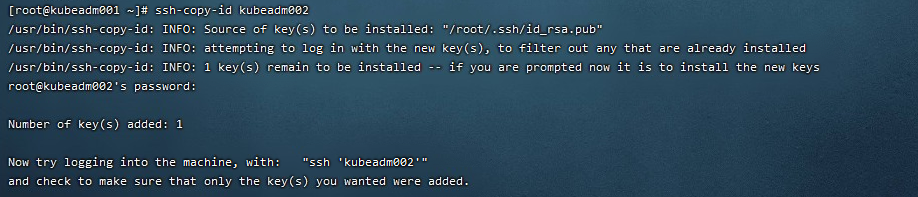
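Editing `/etc/hosts` by hand on three machines is easy to get wrong, so here is a minimal sketch of an idempotent helper. The function name `add_hosts_entries` is my own invention, and the IPs in the usage comment are the private IPs from the table above; adjust both to your environment.

```shell
# Append "IP hostname" pairs to a hosts file, skipping hostnames that are already present.
# Usage: add_hosts_entries <hosts-file> "<ip> <name>" ...
add_hosts_entries() {
  local hosts_file=$1
  shift
  local entry name
  for entry in "$@"; do
    name=${entry##* }   # the last word of the entry is the hostname
    # -w matches the hostname as a whole word, so kubeadm001 does not match kubeadm0011
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}

# Example (run as root on each machine):
# add_hosts_entries /etc/hosts "172.17.75.165 kubeadm001" "172.17.75.163 kubeadm002" "172.17.75.164 kubeadm003"
```

Running it twice leaves the file unchanged, which makes it safe to keep in a provisioning script.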

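### Passwordless SSH between hosts

The goal is passwordless login from kubeadm001 to the other machines.

- Run `ssh-keygen` and press Enter at every prompt, leaving the passphrase empty:

```
ssh-keygen
```

- Copy the generated public key to the remote hosts kubeadm002 and kubeadm003:

```
ssh-copy-id kubeadm002
ssh-copy-id kubeadm003
```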

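### Disable swap to improve performance

```
swapoff -a
```

#### Why disable swap?

Swap is disk space that the kernel falls back on when the machine runs out of memory, and it is much slower than RAM. For performance reasons, Kubernetes by default does not allow swap to be enabled. kubeadm checks during initialization whether swap is off, and initialization fails if it is not. **If you do not want to disable swap, you can pass `--ignore-preflight-errors=Swap` when installing Kubernetes** to get past this check.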
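Note that `swapoff -a` only lasts until the next reboot. To keep swap off permanently, the swap entries in `/etc/fstab` also need to be commented out. The following is a minimal sketch assuming GNU sed; the function name `comment_out_swap` is hypothetical, and it is worth trying it on a copy of the file first.

```shell
# Comment out every active swap entry in an fstab-style file so that swap
# stays off after a reboot. The path is a parameter so the function can be
# tried on a copy before touching the real /etc/fstab.
comment_out_swap() {
  local fstab=${1:-/etc/fstab}
  # Prefix lines that are not already comments and contain "swap"
  # as a whitespace-delimited field (GNU sed syntax).
  sed -i -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Example (as root):
# cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab
```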

### Adjust kernel parameters

```
modprobe br_netfilter
echo "modprobe br_netfilter" >> /etc/profile

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

sysctl -p /etc/sysctl.d/k8s.conf
```

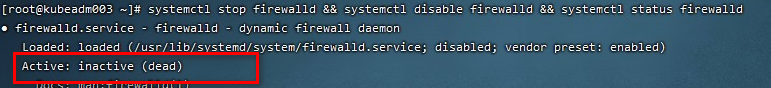
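Appending `modprobe` to `/etc/profile` only reloads the module when someone logs in. On systemd-based distributions such as CentOS 7, an alternative is to list the module in `/etc/modules-load.d/`, which is read at boot. This is a sketch of that alternative rather than the method used in this guide; `persist_module` is a hypothetical helper name.

```shell
# Persist a kernel module across reboots via systemd-modules-load, which
# reads one module name per line from /etc/modules-load.d/*.conf.
persist_module() {
  local module=$1
  local conf_dir=${2:-/etc/modules-load.d}   # second argument exists only to ease testing
  mkdir -p "$conf_dir"
  echo "$module" > "$conf_dir/$module.conf"
}

# Example (as root):
# persist_module br_netfilter && modprobe br_netfilter
```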

### Disable the firewall

```
systemctl stop firewalld && systemctl disable firewalld && systemctl status firewalld
```

If you prefer `iptables` to firewalld, you can install it instead. Run the following on all three machines.

* Install the `iptables` service package

```
yum install iptables-services -y
```

* Stop and disable `iptables`

```
service iptables stop && systemctl disable iptables
```

* Flush the firewall rules

```
iptables -F
```

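### Disable SELinux

Use `getenforce` to check whether SELinux is disabled:

```
[root@kubeadm003 ~]# getenforce
```

**If the result is not** `Disabled`, disable SELinux with the following command:

```
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
```

**Note**: the change to the SELinux configuration only takes effect after the machine is rebooted.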

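### Time synchronization

Synchronize the clocks of all three machines with network time.

* Synchronize the machine clock with network time once

```
ntpdate cn.pool.ntp.org
```

* Set up a cron job that synchronizes the clock with network time every hour

```
crontab -e
```

* Add the cron entry

```
0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org
```

* Restart the cron service

```
systemctl restart crond
```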

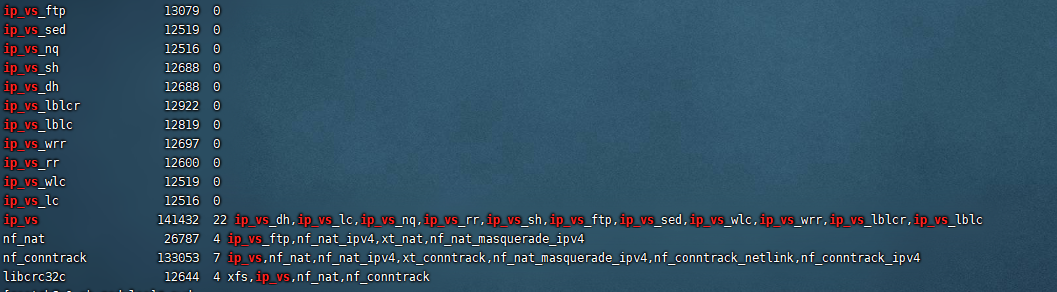

### Enable IPVS

* Create the file `ipvs.modules` in the `/etc/sysconfig/modules` directory with the following content

```
vi /etc/sysconfig/modules/ipvs.modules
```

```
#!/bin/bash
# Load every IPVS-related kernel module that is available for the running kernel.
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
  # modinfo succeeds only if the module exists
  /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
  if [ $? -eq 0 ]; then
    /sbin/modprobe ${kernel_module}
  fi
done
```

* Make `ipvs.modules` executable, run it, and check the result

```
chmod +x /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
```

#### What is IPVS?

IPVS (IP Virtual Server) implements transport-layer load balancing and is part of the Linux kernel. It runs on the host and acts as a load balancer.

### Configure the repo for the Kubernetes components

* Use vi to create `/etc/yum.repos.d/kubernetes.repo`

```
vi /etc/yum.repos.d/kubernetes.repo
```

The file content is as follows:

```
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
```

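### Install the Docker service

#### Remove old versions

The machine may already have an older Docker version installed; remove it before installing the new one:

```
yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine
```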

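#### Configure the package mirror

Inside China it is usually best to use a domestic mirror; I normally use the Alibaba Cloud mirror. Install Docker from the Alibaba Cloud mirror as follows:

```
yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
```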

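#### Update the cache and install Docker CE

docker-ce is the community edition and docker-ee is the enterprise edition. The community edition is the usual choice, since the enterprise edition requires a license.

```
# Refresh the yum cache and install Docker CE
sudo yum makecache fast
yum install -y docker-ce docker-ce-cli containerd.io
```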

#### Start Docker, enable it at boot, and check its status

```
systemctl start docker && systemctl enable docker && systemctl status docker
```

#### Configure Docker registry mirrors and the cgroup driver

```
vi /etc/docker/daemon.json
```

* This guide adds several registry mirrors, including the Alibaba Cloud mirror and the 163 mirror

```
{
  "registry-mirrors": ["https://b5b7g6yt.mirror.aliyuncs.com", "https://registry.docker-cn.com", "https://docker.mirrors.ustc.edu.cn", "https://dockerhub.azk8s.cn", "http://hub-mirror.c.163.com", "http://qtid6917.mirror.aliyuncs.com", "https://rncxm540.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```

* The Alibaba Cloud mirror address is different for every account, so use the one assigned to your own account

* After editing the file, run `daemon-reload` and restart `docker`

```
systemctl daemon-reload && systemctl restart docker && systemctl status docker
```
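The `exec-opts` line switches Docker to the systemd cgroup driver. After the restart it is worth confirming that the setting took effect, because a typo in `daemon.json` is easy to miss. A minimal sketch follows; `check_cgroup_driver` is a hypothetical helper name, while `docker info --format '{{.CgroupDriver}}'` is the standard way to print the active driver.

```shell
# Report whether the running Docker daemon uses the systemd cgroup driver.
check_cgroup_driver() {
  local driver
  driver=$(docker info --format '{{.CgroupDriver}}') || return 1
  if [ "$driver" = "systemd" ]; then
    echo "ok: Docker uses the systemd cgroup driver"
  else
    echo "mismatch: Docker uses '$driver', expected 'systemd'" >&2
    return 1
  fi
}

# Example:
# check_cgroup_driver
```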

Installing Kubernetes
---------------------

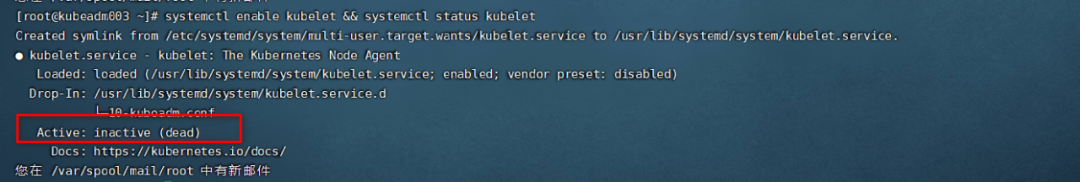

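### Install the packages needed to initialize Kubernetes

* Install `kubelet`, `kubeadm`, and `kubectl`

```
yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6
```

* Enable `kubelet` at boot and check its status

```
systemctl enable kubelet && systemctl status kubelet
```

At this point `kubelet` is not yet in the `running` state. That is expected: once the cluster has been initialized, the status becomes normal.

**Notes**:

* `kubeadm`: a tool for initializing a Kubernetes cluster
* `kubelet`: **must be installed on every node in the cluster; it starts the Pods**
* `kubectl`: a command-line tool for deploying and managing applications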

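### Initialize the cluster with kubeadm

**This step only needs to be run on the master node.** In this setup the master's private IP is 172.17.75.165.

* `--kubernetes-version=1.20.6` sets the Kubernetes version
* `--apiserver-advertise-address=172.17.75.165` is the master's private IP on Alibaba Cloud
* `--image-repository registry.aliyuncs.com/google_containers` sets the registry that the images are pulled from
* `--pod-network-cidr=10.244.0.0/16` sets the address range used for the Pod network

```
kubeadm init --kubernetes-version=1.20.6 --apiserver-advertise-address=172.17.75.165 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=SystemVerification
```

By default kubeadm pulls its images from k8s.gcr.io, which is slow to reach from inside China, so a domestic registry works better.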
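The same settings can also be kept in a kubeadm configuration file, which is easier to review and version than a long command line. The sketch below renders such a file with the `kubeadm.k8s.io/v1beta2` API, which is the config version used by kubeadm 1.20. The function name `render_kubeadm_config` and the file name `kubeadm-config.yaml` are my own choices, not part of the original procedure.

```shell
# Print a kubeadm config equivalent to the flags used above.
# Arguments: <k8s version> <advertise IP> <image repository> <pod CIDR>
render_kubeadm_config() {
  local version=$1 advertise_ip=$2 image_repo=$3 pod_cidr=$4
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise_ip}
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v${version}
imageRepository: ${image_repo}
networking:
  podSubnet: ${pod_cidr}
EOF
}

# Example (on the master):
# render_kubeadm_config 1.20.6 172.17.75.165 registry.aliyuncs.com/google_containers 10.244.0.0/16 > kubeadm-config.yaml
# kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification
```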

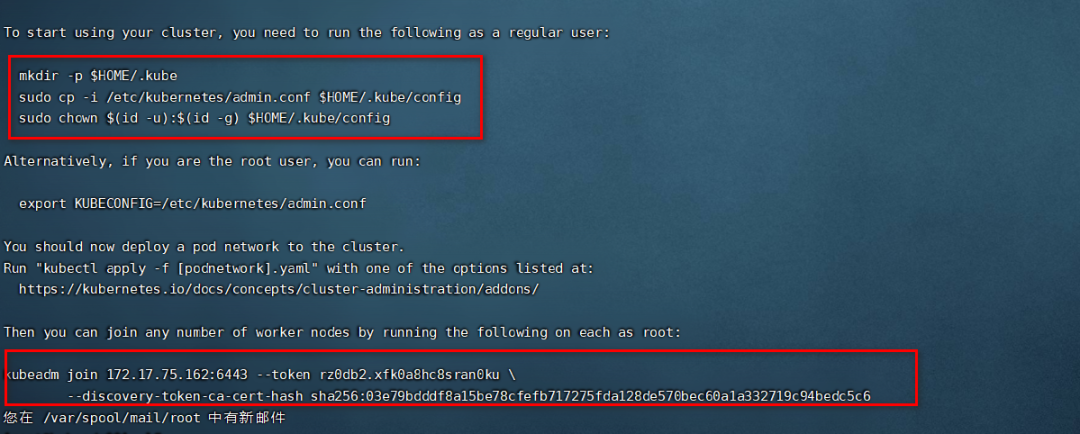

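* Output like that in the screenshot means the installation on the master succeeded

* Set up the kubectl config file, using the commands highlighted in the screenshot above

```
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```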

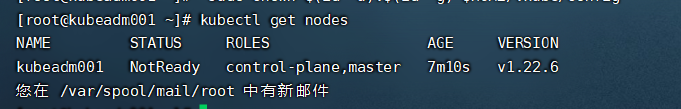

* Once kubectl is configured, list the nodes

```
kubectl get nodes
```

* Use `kubeadm token create` to generate the command for joining nodes

```
kubeadm token create --print-join-command
```

**The generated command looks like the following; it may differ each time it is generated**

```
kubeadm join 172.17.75.165:6443 --token vsnwiz.9tdg2hklcmx26gbb --discovery-token-ca-cert-hash sha256:03e79bdddf8a15be78cfefb717275fda128de570bec60a1a332719c94bedc5c6
```

**Run this `kubeadm join` command on both kubeadm002 and kubeadm003.**

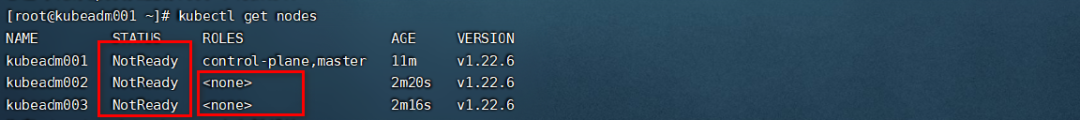

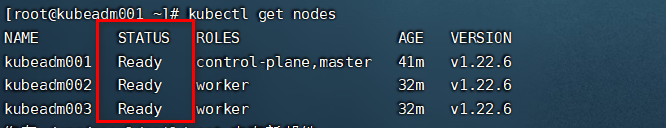
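Because passwordless SSH from kubeadm001 to the workers was set up earlier, the join step can also be driven from the master instead of logging in to each node. This is a rough sketch under that assumption; `join_workers` is a hypothetical helper, and it stops at the first host that fails.

```shell
# Run a kubeadm join command on each listed worker over SSH.
# Usage: join_workers "<join command>" <host> ...
join_workers() {
  local join_cmd=$1
  shift
  local host
  for host in "$@"; do
    echo "Joining $host ..."
    ssh "$host" "$join_cmd" || return 1
  done
}

# Example (on the master):
# join_workers "$(kubeadm token create --print-join-command)" kubeadm002 kubeadm003
```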

* List the nodes again

```
kubectl get nodes
```

The **ROLES** column of the newly added nodes is empty, which already means they are worker nodes, but we can label them as worker explicitly:

```
kubectl label node kubeadm002 node-role.kubernetes.io/worker=worker
kubectl label node kubeadm003 node-role.kubernetes.io/worker=worker
```

Listing the nodes once more now shows:

```
[root@kubeadm001 ~]# kubectl get nodes
NAME         STATUS     ROLES                  AGE     VERSION
kubeadm001   NotReady   control-plane,master   14m     v1.20.6
kubeadm002   NotReady   worker                 5m48s   v1.20.6
kubeadm003   NotReady   worker                 5m44s   v1.20.6
```

**The NotReady value in the STATUS column means that no network plugin has been installed yet.**
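When scripting the installation it helps to extract just the nodes that are not ready from the `kubectl get nodes` table. A small sketch with awk; `not_ready_nodes` is a hypothetical name, and it treats any STATUS other than exactly `Ready` (including `Ready,SchedulingDisabled`) as not ready.

```shell
# Read `kubectl get nodes` output on stdin and print the NAME of every
# node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
# kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready.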

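### Install the Kubernetes network component: Calico

Prepare a `calico.yaml` manifest. I uploaded a copy to Baidu Netdisk (link: https://pan.baidu.com/s/1QMAAh6iL-jbke\_lM0dZxBg, extraction code: 1234), or you can download it from https://docs.projectcalico.org/manifests/calico.yaml. Use either of the two commands below.

```
# Apply the downloaded file
kubectl apply -f calico.yaml

# or apply the manifest directly from the URL
kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
```

**This takes a little while.**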

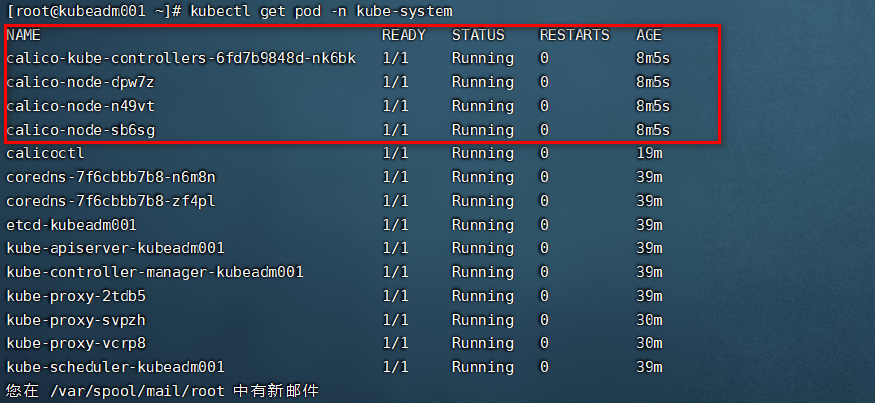

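* Check the Pods in the kube-system namespace

```
kubectl get pod -n kube-system
```

* Check the node status again

```
kubectl get nodes
```

The STATUS of the nodes has now changed to **Ready**, which means the cluster is running normally.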

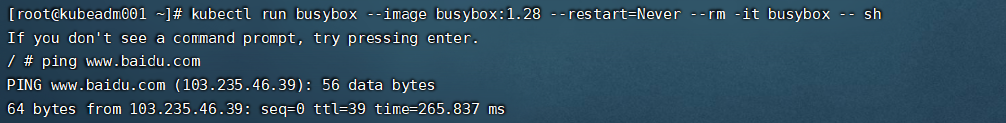

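#### Test whether Pods created by Kubernetes can reach the network

Create a busybox container and open a shell inside it:

```
kubectl run busybox --image busybox:1.28 --restart=Never --rm -it -- sh
```

Then run `ping www.baidu.com` inside the container. If the ping succeeds, the network is reachable and Calico is installed correctly.

#### Test whether CoreDNS works

In the same busybox container, run the following command:

```
nslookup kubernetes.default.svc.cluster.local
```

The output is shown below. 10.96.0.10 is the clusterIP of CoreDNS, so CoreDNS is working:

```
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes.default.svc.cluster.local
Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local
```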

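### Install a Tomcat service in the cluster

* Create `tomcat.yaml`

```
vi tomcat.yaml
```

```
apiVersion: v1  # Pod belongs to the core v1 API group
kind: Pod  # the resource being created is a Pod
metadata:  # metadata
  name: tomcat-pod  # Pod name
  namespace: default  # namespace the Pod belongs to
  labels:
    app: myapp  # label on the Pod
    env: dev  # label on the Pod
spec:
  containers:  # a list of container objects; several entries, each with its own name, can follow
  - name: tomcat-pod-test  # container name
    ports:
    - containerPort: 8080
    image: tomcat:8.5-jre8-alpine  # image used by the container
    imagePullPolicy: IfNotPresent
```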

* Deploy Tomcat with kubectl

```
kubectl apply -f tomcat.yaml
```

* List the Pods

```
[root@kubeadm001 ~]# kubectl get pods
NAME         READY   STATUS    RESTARTS   AGE
tomcat-pod   1/1     Running   0          58s
```

* Create the Service for Tomcat, `tomcat-service.yaml`

```
vi tomcat-service.yaml
```

The content is as follows:

```

apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30080
  selector:
    app: myapp
    env: dev

```

* Create the Tomcat `Service`

```

kubectl apply -f tomcat-service.yaml

```

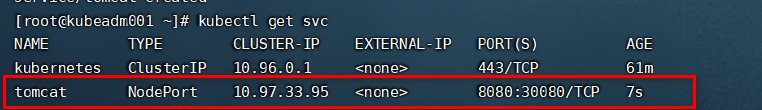

* Check the status of the Service

```

kubectl get svc

```

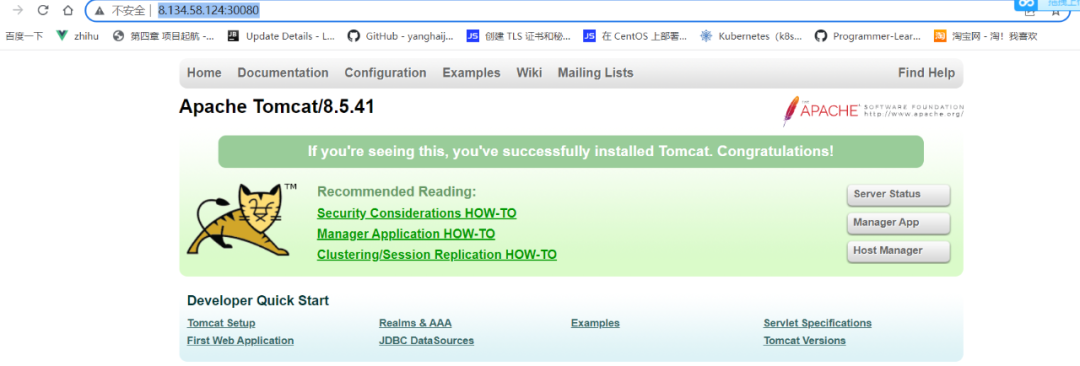

In a browser, open http://8.134.58.124:30080/ (8.134.58.124 stands for the public IP of the master node; use your own).
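The same check can be done from a terminal, which is handy while the Pod is still starting. The sketch below polls a URL with curl until it answers; `wait_for_http` is a hypothetical helper, it assumes bash, and the attempt count and delay are arbitrary defaults. If the page never loads from outside, it is also worth checking that the ECS security group allows inbound traffic on the NodePort.

```shell
# Poll a URL until it returns a successful HTTP response or the attempts run out.
# Usage: wait_for_http <url> [attempts] [delay-seconds]
wait_for_http() {
  local url=$1 attempts=${2:-10} delay=${3:-3}
  local i
  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl fail on HTTP errors, -sS keeps it quiet except for errors
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "reachable: $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "not reachable after $attempts attempts: $url" >&2
  return 1
}

# Example (replace the IP with your master's public IP):
# wait_for_http http://8.134.58.124:30080/
```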

Installing the Kubernetes Dashboard web UI
------------------------------------------

* Create the file kubernetes-dashboard.yaml

```

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

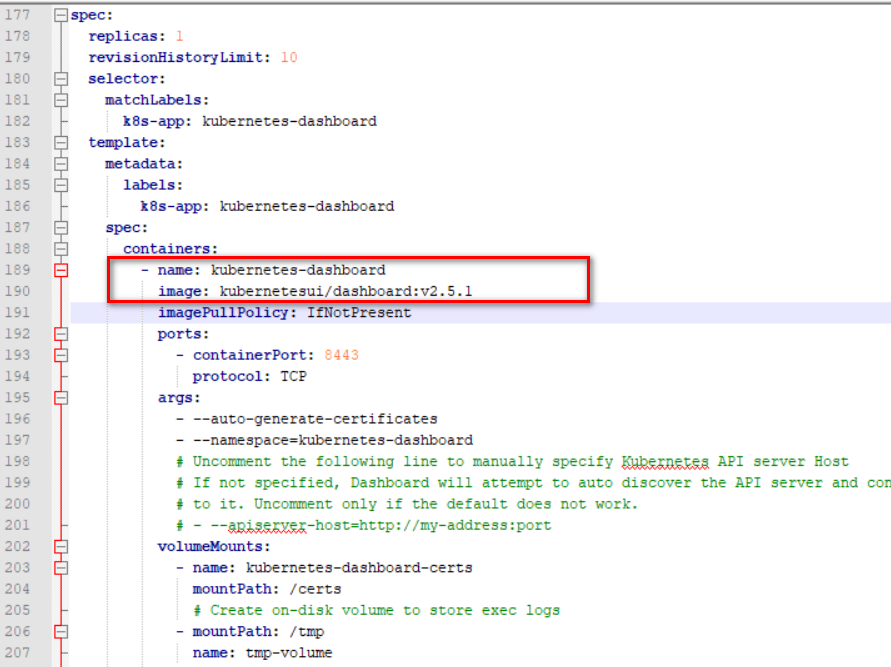

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.0.0-beta8

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"beta.kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.1

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"beta.kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

```

* Install kubernetes-dashboard

```
kubectl apply -f kubernetes-dashboard.yaml
```

* Check the status of the dashboard Pods (this takes a little time)

```
kubectl get pods -n kubernetes-dashboard
```

Output like the following means the installation succeeded:

```
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-778b77d469-r6cwm   1/1     Running   0          2m
kubernetes-dashboard-648b6964bf-gnbw4        1/1     Running   0          2m
```

* Check the dashboard's front-end Service

```
kubectl get svc -n kubernetes-dashboard
```

The result is:

```
NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
dashboard-metrics-scraper   ClusterIP   10.97.73.249   <none>        8000/TCP   2m57s
kubernetes-dashboard        ClusterIP   10.106.69.73   <none>        443/TCP    2m57s
```

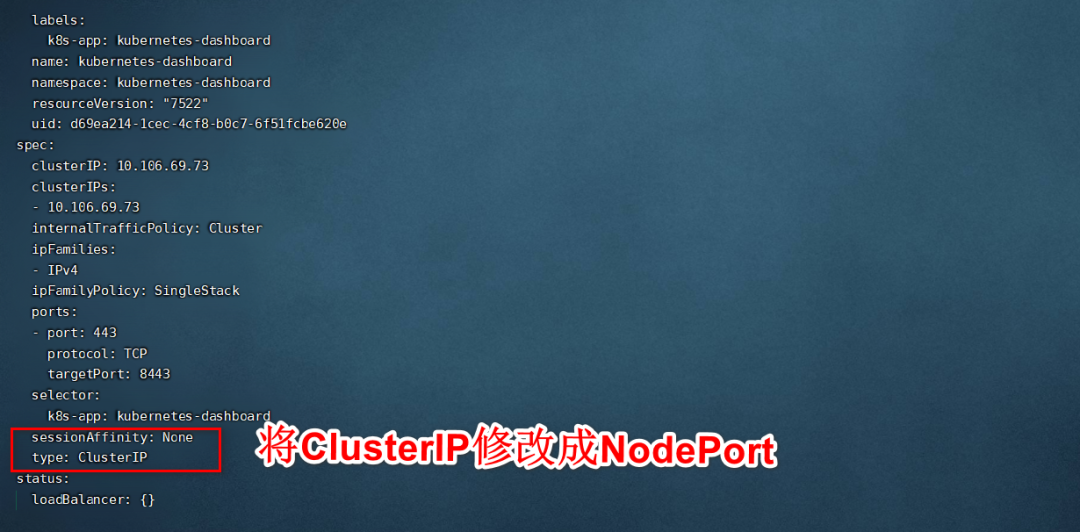

The TYPE of the kubernetes-dashboard Service is ClusterIP. Edit the Service and change `type: ClusterIP` to `type: NodePort`:

```
kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
```

* Check the kubernetes-dashboard Service again

```
[root@kubeadm001 ~]# kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.97.73.249   <none>        8000/TCP        7m31s
kubernetes-dashboard        NodePort    10.106.69.73   <none>        443:31284/TCP   7m31s
```

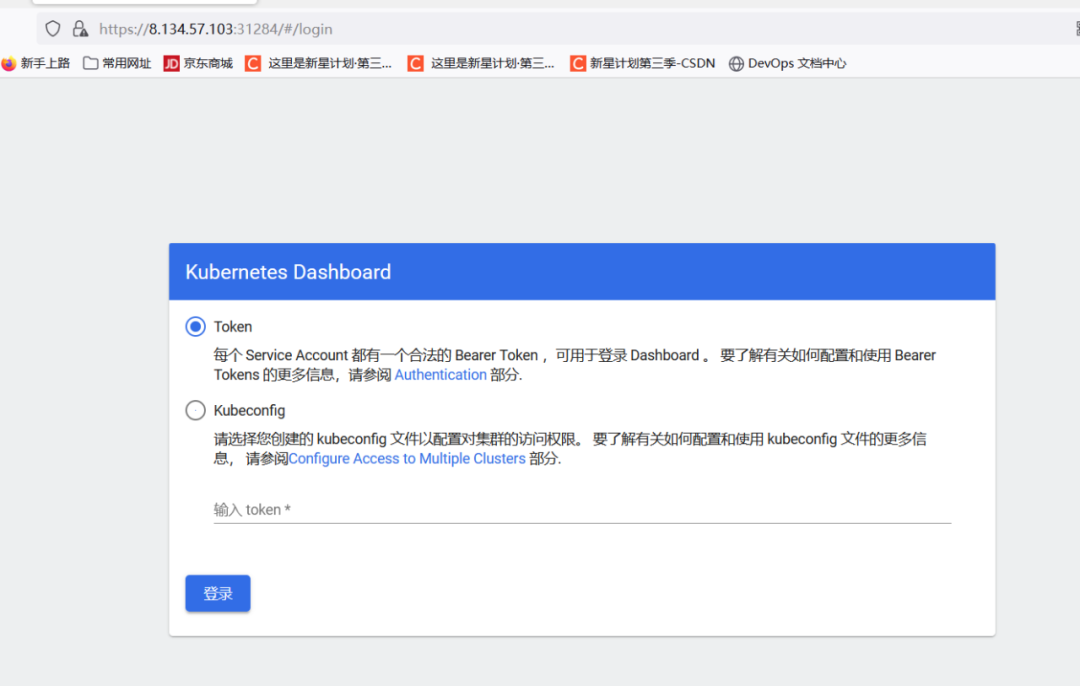

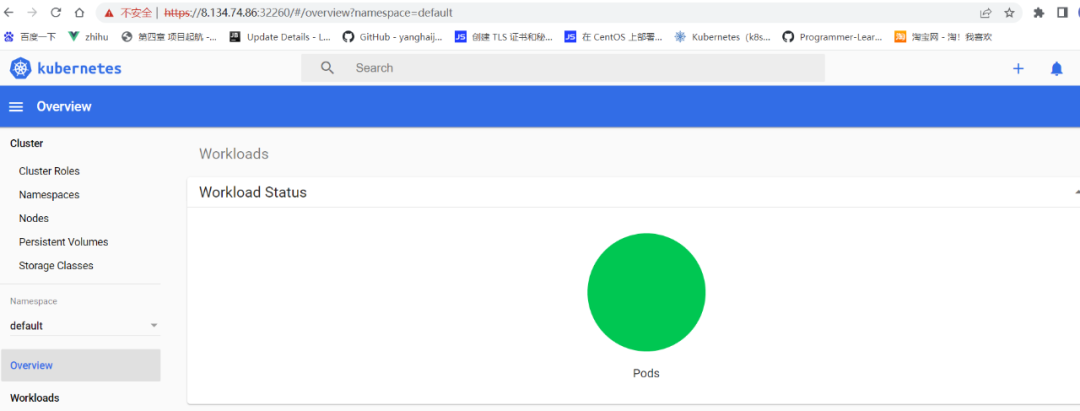
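`kubectl edit` opens an interactive editor, which is awkward in scripts. A non-interactive alternative is `kubectl patch`; the sketch below switches the Service to NodePort and then prints the allocated port. The function name `expose_dashboard_nodeport` is hypothetical, and the namespace and Service name are the ones from the manifest above.

```shell
# Switch the dashboard Service to NodePort and print the allocated node port.
expose_dashboard_nodeport() {
  local ns=kubernetes-dashboard svc=kubernetes-dashboard
  kubectl patch svc "$svc" -n "$ns" -p '{"spec":{"type":"NodePort"}}' || return 1
  # jsonpath prints only the nodePort of the first (and only) port entry
  kubectl get svc "$svc" -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Example (on the master):
# expose_dashboard_nodeport
```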

The allocated port is 31284, so open `https://<IP of any node>:31284` in a browser.

The dashboard UI appears.

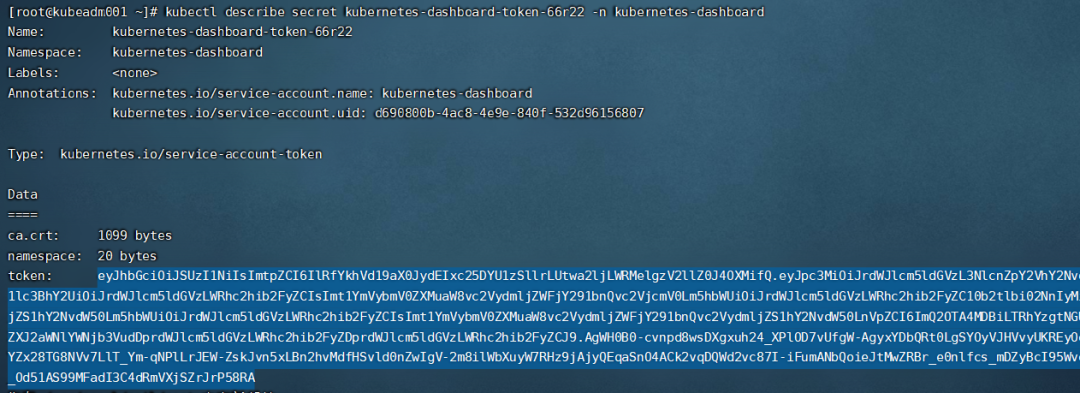

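#### Access the dashboard with a token

* Create an administrator binding for the dashboard's service account, so that its token can view every namespace and manage all resource objects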

```

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard

```

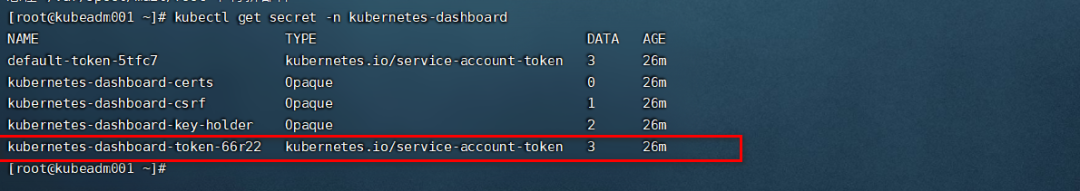

* List the Secrets in the kubernetes-dashboard namespace

```

kubectl get secret -n kubernetes-dashboard

```

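Find the Secret whose name contains `token`, for example kubernetes-dashboard-token-9mwrn (**the suffix of the kubernetes-dashboard-token name is different every time**), and describe it to display the token: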

```

kubectl describe secret kubernetes-dashboard-token-9mwrn -n kubernetes-dashboard

```

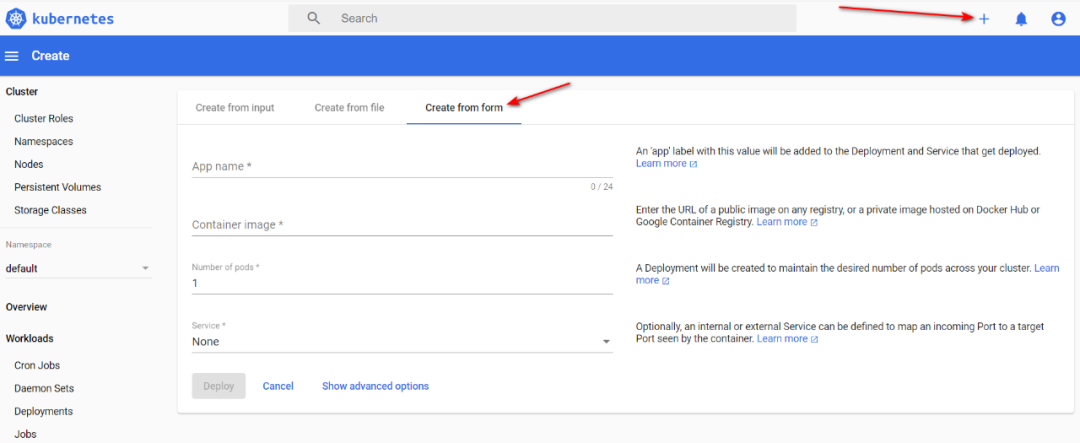

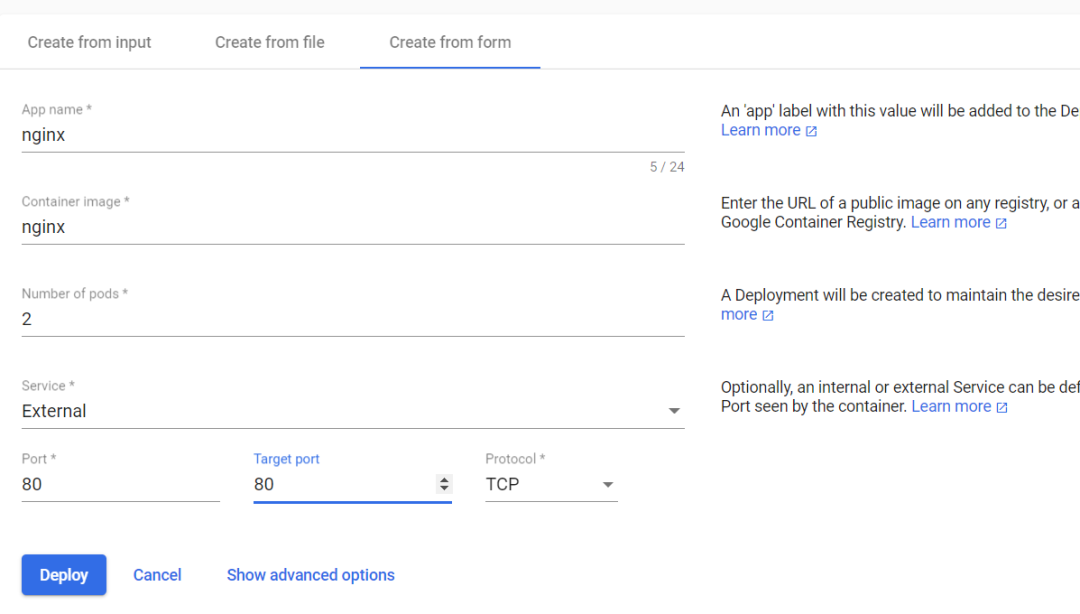
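Since the Secret name changes between installations, the lookup can be scripted instead of copied by hand. This is a minimal sketch that assumes the token Secret is created automatically, as it is in the Kubernetes 1.20 release used here; `dashboard_token` is a hypothetical helper name.

```shell
# Print the decoded login token of the kubernetes-dashboard service account.
dashboard_token() {
  local ns=kubernetes-dashboard name
  # "-o name" prints entries such as secret/kubernetes-dashboard-token-9mwrn
  name=$(kubectl get secret -n "$ns" -o name | grep 'kubernetes-dashboard-token-' | head -n 1)
  if [ -z "$name" ]; then
    echo "no dashboard token secret found" >&2
    return 1
  fi
  # Secret data is base64-encoded, so decode it before printing
  kubectl get "$name" -n "$ns" -o jsonpath='{.data.token}' | base64 -d
}

# Example (on the master):
# dashboard_token
```

Paste the printed value into the Token field of the dashboard login page.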

#### Create a container through kubernetes-dashboard

Open the Kubernetes dashboard and click the "+" marked by the red arrow in the upper-right corner, as shown below:

Select Create from form

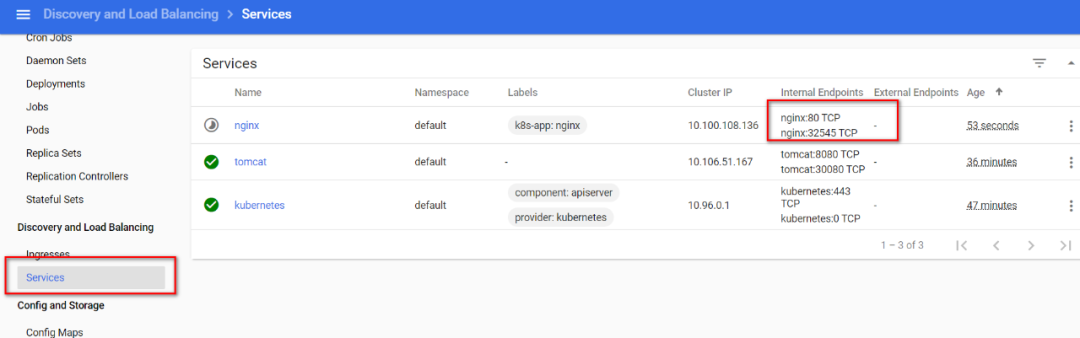

**In the left-hand menu of the dashboard, select Services**

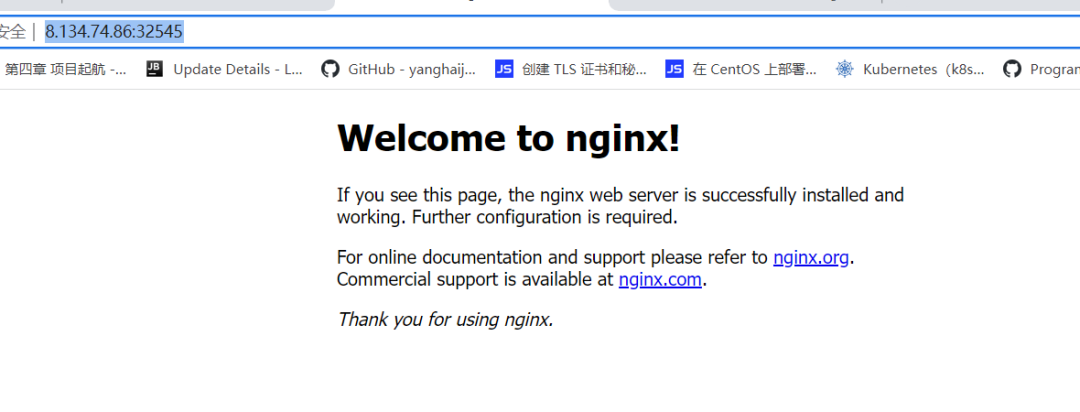

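**The figure above shows that the Service of the nginx application just created is mapped to port 32545 on the host; open http://8.134.74.86:32545/ in a browser.**

**Troubleshooting:** The dashboard may fail to open. In that case, open the Properties of the Google Chrome shortcut and append `--disable-infobars --ignore-certificate-errors` to the end of the launch command.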
