Let's get started. Why compile TensorFlow at all? Mainly so that models trained with TensorFlow can be used from C++.

1: Wasted effort

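First attempt: building TensorFlow 1.12. Advice online said cmake would not work and bazel was required. Building... (a thousand words omitted) ...success.

Wait, why is the result 64-bit? Our machine is 32-bit. I started looking for a way to build a 32-bit version, searched for several days, and found nothing.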

2: Building with cmake

Tools to prepare

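1: cmake-gui; mine is 3.15.0;

3: TensorFlow 1.8.0 source code;

4: Anaconda;

5: an xxx tool (you know the kind, Lantern for example; preferably a paid one, since paid ones are faster);

6: Git (this matters: files are downloaded during the build, and that goes through Git);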

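Time to get to work. Open cmake-gui and select the cmake file to build; the path is as follows

2: Then choose where to build. Anywhere works, but preferably not the C drive, since the output is fairly large. As shown below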

![]()

3:

To build 64-bit, use the selection shown above; to build 32-bit, choose win32. Click Configure and an error will appear

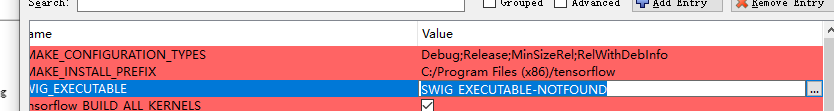

The highlighted entry needs its path changed to the path of the swigwin-3.0.12 you downloaded. The steps are as follows

![]()

Click Configure again and it succeeds. Then select

Click Configure, and once it succeeds click Generate.

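I opened the ALL_BUILD.vcxproj project with VS2017. Everyone online uses VS2015, but I did not want to install another version. Lazy.

Turn on your xxx tool, because the build needs to download resource packages, and many of them are hosted on Google.

Start the build and wait. It will almost certainly report errors; if it does not, go buy a lottery ticket right away, you are sure to win.

When the build finishes, look at where it failed, find that project, and select it, for example

If it builds successfully, repeat the same approach for the other failing projects; the cause is usually a resource that did not download. Finally, build the tensorflow project to produce the dll.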

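Wasted effort

Since the 64-bit build had succeeded, I assumed 32-bit only needed a change in cmake. That turned out to be wrong: I went through the same steps as for 64-bit and got an error.

What on earth? I searched Baidu and Google and found nobody who had hit the same thing. Was it a version problem? A long round of testing followed: VS2017 failed, VS2015 gave the same error, VS2019 gave the same error. So maybe the TensorFlow version? I started from tf1.4 and worked upward one release at a time, and it finally succeeded with tf1.9.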

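II. Using TensorFlow from C++

The build succeeded, but how do I use it? I had no idea, so more searching. One problem showed up: the official model could be called, but my own model reported errors. I trained my model with the slim framework, and it kept complaining that keep_prob and is_training had not been fed. Reference code: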

#include <fstream>

#include <utility>

#include <vector>

#include "tensorflow/cc/ops/const_op.h"

#include "tensorflow/cc/ops/image_ops.h"

#include "tensorflow/cc/ops/standard_ops.h"

#include "tensorflow/core/framework/graph.pb.h"

#include "tensorflow/core/framework/tensor.h"

#include "tensorflow/core/graph/default_device.h"

#include "tensorflow/core/graph/graph_def_builder.h"

#include "tensorflow/core/lib/core/errors.h"

#include "tensorflow/core/lib/core/stringpiece.h"

#include "tensorflow/core/lib/core/threadpool.h"

#include "tensorflow/core/lib/io/path.h"

#include "tensorflow/core/lib/strings/str_util.h"

#include "tensorflow/core/lib/strings/stringprintf.h"

#include "tensorflow/core/platform/env.h"

#include "tensorflow/core/platform/init_main.h"

#include "tensorflow/core/platform/logging.h"

#include "tensorflow/core/platform/types.h"

#include "tensorflow/core/public/session.h"

#include "tensorflow/core/util/command_line_flags.h"

// These are all common classes it's handy to reference with no namespace.

using tensorflow::Flag;

using tensorflow::Tensor;

using tensorflow::Status;

using tensorflow::string;

using tensorflow::int32;

// Takes a file name, and loads a list of labels from it, one per line, and

// returns a vector of the strings. It pads with empty strings so the length

// of the result is a multiple of 16, because our model expects that.

Status ReadLabelsFile(const string& file_name, std::vector<string>* result,

size_t* found_label_count) {

std::ifstream file(file_name);

if (!file) {

return tensorflow::errors::NotFound("Labels file ", file_name,

" not found.");

}

result->clear();

string line;

while (std::getline(file, line)) {

result->push_back(line);

}

*found_label_count = result->size();

const int padding = 16;

while (result->size() % padding) {

result->emplace_back();

}

return Status::OK();

}

static Status ReadEntireFile(tensorflow::Env* env, const string& filename,

Tensor* output) {

tensorflow::uint64 file_size = 0;

TF_RETURN_IF_ERROR(env->GetFileSize(filename, &file_size));

string contents;

contents.resize(file_size);

std::unique_ptr<tensorflow::RandomAccessFile> file;

TF_RETURN_IF_ERROR(env->NewRandomAccessFile(filename, &file));

tensorflow::StringPiece data;

TF_RETURN_IF_ERROR(file->Read(0, file_size, &data, &(contents)[0]));

if (data.size() != file_size) {

return tensorflow::errors::DataLoss("Truncated read of '", filename,

"' expected ", file_size, " got ",

data.size());

}

output->scalar<string>()() = data.ToString();

return Status::OK();

}

// Given an image file name, read in the data, try to decode it as an image,

// resize it to the requested size, and then scale the values as desired.

Status ReadTensorFromImageFile(const string& file_name, const int input_height,

const int input_width, const float input_mean,

const float input_std,

std::vector<Tensor>* out_tensors) {

auto root = tensorflow::Scope::NewRootScope();

using namespace ::tensorflow::ops; // NOLINT(build/namespaces)

string input_name = "file_reader";

string output_name = "normalized";

// read file_name into a tensor named input

Tensor input(tensorflow::DT_STRING, tensorflow::TensorShape());

TF_RETURN_IF_ERROR(

ReadEntireFile(tensorflow::Env::Default(), file_name, &input));

// use a placeholder to read input data

auto file_reader =

Placeholder(root.WithOpName("input"), tensorflow::DataType::DT_STRING);

std::vector<std::pair<string, tensorflow::Tensor>> inputs = {

{"input", input},

};

// Now try to figure out what kind of file it is and decode it.

const int wanted_channels = 3;

tensorflow::Output image_reader;

if (tensorflow::str_util::EndsWith(file_name, ".png")) {

image_reader = DecodePng(root.WithOpName("png_reader"), file_reader,

DecodePng::Channels(wanted_channels));

} else if (tensorflow::str_util::EndsWith(file_name, ".gif")) {

// gif decoder returns 4-D tensor, remove the first dim

image_reader =

Squeeze(root.WithOpName("squeeze_first_dim"),

DecodeGif(root.WithOpName("gif_reader"), file_reader));

} else if (tensorflow::str_util::EndsWith(file_name, ".bmp")) {

image_reader = DecodeBmp(root.WithOpName("bmp_reader"), file_reader);

} else {

// Assume if it's neither a PNG nor a GIF then it must be a JPEG.

image_reader = DecodeJpeg(root.WithOpName("jpeg_reader"), file_reader,

DecodeJpeg::Channels(wanted_channels));

}

// Now cast the image data to float so we can do normal math on it.

auto float_caster =

Cast(root.WithOpName("float_caster"), image_reader, tensorflow::DT_FLOAT);

// The convention for image ops in TensorFlow is that all images are expected

// to be in batches, so that they're four-dimensional arrays with indices of

// [batch, height, width, channel]. Because we only have a single image, we

// have to add a batch dimension of 1 to the start with ExpandDims().

auto dims_expander = ExpandDims(root, float_caster, 0);

// Bilinearly resize the image to fit the required dimensions.

auto resized = ResizeBilinear(

root, dims_expander,

Const(root.WithOpName("size"), {input_height, input_width}));

// Subtract the mean and divide by the scale.

Div(root.WithOpName(output_name), Sub(root, resized, {input_mean}),

{input_std});

// This runs the GraphDef network definition that we've just constructed, and

// returns the results in the output tensor.

tensorflow::GraphDef graph;

TF_RETURN_IF_ERROR(root.ToGraphDef(&graph));

std::unique_ptr<tensorflow::Session> session(

tensorflow::NewSession(tensorflow::SessionOptions()));

TF_RETURN_IF_ERROR(session->Create(graph));

TF_RETURN_IF_ERROR(session->Run({inputs}, {output_name}, {}, out_tensors));

return Status::OK();

}

// Reads a model graph definition from disk, and creates a session object you

// can use to run it.

Status LoadGraph(const string& graph_file_name,

std::unique_ptr<tensorflow::Session>* session) {

tensorflow::GraphDef graph_def;

Status load_graph_status =

ReadBinaryProto(tensorflow::Env::Default(), graph_file_name, &graph_def);

if (!load_graph_status.ok()) {

return tensorflow::errors::NotFound("Failed to load compute graph at '",

graph_file_name, "'");

}

session->reset(tensorflow::NewSession(tensorflow::SessionOptions()));

Status session_create_status = (*session)->Create(graph_def);

if (!session_create_status.ok()) {

return session_create_status;

}

return Status::OK();

}

// Analyzes the output of the Inception graph to retrieve the highest scores and

// their positions in the tensor, which correspond to categories.

Status GetTopLabels(const std::vector<Tensor>& outputs, int how_many_labels,

Tensor* indices, Tensor* scores) {

auto root = tensorflow::Scope::NewRootScope();

using namespace ::tensorflow::ops; // NOLINT(build/namespaces)

string output_name = "top_k";

TopK(root.WithOpName(output_name), outputs[0], how_many_labels);

// This runs the GraphDef network definition that we've just constructed, and

// returns the results in the output tensors.

tensorflow::GraphDef graph;

TF_RETURN_IF_ERROR(root.ToGraphDef(&graph));

std::unique_ptr<tensorflow::Session> session(

tensorflow::NewSession(tensorflow::SessionOptions()));

TF_RETURN_IF_ERROR(session->Create(graph));

// The TopK node returns two outputs, the scores and their original indices,

// so we have to append :0 and :1 to specify them both.

std::vector<Tensor> out_tensors;

TF_RETURN_IF_ERROR(session->Run({}, {output_name + ":0", output_name + ":1"},

{}, &out_tensors));

*scores = out_tensors[0];

*indices = out_tensors[1];

return Status::OK();

}

// Given the output of a model run, and the name of a file containing the labels

// this prints out the top five highest-scoring values.

Status PrintTopLabels(const std::vector<Tensor>& outputs,

const string& labels_file_name) {

std::vector<string> labels;

size_t label_count;

Status read_labels_status =

ReadLabelsFile(labels_file_name, &labels, &label_count);

if (!read_labels_status.ok()) {

//LOG(ERROR) << read_labels_status;

return read_labels_status;

}

const int how_many_labels = std::min(5, static_cast<int>(label_count));

Tensor indices;

Tensor scores;

TF_RETURN_IF_ERROR(GetTopLabels(outputs, how_many_labels, &indices, &scores));

tensorflow::TTypes<float>::Flat scores_flat = scores.flat<float>();

tensorflow::TTypes<int32>::Flat indices_flat = indices.flat<int32>();

for (int pos = 0; pos < how_many_labels; ++pos) {

const int label_index = indices_flat(pos);

const float score = scores_flat(pos);

LOG(INFO) << labels[label_index] << " (" << label_index << "): " << score;

}

return Status::OK();

}

// This is a testing function that returns whether the top label index is the

// one that's expected.

Status CheckTopLabel(const std::vector<Tensor>& outputs, int expected,

bool* is_expected) {

*is_expected = false;

Tensor indices;

Tensor scores;

const int how_many_labels = 1;

TF_RETURN_IF_ERROR(GetTopLabels(outputs, how_many_labels, &indices, &scores));

tensorflow::TTypes<int32>::Flat indices_flat = indices.flat<int32>();

if (indices_flat(0) != expected) {

LOG(ERROR) << "Expected label #" << expected << " but got #"

<< indices_flat(0);

*is_expected = false;

} else {

*is_expected = true;

}

return Status::OK();

}

int main(int argc, char* argv[]) {

// These are the command-line flags the program can understand.

// They define where the graph and input data is located, and what kind of

// input the model expects. If you train your own model, or use something

// other than inception_v3, then you'll need to update these.

string image = "/Users/xxx/Downloads/inception_v3_model/grace_hopper.jpg";

string graph =

"/Users/xxx/Downloads/inception_v3_model/inception_v3_2016_08_28_frozen.pb";

string labels =

"/Users/xxx/Downloads/inception_v3_model/imagenet_slim_labels.txt";

int32 input_width = 299;

int32 input_height = 299;

float input_mean = 0;

float input_std = 255;

string input_layer = "input";

string output_layer = "InceptionV3/Predictions/Reshape_1";

bool self_test = false;

string root_dir = "";

std::vector<Flag> flag_list = {

Flag("image", &image, "image to be processed"),

Flag("graph", &graph, "graph to be executed"),

Flag("labels", &labels, "name of file containing labels"),

Flag("input_width", &input_width, "resize image to this width in pixels"),

Flag("input_height", &input_height,

"resize image to this height in pixels"),

Flag("input_mean", &input_mean, "scale pixel values to this mean"),

Flag("input_std", &input_std, "scale pixel values to this std deviation"),

Flag("input_layer", &input_layer, "name of input layer"),

Flag("output_layer", &output_layer, "name of output layer"),

Flag("self_test", &self_test, "run a self test"),

Flag("root_dir", &root_dir,

"interpret image and graph file names relative to this directory"),

};

string usage = tensorflow::Flags::Usage(argv[0], flag_list);

const bool parse_result = tensorflow::Flags::Parse(&argc, argv, flag_list);

if (!parse_result) {

LOG(ERROR) << usage;

return -1;

}

// We need to call this to set up global state for TensorFlow.

tensorflow::port::InitMain(argv[0], &argc, &argv);

if (argc > 1) {

LOG(ERROR) << "Unknown argument " << argv[1] << "\n" << usage;

return -1;

}

// First we load and initialize the model.

std::unique_ptr<tensorflow::Session> session;

string graph_path = tensorflow::io::JoinPath(root_dir, graph);

Status load_graph_status = LoadGraph(graph_path, &session);

if (!load_graph_status.ok()) {

//LOG(ERROR) << load_graph_status;

return -1;

}

// Get the image from disk as a float array of numbers, resized and normalized

// to the specifications the main graph expects.

std::vector<Tensor> resized_tensors;

string image_path = tensorflow::io::JoinPath(root_dir, image);

Status read_tensor_status =

ReadTensorFromImageFile(image_path, input_height, input_width, input_mean,

input_std, &resized_tensors);

if (!read_tensor_status.ok()) {

// LOG(ERROR) << read_tensor_status;

return -1;

}

const Tensor& resized_tensor = resized_tensors[0];

// Actually run the image through the model.

std::vector<Tensor> outputs;

Status run_status = session->Run({{input_layer, resized_tensor}},

{output_layer}, {}, &outputs);

if (!run_status.ok()) {

LOG(ERROR) << "Running model failed: " << run_status;

return -1;

}

// This is for automated testing to make sure we get the expected result with

// the default settings. We know that label 653 (military uniform) should be

// the top label for the Admiral Hopper image.

if (self_test) {

bool expected_matches;

Status check_status = CheckTopLabel(outputs, 653, &expected_matches);

if (!check_status.ok()) {

LOG(ERROR) << "Running check failed: " << check_status;

return -1;

}

if (!expected_matches) {

LOG(ERROR) << "Self-test failed!";

return -1;

}

}

// Do something interesting with the results we've generated.

Status print_status = PrintTopLabels(outputs, labels);

if (!print_status.ok()) {

LOG(ERROR) << "Running print failed: " << print_status;

return -1;

}

return 0;

}

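The model used here comes from the official download link https://storage.googleapis.com/download.tensorflow.org/models/inception_v3_2016_08_28_frozen.pb.tar.gz, and in my test it runs. (For how to load tensorflow.dll, search for it yourself.)

Next I decided to modify the code. By rights, if the model runs in Python, the compiled C++ should run it too. The real problem was simply that keep_prob was not being passed. The modified code is as follows.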

#include <fstream>

#include <utility>

#include <vector>

#include "tensorflow/cc/ops/const_op.h"

#include "tensorflow/cc/ops/image_ops.h"

#include "tensorflow/cc/ops/standard_ops.h"

#include "tensorflow/core/framework/graph.pb.h"

#include "tensorflow/core/framework/tensor.h"

#include "tensorflow/core/graph/default_device.h"

#include "tensorflow/core/graph/graph_def_builder.h"

#include "tensorflow/core/lib/core/errors.h"

#include "tensorflow/core/lib/core/stringpiece.h"

#include "tensorflow/core/lib/core/threadpool.h"

#include "tensorflow/core/lib/io/path.h"

#include "tensorflow/core/lib/strings/str_util.h"

#include "tensorflow/core/lib/strings/stringprintf.h"

#include "tensorflow/core/platform/env.h"

#include "tensorflow/core/platform/init_main.h"

#include "tensorflow/core/platform/logging.h"

#include "tensorflow/core/platform/types.h"

#include "tensorflow/core/public/session.h"

#include "tensorflow/core/util/command_line_flags.h"

#include <opencv2/opencv.hpp>

#include "tensorflow/core/protobuf/meta_graph.pb.h"

#include "tensorflow/cc/client/client_session.h"

using namespace std;

using namespace tensorflow;

using namespace cv;

// These are all common classes it's handy to reference with no namespace.

using tensorflow::Flag;

using tensorflow::Tensor;

using tensorflow::Status;

using tensorflow::string;

using tensorflow::int32;

// Takes a file name, and loads a list of labels from it, one per line, and

// returns a vector of the strings. It pads with empty strings so the length

// of the result is a multiple of 16, because our model expects that.

Status ReadLabelsFile(const string& file_name, std::vector<string>* result,

size_t* found_label_count) {

std::ifstream file(file_name);

if (!file) {

return tensorflow::errors::NotFound("Labels file ", file_name,

" not found.");

}

result->clear();

string line;

while (std::getline(file, line)) {

result->push_back(line);

}

*found_label_count = result->size();

const int padding = 16;

while (result->size() % padding) {

result->emplace_back();

}

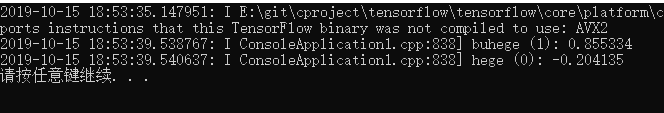

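Once the modification works, test whether the 32-bit (or 64-bit) build runs correctly.

If the test succeeds, do not celebrate yet: run the same model and image through Python. If the returned values differ only slightly, it worked. I noticed that many people build the GPU version, but the GPU build generally cannot be used directly. It checks whether your machine has a matching version of cuDNN, and if not it does not fall back to CPU but reports an error. The CPU build does not have this problem.

If you want to read the image data with OpenCV in C++, the code is as follows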

#include"stdafx.h"

//*********** Calling an image-classification pb model through the TensorFlow C++ API: inception_v3_2016_08_28_frozen.pb ******************************

//#include <fstream>

//#include <utility>

//#include <Eigen/Core>

//#include <Eigen/Dense>

//#include <iostream>

//

//#include "tensorflow/cc/ops/const_op.h"

//#include "tensorflow/cc/ops/image_ops.h"

//#include "tensorflow/cc/ops/standard_ops.h"

//

//#include "tensorflow/core/framework/graph.pb.h"

//#include "tensorflow/core/framework/tensor.h"

//

//#include "tensorflow/core/graph/default_device.h"

//#include "tensorflow/core/graph/graph_def_builder.h"

//

//#include "tensorflow/core/lib/core/errors.h"

//#include "tensorflow/core/lib/core/stringpiece.h"

//#include "tensorflow/core/lib/core/threadpool.h"

//#include "tensorflow/core/lib/io/path.h"

//#include "tensorflow/core/lib/strings/stringprintf.h"

//

//#include "tensorflow/core/public/session.h"

//#include "tensorflow/core/util/command_line_flags.h"

//

//#include "tensorflow/core/platform/env.h"

//#include "tensorflow/core/platform/init_main.h"

//#include "tensorflow/core/platform/logging.h"

//#include "tensorflow/core/platform/types.h"

//

//#include "opencv2/opencv.hpp"

//

//using namespace tensorflow::ops;

//using namespace tensorflow;

//using namespace std;

//using namespace cv;

//using tensorflow::Flag;

//using tensorflow::Tensor;

//using tensorflow::Status;

//using tensorflow::string;

//using tensorflow::int32;

//

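// Define a function that converts an OpenCV Mat into a tensor. In Python, once the matrix read by cv2.imread has gone through np.reshape,

// it can be fed as a tensor (a tensor and a matrix are the same thing there) and goes straight into the network's input. In C++, the network's input

// also needs the image as a tensor, so an image read with OpenCV is a Mat, and we have to work out how to convert a Mat into a

// Tensor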

//void CVMat_to_Tensor(Mat img, Tensor* output_tensor, int input_rows, int input_cols)

//{

// //imshow("input image",img);

// // resize the image

// resize(img, img, cv::Size(input_cols, input_rows));

// //imshow("resized image",img);

//

// // normalize to [0, 1]

// img.convertTo(img, CV_32FC3);

// //img = 1 - img / 255;

// img = img/255.0;

//

//

// // get a pointer to the tensor's underlying buffer

// float *p = output_tensor->flat<float>().data();

//

// // create a Mat header bound to the tensor's buffer; writing to this Mat writes into the tensor

// cv::Mat tempMat(input_rows, input_cols, CV_32FC3, p);

// img.convertTo(tempMat, CV_32FC3);

//

// // waitKey(0);

//

//}

//

//int main(int argc, char** argv)

//{

// /*--------------------------------key configuration------------------------------*/

// string model_path = "inception_v3_2016_08_28_frozen.pb";

// string image_path = "grace_hopper.png";

// int input_height = 299;

// int input_width = 299;

// string input_tensor_name = "input";

// string output_tensor_name = "InceptionV3/Predictions/Reshape_1";

//

// /*--------------------------------create the session------------------------------*/

// Session* session;

// Status status = NewSession(SessionOptions(), &session); // create a new Session

//

// /*--------------------------------read the model from the pb file--------------------------------*/

// GraphDef graphdef; //Graph Definition for current model

//

// Status status_load = ReadBinaryProto(Env::Default(), model_path, &graphdef); // read the graph from the pb file

// if (!status_load.ok()) {

// cout << "ERROR: Loading model failed..." << model_path << std::endl;

// cout << status_load.ToString() << "\n";

// return -1;

// }

// Status status_create = session->Create(graphdef); // import the graph into the Session

// if (!status_create.ok()) {

// cout << "ERROR: Creating graph in session failed..." << status_create.ToString() << std::endl;

// return -1;

// }

// cout << "<----Successfully created session and load graph.------->" << endl;

//

// /*---------------------------------load the test image-------------------------------------*/

// cout << endl << "<------------loading test_image-------------->" << endl;

// //Mat img = imread(image_path, 0);

// Mat img = imread(image_path);

// if (img.empty())

// {

// cout << "can't open the image!!!!!!!" << endl;

// return -1;

// }

//

// // create a tensor to serve as the network's input

// Tensor resized_tensor(DT_FLOAT, TensorShape({ 1,input_height,input_width,3 }));

//

// // copy the OpenCV Mat image into the tensor

// CVMat_to_Tensor(img, &resized_tensor, input_height, input_width);

//

// cout << resized_tensor.DebugString() << endl;

//

// /*-----------------------------------run the network-----------------------------------------*/

// cout << endl << "<-------------Running the model with test_image--------------->" << endl;

// // forward pass; the result is always a vector of tensors

// vector<tensorflow::Tensor> outputs;

// string output_node = output_tensor_name;

// Status status_run = session->Run({ { input_tensor_name, resized_tensor } }, { output_node }, {}, &outputs);

//

// if (!status_run.ok()) {

// cout << "ERROR: RUN failed..." << std::endl;

// cout << status_run.ToString() << "\n";

// return -1;

// }

// // extract the output values

// cout << "Output tensor size:" << outputs.size() << std::endl;

// for (std::size_t i = 0; i < outputs.size(); i++) {

// cout << outputs[i].DebugString() << endl;

// }

//

// Tensor t = outputs[0]; // Fetch the first tensor

// auto tmap = t.tensor<float, 2>(); // Tensor Shape: [batch_size, target_class_num]

// int output_dim = t.shape().dim_size(1); // Get the target_class_num from 1st dimension

//

// // Argmax: Get Final Prediction Label and Probability

// int output_class_id = -1;

// double output_prob = 0.0;

// for (int j = 0; j < output_dim; j++)

// {

// cout << "Class " << j << " prob:" << tmap(0, j) << "," << std::endl;

// if (tmap(0, j) >= output_prob) {

// output_class_id = j;

// output_prob = tmap(0, j);

// }

// }

//

// // print the result

// cout << "Final class id: " << output_class_id << std::endl;

// cout << "Final class prob: " << output_prob << std::endl;

//

// return 0;

//}

//************************************** test mobilenet model *******************************************************

#include <fstream>

#include <utility>

#include <Eigen/Core>

#include <Eigen/Dense>

#include <iostream>

#include "tensorflow/cc/ops/const_op.h"

#include "tensorflow/cc/ops/image_ops.h"

#include "tensorflow/cc/ops/standard_ops.h"

#include "tensorflow/core/framework/graph.pb.h"

#include "tensorflow/core/framework/tensor.h"

#include "tensorflow/core/graph/default_device.h"

#include "tensorflow/core/graph/graph_def_builder.h"

#include "tensorflow/core/lib/core/errors.h"

#include "tensorflow/core/lib/core/stringpiece.h"

#include "tensorflow/core/lib/core/threadpool.h"

#include "tensorflow/core/lib/io/path.h"

#include "tensorflow/core/lib/strings/stringprintf.h"

#include "tensorflow/core/public/session.h"

#include "tensorflow/core/util/command_line_flags.h"

#include "tensorflow/core/platform/env.h"

#include "tensorflow/core/platform/init_main.h"

#include "tensorflow/core/platform/logging.h"

#include "tensorflow/core/platform/types.h"

#include "opencv2/opencv.hpp"

using namespace tensorflow::ops;

using namespace tensorflow;

using namespace std;

using namespace cv;

using tensorflow::Flag;

using tensorflow::Tensor;

using tensorflow::Status;

using tensorflow::string;

using tensorflow::int32;

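// Define a function that converts an OpenCV Mat into a tensor. In Python, once the matrix read by cv2.imread has gone through np.reshape,

// it can be fed as a tensor (a tensor and a matrix are the same thing there) and goes straight into the network's input. In C++, the network's input

// also needs the image as a tensor, so an image read with OpenCV is a Mat, and we have to work out how to convert a Mat into a

// Tensor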

void CVMat_to_Tensor(Mat img, Tensor* output_tensor, int input_rows, int input_cols)

{

//imshow("input image",img);

// resize the image

resize(img, img, cv::Size(input_cols, input_rows));

//imshow("resized image",img);

// normalize to [0, 1]

img.convertTo(img, CV_32FC3);

//img = 1 - img / 255;

img = img/255.0;

// get a pointer to the tensor's underlying buffer

float *p = output_tensor->flat<float>().data();

// create a Mat header bound to the tensor's buffer; writing to this Mat writes into the tensor

cv::Mat tempMat(input_rows, input_cols, CV_32FC3, p);

img.convertTo(tempMat, CV_32FC3);

// waitKey(0);

}

int main(int argc, char** argv)

{

/*--------------------------------key configuration------------------------------*/

string model_path = "D:/testmoooodel/tooth_mobilenet.pb";

string image_path = "valclose_12.jpg";

int input_height = 224;

int input_width = 224;

string input_tensor_name = "input";

string output_tensor_name = "MobilenetV1/Logits/SpatialSqueeze";

/*--------------------------------create the session------------------------------*/

Session* session;

Status status = NewSession(SessionOptions(), &session); // create a new Session

if (!status.ok()) {

cout << "ERROR: Creating session failed..." << status.ToString() << std::endl;

return -1;

}

/*--------------------------------read the model from the pb file--------------------------------*/

GraphDef graphdef; //Graph Definition for current model

Status status_load = ReadBinaryProto(Env::Default(), model_path, &graphdef); // read the graph from the pb file

if (!status_load.ok()) {

cout << "ERROR: Loading model failed..." << model_path << std::endl;

cout << status_load.ToString() << "\n";

return -1;

}

Status status_create = session->Create(graphdef); // import the graph into the Session

if (!status_create.ok()) {

cout << "ERROR: Creating graph in session failed..." << status_create.ToString() << std::endl;

return -1;

}

cout << "<----Successfully created session and load graph.------->" << endl;

/*---------------------------------load the test image-------------------------------------*/

cout << endl << "<------------loading test_image-------------->" << endl;

//Mat img = imread(image_path, 0);

Mat img = imread(image_path);

if (img.empty())

{

cout << "can't open the image!!!!!!!" << endl;

return -1;

}

// OpenCV loads images as BGR; convert to RGB only after confirming the load succeeded

Mat RGBImage;

cvtColor(img, RGBImage, COLOR_BGR2RGB);

// create a tensor to serve as the network's input

Tensor resized_tensor(DT_FLOAT, TensorShape({ 1,input_height,input_width,3 }));

// copy the OpenCV Mat image into the tensor

CVMat_to_Tensor(RGBImage, &resized_tensor, input_height, input_width);

cout << resized_tensor.DebugString() << endl;

/*-----------------------------------run the network-----------------------------------------*/

// Build the inputs. "x_input" is the input name I defined in my model; the model also uses dropout, so a "keep_prob" is fed here as well

Tensor keep_prob(DT_FLOAT, TensorShape());

Tensor is_training(DT_BOOL, TensorShape());

keep_prob.scalar<float>()() = 1.0;

is_training.scalar<bool>()() = false;

std::vector<std::pair<std::string, tensorflow::Tensor>> inputs = {

{ "input", resized_tensor },

{ "keep_prob", keep_prob },

{ "is_training", is_training },

};

cout << endl << "<-------------Running the model with test_image--------------->" << endl;

// forward pass; the result is always a vector of tensors

vector<tensorflow::Tensor> outputs;

string output_node = output_tensor_name;

//Status status_run = session->Run({ { input_tensor_name, resized_tensor } }, { output_node }, {}, &outputs);

Status status_run = session->Run({ inputs }, { output_node }, {}, &outputs);

if (!status_run.ok()) {

cout << "ERROR: RUN failed..." << std::endl;

cout << status_run.ToString() << "\n";

return -1;

}

// extract the output values

cout << "Output tensor size:" << outputs.size() << std::endl;

for (std::size_t i = 0; i < outputs.size(); i++) {

cout << "outputs[i].DebugString() :"<<outputs[i].DebugString() << endl;

Tensor t = outputs[i]; // Fetch the i-th output tensor

auto tmap = t.tensor<float, 2>(); // Tensor Shape: [batch_size, target_class_num]

cout << "tmap :"<< tmap << endl;

int output_dim = t.shape().dim_size(1); // Get the target_class_num from 1st dimension

cout << "output_dim :" << output_dim << endl;

// Argmax: Get Final Prediction Label and Probability

int output_class_id = -1;

double output_prob = 0.0;

for (int j = 0; j < output_dim; j++)

{

cout << "Class " << j << " prob:" << tmap(0, j) << "," << std::endl;

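// the Logits output may be negative, so always accept the first class instead of comparing it against 0.0

if (output_class_id < 0 || tmap(0, j) >= output_prob) {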

output_class_id = j;

output_prob = tmap(0, j);

}

}

// print the result

cout << "Final class id: " << output_class_id << std::endl;

cout << "Final class prob: " << output_prob << std::endl;

}

// release the session before exiting

session->Close();

delete session;

return 0;

}

#define COMPILER_MSVC

#define NOMINMAX

#include <fstream>

#include <utility>

#include <vector>

#include "tensorflow/cc/ops/const_op.h"

#include "tensorflow/cc/ops/image_ops.h"

#include "tensorflow/cc/ops/standard_ops.h"

#include "tensorflow/core/framework/graph.pb.h"

#include "tensorflow/core/framework/tensor.h"

#include "tensorflow/core/graph/default_device.h"

#include "tensorflow/core/graph/graph_def_builder.h"

#include "tensorflow/core/lib/core/errors.h"

#include "tensorflow/core/lib/core/stringpiece.h"

#include "tensorflow/core/lib/core/threadpool.h"

#include "tensorflow/core/lib/io/path.h"

#include "tensorflow/core/lib/strings/str_util.h"

#include "tensorflow/core/lib/strings/stringprintf.h"

#include "tensorflow/core/platform/env.h"

#include "tensorflow/core/platform/init_main.h"

#include "tensorflow/core/platform/logging.h"

#include "tensorflow/core/platform/types.h"

#include "tensorflow/core/public/session.h"

#include "tensorflow/core/util/command_line_flags.h"

#include <opencv2/opencv.hpp>

#include "tensorflow/core/protobuf/meta_graph.pb.h"

#include "tensorflow/cc/client/client_session.h"

using namespace std;

using namespace tensorflow;

using namespace cv;

// These are all common classes it's handy to reference with no namespace.

using tensorflow::Flag;

using tensorflow::Tensor;

using tensorflow::Status;

using tensorflow::string;

using tensorflow::int32;

If you would like the 64-bit or the 32-bit dll, leave a comment.
