Titanic Disaster Analysis: A Consolidated Version

Background and method description: based on Han Xiaoyang's analysis of the Titanic disaster (reference 1).

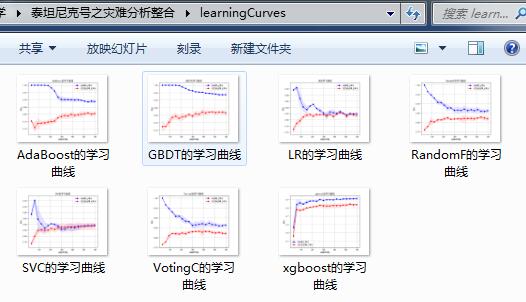

Methods included: AdaBoost, GBDT, LR, RF, SVM, VotingC, xgboost, and others.

Download: https://pan.baidu.com/s/1xF_0QdiDZIi61kfCp07zMA (password: 7eof)

The folder contains:

Python code:

```python
import os

import numpy as np
import pandas as pd
import matplotlib
import matplotlib.pyplot as plt

from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import RandomForestRegressor, RandomForestClassifier
from sklearn.ensemble import AdaBoostClassifier, GradientBoostingClassifier
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.model_selection import cross_val_score, learning_curve

#########################################################
matplotlib.rcParams['font.sans-serif'] = ['SimHei']  # lets CJK characters render in figures (requires the SimHei font)
matplotlib.rcParams['axes.unicode_minus'] = False    # without this, the minus sign may not render with SimHei

# Use sklearn's learning_curve to get the training and CV scores, then plot the learning curve with matplotlib
###############################################################################

def mkdir(path):
    # Create the directory, including any missing parent directories, if it does not exist yet
    os.makedirs(path, exist_ok=True)

submissionFilesDir = "submissionFiles"
mkdir(submissionFilesDir)

learningCurvesDir = "learningCurves"
mkdir(learningCurvesDir)
```

```python
# step 1. Load and inspect the data
# root_path = 'Datasets\\Titanic'
# data_train = pd.read_csv('%s/%s' % (root_path, 'train.csv'))
data_train = pd.read_csv('data/train.csv')
data_train.info()
print(data_train.describe())
```

```python
# step 2. Drop identifier-like features (unique or almost unique per passenger)
data_train.drop(['PassengerId', 'Ticket'], axis=1, inplace=True)
print(data_train.head())
```
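Step 2 removes columns that act as row identifiers: PassengerId is unique per passenger and Ticket is almost unique, so a model can memorize them without learning anything that transfers to new passengers. One quick way to spot such columns is the ratio of distinct values to rows. The helper `id_like_columns` and its `threshold` parameter below are hypothetical, a sketch rather than part of the original script, and the toy rows only imitate the Titanic column format.

```python
import pandas as pd


def id_like_columns(df, threshold=0.9):
    """Return the columns whose share of distinct values is at least `threshold`.

    Hypothetical helper: a ratio close to 1.0 means the column behaves like an identifier.
    """
    ratios = df.nunique() / len(df)
    return [col for col, ratio in ratios.items() if ratio >= threshold]


# Toy rows in the Titanic column format (not real data)
toy = pd.DataFrame({
    "PassengerId": [1, 2, 3, 4, 5],
    "Ticket": ["A/5 21171", "PC 17599", "113803", "113803", "373450"],
    "Pclass": [3, 1, 3, 1, 3],
})
print(id_like_columns(toy, threshold=0.75))  # ['PassengerId', 'Ticket']
```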

```python
# step 3. Encode categorical features (Sex as 0/1, Embarked one-hot)
data_train['Sex'] = data_train['Sex'].map({'female': 0, 'male': 1}).astype(np.int64)  # encode sex: female -> 0, male -> 1
data_train.loc[data_train.Embarked.isnull(), 'Embarked'] = 'S'  # fill the 2 missing Embarked values with 'S'
data_train = pd.concat([data_train, pd.get_dummies(data_train.Embarked, dtype=np.int64)], axis=1)
data_train = data_train.rename(columns={'C': 'Cherbourg', 'Q': 'Queenstown', 'S': 'Southampton'})  # name the Embarked one-hot columns after the ports
```
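The script calls `pd.get_dummies` on the training and test sets separately, so the resulting columns only line up when both sets contain every category. A minimal sketch of one way to guard against a missing category, using toy data rather than the real CSV files, reindexes the test dummies to the training columns:

```python
import pandas as pd

train = pd.DataFrame({"Embarked": ["S", "C", "Q", "S"]})
test = pd.DataFrame({"Embarked": ["S", "S", "C"]})  # toy test set without any "Q"

train_dummies = pd.get_dummies(train["Embarked"], dtype=int)
# reindex adds any training category that is absent from the test set as an all-zero column
test_dummies = (pd.get_dummies(test["Embarked"], dtype=int)
                .reindex(columns=train_dummies.columns, fill_value=0))
print(test_dummies)
```

Without the `reindex`, the toy test frame would have only two dummy columns, and a model trained on three would reject it.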

```python
# step 4. Convert names to title groups
def replace_name(x):
    # 'Mrs' must be tested before 'Mr', because 'Mr' is a substring of 'Mrs'
    if 'Mrs' in x:
        return 'Mrs'
    elif 'Mr' in x:
        return 'Mr'
    elif 'Miss' in x:
        return 'Miss'
    else:
        return 'Other'

data_train['Name'] = data_train['Name'].map(replace_name)  # replace each name in data_train with its title group
data_train = pd.concat([data_train, pd.get_dummies(data_train.Name, dtype=np.int64)], axis=1)  # append one-hot title columns
data_train = data_train.rename(columns={'Miss': 'Name_Miss', 'Mr': 'Name_Mr',
                                        'Mrs': 'Name_Mrs', 'Other': 'Name_Other'})
print(data_train.head())
```
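`replace_name` relies on substring tests, so the order of the checks matters: 'Mr' is contained in 'Mrs', which is why 'Mrs' is tested first. An alternative, not the author's method, is to extract the title by position, since Titanic names follow the pattern `Surname, Title. Given names`. The regular expression below is an assumption based on that pattern, shown on a few sample names:

```python
import pandas as pd

names = pd.Series([
    "Braund, Mr. Owen Harris",
    "Cumings, Mrs. John Bradley (Florence Briggs Thayer)",
    "Heikkinen, Miss. Laina",
    "Palsson, Master. Gosta Leonard",
])
# The title is the text between the comma and the first period
titles = names.str.extract(r",\s*([^\.]+)\.", expand=False)
# Keep the three frequent titles and group everything else as 'Other'
grouped = titles.where(titles.isin(["Mr", "Mrs", "Miss"]), "Other")
print(grouped.tolist())  # ['Mr', 'Mrs', 'Miss', 'Other']
```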

```python
# step 5. Standardize numeric features
def fun_scale(df_feature):
    # Fit a StandardScaler on the training column and return it together with the scaled values;
    # the fitted scaler is reused later to transform the matching test-set column
    np_feature = df_feature.values.reshape(-1, 1).astype(np.float64)
    feature_scale = StandardScaler().fit(np_feature)
    feature_scaled = feature_scale.transform(np_feature).ravel()
    return feature_scale, feature_scaled

Pclass_scale, data_train['Pclass_scaled'] = fun_scale(data_train['Pclass'])
Fare_scale, data_train['Fare_scaled'] = fun_scale(data_train['Fare'])
SibSp_scale, data_train['SibSp_scaled'] = fun_scale(data_train['SibSp'])
Parch_scale, data_train['Parch_scaled'] = fun_scale(data_train['Parch'])
print(data_train.head(10))
```
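Standardization maps each value to z = (x - mean) / std, and the mean and standard deviation should be learned from the training data only and then reused unchanged on the test set; fitting a fresh scaler on the test set would put the two sets on different scales. A small sketch with made-up fares checks that `StandardScaler.transform` applies the training statistics, using the population standard deviation (`ddof=0`):

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

train_fare = np.array([[7.25], [71.28], [7.93], [53.10]])  # made-up training fares
test_fare = np.array([[8.05], [30.00]])                     # made-up test fares

scaler = StandardScaler().fit(train_fare)  # learns mean_ and scale_ from the training data only
test_scaled = scaler.transform(test_fare)  # reuses the training statistics on the test data

# Same computation by hand: NumPy's std() defaults to ddof=0, like StandardScaler
manual = (test_fare - train_fare.mean()) / train_fare.std()
print(np.allclose(test_scaled, manual))  # True
```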

```python
# step 6. Fill in missing values
# Fill the missing Age values, then standardize Age

# Missing-value helper: the first column of train_for_missingkey is Age, the other columns are predictors
def set_missing_feature(train_for_missingkey, data, info):
    known_feature = train_for_missingkey[train_for_missingkey.Age.notnull()].to_numpy(dtype=np.float64)
    unknown_feature = train_for_missingkey[train_for_missingkey.Age.isnull()].to_numpy(dtype=np.float64)
    y = known_feature[:, 0]   # first column: the attribute to fill in
    x = known_feature[:, 1:]  # second column onwards: predictors
    rf = RandomForestRegressor(random_state=0, n_estimators=100)
    rf.fit(x, y)
    print(info, "missing-value model R^2 (on its own training rows):", rf.score(x, y))
    predictage = rf.predict(unknown_feature[:, 1:])
    data.loc[data.Age.isnull(), 'Age'] = predictage
    return data

train_for_missingkey_train = data_train[['Age', 'Survived', 'Sex', 'Name_Miss', 'Name_Mr', 'Name_Mrs',
                                         'Name_Other', 'Fare_scaled', 'SibSp_scaled', 'Parch_scaled']]
data_train = set_missing_feature(train_for_missingkey_train, data_train, 'Train_Age')
Age_scale, data_train['Age_scaled'] = fun_scale(data_train['Age'])

# Cabin: 1.0 if a cabin is recorded, 0.0 otherwise (numeric dtype, not object)
def set_Cabin_type(df):
    df['Cabin'] = df['Cabin'].notnull().astype(np.float64)
    return df

data_train = set_Cabin_type(data_train)
```
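`set_missing_feature` fills Age by regression: a random forest is trained on the rows where Age is known (Age in the first column, the other features as predictors) and then predicts Age for the remaining rows. The self-contained sketch below reproduces that idea on synthetic numbers; `impute_first_column` is a hypothetical name, and the data are generated, not taken from the Titanic files.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor


def impute_first_column(matrix, n_estimators=50, random_state=0):
    """Fill NaNs in column 0 with a random forest trained on the remaining columns.

    Hypothetical helper mirroring set_missing_feature: column 0 is the attribute
    to fill (Age in the script), columns 1: are the predictors.
    """
    filled = matrix.copy()
    missing = np.isnan(filled[:, 0])
    rf = RandomForestRegressor(n_estimators=n_estimators, random_state=random_state)
    rf.fit(filled[~missing, 1:], filled[~missing, 0])     # learn from rows with a known value
    filled[missing, 0] = rf.predict(filled[missing, 1:])  # predict the rows with a missing value
    return filled


rng = np.random.default_rng(0)
predictors = rng.normal(size=(40, 3))
target = 30 + 5 * predictors[:, 0]   # synthetic "age" driven by the first predictor
data = np.column_stack([target, predictors])
data[::5, 0] = np.nan                # hide every fifth target value
result = impute_first_column(data)
print(np.isnan(result).sum())        # 0
```

Because a regression forest averages training targets, the imputed values always stay within the range of the known values.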

```python
# step 7. Assemble the feature matrix and target vector
train_X = data_train[['Sex', 'Cabin', 'Cherbourg', 'Queenstown', 'Southampton', 'Name_Miss', 'Name_Mr', 'Name_Mrs', 'Name_Other',
                      'Pclass_scaled', 'Fare_scaled', 'SibSp_scaled', 'Parch_scaled', 'Age_scaled']].to_numpy(dtype=np.float64)
train_y = data_train['Survived'].to_numpy()
print(train_X)
```
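Assembling the matrix converts a DataFrame with integer, float and, depending on the pandas version, boolean one-hot columns into a NumPy array. `DataFrame.as_matrix()` was removed in pandas 1.0; `to_numpy()` replaces it, and when boolean and numeric columns are mixed pandas returns an `object` array unless a dtype is requested. A toy check, with made-up values:

```python
import numpy as np
import pandas as pd

frame = pd.DataFrame({
    "Sex": np.array([0, 1, 1], dtype=np.int64),
    "Name_Mr": [False, True, True],    # pandas >= 2.0 get_dummies returns bool columns by default
    "Fare_scaled": [0.5, -0.2, -0.3],
})
raw = frame.to_numpy()                         # mixed bool/numeric columns -> object array
features = frame.to_numpy(dtype=np.float64)    # explicit float matrix, safe for sklearn and xgboost
print(raw.dtype, features.dtype)
```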

```python
# step 8. Build the models and cross-validate them
lr = LogisticRegression(C=1.0, tol=1e-6)
svc = SVC(C=1.1, kernel='rbf', decision_function_shape='ovo')
adaboost = AdaBoostClassifier(n_estimators=490, random_state=0)
randomf = RandomForestClassifier(n_estimators=185, max_depth=5, random_state=0)
gbdt = GradientBoostingClassifier(n_estimators=436, max_depth=2, random_state=0)
VotingC = VotingClassifier(estimators=[('LR', lr), ('SVC', svc), ('AdaBoost', adaboost),
                                       ('RandomF', randomf), ('GBDT', gbdt)])

'''
# Hyperparameter search (disabled, kept for reference) #####
# Run it after the XGBoost section, which defines xgbClassifier
param_test = {
    'n_estimators': np.arange(200, 240, 1),
    'max_depth': np.arange(4, 7, 1),
    # 'min_child_weight': np.arange(1, 6, 2),
    # 'C': (1, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6, 1.7, 1.8, 1.9)
}
from sklearn.model_selection import GridSearchCV  # sklearn.grid_search no longer exists in current scikit-learn
grid_search = GridSearchCV(estimator=xgbClassifier, param_grid=param_test, scoring='roc_auc', cv=5)
grid_search.fit(train_X, train_y)
print(grid_search.cv_results_, grid_search.best_params_, grid_search.best_score_)  # cv_results_ replaces grid_scores_
# Hyperparameter search #####
'''
```
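`VotingClassifier` defaults to `voting='hard'`: each of the five estimators predicts a label and the majority label wins; with five voters and two classes a tie cannot occur. The NumPy sketch below spells out that rule on hypothetical predictions (rows are estimators, columns are passengers); it illustrates the voting rule, not scikit-learn's internal code.

```python
import numpy as np

# Hypothetical 0/1 predictions from five classifiers for four passengers
preds = np.array([
    [1, 0, 1, 0],  # LR
    [1, 1, 0, 0],  # SVC
    [0, 1, 1, 0],  # AdaBoost
    [1, 0, 1, 1],  # RandomF
    [0, 0, 1, 0],  # GBDT
])
votes_for_1 = preds.sum(axis=0)                            # number of "survived" votes per passenger
majority = (votes_for_1 > preds.shape[0] / 2).astype(int)  # strict majority of the five voters
print(votes_for_1.tolist(), majority.tolist())  # [3, 2, 4, 1] [1, 0, 1, 0]
```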

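```python
# Train and cross-validate each model, plotting its learning curve to judge whether it overfits or underfits
def plot_learning_curve(estimator, name, learningCurvesDir, title, X, y, ylim=None, cv=None, n_jobs=1,
                        train_sizes=np.linspace(.05, 1., 20), verbose=0, plot=True):
    """
    Plot the learning curve of a model on the data.

    Parameters
    ----------
    estimator : the classifier to evaluate.
    name : name of the classifier, used in the output file name.
    learningCurvesDir : directory where the figure is saved.
    title : title of the figure.
    X : input features, as a numpy array.
    y : target vector.
    ylim : tuple (ymin, ymax) setting the lower and upper limits of the y axis.
    cv : number of cross-validation folds; one fold is held out for validation and the
         remaining n-1 folds are used for training. None uses the scikit-learn default
         (5 folds since version 0.22, 3 folds before).
    n_jobs : number of parallel jobs (default 1).
    train_sizes : training-set sizes, as fractions of the available training data.
    verbose : verbosity level passed to learning_curve.
    plot : whether to draw and save the figure.
    """
    train_sizes, train_scores, test_scores = learning_curve(
        estimator, X, y, cv=cv, n_jobs=n_jobs, train_sizes=train_sizes, verbose=verbose)

    train_scores_mean = np.mean(train_scores, axis=1)
    train_scores_std = np.std(train_scores, axis=1)
    test_scores_mean = np.mean(test_scores, axis=1)
    test_scores_std = np.std(test_scores, axis=1)

    if plot:
        fig = plt.figure()
        plt.title(title)
        if ylim is not None:
            plt.ylim(*ylim)
        plt.xlabel("Number of training samples")
        plt.ylabel("Score")
        plt.grid()
        # Shaded bands: mean +/- one standard deviation across the CV folds
        plt.fill_between(train_sizes, train_scores_mean - train_scores_std, train_scores_mean + train_scores_std,
                         alpha=0.1, color="b")
        plt.fill_between(train_sizes, test_scores_mean - test_scores_std, test_scores_mean + test_scores_std,
                         alpha=0.1, color="r")
        plt.plot(train_sizes, train_scores_mean, 'o-', color="b", label="Training score")
        plt.plot(train_sizes, test_scores_mean, 'o-', color="r", label="Cross-validation score")
        plt.legend(loc="best")
        # Write the file first, then display the figure
        fig.savefig(learningCurvesDir + "/" + name + "_learning_curve.png")
        plt.show()

    # Summary of the largest training size: midpoint between the top of the training band and
    # the bottom of the CV band, and the gap between them (a large gap suggests overfitting)
    midpoint = ((train_scores_mean[-1] + train_scores_std[-1]) + (test_scores_mean[-1] - test_scores_std[-1])) / 2
    diff = (train_scores_mean[-1] + train_scores_std[-1]) - (test_scores_mean[-1] - test_scores_std[-1])
    return midpoint, diff
```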
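At the end of `plot_learning_curve`, `midpoint` and `diff` summarize the largest training size: `diff` is the distance between the top of the training band (mean + std) and the bottom of the cross-validation band (mean - std), so a large `diff` hints at overfitting, while low scores on both curves hint at underfitting. A worked example on hypothetical scores, with `curve_gap` as an illustrative stand-in for those two lines:

```python
import numpy as np


def curve_gap(train_scores, test_scores):
    """Midpoint and gap at the last learning-curve point, computed as in plot_learning_curve.

    train_scores, test_scores: arrays of shape (n_train_sizes, n_folds), the layout
    returned by sklearn.model_selection.learning_curve.
    """
    upper = train_scores[-1].mean() + train_scores[-1].std()  # top of the training band
    lower = test_scores[-1].mean() - test_scores[-1].std()    # bottom of the CV band
    return (upper + lower) / 2, upper - lower


# Hypothetical scores for two training-set sizes and two CV folds
train_scores = np.array([[0.90, 0.90], [0.86, 0.84]])
test_scores = np.array([[0.70, 0.70], [0.80, 0.78]])
midpoint, diff = curve_gap(train_scores, test_scores)
print(round(midpoint, 4), round(diff, 4))  # 0.82 0.08
```

Here the training band tops out at 0.85 + 0.01 = 0.86 and the CV band bottoms out at 0.79 - 0.01 = 0.78, giving a gap of 0.08.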

```python
######
classifierlist = [('LR', lr), ('SVC', svc), ('AdaBoost', adaboost), ('RandomF', randomf), ('GBDT', gbdt), ('VotingC', VotingC)]

for name, classifier in classifierlist:
    # This fit is independent of the cross-validation below (which clones the model);
    # the fitted classifier is used later to predict the test set
    classifier.fit(train_X, train_y)
    plot_learning_curve(classifier, name, learningCurvesDir, (name + " learning curve"), train_X, train_y)
    print(name, "Mean_Cross_Val_Score is:", cross_val_score(classifier, train_X, train_y, cv=5, scoring='accuracy').mean(), "\n")
```
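With `cv=5` and a classifier, `cross_val_score` splits the data with `StratifiedKFold` without shuffling, so the folds follow the row order of the file. If a reproducible shuffled split is preferred (an assumption, not what the script does), a splitter can be passed explicitly; the sketch uses synthetic data from `make_classification` instead of the Titanic features:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

X, y = make_classification(n_samples=200, n_features=8, random_state=0)  # synthetic binary data
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)            # shuffled, class-balanced folds
scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=cv, scoring="accuracy")
print(scores.mean(), scores.std())
```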

```python
# step 9. Process the test set
data_test = pd.read_csv('data/test.csv')
data_test.drop(['Ticket'], axis=1, inplace=True)  # keep PassengerId for the submission files
data_test['Sex'] = data_test['Sex'].map({'female': 0, 'male': 1}).astype(np.int64)
data_test = pd.concat([data_test, pd.get_dummies(data_test.Embarked, dtype=np.int64)], axis=1)
data_test = data_test.rename(columns={'C': 'Cherbourg', 'Q': 'Queenstown', 'S': 'Southampton'})
data_test['Name'] = data_test['Name'].map(replace_name)
data_test = pd.concat([data_test, pd.get_dummies(data_test.Name, dtype=np.int64)], axis=1)
data_test = data_test.rename(columns={'Miss': 'Name_Miss', 'Mr': 'Name_Mr',
                                      'Mrs': 'Name_Mrs', 'Other': 'Name_Other'})

# Test-set standardization: reuse the scaler fitted on the training data instead of refitting
def fun_test_scale(feature_scale, df_feature):
    np_feature = df_feature.values.reshape(-1, 1).astype(np.float64)
    feature_scaled = feature_scale.transform(np_feature).ravel()
    return feature_scaled

data_test['Pclass_scaled'] = fun_test_scale(Pclass_scale, data_test['Pclass'])
data_test.loc[data_test.Fare.isnull(), 'Fare'] = 0  # set the missing Fare value to 0
data_test['Fare_scaled'] = fun_test_scale(Fare_scale, data_test['Fare'])
data_test['SibSp_scaled'] = fun_test_scale(SibSp_scale, data_test['SibSp'])
data_test['Parch_scaled'] = fun_test_scale(Parch_scale, data_test['Parch'])

# Fill the missing test-set Age values, then standardize
# (Survived is not available here, so the Age model uses the remaining features only)
train_for_missingkey_test = data_test[['Age', 'Sex', 'Name_Miss', 'Name_Mr', 'Name_Mrs', 'Name_Other',
                                       'Fare_scaled', 'SibSp_scaled', 'Parch_scaled']]
data_test = set_missing_feature(train_for_missingkey_test, data_test, 'Test_Age')
data_test['Age_scaled'] = fun_test_scale(Age_scale, data_test['Age'])
data_test = set_Cabin_type(data_test)

test_X = data_test[['Sex', 'Cabin', 'Cherbourg', 'Queenstown', 'Southampton', 'Name_Miss', 'Name_Mr', 'Name_Mrs', 'Name_Other',
                    'Pclass_scaled', 'Fare_scaled', 'SibSp_scaled', 'Parch_scaled', 'Age_scaled']].to_numpy(dtype=np.float64)
```
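The test set has a missing Fare value, which the script sets to 0 before scaling. One alternative, not the author's choice, is to fill it with a statistic computed on the training set, such as the median, so that no information is taken from the test data. The fares below are made-up values:

```python
import numpy as np
import pandas as pd

train_fare = pd.Series([7.25, 71.28, 7.93, 53.10, 8.05])  # made-up training fares
test_fare = pd.Series([9.5, np.nan, 30.0])                # made-up test fares with one gap

fill_value = train_fare.median()          # statistic taken from the training data only
test_filled = test_fare.fillna(fill_value)
print(fill_value, test_filled.tolist())   # 8.05 [9.5, 8.05, 30.0]
```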

```python
# print(len(classifierlist))
# print(classifierlist)

# step 10. Predict with each model and write one submission file per model
for name, model in classifierlist:
    print("Test in %s!" % name)
    predictions = model.predict(test_X).astype(np.int32)
    result = pd.DataFrame({'PassengerId': data_test['PassengerId'].to_numpy(), 'Survived': predictions})
    result.to_csv(submissionFilesDir + "/" + 'Result_with_%s.csv' % name, index=False)

print('...\nAll Finish!')
```
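Each submission file written by the prediction loop has two columns, `PassengerId` and an integer `Survived`, with no index column. A minimal sketch of that layout, with hypothetical IDs and predictions, writing to an in-memory buffer instead of a file:

```python
import io

import numpy as np
import pandas as pd

passenger_ids = np.array([892, 893, 894])                 # hypothetical test-set IDs
predictions = np.array([0.0, 1.0, 0.0]).astype(np.int32)  # model output cast to int, as in the loop

result = pd.DataFrame({"PassengerId": passenger_ids, "Survived": predictions})
buffer = io.StringIO()
result.to_csv(buffer, index=False)  # index=False keeps the file to exactly two columns
csv_text = buffer.getvalue()
print(csv_text)
```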

```python
# step 11. XGBoost
import xgboost as xgb
from sklearn.model_selection import train_test_split

# Hold out 10% of the training data for a quick validation score
x_train, x_valid, y_train, y_valid = train_test_split(train_X, train_y, test_size=0.1, random_state=0)
print(x_train.shape, x_valid.shape)

xgbClassifier = xgb.XGBClassifier(learning_rate=0.1,
                                  n_estimators=234,
                                  max_depth=6,
                                  min_child_weight=5,
                                  gamma=0,
                                  subsample=0.8,
                                  colsample_bytree=0.8,
                                  objective='binary:logistic',
                                  scale_pos_weight=1)

xgbClassifier.fit(x_train, y_train)
print("xgboost validation accuracy:", xgbClassifier.score(x_valid, y_valid))

# Refit on the full training set before plotting and predicting the test set
xgbClassifier.fit(train_X, train_y)
plot_learning_curve(xgbClassifier, "xgboost", learningCurvesDir, ("xgboost" + " learning curve"), train_X, train_y)  # plot the xgboost learning curve

xgbpred_test = xgbClassifier.predict(test_X).astype(np.int32)
print("Test in xgboost!")
result = pd.DataFrame({'PassengerId': data_test['PassengerId'].to_numpy(), 'Survived': xgbpred_test})
result.to_csv(submissionFilesDir + "/" + 'Result_with_%s.csv' % 'XGBoost', index=False)
```

References:

1. https://blog.csdn.net/han_xiaoyang/article/details/49797143

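This article presents a Titanic passenger-survival prediction model built on several machine-learning algorithms (AdaBoost, GBDT, LR, RF, SVM, VotingC, xgboost, and others) and walks through data preprocessing, feature engineering, model training, and evaluation.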

