What does it do?

Compute the share of sessions that fall into each step-length range and each visit-duration range.

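- Visit duration: the difference between the earliest and the latest action time in a session.

- Step length: the number of actions in a session.

In other words: among the sessions that pass the filter conditions, compute the share of sessions whose visit duration falls in each of the ranges 1s~3s, 4s~6s, 7s~9s, 10s~30s, 30s~60s, 1m~3m, 3m~10m, 10m~30m and over 30m, and whose step length falls in each of the ranges 1_3, 4_6, … and above.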
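To make the two definitions concrete, here is a minimal Spark-free sketch that computes both metrics from the action timestamps of a single session. The `SessionMetrics` object and the `measure` function are illustrative names, not part of the project, and the timestamp pattern is an assumption based on the sample records shown later (`2020-05-23 10:00:26`).

```scala
import java.time.{Duration, LocalDateTime}
import java.time.format.DateTimeFormatter

object SessionMetrics {
  // Assumed timestamp layout, e.g. "2020-05-23 10:00:26".
  private val fmt = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  /** Returns (visit duration in seconds, step length) for one session's action times. */
  def measure(actionTimes: Seq[String]): (Long, Int) = {
    require(actionTimes.nonEmpty, "a session needs at least one action")
    val times = actionTimes.map(LocalDateTime.parse(_, fmt))
    // The actions are not assumed to arrive in time order.
    val earliest = times.reduce((a, b) => if (a.isBefore(b)) a else b)
    val latest = times.reduce((a, b) => if (a.isAfter(b)) a else b)
    (Duration.between(earliest, latest).getSeconds, actionTimes.size)
  }
}
```

A session with three actions spread over 30 seconds therefore has a visit duration of 30 and a step length of 3.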

Requirements analysis:

The corresponding class:

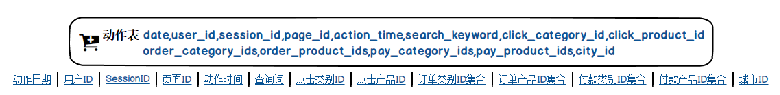

case class UserVisitAction(date: String,

user_id: Long,

session_id: String,

page_id: Long,

action_time: String,

search_keyword: String,

click_category_id: Long,

click_product_id: Long,

order_category_ids: String,

order_product_ids: String,

pay_category_ids: String,

pay_product_ids: String,

city_id: Long

)

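Each action record represents one user behavior: a click, an order, a payment, and so on. The data set contains many actions for the same user.

- Given this, it is natural to use groupByKey to gather all actions that belong to the same session (and therefore the same user), and then check whether the session meets the filter conditions.

- The problem is that a session contains many actions, and we cannot check the conditions action by action. So before filtering we need an aggregation step that folds all actions of a session into a single record, computing the visit duration and step length along the way. The resulting record looks like this: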

UserId:searchKeywords=Lamer,小龙虾,机器学习,吸尘器,苹果,洗面奶,保温杯,华为手机|clickCategoryIds=|visitLength=3471|stepLength=48|startTime=2020-05-23 10:00:26)

- Next, join this record with UserInfo on userId to form a new, more complete record:

(e8ef831e7fd4475990a80e425af946ad,sessionid=e8ef831e7fd4475990a80e425af946ad|searchKeywords=Lamer,小龙虾,机器学习,吸尘器,苹果,洗面奶,保温杯,华为手机|clickCategoryIds=|visitLength=3471|stepLength=48|startTime=2020-05-23 10:00:26|age=45|professional=professional43|sex=male|city=city2)

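- Now we can filter the records and, while filtering, update a custom accumulator that counts how many sessions fall into each duration range and each step-length range.

- Finally, dividing each range's session count by the total number of sessions that passed the filter gives the ratio.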
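The last step can be sketched without Spark as a plain division of each bucket count by the session total. `RatioSketch`, `ratios` and the bucket labels are hypothetical names used only for illustration.

```scala
object RatioSketch {
  /** Share of each bucket relative to the number of sessions that passed the filter. */
  def ratios(bucketCounts: Map[String, Int], sessionCount: Int): Map[String, Double] =
    if (sessionCount <= 0) bucketCounts.map { case (k, _) => k -> 0.0 } // avoid dividing by zero
    else bucketCounts.map { case (k, n) => k -> n.toDouble / sessionCount }
}
```

Converting to `Double` before dividing matters: dividing two `Int` values would truncate every ratio below 1 to 0.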

The flow chart is as follows:

Step-by-step walkthrough:

- Read the data from Hadoop (HDFS) and return the basic action records:

def basicActions(session:SparkSession,task:JSONObject)={

import session.implicits._;

// Read the date bounds once on the driver instead of once per record.

val start=task.getString(Constants.PARAM_START_DATE);

val end=task.getString(Constants.PARAM_END_DATE);

val ds=session.read.parquet("hdfs://hadoop1:9000/data/user_visit_action").as[UserVisitAction];

// filter returns a new Dataset; keep the result, otherwise the date range has no effect.

val filtered=ds.filter(item=>{

val date=item.action_time;

date>=start&&date<=end;

})

filtered.rdd;

}

- Convert the actions to (sessionId, action) pairs and use the groupByKey operator to gather the actions of each session:

val basicActionMap=basicActions.map(item=>{

val sessionId=item.session_id;

(sessionId,item);

})

val groupBasicActions=basicActionMap.groupByKey();
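For readers who want to see the shape of the result without a cluster, the same key-then-group step can be imitated on an ordinary Scala collection. This is only an analogy: `groupBy` on a `Seq` runs locally, whereas `groupByKey` on an RDD shuffles data between executors. The `Action` case class is a trimmed-down, hypothetical stand-in for `UserVisitAction`.

```scala
object GroupSketch {
  // Hypothetical reduced version of UserVisitAction with only the fields used here.
  final case class Action(sessionId: String, userId: Long, actionTime: String)

  /** Collects every action under its session id, like (sessionId, Iterable[action]). */
  def bySession(actions: Seq[Action]): Map[String, Seq[Action]] =
    actions.groupBy(_.sessionId)
}
```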

- Read from Hadoop (HDFS) to get the users' basic information:

def getUserInfo(session: SparkSession) = {

import session.implicits._;

val ds=session.read.parquet("hdfs://hadoop1:9000/data/user_Info").as[UserInfo].map(item=>(item.user_id,item));

ds.rdd;

}

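- Join the user information with the aggregated session information to form the complete record:

def AggInfoAndActions(aggUserActions: RDD[(Long, String)], userInfo: RDD[(Long, UserInfo)])={

// Match the two RDDs on user_id with the join operator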

userInfo.join(aggUserActions).map{

case (userId,(userInfo: UserInfo,aggrInfo))=>{

val age = userInfo.age

val professional = userInfo.professional

val sex = userInfo.sex

val city = userInfo.city

val fullInfo = aggrInfo + "|" +

Constants.FIELD_AGE + "=" + age + "|" +

Constants.FIELD_PROFESSIONAL + "=" + professional + "|" +

Constants.FIELD_SEX + "=" + sex + "|" +

Constants.FIELD_CITY + "=" + city

val sessionId = StringUtil.getFieldFromConcatString(aggrInfo, "\\|", Constants.FIELD_SESSION_ID)

(sessionId, fullInfo)

}

}

}
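The semantics of the join used above can be illustrated with a small local sketch: an inner join keeps only keys present on both sides and emits one output pair per matching combination. `JoinSketch.innerJoin` is a hypothetical helper written for this illustration, not Spark's implementation.

```scala
object JoinSketch {
  /** Inner join of two keyed sequences: (k, a) and (k, b) become (k, (a, b)). */
  def innerJoin[K, A, B](left: Seq[(K, A)], right: Seq[(K, B)]): Seq[(K, (A, B))] = {
    // Index the right side by key so each left element finds its matches directly.
    val rightByKey: Map[K, Seq[B]] =
      right.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2) }
    for {
      (k, a) <- left
      b <- rightByKey.getOrElse(k, Seq.empty)
    } yield (k, (a, b))
  }
}
```

In the example below, user 2 has no session and the session of user 3 has no user record, so both are dropped, while user 1 with two sessions yields two joined records.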

- Filter the data and update the accumulator

Definition of the accumulator:

package scala

import org.apache.spark.util.AccumulatorV2

import scala.collection.mutable

class sessionAccumulator extends AccumulatorV2[String,mutable.HashMap[String,Int]] {

val countMap=new mutable.HashMap[String,Int]();

override def isZero: Boolean = {

countMap.isEmpty;

}

override def copy(): AccumulatorV2[String, mutable.HashMap[String, Int]] = {

val acc=new sessionAccumulator;

acc.countMap++=this.countMap;

acc

}

override def reset(): Unit = {

countMap.clear();

}

override def add(v: String): Unit = {

// Increment the counter for key v, starting from 0 when the key is new.

countMap.update(v,countMap.getOrElse(v,0)+1);

}

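override def merge(other: AccumulatorV2[String, mutable.HashMap[String, Int]]): Unit = {

other match{

case acc:sessionAccumulator=>

// Add every count from the other accumulator into this one.

acc.countMap.foreach{ case (k,v)=>countMap.update(k,countMap.getOrElse(k,0)+v) }

case _=>throw new UnsupportedOperationException(s"Cannot merge ${getClass.getName} with ${other.getClass.getName}")

}

}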

override def value: mutable.HashMap[String, Int] = {

this.countMap;

}

}
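The heart of this accumulator is the merge step, where the per-partition count maps are combined on the driver. A minimal sketch of that step on plain mutable maps, outside Spark, might look like the following; `CountMerge.mergeInto` and the keys are illustrative names only.

```scala
import scala.collection.mutable

object CountMerge {
  /** Adds every count in `other` to `target`; keys missing from `target` start at 0. */
  def mergeInto(target: mutable.HashMap[String, Int], other: scala.collection.Map[String, Int]): Unit =
    other.foreach { case (k, v) => target.update(k, target.getOrElse(k, 0) + v) }
}
```

Because addition is commutative and associative, the order in which partial maps are merged does not change the final counts, which is the property an accumulator needs.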

The filter function:

def filterInfo(finalInfo: RDD[(String, String)],task:JSONObject,accumulator:sessionAccumulator) = {

// 1. Read the filter conditions from the task parameters

val startAge = task.get(Constants.PARAM_START_AGE);

val endAge = task.get( Constants.PARAM_END_AGE);

val professionals = task.get(Constants.PARAM_PROFESSIONALS)

val cities = task.get(Constants.PARAM_CITIES)

val sex = task.get(Constants.PARAM_SEX)

val keywords =task.get(Constants.PARAM_KEYWORDS)

val categoryIds = task.get(Constants.PARAM_CATEGORY_IDS)

// Concatenate the conditions that are present into one key=value string

var filterInfo = (if(startAge != null) Constants.PARAM_START_AGE + "=" + startAge + "|" else "") +

(if (endAge != null) Constants.PARAM_END_AGE + "=" + endAge + "|" else "") +

(if (professionals != null) Constants.PARAM_PROFESSIONALS + "=" + professionals + "|" else "") +

(if (cities != null) Constants.PARAM_CITIES + "=" + cities + "|" else "") +

(if (sex != null) Constants.PARAM_SEX + "=" + sex + "|" else "") +

(if (keywords != null) Constants.PARAM_KEYWORDS + "=" + keywords + "|" else "") +

(if (categoryIds != null) Constants.PARAM_CATEGORY_IDS + "=" + categoryIds else "")

// endsWith compares literal text (not a regex), so the separator is not escaped here

if(filterInfo.endsWith("|"))

filterInfo = filterInfo.substring(0, filterInfo.length - 1)

finalInfo.filter{

case (sessionId,fullInfo)=>{

var success=true;

if(!ValidUtils.between(fullInfo, Constants.FIELD_AGE, filterInfo, Constants.PARAM_START_AGE, Constants.PARAM_END_AGE)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_PROFESSIONAL, filterInfo, Constants.PARAM_PROFESSIONALS)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_CITY, filterInfo, Constants.PARAM_CITIES)){

success = false

}else if(!ValidUtils.equal(fullInfo, Constants.FIELD_SEX, filterInfo, Constants.PARAM_SEX)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_SEARCH_KEYWORDS, filterInfo, Constants.PARAM_KEYWORDS)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_CLICK_CATEGORY_IDS, filterInfo, Constants.PARAM_CATEGORY_IDS)){

success = false

}

// Update the accumulator

if (success){

// Count the session toward the total first

accumulator.add(Constants.SESSION_COUNT);

val visitLength=StringUtil.getFieldFromConcatString(fullInfo,"\\|",Constants.FIELD_VISIT_LENGTH).toLong;

val stepLength=StringUtil.getFieldFromConcatString(fullInfo,"\\|",Constants.FIELD_STEP_LENGTH).toLong;

calculateVisitLength(visitLength,accumulator);

calculateStepLength(stepLength,accumulator);

}

success;

}

}

}
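When working with `|`-separated strings like these, it helps to remember that `String.split` takes a regular expression (so the pipe is escaped as `"\\|"`), while `String.endsWith` compares literal text (so the pipe is written as `"|"`). The sketch below shows one way to avoid the trailing-separator cleanup altogether by joining only the conditions that are present, plus a simple field lookup. `PipeSketch`, `buildFilter` and `field` are hypothetical helpers; the project's own `StringUtil.getFieldFromConcatString` is not shown in this post and may behave differently.

```scala
object PipeSketch {
  /** Joins the conditions that have a value as "key=value" pairs separated by '|'. */
  def buildFilter(conditions: Seq[(String, Option[String])]): String =
    conditions.collect { case (k, Some(v)) => s"$k=$v" }.mkString("|")

  /** Looks up one field in a "k1=v1|k2=v2" string; None when the key is absent. */
  def field(concat: String, name: String): Option[String] =
    concat.split("\\|").iterator       // split takes a regex, so '|' must be escaped
      .map(_.split("=", 2))            // limit 2 keeps '=' characters inside the value
      .collectFirst { case Array(k, v) if k == name => v }
}
```

With `mkString`, a missing last condition never leaves a dangling `|`, so no trimming step is needed.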

calculateVisitLength:

def calculateVisitLength(visitLength: Long, sessionStatisticAccumulator: sessionAccumulator) = {

if(visitLength >= 1 && visitLength <= 3){

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_1s_3s)

}else if(visitLength >=4 && visitLength <= 6){

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_4s_6s)

}else if (visitLength >= 7 && visitLength <= 9) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_7s_9s)

} else if (visitLength >= 10 && visitLength <= 30) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_10s_30s)

} else if (visitLength > 30 && visitLength <= 60) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_30s_60s)

} else if (visitLength > 60 && visitLength <= 180) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_1m_3m)

} else if (visitLength > 180 && visitLength <= 600) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_3m_10m)

} else if (visitLength > 600 && visitLength <= 1800) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_10m_30m)

} else if (visitLength > 1800) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_30m)

}

}
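The same range boundaries can also be written as a lookup table, which makes them easier to read and to change. This is an alternative sketch, not the code used in the project; the labels are placeholder strings rather than the values of the `Constants.TIME_PERIOD_*` fields, which are not shown in this post.

```scala
object BucketSketch {
  // (inclusive upper bound in seconds, label), in ascending order.
  private val visitBuckets: Seq[(Long, String)] = Seq(
    3L -> "1s_3s", 6L -> "4s_6s", 9L -> "7s_9s", 30L -> "10s_30s",
    60L -> "30s_60s", 180L -> "1m_3m", 600L -> "3m_10m", 1800L -> "10m_30m")

  /** Bucket label for a visit duration; None for durations below one second. */
  def visitBucket(seconds: Long): Option[String] =
    if (seconds < 1) None
    else Some(visitBuckets
      .collectFirst { case (upper, label) if seconds <= upper => label }
      .getOrElse("30m")) // anything above 1800 seconds
}
```

Note that, as in the if/else chain, a duration of 0 seconds (for example a session with a single action) matches no range, so the duration ratios need not add up to 1.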

- Compute the ratio for each range and write it to the database:

def getSessionRatio(sparkSession: SparkSession,taskUUID:String, FilterInfo: RDD[(String, String)], value:mutable.HashMap[String,Int]) = {

val session_count = value.getOrElse(Constants.SESSION_COUNT, 1).toDouble

val visit_length_1s_3s = value.getOrElse(Constants.TIME_PERIOD_1s_3s, 0)

val visit_length_4s_6s = value.getOrElse(Constants.TIME_PERIOD_4s_6s, 0)

val visit_length_7s_9s = value.getOrElse(Constants.TIME_PERIOD_7s_9s, 0)

val visit_length_10s_30s = value.getOrElse(Constants.TIME_PERIOD_10s_30s, 0)

val visit_length_30s_60s = value.getOrElse(Constants.TIME_PERIOD_30s_60s, 0)

val visit_length_1m_3m = value.getOrElse(Constants.TIME_PERIOD_1m_3m, 0)

val visit_length_3m_10m = value.getOrElse(Constants.TIME_PERIOD_3m_10m, 0)

val visit_length_10m_30m = value.getOrElse(Constants.TIME_PERIOD_10m_30m, 0)

val visit_length_30m = value.getOrElse(Constants.TIME_PERIOD_30m, 0)

val step_length_1_3 = value.getOrElse(Constants.STEP_PERIOD_1_3, 0)

val step_length_4_6 = value.getOrElse(Constants.STEP_PERIOD_4_6, 0)

val step_length_7_9 = value.getOrElse(Constants.STEP_PERIOD_7_9, 0)

val step_length_10_30 = value.getOrElse(Constants.STEP_PERIOD_10_30, 0)

val step_length_30_60 = value.getOrElse(Constants.STEP_PERIOD_30_60, 0)

val step_length_60 = value.getOrElse(Constants.STEP_PERIOD_60, 0)

val visit_length_1s_3s_ratio = NumberUtils.formatDouble(visit_length_1s_3s / session_count, 2)

val visit_length_4s_6s_ratio = NumberUtils.formatDouble(visit_length_4s_6s / session_count, 2)

val visit_length_7s_9s_ratio = NumberUtils.formatDouble(visit_length_7s_9s / session_count, 2)

val visit_length_10s_30s_ratio = NumberUtils.formatDouble(visit_length_10s_30s / session_count, 2)

val visit_length_30s_60s_ratio = NumberUtils.formatDouble(visit_length_30s_60s / session_count, 2)

val visit_length_1m_3m_ratio = NumberUtils.formatDouble(visit_length_1m_3m / session_count, 2)

val visit_length_3m_10m_ratio = NumberUtils.formatDouble(visit_length_3m_10m / session_count, 2)

val visit_length_10m_30m_ratio = NumberUtils.formatDouble(visit_length_10m_30m / session_count, 2)

val visit_length_30m_ratio = NumberUtils.formatDouble(visit_length_30m / session_count, 2)

val step_length_1_3_ratio = NumberUtils.formatDouble(step_length_1_3 / session_count, 2)

val step_length_4_6_ratio = NumberUtils.formatDouble(step_length_4_6 / session_count, 2)

val step_length_7_9_ratio = NumberUtils.formatDouble(step_length_7_9 / session_count, 2)

val step_length_10_30_ratio = NumberUtils.formatDouble(step_length_10_30 / session_count, 2)

val step_length_30_60_ratio = NumberUtils.formatDouble(step_length_30_60 / session_count, 2)

val step_length_60_ratio = NumberUtils.formatDouble(step_length_60 / session_count, 2)

// Package the results

val stat = SessionAggrStat(taskUUID, session_count.toInt, visit_length_1s_3s_ratio, visit_length_4s_6s_ratio, visit_length_7s_9s_ratio,

visit_length_10s_30s_ratio, visit_length_30s_60s_ratio, visit_length_1m_3m_ratio,

visit_length_3m_10m_ratio, visit_length_10m_30m_ratio, visit_length_30m_ratio,

step_length_1_3_ratio, step_length_4_6_ratio, step_length_7_9_ratio,

step_length_10_30_ratio, step_length_30_60_ratio, step_length_60_ratio)

val sessionRatioRDD = sparkSession.sparkContext.makeRDD(Array(stat))

// Write to the database

import sparkSession.implicits._

sessionRatioRDD.toDF().write

.format("jdbc")

.option("url", ConfigurationManager.config.getString(Constants.JDBC_URL))

.option("user", ConfigurationManager.config.getString(Constants.JDBC_USER))

.option("password", ConfigurationManager.config.getString(Constants.JDBC_PASSWORD))

.option("dbtable", "session_stat_ratio_0416")

.mode(SaveMode.Append)

.save()

sessionRatioRDD;

}
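`NumberUtils.formatDouble` comes from the project's commons module and is not shown in this post. Assuming it rounds a value to the given number of decimal places, a stand-in could be sketched as follows; `RoundSketch` is a hypothetical name and the half-up rounding mode is an assumption.

```scala
object RoundSketch {
  /** Rounds `value` to `scale` decimal places, half up (assumed behavior). */
  def formatDouble(value: Double, scale: Int): Double =
    BigDecimal(value).setScale(scale, BigDecimal.RoundingMode.HALF_UP).toDouble
}
```

Since every ratio is rounded independently, the rounded values of a group may sum to slightly more or less than 1.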

Complete code:

Main function:

package scala

import java.util.UUID

import com.alibaba.fastjson.{JSON, JSONObject}

import commons.conf.ConfigurationManager

import commons.constant.Constants

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

import server.serverOne

object sessionStat {

def main(args: Array[String]): Unit = {

//server

val oneServer=new serverOne;

//sparksession

val conf=new SparkConf().setAppName("session").setMaster("local[*]");

val session=SparkSession.builder().config(conf).getOrCreate();

session.sparkContext.setLogLevel("ERROR");

// Load the task configuration

val str=ConfigurationManager.config.getString(Constants.TASK_PARAMS);

val task:JSONObject=JSON.parseObject(str);

// Primary key for this run

val taskUUID=UUID.randomUUID().toString;

val filterInfo=getFilterFullResult(oneServer,session,task,taskUUID);

}

def getFilterFullResult(oneServer: serverOne, session: SparkSession, task: JSONObject,taskUUID:String) ={

//1. Load the basic action records

val basicActions=oneServer.basicActions(session,task);

//2. Group the actions by session

val basicActionMap=basicActions.map(item=>{

val sessionId=item.session_id;

(sessionId,item);

})

val groupBasicActions=basicActionMap.groupByKey();

//3. Fold each session's actions (sessionId->actions) into a single string record

val aggUserActions=oneServer.AggActionGroup(groupBasicActions);

//4. Read the users' basic information from HDFS

val userInfo=oneServer.getUserInfo(session);

//5. Join userInfo into aggUserActions on user_id to form the complete record

val finalInfo=oneServer.AggInfoAndActions(aggUserActions,userInfo);

finalInfo.cache();

//6. Filter with the conditions from the commons module and update the accumulator

val accumulator=new sessionAccumulator;

session.sparkContext.register(accumulator);

val FilterInfo=oneServer.filterInfo(finalInfo,task,accumulator);

FilterInfo.foreach(println);

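/*

At this point we have every record that passed the filter, plus the number of sessions in each range (held in the accumulator).

*/

//7. Compute the share of sessions in each range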

val sessionRatioCount= oneServer.getSessionRatio(session,taskUUID,FilterInfo,accumulator.value);

sessionRatioCount.foreach(println);

}

}

Service class:

package server

import java.util.Date

import com.alibaba.fastjson.JSONObject

import commons.conf.ConfigurationManager

import commons.constant.Constants

import commons.model.{SessionAggrStat, UserInfo, UserVisitAction}

import commons.utils.{DateUtils, NumberUtils, StringUtil, ValidUtils}

import org.apache.commons.lang.StringUtils

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{SaveMode, SparkSession}


import scala.collection.mutable

class serverOne extends Serializable {

def getSessionRatio(sparkSession: SparkSession,taskUUID:String, FilterInfo: RDD[(String, String)], value:mutable.HashMap[String,Int]) = {

val session_count = value.getOrElse(Constants.SESSION_COUNT, 1).toDouble

val visit_length_1s_3s = value.getOrElse(Constants.TIME_PERIOD_1s_3s, 0)

val visit_length_4s_6s = value.getOrElse(Constants.TIME_PERIOD_4s_6s, 0)

val visit_length_7s_9s = value.getOrElse(Constants.TIME_PERIOD_7s_9s, 0)

val visit_length_10s_30s = value.getOrElse(Constants.TIME_PERIOD_10s_30s, 0)

val visit_length_30s_60s = value.getOrElse(Constants.TIME_PERIOD_30s_60s, 0)

val visit_length_1m_3m = value.getOrElse(Constants.TIME_PERIOD_1m_3m, 0)

val visit_length_3m_10m = value.getOrElse(Constants.TIME_PERIOD_3m_10m, 0)

val visit_length_10m_30m = value.getOrElse(Constants.TIME_PERIOD_10m_30m, 0)

val visit_length_30m = value.getOrElse(Constants.TIME_PERIOD_30m, 0)

val step_length_1_3 = value.getOrElse(Constants.STEP_PERIOD_1_3, 0)

val step_length_4_6 = value.getOrElse(Constants.STEP_PERIOD_4_6, 0)

val step_length_7_9 = value.getOrElse(Constants.STEP_PERIOD_7_9, 0)

val step_length_10_30 = value.getOrElse(Constants.STEP_PERIOD_10_30, 0)

val step_length_30_60 = value.getOrElse(Constants.STEP_PERIOD_30_60, 0)

val step_length_60 = value.getOrElse(Constants.STEP_PERIOD_60, 0)

val visit_length_1s_3s_ratio = NumberUtils.formatDouble(visit_length_1s_3s / session_count, 2)

val visit_length_4s_6s_ratio = NumberUtils.formatDouble(visit_length_4s_6s / session_count, 2)

val visit_length_7s_9s_ratio = NumberUtils.formatDouble(visit_length_7s_9s / session_count, 2)

val visit_length_10s_30s_ratio = NumberUtils.formatDouble(visit_length_10s_30s / session_count, 2)

val visit_length_30s_60s_ratio = NumberUtils.formatDouble(visit_length_30s_60s / session_count, 2)

val visit_length_1m_3m_ratio = NumberUtils.formatDouble(visit_length_1m_3m / session_count, 2)

val visit_length_3m_10m_ratio = NumberUtils.formatDouble(visit_length_3m_10m / session_count, 2)

val visit_length_10m_30m_ratio = NumberUtils.formatDouble(visit_length_10m_30m / session_count, 2)

val visit_length_30m_ratio = NumberUtils.formatDouble(visit_length_30m / session_count, 2)

val step_length_1_3_ratio = NumberUtils.formatDouble(step_length_1_3 / session_count, 2)

val step_length_4_6_ratio = NumberUtils.formatDouble(step_length_4_6 / session_count, 2)

val step_length_7_9_ratio = NumberUtils.formatDouble(step_length_7_9 / session_count, 2)

val step_length_10_30_ratio = NumberUtils.formatDouble(step_length_10_30 / session_count, 2)

val step_length_30_60_ratio = NumberUtils.formatDouble(step_length_30_60 / session_count, 2)

val step_length_60_ratio = NumberUtils.formatDouble(step_length_60 / session_count, 2)

// Package the results

val stat = SessionAggrStat(taskUUID, session_count.toInt, visit_length_1s_3s_ratio, visit_length_4s_6s_ratio, visit_length_7s_9s_ratio,

visit_length_10s_30s_ratio, visit_length_30s_60s_ratio, visit_length_1m_3m_ratio,

visit_length_3m_10m_ratio, visit_length_10m_30m_ratio, visit_length_30m_ratio,

step_length_1_3_ratio, step_length_4_6_ratio, step_length_7_9_ratio,

step_length_10_30_ratio, step_length_30_60_ratio, step_length_60_ratio)

val sessionRatioRDD = sparkSession.sparkContext.makeRDD(Array(stat))

// Write to the database

import sparkSession.implicits._

sessionRatioRDD.toDF().write

.format("jdbc")

.option("url", ConfigurationManager.config.getString(Constants.JDBC_URL))

.option("user", ConfigurationManager.config.getString(Constants.JDBC_USER))

.option("password", ConfigurationManager.config.getString(Constants.JDBC_PASSWORD))

.option("dbtable", "session_stat_ratio_0416")

.mode(SaveMode.Append)

.save()

sessionRatioRDD;

}

def filterInfo(finalInfo: RDD[(String, String)],task:JSONObject,accumulator:sessionAccumulator) = {

// 1. Read the filter conditions from the task parameters

val startAge = task.get(Constants.PARAM_START_AGE);

val endAge = task.get( Constants.PARAM_END_AGE);

val professionals = task.get(Constants.PARAM_PROFESSIONALS)

val cities = task.get(Constants.PARAM_CITIES)

val sex = task.get(Constants.PARAM_SEX)

val keywords =task.get(Constants.PARAM_KEYWORDS)

val categoryIds = task.get(Constants.PARAM_CATEGORY_IDS)

// Concatenate the conditions that are present into one key=value string

var filterInfo = (if(startAge != null) Constants.PARAM_START_AGE + "=" + startAge + "|" else "") +

(if (endAge != null) Constants.PARAM_END_AGE + "=" + endAge + "|" else "") +

(if (professionals != null) Constants.PARAM_PROFESSIONALS + "=" + professionals + "|" else "") +

(if (cities != null) Constants.PARAM_CITIES + "=" + cities + "|" else "") +

(if (sex != null) Constants.PARAM_SEX + "=" + sex + "|" else "") +

(if (keywords != null) Constants.PARAM_KEYWORDS + "=" + keywords + "|" else "") +

(if (categoryIds != null) Constants.PARAM_CATEGORY_IDS + "=" + categoryIds else "")

// endsWith compares literal text (not a regex), so the separator is not escaped here

if(filterInfo.endsWith("|"))

filterInfo = filterInfo.substring(0, filterInfo.length - 1)

finalInfo.filter{

case (sessionId,fullInfo)=>{

var success=true;

if(!ValidUtils.between(fullInfo, Constants.FIELD_AGE, filterInfo, Constants.PARAM_START_AGE, Constants.PARAM_END_AGE)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_PROFESSIONAL, filterInfo, Constants.PARAM_PROFESSIONALS)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_CITY, filterInfo, Constants.PARAM_CITIES)){

success = false

}else if(!ValidUtils.equal(fullInfo, Constants.FIELD_SEX, filterInfo, Constants.PARAM_SEX)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_SEARCH_KEYWORDS, filterInfo, Constants.PARAM_KEYWORDS)){

success = false

}else if(!ValidUtils.in(fullInfo, Constants.FIELD_CLICK_CATEGORY_IDS, filterInfo, Constants.PARAM_CATEGORY_IDS)){

success = false

}

// Update the accumulator

if (success){

// Count the session toward the total first

accumulator.add(Constants.SESSION_COUNT);

val visitLength=StringUtil.getFieldFromConcatString(fullInfo,"\\|",Constants.FIELD_VISIT_LENGTH).toLong;

val stepLength=StringUtil.getFieldFromConcatString(fullInfo,"\\|",Constants.FIELD_STEP_LENGTH).toLong;

calculateVisitLength(visitLength,accumulator);

calculateStepLength(stepLength,accumulator);

}

success;

}

}

}

def calculateVisitLength(visitLength: Long, sessionStatisticAccumulator: sessionAccumulator) = {

if(visitLength >= 1 && visitLength <= 3){

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_1s_3s)

}else if(visitLength >=4 && visitLength <= 6){

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_4s_6s)

}else if (visitLength >= 7 && visitLength <= 9) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_7s_9s)

} else if (visitLength >= 10 && visitLength <= 30) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_10s_30s)

} else if (visitLength > 30 && visitLength <= 60) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_30s_60s)

} else if (visitLength > 60 && visitLength <= 180) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_1m_3m)

} else if (visitLength > 180 && visitLength <= 600) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_3m_10m)

} else if (visitLength > 600 && visitLength <= 1800) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_10m_30m)

} else if (visitLength > 1800) {

sessionStatisticAccumulator.add(Constants.TIME_PERIOD_30m)

}

}

def calculateStepLength(stepLength: Long, sessionStatisticAccumulator: sessionAccumulator) = {

if(stepLength >=1 && stepLength <=3){

sessionStatisticAccumulator.add(Constants.STEP_PERIOD_1_3)

}else if (stepLength >= 4 && stepLength <= 6) {

sessionStatisticAccumulator.add(Constants.STEP_PERIOD_4_6)

} else if (stepLength >= 7 && stepLength <= 9) {

sessionStatisticAccumulator.add(Constants.STEP_PERIOD_7_9)

} else if (stepLength >= 10 && stepLength <= 30) {

sessionStatisticAccumulator.add(Constants.STEP_PERIOD_10_30)

} else if (stepLength > 30 && stepLength <= 60) {

sessionStatisticAccumulator.add(Constants.STEP_PERIOD_30_60)

} else if (stepLength > 60) {

sessionStatisticAccumulator.add(Constants.STEP_PERIOD_60)

}

}

def getUserInfo(session: SparkSession) = {

import session.implicits._;

val ds=session.read.parquet("hdfs://hadoop1:9000/data/user_Info").as[UserInfo].map(item=>(item.user_id,item));

ds.rdd;

}

def basicActions(session:SparkSession,task:JSONObject)={

import session.implicits._;

// Read the date bounds once on the driver instead of once per record.

val start=task.getString(Constants.PARAM_START_DATE);

val end=task.getString(Constants.PARAM_END_DATE);

val ds=session.read.parquet("hdfs://hadoop1:9000/data/user_visit_action").as[UserVisitAction];

// filter returns a new Dataset; keep the result, otherwise the date range has no effect.

val filtered=ds.filter(item=>{

val date=item.action_time;

date>=start&&date<=end;

})

filtered.rdd;

}

def AggActionGroup(groupBasicActions: RDD[(String, Iterable[UserVisitAction])])={

groupBasicActions.map{

case (sessionId,actions)=>{

var userId = -1L

var startTime:Date = null

var endTime:Date = null

var stepLength = 0

val searchKeywords = new StringBuffer("")

val clickCategories = new StringBuffer("")

// Iterate over the actions and update the aggregated values

for (action<-actions){

if(userId == -1L){

userId=action.user_id;

}

val time=DateUtils.parseTime(action.action_time);

if (startTime==null||startTime.after(time))startTime=time;

if (endTime==null||endTime.before(time))endTime=time;

val key=action.search_keyword;

if (!StringUtils.isEmpty(key) && !searchKeywords.toString.contains(key))searchKeywords.append(key+",");

val click=action.click_category_id;

// Record each clicked category id once (ids equal to -1 are skipped)

if ( click!= -1L && !clickCategories.toString.contains(click.toString))clickCategories.append(click+",");

stepLength+=1;

}

// searchKeywords.toString.substring(0, searchKeywords.toString.length)

val searchKw = StringUtil.trimComma(searchKeywords.toString)

val clickCg = StringUtil.trimComma(clickCategories.toString)

val visitLength = (endTime.getTime - startTime.getTime) / 1000

val aggrInfo = Constants.FIELD_SESSION_ID + "=" + sessionId + "|" +

Constants.FIELD_SEARCH_KEYWORDS + "=" + searchKw + "|" +

Constants.FIELD_CLICK_CATEGORY_IDS + "=" + clickCg + "|" +

Constants.FIELD_VISIT_LENGTH + "=" + visitLength + "|" +

Constants.FIELD_STEP_LENGTH + "=" + stepLength + "|" +

Constants.FIELD_START_TIME + "=" + DateUtils.formatTime(startTime)

(userId, aggrInfo)

}

}

}

def AggInfoAndActions(aggUserActions: RDD[(Long, String)], userInfo: RDD[(Long, UserInfo)])={

// Match the two RDDs on user_id with the join operator

userInfo.join(aggUserActions).map{

case (userId,(userInfo: UserInfo,aggrInfo))=>{

val age = userInfo.age

val professional = userInfo.professional

val sex = userInfo.sex

val city = userInfo.city

val fullInfo = aggrInfo + "|" +

Constants.FIELD_AGE + "=" + age + "|" +

Constants.FIELD_PROFESSIONAL + "=" + professional + "|" +

Constants.FIELD_SEX + "=" + sex + "|" +

Constants.FIELD_CITY + "=" + city

val sessionId = StringUtil.getFieldFromConcatString(aggrInfo, "\\|", Constants.FIELD_SESSION_ID)

(sessionId, fullInfo)

}

}

}

}

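Summary

- When one user (or session) maps to many records, aggregate them first so that each one is represented by a single combined record.

- Key-value RDDs that share the same key but have differently structured values can be combined with join.

- A custom accumulator can collect the per-range counts while the data is being filtered.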
