1. All bisheng services

- mysql:8.0

- redis:7.0.4

- onlyoffice/documentserver:7.2.1

- dataelement/bisheng-backend:latest

- dataelement/bisheng-frontend:latest

- docker.io/bitnami/elasticsearch:8.12.0

- quay.io/coreos/etcd:v3.5.5

- minio/minio:RELEASE.2023-03-20T20-16-18Z

- milvusdb/milvus:v2.3.3

The following are the model-related services:

- dataelement/bisheng-rt:0.0.6.3rc1

- dataelement/bisheng-ft:latest

- dataelement/bisheng-unstructured:0.0.3.4

2. Deployment

(1) MySQL deployment

This is the original docker-compose definition:

mysql:
  container_name: bisheng-mysql
  image: mysql:8.0
  ports:
    - "3306:3306"
  environment:
    MYSQL_ROOT_PASSWORD: "1234" # database password; if you change it, also update the MySQL password in database_url in bisheng/config/config.yaml
    MYSQL_DATABASE: bisheng
    TZ: Asia/Shanghai
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/mysql/conf/my.cnf:/etc/mysql/my.cnf
    - ${DOCKER_VOLUME_DIRECTORY:-.}/mysql/data:/var/lib/mysql
  healthcheck:
    test: ["CMD-SHELL", "exit | mysql -u root -p$$MYSQL_ROOT_PASSWORD"]
    start_period: 30s
    interval: 20s
    timeout: 10s
    retries: 4
  restart: on-failure

Converting to Kubernetes

1. Add the my.cnf configuration file as a ConfigMap. The source file is at bisheng/docker/mysql/conf/my.cnf.

vim bisheng-mysql-cnf.yaml

Contents of bisheng-mysql-cnf.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: bisheng-zxp # namespace used for the whole project
  labels: {}
  name: bisheng-mysql-cnf # ConfigMap name
data:
  my.cnf: |-
    [client]
    default-character-set=utf8mb4
    [mysql]
    default-character-set=utf8mb4
    [mysqld]
    init_connect='SET collation_connection = utf8mb4_unicode_ci, NAMES utf8mb4'
    character-set-server=utf8mb4
    collation-server=utf8mb4_unicode_ci
    # skip-character-set-client-handshake
    sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION

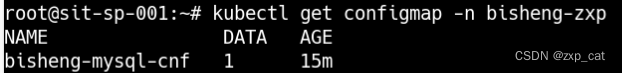

Apply the file:

kubectl apply -f bisheng-mysql-cnf.yaml

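2. Create the MySQL service

vim bisheng-mysql.yaml

Note: my cluster uses an OpenEBS storage class; adjust this to your own environment. Make sure the data is persisted, otherwise it cannot be recovered after the container restarts.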

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-mysql
  name: bisheng-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bisheng-mysql
  template:
    metadata:
      labels:
        app: bisheng-mysql
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-4g37k3
          imagePullPolicy: IfNotPresent
          image: 'mysql:8.0'
          ports:
            - name: http-3306
              protocol: TCP
              containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: '1234'
            - name: MYSQL_DATABASE
              value: bisheng
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - readOnly: false
              mountPath: /var/lib/mysql
              name: bisheng-mysql
            - name: volume-ccdmi9
              readOnly: true
              mountPath: /etc/mysql/my.cnf
              subPath: my.cnf
      serviceAccount: default
      initContainers: []
      volumes:
        - name: volume-ccdmi9
          configMap:
            name: bisheng-mysql-cnf
            items:
              - key: my.cnf
                path: my.cnf
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: bisheng-mysql-6kgj
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi # size according to your needs
        storageClassName: ebs # pick a storage class available in your cluster
      metadata:
        name: bisheng-mysql
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-mysql
  name: bisheng-mysql-6kgj
  annotations:
    kubesphere.io/alias-name: bisheng-mysql
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: bisheng-mysql
  ports:
    - name: http-3306
      protocol: TCP
      port: 3306
      targetPort: 3306
  clusterIP: None

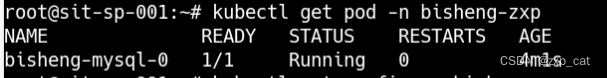

kubectl apply -f bisheng-mysql.yaml

Screenshot of the running service.
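The docker-compose healthcheck is not carried over into the StatefulSet above. A minimal sketch of how it might be expressed as a readiness probe is shown below; it reuses the command and timings from the compose file, and it is an untested suggestion rather than part of the original deployment. The fragment belongs under the MySQL container entry (`spec.template.spec.containers[0]`).

```yaml
# Hypothetical addition to the MySQL container; timings mirror the compose healthcheck.
readinessProbe:
  exec:
    command:
      - sh
      - -c
      # Same check as the compose file; $MYSQL_ROOT_PASSWORD is expanded by the shell in the container.
      - exit | mysql -u root -p"$MYSQL_ROOT_PASSWORD"
  initialDelaySeconds: 30 # compose start_period
  periodSeconds: 20       # compose interval
  timeoutSeconds: 10      # compose timeout
  failureThreshold: 4     # compose retries
```

With a probe like this, the pod is only reported as Ready once MySQL accepts a root login, which roughly matches the `service_healthy` condition used in the compose file.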

(2) Redis deployment

Original docker-compose definition:

redis:
  container_name: bisheng-redis
  image: redis:7.0.4
  ports:
    - "6379:6379"
  environment:
    TZ: Asia/Shanghai
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/data/redis:/data
    - ${DOCKER_VOLUME_DIRECTORY:-.}/redis/redis.conf:/etc/redis.conf
  command: redis-server /etc/redis.conf
  healthcheck:
    test: ["CMD-SHELL", 'redis-cli ping|grep -e "PONG\|NOAUTH"']
    interval: 10s
    timeout: 5s
    retries: 3
  restart: on-failure

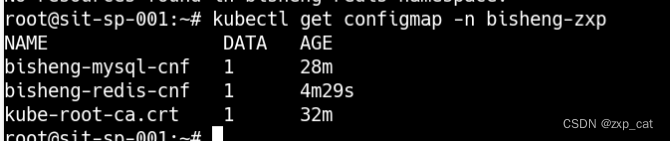

Converting to Kubernetes YAML

1. Create a ConfigMap for the Redis configuration file, in the same way as the MySQL configuration file above.
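The Redis ConfigMap itself is not shown in this post. A minimal sketch is given below, assuming the name `bisheng-redis-cnf` and the key `redis.conf` that the StatefulSet in the next step refers to. The directives inside `redis.conf` are placeholders of my own, not the project's real file; in practice, paste in the contents of the redis.conf that ships with bisheng's docker directory.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: bisheng-zxp
  name: bisheng-redis-cnf # must match the configMap name used by the StatefulSet
data:
  redis.conf: |-
    # Placeholder content (assumption): replace with the project's own redis.conf.
    port 6379
    dir /data
```

It can be saved to a file (for example a hypothetical bisheng-redis-cnf.yaml) and applied with `kubectl apply -f`, just like the MySQL ConfigMap.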

2. Deploy the Redis service

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-redis
  name: bisheng-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bisheng-redis
  template:
    metadata:
      labels:
        app: bisheng-redis
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-v3tttu
          imagePullPolicy: IfNotPresent
          image: 'redis:7.0.4'
          ports:
            - name: http-6379
              protocol: TCP
              containerPort: 6379
          command:
            - redis-server
          args:
            - /etc/redis.conf
          env:
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - readOnly: false
              mountPath: /data
              name: bisheng-redis
            - name: volume-7elktd
              readOnly: true
              mountPath: /etc/redis.conf
              subPath: redis.conf
      serviceAccount: default
      initContainers: []
      volumes:
        - name: volume-7elktd
          configMap:
            name: bisheng-redis-cnf
            items:
              - key: redis.conf
                path: redis.conf
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: bisheng-redis-gx43
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi # adjust as needed
        storageClassName: ebs # adjust as needed
      metadata:
        name: bisheng-redis
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-redis
  name: bisheng-redis-gx43
  annotations:
    kubesphere.io/alias-name: bisheng-redis
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: bisheng-redis
  ports:
    - name: http-6379
      protocol: TCP
      port: 6379
      targetPort: 6379
  clusterIP: None

3. Deploy OnlyOffice

Original docker-compose definition:

office:
  container_name: bisheng-office
  image: onlyoffice/documentserver:7.2.1
  ports:
    - "8701:80"
  environment:
    TZ: Asia/Shanghai
    JWT_ENABLED: false
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/office/bisheng:/var/www/onlyoffice/documentserver/sdkjs-plugins/bisheng
  command: bash -c "supervisorctl restart all"
  restart: on-failure

Kubernetes YAML:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-office
  name: bisheng-office
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bisheng-office
  template:
    metadata:
      labels:
        app: bisheng-office
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-2rsreg
          imagePullPolicy: IfNotPresent
          image: 'onlyoffice/documentserver:7.2.1'
          ports:
            - name: http-80
              protocol: TCP
              containerPort: 80
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: JWT_ENABLED
              value: 'false'
          volumeMounts:
            - readOnly: false
              mountPath: /var/www/onlyoffice/documentserver/sdkjs-plugins/bisheng
              name: bisheng-office
      serviceAccount: default
      initContainers: []
      volumes: []
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: bisheng-office-cf1h
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: ebs
      metadata:
        name: bisheng-office
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-office
  name: bisheng-office-cf1h
  annotations:
    kubesphere.io/alias-name: bisheng-office
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: bisheng-office
  ports:
    - name: http-80
      protocol: TCP
      port: 8701
      targetPort: 80
  clusterIP: None

4. Deploy Elasticsearch

Original docker-compose definition:

elasticsearch:
  container_name: bisheng-es
  image: docker.io/bitnami/elasticsearch:8.12.0
  user: root
  ports:
    - "9200:9200"
    - "9300:9300"
  environment:
    TZ: Asia/Shanghai
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/data/es:/bitnami/elasticsearch/data
  restart: on-failure

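Converting to Kubernetes

Note: with OpenEBS I ran into file-permission problems here, so I switched to an NFS storage class. The root cause still needs to be investigated.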

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-es
  name: bisheng-es
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bisheng-es
  template:
    metadata:
      labels:
        app: bisheng-es
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-4wrrhk
          imagePullPolicy: IfNotPresent
          image: 'docker.io/bitnami/elasticsearch:8.12.0'
          ports:
            - name: http-9200
              protocol: TCP
              containerPort: 9200
            - name: http-9300
              protocol: TCP
              containerPort: 9300
          env:
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - readOnly: false
              mountPath: /bitnami/elasticsearch/data
              name: bisheng-es
      serviceAccount: default
      initContainers: []
      volumes: []
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: bisheng-es-ijpq
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: nfs
      metadata:
        name: bisheng-es
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-es
  name: bisheng-es-ijpq
  annotations:
    kubesphere.io/alias-name: bisheng-es
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: bisheng-es
  ports:
    - name: http-9200
      protocol: TCP
      port: 9200
      targetPort: 9200
    - name: http-9300
      protocol: TCP
      port: 9300
      targetPort: 9300
  clusterIP: None
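On the file-permission problem mentioned in the note above: one difference worth checking is that the compose file runs the container with `user: root`, while the StatefulSet does not carry that setting over. The fragment below is a sketch of the closest Kubernetes equivalent. It is an untested assumption about the cause, not a confirmed fix, and running as root may not be acceptable in every cluster.

```yaml
# Hypothetical fragment of the bisheng-es StatefulSet (only the added field is shown in context).
spec:
  template:
    spec:
      containers:
        - name: container-4wrrhk
          securityContext:
            runAsUser: 0 # mirrors `user: root` from the compose file
```

If this turns out to resolve the permission errors, the OpenEBS storage class could presumably be used for Elasticsearch as well, keeping storage consistent with the other services.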

5. Deploy MinIO

Original docker-compose definition:

minio:
  container_name: milvus-minio
  image: minio/minio:RELEASE.2023-03-20T20-16-18Z
  environment:
    MINIO_ACCESS_KEY: minioadmin
    MINIO_SECRET_KEY: minioadmin
  ports:
    - "9100:9000"
    - "9101:9001"
  volumes:
    - /etc/localtime:/etc/localtime:ro
    - ${DOCKER_VOLUME_DIRECTORY:-.}/data/milvus-minio:/minio_data
  command: minio server /minio_data --console-address ":9001"
  restart: on-failure
  healthcheck:
    test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
    interval: 30s
    timeout: 20s
    retries: 3

Converting to Kubernetes

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: milvus-minio
  name: milvus-minio
spec:
  replicas: 1
  selector:
    matchLabels:
      app: milvus-minio
  template:
    metadata:
      labels:
        app: milvus-minio
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-e8f3zy
          imagePullPolicy: IfNotPresent
          image: 'minio/minio:RELEASE.2023-03-20T20-16-18Z'
          ports:
            - name: http-0
              protocol: TCP
              containerPort: 9000
            - name: http-1
              protocol: TCP
              containerPort: 9001
          env:
            - name: MINIO_ACCESS_KEY
              value: minioadmin
            - name: MINIO_SECRET_KEY
              value: minioadmin
          volumeMounts:
            - name: host-time
              mountPath: /etc/localtime
              readOnly: true
            - readOnly: false
              mountPath: /minio_data
              name: milvus-minio
          command:
            - minio
          args:
            - 'server'
            - '/minio_data'
            - '--console-address'
            - ':9001'
      serviceAccount: default
      initContainers: []
      volumes:
        - hostPath:
            path: /etc/localtime
            type: ''
          name: host-time
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: milvus-minio-uq6k
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: ebs
      metadata:
        name: milvus-minio
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: milvus-minio
  name: milvus-minio-uq6k
  annotations:
    kubesphere.io/alias-name: milvus-minio
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: milvus-minio
  ports:
    - name: http-0
      protocol: TCP
      port: 9000
      targetPort: 9000
    - name: http-1
      protocol: TCP
      port: 9001
      targetPort: 9001
  clusterIP: None

6. Deploy etcd

Original docker-compose definition:

etcd:
  container_name: milvus-etcd
  image: quay.io/coreos/etcd:v3.5.5
  environment:
    ETCD_AUTO_COMPACTION_MODE: revision
    ETCD_AUTO_COMPACTION_RETENTION: "1000"
    ETCD_QUOTA_BACKEND_BYTES: "4294967296"
    ETCD_SNAPSHOT_COUNT: "50000"
    TZ: Asia/Shanghai
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/data/milvus-etcd:/etcd
  command: etcd -advertise-client-urls=http://127.0.0.1:2379 -listen-client-urls http://0.0.0.0:2379 --data-dir /etcd
  restart: on-failure
  healthcheck:
    test: ["CMD", "etcdctl", "endpoint", "health"]
    interval: 30s
    timeout: 20s

Kubernetes YAML:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: milvus-etcd
  name: milvus-etcd
spec:
  replicas: 1
  selector:
    matchLabels:
      app: milvus-etcd
  template:
    metadata:
      labels:
        app: milvus-etcd
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-qecx8k
          imagePullPolicy: IfNotPresent
          image: 'quay.io/coreos/etcd:v3.5.5'
          ports:
            - name: http-2379
              protocol: TCP
              containerPort: 2379
          env:
            - name: ETCD_AUTO_COMPACTION_MODE
              value: revision
            - name: ETCD_AUTO_COMPACTION_RETENTION
              value: '1000'
            - name: ETCD_QUOTA_BACKEND_BYTES
              value: '4294967296'
            - name: ETCD_SNAPSHOT_COUNT
              value: '50000'
          volumeMounts:
            - readOnly: false
              mountPath: /etcd
              name: milvus-etcd
          command:
            - etcd
          args:
            - '-advertise-client-urls=http://0.0.0.0:2379'
            - '-listen-client-urls=http://0.0.0.0:2379'
            - '--data-dir=/etcd'
      serviceAccount: default
      initContainers: []
      volumes: []
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: milvus-etcd-01io
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: ebs
      metadata:
        name: milvus-etcd
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: milvus-etcd
  name: milvus-etcd-01io
  annotations:
    kubesphere.io/alias-name: milvus-etcd
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: milvus-etcd
  ports:
    - name: http-2379
      protocol: TCP
      port: 2379
      targetPort: 2379
  clusterIP: None

7. Deploy Milvus

Original docker-compose definition:

milvus:
  container_name: milvus-standalone
  image: milvusdb/milvus:v2.3.3
  command: ["milvus", "run", "standalone"]
  security_opt:
    - seccomp:unconfined
  environment:
    ETCD_ENDPOINTS: etcd:2379
    MINIO_ADDRESS: minio:9000
  volumes:
    - /etc/localtime:/etc/localtime:ro
    - ${DOCKER_VOLUME_DIRECTORY:-.}/data/milvus:/var/lib/milvus
  restart: on-failure
  healthcheck:
    test: ["CMD", "curl", "-f", "http://localhost:9091/healthz"]
    start_period: 90s
    interval: 30s
    timeout: 20s
    retries: 3
  ports:
    - "19530:19530"
    - "9091:9091"
  depends_on:
    - etcd
    - minio

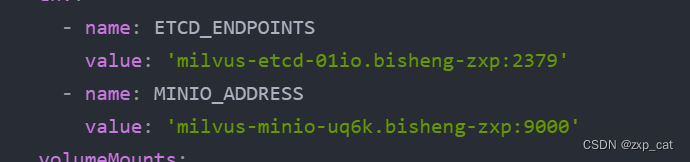

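One thing to watch here: the Milvus environment variables hold the addresses of the etcd and MinIO services, so they must be changed to the in-cluster Kubernetes service addresses. Put simply, the address is the Service name joined with the namespace (`<service-name>.<namespace>`), as shown in the figure.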
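As a small illustration of that naming rule, the fragment below shows the two address forms side by side. The short form is the one used in this post; the fully qualified form assumes the cluster domain is the default `cluster.local`, which may differ in your cluster.

```yaml
# Illustrative env fragment for the Milvus container.
env:
  - name: ETCD_ENDPOINTS
    # Short form: <service-name>.<namespace>:<port>
    value: 'milvus-etcd-01io.bisheng-zxp:2379'
  - name: MINIO_ADDRESS
    # Fully qualified form; "cluster.local" is an assumed default cluster domain.
    value: 'milvus-minio-uq6k.bisheng-zxp.svc.cluster.local:9000'
```

Both forms should resolve to the same Service from any pod in the cluster; the short form is enough as long as the namespace is included.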

The resulting Kubernetes YAML:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: milvus-standalone
  name: milvus-standalone
spec:
  replicas: 1
  selector:
    matchLabels:
      app: milvus-standalone
  template:
    metadata:
      labels:
        app: milvus-standalone
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-allzfa
          imagePullPolicy: IfNotPresent
          image: 'milvusdb/milvus:v2.3.3'
          env:
            - name: ETCD_ENDPOINTS
              value: 'milvus-etcd-01io.bisheng-zxp:2379'
            - name: MINIO_ADDRESS
              value: 'milvus-minio-uq6k.bisheng-zxp:9000'
          volumeMounts:
            - name: host-time
              mountPath: /etc/localtime
              readOnly: true
            - readOnly: false
              mountPath: /var/lib/milvus
              name: milvus-standalone
          ports:
            - name: http-0
              protocol: TCP
              containerPort: 19530
            - name: http-1
              protocol: TCP
              containerPort: 9091
          command:
            - milvus
          args:
            - 'run'
            - 'standalone'
      serviceAccount: default
      initContainers: []
      volumes:
        - hostPath:
            path: /etc/localtime
            type: ''
          name: host-time
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: milvus-standalone-rhtl
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: ebs
      metadata:
        name: milvus-standalone
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: milvus-standalone
  name: milvus-standalone-rhtl
  annotations:
    kubesphere.io/alias-name: milvus-standalone
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: milvus-standalone
  ports:
    - name: http-0
      protocol: TCP
      port: 19530
      targetPort: 19530
    - name: http-1
      protocol: TCP
      port: 9091
      targetPort: 9091
  clusterIP: None

Once all of the services above are running normally, the frontend and backend services can be deployed.
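Kubernetes has no direct counterpart to the compose `depends_on` setting, so this ordering is otherwise enforced by hand. One possible way to encode it in a manifest is an init container that blocks until the dependencies accept TCP connections. The sketch below is an assumption of mine, not part of the original deployment: the container name and the `busybox:1.36` image are hypothetical choices, and it relies on that image's `nc` supporting the `-z` flag.

```yaml
# Hypothetical replacement for `initContainers: []` in the backend StatefulSet.
initContainers:
  - name: wait-for-deps # illustrative name
    image: busybox:1.36
    command:
      - sh
      - -c
      - |
        # Retry every 2 seconds until MySQL and Redis accept connections.
        until nc -z bisheng-mysql-6kgj.bisheng-zxp 3306; do sleep 2; done
        until nc -z bisheng-redis-gx43.bisheng-zxp 6379; do sleep 2; done
```

The main container only starts after the init container exits successfully, which approximates the `service_healthy` conditions from the compose file without requiring a manual deployment order.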

8. Deploy the backend service

Original docker-compose definition:

backend:
  container_name: bisheng-backend
  image: dataelement/bisheng-backend:latest
  ports:
    - "7860:7860"
  environment:
    TZ: Asia/Shanghai
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/bisheng/config/config.yaml:/app/bisheng/config.yaml
    - ${DOCKER_VOLUME_DIRECTORY:-.}/data/bisheng:/app/data
  security_opt:
    - seccomp:unconfined
  command: bash -c "uvicorn bisheng.main:app --host 0.0.0.0 --port 7860 --no-access-log --workers 2" # --workers sets the number of worker processes, to increase concurrency
  restart: on-failure
  healthcheck:
    test: ["CMD", "curl", "-f", "http://localhost:7860/health"]
    start_period: 30s
    interval: 90s
    timeout: 30s
    retries: 3
  depends_on:
    mysql:
      condition: service_healthy
    redis:
      condition: service_healthy
    office:
      condition: service_started

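The resulting Kubernetes YAML

1. Add the backend configuration file. Be sure to change the database and Redis connection addresses to the addresses of the services deployed in Kubernetes; if the passwords have changed, update them as well.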

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: bisheng-zxp
  labels: {}
  name: bisheng-backend-cnf
data:
  config.yaml: |
    # Database configuration. The encrypted string below corresponds to the password 1234.
    # For password encryption, see https://dataelem.feishu.cn/wiki/BSCcwKd4Yiot3IkOEC8cxGW7nPc#Gxitd1xEeof1TzxdhINcGS6JnXd
    database_url: "mysql+pymysql://root:gAAAAABlp4b4c59FeVGF_OQRVf6NOUIGdxq8246EBD-b0hdK_jVKRs1x4PoAn0A6C5S6IiFKmWn0Nm5eBUWu-7jxcqw6TiVjQA==@bisheng-mysql-6kgj.bisheng-zxp:3306/bisheng?charset=utf8mb4"
    # Cache configuration: redis://[[username]:[password]]@localhost:6379/0
    # Standalone mode:
    redis_url: "redis://bisheng-redis-gx43.bisheng-zxp:6379/1"
    # Cluster mode or sentinel mode (choose only one):
    # redis_url:
    #   mode: "cluster"
    #   startup_nodes:
    #     - {"host": "192.168.106.115", "port": 6002}
    #   password: encrypt(gAAAAABlp4b4c59FeVGF_OQRVf6NOUIGdxq8246EBD-b0hdK_jVKRs1x4PoAn0A6C5S6IiFKmWn0Nm5eBUWu-7jxcqw6TiVjQA==)
    #   # sentinel
    #   mode: "sentinel"
    #   sentinel_hosts: [("redis", 6379)]
    #   sentinel_master: "mymaster"
    #   sentinel_password: encrypt(gAAAAABlp4b4c59FeVGF_OQRVf6NOUIGdxq8246EBD-b0hdK_jVKRs1x4PoAn0A6C5S6IiFKmWn0Nm5eBUWu-7jxcqw6TiVjQA==)
    #   db: 1
    environment:
      env: dev
      uns_support: ['png','jpg','jpeg','bmp','doc', 'docx', 'ppt', 'pptx', 'xls', 'xlsx', 'txt', 'md', 'html', 'pdf', 'csv', 'tiff']

2. Deploy the service

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-backend
  name: bisheng-backend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bisheng-backend
  template:
    metadata:
      labels:
        app: bisheng-backend
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-9tnx7d
          imagePullPolicy: IfNotPresent
          image: 'dataelement/bisheng-backend:latest'
          ports:
            - name: http-0
              protocol: TCP
              containerPort: 7860
          command:
            - bash
          args:
            - '-c'
            - >-
              uvicorn bisheng.main:app --host 0.0.0.0 --port 7860 --no-access-log
              --workers 2
          volumeMounts:
            - readOnly: false
              mountPath: /app/data
              name: bisheng-backend
            - name: volume-roavut
              readOnly: true
              mountPath: /app/bisheng/config.yaml
              subPath: config.yaml
      serviceAccount: default
      initContainers: []
      volumes:
        - name: volume-roavut
          configMap:
            name: bisheng-backend-cnf
            items:
              - key: config.yaml
                path: config.yaml
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: bisheng-backend-mx3x
  volumeClaimTemplates:
    - spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: ebs
      metadata:
        name: bisheng-backend
        namespace: bisheng-zxp
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-backend
  name: bisheng-backend-mx3x
  annotations:
    kubesphere.io/alias-name: bisheng-backend
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: bisheng-backend
  ports:
    - name: http-0
      protocol: TCP
      port: 7860
      targetPort: 7860
  clusterIP: None
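The compose healthcheck for the backend (an HTTP request to `/health` on port 7860) can be expressed as an HTTP probe in Kubernetes. The fragment below is a sketch that reuses the path and timings from the compose file; it is a suggestion that was not part of the deployment described here, and it belongs under the backend container entry.

```yaml
# Hypothetical addition to the backend container; values mirror the compose healthcheck.
readinessProbe:
  httpGet:
    path: /health
    port: 7860
  initialDelaySeconds: 30 # compose start_period
  periodSeconds: 90       # compose interval
  timeoutSeconds: 30      # compose timeout
  failureThreshold: 3     # compose retries
```

A 90-second period is slow for a readiness check, so a shorter `periodSeconds` may be preferable in practice; the value here is kept only to stay faithful to the compose file.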

9. Create the frontend service

Original docker-compose definition:

frontend:
  container_name: bisheng-frontend
  image: dataelement/bisheng-frontend:latest
  ports:
    - "3001:3001"
  environment:
    TZ: Asia/Shanghai
  volumes:
    - ${DOCKER_VOLUME_DIRECTORY:-.}/nginx/nginx.conf:/etc/nginx/nginx.conf
    - ${DOCKER_VOLUME_DIRECTORY:-.}/nginx/conf.d:/etc/nginx/conf.d
  restart: on-failure
  depends_on:
    - backend

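1. Add the configuration files. Note that the upstream service addresses in the nginx configuration (the proxy_pass targets) must be changed to the Kubernetes service addresses.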

kind: ConfigMap
apiVersion: v1
metadata:
  name: bisheng-nginx
  namespace: bisheng-zxp
  annotations:
    kubesphere.io/creator: admin
data:
  default.conf: |-
    # The following must be added inside the http block to support WebSocket
    map $http_upgrade $connection_upgrade {
        default upgrade;
        '' close;
    }

    server {
        gzip on;
        gzip_comp_level 2;
        gzip_min_length 1000;
        gzip_types text/xml text/css;
        gzip_http_version 1.1;
        gzip_vary on;
        gzip_disable "MSIE [4-6] \.";

        listen 3001;

        location / {
            root /usr/share/nginx/html;
            index index.html index.htm;
            try_files $uri $uri/ /index.html =404;
            add_header X-Frame-Options SAMEORIGIN;
        }

        location /api {
            proxy_pass http://bisheng-backend-mx3x.bisheng-zxp:7860;
            proxy_read_timeout 300s;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;
            client_max_body_size 50m;
            add_header Access-Control-Allow-Origin $host;
            add_header X-Frame-Options SAMEORIGIN;
        }

        location /bisheng {
            proxy_pass http://milvus-minio-uq6k.bisheng-zxp:9000;
        }
    }
  nginx.conf: |-
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log notice;
    pid /var/run/nginx.pid;
    events {
        worker_connections 1024;
    }
    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        sendfile on;
        #tcp_nopush on;
        keepalive_timeout 65;
        #gzip on;
        include /etc/nginx/conf.d/*.conf;
    }
  websocket.conf: |-
    # The following must be added inside the http block to support WebSocket
    map $http_upgrade $connection_upgrade {
        default upgrade;
        '' close;
    }

    server {
        gzip on;
        gzip_comp_level 2;
        gzip_min_length 1000;
        gzip_types text/xml text/css;
        gzip_http_version 1.1;
        gzip_vary on;
        gzip_disable "MSIE [4-6] \.";

        listen 8443;
        location /api {
            proxy_pass http://bisheng-backend-mx3x.bisheng-zxp:7860;
            proxy_read_timeout 300s;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;
            client_max_body_size 50m;
        }
    }

2. Deploy the service

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-frontend
  name: bisheng-frontend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bisheng-frontend
  template:
    metadata:
      labels:
        app: bisheng-frontend
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
    spec:
      containers:
        - name: container-6iebk7
          imagePullPolicy: IfNotPresent
          image: 'dataelement/bisheng-frontend:latest'
          ports:
            - name: port-3001
              protocol: TCP
              containerPort: 3001
          env:
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - name: volume-oxx35k
              readOnly: true
              mountPath: /etc/nginx/nginx.conf
              subPath: nginx.conf
            - name: volume-trlzgf
              readOnly: true
              mountPath: /etc/nginx/conf.d
      serviceAccount: default
      initContainers: []
      volumes:
        - name: volume-oxx35k
          configMap:
            name: bisheng-nginx
            items:
              - key: nginx.conf
                path: nginx.conf
        - name: volume-trlzgf
          configMap:
            name: bisheng-nginx
            items:
              - key: default.conf
                path: default.conf
              - key: websocket.conf
                path: websocket.conf
      imagePullSecrets: null
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 0
  serviceName: bisheng-frontend-rzhg
---
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  labels:
    app: bisheng-frontend
  name: bisheng-frontend-rzhg
  annotations:
    kubesphere.io/alias-name: bisheng-frontend
    kubesphere.io/serviceType: statefulservice
spec:
  sessionAffinity: None
  selector:
    app: bisheng-frontend
  ports:
    - name: port-3001
      protocol: TCP
      port: 3001
      targetPort: 3001
  clusterIP: None

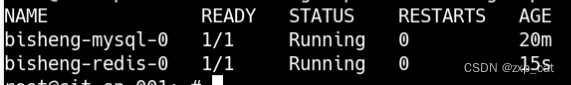

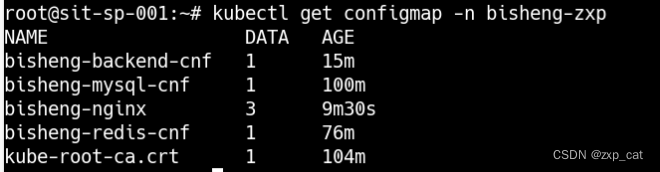

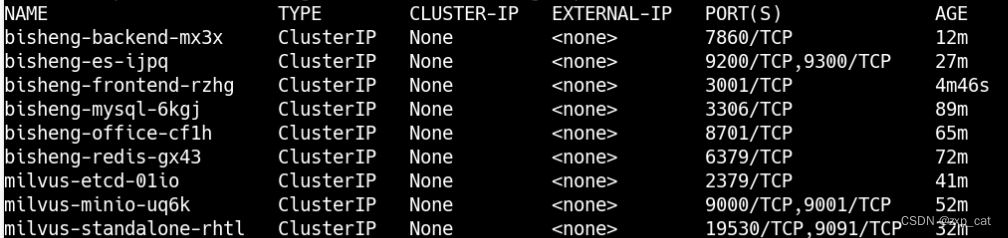

Overall status of the running services.
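All of the Services in this post are headless (`clusterIP: None`), so they are only reachable from inside the cluster. To open the frontend in a browser, it needs to be exposed in some way. The sketch below shows one option, an additional NodePort Service; the Service name and the `nodePort` value are hypothetical, and an Ingress or a KubeSphere route would work equally well.

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: bisheng-zxp
  name: bisheng-frontend-nodeport # hypothetical name
spec:
  type: NodePort
  selector:
    app: bisheng-frontend
  ports:
    - name: port-3001
      protocol: TCP
      port: 3001
      targetPort: 3001
      nodePort: 30001 # assumed value; must fall within the cluster's NodePort range (30000-32767 by default)
```

With this applied, the frontend should be reachable at port 30001 on any node's IP address, while the headless Service continues to serve in-cluster traffic.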

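10. Summary

This walkthrough only covers the essential services, which is enough to get bisheng running as a whole. The rt and uns services, along with the configuration changes for the platform's related applications, will be covered later. Corrections are welcome, and thanks for reading.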
