Nacos in Practice

Author: 行癫 (unauthorized reproduction prohibited)

I: Introduction to Nacos

1. Overview

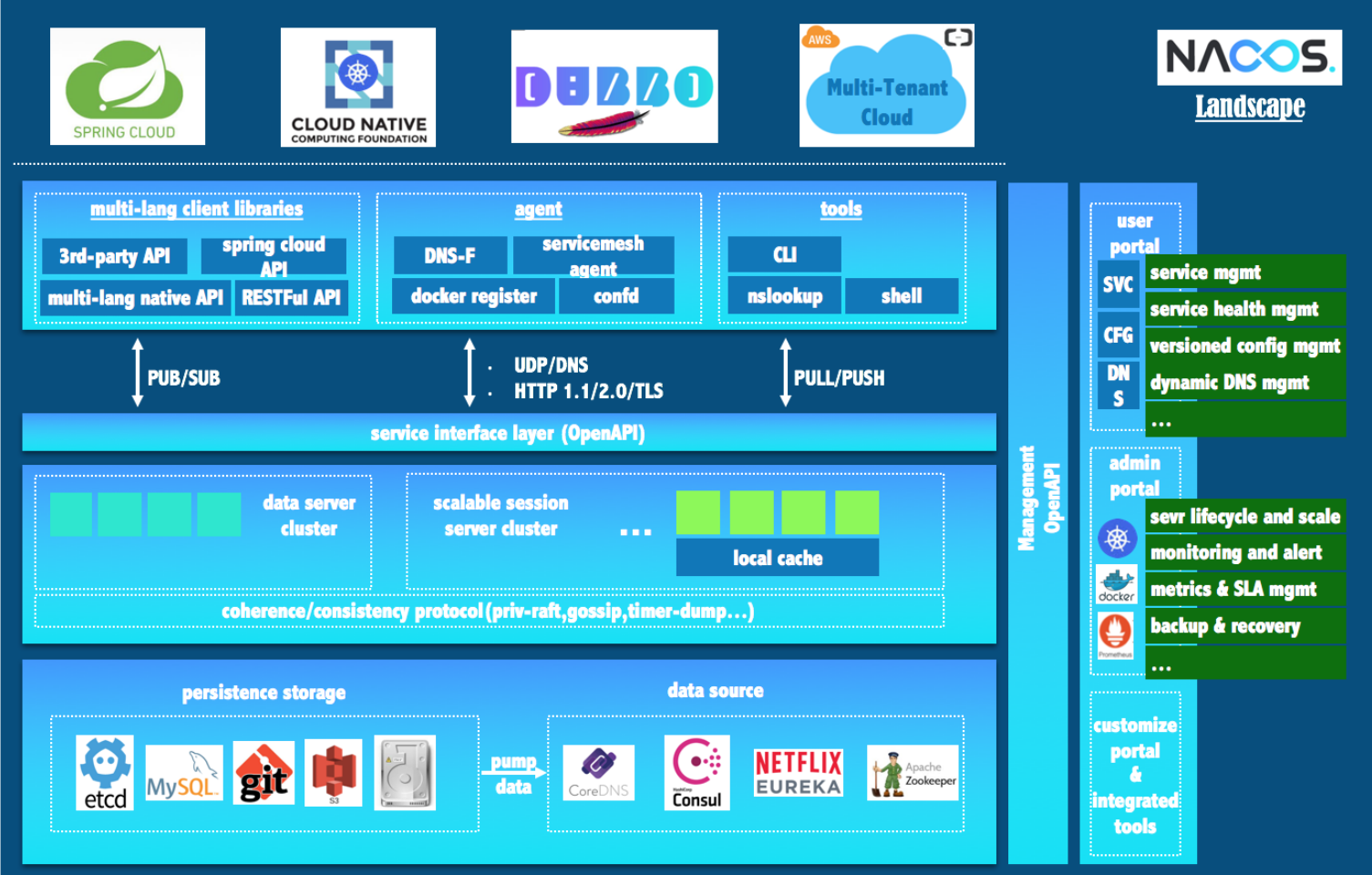

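Nacos, short for Dynamic Naming and Configuration Service, is a platform for dynamic service discovery, configuration management and service management. It makes cloud-native applications easier to build and helps you discover, configure and manage microservices. Its feature set is small and easy to use, and covers dynamic service discovery, service configuration, service metadata and traffic management. With it, microservice platforms can be built, delivered and managed with less effort. Nacos serves as service infrastructure for modern, service-centric application architectures such as the microservice and cloud-native paradigms.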

II: Deploying Nacos on Kubernetes

1. Environment

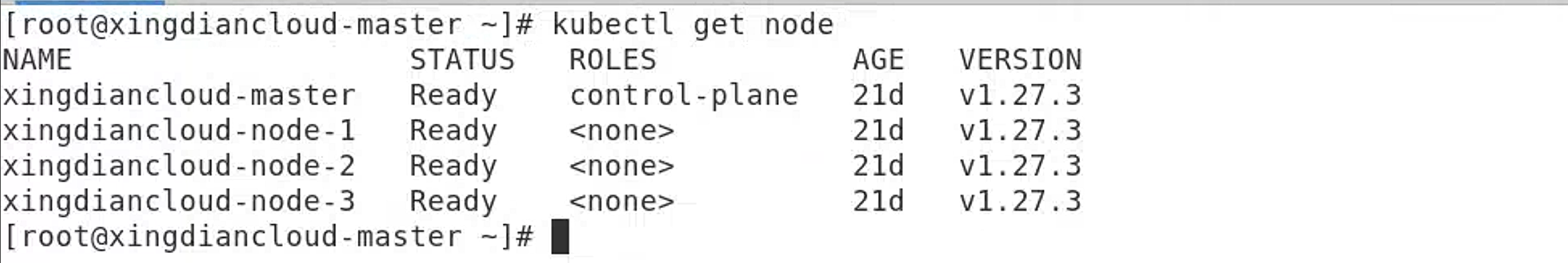

Kubernetes cluster: v1.27.3

Nacos version: 2.2.0

2. Deploying Nacos

Preparing persistent storage

Deploying the NFS server:

```shell
[root@nfs-server ~]# yum -y install nfs-utils
[root@nfs-server ~]# systemctl restart nfs
```

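Create the shared directory /data/nfs-share (used by Nacos).

Create the shared directory /data/mysql (used by MySQL).

The NFS `path` values in the manifests below must point to directories that the NFS server actually exports. Examples are `/opt/mysql_nacos` and `/opt/mysql` in the two MySQL deployments.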

Share script:

```shell
[root@xingdiancloud nacos]# cat nfs.sh
#!/bin/bash
# Create an NFS shared directory and add it to /etc/exports
read -p "Enter the shared directory to create: " dir
# If the directory already exists, keep asking until a new one is given
while [ -d "$dir" ]; do
    read -p "Directory already exists, enter another shared directory: " dir
done
mkdir -p "$dir"
echo "Shared directory created"
read -p "Enter the clients allowed to mount it: " ips
echo "$dir ${ips}(rw,sync,no_root_squash)" >> /etc/exports
# Count entries whose first field is exactly $dir (plain grep would also match longer paths)
count=$(awk -v d="$dir" '$1 == d' /etc/exports | wc -l)
if [ "$count" -eq 1 ]; then
    exportfs -rv > /dev/null
    echo "Share configured successfully"
fi
```

For example:

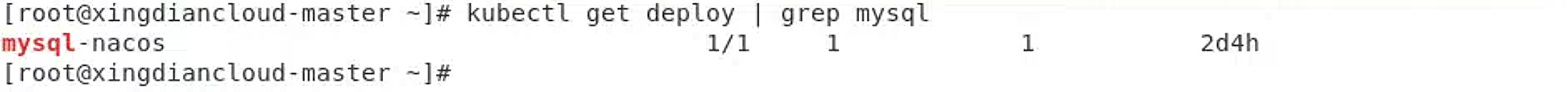
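The interactive script above can also be written as a non-interactive function, which helps when several shares are provisioned at once. This is a minimal sketch. The function name `add_nfs_export` and its optional third argument (the exports file, defaulting to `/etc/exports`) are hypothetical. The function does not call `exportfs -rv`; the caller runs that afterwards.

```shell
# add_nfs_export DIR CLIENTS [EXPORTS_FILE]
# Hypothetical helper: creates DIR and appends "DIR CLIENTS(rw,sync,no_root_squash)"
# to EXPORTS_FILE (default /etc/exports) unless DIR is already exported there.
add_nfs_export() {
    local dir="$1" clients="$2" exports="${3:-/etc/exports}"
    mkdir -p "$dir" || return 1
    # Compare the first field exactly, so /data/mysql does not match /data/mysql2
    if [ -f "$exports" ] && awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$exports"; then
        echo "already exported: $dir"
        return 0
    fi
    echo "$dir ${clients}(rw,sync,no_root_squash)" >> "$exports"
    echo "exported: $dir"
}
```

Usage on the NFS server: `add_nfs_export /data/nfs-share '*' && exportfs -rv`. A second call with the same directory leaves `/etc/exports` unchanged.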

Deploying the MySQL service for Nacos

```shell
[root@xingdiancloud mysql]# cat mysql-nfs.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
  labels:
    name: mysql
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      name: mysql
  template:
    metadata:
      labels:
        name: mysql
    spec:
      containers:
      - name: mysql
        image: nacos/nacos-mysql:5.7
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: mysql-data
          mountPath: /var/lib/mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "root"
        - name: MYSQL_DATABASE
          value: "nacos_devtest"
        - name: MYSQL_USER
          value: "nacos"
        - name: MYSQL_PASSWORD
          value: "nacos"
      volumes:
      - name: mysql-data
        nfs:
          server: 10.9.12.250
          path: /opt/mysql_nacos
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    name: mysql
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    name: mysql
[root@xingdiancloud mysql]# kubectl create -f mysql-nfs.yaml
```

Verification:

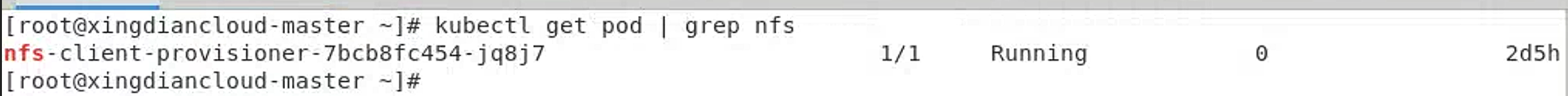
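You can confirm that the `nacos/nacos-mysql:5.7` image initialized the Nacos schema by listing the tables in `nacos_devtest`. The output should include Nacos tables such as `config_info`. This is a minimal sketch. The credentials come from `mysql-nfs.yaml`, and the helper names are hypothetical. `run` prints the command instead of executing it when `DRY_RUN` is set.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is set
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: list the tables in nacos_devtest using the credentials from mysql-nfs.yaml
check_nacos_schema() {
    run kubectl exec deploy/mysql -- \
        mysql -unacos -pnacos nacos_devtest -e 'SHOW TABLES;'
}
```

Run `check_nacos_schema` against the cluster, or `DRY_RUN=1 check_nacos_schema` to preview the command.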

Deploying the NFS client provisioner

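Note: this cluster runs Kubernetes 1.27.3, so it uses version 4.0.2 of the NFS client provisioner (the `nfs-subdir-external-provisioner` image, mirrored to the private registry 10.9.12.201). The older provisioner referenced by the Nacos deployment files relies on the `selfLink` field, which Kubernetes stopped populating by default in 1.20. That provisioner therefore only works on older clusters; on those, the one provided by Nacos can be used as-is.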
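The version rule above can be checked in a script. This is a sketch that assumes GNU `sort -V` is available, and the function name is hypothetical. The 1.20 threshold comes from the `selfLink` removal; it is not a Nacos-specific limit.

```shell
# needs_new_provisioner VERSION
# Hypothetical helper: succeeds (exit 0) for Kubernetes 1.20 or later, where the legacy
# selfLink-based nfs-client-provisioner no longer works and v4.x should be used instead.
needs_new_provisioner() {
    local version="${1#v}"   # accept both "v1.27.3" and "1.27.3"
    # sort -V orders version strings; if 1.20 sorts first, VERSION is >= 1.20
    [ "$(printf '%s\n' 1.20 "$version" | sort -V | head -n1)" = "1.20" ]
}
```

For example, `needs_new_provisioner v1.27.3 && echo "use provisioner v4.0.2"`.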

Create the RBAC objects:

```shell
[root@xingdiancloud deploy]# cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
[root@xingdiancloud deploy]# kubectl create -f rbac.yaml
```

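Set the NFS server address (10.9.12.250) and the shared directory (/data/nfs-share). Each appears twice in the Deployment: in the `NFS_SERVER`/`NFS_PATH` environment variables and in the `nfs-client-root` volume.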

```shell
[root@xingdiancloud deploy]# cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: 10.9.12.201/xingdian/nfs:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            # Must match the "provisioner" field of the StorageClass
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 10.9.12.250
            - name: NFS_PATH
              value: /data/nfs-share
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.9.12.250
            path: /data/nfs-share
[root@xingdiancloud deploy]# kubectl create -f deployment.yaml
```

Create the StorageClass:

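```shell
[root@xingdiancloud deploy]# cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
# Must match PROVISIONER_NAME in deployment.yaml
provisioner: fuseim.pri/ifs
parameters:
  # "false": delete the backing directory when the PVC is deleted instead of archiving it
  archiveOnDelete: "false"
[root@xingdiancloud deploy]# kubectl create -f class.yaml
```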

Verification:
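One way to verify this step: check that the provisioner pod is running and the StorageClass exists, then create a small test claim, which should become `Bound` if dynamic provisioning works. This is a sketch. The claim name `nfs-test-claim` and the helper names are hypothetical. `run` prints the command instead of executing it when `DRY_RUN` is set.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is set
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: provisioner pod status and StorageClass presence
verify_nfs_provisioner() {
    run kubectl get pods -l app=nfs-client-provisioner -o wide
    run kubectl get storageclass managed-nfs-storage
}

# Hypothetical test claim; check it with "kubectl get pvc nfs-test-claim",
# then remove it with "kubectl delete pvc nfs-test-claim"
create_test_pvc() {
    run kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test-claim
spec:
  storageClassName: managed-nfs-storage
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 1Mi
EOF
}
```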

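Deploying the Nacos service

Note: Nacos is deployed as a StatefulSet. Each replica therefore gets a stable name (nacos-0, nacos-1, nacos-2) and its own PersistentVolumeClaim created from the `managed-nfs-storage` StorageClass.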

```shell
[root@xingdiancloud nacos]# cat nacos-pvc-nfs.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: nacos-headless
  labels:
    app: nacos
spec:
  publishNotReadyAddresses: true
  ports:
    - port: 8848
      name: server
      targetPort: 8848
    - port: 9848
      name: client-rpc
      targetPort: 9848
    - port: 9849
      name: raft-rpc
      targetPort: 9849
    ## Election port kept for compatibility with Nacos 1.4.x
    - port: 7848
      name: old-raft-rpc
      targetPort: 7848
  clusterIP: None
  selector:
    app: nacos
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nacos-cm
data:
  mysql.host: "mysql"
  mysql.db.name: "nacos_devtest"
  mysql.port: "3306"
  mysql.user: "nacos"
  mysql.password: "nacos"
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
spec:
  podManagementPolicy: Parallel
  serviceName: nacos-headless
  replicas: 3
  template:
    metadata:
      labels:
        app: nacos
      annotations:
        pod.alpha.kubernetes.io/initialized: "true"
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - nacos
              topologyKey: "kubernetes.io/hostname"
      serviceAccountName: nfs-client-provisioner
      initContainers:
        - name: peer-finder-plugin-install
          image: nacos/nacos-peer-finder-plugin:1.1
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: /home/nacos/plugins/peer-finder
              name: data
              subPath: peer-finder
      containers:
        - name: nacos
          imagePullPolicy: Always
          image: nacos/nacos-server:v2.2.0
          resources:
            requests:
              memory: "2Gi"
              cpu: "500m"
          ports:
            - containerPort: 8848
              name: client-port
            - containerPort: 9848
              name: client-rpc
            - containerPort: 9849
              name: raft-rpc
            - containerPort: 7848
              name: old-raft-rpc
          env:
            - name: NACOS_REPLICAS
              value: "3"
            - name: SERVICE_NAME
              value: "nacos-headless"
            - name: DOMAIN_NAME
              value: "cluster.local"
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
            - name: MYSQL_SERVICE_HOST
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.host
            - name: MYSQL_SERVICE_DB_NAME
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.db.name
            - name: MYSQL_SERVICE_PORT
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.port
            - name: MYSQL_SERVICE_USER
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.user
            - name: MYSQL_SERVICE_PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.password
            - name: SPRING_DATASOURCE_PLATFORM
              value: "mysql"
            - name: NACOS_SERVER_PORT
              value: "8848"
            - name: NACOS_APPLICATION_PORT
              value: "8848"
            - name: PREFER_HOST_MODE
              value: "hostname"
          volumeMounts:
            - name: data
              mountPath: /home/nacos/plugins/peer-finder
              subPath: peer-finder
            - name: data
              mountPath: /home/nacos/data
              subPath: data
            - name: data
              mountPath: /home/nacos/logs
              subPath: logs
  volumeClaimTemplates:
    - metadata:
        name: data
        annotations:
          volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
      spec:
        accessModes: [ "ReadWriteMany" ]
        resources:
          requests:
            storage: 20Gi
  selector:
    matchLabels:
      app: nacos
[root@xingdiancloud nacos]# kubectl create -f nacos-pvc-nfs.yaml
```

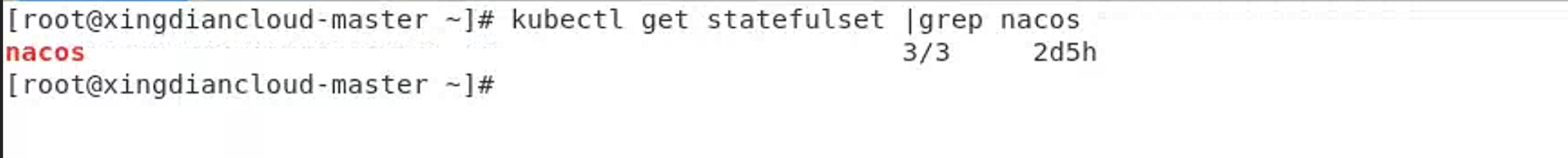

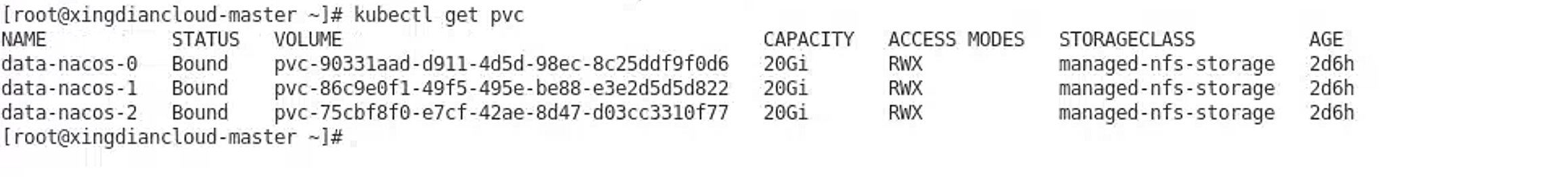

Verification:
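The three replicas find each other through the headless Service `nacos-headless`. Because of that Service, every StatefulSet pod gets a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<domain>`. The peer-finder plugin installed by the init container discovers these names at runtime. This sketch only prints the member addresses you should expect to see, for example in the console's cluster node list. The function is hypothetical, and its defaults are the values from `nacos-pvc-nfs.yaml`.

```shell
# nacos_peers REPLICAS [SERVICE] [NAMESPACE] [DOMAIN] [PORT]
# Hypothetical helper: prints the per-pod DNS name and port of each StatefulSet replica
nacos_peers() {
    local replicas="$1" svc="${2:-nacos-headless}" ns="${3:-default}"
    local domain="${4:-cluster.local}" port="${5:-8848}" i
    for ((i = 0; i < replicas; i++)); do
        echo "nacos-${i}.${svc}.${ns}.svc.${domain}:${port}"
    done
}
```

For example, `nacos_peers 3` prints one line per replica, starting with `nacos-0.nacos-headless.default.svc.cluster.local:8848`.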

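Exposing Nacos outside the cluster

Note:

For security reasons, Nacos should not normally be exposed outside the cluster. In this lab environment, however, a separate Service is created so that Nacos can be configured through its web console.

The ClusterIP is pinned so that it stays the same if the Service is deleted and recreated.

Both 8848 (HTTP and console) and 9848 (client gRPC) are exposed.

Mind the selector: it must match the Nacos pod label (`app: nacos`), otherwise the Service is not bound to the Nacos pods.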
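Nacos 2.x does not configure its extra ports independently. It derives them from the main port (`NACOS_SERVER_PORT`, 8848 here) using fixed offsets, which is why 9848, 9849 and 7848 appear throughout the manifests. By default a 2.x client assumes its gRPC port is the HTTP port it was given plus 1000. An external client pointed at NodePort 30050 would therefore look for gRPC on 31050 rather than 30051. Keep this in mind if the NodePort Service is used for anything beyond the console. The helper below is hypothetical.

```shell
# nacos_ports MAIN_PORT
# Hypothetical helper: prints the ports Nacos 2.x derives from its main HTTP port
nacos_ports() {
    local main="$1"
    echo "http=$main"
    echo "client-grpc=$((main + 1000))"   # client -> server gRPC (9848 for 8848)
    echo "server-grpc=$((main + 1001))"   # server <-> server gRPC (9849 for 8848)
    echo "raft=$((main - 1000))"          # Raft election port (7848 for 8848)
}
```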

```shell
[root@xingdiancloud nacos]# cat svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nacos
  name: nacos-ss
spec:
  clusterIP: 10.96.211.199
  clusterIPs:
  - 10.96.211.199
  ports:
  - name: imdgss
    port: 8848
    protocol: TCP
    targetPort: 8848
    nodePort: 30050
  - name: xingdian
    port: 9848
    protocol: TCP
    targetPort: 9848
    nodePort: 30051
  selector:
    app: nacos
  type: NodePort
[root@xingdiancloud nacos]# kubectl create -f svc.yaml
```

Console URL: `<node IP>:<NodePort>/nacos` (the NodePort for 8848 is 30050 in `svc.yaml`)

Username: nacos

Password: nacos
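A quick reachability check for the console is sketched below. It requests the console page through the NodePort, follows redirects, and prints only the HTTP status code. A `200` indicates that the Service is routing to a Nacos pod. `10.9.12.10` in the usage is a placeholder node address, and the helper names are hypothetical. `run` prints the command instead of executing it when `DRY_RUN` is set.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is set
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# nacos_console_check NODE_IP [NODE_PORT]
# Hypothetical helper: prints the HTTP status code of the Nacos console page
nacos_console_check() {
    local node_ip="$1" port="${2:-30050}"
    run curl -sSL -o /dev/null -w '%{http_code}' "http://${node_ip}:${port}/nacos/"
}
```

Usage: `nacos_console_check 10.9.12.10`.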


Setting up the application database

```shell
[root@xingdiancloud mysql]# cat mysql-cloud.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-cloud
  labels:
    name: mysql-cloud
spec:
  replicas: 1
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      name: mysql-cloud
  template:
    metadata:
      labels:
        name: mysql-cloud
    spec:
      containers:
      - name: mysql
        image: nacos/mysql:5.7
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: mysql-data-1
          mountPath: /var/lib/mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        - name: MYSQL_DATABASE
          value: "cloud"
        - name: MYSQL_USER
          value: "cloud"
        - name: MYSQL_PASSWORD
          value: "123456"
      volumes:
      - name: mysql-data-1
        nfs:
          server: 10.9.12.250
          path: /opt/mysql
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-cloud
  labels:
    name: mysql-cloud
spec:
  type: NodePort
  ports:
  - port: 3306
    targetPort: 3306
    nodePort: 30052
  selector:
    name: mysql-cloud
[root@xingdiancloud mysql]# kubectl create -f mysql-cloud.yaml
```

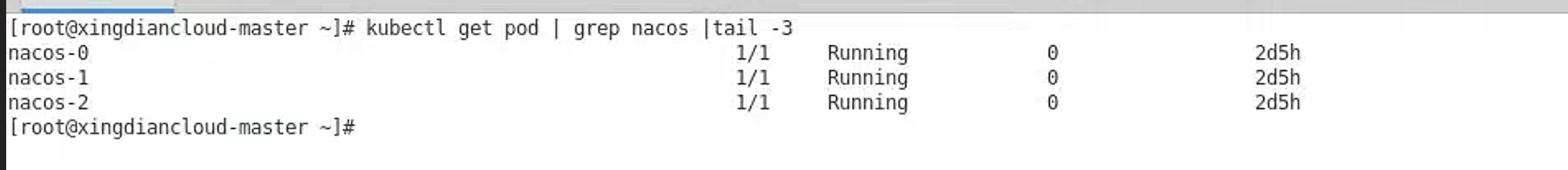

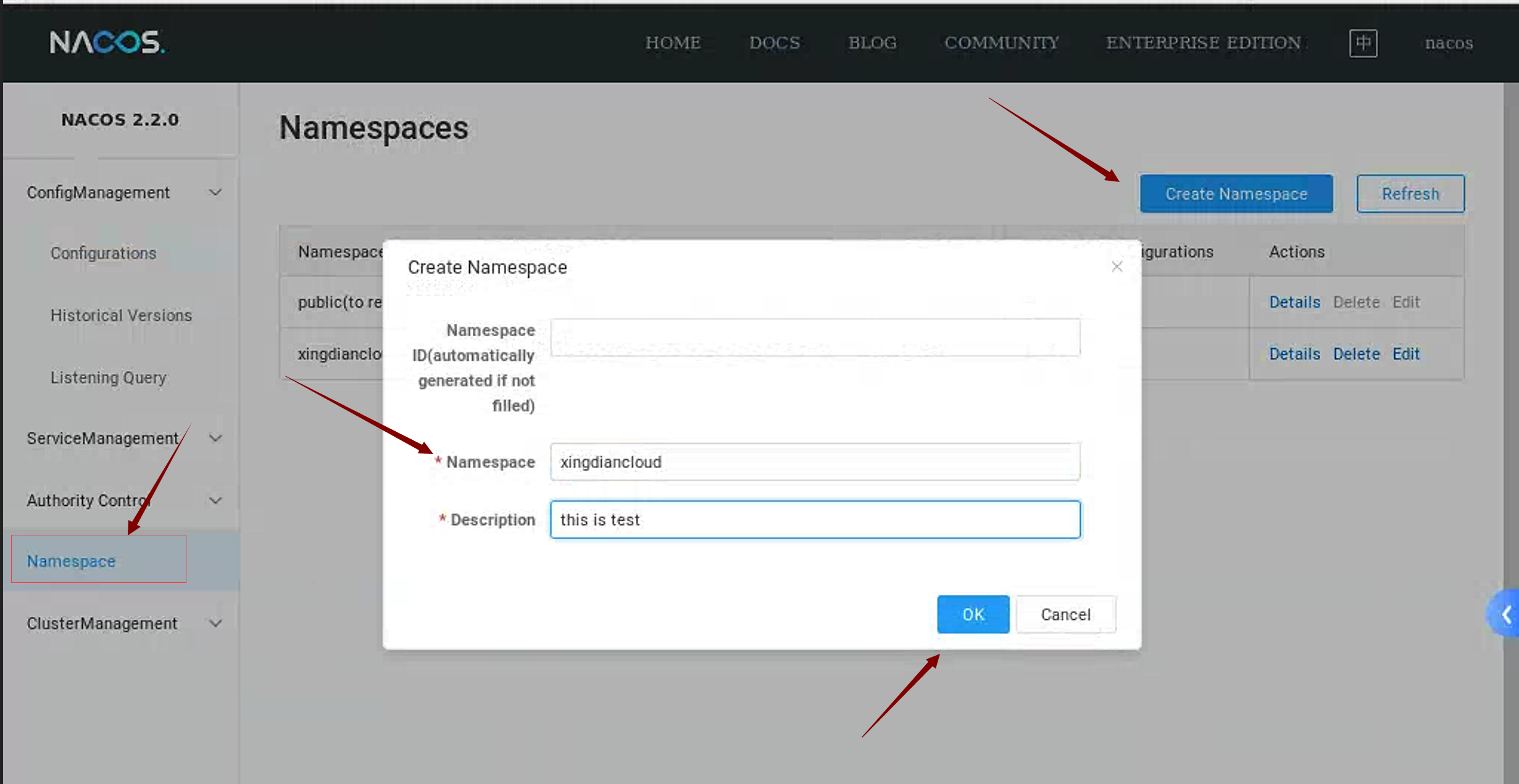

3.配置Nacos

注意:此处的配置为了完成以下应用

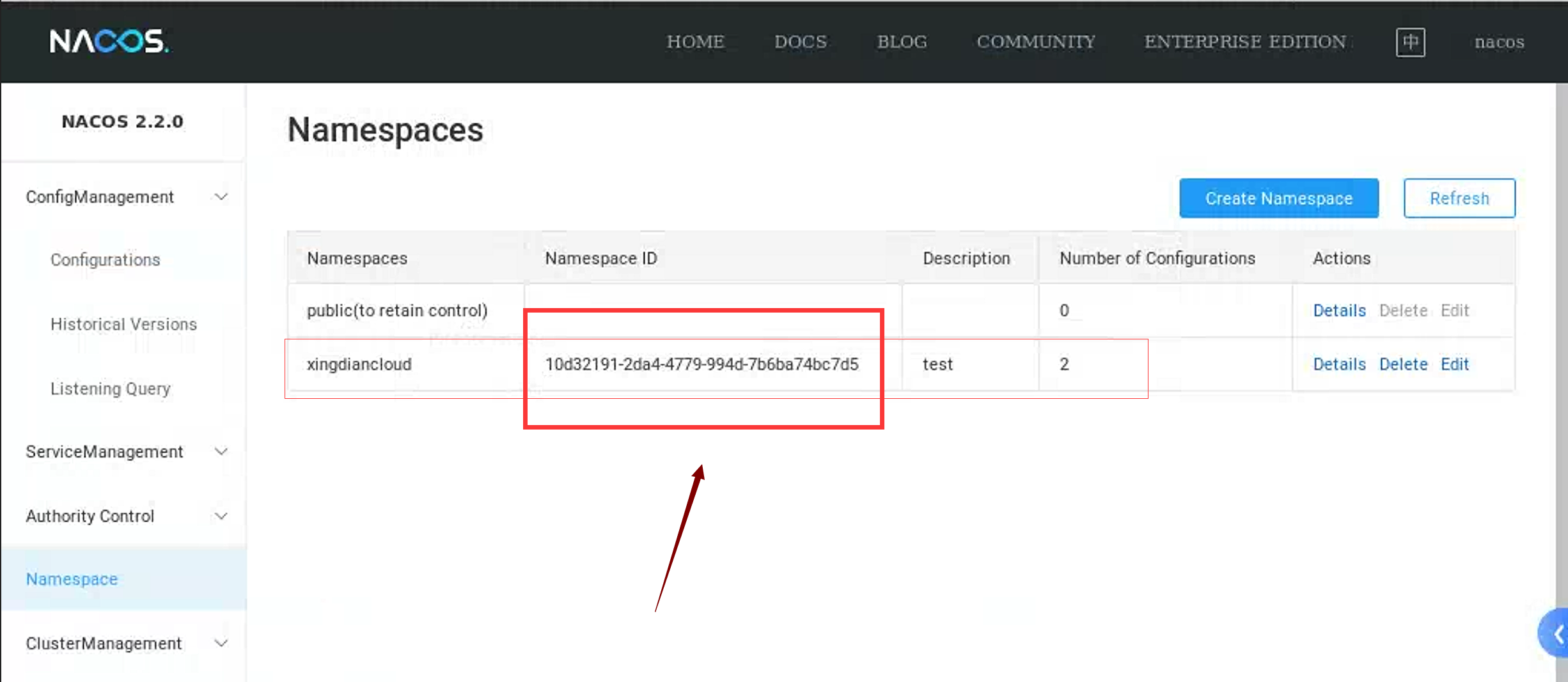

创建Namespaces

备用namespacesID

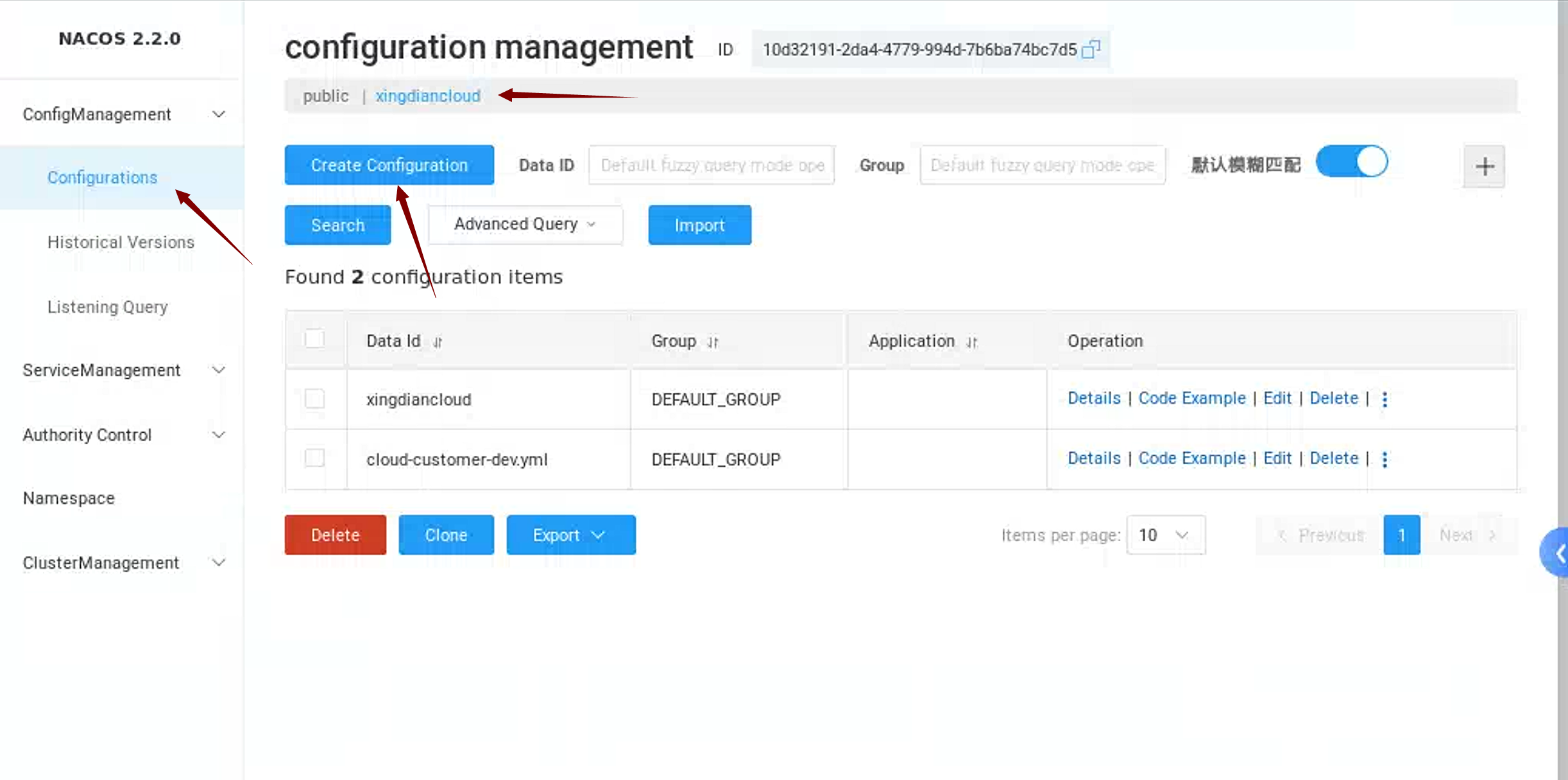

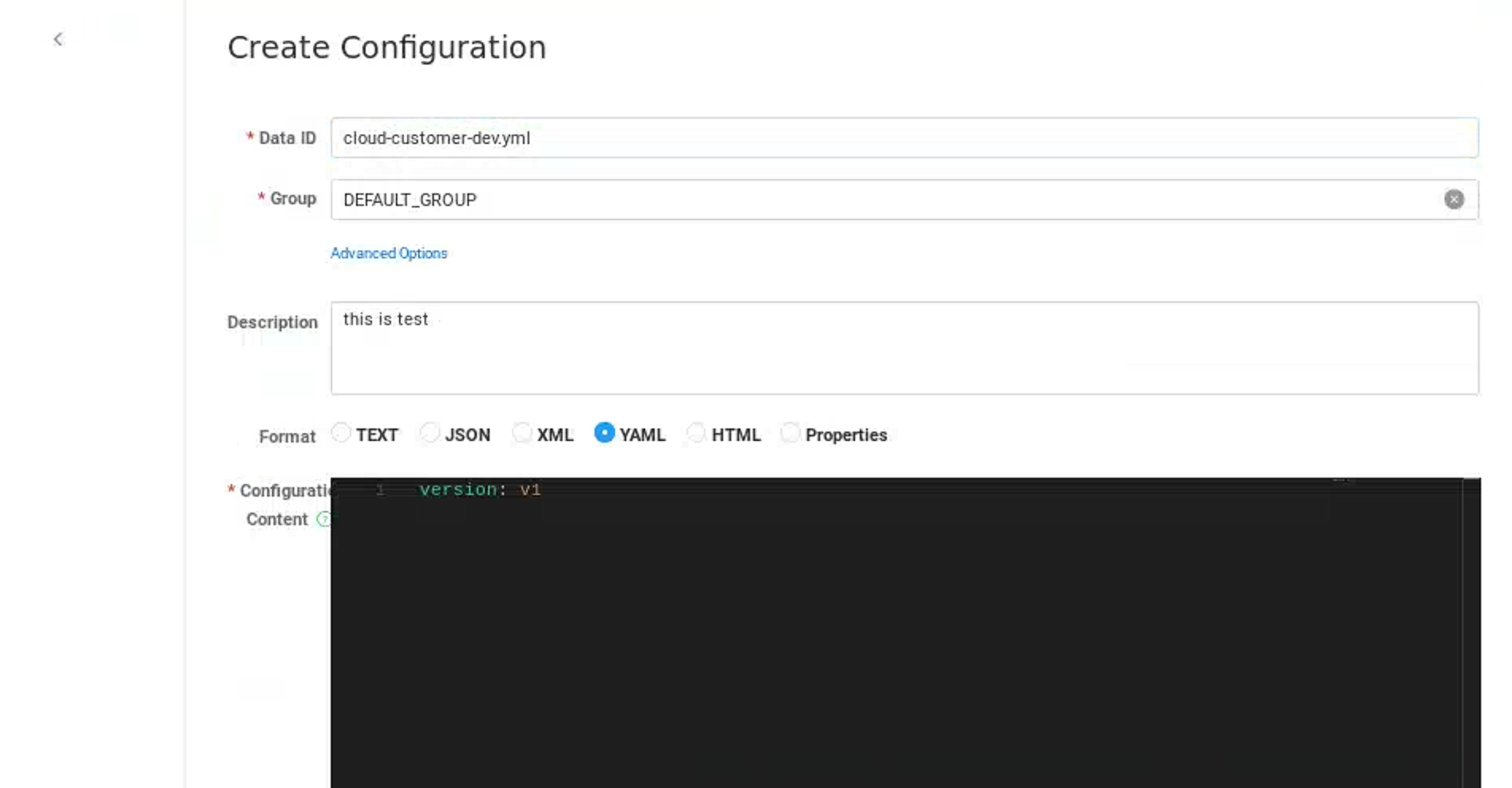

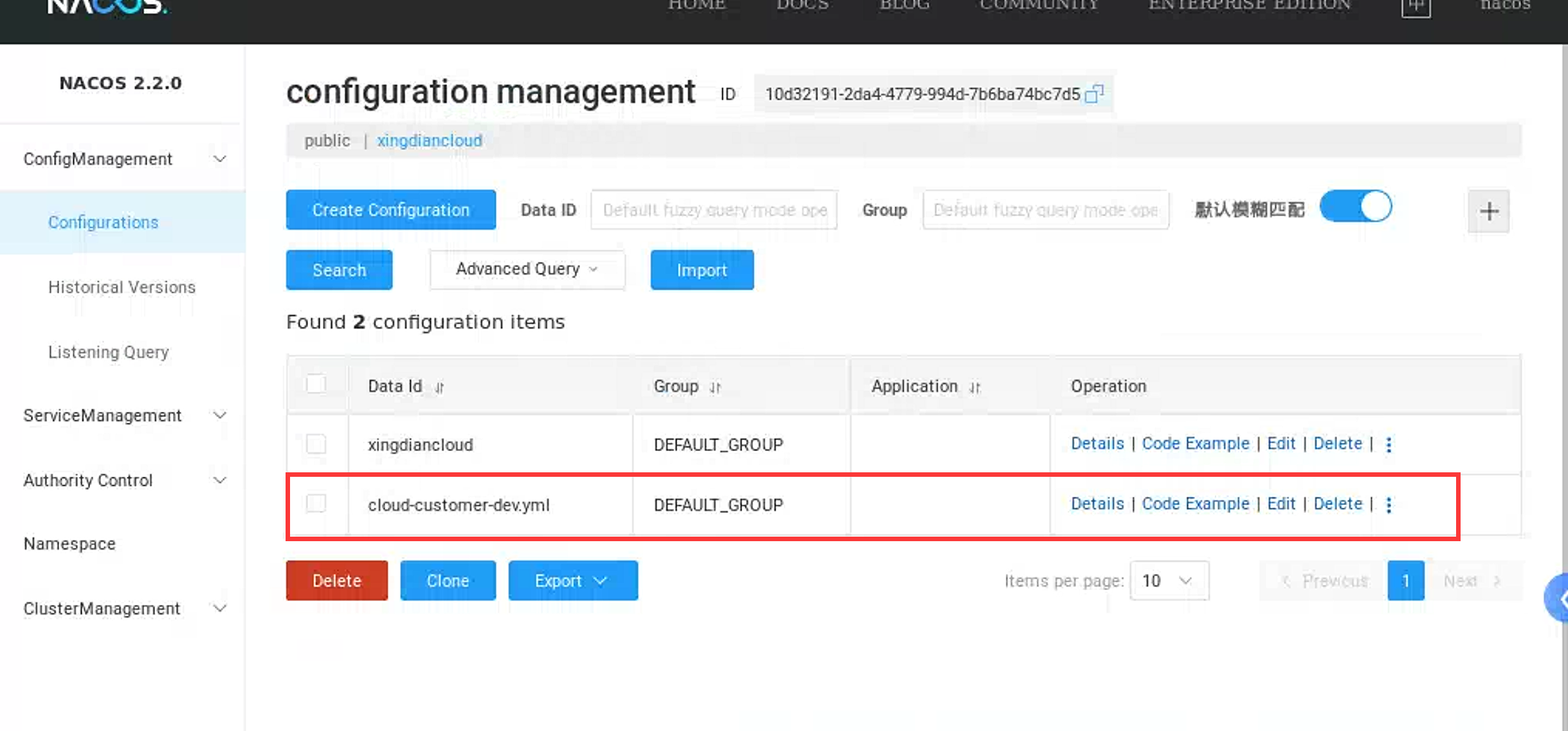

创建配置Configurations

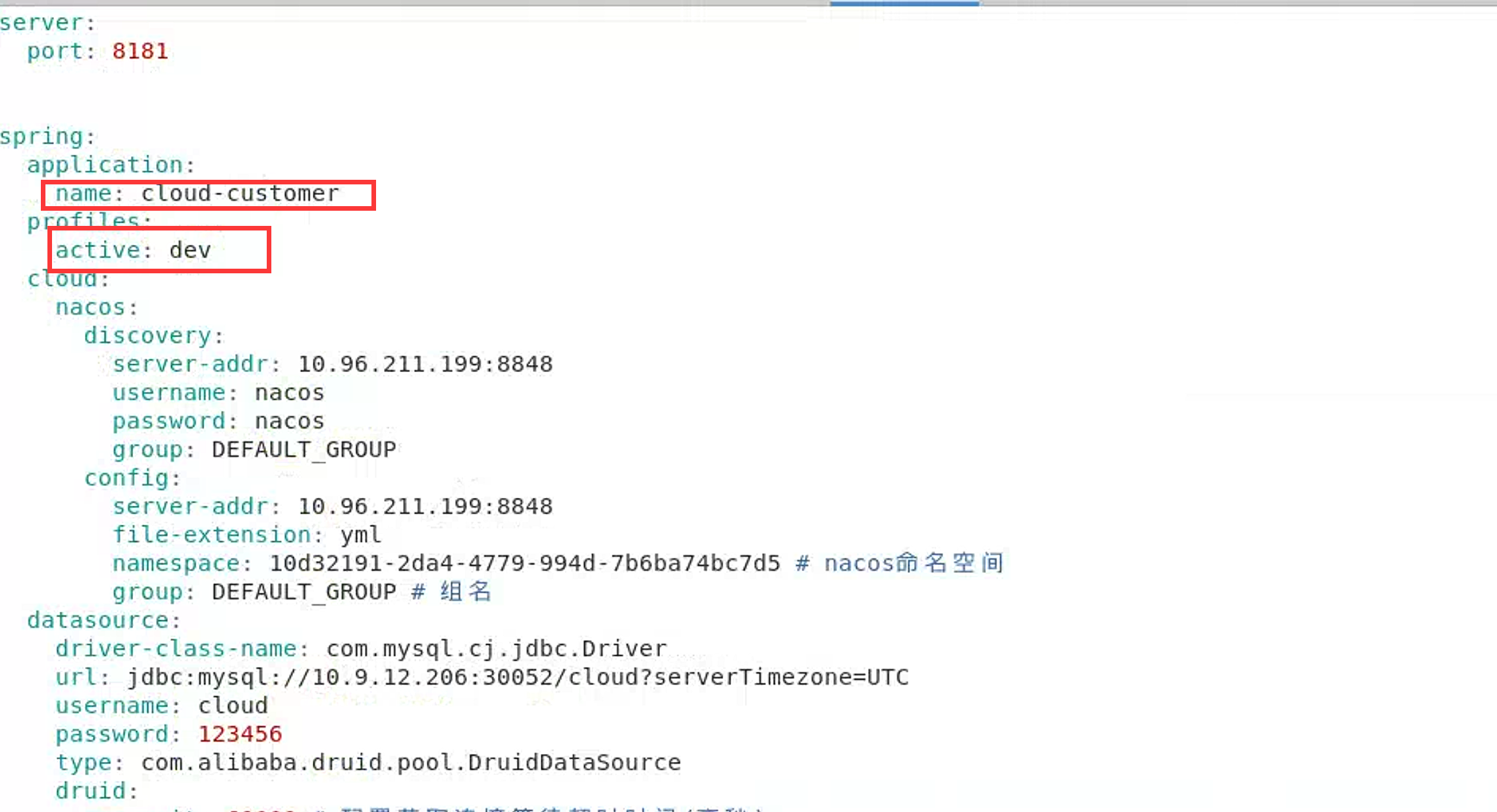

注意:Data ID 来自下图中的标注
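By default, Spring Cloud Alibaba Nacos Config derives the Data ID it looks up as `${prefix}-${spring.profiles.active}.${file-extension}`. The prefix defaults to `spring.application.name`. The `-${profile}` part is dropped when no profile is active, and the extension defaults to `properties`. The sketch below applies that rule and assumes the two projects keep the default naming. The function and the example application names are hypothetical.

```shell
# nacos_data_id PREFIX [PROFILE] [EXTENSION]
# Hypothetical helper: builds the Data ID per Spring Cloud Alibaba's default naming rule
nacos_data_id() {
    local prefix="$1" profile="${2:-}" ext="${3:-properties}"
    if [ -n "$profile" ]; then
        echo "${prefix}-${profile}.${ext}"
    else
        echo "${prefix}.${ext}"
    fi
}
```

For example, `nacos_data_id cloud-provider dev yaml` gives `cloud-provider-dev.yaml`, and `nacos_data_id cloud-provider` gives `cloud-provider.properties`.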

4. Preparing the project

Source repositories:

https://gitee.com/kunyun_code/cloud_customer.git

https://gitee.com/kunyun_code/cloud_provider.git

Update the Nacos address and the database connection settings in the projects.
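To illustrate which settings change, the sketch below prints in-cluster values in Spring Boot properties form. The keys are the standard Spring Cloud Alibaba and Spring Boot property names. Whether the two projects use exactly these keys, or a `bootstrap.yml` instead, is an assumption, so compare against their configuration files. Hosts, ports and credentials come from the manifests above. The namespace ID is the one noted when creating the namespace, and the helper name is hypothetical.

```shell
# print_cloud_settings [NAMESPACE_ID]
# Hypothetical helper: prints in-cluster Nacos and MySQL settings for the demo apps
print_cloud_settings() {
    local ns_id="${1:-<namespace-id>}"
    cat <<EOF
spring.cloud.nacos.discovery.server-addr=nacos-headless:8848
spring.cloud.nacos.config.server-addr=nacos-headless:8848
spring.cloud.nacos.discovery.namespace=${ns_id}
spring.cloud.nacos.config.namespace=${ns_id}
spring.datasource.url=jdbc:mysql://mysql-cloud:3306/cloud
spring.datasource.username=cloud
spring.datasource.password=123456
EOF
}
```

These addresses only resolve inside the cluster. From outside, MySQL is reachable on a node IP at NodePort 30052.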

Building the project

Omitted here.

Dockerfiles for building the images:

```shell
[root@xingdiancloud nacos]# cat Dockerfile
FROM 10.9.12.201/xingdian/centos:7
# ADD extracts the local JDK tarball into /usr/local/jdk1.8.0_211
ADD jdk-8u211-linux-x64.tar.gz /usr/local/
WORKDIR /usr/local
RUN mv jdk1.8.0_211 java
ENV JAVA_HOME=/usr/local/java
ENV PATH=$JAVA_HOME/bin:$PATH
COPY cloud_customer-1.0-SNAPSHOT.jar /
EXPOSE 8181
# Exec form: java runs as PID 1 and receives SIGTERM directly when the pod stops
CMD ["java", "-Xms128m", "-Xmx512m", "-jar", "/cloud_customer-1.0-SNAPSHOT.jar"]
```

```shell
[root@xingdiancloud nacos]# cat Dockerfile_1
FROM 10.9.12.201/xingdian/centos:7
# ADD extracts the local JDK tarball into /usr/local/jdk1.8.0_211
ADD jdk-8u211-linux-x64.tar.gz /usr/local/
WORKDIR /usr/local
RUN mv jdk1.8.0_211 java
ENV JAVA_HOME=/usr/local/java
ENV PATH=$JAVA_HOME/bin:$PATH
# A "ulimit -n" written to /etc/profile does not apply to CMD (it is not a login shell);
# raise the open-file limit through the container runtime instead
COPY cloud_provider-1.0-SNAPSHOT.jar /
EXPOSE 8180
CMD ["java", "-jar", "/cloud_provider-1.0-SNAPSHOT.jar"]
```
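Since the build step is omitted, here is one way to build and push the two images. This is a sketch that assumes the Docker CLI is logged in to the private registry 10.9.12.201, with the JDK tarball and the jar in the current directory. The image names and tags come from the deployment manifests in the next step, and the helper names are hypothetical. `run` prints the command instead of executing it when `DRY_RUN` is set.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is set
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# build_and_push DOCKERFILE IMAGE
# Hypothetical helper: build IMAGE from DOCKERFILE in the current directory, then push it
build_and_push() {
    local dockerfile="$1" image="$2"
    run docker build -f "$dockerfile" -t "$image" . &&
        run docker push "$image"
}
```

Usage: `build_and_push Dockerfile 10.9.12.201/xingdian/cloud-customer:v6` and `build_and_push Dockerfile_1 10.9.12.201/xingdian/cloud_provider:v4`.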

5. Deploying the applications

Deployment manifests:

```shell
[root@xingdiancloud nacos]# cat cloud_1.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: cloud-1
  name: cloud-1
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: cloud-1
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/restartedAt: '2023-07-17T18:16:19+08:00'
      labels:
        k8s.kuboard.cn/name: cloud-1
    spec:
      containers:
        - image: '10.9.12.201/xingdian/cloud-customer:v6'
          imagePullPolicy: IfNotPresent
          name: cloud
          ports:
            - containerPort: 8181
              name: http
              protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: cloud-1
  name: cloud-1
  namespace: default
spec:
  clusterIP: 10.99.204.46
  clusterIPs:
    - 10.99.204.46
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: rf5cws
      nodePort: 30053
      port: 8181
      protocol: TCP
      targetPort: 8181
  selector:
    k8s.kuboard.cn/name: cloud-1
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
  type: NodePort
```

```shell
[root@xingdiancloud nacos]# cat cloud_2.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: cloud-2
  name: cloud-2
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: cloud-2
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: cloud-2
    spec:
      containers:
        - image: '10.9.12.201/xingdian/cloud_provider:v4'
          imagePullPolicy: IfNotPresent
          name: cloud-2
          ports:
            - containerPort: 8180
              name: http
              protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: cloud-2
  name: cloud-2
  namespace: default
spec:
  clusterIP: 10.98.2.186
  clusterIPs:
    - 10.98.2.186
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: dwxakr
      nodePort: 30055
      port: 8180
      protocol: TCP
      targetPort: 8180
  selector:
    k8s.kuboard.cn/name: cloud-2
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
  type: NodePort
```

Publish:

```shell
[root@xingdiancloud nacos]# kubectl create -f cloud_1.yaml
[root@xingdiancloud nacos]# kubectl create -f cloud_2.yaml
```
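Before testing, you can wait for both rollouts to finish. This is a sketch; the helper names and the 120 s timeout are arbitrary choices. `run` prints the command instead of executing it when `DRY_RUN` is set. Once the pods are ready, both applications should also appear in the service list of the Nacos console.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is set
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: wait for both Deployments, then list their pods
wait_for_cloud_apps() {
    local d
    for d in cloud-1 cloud-2; do
        run kubectl rollout status "deployment/$d" --timeout=120s || return 1
    done
    run kubectl get pods -l 'k8s.kuboard.cn/name in (cloud-1,cloud-2)' -o wide
}
```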


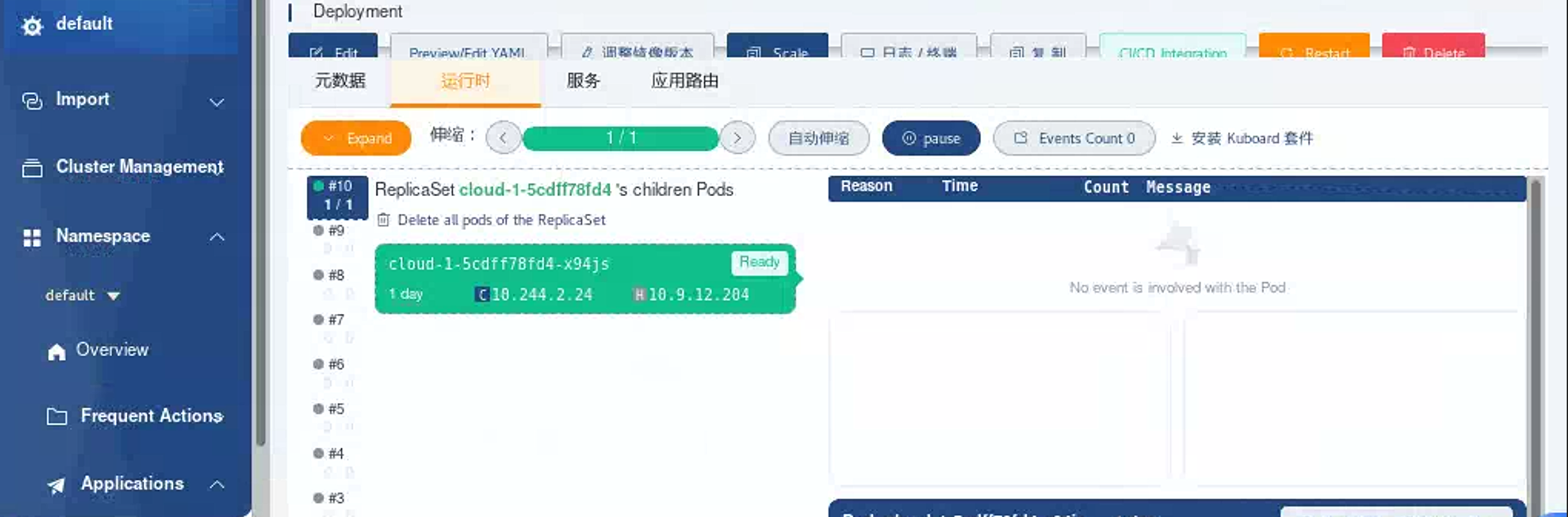

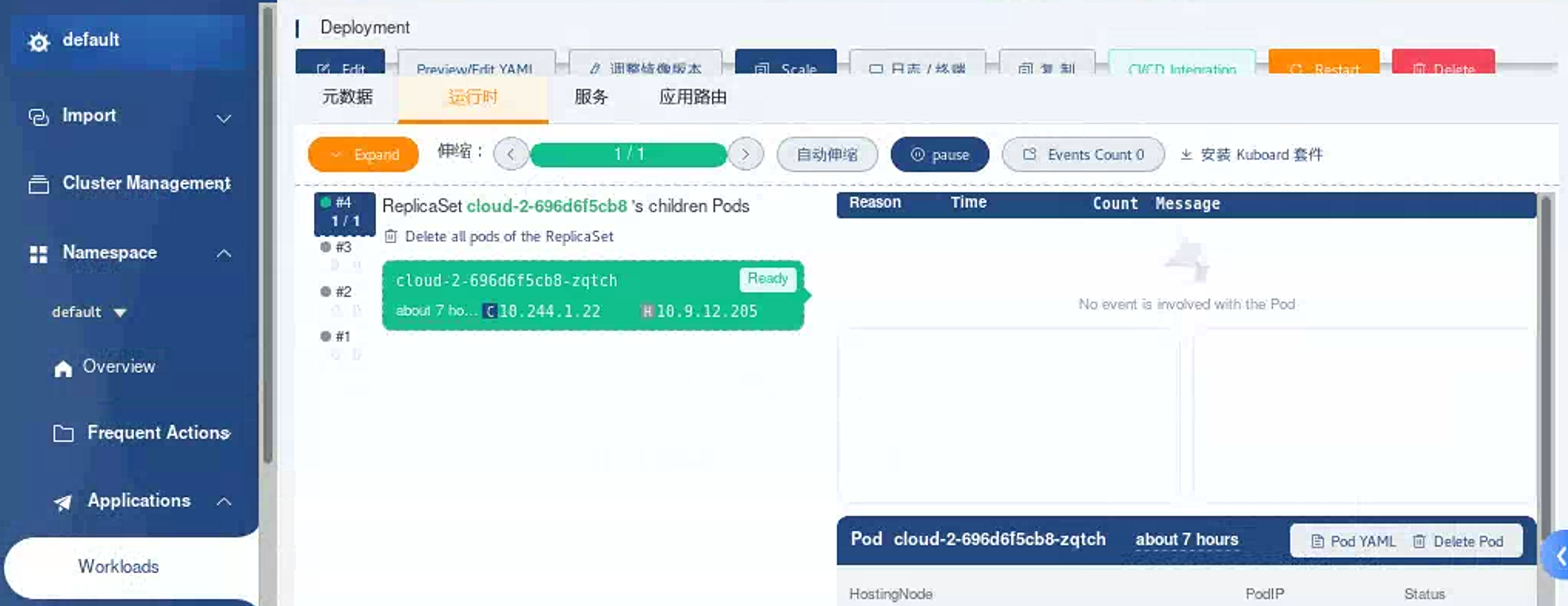


6.项目接口调试

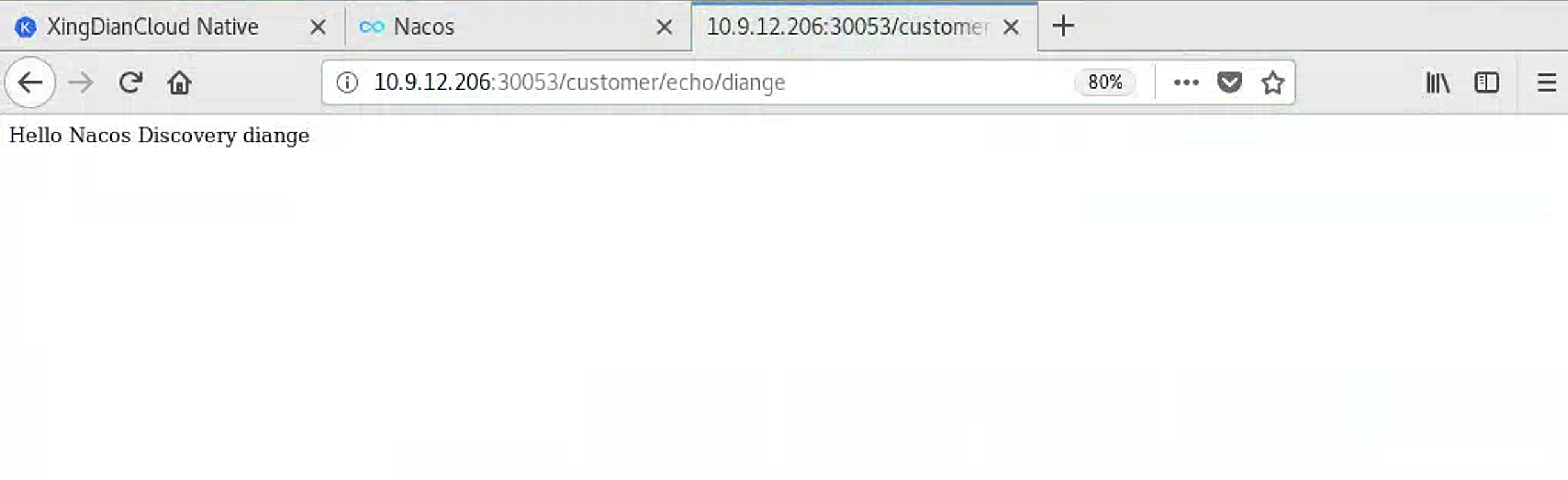

cloud-1测试接口:IP+端口/customer/version

[外链图片转存中…(img-vDJGvkSH-1689690771394)]

cloud-2测试接口:IP+端口/customer/echo/任意字符串
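The two checks can be scripted with `curl`, as sketched below. NodePorts 30053 and 30055 are the ones defined in `cloud_1.yaml` and `cloud_2.yaml`, and `10.9.12.10` in the usage is a placeholder node address. The helper names are hypothetical. `-f` makes `curl` fail on HTTP errors, so the second request only runs if the first succeeds.

```shell
# run CMD...: print CMD instead of executing it when DRY_RUN is set
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# smoke_test NODE_IP [ECHO_TEXT]
# Hypothetical helper: call the cloud-1 and cloud-2 test endpoints through their NodePorts
smoke_test() {
    local ip="$1" text="${2:-hello}"
    run curl -fsS "http://${ip}:30053/customer/version" &&
        run curl -fsS "http://${ip}:30055/customer/echo/${text}"
}
```

Usage: `smoke_test 10.9.12.10 hi`.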
