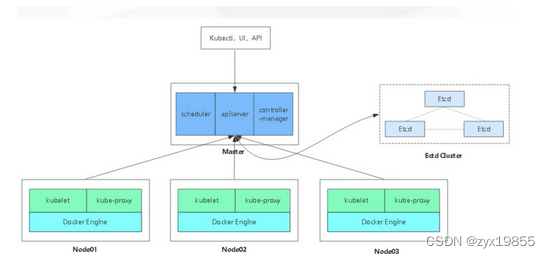

1. Architecture Topology

Version information:

kubernetes v1.18.20

etcd-v3.4.21

docker 18.09.9-3.el7

calico/node v3.8.9

Images required for the installation:

harbor.k8s.local/google_containers/metrics-server:v0.5.2

harbor.k8s.local/google_containers/metrics-server:v0.4.1

harbor.k8s.local/calico/node:v3.8.9

harbor.k8s.local/calico/pod2daemon-flexvol:v3.8.9

harbor.k8s.local/calico/cni:v3.8.9

harbor.k8s.local/k8s/pause-amd64:3.2

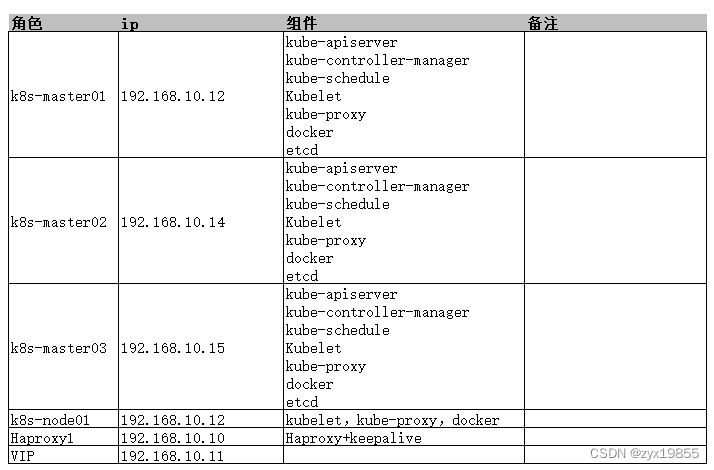

2. Environment Overview

Deployment plan

Network information:

K8s host network: 192.168.10.0/24

K8s service network: 10.96.0.0/12

K8s pod network: 172.16.0.0/16

HA VIP: 192.168.10.11

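Host information (server IP address and hostname):

Do not use DHCP; configure a static IP address on every host.

The VIP (virtual IP) must not duplicate any address on the company's internal network: ping it first and use it only if there is no reply. The VIP must also be on the same LAN as the hosts.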
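The "same LAN" rule can be checked in a script before the VIP is assigned. The helper below is a minimal sketch, not part of the original procedure: `ip_to_int` and `same_subnet` are hypothetical names, and the example assumes the /24 host network from the plan above. It does not replace the ping test for address conflicts.

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address into a 32-bit integer.
ip_to_int() {
  local -a o
  IFS=. read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if addresses $1 and $2 are in the same network for prefix length $3.
same_subnet() {
  local a b mask
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( (a & mask) == (b & mask) ))
}

# Example: the planned VIP against master01 on the /24 host network
#   same_subnet 192.168.10.11 192.168.10.12 24 && echo "VIP is on the host LAN"
```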

192.168.10.12 k8s-master01 # 2C2G 20G

192.168.10.14 k8s-master02 # 2C2G 20G

192.168.10.15 k8s-master03 # 2C2G 20G

192.168.10.13 k8s-node01 # 2C2G 20G

192.168.10.10 harbor.k8s.local # 2C2G 200G

192.168.10.11 k8s-master-lb # the VIP does not use any machine resources # for a non-HA cluster, use Master01's IP here

Basic environment preparation:

Set the hostname of each machine (run the matching command on that machine):

cat > /etc/hostname << EOF

k8s-master01

EOF

cat > /etc/hostname << EOF

k8s-master02

EOF

cat > /etc/hostname << EOF

k8s-master03

EOF

cat > /etc/hostname << EOF

k8s-node01

EOF
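Instead of editing /etc/hostname by hand on each machine, the name can be looked up from the host plan. This is a sketch with a hypothetical lookup table (`NODE_HOSTNAMES`) that simply mirrors the IP/hostname plan above; `hostnamectl set-hostname` (available on CentOS 7) also applies the new name to the running system without a reboot.

```bash
#!/usr/bin/env bash
# Hypothetical lookup table mirroring the host plan above (IP -> hostname).
declare -A NODE_HOSTNAMES=(
  [192.168.10.12]=k8s-master01
  [192.168.10.14]=k8s-master02
  [192.168.10.15]=k8s-master03
  [192.168.10.13]=k8s-node01
)

# Print the planned hostname for an IP; return non-zero for IPs not in the plan.
hostname_for_ip() {
  local name=${NODE_HOSTNAMES[$1]:-}
  if [[ -z $name ]]; then
    echo "unknown IP: $1" >&2
    return 1
  fi
  echo "$name"
}

# Example (run on the node whose IP is 192.168.10.14):
#   hostnamectl set-hostname "$(hostname_for_ip 192.168.10.14)"
```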

# Add the hosts entries on every machine

cat > /etc/hosts << EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.12 k8s-master01

192.168.10.14 k8s-master02

192.168.10.15 k8s-master03

192.168.10.13 k8s-node01

192.168.10.10 harbor.k8s.local

EOF

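Generate an SSH key pair on the machine you operate from, for passwordless SSH login to all machines

[root@k8s-master01 ~]# ssh-keygen -t rsa

Configure passwordless login from Master01 to all nodes

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 ;do ssh-copy-id -i ~/.ssh/id_rsa.pub $i;done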

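Upgrade the system and kernel

Check the kernel version: uname -r

Upgrade the system

# Upgrade the system to the latest packages but leave the kernel alone; it is upgraded separately below

yum update -y --exclude='kernel*' && reboot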

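Upgrade the kernel. 4.18 or later is recommended; this guide uses 4.19.12-1.el7.elrepo.x86_64. Older kernels can cause strange problems, especially when a node runs many pods.

Kernel version information is available from The Linux Kernel Archives.

Kernel packages for each version can be found under Index of /elrepo/kernel/el7/x86_64/RPMS.

Do not blindly upgrade to the newest version: older servers may fail to boot.

# Download the selected kernel version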

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

Copy the kernel packages to all nodes

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 ;do scp -r /root/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm /root/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/; done

# Install (on every node)

yum localinstall -y kernel-ml*

# Make the new kernel the default boot kernel

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Check that the default boot kernel is the one we want

grubby --default-kernel

grub2-editenv list

# Reboot to apply, then confirm the running kernel version

reboot

uname -a
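To check a node against the 4.18 recommendation in a script rather than by eye, a small version comparison is enough. This is a sketch, assuming GNU `sort -V` (coreutils) is available; `kernel_at_least` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Succeed if kernel release $1 (e.g. the output of `uname -r`) is >= version $2.
kernel_at_least() {
  local current=${1%%-*}   # drop a suffix such as "-1.el7.elrepo.x86_64"
  local minimum=$2
  # sort -V orders version strings component by component; the smaller comes first.
  [[ $(printf '%s\n' "$minimum" "$current" | sort -V | head -n1) == "$minimum" ]]
}

# Example:
#   kernel_at_least "$(uname -r)" 4.18 || echo "kernel upgrade recommended"
```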

Environment configuration (all nodes)

Operating system:

[root@master01 ~]# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

[root@master01 ~]#

Configure the CentOS 7 yum repositories (set the mirror to suit your environment; mirrors.163.com is used here)

cd /etc/yum.repos.d

[root@localhost yum.repos.d]# vi CentOS-Base.repo

[base]

name=CentOS-$releasever - Base

baseurl=http://mirrors.163.com/centos/7/os/$basearch/

gpgcheck=1

gpgkey=http://mirrors.163.com/centos/7/os/x86_64/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates

baseurl=http://mirrors.163.com/centos/7/updates/$basearch/

gpgcheck=1

gpgkey=http://mirrors.163.com/centos/7/os/x86_64/RPM-GPG-KEY-CentOS-7

[extras]

name=CentOS-$releasever - Extras

baseurl=http://mirrors.163.com/centos/7/extras/$basearch/

gpgcheck=1

gpgkey=http://mirrors.163.com/centos/7/os/x86_64/RPM-GPG-KEY-CentOS-7

[centosplus]

name=CentOS-$releasever - Plus

baseurl=http://mirrors.163.com/centos/7/centosplus/$basearch/

gpgcheck=1

enabled=0

Disable the firewall, swap, and SELinux on all nodes

systemctl stop firewalld && systemctl disable firewalld

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux
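The sed expression above comments out every fstab line that mentions swap. The sketch below uses a hypothetical helper, `active_swap_entries`, to list swap entries that are still active in an fstab-style file, so an empty result means swap stays off after a reboot; `free -h` should also show 0 swap after `swapoff -a`.

```bash
#!/usr/bin/env bash
# Print uncommented swap entries (filesystem type "swap" in field 3)
# from an fstab-style file, /etc/fstab by default.
active_swap_entries() {
  awk '!/^[[:space:]]*#/ && $3 == "swap"' "${1:-/etc/fstab}"
}

# Example:
#   [ -z "$(active_swap_entries)" ] && echo "no active swap entries in /etc/fstab"
```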

Raise the open-file and process limits on all nodes

ulimit -SHn 65535

vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 65536

* hard nproc 65536

* soft memlock unlimited

* hard memlock unlimited

Set kernel parameters on all nodes

cat > /etc/sysctl.d/k8s.conf <<EOF

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.ipv4.ip_conntrack_max = 65536

net.ipv4.ip_forward = 1

net.ipv4.conf.all.route_localnet = 1

net.ipv4.tcp_keepalive_time=7200

net.ipv4.tcp_keepalive_intvl=75

net.ipv4.tcp_keepalive_probes=9

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

net.core.somaxconn = 16384

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.netfilter.nf_conntrack_max = 2310720

net.bridge.bridge-nf-call-arptables = 1

vm.swappiness = 0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.may_detach_mounts = 1

fs.file-max = 52706963

fs.nr_open = 52706963

EOF

# Load the configuration

sysctl --system
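The k8s.conf above assigns some keys twice (for example, net.ipv4.tcp_keepalive_time is set to 600 and later to 7200). Because sysctl applies the lines in order, the later value is the one that takes effect. A minimal sketch for listing such duplicates, using a hypothetical helper name; run against the file above it should report the three net.ipv4.tcp_keepalive_* keys and net.netfilter.nf_conntrack_max.

```bash
#!/usr/bin/env bash
# Print every key that is assigned more than once in a sysctl config file
# (default /etc/sysctl.d/k8s.conf), once per key, in order of the repeat.
duplicate_sysctl_keys() {
  awk -F= '
    /^[[:space:]]*$/ || /^[[:space:]]*[#;]/ { next }   # skip blank lines and comments
    {
      key = $1
      gsub(/[[:space:]]/, "", key)   # "net.core.somaxconn " -> "net.core.somaxconn"
      if (++seen[key] == 2) print key
    }' "${1:-/etc/sysctl.d/k8s.conf}"
}

# Example:
#   duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```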

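In production, reserve some memory so that running out of RAM does not leave the host unreachable over SSH (not needed in test environments).

(Reserve 2 GB on a 32 GB machine, 3 GB on a 251 GB machine, and 5 GB on a 500 GB machine.) The example below reserves about 3 GB.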

echo 'vm.min_free_kbytes=3000000' >> /etc/sysctl.conf

sysctl -p
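vm.min_free_kbytes is expressed in KiB, so 3000000 is roughly 2.86 GiB. A small sketch (the helper name is hypothetical) for turning a reservation in GiB into the exact KiB value:

```bash
#!/usr/bin/env bash
# Convert a memory reservation in GiB into the KiB value used by vm.min_free_kbytes.
gib_to_min_free_kbytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# Example: reserve exactly 3 GiB
#   echo "vm.min_free_kbytes=$(gib_to_min_free_kbytes 3)" >> /etc/sysctl.conf && sysctl -p
```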

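Enable IPVS support on all nodes

kube-proxy uses iptables by default, which forwards traffic slowly when there are many pods; IPVS is recommended.

Install the IPVS tools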

yum install ipvsadm ipset sysstat conntrack libseccomp -y

Configure the IPVS modules on all nodes

# Write the module list

cat > /etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

# Load the modules now and at every boot

systemctl enable --now systemd-modules-load.service

# Reboot

reboot

# Verify

lsmod | grep -e ip_vs -e nf_conntrack

# Time synchronization

Synchronize time on all nodes

Install ntpdate

yum install ntpdate -y

Configure time synchronization on all nodes as follows:

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

ntpdate time2.aliyun.com

# Add it to crontab (replace with an NTP server reachable from your network)

crontab -e

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com

Install the required tools

yum install -y yum-utils device-mapper-persistent-data lvm2 nfs-utils

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

3. Set up keepalived + haproxy (single node)

Install haproxy

yum install -y haproxy

# Configure HAProxy (adjust the IPs to your environment)

cat > /etc/haproxy/haproxy.cfg << EOF

global

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server master01 192.168.10.12:6443 check

server master02 192.168.10.14:6443 check

server master03 192.168.10.15:6443 check

EOF
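The backend `server` lines differ only in name and IP. A sketch (hypothetical helper name) that prints them from name=ip pairs, which helps keep the list in sync with the master plan; afterwards, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration file without starting the service.

```bash
#!/usr/bin/env bash
# Print haproxy "server" lines for the kube-apiserver backend from name=ip pairs.
# 6443 is the kube-apiserver secure port used in the backend above.
haproxy_backend_servers() {
  local pair
  for pair in "$@"; do
    # "master01=192.168.10.12" -> name "master01", address "192.168.10.12"
    printf '    server %s %s:6443 check\n' "${pair%%=*}" "${pair#*=}"
  done
}

# Example:
#   haproxy_backend_servers master01=192.168.10.12 master02=192.168.10.14 master03=192.168.10.15
```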

Start haproxy

systemctl start haproxy

systemctl status haproxy

systemctl enable haproxy

Install keepalived

yum install -y keepalived

# Configure keepalived

## Adjust the IP addresses and the network interface name

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.10.10

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.10.11

}

track_script {

chk_apiserver

} }

EOF

Start keepalived on every haproxy node

systemctl restart keepalived

keepalived can add a DROP firewall rule when it starts, so flush the rules:

iptables -F

systemctl status keepalived

systemctl enable keepalived

Check the VIP

ip add | grep 10.11

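# Health-check script (single node). The quoted 'EOF' makes the shell write $(...) and $err literally instead of expanding them while the file is created

cat > /etc/keepalived/check_apiserver.sh << 'EOF'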

#!/bin/bash

err=0

for k in $(seq 1)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

# Make the script executable

chmod +x /etc/keepalived/check_apiserver.sh
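The script above checks haproxy only once (`seq 1`). If a few retries are wanted before keepalived is stopped, the loop can be factored into a helper such as the hypothetical `check_with_retries` below; `CHECK_INTERVAL` (seconds between attempts) is an assumed variable, not a keepalived setting.

```bash
#!/usr/bin/env bash
# Run a command up to $1 times, pausing ${CHECK_INTERVAL:-1} seconds between
# failed attempts. Succeeds as soon as one attempt succeeds; fails if all fail.
check_with_retries() {
  local retries=$1 i
  shift
  for (( i = 1; i <= retries; i++ )); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    (( i < retries )) && sleep "${CHECK_INTERVAL:-1}"
  done
  return 1
}

# Possible use inside check_apiserver.sh:
#   check_with_retries 3 pgrep -x haproxy || { /usr/bin/systemctl stop keepalived; exit 1; }
```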

# Enable and start haproxy and keepalived on all master nodes

systemctl enable --now haproxy

systemctl enable --now keepalived

# Verify

ping 192.168.10.11

Important: if keepalived and haproxy are installed, test that keepalived is working correctly:

[root@k8s-master01 pki]# telnet 192.168.10.11 8443

Trying 192.168.10.11...

Connected to 192.168.10.11.

Escape character is '^]'.

Connection closed by foreign host.

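If the ping fails and telnet does not print the line containing "^]", the VIP is not usable. Do not continue; troubleshoot keepalived first: the firewall and SELinux, the haproxy and keepalived service status, the listening ports, and so on.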
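If telnet is not installed, bash's built-in /dev/tcp redirection can perform the same connectivity check. A sketch with a hypothetical helper name, assuming bash and the coreutils `timeout` command:

```bash
#!/usr/bin/env bash
# Succeed if a TCP connection to host $1, port $2 opens within $3 seconds (default 3).
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # Inside bash -c, $0 and $1 are the host and port passed after the script.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example:
#   port_open 192.168.10.11 8443 && echo "VIP 192.168.10.11:8443 is reachable"
```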

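On all nodes, the firewall must be disabled and inactive: systemctl status firewalld

On all nodes, SELinux must be disabled (getenforce shows Permissive before the reboot and Disabled after it): getenforce

On the master nodes, check the haproxy and keepalived status: systemctl status keepalived haproxy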

Docker installation (all nodes)

Remove old Docker versions

[root@localhost yum.repos.d]# yum -y remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine \

docker-ce \

docker-ce-cli \

containerd.io

Install with yum

Run the following command to install the dependency package:

[root@localhost yum.repos.d]# yum install -y yum-utils

Run the following command to add the yum repository:

[root@localhost yum.repos.d]# yum-config-manager \

--add-repo \

https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

After that command completes, run the following:

[root@localhost yum.repos.d]# cd /etc/yum.repos.d

[root@localhost yum.repos.d]#

[root@localhost yum.repos.d]# ll

-rw-r--r--. 1 root root 1991 Jul 3 21:43 docker-ce.repo ## generated by the yum-config-manager command

## Edit docker-ce.repo and replace every $releasever with 7; you can paste the substitution below

[root@localhost yum.repos.d]# vi docker-ce.repo

:%s/$releasever/7/g (global substitution)
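The same substitution can be done non-interactively with sed, which is convenient when repeating it on every node. A sketch with a hypothetical helper name; the `$` is escaped so that it is matched literally:

```bash
#!/usr/bin/env bash
# Replace every literal "$releasever" with 7 in a yum repo file
# (default /etc/yum.repos.d/docker-ce.repo).
pin_releasever() {
  local repo=${1:-/etc/yum.repos.d/docker-ce.repo}
  sed -i 's/\$releasever/7/g' "$repo"
}

# Example:
#   pin_releasever /etc/yum.repos.d/docker-ce.repo
```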

After the edit, docker-ce.repo reads:

[root@localhost yum.repos.d]# cat docker-ce.repo

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]

name=Docker CE Stable - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]

name=Docker CE Stable - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]

name=Docker CE Test - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]

name=Docker CE Test - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]

name=Docker CE Test - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]

name=Docker CE Nightly - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]

name=Docker CE Nightly - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]

name=Docker CE Nightly - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

# List the available versions

yum list docker-ce --showduplicates | sort -r

yum list docker-ce-cli --showduplicates | sort -r

# Install Docker 18.09

yum -y install docker-ce-18.09.9-3.el7 docker-ce-cli-18.09.9-3.el7

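# Newer kubelet versions recommend the systemd cgroup driver, so switch Docker's CgroupDriver to systemd, and configure the private Harbor registry address: harbor.k8s.local

# Log settings

# Concurrent upload/download settings

# Registry settings

mkdir -p /etc/docker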

cat > /etc/docker/daemon.json <<EOF

{ "registry-mirrors": [

"https://harbor.k8s.local"

],

"insecure-registries":[

"https://harbor.k8s.local"

],

"exec-opts": ["native.cgroupdriver=systemd"],

"max-concurrent-downloads": 10, "max-concurrent-uploads": 5, "log-opts": { "max-size": "300m", "max-file": "2" },

"live-restore": true

}

EOF

# Reload systemd, then enable and start Docker

systemctl daemon-reload && systemctl enable --now docker

systemctl daemon-reload && systemctl restart docker.service

# Show Docker information (Cgroup Driver should now be systemd)

docker info

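Because Harbor is deployed over HTTPS, the Harbor CA certificate must be imported under /etc/docker/ (Docker reads it from /etc/docker/certs.d/harbor.k8s.local/ca.crt)

Log in to the Harbor registry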

docker login harbor.k8s.local -u admin -p Harbor12345

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

Etcd installation (all master nodes)

Create and issue the etcd certificates

1. Install the certificate tools

Download the certificate tools on Master01:

wget "https://pkg.cfssl.org/R1.2/cfssl_linux-amd64" -O /usr/local/bin/cfssl

wget "https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson

wget "https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64" -O /usr/local/bin/cfssl-certinfo

Place cfssl_linux-amd64 at /usr/local/bin/cfssl

Place cfssljson_linux-amd64 at /usr/local/bin/cfssljson

Place cfssl-certinfo_linux-amd64 at /usr/local/bin/cfssl-certinfo

cfssl: the certificate-signing tool

cfssljson: converts the JSON output of cfssl into certificate and key files

cfssl-certinfo: displays certificate information

cfssl-certinfo -cert <certificate file>

Make the tools executable

chmod +x /usr/local/bin/cfssl*

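# The etcd certificates are issued on master01 and then copied to the other nodes

# Create the etcd certificate directory on all master nodes

Generate the certificates

This is the most critical step of a binary installation: one wrong step ruins everything, so make sure every step is correct.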

for NODE in k8s-master01 k8s-master02 k8s-master03; do

ssh $NODE "mkdir /etc/etcd/ssl -p"

done

Create the CSR (certificate signing request) files, which specify the names, organization, and unit:

# Create the CA CSR

cd /etc/etcd/ssl

cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

# Generate the CA certificate and its key

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

# Use the CA certificate to sign the etcd certificate

# Create the etcd CSR

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

# Create the CA signing config

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

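# Generate the etcd certificate and key

# Replace the hostnames and IPs with your own

# Note: etcd once failed to start here because of a hostname problem; it was fixed by removing unused IP addresses and listing the nodes by IP address instead of hostname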

cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,192.168.10.12,192.168.10.14,192.168.10.15 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd
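The -hostname value must be a single comma-separated word; a stray space inside the list or a line break in the wrong place breaks the command. A sketch of a hypothetical helper that builds the list from separate arguments; after signing, `openssl x509 -in /etc/etcd/ssl/etcd.pem -noout -text | grep -A1 'Subject Alternative Name'` shows which names and IPs made it into the certificate.

```bash
#!/usr/bin/env bash
# Join all arguments with commas, e.g. for cfssl's -hostname flag.
join_hostnames() {
  local IFS=,
  echo "$*"
}

# Example:
#   cfssl gencert ... -hostname="$(join_hostnames 127.0.0.1 192.168.10.12 192.168.10.14 192.168.10.15)" ...
```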

# Copy the certificates to the other master nodes

for NODE in k8s-master02 k8s-master03; do

ssh $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

Verify that the certificate validity has been extended to 100 years

openssl x509 -in /etc/etcd/ssl/etcd.pem -noout -text | grep Not

Not Before: Sep 6 07:51:00 2022 GMT

Not After : Aug 13 07:51:00 2122 GMT
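The dates above follow from the 876000h expiry: 876000 / 24 = 36500 days, which is 24 days short of 100 calendar years because of leap days, hence Aug 13 rather than Sep 6, 2122. A sketch that reproduces the calculation, assuming GNU date; `cert_not_after` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Print the expiry time (UTC) of a certificate whose validity starts at $1
# ("YYYY-MM-DD HH:MM:SS", UTC) and lasts $2 hours. Requires GNU date.
cert_not_after() {
  local start
  start=$(date -u -d "$1" +%s) || return 1
  date -u -d "@$(( start + $2 * 3600 ))" '+%Y-%m-%d %H:%M:%S'
}

# Example, using the Not Before value shown above:
#   cert_not_after '2022-09-06 07:51:00' 876000
```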

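Create the etcd cluster

# Write the configuration file on every master node. etcd is very demanding on disk I/O, so high-performance storage is recommended

## master01: change the IP addresses to ones valid in your environment

## Fields to change: name, listen-peer-urls, listen-client-urls, initial-advertise-peer-urls, advertise-client-urls, initial-cluster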

cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master01'

data-dir: /etc/etcd/etcd.data

wal-dir: /etc/etcd/etcd.wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.10.12:2380'

listen-client-urls: 'https://192.168.10.12:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.10.12:2380'

advertise-client-urls: 'https://192.168.10.12:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://192.168.10.12:2380,k8s-master02=https://192.168.10.14:2380,k8s-master03=https://192.168.10.15:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

client-cert-auth: true

trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

auto-tls: true

peer-transport-security:

cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

peer-client-cert-auth: true

trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

## master02: change the IP addresses to ones valid in your environment

## Fields to change: name, listen-peer-urls, listen-client-urls, initial-advertise-peer-urls, advertise-client-urls, initial-cluster

cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master02'

data-dir: /etc/etcd/etcd.data

wal-dir: /etc/etcd/etcd.wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.10.14:2380'

listen-client-urls: 'https://192.168.10.14:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.10.14:2380'

advertise-client-urls: 'https://192.168.10.14:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://192.168.10.12:2380,k8s-master02=https://192.168.10.14:2380,k8s-master03=https://192.168.10.15:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

client-cert-auth: true

trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

auto-tls: true

peer-transport-security:

cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

peer-client-cert-auth: true

trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

## master03: change the IP addresses to ones valid in your environment

## Fields to change: name, listen-peer-urls, listen-client-urls, initial-advertise-peer-urls, advertise-client-urls, initial-cluster

cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master03'

data-dir: /etc/etcd/etcd.data

wal-dir: /etc/etcd/etcd.wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.10.15:2380'

listen-client-urls: 'https://192.168.10.15:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.10.15:2380'

advertise-client-urls: 'https://192.168.10.15:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://192.168.10.12:2380,k8s-master02=https://192.168.10.14:2380,k8s-master03=https://192.168.10.15:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

client-cert-auth: true

trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

auto-tls: true

peer-transport-security:

cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

peer-client-cert-auth: true

trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF
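The three files differ only in the six member-specific fields listed in the comments. A sketch (hypothetical helper `etcd_node_fields`, assuming the names and IPs of this plan) that prints those fields for one member, so the hand-edited file on each node can be compared with what is expected:

```bash
#!/usr/bin/env bash
# Shared initial-cluster value, copied from the configs above.
ETCD_INITIAL_CLUSTER='k8s-master01=https://192.168.10.12:2380,k8s-master02=https://192.168.10.14:2380,k8s-master03=https://192.168.10.15:2380'

# Print the member-specific lines of etcd.config.yml for member $1 with IP $2,
# in the same order as they appear in the file.
etcd_node_fields() {
  local name=$1 ip=$2
  cat <<EOF
name: '${name}'
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
initial-cluster: '${ETCD_INITIAL_CLUSTER}'
EOF
}

# Example: compare the expected values with the file written on master02
#   diff <(etcd_node_fields k8s-master02 192.168.10.14) \
#        <(grep -E '^(name|listen-peer-urls|listen-client-urls|initial-advertise-peer-urls|advertise-client-urls|initial-cluster):' /etc/etcd/etcd.config.yml)
```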

# Create the etcd service unit. It is identical on all three nodes, so create it on one node and copy it to the others

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Service

Documentation=etcd

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

# Copy etcd.service to the other master nodes

for NODE in k8s-master02 k8s-master03; do

scp /usr/lib/systemd/system/etcd.service $NODE:/usr/lib/systemd/system/etcd.service

done

# On all master nodes, create the etcd certificate directory under /etc/kubernetes/pki and symlink the certificate files into it

for NODE in k8s-master01 k8s-master02 k8s-master03; do

ssh $NODE "mkdir -p /etc/kubernetes/pki/etcd"

done

for NODE in k8s-master01 k8s-master02 k8s-master03; do

ssh $NODE "ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/"

done

# Start etcd (all master nodes)

systemctl daemon-reload

systemctl enable --now etcd

systemctl enable etcd.service

# Check the cluster status

export ETCDCTL_API=3

etcdctl --endpoints="192.168.10.12:2379,192.168.10.14:2379,192.168.10.15:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 192.168.10.12:2379 | 288ee9d54b2a8c5c | 3.4.21 | 20 kB | true | false | 4 | 9 | 9 | |

| 192.168.10.14:2379 | b106159d0a7cfe8d | 3.4.21 | 25 kB | false | false | 4 | 9 | 9 | |

| 192.168.10.15:2379 | a3470eebe71e06f9 | 3.4.21 | 20 kB | false | false | 4 | 9 | 9 | |

+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
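With three members, the table should show exactly one row with IS LEADER = true and the same RAFT TERM on every row. A sketch (hypothetical helper) that counts leaders from the table output so the check can be scripted:

```bash
#!/usr/bin/env bash
# Count the rows whose IS LEADER column is "true" in the table printed by
# `etcdctl endpoint status --write-out=table` (read from stdin).
count_etcd_leaders() {
  awk -F'|' '
    $2 ~ /:[0-9]+/ {                   # data rows: column 2 is ENDPOINT (host:port)
      leader = $6                      # column 6 is IS LEADER
      gsub(/[[:space:]]/, "", leader)
      if (leader == "true") n++
    }
    END { print n + 0 }'
}

# Example (same etcdctl flags as above):
#   etcdctl --endpoints="..." --cacert=... --cert=... --key=... endpoint status --write-out=table | count_etcd_leaders
```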

etcd: configuration flags

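The reusable configuration file is a YAML file made of the names and values of one or more of the command-line flags described below. To use it, pass the file path as the value of the --config-file flag or of the ETCD_CONFIG_FILE environment variable. The sample configuration file can serve as a starting point for a new configuration file.

Options set on the command line take precedence over those from the environment. If a configuration file is provided, all other command-line flags and environment variables are ignored. For example, etcd --config-file etcd.conf.yml.sample --data-dir /tmp ignores the --data-dir argument.

The environment variable for the flag --my-flag is named ETCD_MY_FLAG. This applies to all flags.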
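The naming rule is mechanical, which makes it easy to spot a wrong variable listed next to a flag. A sketch with a hypothetical helper name (requires bash 4 for `${var^^}`); note that a few flags, such as --bcrypt-cost, have no environment variable at all.

```bash
#!/usr/bin/env bash
# Derive the environment variable name of an etcd flag: --my-flag -> ETCD_MY_FLAG.
flag_to_env() {
  local name=${1#--}     # strip the leading "--"
  name=${name//-/_}      # dashes become underscores
  echo "ETCD_${name^^}"  # upper-case and add the ETCD_ prefix
}

# Example:
#   flag_to_env --snapshot-count
```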

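The official etcd ports are 2379 for client requests and 2380 for peer communication. The etcd ports can be configured to accept TLS traffic, non-TLS traffic, or both.

To start etcd automatically with custom settings at boot on Linux, a systemd unit is strongly recommended.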

--name

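Human-readable name for this member.

Default: "default"

Environment variable: ETCD_NAME

This value is referenced as this node's own entry in --initial-cluster (e.g., default=http://localhost:2380). It must match the key used in that flag when using static bootstrapping. When using discovery, each member must have a unique name. The hostname or machine-id is a good choice.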

--data-dir

Path to the data directory.

Default: "${name}.etcd"

Environment variable: ETCD_DATA_DIR

--wal-dir

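Path to a dedicated WAL directory. If this flag is set, etcd writes the WAL files to walDir rather than dataDir. This allows a dedicated disk to be used and helps avoid I/O contention between logging and other I/O operations.

Default: ""

Environment variable: ETCD_WAL_DIR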

--snapshot-count

Number of committed transactions that triggers a snapshot to disk.

Default: "100000"

Environment variable: ETCD_SNAPSHOT_COUNT

--heartbeat-interval

Time (in milliseconds) of a heartbeat interval.

Default: "100"

Environment variable: ETCD_HEARTBEAT_INTERVAL

--election-timeout

Time (in milliseconds) for an election to time out. See Documentation/tuning.md for details.

Default: "1000"

Environment variable: ETCD_ELECTION_TIMEOUT

--listen-peer-urls

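List of URLs to listen on for peer traffic. This flag tells etcd to accept incoming requests from its peers on the specified scheme://IP:port combinations. The scheme can be http or https. Alternatively, use unix://<file-path> or unixs://<file-path> for unix sockets. If 0.0.0.0 is specified as the IP, etcd listens on the given port on all interfaces. If an IP address is given together with a port, etcd listens on that port and interface. Multiple URLs may be used to specify a number of addresses and ports to listen on; etcd responds to requests on any of the listed addresses and ports.

Default: "http://localhost:2380"

Environment variable: ETCD_LISTEN_PEER_URLS

Example: "http://10.0.0.1:2380"

Invalid example: "http://example.com:2380" (a domain name is invalid for binding)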

--listen-client-urls

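List of URLs to listen on for client traffic. This flag tells etcd to accept incoming requests from clients on the specified scheme://IP:port combinations. The scheme can be http or https. Alternatively, use unix://<file-path> or unixs://<file-path> for unix sockets. If 0.0.0.0 is specified as the IP, etcd listens on the given port on all interfaces. If an IP address is given together with a port, etcd listens on that port and interface. Multiple URLs may be used to specify a number of addresses and ports to listen on; etcd responds to requests on any of the listed addresses and ports.

Default: "http://localhost:2379"

Environment variable: ETCD_LISTEN_CLIENT_URLS

Example: "http://10.0.0.1:2379"

Invalid example: "http://example.com:2379" (a domain name is invalid for binding)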

--max-snapshots

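Maximum number of snapshot files to retain (0 is unlimited).

Default: 5

Environment variable: ETCD_MAX_SNAPSHOTS

The default for users on Windows is unlimited; manually purging down to 5 (or some other number, for safety) is recommended.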

--max-wals

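Maximum number of WAL files to retain (0 is unlimited).

Default: 5

Environment variable: ETCD_MAX_WALS

The default for users on Windows is unlimited; manually purging down to 5 (or some other number, for safety) is recommended.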

--cors

Comma-separated whitelist of origins for CORS (cross-origin resource sharing).

Default: ""

Environment variable: ETCD_CORS

--quota-backend-bytes

Raise alarms when the backend size exceeds the given quota (0 defaults to the low space quota).

Default: 0

Environment variable: ETCD_QUOTA_BACKEND_BYTES

--backend-batch-limit

BackendBatchLimit is the maximum number of operations before committing the backend transaction.

Default: 0

Environment variable: ETCD_BACKEND_BATCH_LIMIT

--backend-bbolt-freelist-type

The freelist type used by the etcd backend (bboltdb); array and map are the supported types.

Default: map

Environment variable: ETCD_BACKEND_BBOLT_FREELIST_TYPE

--backend-batch-interval

BackendBatchInterval is the maximum time before committing the backend transaction.

Default: 0

Environment variable: ETCD_BACKEND_BATCH_INTERVAL

--max-txn-ops

Maximum number of operations permitted in a transaction.

Default: 128

Environment variable: ETCD_MAX_TXN_OPS

--max-request-bytes

Maximum client request size, in bytes, that the server will accept.

Default: 1572864

Environment variable: ETCD_MAX_REQUEST_BYTES

--grpc-keepalive-min-time

Minimum interval a client should wait before pinging the server.

Default: 5s

Environment variable: ETCD_GRPC_KEEPALIVE_MIN_TIME

--grpc-keepalive-interval

Frequency of server-to-client pings that check whether a connection is alive (0 to disable).

Default: 2h

Environment variable: ETCD_GRPC_KEEPALIVE_INTERVAL

--grpc-keepalive-timeout

Additional wait time before closing a non-responsive connection (0 to disable).

Default: 20s

Environment variable: ETCD_GRPC_KEEPALIVE_TIMEOUT

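Clustering flags

The --initial-advertise-peer-urls, --initial-cluster, --initial-cluster-state, and --initial-cluster-token flags are used when bootstrapping a new member (static bootstrap, discovery-service bootstrap, or runtime reconfiguration) and are ignored when restarting an existing member.

Flags prefixed with --discovery need to be set when using the discovery service.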

--initial-advertise-peer-urls

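List of this member's peer URLs to advertise to the rest of the cluster. These addresses are used for communicating etcd data around the cluster. At least one must be routable to all cluster members. These URLs can contain domain names.

Default: "http://localhost:2380"

Environment variable: ETCD_INITIAL_ADVERTISE_PEER_URLS

Example: "http://example.com:2380, http://10.0.0.1:2380"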

--initial-cluster

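Initial cluster configuration for bootstrapping.

Default: "default=http://localhost:2380"

Environment variable: ETCD_INITIAL_CLUSTER

The key is the value of the --name flag for each node provided. The default uses default as the key because that is the default value of the --name flag.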

--initial-cluster-state

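Initial cluster state ("new" or "existing"). Set it to new for all members present during the initial static or DNS bootstrapping. If it is set to existing, etcd attempts to join the existing cluster. If the wrong value is set, etcd attempts to start but fails safely.

Default: "new"

Environment variable: ETCD_INITIAL_CLUSTER_STATE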

--initial-cluster-token

Initial cluster token for the etcd cluster during bootstrap.

Default: "etcd-cluster"

Environment variable: ETCD_INITIAL_CLUSTER_TOKEN

--advertise-client-urls

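List of this member's client URLs to advertise to the rest of the cluster. These URLs can contain domain names.

Default: http://localhost:2379

Environment variable: ETCD_ADVERTISE_CLIENT_URLS

Example: "http://example.com:2379, http://10.0.0.1:2379"

Be careful when advertising URLs such as http://localhost:2379 from a cluster member while using etcd's proxy feature. This causes a loop, because the proxy forwards requests to itself until its resources (memory, file descriptors) are eventually exhausted.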

--discovery

Discovery URL used to bootstrap the cluster.

Default: ""

Environment variable: ETCD_DISCOVERY

--discovery-srv

DNS srv domain used to bootstrap the cluster.

Default: ""

Environment variable: ETCD_DISCOVERY_SRV

--discovery-srv-name

Suffix to the DNS srv name queried when bootstrapping using DNS.

Default: ""

Environment variable: ETCD_DISCOVERY_SRV_NAME

--discovery-fallback

Expected behavior ("exit" or "proxy") when the discovery service fails. "proxy" supports the v2 API only.

Default: "proxy"

Environment variable: ETCD_DISCOVERY_FALLBACK

--discovery-proxy

HTTP proxy to use for traffic to the discovery service.

Default: ""

Environment variable: ETCD_DISCOVERY_PROXY

--strict-reconfig-check

Reject reconfiguration requests that would cause quorum loss.

Default: true

Environment variable: ETCD_STRICT_RECONFIG_CHECK

--auto-compaction-retention

Auto compaction retention for the mvcc key-value store, in hours. 0 disables auto compaction.

Default: 0

Environment variable: ETCD_AUTO_COMPACTION_RETENTION

--auto-compaction-mode

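How to interpret auto-compaction-retention: one of "periodic" or "revision". "periodic" is duration-based retention and defaults to hours if no time unit is given (e.g. "5m"). "revision" is retention based on revision numbers.

Default: periodic

Environment variable: ETCD_AUTO_COMPACTION_MODE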

--enable-v2

Accept etcd V2 client requests.

Default: false

Environment variable: ETCD_ENABLE_V2

Proxy flags

Flags with the --proxy prefix configure etcd to run in proxy mode. "proxy" supports the v2 API only.

--proxy

Proxy mode setting ("off", "readonly" or "on").

Default: "off"

Environment variable: ETCD_PROXY

--proxy-failure-wait

Time (in milliseconds) an endpoint is held in a failed state before being reconsidered for proxied requests.

Default: 5000

Environment variable: ETCD_PROXY_FAILURE_WAIT

--proxy-refresh-interval

Time (in milliseconds) of the endpoints refresh interval.

Default: 30000

Environment variable: ETCD_PROXY_REFRESH_INTERVAL

--proxy-dial-timeout

Time (in milliseconds) for a dial to time out, or 0 to disable the timeout.

Default: 1000

Environment variable: ETCD_PROXY_DIAL_TIMEOUT

--proxy-write-timeout

Time (in milliseconds) for a write to time out, or 0 to disable the timeout.

Default: 5000

Environment variable: ETCD_PROXY_WRITE_TIMEOUT

--proxy-read-timeout

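Time (in milliseconds) for a read to time out, or 0 to disable the timeout.

Do not change this value if you use watches, because they rely on long polling requests.

Default: 0

Environment variable: ETCD_PROXY_READ_TIMEOUT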

Security flags

The security flags help to build a secure etcd cluster.

--ca-file

DEPRECATED

Path to the client server TLS CA file. --ca-file ca.crt can be replaced by --trusted-ca-file ca.crt --client-cert-auth, and etcd will behave the same.

Default: ""

Environment variable: ETCD_CA_FILE

--cert-file

Path to the client server TLS cert file.

Default: ""

Environment variable: ETCD_CERT_FILE

--key-file

Path to the client server TLS key file.

Default: ""

Environment variable: ETCD_KEY_FILE

--client-cert-auth

Enable client cert authentication.

Default: false

Environment variable: ETCD_CLIENT_CERT_AUTH

CN authentication is not supported by the gRPC gateway.

--client-crl-file

Path to the client certificate revocation list file.

Default: ""

Environment variable: ETCD_CLIENT_CRL_FILE

--client-cert-allowed-hostname

Allowed TLS name for client cert authentication.

Default: ""

Environment variable: ETCD_CLIENT_CERT_ALLOWED_HOSTNAME

--trusted-ca-file

Path to the client server TLS trusted CA cert file.

Default: ""

Environment variable: ETCD_TRUSTED_CA_FILE

--auto-tls

Client TLS using auto-generated certificates.

Default: false

Environment variable: ETCD_AUTO_TLS

--peer-ca-file

DEPRECATED

Path to the peer server TLS CA file. --peer-ca-file ca.crt can be replaced by --peer-trusted-ca-file ca.crt --peer-client-cert-auth, and etcd will behave the same.

Default: ""

Environment variable: ETCD_PEER_CA_FILE

--peer-cert-file

Path to the peer server TLS cert file. This is the certificate for peer traffic, used both as server and as client.

Default: ""

Environment variable: ETCD_PEER_CERT_FILE

--peer-key-file

Path to the peer server TLS key file. This is the private key for peer traffic, used both as server and as client.

Default: ""

Environment variable: ETCD_PEER_KEY_FILE

--peer-client-cert-auth

Enable peer client cert authentication.

Default: false

Environment variable: ETCD_PEER_CLIENT_CERT_AUTH

--peer-crl-file

Path to the peer certificate revocation list file.

Default: ""

Environment variable: ETCD_PEER_CRL_FILE

--peer-trusted-ca-file

Path to the peer server TLS trusted CA file.

Default: ""

Environment variable: ETCD_PEER_TRUSTED_CA_FILE

--peer-auto-tls

Peer TLS using auto-generated certificates.

Default: false

Environment variable: ETCD_PEER_AUTO_TLS

--peer-cert-allowed-cn

Allowed CommonName for inter-peer authentication.

Default: ""

Environment variable: ETCD_PEER_CERT_ALLOWED_CN

--peer-cert-allowed-hostname

Allowed TLS certificate name for inter-peer authentication.

Default: ""

Environment variable: ETCD_PEER_CERT_ALLOWED_HOSTNAME

--cipher-suites

Comma-separated list of supported TLS cipher suites between server/client and peers.

Default: ""

Environment variable: ETCD_CIPHER_SUITES

Logging flags

--logger

Available from v3.4. WARNING: --logger=capnslog is deprecated in v3.5.

Specify "zap" for structured logging or "capnslog".

Default: capnslog

Environment variable: ETCD_LOGGER

--log-outputs

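Specify "stdout" or "stderr" to skip journald logging even when running under systemd, or a comma-separated list of output targets.

Default: default

Environment variable: ETCD_LOG_OUTPUTS

default uses the stderr configuration for v3.4 during the zap logger migration.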

--log-level

Available from v3.4.

Configures the log level. Only debug, info, warn, error, panic, and fatal are supported.

Default: info

Environment variable: ETCD_LOG_LEVEL

default uses info.

--debug

WARNING: deprecated in v3.5.

Drops the default log level to DEBUG for all subpackages.

Default: false (INFO for all packages)

Environment variable: ETCD_DEBUG

--log-package-levels

WARNING: deprecated in v3.5.

Sets individual etcd subpackages to specific log levels, for example etcdserver=WARNING,security=DEBUG

Default: "" (INFO for all packages)

Environment variable: ETCD_LOG_PACKAGE_LEVELS

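Unsafe flags

Be careful when using unsafe flags, because they break the guarantees given by the consensus protocol. For example, etcd may panic if other members of the cluster are still alive. Follow the instructions when using these flags.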

--force-new-cluster

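Forces the creation of a new one-member cluster. It commits configuration changes that forcibly remove all existing members of the cluster and add itself, but this is strongly discouraged. Review the disaster recovery documentation for the preferred v3 recovery procedure.

Default: false

Environment variable: ETCD_FORCE_NEW_CLUSTER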

Miscellaneous flags

--version

Print the version and exit.

Default: false

--config-file

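Load the server configuration from a file. Note that if a configuration file is provided, all other command-line flags and environment variables are ignored.

Default: ""

Example: sample configuration file

Environment variable: ETCD_CONFIG_FILE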

Profiling flags

--enable-pprof

Enable runtime profiling data via the HTTP server. The address is the client URL + "/debug/pprof/"

Default: false

Environment variable: ETCD_ENABLE_PPROF

--metrics

Set the level of detail for exported metrics; specify "extensive" to include server-side gRPC histogram metrics.

Default: basic

Environment variable: ETCD_METRICS

--listen-metrics-urls

List of additional URLs to listen on that respond to both the /metrics and /health endpoints.

Default: ""

Environment variable: ETCD_LISTEN_METRICS_URLS

Auth flags

--auth-token

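Specifies the token type and token-specific options, especially for JWT. The format is type,var1=val1,var2=val2,... Possible types are simple and jwt. For jwt, the variable sign-method selects the signing method (possible values: 'ES256', 'ES384', 'ES512', 'HS256', 'HS384', 'HS512', 'RS256', 'RS384', 'RS512', 'PS256', 'PS384', 'PS512').

For asymmetric algorithms ("RS", "PS", "ES"), the public key is optional, because the private key contains enough information to both sign and verify tokens. pub-key specifies the path to the public key used to verify JWTs, priv-key the path to the private key used to sign JWTs, and ttl the TTL of JWT tokens.

Example options for JWT: --auth-token jwt,pub-key=app.rsa.pub,priv-key=app.rsa,sign-method=RS512,ttl=10m

Default: "simple"

Environment variable: ETCD_AUTH_TOKEN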

--bcrypt-cost

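Specifies the cost/strength of the bcrypt algorithm used to hash auth passwords. Valid values are between 4 and 31.

Default: 10

Environment variable: (not supported)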

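Experimental flags

--experimental-corrupt-check-time

Duration of time between cluster corruption check passes.

Default: 0s

Environment variable: ETCD_EXPERIMENTAL_CORRUPT_CHECK_TIME

--experimental-compaction-batch-limit

Sets the maximum number of revisions deleted in each compaction batch.

Default: 1000

Environment variable: ETCD_EXPERIMENTAL_COMPACTION_BATCH_LIMIT

--experimental-peer-skip-client-san-verification

Skips verification of the SAN field in client certificates for peer connections. This can be helpful, for example, if cluster members run in different networks behind NAT. In that case, make sure to use peer certificates based on a private certificate authority (--peer-cert-file, --peer-key-file, --peer-trusted-ca-file).

Default: false

Environment variable: ETCD_EXPERIMENTAL_PEER_SKIP_CLIENT_SAN_VERIFICATION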

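Kubernetes component installation

Create the Kubernetes certificates

Certificates are issued on k8s-master01 and then copied to the other nodes.

Create the CA certificate signing request (CSR) JSON file. The subject fields are:

CN: Common Name. Browsers use this field to check whether a website is legitimate, so it usually holds a domain name. It matters a lot for Kubernetes too: kube-apiserver takes the CN of a client certificate as the user name.

C: Country

ST: State or province

L: Locality (region or city)

O: Organization Name (company name). kube-apiserver takes the O of a client certificate as the user's group.

OU: Organization Unit Name (department)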


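Generate a JSON-formatted certificate from the CA request file: cfssl gencert -initca ca-csr.json

Pipe the JSON output into cfssljson to write the CA as files: cfssl gencert -initca ca-csr.json | cfssljson -bare ca produces ca.pem (the root certificate), ca-key.pem (the root certificate's private key) and ca.csr.

Query certificate information: cfssl-certinfo -cert <certificate file>

# Create the certificate directories on all master nodes (the certificates below are written to /etc/kubernetes/pki)

mkdir -p /etc/kubernetes/ssl /etc/kubernetes/pki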

# Create the CA CSR

cd /etc/kubernetes/ssl

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "Kubernetes",

"OU": "Kubernetes"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

# Generate the CA certificate and CA key

cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca
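The CA is requested with an expiry of 876000h, i.e. 876000 / 24 = 36500 days, roughly 100 years. A small sketch for confirming that a generated certificate really stays valid for a given period; it relies only on openssl x509 -checkend and -enddate, and the function names cert_valid_for and cert_summary are made up for this example:

```shell
# cert_valid_for FILE SECONDS
# Succeeds if the certificate in FILE will still be valid SECONDS from now.
cert_valid_for() {
  openssl x509 -in "$1" -noout -checkend "$2" >/dev/null
}

# cert_summary FILE
# Prints the subject and the expiry date (notAfter=...) of the certificate.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -enddate
}

# Example: check that the CA is valid for at least 10 more years.
# cert_valid_for /etc/kubernetes/pki/ca.pem $((10 * 365 * 24 * 3600)) && echo "CA OK"
```

The example stays at 10 years on purpose: some older OpenSSL builds parse the -checkend argument as a 32-bit integer, which caps it at about 68 years.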

# Create the CA signing config

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

# Create the apiserver CSR

cat > apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "Kubernetes",

"OU": "Kubernetes"

}

]

}

EOF

# Issue the apiserver certificate

# 10.96.0.1 is the first IP of the service network (the ClusterIP of the kubernetes Service)

# 192.168.10.11 is the VIP

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.96.0.1,192.168.10.11,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.10.12,192.168.10.14,192.168.10.15 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver
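The -hostname list must cover every address clients use to reach kube-apiserver: the first service IP, the VIP, localhost, the in-cluster DNS names of the kubernetes Service, and each master's IP. A sketch that builds exactly the same list from variables, which makes it harder to forget an IP when masters change; the variable and function names are illustrative only:

```shell
# Addresses taken from the planning section of this guide.
SERVICE_IP=10.96.0.1   # first IP of the service network 10.96.0.0/12
VIP=192.168.10.11      # HA virtual IP
MASTER_IPS="192.168.10.12 192.168.10.14 192.168.10.15"

# apiserver_sans: print the comma-separated -hostname list for cfssl.
apiserver_sans() {
  list="$SERVICE_IP,$VIP,127.0.0.1"
  # DNS names of the default kubernetes Service
  for name in kubernetes kubernetes.default kubernetes.default.svc \
              kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local; do
    list="$list,$name"
  done
  # Every master that runs kube-apiserver
  for ip in $MASTER_IPS; do
    list="$list,$ip"
  done
  echo "$list"
}

# Usage: replace the literal list in the cfssl command with -hostname="$(apiserver_sans)"
```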

# Create the CSR for the apiserver aggregation layer (front-proxy) CA

cat > front-proxy-ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

# Generate the front-proxy (aggregation layer) CA certificate and key

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

# Create the aggregation layer (front-proxy) client CSR

cat > front-proxy-client-csr.json << EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

# Issue the front-proxy client certificate

cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

# Create the controller-manager CSR

cat > manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "system:kube-controller-manager",

"OU": "Kubernetes"

}

]

}

EOF

# Issue the controller-manager certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

# Create the scheduler CSR

cat > scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "system:kube-scheduler",

"OU": "Kubernetes"

}

]

}

EOF

# Issue the scheduler certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

# Create the admin CSR

cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "PICC",

"O": "system:masters",

"OU": "Kubernetes"

}

]

}

EOF

# Issue the admin certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

# Create the ServiceAccount key pair

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub
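kube-apiserver verifies ServiceAccount tokens with sa.pub (--service-account-key-file) while kube-controller-manager signs them with sa.key (--service-account-private-key-file), so the two files must stay a matching pair on every master. A minimal check that re-derives the public key from the private key and compares the PEM output byte for byte; the function name sa_pair_matches is hypothetical:

```shell
# sa_pair_matches KEY PUB
# Succeeds if PUB is the public half of the RSA private key KEY.
sa_pair_matches() {
  openssl rsa -in "$1" -pubout 2>/dev/null | cmp -s - "$2"
}

# Example:
# sa_pair_matches /etc/kubernetes/pki/sa.key /etc/kubernetes/pki/sa.pub && echo "sa.key and sa.pub match"
```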

Check the certificates

[root@k8s-master01 ssl]# ls /etc/kubernetes/pki/

admin.csr admin.pem apiserver-key.pem ca.csr ca.pem controller-manager-key.pem etcd front-proxy-ca-key.pem front-proxy-client.csr front-proxy-client.pem sa.pub scheduler-key.pem

admin-key.pem apiserver.csr apiserver.pem ca-key.pem controller-manager.csr controller-manager.pem front-proxy-ca.csr front-proxy-ca.pem front-proxy-client-key.pem sa.key scheduler.csr scheduler.pem

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/ |wc -l

24
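The count of 24 is 7 cfssl-issued certificates × 3 files (.csr, .pem, -key.pem) + sa.key + sa.pub + the etcd directory. A count alone does not say which file is missing, so here is a sketch that names any gap; the expected names are taken from the listing above, and the function name check_pki is made up:

```shell
# check_pki [DIR]
# Reports every certificate or key from the listing above that is missing in DIR.
check_pki() {
  dir=${1:-/etc/kubernetes/pki}
  missing=0
  # Each cfssl-issued certificate comes as NAME.csr, NAME.pem and NAME-key.pem
  for name in admin apiserver ca controller-manager front-proxy-ca front-proxy-client scheduler; do
    for suffix in .csr .pem -key.pem; do
      if [ ! -f "$dir/$name$suffix" ]; then
        echo "missing: $dir/$name$suffix"
        missing=1
      fi
    done
  done
  # ServiceAccount key pair created with openssl
  for f in sa.key sa.pub; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      missing=1
    fi
  done
  return "$missing"
}
```

Running the same function on k8s-master02 and k8s-master03 after the copy below confirms that nothing was skipped.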

# Copy the certificates to the other master nodes (the etcd directory is skipped)

for NODE in k8s-master02 k8s-master03;do

for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do

scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};

done;

done


Kubernetes component installation: master part (all master nodes)

# Create directories

mkdir -p /var/lib/kubelet

mkdir -p /var/log/kubernetes

mkdir -p /etc/systemd/system/kubelet.service.d

mkdir -p /etc/kubernetes/manifests/

mkdir -p /etc/kubernetes/pki/

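Kubernetes component configuration (run on k8s-master01)

# controller-manager configuration

# Set a cluster entry

# Note: in an HA cluster the server address is VIP:8443 (https://192.168.10.11:8443). In a non-HA cluster, change it to master01's address and the apiserver port (default 6443), i.e. https://192.168.10.12:6443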

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.10.11:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# set-credentials: set a user entry

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# set-context: set a context entry

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--namespace=kube-system \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# use-context: set the default context

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
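The same four kubectl config steps (set-cluster, set-credentials, set-context, use-context) repeat for controller-manager, scheduler and admin, with only the user, certificate and output file changing. A sketch of a helper that wraps them, assuming the CA path and VIP address used in this guide; the function name make_kubeconfig is made up, and unlike the controller-manager commands above it does not pass --namespace=kube-system to set-context:

```shell
# make_kubeconfig USER CERT KEY OUT [SERVER]
# Hypothetical helper wrapping the four kubectl config steps used in this guide.
make_kubeconfig() {
  user=$1
  cert=$2
  key=$3
  out=$4
  server=${5:-https://192.168.10.11:8443}   # VIP:8443 by default

  # Cluster entry with the embedded CA
  kubectl config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/pki/ca.pem \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$out" || return

  # User entry with the embedded client certificate and key
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" || return

  # Context tying the user to the cluster, then make it the default
  kubectl config set-context "$user@kubernetes" \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" || return
  kubectl config use-context "$user@kubernetes" --kubeconfig="$out"
}

# Example, equivalent to the scheduler commands:
# make_kubeconfig system:kube-scheduler \
#   /etc/kubernetes/pki/scheduler.pem /etc/kubernetes/pki/scheduler-key.pem \
#   /etc/kubernetes/scheduler.kubeconfig
```

kubectl config only edits the kubeconfig file, so this runs before any apiserver is up.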

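# scheduler configuration

# Set a cluster entry

# Note: in an HA cluster the server address is VIP:8443 (https://192.168.10.11:8443). In a non-HA cluster, change it to master01's address and the apiserver port (default 6443), i.e. https://192.168.10.12:6443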

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.10.11:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# set-credentials: set a user entry

kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# set-context: set a context entry

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# use-context: set the default context

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

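# admin configuration

# Set a cluster entry

# Note: in an HA cluster the server address is VIP:8443 (https://192.168.10.11:8443). In a non-HA cluster, change it to master01's address and the apiserver port (default 6443), i.e. https://192.168.10.12:6443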

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.10.11:8443 \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# set-credentials: set a user entry

kubectl config set-credentials kubernetes-admin \

--client-certificate=/etc/kubernetes/pki/admin.pem \

--client-key=/etc/kubernetes/pki/admin-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# set-context: set a context entry

kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# use-context: set the default context

kubectl config use-context kubernetes-admin@kubernetes \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

# Copy the kubeconfig files to the other master nodes

for NODE in k8s-master02 k8s-master03;do

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};

done;

done

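# kube-apiserver configuration

# Create the kube-apiserver service. The file differs on each of the three masters (only --advertise-address changes)

# master01

# Note: set --advertise-address to this node's own IP

# Note: set --service-cluster-ip-range to the Kubernetes service network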

vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.10.12 \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.10.12:2379,https://192.168.10.14:2379,https://192.168.10.15:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-extra-headers-prefix=X-Remote-Group \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

# master02

# Note: set --service-cluster-ip-range to the service network

vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.10.14 \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.10.12:2379,https://192.168.10.14:2379,https://192.168.10.15:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-extra-headers-prefix=X-Remote-Group \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

# master03

# Note: set --service-cluster-ip-range to the service network

vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.10.15 \

--service-cluster-ip-range=10.96.0.0/12 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.10.12:2379,https://192.168.10.14:2379,https://192.168.10.15:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-extra-headers-prefix=X-Remote-Group \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

# Start kube-apiserver on all master nodes

systemctl daemon-reload

systemctl enable --now kube-apiserver

systemctl start kube-apiserver

# Check the status

systemctl status kube-apiserver

ControllerManager

Configure the kube-controller-manager service on all master nodes. --cluster-cidr is the internal Kubernetes pod network, 172.16.0.0/16 (the same value as clusterCIDR in kube-proxy.yaml).

vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--cluster-cidr=172.16.0.0/16 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target
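With --allocate-node-cidrs=true, the controller-manager carves a per-node podCIDR of size --node-cidr-mask-size out of --cluster-cidr, so the two values cap the number of nodes: 2^(24 - 16) = 256 node subnets for a /16 pod network (4096 for a /12), each holding 2^(32 - 24) = 256 addresses. A small arithmetic sketch for checking other combinations; the function names are illustrative, and Calico's own IPAM may hand out pod addresses in its own blocks rather than from these podCIDRs:

```shell
# node_subnet_count CLUSTER_PREFIX NODE_MASK
# Number of /NODE_MASK per-node subnets that fit into a /CLUSTER_PREFIX pod network.
node_subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

# addresses_per_node NODE_MASK
# Total addresses in one per-node subnet (network and broadcast included).
addresses_per_node() {
  echo $(( 1 << (32 - $1) ))
}

# Example: node_subnet_count 16 24  -> 256
```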

Copy kube-controller-manager.service to the other master nodes

for NODE in k8s-master02 k8s-master03; do

scp /usr/lib/systemd/system/kube-controller-manager.service $NODE:/usr/lib/systemd/system/kube-controller-manager.service

done

Start kube-controller-manager on all master nodes

systemctl daemon-reload

systemctl enable --now kube-controller-manager

systemctl start kube-controller-manager.service

systemctl status kube-controller-manager.service

Scheduler

Configure the kube-scheduler service on all master nodes

vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

Copy kube-scheduler.service to the other master nodes

for NODE in k8s-master02 k8s-master03; do

scp /usr/lib/systemd/system/kube-scheduler.service $NODE:/usr/lib/systemd/system/kube-scheduler.service

done

# Start kube-scheduler on all master nodes

systemctl daemon-reload

systemctl enable --now kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

Kubernetes component installation: node part

Install the Kubernetes binaries on the worker nodes

From k8s-master01, copy the binaries to all worker nodes

WorkNodes='k8s-node01'

for NODE in $WorkNodes;do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin;done

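Copy the certificates and configuration files to the worker nodes. Note: bootstrap-kubelet.kubeconfig is only created in the TLS Bootstrapping section below; if it does not exist yet, its scp fails here, and it is copied again at the end of that section.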

for NODE in k8s-node01 ; do

ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl

for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do

scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/

done

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

done

done

Kubelet configuration (all nodes, masters and workers)

# Create the kubelet service file (identical on all nodes)

vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/local/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

Copy the file to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01; do

scp /usr/lib/systemd/system/kubelet.service $NODE:/usr/lib/systemd/system/kubelet.service

done

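Create the kubelet systemd drop-in file (identical on all nodes)

By default, Kubernetes sets the pause (infra) container image like this:

KUBELET_POD_INFRA_CONTAINER=--pod-infra-container-image=k8s.gcr.io/pause-amd64:3.1

The pause image can be pulled from your own private registry instead; here it is harbor.k8s.local/k8s/pause-amd64:3.2, set via --pod-infra-container-image in KUBELET_CONFIG_ARGS below.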

vi /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=harbor.k8s.local/k8s/pause-amd64:3.2"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node=''"

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

Copy the file to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01; do

scp /etc/systemd/system/kubelet.service.d/10-kubelet.conf $NODE:/etc/systemd/system/kubelet.service.d/10-kubelet.conf

done

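# Create the kubelet configuration file (identical on all nodes)

# clusterDNS is set to the 10th IP of the service network

Note: if you change the Kubernetes service network, update clusterDNS in kubelet-conf.yml to the 10th address of the new service network, e.g. 10.96.0.10.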
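Both derived addresses come from the service network 10.96.0.0/12: the first address (10.96.0.1) becomes the ClusterIP of the kubernetes Service and has to be in the apiserver certificate, and this guide uses the 10th (10.96.0.10) for cluster DNS. A POSIX shell sketch for recomputing them when the service network changes; the function name nth_ip is hypothetical and only IPv4 is handled:

```shell
# nth_ip CIDR N
# Prints the address N positions after the network address of CIDR (IPv4 only).
nth_ip() {
  n=$2
  prefix=${1#*/}
  # Split the dotted quad into $1..$4
  old_ifs=$IFS
  IFS=.
  set -- ${1%/*}
  IFS=$old_ifs
  ip=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  # Clear the host bits so a non-aligned input still starts from the network address
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  ip=$(( (ip & mask) + n ))
  echo "$(( (ip >> 24) & 255 )).$(( (ip >> 16) & 255 )).$(( (ip >> 8) & 255 )).$(( ip & 255 ))"
}

# Examples: nth_ip 10.96.0.0/12 1   -> 10.96.0.1  (apiserver SAN)
#           nth_ip 10.96.0.0/12 10  -> 10.96.0.10 (clusterDNS)
```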

vi /etc/kubernetes/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
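cgroupDriver: systemd only works if Docker uses the same cgroup driver; with a mismatch the kubelet refuses to start. A sketch of a pre-start check that compares the two, assuming the docker CLI is available on the node (docker info --format '{{.CgroupDriver}}' prints Docker's driver); the function name check_cgroup_driver is made up:

```shell
# check_cgroup_driver [KUBELET_CONFIG]
# Compares cgroupDriver in the kubelet config file with Docker's cgroup driver.
check_cgroup_driver() {
  conf=${1:-/etc/kubernetes/kubelet-conf.yml}
  # Value after "cgroupDriver:" on a top-level line of the YAML file
  kubelet_driver=$(sed -n 's/^cgroupDriver:[[:space:]]*//p' "$conf")
  docker_driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)
  if [ -n "$kubelet_driver" ] && [ "$kubelet_driver" = "$docker_driver" ]; then
    echo "ok: kubelet and docker both use $kubelet_driver"
  else
    echo "mismatch: kubelet=$kubelet_driver docker=$docker_driver"
    return 1
  fi
}
```

If it reports a mismatch, align Docker's cgroup driver (or cgroupDriver here) before starting the kubelet.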

Copy the file to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01; do

scp /etc/kubernetes/kubelet-conf.yml $NODE:/etc/kubernetes/kubelet-conf.yml

done

# Start kubelet (all nodes)

systemctl daemon-reload

systemctl enable --now kubelet

systemctl status kubelet

systemctl restart kubelet

Configure kube-proxy

# Run on master01 only

kubectl -n kube-system create serviceaccount kube-proxy

kubectl create clusterrolebinding system:kube-proxy \

--clusterrole system:node-proxier \

--serviceaccount kube-system:kube-proxy

SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

--output=jsonpath='{.data.token}' | base64 -d)

# Set a cluster entry

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.10.11:8443 \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# set-credentials: set a user entry

kubectl config set-credentials kubernetes \

--token=${JWT_TOKEN} \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# set-context: set a context entry

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=kubernetes \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# use-context: set the default context

kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# Copy the kubeconfig to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01 ; do

scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

done

# Create the kube-proxy service file (identical on all nodes)

vi /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.yaml \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

Copy the kube-proxy service file to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01 ; do

scp /usr/lib/systemd/system/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

done

# kube-proxy configuration file (identical on all nodes)

# clusterCIDR is set to the pod network

vi /etc/kubernetes/kube-proxy.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/16
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
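mode: "ipvs" with scheduler: "rr" depends on the IPVS kernel modules; if they are not available, kube-proxy falls back to iptables mode. A sketch that lists which of the usual modules are absent from /proc/modules, assuming the 4.19 kernel from the preparation section, where the conntrack module is nf_conntrack (older kernels used nf_conntrack_ipv4). Modules compiled into the kernel never appear in /proc/modules, so treat the output as a hint; the function name is hypothetical:

```shell
# ipvs_missing_modules [MODULES_FILE]
# Prints each required module that is not listed in MODULES_FILE (default /proc/modules).
ipvs_missing_modules() {
  modfile=${1:-/proc/modules}
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    # /proc/modules lines start with "<name> <size> ..."
    grep -q "^$m " "$modfile" || echo "$m"
  done
}

# Example: load whatever is missing, then start kube-proxy
# for m in $(ipvs_missing_modules); do modprobe "$m"; done
```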

Copy kube-proxy.yaml to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01 ; do

scp /etc/kubernetes/kube-proxy.yaml $NODE:/etc/kubernetes/kube-proxy.yaml

done

# Start kube-proxy on all nodes

systemctl daemon-reload

systemctl enable --now kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy

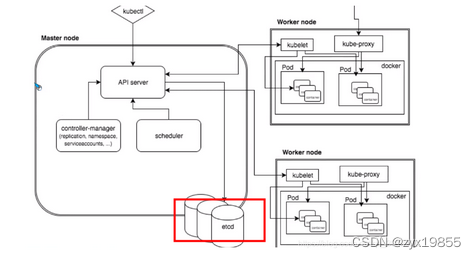

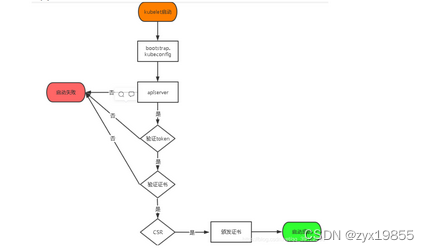

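TLS Bootstrapping configuration

Enable the TLS Bootstrapping mechanism and create a token

TLS Bootstrapping: Master

Once TLS authentication is enabled on kube-apiserver, the kubelet and kube-proxy on each node need a valid certificate signed by the CA to communicate with kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complex. To simplify this, Kubernetes introduced the TLS bootstrapping mechanism, which issues client certificates automatically: the kubelet requests a certificate from kube-apiserver as a low-privilege user, and the certificate is signed dynamically on the control-plane side (by kube-controller-manager, using --cluster-signing-cert-file and --cluster-signing-key-file). This approach is strongly recommended for nodes. It is currently used mainly for the kubelet; kube-proxy still gets a centrally issued credential (in this guide, the token of the kube-proxy ServiceAccount).

TLS bootstrapping workflow

Used for automatic signing of kubelet certificates; run only on k8s-master01.

Create the bootstrap kubeconfig on master01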

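# Generate a random token-like string (longer than a token, but the characters are valid). Take the first 6 characters as the token ID and the last 16 as the token secret (any slice works as long as the lengths are right).

[root@k8s-master01 kubernetes]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '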

# 9ab0c753b92c2e59c210679f53f5e4bf

# token-id: 9ab0c7

# token-secret: c210679f53f5e4bf
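A bootstrap token has the form <token-id>.<token-secret>: a 6-character ID and a 16-character secret, both from [a-z0-9], and the ID also appears in the Secret name bootstrap-token-<token-id>. A sketch that slices the random hex string exactly as above and validates the result; the function names are hypothetical:

```shell
# gen_bootstrap_token: print "<6-char id>.<16-char secret>" from 16 random bytes.
gen_bootstrap_token() {
  hex=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')   # 32 lowercase hex chars
  id=$(printf '%s' "$hex" | cut -c1-6)
  secret=$(printf '%s' "$hex" | cut -c17-32)
  echo "$id.$secret"
}

# is_valid_bootstrap_token TOKEN: succeeds if TOKEN matches [a-z0-9]{6}.[a-z0-9]{16}
is_valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example: is_valid_bootstrap_token 9ab0c7.c210679f53f5e4bf && echo valid
```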

# Set a cluster entry. Note: in a non-HA cluster, change 192.168.10.11:8443 to master01's address and the apiserver port (default 6443)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.10.11:8443 \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# set-credentials: set a user entry

kubectl config set-credentials tls-bootstrap-token-user \

--token=9ab0c7.c210679f53f5e4bf \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# set-context: set a context entry

kubectl config set-context tls-bootstrap-token-user@kubernetes \

--cluster=kubernetes \

--user=tls-bootstrap-token-user \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# use-context: set the default context

kubectl config use-context tls-bootstrap-token-user@kubernetes \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# Set up the admin kubeconfig so kubectl can access the cluster (one master node is enough; configure other nodes as needed)

mkdir -p /root/.kube

cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

# Create bootstrap.secret.yaml

# Note: metadata.name must match the token above (bootstrap-token-<token-id>)

# Note: stringData.token-id must match the token above

# Note: stringData.token-secret must match the token above

vi bootstrap.secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-9ab0c7
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: 9ab0c7
  token-secret: c210679f53f5e4bf
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver
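Because metadata.name, token-id and token-secret all have to agree with the token in bootstrap-kubelet.kubeconfig, the Secret part of the file can be generated from the token string instead of being edited by hand. This is a sketch; `render_bootstrap_secret` is a hypothetical helper that only prints the Secret document, so the RBAC objects above still have to be appended after a `---` separator.

```shell
# Hypothetical helper: print the Secret document for a "<token-id>.<token-secret>" token.
render_bootstrap_secret() {
  # Refuse anything that does not look like a bootstrap token.
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || {
    echo "invalid bootstrap token: $1" >&2
    return 1
  }
  id=${1%%.*}      # part before the dot -> token-id
  secret=${1#*.}   # part after the dot  -> token-secret
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-${id}
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: ${id}
  token-secret: ${secret}
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
EOF
}
```

For example, `render_bootstrap_secret 9ab0c7.c210679f53f5e4bf > bootstrap.secret.yaml` produces the first document shown above.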

# Create the bootstrap-related resources

kubectl create -f bootstrap.secret.yaml

Continue only if the cluster status can be queried normally; otherwise, troubleshoot the Kubernetes components first.

[root@k8s-master01 yaml]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
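This check can be scripted by reading the `kubectl get cs` table and failing if any row's STATUS column is not `Healthy`. This is a minimal sketch; `all_components_healthy` is a hypothetical name, and it assumes the default table layout shown above (a header line first, STATUS in the second column).

```shell
# Hypothetical helper: read "kubectl get cs" output on stdin and exit non-zero
# if the table is empty or any component row is not Healthy.
all_components_healthy() {
  awk 'NR > 1 && $2 != "Healthy" { bad = 1; print "not healthy: " $1 > "/dev/stderr" }
       END { if (NR < 2 || bad) exit 1 }'
}
```

For example, `kubectl get cs | all_components_healthy && echo "all components healthy"`.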

Copy the bootstrap kubeconfig (which embeds the CA certificate) to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01 ; do

for FILE in bootstrap-kubelet.kubeconfig; do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

done

done
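The copy loop can be turned into a function that keeps going when one node is unreachable and reports the failures at the end. This is a sketch; `copy_bootstrap_kubeconfig` and the `DRY_RUN` switch are hypothetical, and it assumes the passwordless SSH configured earlier.

```shell
# Hypothetical helper: copy bootstrap-kubelet.kubeconfig to every node given as an
# argument, remembering failures instead of stopping at the first one.
# With DRY_RUN set, the scp commands are only printed.
copy_bootstrap_kubeconfig() {
  file=/etc/kubernetes/bootstrap-kubelet.kubeconfig
  failed=""
  for node in "$@"; do
    if [ -n "$DRY_RUN" ]; then
      echo scp "$file" "$node:$file"
    elif ! scp "$file" "$node:$file"; then
      failed="$failed $node"
    fi
  done
  if [ -n "$failed" ]; then
    echo "copy failed on:$failed" >&2
    return 1
  fi
}
```

For example, `copy_bootstrap_kubeconfig k8s-master02 k8s-master03 k8s-node01`.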

8. Install Calico (Calico v3.8.9)

# Choose the Calico version according to the Kubernetes changelog

# Kubernetes 1.18.20 changelog: kubernetes/CHANGELOG-1.18.md at master · kubernetes/kubernetes · GitHub

# Calico 3.8.9 release notes page: Release notes

# Download calico.yaml

curl -L https://docs.projectcalico.org/archive/v3.8/manifests/calico-typha.yaml -o calico.yaml

Change the image addresses in calico.yaml

To avoid failures when pulling the images Calico needs, point them at the private registry:

image: harbor.k8s.local/calico/typha:v3.8.9

image: harbor.k8s.local/calico/cni:v3.8.9

image: harbor.k8s.local/calico/cni:v3.8.9

image: harbor.k8s.local/calico/pod2daemon-flexvol:v3.8.9

image: harbor.k8s.local/calico/node:v3.8.9

image: harbor.k8s.local/calico/kube-controllers:v3.8.9
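Instead of editing each `image:` line by hand, the registry prefix can be rewritten with `sed`. This is a sketch assuming the downloaded manifest references images as `calico/<name>:<tag>` (optionally prefixed with `docker.io/`); `use_private_calico_images` is a hypothetical helper, and the images must already exist in harbor.k8s.local under the `calico` project.

```shell
# Hypothetical helper: prefix every "image: calico/..." (or "image: docker.io/calico/...")
# reference with the private registry, keeping the image name and tag.
# Lines already pointing at the registry no longer match, so re-running is harmless.
use_private_calico_images() {
  manifest=${1:-calico.yaml}
  registry=${2:-harbor.k8s.local}
  sed -i -E "s#(image:[[:space:]]*)(docker\.io/)?calico/#\1${registry}/calico/#" "$manifest"
}
```

For example, `use_private_calico_images calico.yaml harbor.k8s.local && grep 'image:' calico.yaml` applies the change and lists the result for a quick review.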

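# Change the pod CIDR

# Uncomment the CALICO_IPV4POOL_CIDR variable in the manifest (if it is commented out) and set it to the same value as the pod CIDR you chose (172.16.0.0/16 here).

vi calico.yaml

## Change it as follows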

- name: CALICO_IPV4POOL_CIDR
  value: "172.16.0.0/16"
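The same change can be made non-interactively. This is a sketch with GNU `sed`; `set_calico_pool_cidr` is a hypothetical helper that assumes the `- name: CALICO_IPV4POOL_CIDR` line (commented out with `# ` or not) is directly followed by its `value:` line, which is worth confirming in the downloaded manifest first.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR if needed and set its value.
# On the matching "name" line, strip a leading "# "; then move to the next line
# (the "value:" line), strip its "# " too and replace the quoted CIDR.
set_calico_pool_cidr() {
  manifest=$1
  cidr=$2
  sed -i -E '/^[[:space:]]*(# )?- name: CALICO_IPV4POOL_CIDR/{
s/^([[:space:]]*)# /\1/
n
s/^([[:space:]]*)# /\1/
s#value: "[^"]*"#value: "'"$cidr"'"#
}' "$manifest"
}
```

For example, `set_calico_pool_cidr calico.yaml 172.16.0.0/16 && grep -A1 CALICO_IPV4POOL_CIDR calico.yaml`.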

# Install

kubectl apply -f calico.yaml

# Check the status; continue only after all pods are Running

[root@k8s-master01 ~]# kubectl get po -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-bb9bdf898-95jnh   1/1     Running   1          4m16s
calico-node-9dr5g                         1/1     Running   0          4m17s
calico-node-x4v9s                         1/1     Running   0          4m17s
calico-node-xq2cf                         1/1     Running   0          4m17s
calico-node-xt6kd                         1/1     Running   0          4m17s
calico-typha-969cb6b85-kbzjp              1/1     Running   0          4m17s
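Whether every pod is up can also be checked mechanically: each row should show `Running` and a READY value whose two numbers match. This is a sketch; `all_pods_ready` is a hypothetical helper that assumes the default `kubectl get po` column order shown above and no pods in `Completed` state.

```shell
# Hypothetical helper: read "kubectl get po" output on stdin and exit non-zero
# unless every pod is Running with all containers ready (READY "n/n").
all_pods_ready() {
  awk 'NR == 1 { next }
       { split($2, r, "/") }
       $3 != "Running" || r[1] != r[2] { bad = 1; print "not ready: " $1 > "/dev/stderr" }
       END { if (NR < 2 || bad) exit 1 }'
}
```

For example, `kubectl get po -n kube-system | all_pods_ready`. As an alternative, `kubectl wait --for=condition=Ready pod --all -n kube-system --timeout=300s` blocks until the pods report Ready.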

Install CoreDNS (CoreDNS 1.6.9)

# Choose the CoreDNS version according to the Kubernetes changelog

# Repository: GitHub - coredns/deployment: Scripts, utilities, and examples for deploying CoreDNS.

wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/deploy.sh

chmod 755 deploy.sh

Download the CoreDNS template coredns.yaml.sed

wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

# Generate coredns.yaml; 10.96.0.10 is the 10th IP of the Kubernetes service CIDR (10.96.0.0/12)

./deploy.sh -s -i 10.96.0.10 > coredns.yaml
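The DNS address passed with `-i` is the network address of the service CIDR plus 10. The arithmetic can be checked with a small POSIX sh sketch; `ip_to_int`, `int_to_ip` and `nth_ip` are hypothetical helpers, not part of deploy.sh.

```shell
# Hypothetical helpers for IPv4 arithmetic in POSIX sh.
ip_to_int() {
  # Split the dotted quad on "." and pack the four octets into one integer.
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

int_to_ip() {
  n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_ip CIDR OFFSET: network address of CIDR plus OFFSET,
# rejecting the network address itself and the broadcast address.
nth_ip() {
  net=${1%/*}
  prefix=${1#*/}
  base=$(ip_to_int "$net")
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  size=$(( 1 << (32 - prefix) ))
  if [ "$2" -lt 1 ] || [ "$2" -ge $(( size - 1 )) ]; then
    echo "offset $2 out of range for $1" >&2
    return 1
  fi
  int_to_ip $(( (base & mask) + $2 ))
}
```

With the service CIDR of this guide, `nth_ip 10.96.0.0/12 10` prints 10.96.0.10, and `nth_ip 10.96.0.0/12 1` prints 10.96.0.1.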

## Change the image to the private registry address

vi coredns.yaml

image: coredns/coredns:1.8.6 --> image: harbor.k8s.local/coredns/coredns:1.6.9
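The image edit can also be done with `sed`. This is a sketch assuming the generated coredns.yaml has a single `image: coredns/coredns:<tag>` line; `pin_coredns_image` is a hypothetical helper, and the default target is the harbor.k8s.local/coredns/coredns:1.6.9 image used in this guide.

```shell
# Hypothetical helper: replace "image: coredns/coredns:<any tag>" with the
# private-registry image, keeping the line's indentation.
pin_coredns_image() {
  file=${1:-coredns.yaml}
  target=${2:-harbor.k8s.local/coredns/coredns:1.6.9}
  sed -i -E "s#image:[[:space:]]*coredns/coredns:[^[:space:]]+#image: ${target}#" "$file"
}
```

For example, `pin_coredns_image coredns.yaml && grep 'image:' coredns.yaml`.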

# Install coredns.yaml

kubectl create -f coredns.yaml

# Check the status

kubectl get po -n kube-system -l k8s-app=kube-dns