
1. Description of the operation

1.1 Example of the incorrect operation:

smartctl -a /dev/sdk > /dev/sdk
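The redirect in 1.1 writes the SMART report over the start of the disk itself. A minimal sketch of a guard against this kind of slip is shown below. The helper names `is_safe_output` and `smart_report` are hypothetical and are not part of smartmontools; the only check performed is whether the output path is a block device.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 when it is safe to write a report to "$1",
# and 1 when the path is a block device such as /dev/sdk.
is_safe_output() {
    local target=$1
    if [ -b "$target" ]; then
        echo "refusing to write to block device: $target" >&2
        return 1
    fi
    return 0
}

# Hypothetical wrapper: smart_report <device> <output-file>
smart_report() {
    local dev=$1 out=$2
    is_safe_output "$out" || return 1
    smartctl -a "$dev" > "$out"
}
```

With this in place, `smart_report /dev/sdk /dev/sdk` would stop before the redirect is opened, while `smart_report /dev/sdk smartctl_sdk` behaves like the correct example.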

1.2 Example of the correct operation:

[root@node01 ~]# smartctl -a /dev/sda > smartctl_sda

[root@node01 ~]# ll smartctl_sda

-rw-r----- 1 root root 5855 Jan 3 10:55 smartctl_sda

[root@node01 ~]#

The report is smaller than 6 KB (5855 bytes here), so the mistaken redirect overwrote at most the first 6 KB of the disk.
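The 6 KB figure, and the 6144 bytes inspected with hexdump later on, correspond to the 5855-byte report rounded up to whole sectors. A quick sketch of that arithmetic, assuming 512-byte sectors (`round_up_to_sector` is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Round a byte count up to a whole number of sectors (512 bytes by default).
round_up_to_sector() {
    local bytes=$1 sector=${2:-512}
    echo $(( (bytes + sector - 1) / sector * sector ))
}

round_up_to_sector 5855   # prints 6144 (12 sectors of 512 bytes)
```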

2. Disk analysis

2.1 Analysis of the raw on-disk data

The first 6 KB of the disk, viewed with hexdump, look like this:

[root@node01 ~]# hexdump -C -n 6144 /dev/sdf

00000000 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00000200 4c 41 42 45 4c 4f 4e 45 01 00 00 00 00 00 00 00 |LABELONE........|

00000210 7d 8e ab 9f 20 00 00 00 4c 56 4d 32 20 30 30 31 |}... ...LVM2 001|

00000220 5a 50 4f 62 6b 31 38 4b 72 6f 78 50 53 56 33 76 |ZPObk18KroxPSV3v|

00000230 58 57 38 31 6c 47 4a 30 4a 6e 49 6d 67 5a 55 72 |XW81lGJ0JnImgZUr|

00000240 00 60 d3 2d a3 01 00 00 00 00 10 00 00 00 00 00 |.`.-............|

00000250 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000260 00 00 00 00 00 00 00 00 00 10 00 00 00 00 00 00 |................|

00000270 00 f0 0f 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000280 00 00 00 00 00 00 00 00 02 00 00 00 01 00 00 00 |................|

00000290 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00001000 97 3c 34 41 20 4c 56 4d 32 20 78 5b 35 41 25 72 |.<4A LVM2 x[5A%r|

00001010 30 4e 2a 3e 01 00 00 00 00 10 00 00 00 00 00 00 |0N*>............|

00001020 00 f0 0f 00 00 00 00 00 00 78 00 00 00 00 00 00 |.........x......|

00001030 77 06 00 00 00 00 00 00 12 0b 4c 15 00 00 00 00 |w.........L.....|

00001040 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00001200 63 65 70 68 2d 32 34 63 30 32 37 62 66 2d 35 61 |ceph-24c027bf-5a|

00001210 62 61 2d 34 39 65 61 2d 38 66 33 62 2d 37 36 62 |ba-49ea-8f3b-76b|

00001220 66 64 32 31 37 37 34 65 33 20 7b 0a 69 64 20 3d |fd21774e3 {.id =|

00001230 20 22 35 42 72 59 44 74 2d 69 6b 67 71 2d 6e 41 | "5BrYDt-ikgq-nA|

00001240 41 36 2d 71 4a 70 50 2d 4e 4c 4c 79 2d 47 4a 76 |A6-qJpP-NLLy-GJv|

00001250 45 2d 65 4c 45 33 4a 4f 22 0a 73 65 71 6e 6f 20 |E-eLE3JO".seqno |

......

000017c0 61 66 36 30 38 32 61 62 2d 63 33 32 36 2d 34 35 |af6082ab-c326-45|

000017d0 39 36 2d 62 64 31 39 2d 30 33 64 36 63 62 33 39 |96-bd19-03d6cb39|

000017e0 65 30 35 36 20 7b 0a 69 64 20 3d 20 22 58 33 69 |e056 {.id = "X3i|

000017f0 50 54 45 2d 50 73 54 7a 2d 47 42 7a 53 2d 48 46 |PTE-PsTz-GBzS-HF|

00001800

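From the dump above, the layout is:

- 0x0 ~ 0x200 (0 B ~ 512 B): empty

- 0x200 ~ 0x290 (512 B ~ 656 B): LVM label (LABELONE) and PV header, including the PV ID

- 0x290 ~ 0x1000 (656 B ~ 4096 B): empty

- 0x1000 ~ 0x1040 (4096 B ~ 4160 B): LVM metadata area header

- 0x1040 ~ 0x1200 (4160 B ~ 4608 B): empty

- 0x1200 ~ 0x1800 (4608 B ~ 6144 B): LVM metadata records (text)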
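The fields in the 0x200 ~ 0x290 range can also be decoded instead of read by eye. The sketch below is an illustration under stated assumptions: the label sits in sector 1, the PV has exactly one data area, and the field offsets follow the LVM2 text-format layout as it appears in the dump above. The helper names `read_le` and `decode_pv_label` are hypothetical. It works on a device or on an image file of the first few sectors.

```shell
#!/usr/bin/env bash
# Read an unsigned little-endian integer of <nbytes> bytes at byte <offset>.
read_le() {
    local file=$1 offset=$2 nbytes=$3 hex="" byte
    for byte in $(od -An -v -tx1 -j "$offset" -N "$nbytes" "$file"); do
        hex="$byte$hex"   # prepend each byte: little-endian to big-endian
    done
    echo $(( 0x$hex ))
}

# Print the label and PV header fields stored at 0x200 (sector 1).
decode_pv_label() {
    local src=$1
    echo "label_id=$(dd if="$src" bs=1 skip=512 count=8 2>/dev/null)"   # 0x200
    echo "pv_uuid=$(dd if="$src" bs=1 skip=544 count=32 2>/dev/null)"   # 0x220
    echo "device_size=$(read_le "$src" 576 8)"                          # 0x240
    echo "data_start=$(read_le "$src" 584 8)"                           # 0x248
    echo "mda_start=$(read_le "$src" 616 8)"                            # 0x268
    echo "mda_size=$(read_le "$src" 624 8)"                             # 0x270
}
```

Applied to the bytes in the dump, this yields device_size=1800360124416 (3516328368 sectors of 512 bytes), data_start=1048576, mda_start=4096 and mda_size=1044480 (0xff000), so the metadata area runs from 4 KiB up to the start of the data area at 1 MiB.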

2.2 LVM structure

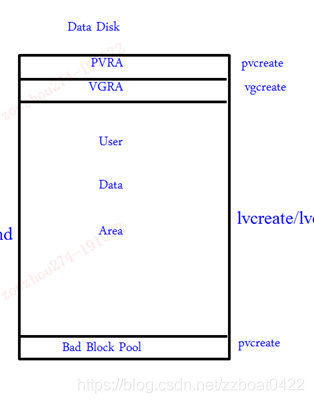

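According to LVM layout material found online, a data disk (non-boot disk) is structured as follows, and the information at the head of the disk is the PVRA: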

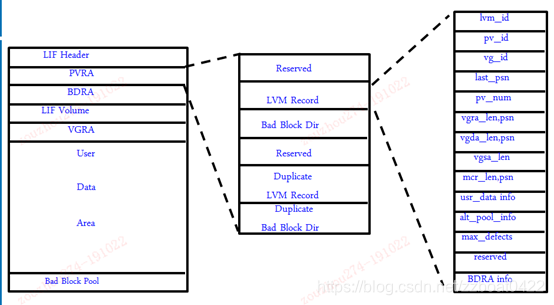

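The PVRA layout (a non-boot disk has no LIF header) is as follows:

LVM record in the PVRA: 128 sectors.

In other words, at least the first 128 × 512 B = 64 KB of the disk holds LVM records. Note that PVRA and LIF header are terms from HP-UX LVM, so these sizes are only a rough guide for Linux LVM2; the header fields in the hexdump in 2.1 are the more reliable source for this particular disk.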

2.3 LVM metadata analysis

The metadata recorded for the actual disk is as follows:

[root@node01 ~]# cat /etc/lvm/backup/ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3

# Generated by LVM2 version 2.02.171(2)-RHEL7 (2017-05-03): Mon Sep 9 17:11:59 2019

contents = "Text Format Volume Group"

version = 1

description = "Created *after* executing '/usr/sbin/lvchange --addtag ceph.journal_device=/dev/sdd2 /dev/ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3/osd-data-af6082ab-c326-4596-bd19-03d6cb39e056'"

creation_host = "node01" # Linux node01 3.10.0-862.14.4.el7.x86_64 #1 SMP Fri Sep 21 09:07:21 UTC 2018 x86_64

creation_time = 1568020319 # Mon Sep 9 17:11:59 2019

ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 {

id = "5BrYDt-ikgq-nAA6-qJpP-NLLy-GJvE-eLE3JO"

seqno = 19

format = "lvm2" # informational

status = ["RESIZEABLE", "READ", "WRITE"]

flags = []

extent_size = 8192 # 4 Megabytes

max_lv = 0

max_pv = 0

metadata_copies = 0

physical_volumes {

pv0 {

id = "ZPObk1-8Kro-xPSV-3vXW-81lG-J0Jn-ImgZUr"

device = "/dev/sdf" # Hint only

status = ["ALLOCATABLE"]

flags = []

dev_size = 3516328368 # 1.63742 Terabytes

pe_start = 2048

pe_count = 429239 # 1.63742 Terabytes

}

}

The metadata (VG name, VG id, LV name) matches the binary data seen with hexdump between 0x1200 and 0x1800.

2.4 Summary

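Closer analysis shows that the region following the header at 0x1000 (the text records begin at 4608 B, 0x1200) stores the change history of this disk's LVM metadata: whenever the metadata changes in any way, a complete copy of the metadata is written to this region again. The header fields at 0x1018 and 0x1020 in the dump record the region as starting at 0x1000 with a size of 0xff000, so it extends to 1 MiB, which matches pe_start = 2048 sectors and lies well beyond the 6 KB that was overwritten.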
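One way to see these accumulated copies is to pull out every `seqno = N` line from the head of the disk. The sketch below assumes the records are stored as plain text, as in the dump in 2.1, and that 1 MiB (the default here) covers the metadata area; `list_metadata_seqnos` is a hypothetical helper name, and `grep -a` is assumed to be available (GNU or BSD grep).

```shell
#!/usr/bin/env bash
# List the seqno of every metadata copy found in the first <bytes> of <src>.
# <src> may be a block device or an image file.
list_metadata_seqnos() {
    local src=$1 bytes=${2:-1048576}
    head -c "$bytes" "$src" \
        | LC_ALL=C grep -a -o 'seqno = [0-9][0-9]*' \
        | awk '{print $3}'
}
```

Running `list_metadata_seqnos /dev/sdf` on a healthy PV should print one number per stored copy, ending with the seqno found in /etc/lvm/backup (19 for the VG shown in 2.3) as long as the area has not wrapped around.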

3. Recovery procedure

3.1 Simulating the failure

- Check the state of the /dev/sdf disk:

[root@node01 ~]# pvs -v

Wiping internal VG cache

Wiping cache of LVM-capable devices

PV VG Fmt Attr PSize PFree DevSize PV UUID

/dev/sde ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 lvm2 a-- <1.64t 0 <1.64t z2Pm3j-5eLO-KV1N-RbfI-4Ixs-pkpa-fiqJQN

/dev/sdf ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 lvm2 a-- <1.64t 0 <1.64t ZPObk1-8Kro-xPSV-3vXW-81lG-J0Jn-ImgZUr

/dev/sdg ceph-f9157059-3ef4-4442-b634-4c4677021b6b lvm2 a-- <1.64t 0 <1.64t NRrLYu-FBSU-p5f0-S6hW-DIt1-Xz04-omFHcJ

/dev/sdh ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 lvm2 a-- <1.64t 0 <1.64t mXMip8-e14j-4fOe-krM9-L9Wf-egQy-xJTfOn

/dev/sdi ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 lvm2 a-- <1.64t 0 <1.64t edR6a9-Uwoc-NGZc-f1SB-c8jq-8lPi-rEwZNj

/dev/sdj ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f lvm2 a-- <1.64t 0 <1.64t fwwooH-c3KG-V068-ujbE-8x4S-HiC4-GXDTe7

/dev/sdk ceph-94a84a85-5806-4a5b-95b6-37316b682962 lvm2 a-- <1.64t 0 <1.64t eaE8Rq-E8PW-Wxa9-gzH7-fMmk-8OQ8-NYkYkp

/dev/sdw3 rootvg lvm2 a-- 276.46g 0 276.46g 70AQcc-bGYp-Vx4Y-9fAP-6bHV-6LRC-DDRtO5

[root@node01 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

osd-data-6aed5334-3ba8-4cf4-b036-eaca7b6566ad ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 -wi-ao---- <1.64t

osd-data-af6082ab-c326-4596-bd19-03d6cb39e056 ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 -wi-ao---- <1.64t

osd-data-2bcd7aac-8710-4c9d-a0d5-182ff43baf57 ceph-94a84a85-5806-4a5b-95b6-37316b682962 -wi-ao---- <1.64t

osd-data-003c05ea-8589-4658-96d8-d7543fe2325b ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 -wi-ao---- <1.64t

osd-data-7f770457-5372-4c32-b786-a87396f5204c ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 -wi-ao---- <1.64t

osd-data-b913142a-565e-487b-86e8-b76f509d2a77 ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f -wi-ao---- <1.64t

osd-data-f58531ef-8d69-4904-9459-e80135fc1dca ceph-f9157059-3ef4-4442-b634-4c4677021b6b -wi-ao---- <1.64t

lv_root rootvg -wi-ao---- 276.46g

[root@node01 ~]#

sde 8:64 0 1.7T 0 disk

└─ceph--aa80e19b--e6e3--4093--b8c8--0a9d5e7c6765-osd--data--7f770457--5372--4c32--b786--a87396f5204c 253:1 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-1

sdf 8:80 0 1.7T 0 disk

└─ceph--24c027bf--5aba--49ea--8f3b--76bfd21774e3-osd--data--af6082ab--c326--4596--bd19--03d6cb39e056 253:7 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-5

sdg 8:96 0 1.7T 0 disk

└─ceph--f9157059--3ef4--4442--b634--4c4677021b6b-osd--data--f58531ef--8d69--4904--9459--e80135fc1dca 253:5 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-8

sdh 8:112 0 1.7T 0 disk

└─ceph--987f5adf--b742--4265--bf89--bcd7539b6dd2-osd--data--003c05ea--8589--4658--96d8--d7543fe2325b 253:4 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-11

sdi 8:128 0 1.7T 0 disk

└─ceph--23c42eea--06ef--46ef--8f5b--1a8b91d2e868-osd--data--6aed5334--3ba8--4cf4--b036--eaca7b6566ad 253:2 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-14

sdj 8:144 0 1.7T 0 disk

└─ceph--ecd3417c--4b5d--472b--bc0b--d48bf5e4176f-osd--data--b913142a--565e--487b--86e8--b76f509d2a77 253:6 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-19

sdk 8:160 0 1.7T 0 disk

└─ceph--94a84a85--5806--4a5b--95b6--37316b682962-osd--data--2bcd7aac--8710--4c9d--a0d5--182ff43baf57 253:3 0 1.7T 0 lvm /var/lib/ceph/osd/ceph-20
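Before overwriting anything, even in a simulation, it may be worth saving the head of the disk so the experiment can be undone byte for byte. A minimal sketch, assuming 512-byte sectors and that the first 2048 sectors (1 MiB, the pe_start shown in 2.3) cover the label and metadata area; `backup_disk_head` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Copy the first <sectors> 512-byte sectors of <src> into <dest>.
# Refuses to overwrite an existing <dest>.
backup_disk_head() {
    local src=$1 dest=$2 sectors=${3:-2048}
    if [ -e "$dest" ]; then
        echo "refusing to overwrite existing file: $dest" >&2
        return 1
    fi
    dd if="$src" of="$dest" bs=512 count="$sectors" 2>/dev/null
}
```

For example, `backup_disk_head /dev/sdf /root/sdf-head.img` would keep a copy of the first 1 MiB of /dev/sdf in a regular file on another filesystem.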

- Use dd to overwrite the first 6 KB of /dev/sdf:

[root@node01 ~]# dd if=/dev/zero of=/dev/sdf bs=1k count=6

6+0 records in

6+0 records out

6144 bytes (6.1 kB) copied, 0.000142057 s, 43.3 MB/s

[root@node01 ~]# hexdump -C -n 6144 /dev/sdf

00000000 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00001800

[root@node01 ~]#

- Check again: /dev/sdf no longer appears in the LVM listings:

[root@node01 ~]# pvs -v

Wiping internal VG cache

Wiping cache of LVM-capable devices

PV VG Fmt Attr PSize PFree DevSize PV UUID

/dev/sde ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 lvm2 a-- <1.64t 0 <1.64t z2Pm3j-5eLO-KV1N-RbfI-4Ixs-pkpa-fiqJQN

/dev/sdg ceph-f9157059-3ef4-4442-b634-4c4677021b6b lvm2 a-- <1.64t 0 <1.64t NRrLYu-FBSU-p5f0-S6hW-DIt1-Xz04-omFHcJ

/dev/sdh ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 lvm2 a-- <1.64t 0 <1.64t mXMip8-e14j-4fOe-krM9-L9Wf-egQy-xJTfOn

/dev/sdi ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 lvm2 a-- <1.64t 0 <1.64t edR6a9-Uwoc-NGZc-f1SB-c8jq-8lPi-rEwZNj

/dev/sdj ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f lvm2 a-- <1.64t 0 <1.64t fwwooH-c3KG-V068-ujbE-8x4S-HiC4-GXDTe7

/dev/sdk ceph-94a84a85-5806-4a5b-95b6-37316b682962 lvm2 a-- <1.64t 0 <1.64t eaE8Rq-E8PW-Wxa9-gzH7-fMmk-8OQ8-NYkYkp

/dev/sdw3 rootvg lvm2 a-- 276.46g 0 276.46g 70AQcc-bGYp-Vx4Y-9fAP-6bHV-6LRC-DDRtO5

[root@node01 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

osd-data-6aed5334-3ba8-4cf4-b036-eaca7b6566ad ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 -wi-ao---- <1.64t

osd-data-2bcd7aac-8710-4c9d-a0d5-182ff43baf57 ceph-94a84a85-5806-4a5b-95b6-37316b682962 -wi-ao---- <1.64t

osd-data-003c05ea-8589-4658-96d8-d7543fe2325b ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 -wi-ao---- <1.64t

osd-data-7f770457-5372-4c32-b786-a87396f5204c ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 -wi-ao---- <1.64t

osd-data-b913142a-565e-487b-86e8-b76f509d2a77 ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f -wi-ao---- <1.64t

osd-data-f58531ef-8d69-4904-9459-e80135fc1dca ceph-f9157059-3ef4-4442-b634-4c4677021b6b -wi-ao---- <1.64t

lv_root rootvg -wi-ao---- 276.46g

[root@node01 ~]#

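3.2 Online repair

- Stop the OSD service and unmount its directory (note: with bluestore, the directory cannot simply be mounted again after it is unmounted; a way to remount it is still being looked for):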

[root@node01 ~]# systemctl stop ceph-osd@5.service

[root@node01 ~]# umount /var/lib/ceph/osd/ceph-5/

[root@node01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rootvg-lv_root 272G 3.6G 255G 2% /

devtmpfs 63G 0 63G 0% /dev

tmpfs 63G 0 63G 0% /dev/shm

tmpfs 63G 9.7M 63G 1% /run

tmpfs 63G 0 63G 0% /sys/fs/cgroup

/dev/sdw2 976M 152M 758M 17% /boot

/dev/sdw1 1022M 9.8M 1013M 1% /boot/efi

/dev/dm-1 1.7T 434G 1.3T 26% /var/lib/ceph/osd/ceph-1

/dev/dm-4 1.7T 425G 1.3T 26% /var/lib/ceph/osd/ceph-11

/dev/dm-6 1.7T 433G 1.3T 26% /var/lib/ceph/osd/ceph-19

/dev/dm-5 1.7T 428G 1.3T 26% /var/lib/ceph/osd/ceph-8

/dev/dm-2 1.7T 434G 1.3T 26% /var/lib/ceph/osd/ceph-14

/dev/dm-3 1.7T 427G 1.3T 26% /var/lib/ceph/osd/ceph-20

tmpfs 13G 0 13G 0% /run/user/0

[root@node01 ~]#

- Remove the volume's stale device-mapper entry at the system level:

[root@node01 ~]# dmsetup ls

rootvg-lv_root (253:0)

ceph--ecd3417c--4b5d--472b--bc0b--d48bf5e4176f-osd--data--b913142a--565e--487b--86e8--b76f509d2a77 (253:6)

ceph--23c42eea--06ef--46ef--8f5b--1a8b91d2e868-osd--data--6aed5334--3ba8--4cf4--b036--eaca7b6566ad (253:2)

ceph--987f5adf--b742--4265--bf89--bcd7539b6dd2-osd--data--003c05ea--8589--4658--96d8--d7543fe2325b (253:4)

ceph--aa80e19b--e6e3--4093--b8c8--0a9d5e7c6765-osd--data--7f770457--5372--4c32--b786--a87396f5204c (253:1)

ceph--94a84a85--5806--4a5b--95b6--37316b682962-osd--data--2bcd7aac--8710--4c9d--a0d5--182ff43baf57 (253:3)

ceph--f9157059--3ef4--4442--b634--4c4677021b6b-osd--data--f58531ef--8d69--4904--9459--e80135fc1dca (253:5)

ceph--24c027bf--5aba--49ea--8f3b--76bfd21774e3-osd--data--af6082ab--c326--4596--bd19--03d6cb39e056 (253:7)

[root@node01 ~]# dmsetup remove ceph--24c027bf--5aba--49ea--8f3b--76bfd21774e3-osd--data--af6082ab--c326--4596--bd19--03d6cb39e056

[root@node01 ~]# dmsetup ls

rootvg-lv_root (253:0)

ceph--ecd3417c--4b5d--472b--bc0b--d48bf5e4176f-osd--data--b913142a--565e--487b--86e8--b76f509d2a77 (253:6)

ceph--23c42eea--06ef--46ef--8f5b--1a8b91d2e868-osd--data--6aed5334--3ba8--4cf4--b036--eaca7b6566ad (253:2)

ceph--987f5adf--b742--4265--bf89--bcd7539b6dd2-osd--data--003c05ea--8589--4658--96d8--d7543fe2325b (253:4)

ceph--aa80e19b--e6e3--4093--b8c8--0a9d5e7c6765-osd--data--7f770457--5372--4c32--b786--a87396f5204c (253:1)

ceph--94a84a85--5806--4a5b--95b6--37316b682962-osd--data--2bcd7aac--8710--4c9d--a0d5--182ff43baf57 (253:3)

ceph--f9157059--3ef4--4442--b634--4c4677021b6b-osd--data--f58531ef--8d69--4904--9459--e80135fc1dca (253:5)
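The long name passed to `dmsetup remove` follows a fixed pattern: every hyphen inside the VG name and the LV name is doubled, and the two names are then joined with a single hyphen. A small sketch that builds the name from its two parts (`dm_name` is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Build the device-mapper name for <vg>/<lv>: hyphens inside each name are
# doubled, then the two names are joined with a single hyphen.
dm_name() {
    local vg=$1 lv=$2
    printf '%s-%s\n' \
        "$(printf '%s' "$vg" | sed 's/-/--/g')" \
        "$(printf '%s' "$lv" | sed 's/-/--/g')"
}

dm_name rootvg lv_root   # prints rootvg-lv_root
```

This avoids copying the name by hand from the `dmsetup ls` output, where the entries differ only in their UUID parts.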

- Recreate the PV from the LVM backup file and the PV ID:

[root@node01 ~]# pvcreate --force --uuid "ZPObk1-8Kro-xPSV-3vXW-81lG-J0Jn-ImgZUr" --restorefile /etc/lvm/backup/ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 /dev/sdf

Couldn't find device with uuid ZPObk1-8Kro-xPSV-3vXW-81lG-J0Jn-ImgZUr.

Physical volume "/dev/sdf" successfully created.

[root@node01 ~]# pvs -v

Wiping internal VG cache

Wiping cache of LVM-capable devices

PV VG Fmt Attr PSize PFree DevSize PV UUID

/dev/sde ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 lvm2 a-- <1.64t 0 <1.64t z2Pm3j-5eLO-KV1N-RbfI-4Ixs-pkpa-fiqJQN

/dev/sdf lvm2 --- <1.64t <1.64t <1.64t ZPObk1-8Kro-xPSV-3vXW-81lG-J0Jn-ImgZUr

/dev/sdg ceph-f9157059-3ef4-4442-b634-4c4677021b6b lvm2 a-- <1.64t 0 <1.64t NRrLYu-FBSU-p5f0-S6hW-DIt1-Xz04-omFHcJ

/dev/sdh ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 lvm2 a-- <1.64t 0 <1.64t mXMip8-e14j-4fOe-krM9-L9Wf-egQy-xJTfOn

/dev/sdi ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 lvm2 a-- <1.64t 0 <1.64t edR6a9-Uwoc-NGZc-f1SB-c8jq-8lPi-rEwZNj

/dev/sdj ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f lvm2 a-- <1.64t 0 <1.64t fwwooH-c3KG-V068-ujbE-8x4S-HiC4-GXDTe7

/dev/sdk ceph-94a84a85-5806-4a5b-95b6-37316b682962 lvm2 a-- <1.64t 0 <1.64t eaE8Rq-E8PW-Wxa9-gzH7-fMmk-8OQ8-NYkYkp

/dev/sdw3 rootvg lvm2 a-- 276.46g 0 276.46g 70AQcc-bGYp-Vx4Y-9fAP-6bHV-6LRC-DDRtO5

[root@node01 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

osd-data-6aed5334-3ba8-4cf4-b036-eaca7b6566ad ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 -wi-ao---- <1.64t

osd-data-2bcd7aac-8710-4c9d-a0d5-182ff43baf57 ceph-94a84a85-5806-4a5b-95b6-37316b682962 -wi-ao---- <1.64t

osd-data-003c05ea-8589-4658-96d8-d7543fe2325b ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 -wi-ao---- <1.64t

osd-data-7f770457-5372-4c32-b786-a87396f5204c ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 -wi-ao---- <1.64t

osd-data-b913142a-565e-487b-86e8-b76f509d2a77 ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f -wi-ao---- <1.64t

osd-data-f58531ef-8d69-4904-9459-e80135fc1dca ceph-f9157059-3ef4-4442-b634-4c4677021b6b -wi-ao---- <1.64t

lv_root rootvg -wi-ao---- 276.46g
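The value given to `--uuid` has to match the `id` recorded for the PV in the backup file; the hexdump in 2.1 shows the same ID without hyphens. A sketch for looking it up rather than copying it by hand is shown below. It assumes the backup file has the layout shown in 2.3, with `id` preceding `device` inside each pvN block, and `pv_uuid_from_backup` is a hypothetical helper name. The `device` field is marked "Hint only" in the file, so the result should be checked if device names may have changed since the backup was written.

```shell
#!/usr/bin/env bash
# Print the PV id recorded for <device> in an LVM backup file.
pv_uuid_from_backup() {
    local backup=$1 device=$2
    awk -v dev="$device" '
        # Remember the most recent id = "..." line (VG, PV or LV level).
        $1 == "id" && $2 == "=" { gsub(/"/, "", $3); last_id = $3 }
        # The id seen just before the matching device line belongs to that PV.
        $1 == "device" && $2 == "=" {
            gsub(/"/, "", $3)
            if ($3 == dev) { print last_id; exit }
        }
    ' "$backup"
}
```

For the disk in this article, `pv_uuid_from_backup /etc/lvm/backup/ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 /dev/sdf` would be expected to print the ID used in the pvcreate command above.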

- Restore the VG metadata:

[root@node01 ~]# vgcfgrestore ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3

Restored volume group ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3

[root@node01 ~]# pvs -v

Wiping internal VG cache

Wiping cache of LVM-capable devices

PV VG Fmt Attr PSize PFree DevSize PV UUID

/dev/sde ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 lvm2 a-- <1.64t 0 <1.64t z2Pm3j-5eLO-KV1N-RbfI-4Ixs-pkpa-fiqJQN

/dev/sdf ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 lvm2 a-- <1.64t 0 <1.64t ZPObk1-8Kro-xPSV-3vXW-81lG-J0Jn-ImgZUr

/dev/sdg ceph-f9157059-3ef4-4442-b634-4c4677021b6b lvm2 a-- <1.64t 0 <1.64t NRrLYu-FBSU-p5f0-S6hW-DIt1-Xz04-omFHcJ

/dev/sdh ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 lvm2 a-- <1.64t 0 <1.64t mXMip8-e14j-4fOe-krM9-L9Wf-egQy-xJTfOn

/dev/sdi ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 lvm2 a-- <1.64t 0 <1.64t edR6a9-Uwoc-NGZc-f1SB-c8jq-8lPi-rEwZNj

/dev/sdj ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f lvm2 a-- <1.64t 0 <1.64t fwwooH-c3KG-V068-ujbE-8x4S-HiC4-GXDTe7

/dev/sdk ceph-94a84a85-5806-4a5b-95b6-37316b682962 lvm2 a-- <1.64t 0 <1.64t eaE8Rq-E8PW-Wxa9-gzH7-fMmk-8OQ8-NYkYkp

/dev/sdw3 rootvg lvm2 a-- 276.46g 0 276.46g 70AQcc-bGYp-Vx4Y-9fAP-6bHV-6LRC-DDRtO5

[root@node01 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

osd-data-6aed5334-3ba8-4cf4-b036-eaca7b6566ad ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 -wi-ao---- <1.64t

osd-data-af6082ab-c326-4596-bd19-03d6cb39e056 ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 -wi------- <1.64t

osd-data-2bcd7aac-8710-4c9d-a0d5-182ff43baf57 ceph-94a84a85-5806-4a5b-95b6-37316b682962 -wi-ao---- <1.64t

osd-data-003c05ea-8589-4658-96d8-d7543fe2325b ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 -wi-ao---- <1.64t

osd-data-7f770457-5372-4c32-b786-a87396f5204c ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 -wi-ao---- <1.64t

osd-data-b913142a-565e-487b-86e8-b76f509d2a77 ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f -wi-ao---- <1.64t

osd-data-f58531ef-8d69-4904-9459-e80135fc1dca ceph-f9157059-3ef4-4442-b634-4c4677021b6b -wi-ao---- <1.64t

lv_root rootvg -wi-ao---- 276.46g

- Reactivate the LV (lvchange can also be used for this; see the first reference link in the appendix):

[root@node01 ~]# vgchange -ay ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3

1 logical volume(s) in volume group "ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3" now active

[root@node01 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

osd-data-6aed5334-3ba8-4cf4-b036-eaca7b6566ad ceph-23c42eea-06ef-46ef-8f5b-1a8b91d2e868 -wi-ao---- <1.64t

osd-data-af6082ab-c326-4596-bd19-03d6cb39e056 ceph-24c027bf-5aba-49ea-8f3b-76bfd21774e3 -wi-a----- <1.64t

osd-data-2bcd7aac-8710-4c9d-a0d5-182ff43baf57 ceph-94a84a85-5806-4a5b-95b6-37316b682962 -wi-ao---- <1.64t

osd-data-003c05ea-8589-4658-96d8-d7543fe2325b ceph-987f5adf-b742-4265-bf89-bcd7539b6dd2 -wi-ao---- <1.64t

osd-data-7f770457-5372-4c32-b786-a87396f5204c ceph-aa80e19b-e6e3-4093-b8c8-0a9d5e7c6765 -wi-ao---- <1.64t

osd-data-b913142a-565e-487b-86e8-b76f509d2a77 ceph-ecd3417c-4b5d-472b-bc0b-d48bf5e4176f -wi-ao---- <1.64t

osd-data-f58531ef-8d69-4904-9459-e80135fc1dca ceph-f9157059-3ef4-4442-b634-4c4677021b6b -wi-ao---- <1.64t

lv_root rootvg -wi-ao---- 276.46g

- Mount the directory and start the OSD service:

[root@node01 ~]# mount /dev/dm-7 /var/lib/ceph/osd/ceph-5

[root@node01 ~]# systemctl start ceph-osd@5

[root@node01 ~]# systemctl status ceph-osd@5

● ceph-osd@5.service - Ceph object storage daemon osd.5

Loaded: loaded (/usr/lib/systemd/system/ceph-osd@.service; enabled-runtime; vendor preset: disabled)

Active: active (running) since Thu 2020-01-02 18:07:09 CST; 4s ago

Process: 5671 ExecStartPre=/usr/lib/ceph/ceph-osd-prestart.sh --cluster ${CLUSTER} --id %i (code=exited, status=0/SUCCESS)

Main PID: 5676 (ceph-osd)

CGroup: /system.slice/system-ceph\x2dosd.slice/ceph-osd@5.service

└─5676 /usr/bin/ceph-osd -f --cluster ceph --id 5 --setuser ceph --setgroup ceph

Jan 02 18:07:09 node01 systemd[1]: Starting Ceph object storage daemon osd.5...

Jan 02 18:07:09 node01 systemd[1]: Started Ceph object storage daemon osd.5.

Jan 02 18:07:09 node01 ceph-osd[5676]: starting osd.5 at - osd_data /var/lib/ceph/osd/ceph-5 /var/lib/ceph/osd/ceph-5/journal

Jan 02 18:07:10 node01 ceph-osd[5676]: 2020-01-02 18:07:10.117127 7fbf7ff35d40 -1 journal do_read_entry(11751694336): bad header magic

Jan 02 18:07:10 node01 ceph-osd[5676]: 2020-01-02 18:07:10.117138 7fbf7ff35d40 -1 journal do_read_entry(11751694336): bad header magic

[root@node01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 34.36853 root default

-3 11.45618 host node03

0 hdd 1.63660 osd.0 up 1.00000 1.00000

3 hdd 1.63660 osd.3 up 1.00000 1.00000

6 hdd 1.63660 osd.6 up 1.00000 1.00000

9 hdd 1.63660 osd.9 up 1.00000 1.00000

12 hdd 1.63660 osd.12 up 1.00000 1.00000

15 hdd 1.63660 osd.15 up 1.00000 1.00000

17 hdd 1.63660 osd.17 up 1.00000 1.00000

-5 11.45618 host node02

2 hdd 1.63660 osd.2 up 1.00000 1.00000

4 hdd 1.63660 osd.4 up 1.00000 1.00000

7 hdd 1.63660 osd.7 up 1.00000 1.00000

10 hdd 1.63660 osd.10 up 1.00000 1.00000

13 hdd 1.63660 osd.13 up 1.00000 1.00000

16 hdd 1.63660 osd.16 up 1.00000 1.00000

18 hdd 1.63660 osd.18 up 1.00000 1.00000

-7 11.45618 host node01

1 hdd 1.63660 osd.1 up 1.00000 1.00000

5 hdd 1.63660 osd.5 up 1.00000 1.00000

8 hdd 1.63660 osd.8 up 1.00000 1.00000

11 hdd 1.63660 osd.11 up 1.00000 1.00000

14 hdd 1.63660 osd.14 up 1.00000 1.00000

19 hdd 1.63660 osd.19 up 1.00000 1.00000

20 hdd 1.63660 osd.20 up 1.00000 1.00000

- Reboot the server and verify that the volume is mounted automatically.

4. Appendix

Reference links:
