Chapter 3 of Mastering OpenCV with Practical Computer Vision Projects describes several ways to refine feature-point matching.

1. OpenCV provides two matchers:

• Brute-force matcher (cv::BFMatcher)

• Flann-based matcher (cv::FlannBasedMatcher)

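The brute-force matcher takes each descriptor in the first set and finds the closest descriptor in the second set by comparing it against every candidate (exhaustive search).

The FLANN-based matcher uses a fast approximate nearest-neighbour search instead, implemented by the third-party FLANN library.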

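One of the two sets is usually called the train set because it corresponds to the pattern (template) image; the other is called the query set because it comes from the image in which we search for the pattern. In the OpenCV API the query descriptors are passed first and the train descriptors second, so each query descriptor is matched against the train set.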
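As a rough illustration of what brute-force matching does, here is a minimal sketch in plain C++ without OpenCV. The `Descriptor` and `Match` types and the `squaredDistance` and `bruteForceMatch` functions are hypothetical stand-ins invented for this example, not OpenCV names, and the squared Euclidean distance is an assumed metric.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-ins for a descriptor row and for cv::DMatch.
typedef std::vector<float> Descriptor;

struct Match {
    int queryIdx;    // index into the query set
    int trainIdx;    // index into the train set, -1 if the train set is empty
    float distance;  // squared Euclidean distance between the two descriptors
};

// Squared L2 distance; assumes both descriptors have the same length.
float squaredDistance(const Descriptor& a, const Descriptor& b) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// For every query descriptor, scan the whole train set and keep the closest one.
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best = { static_cast<int>(q), -1, std::numeric_limits<float>::max() };
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = squaredDistance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(t);
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

The cost grows with the product of the two set sizes, which is why an approximate search becomes attractive for large train sets.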

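To speed up detection, you can train a matcher before calling the matching functions. The training stage is where cv::FlannBasedMatcher is optimized: it builds index trees for the train descriptors, which pays off when matching against large amounts of data (for example, searching for a matching image in a dataset of hundreds of images). cv::BFMatcher does nothing at this stage; it simply keeps the train descriptors in memory.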

2. During matching, the following cv::DescriptorMatcher methods can be used:

- Find the single best match for each descriptor: void match(const Mat& queryDescriptors, vector<DMatch>& matches, const vector<Mat>& masks=vector<Mat>() );

- Find the K nearest matches for each descriptor: void knnMatch(const Mat& queryDescriptors, vector<vector<DMatch> >& matches, int k, const vector<Mat>& masks=vector<Mat>(), bool compactResult=false );

- Find the matches whose descriptor distance is within a given radius: void radiusMatch(const Mat& queryDescriptors, vector<vector<DMatch> >& matches, float maxDistance, const vector<Mat>& masks=vector<Mat>(), bool compactResult=false );
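To make the difference between a K-nearest search and a radius search concrete, the following sketch ranks candidates for one query value. It is not the OpenCV implementation: descriptors are reduced to single floats purely to keep it short, and `Candidate`, `rankedCandidates`, `kNearest` and `withinRadius` are hypothetical names. Treating a candidate exactly at the radius as accepted is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// (distance, train index) pairs; a hypothetical stand-in for cv::DMatch.
typedef std::pair<float, int> Candidate;

// All train candidates for one query value, sorted by increasing distance.
std::vector<Candidate> rankedCandidates(float query, const std::vector<float>& train) {
    std::vector<Candidate> ranked;
    for (std::size_t t = 0; t < train.size(); ++t)
        ranked.push_back(Candidate(std::fabs(query - train[t]), static_cast<int>(t)));
    std::sort(ranked.begin(), ranked.end());
    return ranked;
}

// K-nearest style: keep the k closest candidates (fewer if the train set is small).
std::vector<Candidate> kNearest(float query, const std::vector<float>& train, std::size_t k) {
    std::vector<Candidate> ranked = rankedCandidates(query, train);
    if (ranked.size() > k) ranked.resize(k);
    return ranked;
}

// Radius style: keep every candidate whose distance does not exceed maxDistance.
std::vector<Candidate> withinRadius(float query, const std::vector<float>& train, float maxDistance) {
    std::vector<Candidate> ranked = rankedCandidates(query, train);
    std::vector<Candidate> kept;
    for (std::size_t i = 0; i < ranked.size(); ++i)
        if (ranked[i].first <= maxDistance) kept.push_back(ranked[i]);
    return kept;
}
```

A K-nearest search always returns a fixed number of candidates per query when enough train descriptors exist, while a radius search may return none or many; this is why both return a list of matches per query descriptor.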

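3. The matching result contains many wrong matches, which fall into two kinds:

- False-positive matches: points that do not correspond are reported as a match (these are the ones we can work on and try to eliminate)

- False-negative matches: points that do correspond are not reported as a match (nothing can be done about these, because the matching algorithm has already rejected them)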

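Two filters are commonly used to remove false positives:

- Cross-match filter: match the train descriptors against the query set and the query descriptors against the train set, and keep only the matches that appear in both directions

- Ratio test: run a KNN match with K=2 and keep the best match only when its distance is clearly smaller than that of the second-best match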
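The two filters can be sketched as follows, operating on match lists that have already been computed. This is an illustration under assumptions rather than the book's code: `Match` is a hypothetical stand-in for cv::DMatch, `crossCheck` and `ratioTest` are invented names, and `maxRatio` is a free parameter whose value you would tune for your data.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Cross-match filter: keep a query->train match only if the train->query
// matching picked the same pair in the opposite direction.
std::vector<Match> crossCheck(const std::vector<Match>& queryToTrain,
                              const std::vector<Match>& trainToQuery) {
    std::vector<Match> kept;
    for (std::size_t i = 0; i < queryToTrain.size(); ++i) {
        const Match& forward = queryToTrain[i];
        for (std::size_t j = 0; j < trainToQuery.size(); ++j) {
            const Match& backward = trainToQuery[j];
            // In the backward list the roles are swapped: its queryIdx is a train index.
            if (backward.queryIdx == forward.trainIdx &&
                backward.trainIdx == forward.queryIdx) {
                kept.push_back(forward);
                break;
            }
        }
    }
    return kept;
}

// Ratio test: knnMatches[i] holds the nearest matches of query descriptor i,
// best first (the layout a K=2 nearest-neighbour search would produce).
// Keep the best match only when it is clearly closer than the runner-up.
std::vector<Match> ratioTest(const std::vector<std::vector<Match> >& knnMatches,
                             float maxRatio) {
    std::vector<Match> kept;
    for (std::size_t i = 0; i < knnMatches.size(); ++i) {
        if (knnMatches[i].size() < 2) continue;  // no runner-up to compare against
        const Match& best = knnMatches[i][0];
        const Match& second = knnMatches[i][1];
        if (best.distance < maxRatio * second.distance) kept.push_back(best);
    }
    return kept;
}
```

A smaller `maxRatio` rejects more ambiguous matches at the cost of discarding some correct ones.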

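To improve the matching accuracy further, random sample consensus (RANSAC) can be applied. The complete program at the end of this post does so through findHomography with the CV_RANSAC flag and keeps only the matches marked as inliers.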
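The RANSAC idea itself can be shown on a much simpler model than a homography. The toy sketch below assumes the two point sets differ only by a translation, so a single correspondence is enough to propose a model; a homography needs four. `Pt`, `inliersFor` and `ransacTranslation` are hypothetical names, and the tolerance, iteration count and seed are arbitrary illustration parameters.

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Pt { float x, y; };

// Indices i for which dst[i] lies within `tolerance` of src[i] + (dx, dy).
std::vector<std::size_t> inliersFor(const std::vector<Pt>& src, const std::vector<Pt>& dst,
                                    float dx, float dy, float tolerance) {
    std::vector<std::size_t> inliers;
    for (std::size_t i = 0; i < src.size(); ++i) {
        const float ex = dst[i].x - (src[i].x + dx);
        const float ey = dst[i].y - (src[i].y + dy);
        if (std::sqrt(ex * ex + ey * ey) <= tolerance) inliers.push_back(i);
    }
    return inliers;
}

// RANSAC for a pure translation: one correspondence is a minimal sample.
// Assumes src and dst have the same, non-zero size.
std::vector<std::size_t> ransacTranslation(const std::vector<Pt>& src, const std::vector<Pt>& dst,
                                           float tolerance, int iterations, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, src.size() - 1);
    std::vector<std::size_t> best;
    for (int it = 0; it < iterations; ++it) {
        const std::size_t s = pick(rng);        // 1. draw a random minimal sample
        const float dx = dst[s].x - src[s].x;   // 2. fit the model to that sample
        const float dy = dst[s].y - src[s].y;
        std::vector<std::size_t> inliers = inliersFor(src, dst, dx, dy, tolerance);  // 3. score it
        if (inliers.size() > best.size()) best = inliers;  // 4. keep the largest consensus set
    }
    return best;
}
```

The returned indices play the same role as an inlier mask: correspondences outside the consensus set are treated as mismatches and dropped.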

#include "opencv2/core/core.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/nonfree/nonfree.hpp"

#include <cstdio>

#include <cstdlib>

#include <iostream>

using namespace cv;

using namespace std;

int main( )

{

// [0] Change the console colours (Windows only)

system("color 1F");

// [1] Load the source images

Mat srcImage1 = imread( "1.jpg", 1 );

Mat srcImage2 = imread( "2.jpg", 1 );

Mat copysrcImage1=srcImage1.clone();

Mat copysrcImage2=srcImage2.clone();

if( !srcImage1.data || !srcImage2.data )

{ printf("Failed to read the images; check that the files passed to imread exist in the working directory.\n"); return -1; }

// [2] Detect keypoints with the SURF detector

int minHessian = 400; // Hessian threshold used by SURF

SurfFeatureDetector detector( minHessian ); // SURF feature detector

vector<KeyPoint> keypoints_object, keypoints_scene; // keypoints of the object image and of the scene image

// [3] Run detect() and store the SURF keypoints in the vectors

detector.detect( srcImage1, keypoints_object );

detector.detect( srcImage2, keypoints_scene );

// [4] Compute the descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_object, descriptors_scene;

extractor.compute( srcImage1, keypoints_object, descriptors_object );

extractor.compute( srcImage2, keypoints_scene, descriptors_scene );

// [5] Match the descriptors with the FLANN-based matcher

FlannBasedMatcher matcher;

vector< DMatch > matches;

matcher.match( descriptors_object, descriptors_scene, matches );

double max_dist = 0; double min_dist = 100; // largest and smallest match distance seen so far

// [6] Find the largest and smallest distance among the matches

for( size_t i = 0; i < matches.size(); i++ )

{

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

printf(">Max dist : %f \n", max_dist );

printf(">Min dist : %f \n", min_dist );

// [7] Keep the matches whose distance is below 3*min_dist

std::vector< DMatch > good_matches;

for( size_t i = 0; i < matches.size(); i++ )

{

if( matches[i].distance < 3*min_dist )

{

good_matches.push_back( matches[i]);

}

}

// Draw the retained matches

Mat img_matches;

drawMatches( srcImage1, keypoints_object, srcImage2, keypoints_scene,

good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

// Matched point coordinates in the object image and in the scene image

vector<Point2f> obj;

vector<Point2f> scene;

// Collect the keypoint locations of the good matches

for( unsigned int i = 0; i < good_matches.size(); i++ )

{

obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );

scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );

}

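// findHomography needs at least four point pairs

if( good_matches.size() < 4 )

{ printf("Not enough good matches to estimate a homography.\n"); return -1; }

vector<unsigned char> listpoints; // RANSAC inlier mask: non-zero marks an inlier

//Mat H = findHomography( obj, scene, CV_RANSAC ); // estimate the homography

Mat H = findHomography( obj, scene, CV_RANSAC, 3, listpoints ); // estimate the homography with RANSAC (reprojection threshold 3) and fill the inlier mask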

std::vector< DMatch > goodgood_matches;

for (size_t i=0;i<listpoints.size();i++)

{

if ((int)listpoints[i])

{

goodgood_matches.push_back(good_matches[i]);

cout<<(int)listpoints[i]<<endl;

}

}

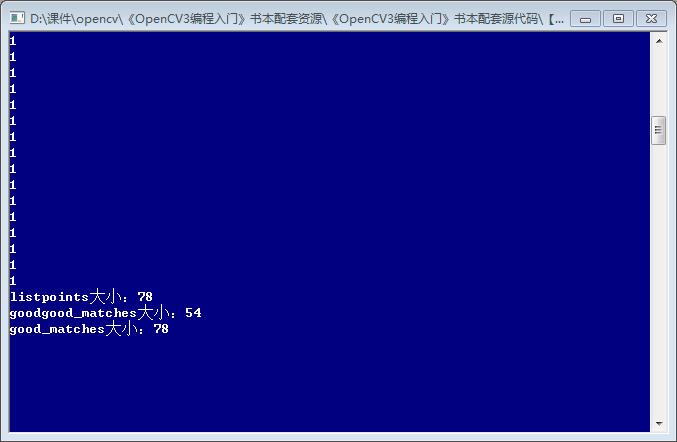

cout<<"listpoints size: "<<listpoints.size()<<endl;

cout<<"goodgood_matches size: "<<goodgood_matches.size()<<endl;

cout<<"good_matches size: "<<good_matches.size()<<endl;

Mat Homgimg_matches;

drawMatches( copysrcImage1, keypoints_object, copysrcImage2, keypoints_scene,

goodgood_matches, Homgimg_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

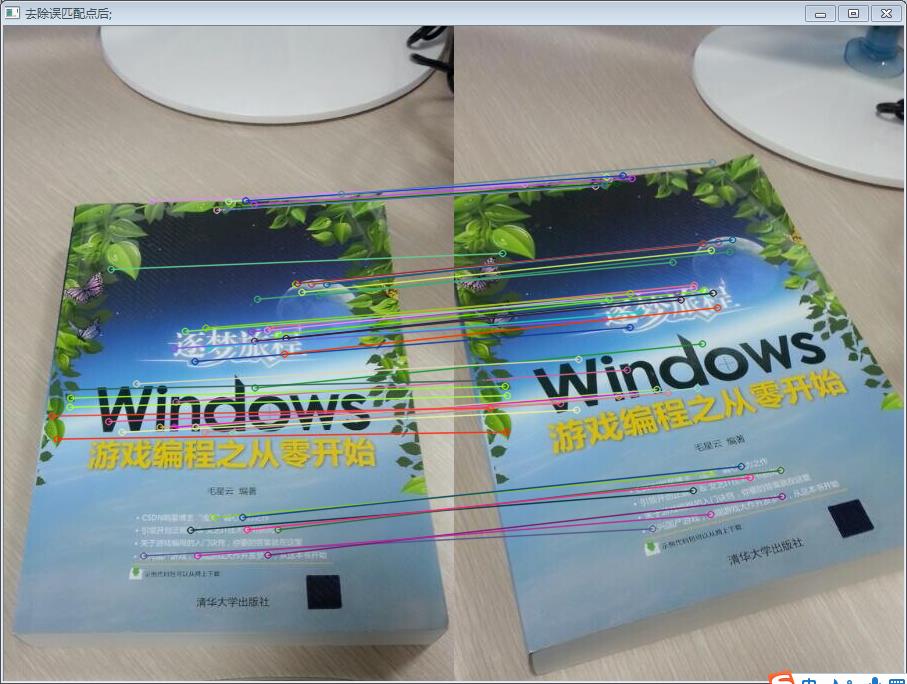

imshow("After removing mismatches",Homgimg_matches);

// Corners of the object image

vector<Point2f> obj_corners(4);

obj_corners[0] = cvPoint(0,0); obj_corners[1] = cvPoint( srcImage1.cols, 0 );

obj_corners[2] = cvPoint( srcImage1.cols, srcImage1.rows ); obj_corners[3] = cvPoint( 0, srcImage1.rows );

vector<Point2f> scene_corners(4);

// Project the corners into the scene with the homography

perspectiveTransform( obj_corners, scene_corners, H);

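// Draw lines between the projected corners; the x offset accounts for the object image on the left of img_matches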

line( img_matches, scene_corners[0] + Point2f( static_cast<float>(srcImage1.cols), 0), scene_corners[1] + Point2f( static_cast<float>(srcImage1.cols), 0), Scalar(255, 0, 123), 4 );

line( img_matches, scene_corners[1] + Point2f( static_cast<float>(srcImage1.cols), 0), scene_corners[2] + Point2f( static_cast<float>(srcImage1.cols), 0), Scalar( 255, 0, 123), 4 );

line( img_matches, scene_corners[2] + Point2f( static_cast<float>(srcImage1.cols), 0), scene_corners[3] + Point2f( static_cast<float>(srcImage1.cols), 0), Scalar( 255, 0, 123), 4 );

line( img_matches, scene_corners[3] + Point2f( static_cast<float>(srcImage1.cols), 0), scene_corners[0] + Point2f( static_cast<float>(srcImage1.cols), 0), Scalar( 255, 0, 123), 4 );

// Show the final result

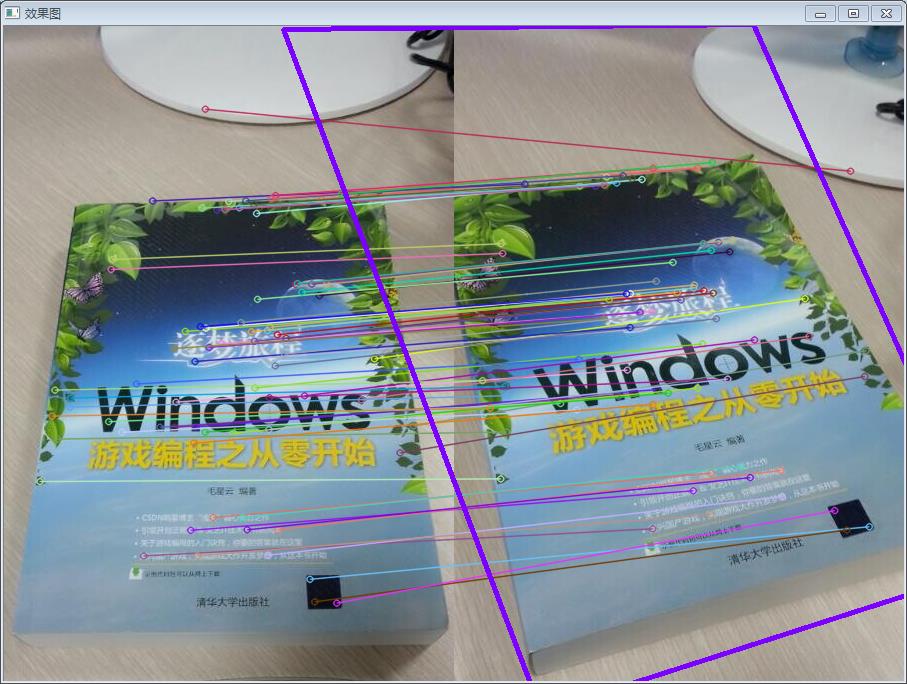

imshow( "Result", img_matches );

waitKey(0);

return 0;

}
