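Preface:

This setup uses MySQL (MariaDB) master-slave replication, Redis as the cache for frequently accessed data, Mycat for read/write splitting, and ZooKeeper + Kafka for data collection, all running on three virtual machines.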

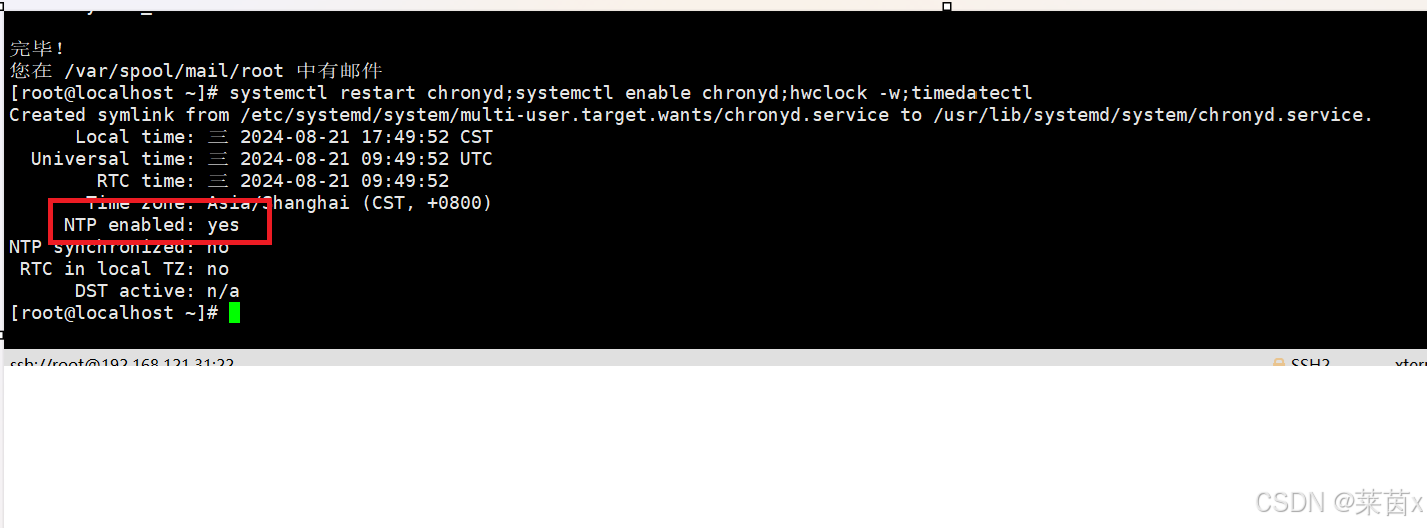

Disable the firewall and SELinux on every node, and synchronize their clocks.

192.168.121.11 node1 mycat.example.com

192.168.121.21 node2 db1

192.168.121.31 node3 db2

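One more machine, 192.168.121.200, serves as the package repository. Most of the work is done on the Mycat node, 192.168.121.11.

#curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-6.10.repo

#yum -y install epel-release //install the EPEL repository

#yum -y install bash-completion //install tab completion

[root@localhost ~]# nmcli connection modify ens33 connection.autoconnect yes //bring the connection up automatically at boot

[root@localhost ~]# yum -y install chrony

[root@localhost ~]# systemctl restart chronyd;systemctl enable chronyd;hwclock -w;timedatectl

The last command restarts chronyd, writes the system time to the hardware clock, and prints the resulting time settings.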

I. Preparing the environment

1. Initial setup on the first node, 192.168.121.11

[root@localhost ~]# hostnamectl set-hostname mycat

[root@localhost ~]# bash

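Set up SSH key authentication between all three nodes

[root@mycat ~]# ssh-keygen //press Enter at every prompt; type yes when ssh-copy-id asks to confirm a host key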

[root@mycat ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.121.21

[root@mycat ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.121.31

2. Setup on 192.168.121.21

[root@localhost ~]# hostnamectl set-hostname db1

[root@localhost ~]# bash

[root@db1 ~]# ssh-keygen

[root@db1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.121.31

[root@db1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.121.11

3. Setup on 192.168.121.31

[root@localhost ~]# hostnamectl set-hostname db2

[root@localhost ~]# bash

[root@db2 ~]# ssh-keygen

[root@db2 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.121.11

[root@db2 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.121.21
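
The key exchange above follows one pattern: every node copies its public key to the other two. A minimal sketch of that pattern is below. The function name `peer_copy_commands` and the `NODES` variable are made up for illustration; the function only prints the commands (a dry run), so nothing is executed until the output is piped to a shell.

```shell
# Hypothetical helper: list the ssh-copy-id commands one node has to run
# so that it can log in to every other node without a password.
NODES="192.168.121.11 192.168.121.21 192.168.121.31"

peer_copy_commands() {
    local self="$1" ip
    for ip in $NODES; do
        # Skip the node's own address; keys only go to the other two hosts.
        if [ "$ip" = "$self" ]; then
            continue
        fi
        echo "ssh-copy-id -i ~/.ssh/id_rsa.pub root@$ip"
    done
}

# Dry run for the mycat node; append "| sh" to actually run the commands.
peer_copy_commands 192.168.121.11
```

Running `ssh-keygen` once per node is still required before any of the printed commands will work.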

4. Set up the package repository on host 192.168.121.200, so that the packages needed for this lab can be downloaded from it

[root@localhost ~]# yum -y install httpd

[root@localhost ~]# cd /var/www/html/

[root@localhost html]# mkdir project4

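Use fz to upload the files into /var/www/html/project4.

The main purpose is to provide the mariadb packages. Installing them from an online repository works just as well; I use a local repository simply because I felt like doing it the hard way.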

[root@localhost ~]# systemctl restart httpd

[root@localhost ~]# systemctl enable httpd

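Add the local repository on each of the three nodes

[root@mycat ~]# vim /etc/yum.repos.d/CentOS-Base.repo

Press G to jump to the end of the file, then lowercase o to open a new line below it, and append: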

[mariadb]

name=aa

baseurl=http://192.168.121.200/project4/gpmall-repo

enabled=1

gpgcheck=0
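
As an alternative to editing the file in vim, the same stanza can be appended from the shell. This is only a sketch: the function name `add_local_repo` is hypothetical, and the guard against adding the stanza twice goes beyond the original steps.

```shell
# Append the [mariadb] stanza to a repo file unless it is already there.
add_local_repo() {
    local repo_file="$1"
    # A second run must not create a duplicate section.
    if grep -q '^\[mariadb\]' "$repo_file" 2>/dev/null; then
        return 0
    fi
    cat >> "$repo_file" <<'EOF'
[mariadb]
name=aa
baseurl=http://192.168.121.200/project4/gpmall-repo
enabled=1
gpgcheck=0
EOF
}

# Example (as root): add_local_repo /etc/yum.repos.d/CentOS-Base.repo
```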

[root@mycat ~]# yum -y install mariadb mariadb-server

Copy the repo file to the other two nodes with scp

[root@mycat yum.repos.d]# scp CentOS-Base.repo root@192.168.121.21:/etc/yum.repos.d

[root@mycat yum.repos.d]# scp CentOS-Base.repo root@192.168.121.31:/etc/yum.repos.d

Install java and java-devel on all three nodes

# yum -y install java java-devel

#java -version

[root@db1 ~]# java -version

openjdk version "1.8.0_412" //the version is printed, so the installation succeeded

II. Master-slave replication between 192.168.121.21 and 192.168.121.31

[root@db1 ~]# yum -y install mariadb mariadb-server

[root@db2 ~]# yum -y install mariadb mariadb-server

[root@db2 ~]# systemctl restart mariadb;systemctl enable mariadb

[root@db1 ~]# systemctl restart mariadb;systemctl enable mariadb

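[root@db2 ~]# mysql_secure_installation //initialize the database

Press Enter at the first prompt, then answer y, y, n, y, and set the root password to 123.

[root@db1 ~]# mysql_secure_installation

[root@db1 ~]# vim /etc/my.cnf

Enable binary logging. The settings for MySQL and MariaDB differ; what follows is the MariaDB master-slave configuration, and both machines need it.

Each server must have a unique server_id; here the master uses 20 and the slave uses 30.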

[mysqld]

log_bin=mysql-bin

binlog_ignore_db=mysql

server_id=20

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

symbolic-links=0

[mysqld_safe]

log-error=/var/log/mariadb/mariadb.log

pid-file=/var/run/mariadb/mariadb.pid

[root@db2 ~]# vim /etc/my.cnf

[root@db2 ~]# cat /etc/my.cnf

[mysqld]

log_bin=mysql-bin

binlog_ignore_db=mysql

server_id=30

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

symbolic-links=0

[mysqld_safe]

log-error=/var/log/mariadb/mariadb.log

pid-file=/var/run/mariadb/mariadb.pid
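
The two my.cnf files above are identical except for server_id. A small sketch that makes this explicit is shown below; `render_my_cnf` is a hypothetical helper, not part of MariaDB, and it simply prints the configuration from this section for a given id.

```shell
# Print the my.cnf content used in this section for a given server_id.
render_my_cnf() {
    local server_id="$1"
    cat <<EOF
[mysqld]
log_bin=mysql-bin
binlog_ignore_db=mysql
server_id=${server_id}
datadir=/var/lib/mysql
socket=/var/lib/mysql/mysql.sock
symbolic-links=0

[mysqld_safe]
log-error=/var/log/mariadb/mariadb.log
pid-file=/var/run/mariadb/mariadb.pid
EOF
}

# Example: on db1 run  render_my_cnf 20 > /etc/my.cnf
#          on db2 run  render_my_cnf 30 > /etc/my.cnf
```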

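# systemctl restart mariadb //restart the service on both machines

1. Configure the MariaDB master

[root@db1 ~]# mysql -uroot -p123

MariaDB [(none)]> grant all privileges on *.* to root@'%' identified by "123";

Allow root to log in from any host; the same statement is run on the slave.

MariaDB [(none)]> grant replication slave on *.* to 'user'@'192.168.121.31' identified by '123';

Create the account the slave uses to connect. Since no hosts file has been written yet, the IP address is used.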

2. Configure the slave

[root@db2 ~]# mysql -uroot -p123

MariaDB [(none)]> grant all privileges on *.* to root@'%' identified by "123";

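Same statement as on the master.

MariaDB [(none)]> change master to master_host='192.168.121.21',master_user='user',master_password='123';

This points db2 (the slave) at db1 (the master).

MariaDB [(none)]> start slave;

MariaDB [(none)]> show slave status\G

Check the status: Slave_IO_Running and Slave_SQL_Running should both be Yes.

3. Test the master and the slave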
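
Replication is healthy when both Slave_IO_Running and Slave_SQL_Running report Yes in the status output. A minimal sketch of an automated check follows; `replication_ok` is a hypothetical function name, and it reads the text of the status output on standard input so it can be tried without a database.

```shell
# Succeed only if both replication threads report "Yes".
# Input: the text printed by SHOW SLAVE STATUS\G.
replication_ok() {
    awk -F': *' '
        /^ *Slave_IO_Running:/  { io  = $2 }
        /^ *Slave_SQL_Running:/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Example on db2:
#   mysql -uroot -p123 -e 'show slave status\G' | replication_ok && echo "replication healthy"
```

If the I/O thread stays at Connecting, the usual suspects are the replication account, the firewall, or a wrong master_host.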

[root@db1 ~]# mysql -uroot -p123

create database test;

use test

create table company(id int not null primary key,name varchar(50),addr varchar(255));

insert into company values(1,"facebook","usa");

[root@db2 ~]# mysql -uroot -p123

MariaDB [(none)]> use test;

MariaDB [test]> select * from company;

III. Read/write splitting for MariaDB with Mycat

1. Environment and software installation

[root@mycat ~]# yum -y install lrzsz

[root@mycat ~]# tar -zxvf Mycat-server-1.6-RELEASE-20161028204710-linux.tar.gz -C /usr/local/

[root@mycat ~]# echo export MYCAT_HOME=/usr/local/mycat/ >> /etc/profile

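[root@mycat ~]# source /etc/profile //load the new environment variable

Edit the user settings in server.xml under /usr/local/mycat/conf/: set the password of the root user to 123456 and the logical schema it accesses through Mycat to USERDB, then delete the last few lines shown further down.

vi /usr/local/mycat/conf/server.xml

Near the end of the configuration file, change the root user to:

<user name="root">

<property name="password">123456</property>

<property name="schemas">USERDB</property>

</user>

Then delete the following lines:

<user name="user">

<property name="password">user</property>

<property name="schemas">TESTDB</property>

<property name="readOnly">true</property>

</user>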

2. Edit the two configuration files

[root@mycat ~]# vim /usr/local/mycat/conf/schema.xml

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="USERDB" checkSQLschema="true" sqlMaxLimit="100" dataNode="dn1"></schema>

<dataNode name="dn1" dataHost="localhost1" database="test" />

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="3" dbType="mysql" dbDriver="native" writeType="0" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="hostM1" url="192.168.121.21:3306" user="root" password="123">

<readHost host="hostS1" url="192.168.121.31:3306" user="root" password="123" />

</writeHost>

</dataHost>

</mycat:schema>

[root@mycat ~]# vim /usr/local/mycat/conf/server.xml //the two files must agree on the schema name; server.xml was already edited above, so it is not repeated here

[root@mycat ~]# /bin/bash /usr/local/mycat/bin/mycat stop

[root@mycat ~]# /bin/bash /usr/local/mycat/bin/mycat start

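3. Test the read/write split

[root@mycat ~]# mysql -h127.0.0.1 -P8066 -uroot -p123456

The uppercase -P option is followed by the port number. The password is the one set for root in server.xml.

[root@mycat ~]# mysql -h127.0.0.1 -P9066 -uroot -p123456 -e 'show @@datasource;'

The -e option is followed by a statement to execute; the output lists the data sources, which shows the read/write split.

IV. Cluster setup: ZooKeeper + Kafka

[root@mycat ~]# vim /etc/hosts

Add the six lines below. They are optional, since all nodes sit on the same network; without them you simply have to use IP addresses everywhere.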

192.168.121.11 mycat

192.168.121.21 db1

192.168.121.31 db2

192.168.121.11 zookeeper1

192.168.121.21 zookeeper2

192.168.121.31 zookeeper3

[root@mycat ~]# scp /etc/hosts root@192.168.121.31:/etc/hosts

[root@mycat ~]# scp /etc/hosts root@192.168.121.21:/etc/hosts

1. Install the ZooKeeper cluster

On the mycat host

#tar -xzvf zookeeper-3.4.8.tar.gz

# cd /root/zookeeper-3.4.8/conf

[root@mycat conf]#mv zoo_sample.cfg zoo.cfg

[root@mycat conf]# vim zoo.cfg //append three lines that list the members of the ZooKeeper cluster

server.1=192.168.121.11:2888:3888

server.2=192.168.121.21:2888:3888

server.3=192.168.121.31:2888:3888

[root@mycat conf]#mkdir /tmp/zookeeper

[root@mycat conf]# echo 1 > /tmp/zookeeper/myid

[root@mycat conf]# scp -r /root/zookeeper-3.4.8 root@192.168.121.21:/root/

[root@mycat conf]# scp -r /root/zookeeper-3.4.8 root@192.168.121.31:/root/

1.2 Adjust the settings on the other two hosts

[root@db1 ~]#mkdir /tmp/zookeeper

[root@db1 ~]#echo 2 > /tmp/zookeeper/myid

[root@db2 ~]# mkdir /tmp/zookeeper //create the data directory; all three hosts need it

[root@db2 ~]# echo 3 > /tmp/zookeeper/myid
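
The number written to myid on each host has to match that host's server.N line in zoo.cfg. Under that assumption, the value can be derived from the file instead of typed by hand. In the sketch below, `myid_for_ip` is a hypothetical helper name.

```shell
# Print the id that zoo.cfg assigns to the given IP address.
myid_for_ip() {
    local cfg="$1" ip="$2" ip_re
    # Escape the dots so they match literally in the sed pattern.
    ip_re="$(printf '%s' "$ip" | sed 's/\./\\./g')"
    # Lines of interest look like: server.<id>=<ip>:2888:3888
    sed -n "s/^server\.\([0-9][0-9]*\)=${ip_re}:.*/\1/p" "$cfg"
}

# Example on db1:
#   myid_for_ip /root/zookeeper-3.4.8/conf/zoo.cfg 192.168.121.21 > /tmp/zookeeper/myid
```

An empty result means the address is not listed in zoo.cfg, which is worth checking before starting the server.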

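[root@mycat ~]# ./zookeeper-3.4.8/bin/zkServer.sh start //start ZooKeeper with its control script, run from /root

[root@db1 ~]# ./zookeeper-3.4.8/bin/zkServer.sh start

[root@db2 ~]# ./zookeeper-3.4.8/bin/zkServer.sh start //ZooKeeper is now started on all three nodes

[root@mycat ~]# ./zookeeper-3.4.8/bin/zkServer.sh status //check the status; one node reports leader (usually db2 here) and the others report follower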

2. Install Kafka, a high-throughput log collection and messaging system

[root@mycat ~]# tar -zxvf kafka_2.11-2.4.0.tgz

[root@mycat ~]# vim kafka_2.11-2.4.0/config/server.properties

broker.id=1 //matches the myid set above

listeners=PLAINTEXT://192.168.121.11:9092 //this node's own IP address

zookeeper.connect=192.168.121.11:2181,192.168.121.21:2181,192.168.121.31:2181

[root@mycat ~]# scp -r kafka_2.11-2.4.0 root@192.168.121.21:/root/

[root@mycat ~]# scp -r kafka_2.11-2.4.0 root@192.168.121.31:/root/

[root@db1 ~]#vim kafka_2.11-2.4.0/config/server.properties

broker.id=2

listeners=PLAINTEXT://192.168.121.21:9092

[root@db2 ~]# vim kafka_2.11-2.4.0/config/server.properties

broker.id=3

listeners=PLAINTEXT://192.168.121.31:9092
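
Only broker.id and listeners differ between the three copies of server.properties, so the per-node edit can be scripted. The sketch below assumes the stock file contains a `broker.id=` line and a (possibly commented-out) `listeners=` line; `set_broker` is a hypothetical helper name.

```shell
# Set broker.id and listeners in one node's server.properties.
set_broker() {
    local props="$1" id="$2" ip="$3" tmp
    tmp="$(mktemp)"
    # Rewrite the two per-node settings; every other line is copied unchanged.
    sed -e "s|^broker\.id=.*|broker.id=${id}|" \
        -e "s|^#\{0,1\}listeners=.*|listeners=PLAINTEXT://${ip}:9092|" \
        "$props" > "$tmp"
    cat "$tmp" > "$props"
    rm -f "$tmp"
}

# Example on db1:
#   set_broker /root/kafka_2.11-2.4.0/config/server.properties 2 192.168.121.21
```

The zookeeper.connect line is the same on all nodes and is already carried over by the scp step.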

[root@mycat ~]# ./kafka_2.11-2.4.0/bin/kafka-server-start.sh -daemon ./kafka_2.11-2.4.0/config/server.properties //start Kafka; do this on all three nodes

[root@db1 ~]# ./kafka_2.11-2.4.0/bin/kafka-server-start.sh -daemon ./kafka_2.11-2.4.0/config/server.properties

[root@db2 ~]# ./kafka_2.11-2.4.0/bin/kafka-server-start.sh -daemon ./kafka_2.11-2.4.0/config/server.properties

[root@mycat ~]# jps

[root@mycat ~]# ./kafka_2.11-2.4.0/bin/kafka-topics.sh --create --zookeeper 192.168.121.11:2181 --replication-factor 1 --partitions 1 --topic test

[root@db1 ~]# ./kafka_2.11-2.4.0/bin/kafka-topics.sh --list --zookeeper 192.168.121.21:2181

[root@db2 ~]# ./kafka_2.11-2.4.0/bin/kafka-topics.sh --list --zookeeper 192.168.121.31:2181

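V. Import the application database on the master and install the front-end site

The SQL dump that backs the website was downloaded beforehand.

1. Database configuration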

[root@db1 ~]# mysql -uroot -p123

MariaDB [(none)]> create database gpmall;

MariaDB [(none)]> use gpmall;

MariaDB [gpmall]> source /root/gpmall.sql

[root@mycat ~]# vim /usr/local/mycat/conf/schema.xml

[root@mycat ~]# vim /usr/local/mycat/conf/server.xml

[root@mycat ~]# /bin/bash /usr/local/mycat/bin/mycat start

[root@mycat ~]# vim /etc/hosts //add an entry for the Redis cache server

192.168.121.11 redis

[root@mycat ~]# scp /etc/hosts root@192.168.121.21:/etc/hosts

[root@mycat ~]# scp /etc/hosts root@192.168.121.31:/etc/hosts

[root@mycat ~]# yum -y install redis //install Redis first if it is not already present

[root@mycat ~]# vim /etc/redis.conf

#bind 127.0.0.1 //comment out the local-only bind

protected-mode no //turn off protected mode

[root@mycat ~]# systemctl restart redis

[root@mycat ~]# systemctl enable redis

2. Configure the hosts file

[root@mycat ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.121.11 mycat

192.168.121.21 db1

192.168.121.31 db2

192.168.121.11 zookeeper1

192.168.121.21 zookeeper2

192.168.121.31 zookeeper3

192.168.121.11 redis

192.168.121.21 mysql.mall

192.168.121.11 zk1.mall

192.168.121.21 zk2.mall

192.168.121.31 zk3.mall

192.168.121.11 kafka1.mall

192.168.121.21 kafka2.mall

192.168.121.31 kafka3.mall

192.168.121.11 redis.mall

[root@mycat ~]# scp /etc/hosts root@192.168.121.21:/etc/hosts

[root@mycat ~]# scp /etc/hosts root@192.168.121.31:/etc/hosts
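
The hosts file is edited several times in this walkthrough, which makes duplicate entries easy to introduce. One way to keep the edits repeatable is to add a name only when it is not mapped yet. The sketch below uses a hypothetical helper, `add_host`; it does not handle commented-out entries.

```shell
# Append "ip name" to a hosts file unless the name already appears in it.
add_host() {
    local file="$1" ip="$2" name="$3"
    # Look for the name in any column after the IP address.
    if ! awk -v n="$name" '
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        echo "$ip $name" >> "$file"
    fi
}

# Example:
#   add_host /etc/hosts 192.168.121.11 redis.mall
#   add_host /etc/hosts 192.168.121.21 mysql.mall
```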

3. Install nginx and run the jar files

Upload the four jar files to db1, the node acting as master

Run all four in the background

[root@db1 ~]# nohup java -jar user-provider-0.0.1-SNAPSHOT.jar &

[root@db1 ~]# nohup java -jar shopping-provider-0.0.1-SNAPSHOT.jar &

[root@db1 ~]# nohup java -jar gpmall-user-0.0.1-SNAPSHOT.jar &

[root@db1 ~]# nohup java -jar gpmall-shopping-0.0.1-SNAPSHOT.jar &

Run them a few more times if necessary.

4. Run the jar files on both the master and the slave

#nohup java -jar user-provider-0.0.1-SNAPSHOT.jar &

#nohup java -jar shopping-provider-0.0.1-SNAPSHOT.jar &

#nohup java -jar gpmall-user-0.0.1-SNAPSHOT.jar &

#nohup java -jar gpmall-shopping-0.0.1-SNAPSHOT.jar &

[root@db2 ~]# jobs -l

[root@mycat ~]# yum -y install nginx

[root@mycat ~]# cat /etc/nginx/conf.d/default.conf

upstream myuser {

server 192.168.121.21:8082;

server 192.168.121.31:8082;

ip_hash;

}

upstream myshopping {

server 192.168.121.21:8081;

server 192.168.121.31:8081;

ip_hash;

}

upstream mycashier {

server 192.168.121.21:8083;

server 192.168.121.31:8083;

ip_hash;

}

server {

location /user {

proxy_pass http://myuser;

}

location /shopping {

proxy_pass http://myshopping;

}

location /cashier {

proxy_pass http://mycashier;

}

}
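
The three upstream blocks in this configuration differ only in their name and port. A sketch that generates one block from those two values is shown below; `upstream_block` is a hypothetical helper, and the two backend addresses are the db1 and db2 hosts used throughout.

```shell
# Print an nginx upstream block for one service, balanced by client IP.
upstream_block() {
    local name="$1" port="$2"
    printf 'upstream %s {\n' "$name"
    printf '    server 192.168.121.21:%s;\n' "$port"
    printf '    server 192.168.121.31:%s;\n' "$port"
    printf '    ip_hash;\n}\n'
}

# Print the block for the user service; redirect to a file to use it.
upstream_block myuser 8082
```

After changing the configuration, `nginx -t` checks the syntax before the service is restarted.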

[root@mycat ~]# systemctl restart nginx
