先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7

深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

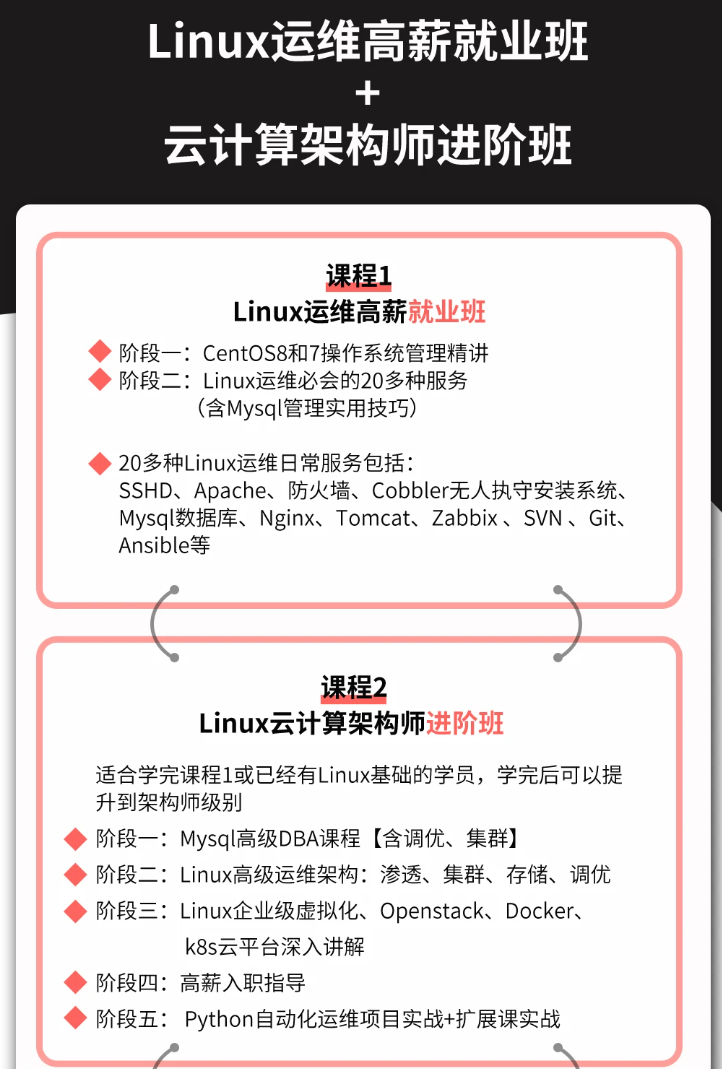

因此收集整理了一份《2024年最新Linux运维全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上运维知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

如果你需要这些资料,可以添加V获取:vip1024b (备注运维)

正文

at /root/3rd/boost_1_62_0/boost/asio/basic_deadline_timer.hpp:458

#6 0x0000000000418921 in main () at test_deadline_timer.cpp:20

As noted earlier, basic\_deadline\_timer does very little on its own; it forwards the call to the deadline\_timer\_service interface:

// basic_deadline_timer

void wait()

{

asio::error_code ec;

this->get_service().wait(this->get_implementation(), ec);

asio::detail::throw_error(ec, "wait");

}

// deadline_timer_service

void wait(implementation_type& impl, asio::error_code& ec)

{

time_type now = Time_Traits::now();

ec = asio::error_code();

while (Time_Traits::less_than(now, impl.expiry) && !ec)

{

this->do_wait(Time_Traits::to_posix_duration(

Time_Traits::subtract(impl.expiry, now)), ec);

now = Time_Traits::now();

}

}

// deadline_timer_service: wait() calls do_wait()

template <typename Duration>

void do_wait(const Duration& timeout, asio::error_code& ec)

{

#if defined(ASIO_WINDOWS_RUNTIME)

std::this_thread::sleep_for(

std::chrono::seconds(timeout.total_seconds())

+ std::chrono::microseconds(timeout.total_microseconds()));

ec = asio::error_code();

#else // defined(ASIO_WINDOWS_RUNTIME)

::timeval tv;

tv.tv_sec = timeout.total_seconds();

tv.tv_usec = timeout.total_microseconds() % 1000000;

socket_ops::select(0, 0, 0, 0, &tv, ec);

#endif // defined(ASIO_WINDOWS_RUNTIME)

}

// The queue of timers.

timer_queue<Time_Traits> timer_queue_;

// The object that schedules and executes timers. Usually a reactor.

timer_scheduler& scheduler_;

};

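The source is quite readable. It follows the same pattern as waiting on a pthread\_cond\_t condition variable: block inside a loop that re-checks the condition after every wake-up.

On Linux the blocking wait relies on the timeout of select; no descriptors are passed to select at all.

When ASIO\_WINDOWS\_RUNTIME is defined (the Windows Runtime build), std::this\_thread::sleep\_for is used instead.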
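The loop described above can be sketched with the standard library and POSIX `select` alone. This is a minimal sketch, not Asio's code: `sleep_with_select` and `blocking_wait_until` are hypothetical names, and unlike `deadline_timer_service::wait` the loop here keeps waiting after an interrupted `select` instead of stopping on the error.

```cpp
#include <cassert>
#include <chrono>
#include <sys/select.h>

typedef std::chrono::steady_clock steady;

// Sleep for at most `d` by calling select() with no descriptor sets.
// Returns select()'s result: 0 on timeout, -1 on error (for example EINTR).
inline int sleep_with_select(std::chrono::microseconds d) {
  assert(d.count() >= 0);  // precondition: non-negative duration
  timeval tv;
  tv.tv_sec = static_cast<time_t>(d.count() / 1000000);
  tv.tv_usec = static_cast<suseconds_t>(d.count() % 1000000);
  return ::select(0, nullptr, nullptr, nullptr, &tv);
}

// Block until `deadline`, re-checking the clock after every wake-up.
inline void blocking_wait_until(steady::time_point deadline) {
  steady::time_point now = steady::now();
  while (now < deadline) {
    sleep_with_select(
        std::chrono::duration_cast<std::chrono::microseconds>(deadline - now));
    now = steady::now();  // the wake-up may have been early, so check again
  }
}
```

Re-reading the clock inside the loop is what makes an early wake-up harmless: the function only returns once the deadline has really passed.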

#### Asynchronous call: async\_wait

The asynchronous path is more involved: a callback has to be passed in, and epoll\_reactor has to cooperate.

Start with the async\_wait function in basic\_deadline\_timer:

template <typename WaitHandler>

BOOST_ASIO_INITFN_RESULT_TYPE(WaitHandler,

void (boost::system::error_code))

async_wait(WaitHandler&& handler)

{

// If you get an error on the following line it means that your handler does

// not meet the documented type requirements for a WaitHandler.

BOOST_ASIO_WAIT_HANDLER_CHECK(WaitHandler, handler) type_check;

async_completion<WaitHandler,

void (boost::system::error_code)> init(handler);

this->get_service().async_wait(this->get_implementation(),

init.completion_handler);

return init.result.get();

}

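It looks long, but the logic is simple. The BOOST\_ASIO\_WAIT\_HANDLER\_CHECK macro verifies at compile time that the WaitHandler passed in meets the requirements. The actual code is somewhat involved and is not reproduced here; roughly, it checks whether certain static\_cast conversions are well-formed and uses static assertions to report a failure.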
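The idea behind such a check can be sketched as follows. This is not the Asio macro: `is_wait_handler` and `checked_async_wait` are hypothetical names, `std::error_code` stands in for `boost::system::error_code`, and the demo completes immediately instead of scheduling anything.

```cpp
#include <cassert>
#include <system_error>
#include <type_traits>
#include <utility>

// Selected when `h(ec)` is a well-formed expression for an lvalue handler h.
template <typename Handler>
auto is_wait_handler(int) -> decltype(
    std::declval<Handler&>()(std::declval<const std::error_code&>()),
    std::true_type());

// Fallback for every other type.
template <typename Handler>
std::false_type is_wait_handler(...);

template <typename Handler>
void checked_async_wait(Handler&& handler) {
  typedef typename std::decay<Handler>::type handler_type;
  // Fails to compile, with a readable message, for unsuitable handlers.
  static_assert(decltype(is_wait_handler<handler_type>(0))::value,
                "WaitHandler must be callable with an error_code");
  handler(std::error_code());  // demo only: complete at once with success
}
```

Passing something like an `int` to `checked_async_wait` stops compilation at the `static_assert`, which is much easier to read than an error from deep inside the library.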

The body of the function still has to call async\_wait on deadline\_timer\_service. There is also the async\_completion step; here is the official comment on the async\_completion constructor:

/**

* The constructor creates the concrete completion handler and makes the link

* between the handler and the asynchronous result.

*/

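In other words, it processes the callback that was passed in and binds it to an asynchronous result, much like the future mechanism.

What puzzled me, though, is that the return statement returns init.result.get(), and this get() function is actually empty: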

template <typename Handler>

class async_result

{

public:

typedef void type;

explicit async_result(Handler&) {}

type get() {}

};

I may be misunderstanding something, but I could not work out what this is for.
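One possible explanation, offered as an assumption rather than a confirmed reading of the Asio source: the primary template covers plain callbacks, where there is nothing to hand back, while a specialization for another handler type can define a non-void `type`. Returning a void expression from a function whose return type is void is legal C++, so a single function body can serve both cases. In the sketch below, `async_result_sketch`, `flag_handler` and `initiate_wait` are all hypothetical names.

```cpp
#include <cassert>
#include <memory>
#include <system_error>

// Primary template: an ordinary callback yields no return value.
template <typename Handler>
struct async_result_sketch {
  typedef void type;
  explicit async_result_sketch(Handler&) {}
  type get() {}  // nothing to return, yet `return r.get();` stays legal
};

// Hypothetical handler that records completion in a shared flag.
struct flag_handler {
  std::shared_ptr<bool> fired;
  flag_handler() : fired(std::make_shared<bool>(false)) {}
  void operator()(const std::error_code&) { *fired = true; }
};

// Specialization: for flag_handler the caller gets the flag back.
template <>
struct async_result_sketch<flag_handler> {
  typedef std::shared_ptr<bool> type;
  explicit async_result_sketch(flag_handler& h) : fired_(h.fired) {}
  type get() { return fired_; }
 private:
  std::shared_ptr<bool> fired_;
};

template <typename Handler>
typename async_result_sketch<Handler>::type initiate_wait(Handler handler) {
  async_result_sketch<Handler> result(handler);
  handler(std::error_code());  // demo only: complete immediately
  return result.get();         // void for plain callbacks, a value otherwise
}
```

With a lambda, `initiate_wait` returns void; with `flag_handler`, the same body returns the shared flag.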

Now look at async\_wait in deadline\_timer\_service:

template <typename Handler>

void async_wait(implementation_type& impl, Handler& handler)

{

// Allocate and construct an operation to wrap the handler.

typedef wait_handler<Handler> op;

typename op::ptr p = { boost::asio::detail::addressof(handler),

op::ptr::allocate(handler), 0 };

p.p = new (p.v) op(handler);

impl.might_have_pending_waits = true;

// scheduler_ is the timer service's scheduler, an epoll_reactor object

scheduler_.schedule_timer(timer_queue_, impl.expiry, impl.timer_data, p.p);

p.v = p.p = 0;

}

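The op::ptr section wraps the handler callback in an operation object that is allocated dynamically. Note that the pointers in p are reset to 0 at the end: inside schedule\_timer, ownership of the wrapper has already been handed to the timer\_queue\_ of the deadline\_timer\_service that was passed in. This is also why every object used by an asynchronous operation must stay valid until the operation completes; otherwise the callback may hit a segmentation fault.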
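The allocate, hand over, then release pattern can be sketched with `std::unique_ptr`. This is an illustration under assumed names (`wait_op_sketch`, `op_owner_sketch`, `schedule`), not how Asio implements `op::ptr`.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct wait_op_sketch { int id; };

// Hypothetical queue that takes ownership of raw operation pointers.
class op_owner_sketch {
 public:
  void enqueue(wait_op_sketch* op) { ops_.emplace_back(op); }
  std::size_t size() const { return ops_.size(); }
 private:
  std::vector<std::unique_ptr<wait_op_sketch>> ops_;
};

// Allocate an operation, hand it to the queue, then drop the local claim.
inline void schedule(op_owner_sketch& queue, int id) {
  std::unique_ptr<wait_op_sketch> guard(new wait_op_sketch{id});
  // If enqueue() throws, `guard` still owns the operation and frees it.
  queue.enqueue(guard.get());
  guard.release();  // counterpart of `p.v = p.p = 0;`: the queue owns it now
}
```

The local guard exists only for the window between allocation and hand-over; once the queue has the pointer, releasing the guard prevents a double free.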

One more note: deadline\_timer\_service actually has two members:

// The queue of timers.

timer_queue<Time_Traits> timer_queue_; // holds all pending timers

// The object that schedules and executes timers. Usually a reactor.

timer_scheduler& scheduler_; // the asynchronous scheduler used by deadline_timer_service; here timer_scheduler is epoll_reactor

Next, the schedule\_timer function:

template <typename Time_Traits>

void epoll_reactor::schedule_timer(timer_queue<Time_Traits>& queue,

const typename Time_Traits::time_type& time,

typename timer_queue<Time_Traits>::per_timer_data& timer, wait_op* op)

{

mutex::scoped_lock lock(mutex_);

if (shutdown_)

{

scheduler_.post_immediate_completion(op, false);

return;

}

bool earliest = queue.enqueue_timer(time, timer, op); // add the timer to the queue, which is the timer_queue_ member of deadline_timer_service

scheduler_.work_started(); // scheduler::work_started

if (earliest)

update_timeout(); // this timer now expires first, so update epoll_reactor's timer_fd

}

void epoll_reactor::update_timeout()

{

if (timer_fd_ != -1)

{

itimerspec new_timeout;

itimerspec old_timeout;

int flags = get_timeout(new_timeout);

timerfd_settime(timer_fd_, flags, &new_timeout, &old_timeout);

return;

}

interrupt();

}

// Notify that some work has started.

// Called from scheduler::post_immediate_completion, epoll_reactor::schedule_timer,

// epoll_reactor::start_op and the io_context::work constructor.

// work_finished is the counterpart of work_started.

void scheduler::work_started() // increments the count of outstanding (unfinished) work

{

++outstanding_work_;

}

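The wait\_handler used earlier to wrap the callback is a subclass of wait\_op, and wait\_op is a subclass of scheduler\_operation. scheduler\_operation is the common base for all such callback wrappers.

Note that scheduler\_ here is not the scheduler\_ seen earlier: this one is a member of epoll\_reactor and is a scheduler object (scheduler is the implementation class behind io\_service).

The function first checks whether the epoll\_reactor has been shut down; a reactor that is shut down does no asynchronous monitoring. If it is shut down, the operation is handed to the scheduler (that is, io\_service) to deal with. How that works is fairly involved and is left for a later post. If the epoll\_reactor is running normally, the timer is added to the timer\_queue\_ of deadline\_timer\_service and the scheduler (io\_service) is told that new work has arrived (the scheduler\_.work\_started() call); the scheduler takes care of it from there. If this timer has the earliest expiry of all, the epoll\_reactor's timer is updated as well.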
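The "enqueue, count the work, re-arm only if earliest" flow can be sketched with a min-heap. The names `timer_heap_sketch` and `reactor_sketch` are hypothetical, and a counter stands in for the real `update_timeout()` call; Asio's own `timer_queue` is more elaborate.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <queue>
#include <vector>

typedef std::chrono::steady_clock clock_sketch;

class timer_heap_sketch {
 public:
  // Returns true when the new timer becomes the earliest one in the queue.
  bool enqueue(clock_sketch::time_point expiry) {
    bool earliest = heap_.empty() || expiry < heap_.top();
    heap_.push(expiry);
    return earliest;
  }
  clock_sketch::time_point earliest() const { return heap_.top(); }
 private:
  // std::greater turns priority_queue into a min-heap on expiry time.
  std::priority_queue<clock_sketch::time_point,
                      std::vector<clock_sketch::time_point>,
                      std::greater<clock_sketch::time_point>> heap_;
};

struct reactor_sketch {
  timer_heap_sketch queue;
  long outstanding_work;
  int timeout_updates;  // how often the wake-up deadline was re-armed
  reactor_sketch() : outstanding_work(0), timeout_updates(0) {}

  void schedule_timer(clock_sketch::time_point expiry) {
    bool earliest = queue.enqueue(expiry);
    ++outstanding_work;               // mirrors scheduler_.work_started()
    if (earliest) ++timeout_updates;  // stands in for update_timeout()
  }
};
```

Only a timer that moves the earliest expiry forward forces the wake-up deadline to change, so later timers can be queued without touching the timer descriptor.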

### Asynchronous timer example

/**

* @brief Test asynchronous timers

*/

#include <iostream>

#include <boost/asio.hpp>

#include <boost/asio/steady_timer.hpp>

void callback(const boost::system::error_code&) {

std::cout << "Hello, world!" << std::endl;

}

void callback2(const boost::system::error_code&) {

std::cout << "second call but first run" << std::endl;

}

int main() {

boost::asio::io_service io;

boost::asio::steady_timer st(io);

st.expires_from_now(std::chrono::seconds(5));

st.async_wait(callback);

boost::asio::deadline_timer dt(io, boost::posix_time::seconds(3));

dt.async_wait(callback2);

std::cout << "first run\n";

io.run();

return 0;

}

#### Tracing the asynchronous timer in gdb

Call stack for async_wait:

(gdb) bt

#0 boost::asio::detail::epoll_reactor::schedule_timer<boost::asio::detail::chrono_time_traits<std::chrono::_V2::steady_clock, boost::asio::wait_traits<std::chrono::_V2::steady_clock> > > (this=0x639d70, queue=…, time=…, timer=…, op=0x63a2a0)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/epoll_reactor.hpp:52

#1 0x0000000000416b6c in boost::asio::detail::deadline_timer_service<boost::asio::detail::chrono_time_traits<std::chrono::_V2::steady_clock, boost::asio::wait_traits<std::chrono::_V2::steady_clock> > >::async_wait<void (*)(boost::system::error_code const&)> (this=0x639d28, impl=…,

handler=@0x7fffffffe240: 0x40ce12 <callback(boost::system::error_code const&)>)

at /root/3rd/boost_1_62_0/boost/asio/detail/deadline_timer_service.hpp:192

#2 0x000000000041506c in boost::asio::waitable_timer_service<std::chrono::_V2::steady_clock, boost::asio::wait_traits<std::chrono::_V2::steady_clock> >::async_wait<void (&)(boost::system::error_code const&)> (this=0x639d00, impl=…,

handler=@0x40ce12: {void (const boost::system::error_code &)} 0x40ce12 <callback(boost::system::error_code const&)>)

at /root/3rd/boost_1_62_0/boost/asio/waitable_timer_service.hpp:149

#3 0x0000000000413080 in boost::asio::basic_waitable_timer<std::chrono::_V2::steady_clock, boost::asio::wait_traits<std::chrono::_V2::steady_clock>, boost::asio::waitable_timer_service<std::chrono::_V2::steady_clock, boost::asio::wait_traits<std::chrono::_V2::steady_clock> > >::async_wait<void (&)(boost::system::error_code const&)> (this=0x7fffffffe2c0,

handler=@0x40ce12: {void (const boost::system::error_code &)} 0x40ce12 <callback(boost::system::error_code const&)>)

at /root/3rd/boost_1_62_0/boost/asio/basic_waitable_timer.hpp:511

#4 0x000000000040cf07 in main () at asio_async_timer_test.cpp:22

run

#0 boost::asio::detail::epoll_reactor::run (this=0x639d70, block=true, ops=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/epoll_reactor.ipp:438

#1 0x0000000000410748 in boost::asio::detail::task_io_service::do_run_one (this=0x639c20, lock=…, this_thread=…, ec=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:356

#2 0x0000000000410337 in boost::asio::detail::task_io_service::run (this=0x639c20, ec=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:149

#3 0x0000000000410a7f in boost::asio::io_service::run (this=0x7fffffffe300) at /root/3rd/boost_1_62_0/boost/asio/impl/io_service.ipp:59

#4 0x000000000040cf70 in main () at asio_async_timer_test.cpp:28

(gdb) bt

#0 callback2 () at asio_async_timer_test.cpp:14

#1 0x0000000000419c26 in boost::asio::detail::binder1<void (*)(boost::system::error_code const&), boost::system::error_code>::operator() (

this=0x7fffffffe080) at /root/3rd/boost_1_62_0/boost/asio/detail/bind_handler.hpp:47

#2 0x000000000041947a in boost::asio::asio_handler_invoke<boost::asio::detail::binder1<void (*)(boost::system::error_code const&), boost::system::error_code> > (function=…) at /root/3rd/boost_1_62_0/boost/asio/handler_invoke_hook.hpp:69

#3 0x0000000000418e4b in boost_asio_handler_invoke_helpers::invoke<boost::asio::detail::binder1<void (*)(boost::system::error_code const&), boost::system::error_code>, void (*)(boost::system::error_code const&)> (function=…,

context=@0x7fffffffe080: 0x40ce4c <callback2(boost::system::error_code const&)>)

at /root/3rd/boost_1_62_0/boost/asio/detail/handler_invoke_helpers.hpp:37

#4 0x00000000004183ec in boost::asio::detail::wait_handler<void (*)(boost::system::error_code const&)>::do_complete (owner=0x639c20,

base=0x63a060) at /root/3rd/boost_1_62_0/boost/asio/detail/wait_handler.hpp:70

#5 0x000000000040e9ac in boost::asio::detail::task_io_service_operation::complete (this=0x63a060, owner=…, ec=…, bytes_transferred=0)

at /root/3rd/boost_1_62_0/boost/asio/detail/task_io_service_operation.hpp:38

#6 0x00000000004107cc in boost::asio::detail::task_io_service::do_run_one (this=0x639c20, lock=…, this_thread=…, ec=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:372

#7 0x0000000000410337 in boost::asio::detail::task_io_service::run (this=0x639c20, ec=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:149

#8 0x0000000000410a7f in boost::asio::io_service::run (this=0x7fffffffe300) at /root/3rd/boost_1_62_0/boost/asio/impl/io_service.ipp:59

#9 0x000000000040cf70 in main () at asio_async_timer_test.cpp:28

(gdb) bt

#0 callback () at asio_async_timer_test.cpp:10

#1 0x0000000000419c26 in boost::asio::detail::binder1<void (*)(boost::system::error_code const&), boost::system::error_code>::operator() (

this=0x7fffffffe080) at /root/3rd/boost_1_62_0/boost/asio/detail/bind_handler.hpp:47

#2 0x000000000041947a in boost::asio::asio_handler_invoke<boost::asio::detail::binder1<void (*)(boost::system::error_code const&), boost::system::error_code> > (function=…) at /root/3rd/boost_1_62_0/boost/asio/handler_invoke_hook.hpp:69

#3 0x0000000000418e4b in boost_asio_handler_invoke_helpers::invoke<boost::asio::detail::binder1<void (*)(boost::system::error_code const&), boost::system::error_code>, void (*)(boost::system::error_code const&)> (function=…,

context=@0x7fffffffe080: 0x40ce12 <callback(boost::system::error_code const&)>)

at /root/3rd/boost_1_62_0/boost/asio/detail/handler_invoke_helpers.hpp:37

#4 0x00000000004183ec in boost::asio::detail::wait_handler<void (*)(boost::system::error_code const&)>::do_complete (owner=0x639c20,

base=0x63a2a0) at /root/3rd/boost_1_62_0/boost/asio/detail/wait_handler.hpp:70

#5 0x000000000040e9ac in boost::asio::detail::task_io_service_operation::complete (this=0x63a2a0, owner=…, ec=…, bytes_transferred=0)

at /root/3rd/boost_1_62_0/boost/asio/detail/task_io_service_operation.hpp:38

#6 0x00000000004107cc in boost::asio::detail::task_io_service::do_run_one (this=0x639c20, lock=…, this_thread=…, ec=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:372

#7 0x0000000000410337 in boost::asio::detail::task_io_service::run (this=0x639c20, ec=…)

at /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:149

#8 0x0000000000410a7f in boost::asio::io_service::run (this=0x7fffffffe300) at /root/3rd/boost_1_62_0/boost/asio/impl/io_service.ipp:59

#9 0x000000000040cf70 in main () at asio_async_timer_test.cpp:28

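Analysis of the `boost::asio::io_service::run` call

run simply keeps calling do\_one in a loop, in blocking mode (the argument is true). run\_one also blocks, but calls do\_one only once. poll is almost identical to run, except that it calls do\_one in non-blocking mode (the argument is false), and poll\_one makes a single non-blocking call to do\_one.

run() blocks until all asynchronous operations (including handlers queued with post) have completed, then returns.

run\_one() also blocks, but returns as soon as one asynchronous operation (including a handler queued with post) has completed.

poll() checks all asynchronous operations without blocking. Handlers of operations that have already completed, and handlers queued with post, are invoked directly; once they are done it returns. It does not handle operations that still need to wait.

poll\_one() completes one asynchronous operation (one that has already finished) without blocking and returns immediately. It does not handle any other finished operations or operations that still need to wait.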
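The difference between the four calls can be illustrated with a toy, single-threaded context. This is only a sketch under assumed names (`mini_context`, `post`, `at`); it has no reactor, no locking and no error codes, so it shows the blocking and non-blocking behaviour described above rather than Asio's implementation.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <deque>
#include <functional>
#include <map>
#include <thread>
#include <utility>

class mini_context {
 public:
  typedef std::chrono::steady_clock clock;

  // Queue a handler that is ready right away (like post()).
  void post(std::function<void()> h) { ready_.push_back(std::move(h)); }

  // Queue a handler that becomes ready at `when` (like a timer wait).
  void at(clock::time_point when, std::function<void()> h) {
    timers_.emplace(when, std::move(h));
  }

  std::size_t poll_one() { return do_one(false); }
  std::size_t run_one() { return do_one(true); }
  std::size_t poll() { return loop(false); }
  std::size_t run() { return loop(true); }

 private:
  // Run at most one handler. With block == true, sleep until the earliest
  // timer is due instead of giving up when nothing is ready yet.
  std::size_t do_one(bool block) {
    move_due_timers();
    while (block && ready_.empty() && !timers_.empty()) {
      std::this_thread::sleep_until(timers_.begin()->first);
      move_due_timers();
    }
    if (ready_.empty()) return 0;
    std::function<void()> h = std::move(ready_.front());
    ready_.pop_front();
    h();
    return 1;
  }

  std::size_t loop(bool block) {
    std::size_t n = 0;
    while (do_one(block)) ++n;
    return n;
  }

  // Move every timer whose expiry has passed into the ready queue.
  void move_due_timers() {
    clock::time_point now = clock::now();
    while (!timers_.empty() && timers_.begin()->first <= now) {
      ready_.push_back(std::move(timers_.begin()->second));
      timers_.erase(timers_.begin());
    }
  }

  std::deque<std::function<void()>> ready_;
  std::multimap<clock::time_point, std::function<void()>> timers_;
};
```

With one posted handler and one timer due 50 ms from now, `poll()` runs only the posted handler and returns at once, while a following `run()` sleeps until the timer is due, runs its handler, and returns when nothing is left.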

// /root/3rd/boost_1_62_0/boost/asio/impl/io_service.ipp:59

std::size_t io_service::run()

{

boost::system::error_code ec;

std::size_t s = impl_.run(ec);

boost::asio::detail::throw_error(ec);

return s;

}

// /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:149

std::size_t task_io_service::run(boost::system::error_code& ec)

{

ec = boost::system::error_code();

if (outstanding_work_ == 0)

{

stop();

return 0;

}

thread_info this_thread;

// Number of ops in this thread's private_op_queue; "outstanding work" means work that is not yet finished, not "excellent" work

this_thread.private_outstanding_work = 0;

thread_call_stack::context ctx(this, this_thread);

mutex::scoped_lock lock(mutex_);

std::size_t n = 0;

for (; do_run_one(lock, this_thread, ec); lock.lock())

if (n != (std::numeric_limits<std::size_t>::max)())

++n;

return n;

}

Definition of the `thread_info` structure:

// Structure containing thread-specific data.

// Per-thread private queue; the structure contains one queue member

typedef scheduler_thread_info thread_info;

struct scheduler_thread_info : public thread_info_base

{

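// scheduler::do_wait_one -> epoll_reactor::run fetches the ready ops;

// they are finally pushed onto scheduler::op_queue_ by the destructors of scheduler::task_cleanup and scheduler::work_cleanup.

// epoll network-event ops first go into the private private_op_queue and are then moved to the global op_queue_, so everything collected in one pass reaches the global queue with a single lock acquisition.

// The ops in private_op_queue are of type descriptor_state.

op_queue<scheduler_operation> private_op_queue;

// Number of ops in this thread's private_op_queue; "outstanding work" means work that is not yet finished, not "excellent" work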

long private_outstanding_work;

};

The `thread_call_stack::context` structure:

// ~/workspace/asio-annotation/asio/include/asio/detail/thread_context.hpp

class thread_context

{

public:

// Per-thread call stack to track the state of each thread in the context.

// See the uses of thread_call_stack in scheduler.ipp: every worker thread is added to the call_stack top_ list

typedef call_stack<thread_context, thread_info_base> thread_call_stack;

};

//~/asio-annotation/asio/include/asio/detail/call_stack.hpp

// call_stack is a templated linked list: the ctx constructor pushes the worker thread onto the top_ list, and the ctx destructor moves the top_ head pointer back

// Helper class to determine whether or not the current thread is inside an

// invocation of io_context::run() for a specified io_context object.

template <typename Key, typename Value = unsigned char>

class call_stack

{

public:

// Context class automatically pushes the key/value pair on to the stack.

class context

: private noncopyable

{

public:

// Push the key on to the stack.

explicit context(Key* k)

: key_(k),

next_(call_stack<Key, Value>::top_)

{

value_ = reinterpret_cast<unsigned char*>(this);

call_stack<Key, Value>::top_ = this;

}

// Push the key/value pair on to the stack.

// Pushes the key/value pair onto the top_ list; for how threads are pushed, see scheduler::wait_one

context(Key* k, Value& v)

: key_(k),

value_(&v),

next_(call_stack<Key, Value>::top_)

{

call_stack<Key, Value>::top_ = this;

}

// Pop the key/value pair from the stack.

~context()

{

call_stack<Key, Value>::top_ = next_;

}

// Find the next context with the same key.

Value* next_by_key() const

{

context* elem = next_;

while (elem)

{

if (elem->key_ == key_)

return elem->value_;

elem = elem->next_;

}

return 0;

}

private:

friend class call_stack<Key, Value>;

// The key associated with the context.

Key* key_;

// The value associated with the context.

Value* value_;

// The next element in the stack.

// Key/value pairs are linked together through the next_ pointer

context* next_;

};

friend class context;

// Determine whether the specified owner is on the stack. Returns address of

// key if present, 0 otherwise.

// Checks whether key k is on the top_ list; see thread_call_stack::contains(this)

static Value* contains(Key* k)

{

context* elem = top_;

while (elem)

{

if (elem->key_ == k)

return elem->value_;

elem = elem->next_;

}

return 0;

}

// Obtain the value at the top of the stack.

static Value* top()

{

context* elem = top_;

return elem ? elem->value_ : 0;

}

private:

// The top of the stack of calls for the current thread.

// Head of the per-thread list

// Key/value pairs are pushed onto the top_ list; for how threads are pushed, see scheduler::wait_one

static tss_ptr<context> top_;

};
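The same per-thread stack idea can be sketched with `thread_local` and an RAII context. The names below (`call_stack_sketch`, `scheduler_sketch`, `thread_info_sketch`, `thread_stack`) are hypothetical, and a function-local `thread_local` pointer replaces the `tss_ptr` member used in the listing above.

```cpp
#include <cassert>

template <typename Key, typename Value>
class call_stack_sketch {
 public:
  // RAII marker: pushes a key/value pair on construction, pops on destruction.
  class context {
   public:
    context(Key* k, Value& v) : key_(k), value_(&v), next_(top()) {
      top() = this;
    }
    ~context() { top() = next_; }
    context(const context&) = delete;
    context& operator=(const context&) = delete;
   private:
    friend class call_stack_sketch;
    Key* key_;
    Value* value_;
    context* next_;
  };

  // Returns the value registered for `k` on this thread, or nullptr.
  static Value* contains(const Key* k) {
    for (context* e = top(); e; e = e->next_)
      if (e->key_ == k) return e->value_;
    return nullptr;
  }

 private:
  // One list head per thread.
  static context*& top() {
    static thread_local context* head = nullptr;
    return head;
  }
};

struct scheduler_sketch {};
struct thread_info_sketch { long private_outstanding_work; };
typedef call_stack_sketch<scheduler_sketch, thread_info_sketch> thread_stack;
```

A thread that constructs `thread_stack::context ctx(&sched, info)` at the start of its run loop can later ask `thread_stack::contains(&sched)` to find out whether it is currently running inside that scheduler, and to reach its own `thread_info_sketch`.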

The **task\_cleanup** and **work\_cleanup** structures used in `do_run_one`:

// The task_cleanup structure

// Its destructor pushes ops onto task_io_service_->op_queue_

struct task_io_service::task_cleanup

{

~task_cleanup()

{

if (this_thread_->private_outstanding_work > 0)

{

boost::asio::detail::increment(

task_io_service_->outstanding_work_,

this_thread_->private_outstanding_work);

}

this_thread_->private_outstanding_work = 0;

// Enqueue the completed operations and reinsert the task at the end of

// the operation queue.

// Move the thread's private queue onto the global op queue, then re-insert task_operation_ at the end of the op queue

lock_->lock();

task_io_service_->task_interrupted_ = true;

task_io_service_->op_queue_.push(this_thread_->private_op_queue);

task_io_service_->op_queue_.push(&task_io_service_->task_operation_);

}

task_io_service* task_io_service_;

mutex::scoped_lock* lock_;

thread_info* this_thread_;

};

// The work_cleanup structure

// Its destructor pushes ops onto task_io_service_->op_queue_

struct task_io_service::work_cleanup

{

~work_cleanup()

{

if (this_thread_->private_outstanding_work > 1)

{

boost::asio::detail::increment(

task_io_service_->outstanding_work_,

this_thread_->private_outstanding_work - 1);

}

else if (this_thread_->private_outstanding_work < 1)

{

task_io_service_->work_finished();

}

this_thread_->private_outstanding_work = 0;

#if defined(BOOST_ASIO_HAS_THREADS)

if (!this_thread_->private_op_queue.empty())

{

lock_->lock();

task_io_service_->op_queue_.push(this_thread_->private_op_queue);

}

#endif // defined(BOOST_ASIO_HAS_THREADS)

}

task_io_service* task_io_service_;

mutex::scoped_lock* lock_;

thread_info* this_thread_;

};
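Both structures rely on the same trick: collect work in a thread-private container without locking, then let a destructor move everything to the shared queue under one lock. A minimal sketch of that pattern, with hypothetical names (`shared_queue_sketch`, `batch_cleanup_sketch`) and plain ints standing in for operation objects:

```cpp
#include <cassert>
#include <deque>
#include <mutex>

struct shared_queue_sketch {
  std::mutex mutex;
  std::deque<int> ops;  // ints stand in for operation objects
};

// Collects ops without locking; the destructor publishes them in one step.
class batch_cleanup_sketch {
 public:
  explicit batch_cleanup_sketch(shared_queue_sketch& q) : shared_(q) {}

  ~batch_cleanup_sketch() {
    if (private_ops_.empty()) return;
    std::lock_guard<std::mutex> lock(shared_.mutex);  // single acquisition
    shared_.ops.insert(shared_.ops.end(),
                       private_ops_.begin(), private_ops_.end());
  }

  // No lock needed: only the owning thread touches the private queue.
  void collect(int op) { private_ops_.push_back(op); }

 private:
  shared_queue_sketch& shared_;
  std::deque<int> private_ops_;
};
```

Because the flush happens in a destructor, it also runs on early returns and when an exception unwinds the scope, so collected ops are not lost.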

// /root/3rd/boost_1_62_0/boost/asio/detail/impl/task_io_service.ipp:372

std::size_t task_io_service::do_run_one(mutex::scoped_lock& lock,

task_io_service::thread_info& this_thread,

const boost::system::error_code& ec)

{

while (!stopped_)
