yum install openstack-keystone httpd mod_wsgi -y

cp /etc/keystone/keystone.conf{,.bak}

grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf
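The `grep -Ev '^$|#'` pattern used throughout this guide removes every line that contains `#` anywhere, including a value such as a password with `#` in it. Below is a minimal sketch of a hypothetical helper, `strip_conf`. It assumes bash and GNU grep, backs the file up once, and drops only blank lines and full-line comments.

```bash
# Hypothetical helper (not part of openstack-utils): back up a config file
# once, then rewrite it without blank lines and full-line comments.
strip_conf() {
  local conf="$1"
  # Only create the backup if none exists, so it always holds the packaged file.
  [ -e "$conf.bak" ] || cp -a "$conf" "$conf.bak" || return 1
  # Drop empty/whitespace-only lines and lines whose first non-blank char is '#';
  # a '#' later in a line (e.g. inside a password) is kept.
  grep -Ev '^[[:space:]]*(#|$)' "$conf.bak" > "$conf"
}
```

`strip_conf /etc/keystone/keystone.conf` replaces the `cp`/`grep` pair. It always regenerates the file from the `.bak` copy, so re-running it discards any `openstack-config` edits made afterwards.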

**openstack-utils makes OpenStack installation simpler: its openstack-config command edits configuration files directly from the command line**

yum install openstack-utils -y

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

openstack-config --set /etc/keystone/keystone.conf token provider fernet
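To confirm what `openstack-config --set` wrote, you can read the value back. `openstack-config` wraps crudini and should also accept `--get`. Below is a sketch of a hypothetical, dependency-free `ini_get` written in awk. It assumes simple `key = value` lines and returns the first match in the section.

```bash
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file.
# Assumes one "key = value" per line; everything after the first '=' is the value.
ini_get() {
  local file="$1" section="$2" key="$3"
  awk -v sec="$section" -v key="$key" '
    /^[ \t]*\[/ {                         # section header: remember if it is ours
      s = $0
      gsub(/^[ \t]*\[|\][ \t]*$/, "", s)
      in_sec = (s == sec)
      next
    }
    in_sec && index($0, "=") > 0 {
      k = substr($0, 1, index($0, "=") - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      if (k == key) {
        v = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", v)
        print v
        exit
      }
    }' "$file"
}
```

After the step above, `ini_get /etc/keystone/keystone.conf token provider` should print `fernet`.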

#Populate the keystone database

su -s /bin/sh -c "keystone-manage db_sync" keystone

mysql keystone -e 'show tables'

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

keystone-manage bootstrap --bootstrap-password ADMIN_PASS --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

mysql keystone -e 'select * from role'

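**Configure the Apache HTTP server**

#Make sure SELinux is not enforcing first, e.g. run setenforce 0 (this switches it to permissive mode until the next reboot)

echo "ServerName controller" >> /etc/httpd/conf/httpd.conf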

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

systemctl restart httpd.service

systemctl enable httpd.service

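**Create an environment file for the admin user, so client commands do not need the credentials typed in every time**

#The official guide writes the admin and demo variables into files in the home directory; in this article the regular user created is myuser (in project myproject)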

cat >> ~/admin-openrc << EOF

#admin-openrc

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

source ~/admin-openrc
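Before relying on `admin-openrc`, it can help to confirm that every variable the client needs is set. Below is a sketch of a hypothetical `check_openrc` helper in bash. It sources the file in a subshell, so the current shell is left untouched, and the variable list is taken from the file above.

```bash
# Hypothetical helper: report OS_* variables that an openrc file leaves empty.
check_openrc() {
  local rc="$1"
  (
    vars=(OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME
          OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION)
    unset "${vars[@]}"          # ignore values inherited from the current shell
    . "$rc" || exit 1           # source inside the subshell only
    missing=0
    for v in "${vars[@]}"; do
      if [ -z "${!v:-}" ]; then # indirect expansion: value of the variable named $v
        echo "missing: $v" >&2
        missing=1
      fi
    done
    exit "$missing"
  )
}
```

Usage: `check_openrc ~/admin-openrc && source ~/admin-openrc`.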

**Create domains, projects, users and roles**

#How to create a new domain

openstack domain create --description "An Example Domain" example

#Create the service project

openstack project create --domain default --description "Service Project" service

#Create the myproject project

openstack project create --domain default --description "Demo Project" myproject

#Create the myuser user and set its password (--password-prompt asks for it interactively; --password followed by the password is non-interactive)

openstack user create --domain default --password MYUSER_PASSWORD myuser

#Create the user role

openstack role create user

#List the roles

openstack role list

#Add the user role to the myuser user on the myproject project

openstack role add --project myproject --user myuser user

#Verify keystone

unset OS_AUTH_URL OS_PASSWORD

**Request an authentication token as the admin user; enter the admin password ADMIN\_PASS when prompted**

openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

**Request an authentication token for the newly created myuser user; enter the myuser password MYUSER\_PASSWORD when prompted**

openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name myproject --os-username myuser token issue

**Create an environment file for the myuser user as well, using myuser's password**

cat >> ~/myuser-openrc << EOF

#myuser-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=myuser

export OS_PASSWORD=MYUSER_PASSWORD

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

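#Source this file whenever you need to work as this user

**The official guide creates a demo user, so add an environment file for it as well (the demo user itself is not created in the steps above)**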

cat >> ~/demo-openrc << EOF

#demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=demo

export OS_PASSWORD=DEMO_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF
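The three openrc files above differ only in user, password and project. Below is a sketch of a hypothetical `write_openrc` generator. It assumes the same controller URL and Default domain as this guide. It writes with `>` instead of `>>`, so re-running it does not duplicate lines, and it restricts the file mode because the file holds a plaintext password.

```bash
# Hypothetical generator: write_openrc FILE USER PASSWORD PROJECT
write_openrc() {
  local out="$1" user="$2" pass="$3" project="$4"
  # printf %q shell-quotes the password so special characters survive sourcing.
  cat > "$out" <<EOF
#$(basename "$out")
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$project
export OS_USERNAME=$user
export OS_PASSWORD=$(printf '%q' "$pass")
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
  chmod 600 "$out"   # owner-only: the file contains a password
}
```

Usage: `write_openrc ~/myuser-openrc myuser MYUSER_PASSWORD myproject`.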

**Request an authentication token**

openstack token issue

=====================================================

## 5. glance

**Install the glance Image service**

https://docs.openstack.org/glance/train/install/install-rdo.html

**Create the database and grant privileges**

mysql -u root

create database glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

flush privileges;
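The same CREATE DATABASE/GRANT pattern repeats for placement, nova and neutron. Below is a sketch of a hypothetical `db_grant_sql` function that prints the statements so you can pipe them into `mysql -u root`. It assumes MariaDB, which accepts `IDENTIFIED BY` inside `GRANT`. It also adds `IF NOT EXISTS`, which the original does not use, so a re-run does not fail.

```bash
# Hypothetical helper: db_grant_sql DB_USER DB_PASS DB_NAME [DB_NAME...]
db_grant_sql() {
  local user="$1" pass="$2" db host
  shift 2
  for db in "$@"; do
    echo "CREATE DATABASE IF NOT EXISTS $db;"
    # Grant for local socket connections and for any remote host.
    for host in localhost %; do
      echo "GRANT ALL PRIVILEGES ON $db.* TO '$user'@'$host' IDENTIFIED BY '$pass';"
    done
  done
  echo "FLUSH PRIVILEGES;"
}
```

Usage: `db_grant_sql glance GLANCE_DBPASS glance | mysql -u root`. For nova, use `db_grant_sql nova NOVA_DBPASS nova_api nova nova_cell0 | mysql -u root`.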

**Create the glance user**

source ~/admin-openrc

openstack user create --domain default --password GLANCE_PASS glance

**Add the admin role to the glance user in the service project**

openstack role add --project service --user glance admin

**Create the glance service entity**

openstack service create --name glance --description "OpenStack Image" image

**Create the Image service API endpoints. OpenStack registers three endpoint variants for every service: admin, internal and public**

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292
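Every service registers the same three interfaces with a single URL. Below is a sketch of a hypothetical `create_endpoints` loop around the `openstack endpoint create` command used above, with RegionOne as the default region.

```bash
# Hypothetical helper: create_endpoints SERVICE_TYPE URL [REGION]
create_endpoints() {
  local type="$1" url="$2" region="${3:-RegionOne}" iface
  for iface in public internal admin; do
    # Stop at the first failure so a typo is not repeated three times.
    openstack endpoint create --region "$region" "$type" "$iface" "$url" || return 1
  done
}
```

For example, `create_endpoints image http://controller:9292`. The same call works for placement (`http://controller:8778`), compute (`http://controller:8774/v2.1`) and network (`http://controller:9696`).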

**Install the glance packages**

yum install openstack-glance -y

**Edit the glance configuration file /etc/glance/glance-api.conf**

cp -a /etc/glance/glance-api.conf{,.bak}

grep -Ev '^$|#' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/
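The `[keystone_authtoken]` block is nearly identical for glance, placement, nova and neutron. Below is a sketch of a hypothetical `set_authtoken` wrapper around `openstack-config --set`, using the values from this guide.

- The optional fourth argument overrides `auth_url`; placement and nova use `http://controller:5000/v3`.
- It always writes `www_authenticate_uri`, which this guide sets only for glance and neutron.

```bash
# Hypothetical helper: set_authtoken CONF_FILE SERVICE_USER SERVICE_PASS [AUTH_URL]
set_authtoken() {
  local conf="$1" user="$2" pass="$3" auth_url="${4:-http://controller:5000}" kv
  for kv in \
      "www_authenticate_uri http://controller:5000" \
      "auth_url $auth_url" \
      "memcached_servers controller:11211" \
      "auth_type password" \
      "project_domain_name Default" \
      "user_domain_name Default" \
      "project_name service" \
      "username $user" \
      "password $pass"; do
    # Key is the text before the first space, value is everything after it.
    openstack-config --set "$conf" keystone_authtoken "${kv%% *}" "${kv#* }" || return 1
  done
}
```

`set_authtoken /etc/glance/glance-api.conf glance GLANCE_PASS` covers the nine keystone_authtoken lines above.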

**Edit the configuration file of the other Image service component, /etc/glance/glance-registry.conf**

cp -a /etc/glance/glance-registry.conf{,.bak}

grep -Ev '^$|#' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

**Populate the Image service database**

su -s /bin/sh -c "glance-manage db_sync" glance

**Start the glance services and enable them at boot**

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl restart openstack-glance-api.service openstack-glance-registry.service

lsof -i:9292

**Give the glance user, which runs openstack-glance-api.service, write access to the image store**

chown -hR glance:glance /var/lib/glance/

**Download the CirrOS image to verify the glance service**

wget -c http://download.cirros-cloud.net/0.5.1/cirros-0.5.1-x86_64-disk.img
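Before uploading, it is worth checking the download. Below is a sketch of a hypothetical `verify_md5` helper. It assumes the CirrOS release directory publishes an `MD5SUMS` file with `<md5>  <filename>` lines, so check the directory listing first.

```bash
# Hypothetical helper: verify_md5 IMAGE_FILE MD5SUMS_FILE
verify_md5() {
  local file="$1" sums="$2" name expected actual
  name=$(basename "$file")
  # Find the line whose filename column (optionally prefixed with '*') matches.
  expected=$(awk -v n="$name" '{ f = $2; sub(/^\*/, "", f); if (f == n) { print $1; exit } }' "$sums")
  [ -n "$expected" ] || { echo "no checksum listed for $name" >&2; return 1; }
  actual=$(md5sum "$file" | awk '{print $1}')
  [ "$expected" = "$actual" ]
}
```

Usage: `wget -c http://download.cirros-cloud.net/0.5.1/MD5SUMS && verify_md5 cirros-0.5.1-x86_64-disk.img MD5SUMS`.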

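**Upload the image**

#Do not use the glance image-create form from the official guide here; newer OpenStack releases are moving away from the per-project clients, so use commands that start with openstack wherever possible

openstack image create --file ~/cirros-0.5.1-x86_64-disk.img --disk-format qcow2 --container-format bare --public cirros

**List the images**

openstack image list

glance image-list

#View the image file on disk

ll /var/lib/glance/images/

#Command to delete an image (append the image name or ID)

openstack image delete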

================================================

## 6. placement

**Install the Placement service**

https://docs.openstack.org/placement/train/install/install-rdo.html

https://docs.openstack.org/placement/train/install/

**Create the placement database**

mysql -uroot

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

flush privileges;

**Create the placement user**

openstack user create --domain default --password PLACEMENT_PASS placement

**Add the placement user to the service project with the admin role**

openstack role add --project service --user placement admin

**Create the Placement API service entity**

openstack service create --name placement --description "Placement API" placement

**Create the Placement API service endpoints**

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

**Install the placement package**

yum install openstack-placement-api -y

**Edit the configuration file /etc/placement/placement.conf**

cp /etc/placement/placement.conf /etc/placement/placement.conf.bak

grep -Ev '^$|#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

openstack-config --set /etc/placement/placement.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

openstack-config --set /etc/placement/placement.conf api auth_strategy keystone

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_url http://controller:5000/v3

openstack-config --set /etc/placement/placement.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_type password

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_name service

openstack-config --set /etc/placement/placement.conf keystone_authtoken username placement

openstack-config --set /etc/placement/placement.conf keystone_authtoken password PLACEMENT_PASS

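**Populate the placement database**

su -s /bin/sh -c "placement-manage db sync" placement

mysql placement -e 'show tables'

**Edit the Apache configuration file for placement. The official guide does not mention this step, but without it the Compute service checks will report errors**

#Allow access to the Placement API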

[root@controller ~]# vim /etc/httpd/conf.d/00-placement-api.conf

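#Add the following `<Directory /usr/bin>` block below the `#SSLCertificateKeyFile` lines (… marks the unchanged parts of the file)

    …
    #SSLCertificateKeyFile
    #SSLCertificateKeyFile …
    <Directory /usr/bin>
      <IfVersion >= 2.4>
        Require all granted
      </IfVersion>
      <IfVersion < 2.4>
        Order allow,deny
        Allow from all
      </IfVersion>
    </Directory>
    …

#Restart the Apache service

systemctl restart httpd.service

netstat -lntup|grep 8778

lsof -i:8778

#curl the address and check that it returns JSON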

curl http://controller:8778
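The curl check above can be turned into a pass/fail test. The Placement root URL normally returns a JSON version document with a `versions` key. A missing `<Directory /usr/bin>` block typically shows up as an HTTP 403 error instead. Below is a sketch of a hypothetical `placement_ok` helper.

```bash
# Hypothetical helper: placement_ok [URL] -> succeeds if the root URL returns
# HTTP 2xx and a body containing a "versions" key.
placement_ok() {
  local url="${1:-http://controller:8778}" body
  body=$(curl -sf "$url") || return 1   # -f: treat HTTP errors (e.g. 403) as failure
  printf '%s' "$body" | grep -q '"versions"'
}
```

Usage: `placement_ok && echo "placement API reachable"`.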

**Run the upgrade check to verify the health status**

placement-status upgrade check

==================================================

## 7. nova

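**The nova Compute service must be installed on both the controller node and the compute node**

https://docs.openstack.org/nova/train/install/

**The controller node mainly runs:**

- nova-api (the main nova API service)

- nova-scheduler (the nova scheduling service)

- nova-conductor (mediates nova's database access)

- nova-novncproxy (the nova VNC proxy, which provides instance consoles)

**The compute node mainly runs:**

- nova-compute (the nova compute service)

### 7.1 Install the nova Compute service (controller node, 192.168.0.10)

**Create the nova\_api, nova and nova\_cell0 databases and grant privileges**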

mysql -uroot

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

flush privileges;

**Create the nova user**

openstack user create --domain default --password NOVA_PASS nova

**Add the admin role to the nova user**

openstack role add --project service --user nova admin

**Create the nova service entity**

openstack service create --name nova --description "OpenStack Compute" compute

**Create the Compute API service endpoints**

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

**Install the nova packages**

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

**Edit the nova configuration file /etc/nova/nova.conf**

cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.0.10

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova

openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://controller:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

**Populate the nova-api database, register the cell0 database, create the cell1 cell and populate the nova database**

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

**Verify that nova cell0 and cell1 are registered correctly**

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova
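To make the cell check scriptable, here is a sketch of a hypothetical `has_cells` filter. It reads the `list_cells` output on stdin and succeeds only if both cell0 and cell1 appear as whole words.

```bash
# Hypothetical filter: succeeds if stdin mentions both cell0 and cell1.
has_cells() {
  local out
  out=$(cat)
  grep -qw cell0 <<<"$out" && grep -qw cell1 <<<"$out"
}
```

Usage: `su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova | has_cells && echo "cells OK"`.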

**Start the Compute services and enable them at boot**

systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

**Check that the nova services are running**

netstat -tnlup | grep -E '8774|8775'

curl http://controller:8774

### 7.2 Install the nova Compute service (computel01 compute node, 192.168.0.20)

**Install the packages**

yum install centos-release-openstack-train -y

yum install openstack-nova-compute -y

yum install openstack-utils -y

**Edit the nova configuration file /etc/nova/nova.conf on the compute node**

cp /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.0.20

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://controller:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

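**Check whether the compute node supports hardware acceleration for virtual machines**

grep -Ec '(vmx|svm)' /proc/cpuinfo

#If this command returns a value other than 0, the compute node supports hardware acceleration and the setting below is not needed.

#If it returns 0, the compute node does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM in the [libvirt] section of /etc/nova/nova.conf: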

openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
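The two comments above amount to a simple rule: a `vmx` (Intel VT-x) or `svm` (AMD-V) flag means KVM, otherwise QEMU. Below is a sketch of a hypothetical `choose_virt_type` helper. Because `kvm` is nova's default `virt_type`, writing it explicitly should be harmless.

```bash
# Hypothetical helper: print "kvm" if the CPU advertises VT-x/AMD-V, else "qemu".
choose_virt_type() {
  local cpuinfo="${1:-/proc/cpuinfo}" n
  n=$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)  # grep -c prints 0 and exits 1 on no match
  if [ "${n:-0}" -gt 0 ]; then echo kvm; else echo qemu; fi
}
# Usage on the compute node:
#   openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(choose_virt_type)"
```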

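**Start the Compute service and its dependencies on the compute node and enable them at boot**

#If the nova-compute service fails to start, check /var/log/nova/nova-compute.log. The error message may indicate that the firewall on the controller node is blocking access to port 5672. Open port 5672 in the controller node's firewall and restart the services on the compute node.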

systemctl restart libvirtd.service openstack-nova-compute.service

systemctl enable libvirtd.service openstack-nova-compute.service
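To tell a blocked port 5672 apart from other startup failures, test reachability from the compute node. Below is a sketch of a hypothetical `port_open` helper that uses bash's `/dev/tcp` redirection and coreutils `timeout`. The firewalld commands in the comments assume the controller runs firewalld.

```bash
# Hypothetical helper: port_open HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT can be opened within the timeout.
port_open() {
  local host="$1" port="$2" t="${3:-3}"
  timeout "$t" bash -c ">/dev/tcp/$host/$port" 2>/dev/null
}
# Usage on the compute node:
#   port_open controller 5672 || echo "RabbitMQ port 5672 on controller is unreachable"
# If it is blocked, on the controller (assuming firewalld):
#   firewall-cmd --permanent --add-port=5672/tcp && firewall-cmd --reload
```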

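**Back on the controller node, verify the compute node**

[root@controller ~]# openstack compute service list --service nova-compute

**Discover the compute hosts from the controller node**

#Every time you add a new compute node, you must run `su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova` on the controller node to register it.

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

#Alternatively, set a discovery interval (in seconds) so that new compute nodes are registered automatically; the periodic discovery runs in nova-scheduler, so restart that service

openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 600

systemctl restart openstack-nova-scheduler.service

### 7.3 Verify the nova service on the controller node

**controller node, 192.168.0.10**

**List the service components to verify that each process started and registered successfully**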

openstack compute service list

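**List the API endpoints in the Identity service to verify connectivity with it**

openstack catalog list

**List the images in the Image service to verify connectivity with it**

openstack image list

**Check that the cells and the Placement API are working**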

nova-status upgrade check

==================================================

## 8. neutron

https://docs.openstack.org/neutron/train/install/

### 8.1 Install the neutron Networking service (controller node, 192.168.0.10)

**Create the neutron database**

mysql -uroot

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

flush privileges;

**Create the neutron user**

openstack user create --domain default --password NEUTRON_PASS neutron

**Add the admin role to the neutron user**

openstack role add --project service --user neutron admin

**Create the neutron service entity**

openstack service create --name neutron --description "OpenStack Networking" network

**Create the Networking service API endpoints**

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

**Install the neutron packages**

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

- openstack-neutron: the neutron-server package

- openstack-neutron-ml2: the ML2 plugin package

- openstack-neutron-linuxbridge: the Linux bridge network provider package

- ebtables: Ethernet bridge firewall tooling

**Edit the neutron configuration file /etc/neutron/neutron.conf**

#Configure layer-2 networking

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips true

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS

**Configure the ML2 plugin (ml2\_conf.ini)**

cp -a /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

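**Configure the Linux bridge agent**

> The Linux bridge agent builds the layer-2 (bridging and switching) virtual network infrastructure for instances and handles security groups.
>
> Edit the configuration file `/etc/neutron/plugins/ml2/linuxbridge_agent.ini`.

#In the official configuration guide:

#PROVIDER_INTERFACE_NAME is the eth0 NIC, the interface connected to the external (provider) network

#OVERLAY_INTERFACE_IP_ADDRESS is the IP address of the interface that carries overlay (VXLAN) traffic; this setup uses the controller node's address 192.168.0.10

cp -a /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini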

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.0.10

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
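The Linux bridge agent cannot use an interface from `physical_interface_mappings` that does not exist on the node, for example when the NIC is named `ens33` rather than `eth0`. Below is a sketch of a hypothetical `check_mappings` helper. It checks each comma-separated `physnet:interface` pair against `/sys/class/net`.

```bash
# Hypothetical helper: check_mappings "physnet:iface[,physnet2:iface2]" [SYSFS_ROOT]
check_mappings() {
  local mappings="$1" root="${2:-/sys/class/net}" pair iface rc=0
  local IFS=','                    # split the mapping list on commas
  for pair in $mappings; do
    iface="${pair#*:}"             # text after the first ':' is the interface
    if [ ! -e "$root/$iface" ]; then
      echo "interface not found: $iface" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_mappings provider:eth0 || echo "fix physical_interface_mappings first"`.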

#Set the kernel bridge netfilter parameters to 1

echo 'net.bridge.bridge-nf-call-iptables=1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >>/etc/sysctl.conf

#Enable bridge netfilter support by loading the br_netfilter kernel module

modprobe br_netfilter

sysctl -p
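Running the `echo ... >>` lines twice duplicates entries in `/etc/sysctl.conf`, and `modprobe` alone does not reload `br_netfilter` after a reboot. Below is a sketch of a hypothetical idempotent `append_once` helper. Its usage comments also persist the module through `/etc/modules-load.d/`, which systemd reads at boot.

```bash
# Hypothetical helper: append_once LINE FILE -> add LINE only if not already present.
append_once() {
  local line="$1" file="$2"
  touch "$file" || return 1
  grep -qxF -- "$line" "$file" && return 0
  # If the last line is missing its newline, terminate it first.
  if [ -s "$file" ] && [ -n "$(tail -c1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}
# Usage (as root):
#   append_once 'net.bridge.bridge-nf-call-iptables=1'  /etc/sysctl.conf
#   append_once 'net.bridge.bridge-nf-call-ip6tables=1' /etc/sysctl.conf
#   append_once 'br_netfilter' /etc/modules-load.d/br_netfilter.conf
#   modprobe br_netfilter && sysctl -p
```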

**Configure the layer-3 (L3) agent, which provides routing and NAT services for self-service virtual networks**

#Configure layer-3 networking

cp -a /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver linuxbridge

**Configure the DHCP agent, which provides DHCP services for virtual networks**

#Edit the configuration file /etc/neutron/dhcp_agent.ini

cp -a /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

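**Configure the metadata agent**

#The metadata agent provides configuration information, such as credentials, to instances

#Edit /etc/neutron/metadata_agent.ini and set the metadata shared secret METADATA_SECRET

cp -a /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini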

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host controller

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET
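`METADATA_SECRET` is a placeholder, and the same value must also be set later as `metadata_proxy_shared_secret` in the `[neutron]` section of `/etc/nova/nova.conf`. Below is a sketch of a hypothetical `gen_secret` helper that produces 20 hex characters from `/dev/urandom`. Where openssl is installed, `openssl rand -hex 10` is an equivalent alternative.

```bash
# Hypothetical helper: print a random 20-character lowercase hex secret.
gen_secret() {
  # 10 random bytes -> od prints them as hex pairs -> strip spaces/newlines
  head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n'
}
# Usage on the controller, writing the SAME value to both files:
#   METADATA_SECRET=$(gen_secret)
#   openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret "$METADATA_SECRET"
#   openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret "$METADATA_SECRET"
```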

**On the controller node, configure the Compute service to use the Networking service**

#Edit the configuration file /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET

**Create the /etc/neutron/plugin.ini symbolic link pointing to the ML2 plugin configuration file**

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

**Populate the database**

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

**Restart the Compute API service**

systemctl restart openstack-nova-api.service

**Start the Networking services and enable them at boot**

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

**Because layer-3 networking is configured, also start the L3 agent**

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service

### 8.2 Install the neutron Networking service on the compute node (computel01 compute node, 192.168.0.20)

**Install the components**

yum install openstack-neutron-linuxbridge ebtables ipset -y

**Edit the main neutron configuration file /etc/neutron/neutron.conf**

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

**Configure the Linux bridge agent**

cp -a /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.0.20

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

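**Set the kernel bridge netfilter parameters to 1**

echo 'net.bridge.bridge-nf-call-iptables=1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >>/etc/sysctl.conf

modprobe br_netfilter

sysctl -p

**Configure the Compute service on the compute node to use the Networking service**

#Edit the nova configuration file /etc/nova/nova.conf and add the [neutron] settings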

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

**Restart the Compute service on the compute node**

systemctl restart openstack-nova-compute.service

**Start the Neutron Linux bridge agent and enable it at boot**

systemctl enable neutron-linuxbridge-agent.service

systemctl restart neutron-linuxbridge-agent.service

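**Return to the controller node to verify the Networking service (controller node, 192.168.0.10)**

#List the loaded extensions to verify that the neutron-server process started successfully

[root@controller ~]# openstack extension list --network

#List the agents to verify that they started successfully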

[root@controller ~]# openstack network agent list

---

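### 8.3 Optional: install a separate Neutron network node (neutron01, 192.168.0.30)

> The network configuration follows the self-service networks option of the official guide.

**Configure kernel parameters**

echo 'net.ipv4.ip_forward = 1' >>/etc/sysctl.conf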

sysctl -p

**Install the Train release yum repository**

yum install centos-release-openstack-train -y

**Install the client**

yum install python-openstackclient -y

**Install the components**

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables openstack-utils -y

**Edit the Neutron service configuration file /etc/neutron/neutron.conf**

#Configure layer-2 networking

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips true

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:5000

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS
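The long runs of `openstack-config --set` commands can also be driven from a compact list of `section key value` lines. This is a sketch with a hypothetical bash function `apply_settings`; the `SETTER` variable is an assumed hook so that `SETTER=echo` prints the commands instead of running them (a dry run).

```shell
# apply_settings FILE < list
# Hypothetical helper: read "section key value" lines from stdin and apply each
# one with openstack-config. Set SETTER=echo to print the commands instead.
apply_settings() {
  local file=$1 setter=${SETTER:-openstack-config}
  local section key value
  while read -r section key value; do
    # Skip blank lines and comment lines
    case $section in ''|'#'*) continue ;; esac
    $setter --set "$file" "$section" "$key" "$value"
  done
}
```

For example, feed it a here-document: `apply_settings /etc/neutron/neutron.conf <<'EOF'`, then lines such as `nova username nova` and `nova password NOVA_PASS`, then `EOF`. Values containing spaces stay intact because `read` assigns the rest of the line to the last variable.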

**ML2 plugin configuration file ml2\_conf.ini**

cp -a /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

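**Configure the Linux bridge agent**

#The Linux bridge agent builds the layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups

#Edit the configuration file /etc/neutron/plugins/ml2/linuxbridge_agent.ini

#In the official configuration guide:

#PROVIDER_INTERFACE_NAME is the eth0 NIC, i.e. the interface connected to the external network

#OVERLAY_INTERFACE_IP_ADDRESS is the IP address of this node's interface that carries overlay (VXLAN) traffic; on neutron01 it is 192.168.0.30

cp -a /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini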

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.0.30

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

#Set the Linux kernel parameters to 1

echo 'net.bridge.bridge-nf-call-iptables=1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >>/etc/sysctl.conf

#Enable bridge netfilter support by loading the br_netfilter kernel module

modprobe br_netfilter

sysctl -p

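**Configure the layer-3 (L3) agent, which provides routing and NAT services for self-service virtual networks**

#Configure layer-3 networking

cp -a /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini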

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver linuxbridge

**Configure the DHCP agent, which provides DHCP services for virtual networks**

#Edit the configuration file /etc/neutron/dhcp_agent.ini

cp -a /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

**Configure the metadata agent**

#The metadata agent provides configuration information, such as credentials, to instances

#Edit /etc/neutron/metadata_agent.ini and set the metadata secret METADATA_SECRET

cp -a /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host controller

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

**Create the plugin.ini symbolic link pointing to the ML2 plugin configuration file**

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
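`ln -s` fails with "File exists" if this step is run a second time. A sketch of an idempotent variant using `ln -sfn` (GNU coreutils), wrapped in a hypothetical function `link_plugin_ini` whose default paths are the ones used above:

```shell
# link_plugin_ini [TARGET] [LINK]
# Hypothetical wrapper: create or refresh the symlink LINK -> TARGET, then verify it.
link_plugin_ini() {
  local target=${1:-/etc/neutron/plugins/ml2/ml2_conf.ini}
  local link=${2:-/etc/neutron/plugin.ini}
  # -f replaces an existing link; -n does not follow an existing link to a directory
  ln -sfn "$target" "$link"
  # Succeed only if the link now points at the expected target
  [ "$(readlink "$link")" = "$target" ]
}
```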

**Populate the database**

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

---

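**On the controller node, configure the Compute service to interact with the network node**

**Perform the following steps only if the network node is installed separately. If the [neutron] section was already added to /etc/nova/nova.conf on the controller node when networking was configured for the compute node, there is no need to add it again.**

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696 #not included in the official guide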

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET
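METADATA_SECRET is a placeholder, and the value in metadata_agent.ini on the network node must be identical to the one in nova.conf on the controller. The official install guide suggests generating such secrets with `openssl rand -hex 10`; the sketch below is an equivalent that needs only coreutils, under the hypothetical name `gen_secret`:

```shell
# gen_secret: print 20 lowercase hex characters built from 10 random bytes.
gen_secret() {
  head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Example: generate the secret once, then use the same value on both nodes
#   SECRET=$(gen_secret)
#   # network node (neutron01):
#   openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret "$SECRET"
#   # controller node:
#   openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret "$SECRET"
```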

#Restart the Compute API service on the controller node

systemctl restart openstack-nova-api.service

---

**Back on the network node, start the Neutron services and enable them at boot**

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

**Because the layer-3 (L3) service is configured, the L3 agent must also be started**

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service
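Before moving on to the controller, it can help to confirm that every unit actually started. A sketch with a hypothetical bash function `check_units` built on `systemctl is-active --quiet`; `SYSTEMCTL` is an assumed override hook that exists only so the function can be exercised without systemd:

```shell
# check_units UNIT...: print every unit that is not active; return 1 if any.
# SYSTEMCTL is a hypothetical override hook (defaults to systemctl).
check_units() {
  local ctl=${SYSTEMCTL:-systemctl} unit failed=0
  for unit in "$@"; do
    if ! $ctl is-active --quiet "$unit"; then
      echo "NOT ACTIVE: $unit"
      failed=1
    fi
  done
  return "$failed"
}
```

On neutron01: `check_units neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service`. Any unit it reports can be inspected with `journalctl -u <unit>`.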

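**Optionally, verify the Networking service again from the controller node (controller, 192.168.0.10)**

#List the loaded extensions to verify that the neutron-server process started successfully

[root@controller ~]# openstack extension list --network

#List the agents to verify that they started successfully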

[root@controller ~]# openstack network agent list

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |
|---|---|---|---|---|---|---|
| 44624896-15d1-4029-8ac1-e2ba3f850ca6 | DHCP agent | controller | nova | :-) | UP | neutron-dhcp-agent |
| 50b90b02-b6bf-4164-ae29-a20592d6a093 | Linux bridge agent | controller | None | :-) | UP | neutron-linuxbridge-agent |
| 52761bf6-164e-4d91-bcbe-01a3862b0a4e | DHCP agent | neutron01 | nova | :-) | UP | neutron-dhcp-agent |
| 82780de2-9ace-4e24-a150-f6b6563d7fc8 | Linux bridge agent | computel01 | None | :-) | UP | neutron-linuxbridge-agent |
| b22dfdda-fcc7-418e-bdaf-6b89e454ee83 | Linux bridge agent | neutron01 | None | :-) | UP | neutron-linuxbridge-agent |
| bae84064-8cf1-436a-9cb2-bf9f906a9357 | Metadata agent | neutron01 | None | :-) | UP | neutron-metadata-agent |
| cbd972ef-59f2-4fba-b3b3-2e12c49c5b03 | L3 agent | neutron01 | nova | :-) | UP | neutron-l3-agent |
| dda8af2f-6c0b-427a-97f7-75fd1912c60d | L3 agent | controller | nova | :-) | UP | neutron-l3-agent |
| f2193732-9f88-4e87-a82c-a81e1d66c2e0 | Metadata agent | controller | None | :-) | UP | neutron-metadata-agent |
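With nine agents spread over three hosts, a filter can replace reading the table by eye. A sketch, assuming the default table output of `openstack network agent list` (ASCII borders, with the Alive column showing `:-)` for a live agent and `XXX` for a dead one) and a hypothetical bash function `dead_agents`:

```shell
# dead_agents: read "openstack network agent list" table output on stdin and print
# "host binary" for every agent whose Alive column is not ":-)" or whose State is
# not "UP". Returns 1 if any such agent was found, 0 otherwise.
dead_agents() {
  awk -F'[|]' '
    # Data rows start with "|" (borders start with "+"); skip the header row
    /^[|]/ && $3 !~ /Agent Type/ {
      host = $4; alive = $6; state = $7; binary = $8
      gsub(/^ +| +$/, "", host);  gsub(/^ +| +$/, "", alive)
      gsub(/^ +| +$/, "", state); gsub(/^ +| +$/, "", binary)
      if (alive != ":-)" || state != "UP") { print host, binary; bad = 1 }
    }
    END { exit bad + 0 }
  '
}
```

`openstack network agent list | dead_agents` prints nothing and returns 0 when every agent is alive and UP.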

=====================================================

## 9. Horizon

https://docs.openstack.org/horizon/train/install/

> Horizon is the project name of the OpenStack Dashboard service. The only service it requires is the Identity service (Keystone), and it is written in Python on the Django web framework.

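**Requirements for installing the Train release of Horizon**

* Python 2.7, 3.6, or 3.7

* Django 1.11, 2.0, or 2.2

* Support for Django 2.0 and 2.2 is experimental in the Train release

* The Ussuri release (the release after Train) will use Django 2.2 as the primary Django version, and Django 2.0 support will be removed.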

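**Install the Dashboard service (Horizon) on the compute node (compute01, 192.168.0.20)**

> **Horizon runs under Apache. To avoid disturbing Keystone and the other services that use Apache on the controller node, it is installed on the compute node here. Before installing, confirm that the previously installed services are running normally. (You can also deploy it on the controller node by following the official guide.)**

#Install the packages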

yum install openstack-dashboard memcached python-memcached -y

**Edit the memcached configuration file**

sed -i '/OPTIONS/c\OPTIONS="-l 0.0.0.0,::1"' /etc/sysconfig/memcached

systemctl restart memcached.service

systemctl enable memcached.service

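**Edit the configuration file /etc/openstack-dashboard/local\_settings**

cp -a /etc/openstack-dashboard/local_settings{,.bak}

grep -Ev '^$|#' /etc/openstack-dashboard/local_settings.bak >/etc/openstack-dashboard/local_settings

**None of the comments below belong in the configuration file; they only explain what each setting means. The complete modified file is shown further down.**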

[root@computel01 ~]# vim /etc/openstack-dashboard/local_settings

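#Configure the dashboard to use OpenStack services on the controller node

OPENSTACK_HOST = "controller"

#Hosts allowed to access the dashboard; '*' accepts all hosts, which is insecure and should not be used in production

ALLOWED_HOSTS = ['*']

#ALLOWED_HOSTS = ['one.example.com', 'two.example.com']

#Configure the memcached session storage service

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

#Enable Identity API version 3

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

#Enable support for domains

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

#Configure the API versions

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

#Configure Default as the default domain for users created through the dashboard

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

#Configure user as the default role for users created through the dashboard

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

#If you chose networking option 1, disable support for layer-3 networking services; if you chose networking option 2, they can be enabled

OPENSTACK_NEUTRON_NETWORK = {

#Automatically allocated networks

'enable_auto_allocated_network': False,

#Neutron distributed virtual router (DVR)

'enable_distributed_router': False,

#Floating IP topology check

'enable_fip_topology_check': False,

#High-availability router mode

'enable_ha_router': False,

#The following three options are obsolete and need no further study; they are disabled in the official configuration

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

#IPv6 networking

'enable_ipv6': True,

#Neutron quota support

'enable_quotas': True,

#RBAC policies

'enable_rbac_policy': True,

#Router menu and floating IP features; enable them if the Neutron deployment supports layer 3

'enable_router': True,

#Default DNS name servers

'default_dns_nameservers': [],

#Provider network types that can be selected when creating a network

'supported_provider_types': ['*'],

#Segmentation ID ranges for provider networks; only relevant to the VLAN, GRE, and VXLAN network types

'segmentation_id_range': {},

#Additional provider network types

'extra_provider_types': {},

#Supported vNIC types, used with the port binding extension

#'supported_vnic_types': ['*'],

#Physical networks

#'physical_networks': [],

}

#Set the time zone to Asia/Shanghai

TIME_ZONE = "Asia/Shanghai"

**The complete modified configuration file**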

[root@computel01 ~]# cat /etc/openstack-dashboard/local_settings|head -45

import os

from django.utils.translation import ugettext_lazy as _

from openstack_dashboard.settings import HORIZON_CONFIG

DEBUG = False

ALLOWED_HOSTS = ['*']

LOCAL_PATH = '/tmp'

SECRET_KEY='f8ac039815265a99b64f'

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

EMAIL_BACKEND = 'django.core.mail.backends.console.EmailBackend'

OPENSTACK_HOST = "controller"

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {

'enable_auto_allocated_network': False,

'enable_distributed_router': False,

'enable_fip_topology_check': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_ipv6': True,

'enable_quotas': True,

'enable_rbac_policy': True,

'enable_router': True,

'default_dns_nameservers': [],

'supported_provider_types': ['*'],

'segmentation_id_range': {},

'extra_provider_types': {},

'supported_vnic_types': ['*'],

'physical_networks': [],

}

TIME_ZONE = "Asia/Shanghai"

**Regenerate the Apache configuration file for the dashboard**

cd /usr/share/openstack-dashboard

python manage.py make_web_conf --apache > /etc/httpd/conf.d/openstack-dashboard.conf

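**If the dashboard cannot be accessed normally, perform the following steps**

#Create a symbolic link to the policy files (policy.json); otherwise logging in to the dashboard results in permission errors and a garbled display

ln -s /etc/openstack-dashboard /usr/share/openstack-dashboard/openstack_dashboard/conf

#If /etc/httpd/conf.d/openstack-dashboard.conf does not already contain the following line, add it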

WSGIApplicationGroup %{GLOBAL}

**Restart the Apache and memcached services on the compute01 compute node**

systemctl restart httpd.service memcached.service

systemctl enable httpd.service memcached.service

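**Verify access**

> Open the dashboard in a browser at http://192.168.0.20 (note: unlike earlier releases, do not append /dashboard).
>
> Authenticate with the admin or myuser user and the default domain credentials.

Domain: default

Username: admin

Password: ADMIN_PASS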

---

**Login page**


---

**Page after a successful login**


---

==================================================

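## 10. Create virtual networks and launch an instance

* <https://docs.openstack.org/install-guide/launch-instance.html#block-storage>

* [OpenStack learning: network management operations (51CTO blog)]( )

* [A recommended blog post on launching instances]( )

* [Two ways to create virtual networks]( )

> Creating networks on VMware virtual machines may lead to unpredictable failures; as an alternative, an administrator can create the network environment for the admin user through the dashboard.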

### 10.1 Option 1: Create a public provider network

> Create it as the admin user

source ~/admin-openrc

openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

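#Parameter explanation:

--share allows all projects to use the virtual network

--external defines the virtual network as external. To create an internal network, use --internal instead; the default is internal

--provider-physical-network provider

#Specifies the physical network. The label provider must match the Neutron configuration files below; it can be renamed, but both places must use the same name

#Parameter in /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2_type_flat]

flat_networks = provider

#Parameter in /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0

--provider-network-type flat specifies a flat network: instances attached to it are on the same network segment as the physical network, with no VLAN or similar features

The final provider argument is the name of the network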
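Since the label must agree between the two files, a quick consistency check can catch a mismatch early. A sketch with a hypothetical bash function `labels_consistent`; it assumes `flat_networks` is a comma-separated list (where `*` permits any label) and `physical_interface_mappings` is a comma-separated list of `label:interface` pairs:

```shell
# labels_consistent LABEL FLAT_NETWORKS PHYSICAL_INTERFACE_MAPPINGS
# Hypothetical helper: succeed if LABEL is allowed by flat_networks and
# mapped to an interface in physical_interface_mappings.
labels_consistent() {
  # Drop spaces so "provider, other" and "provider,other" compare the same
  local label=$1 flat=${2// /} maps=${3// /}
  case ",$flat," in
    *",$label,"*|*",*,"*) ;;
    *) echo "flat_networks does not allow $label"; return 1 ;;
  esac
  case ",$maps," in
    *",$label:"*) ;;
    *) echo "physical_interface_mappings does not map $label"; return 1 ;;
  esac
}

# Example (values read from the cleaned config files):
#   flat=$(grep '^flat_networks' /etc/neutron/plugins/ml2/ml2_conf.ini | cut -d= -f2)
#   maps=$(grep '^physical_interface_mappings' /etc/neutron/plugins/ml2/linuxbridge_agent.ini | cut -d= -f2)
#   labels_consistent provider "$flat" "$maps"
```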

**Create the subnet 192.168.0.0/24 on the network; the subnet corresponds to the real physical network**

openstack subnet create --network provider --allocation-pool start=192.168.0.195,end=192.168.0.210 --dns-nameserver 223.5.5.5 --gateway 192.168.0.254 --subnet-range 192.168.0.0/24 provider

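#Parameter explanation:

--network provider specifies the parent network

--allocation-pool start=192.168.0.195,end=192.168.0.210 specifies the first and last addresses the subnet can allocate

--dns-nameserver 223.5.5.5 specifies the DNS server address

--gateway 192.168.0.254 specifies the gateway address

--subnet-range 192.168.0.0/24 specifies the subnet's address range

The final provider argument is the name of the subnet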
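The allocation pool bounds how many addresses Neutron can hand out on the provider network; each router gateway, floating IP, DHCP port, or directly attached instance uses one. A small bash sketch (the function names `ip_to_int` and `pool_size` are hypothetical) that computes the size of an inclusive IPv4 pool:

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Split the dotted quad into $1..$4 (unquoted on purpose)
  set -- $1
  echo "$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))"
}

# pool_size START END: number of addresses in an inclusive allocation pool.
pool_size() {
  echo "$(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))"
}
```

`pool_size 192.168.0.195 192.168.0.210` prints 16, and a pool from 172.18.1.2 to 172.18.1.254 holds 253 addresses. The pool should also stay clear of addresses already in use on the physical network, such as the node addresses 192.168.0.10/20/30 and the gateway 192.168.0.254.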

**View the created networks**

openstack network list

**View the created subnets**

openstack subnet list

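### 10.2 Option 2: Create a private self-service network for a regular tenant

> A self-service network, also called a tenant network or project network, is created by an OpenStack tenant. It is entirely virtual and private to that tenant: it is reachable only within the network itself and cannot be shared between tenants.

**Create the network as the regular tenant**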

source ~/myuser-openrc

openstack network create selfservice

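> Non-privileged users generally cannot supply additional parameters to this command. The service chooses them automatically from the information in the following configuration file:
>
> ```
> cat /etc/neutron/plugins/ml2/ml2_conf.ini
> [ml2]
> type_drivers = flat,vlan,vxlan
> tenant_network_types = vxlan
> [ml2_type_vxlan]
> vni_ranges = 1:1000
> ```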

**Create the subnet 172.18.1.0/24**

openstack subnet create --network selfservice --dns-nameserver 223.5.5.5 --gateway 172.18.1.1 --subnet-range 172.18.1.0/24 selfservice

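#Parameter explanation:

--network selfservice specifies the parent network

--allocation-pool start=172.18.1.2,end=172.18.1.200

Optionally specifies the first and last addresses of the subnet's pool; without this parameter, addresses from 172.18.1.2 to 172.18.1.254 are allocated

--dns-nameserver 223.5.5.5 specifies the DNS server address

--gateway 172.18.1.1 specifies the gateway address

--subnet-range 172.18.1.0/24 specifies the subnet's address range

The final selfservice argument is the name of the subnet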

**View the created networks**

openstack network list

**View the created subnets**

openstack subnet list

**Create a router as the regular tenant myuser**

source ~/myuser-openrc

openstack router create router01

**View the created router**

openstack router list

**Add the self-service network's subnet as an interface on the router**

openstack router add subnet router01 selfservice

**Set a gateway on the public provider network for the router**

openstack router set router01 --external-gateway provider

**View the network namespaces: one qrouter namespace and two qdhcp namespaces**

[A blog post explaining ip netns]( )

[root@controller ~]# ip netns

qrouter-919685b9-24c7-4859-b793-48a2add1fd30 (id: 2)

qdhcp-a7acab4d-3d4b-41f8-8d2c-854fb1ff6d4f (id: 0)

qdhcp-926859eb-1e48-44ed-9634-bcabba5eb8b8 (id: 1)

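#After finding the virtual router with ip netns, use it to ping the gateway of the real physical network

#A successful ping proves that the OpenStack internal virtual network is connected to the real physical network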

[root@controller ~]# ip netns exec qrouter-919685b9-24c7-4859-b793-48a2add1fd30 ping 192.168.0.254

PING 192.168.0.254 (192.168.0.254) 56(84) bytes of data.

64 bytes from 192.168.0.254: icmp_seq=1 ttl=128 time=0.570 ms

64 bytes from 192.168.0.254: icmp_seq=2 ttl=128 time=0.276 ms
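Copying the namespace ID by hand is error-prone. A sketch with a hypothetical bash function `find_qrouter_ns` that picks the first `qrouter-` namespace from `ip netns` output; it assumes a single router, as in this walkthrough, and must run on the node whose L3 agent hosts the router:

```shell
# find_qrouter_ns: read "ip netns" output on stdin and print the first qrouter-* name.
find_qrouter_ns() {
  awk '$1 ~ /^qrouter-/ { print $1; exit }'
}

# Example:
#   ns=$(ip netns | find_qrouter_ns) && ip netns exec "$ns" ping -c 2 192.168.0.254
```

Passing `-c 2` to ping stops after two replies instead of running until Ctrl+C.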

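**Verify the IP address ranges of the created networks and subnets; switch back to the admin user**

source ~/admin-openrc

**List the ports on the router to determine the gateway IP address on the provider network**

openstack port list --router router01

…|ip_address='172.18.1.1', |…| ACTIVE

…|ip_address='192.168.0.209', |…| ACTIVE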
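To pull just the addresses out of that listing, a hypothetical bash filter `port_ips` can match the `ip_address='…'` fields. This assumes the Fixed IP Addresses formatting shown above; other client versions may format that column differently.

```shell
# port_ips: print every ip_address='...' value found on stdin, one per line.
port_ips() {
  grep -o "ip_address='[^']*'" | cut -d"'" -f2
}

# Example:
#   openstack port list --router router01 | port_ips
```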

**Verify by pinging this IP address from the controller node or any host on the physical provider network**

[root@controller ~]# ping 192.168.0.209

PING 192.168.0.209 (192.168.0.209) 56(84) bytes of data.

64 bytes from 192.168.0.209: icmp_seq=1 ttl=64 time=0.065 ms

64 bytes from 192.168.0.209: icmp_seq=2 ttl=64 time=0.066 ms

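**Create an m1.nano flavor**

#Flavor: the hardware template for a virtual machine, specifying RAM, disk size, number of CPU cores, and so on

#Create a flavor with 1 vCPU, 128 MB of RAM, and a 1 GB disk for testing with the CirrOS image

openstack flavor create --id 0 --vcpus 1 --ram 128 --disk 1 m1.nano

**View the created flavor**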

**自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。**

**深知大多数Linux运维工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!**

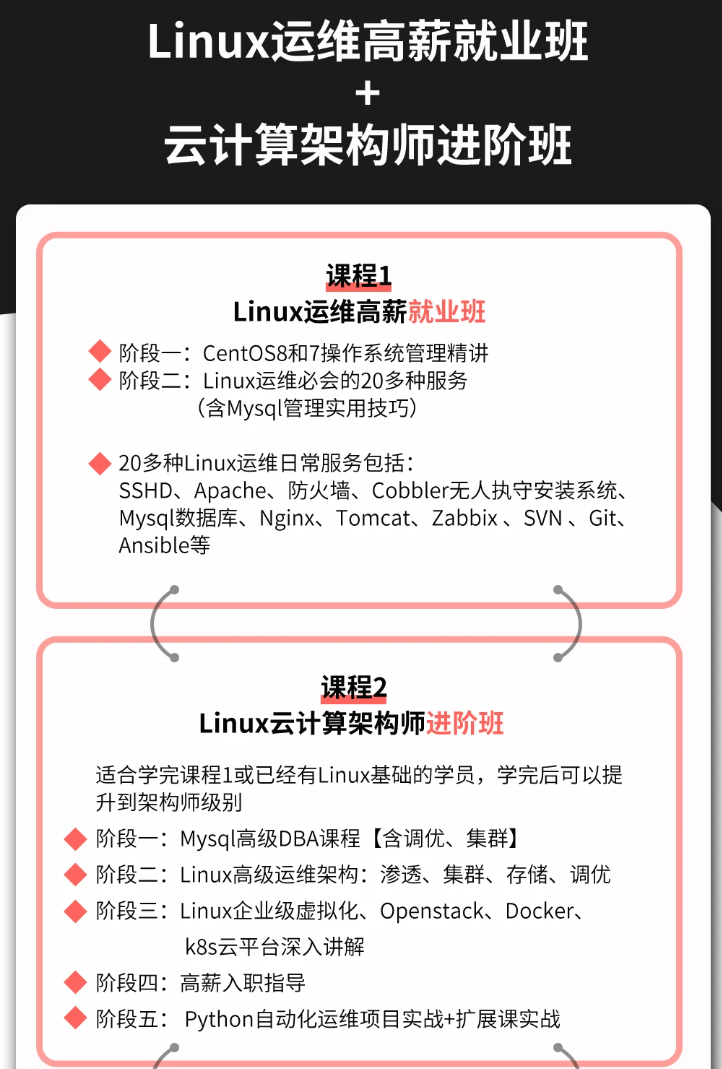

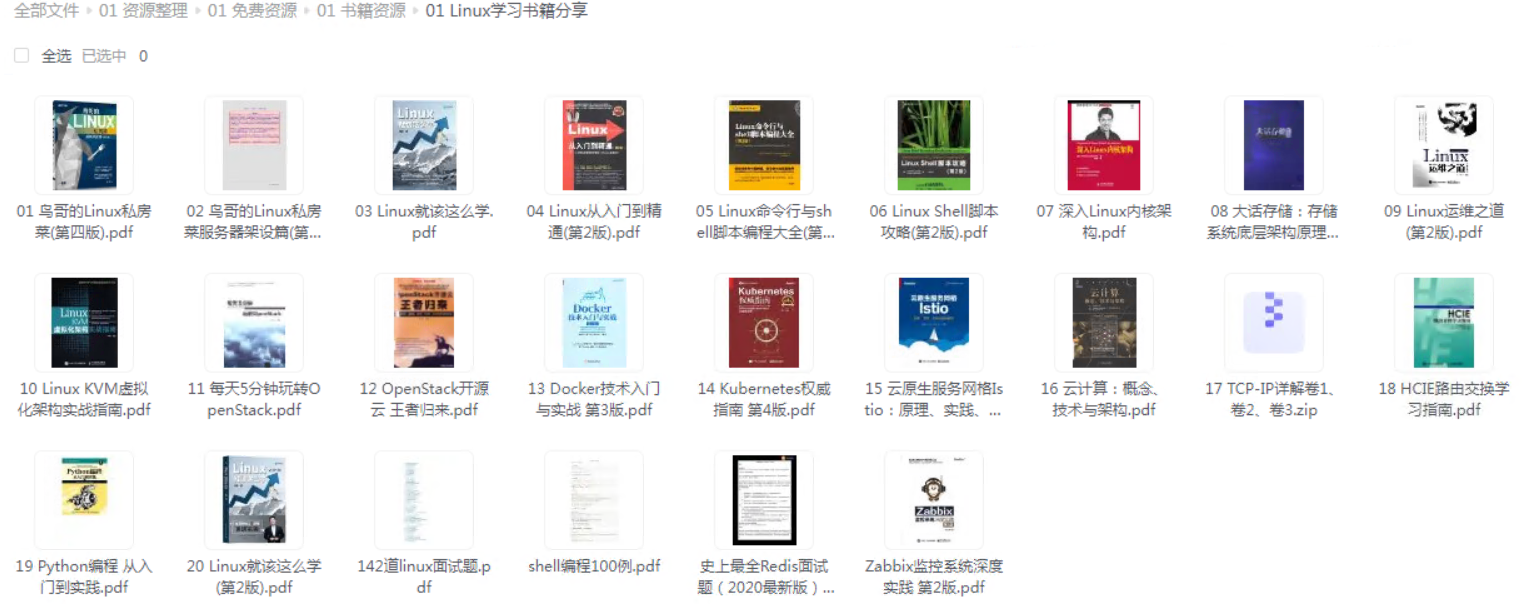

**因此收集整理了一份《2024年Linux运维全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。**

**既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Linux运维知识点,真正体系化!**

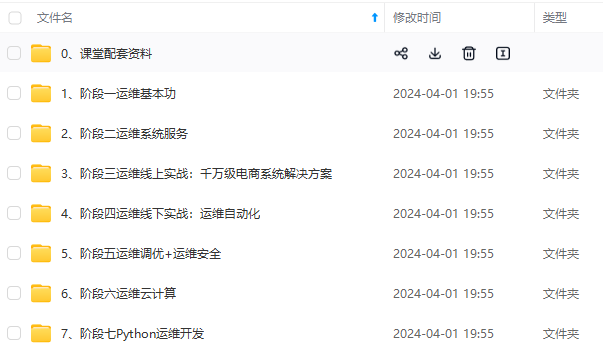

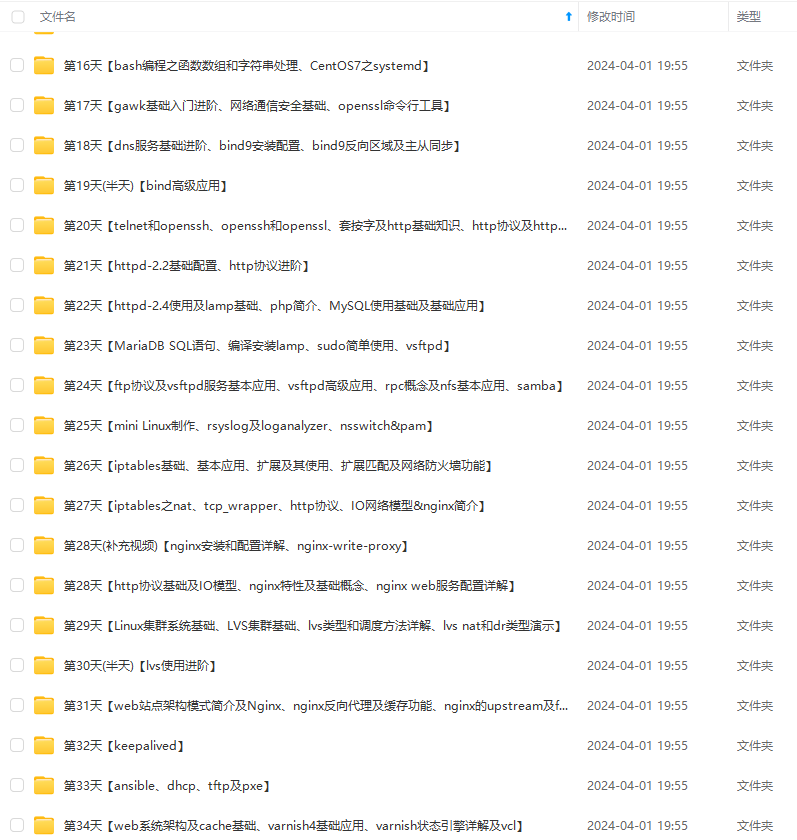

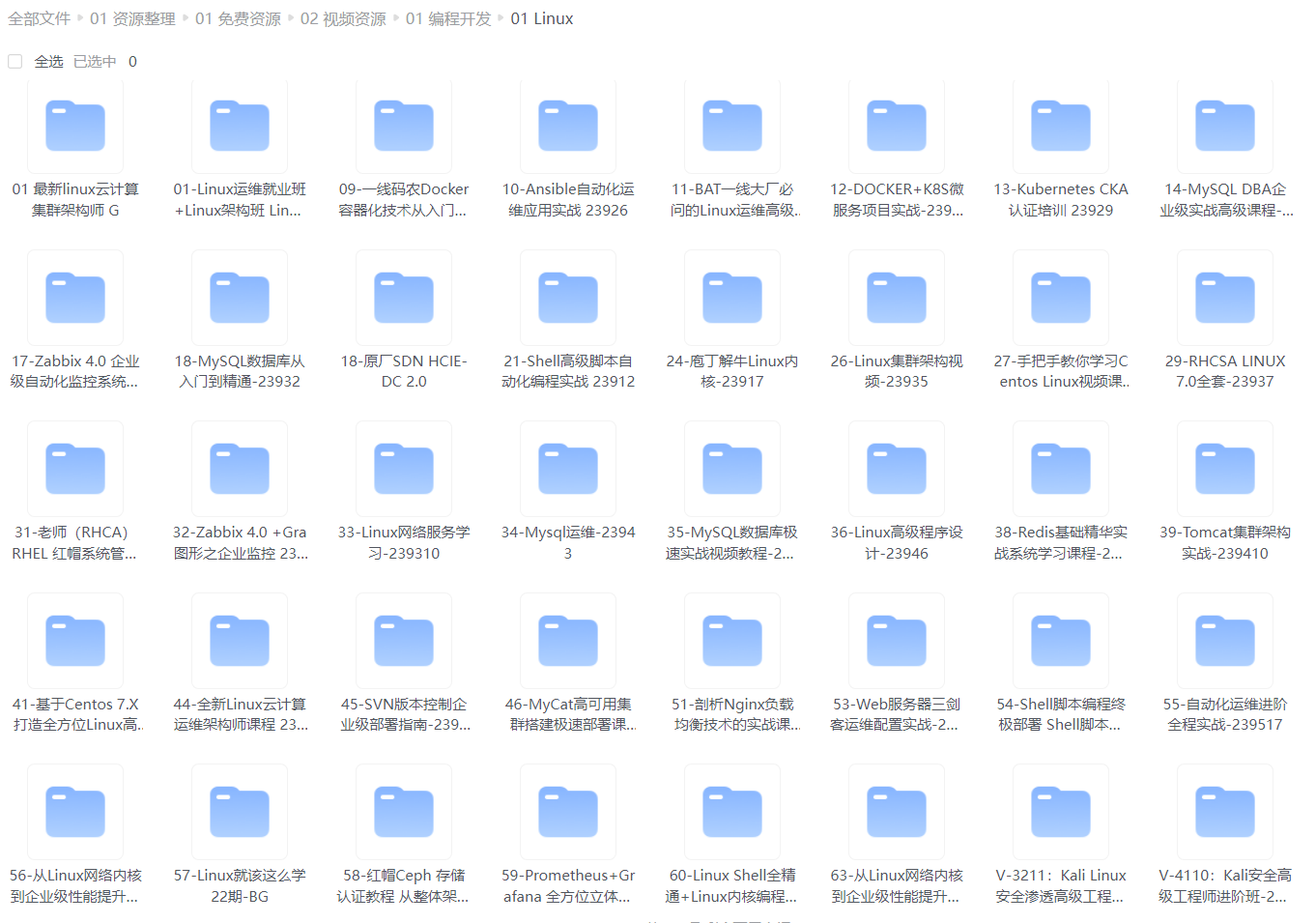

**由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新**

**如果你觉得这些内容对你有帮助,可以添加VX:vip1024b (备注Linux运维获取)**

### 最后的话

最近很多小伙伴找我要Linux学习资料,于是我翻箱倒柜,整理了一些优质资源,涵盖视频、电子书、PPT等共享给大家!

### 资料预览

给大家整理的视频资料:

给大家整理的电子书资料:

**如果本文对你有帮助,欢迎点赞、收藏、转发给朋友,让我有持续创作的动力!**

**一个人可以走的很快,但一群人才能走的更远。不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎扫码加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!**
