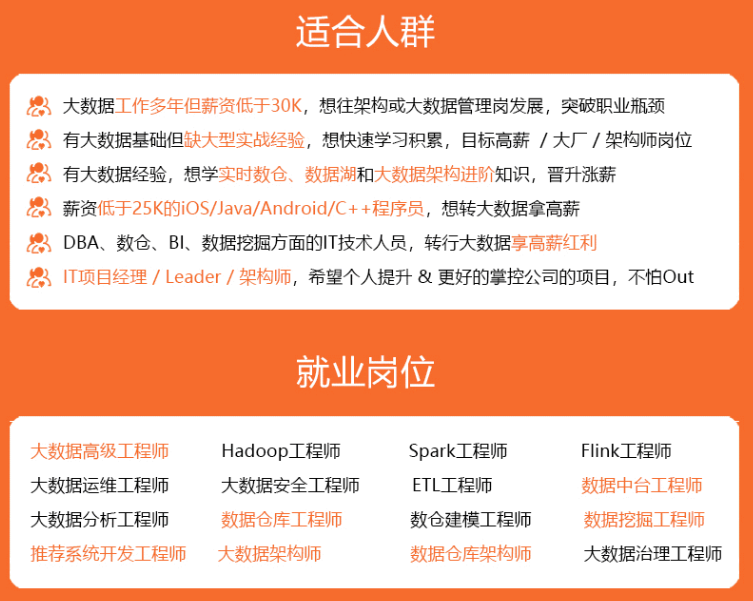

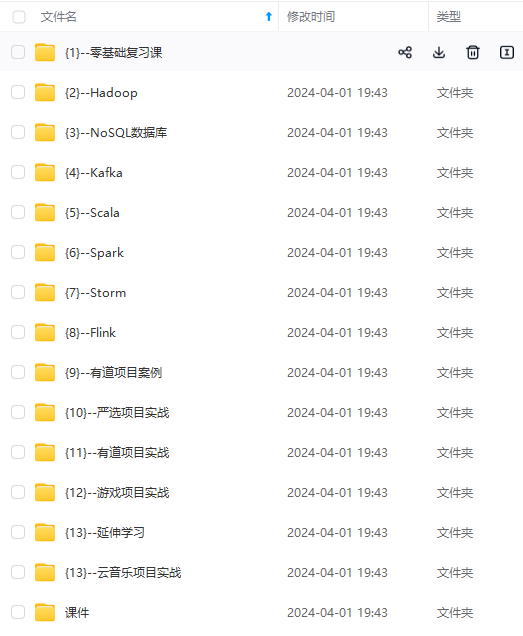

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

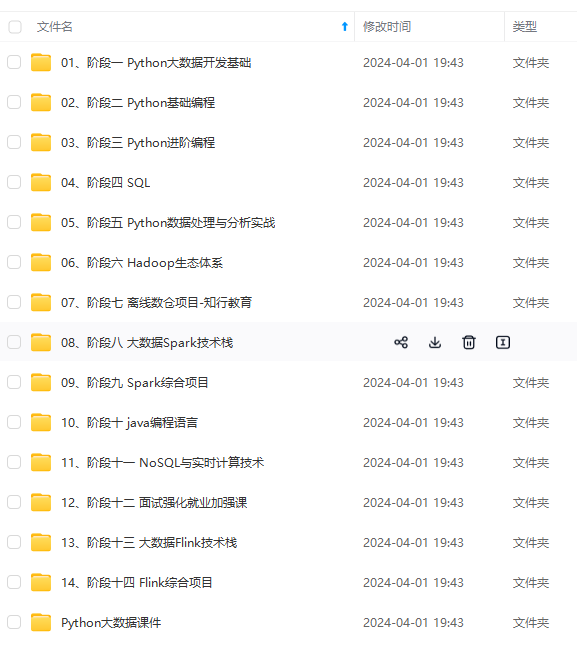

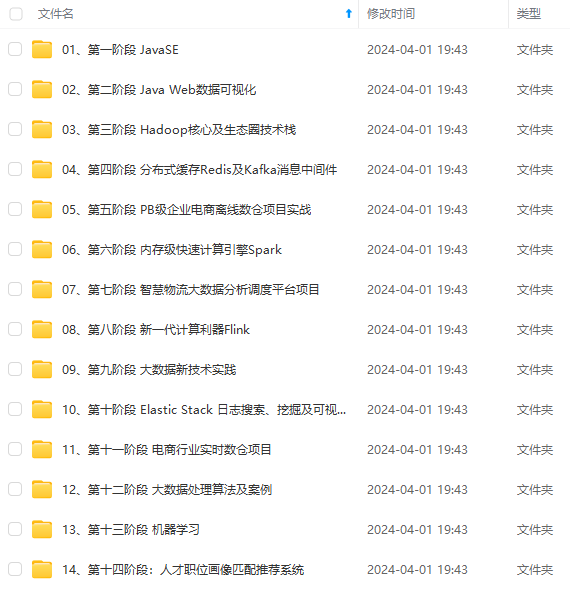

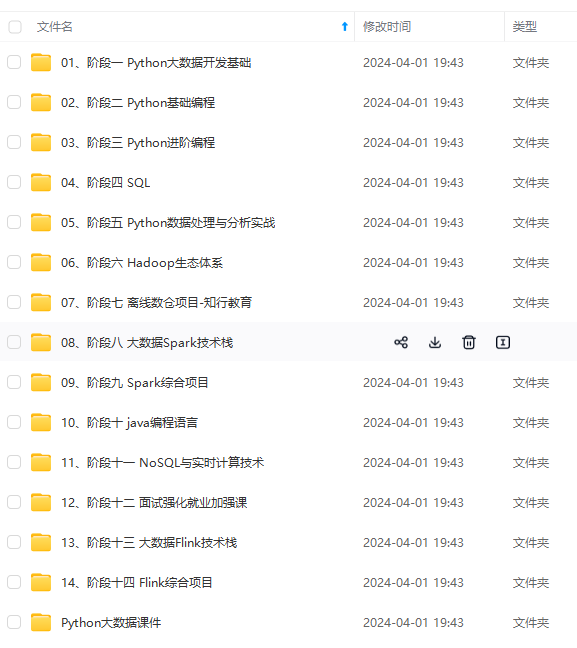

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

```
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.ap…
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:237)
	at scala.Option.getOrElse(Option.scala:120)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:237)
	at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:239)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:237)
	at scala.Option.getOrElse(Option.scala:120)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:237)
	at org.apache.spark.Partitioner$.defaultPartitioner(Partitioner.scala:65)
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$reduceByKey$3.a…
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$reduceByKey$3.apply(PairRDDFunctions.scala:331)
	at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:150)
	at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:111)
	at org.apache.spark.rdd.RDD.withScope(RDD.scala:316)
	at org.apache.spark.rdd.PairRDDFunctions.reduceByKey(PairRDDFunctions.scala:330)
	at cn.itcast.spark.WC$.main(WC.scala:16)
	at cn.itcast.spark.WC.main(WC.scala)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at com.intellij.rt.execution.application.AppMain.main(AppMain.java:140)
16/06/20 11:05:58 INFO SparkContext: Invoking stop() from shutdown hook
16/06/20 11:05:58 INFO SparkUI: Stopped Spark web UI at http://192.168.2.109:4040
16/06/20 11:05:58 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
16/06/20 11:05:58 INFO MemoryStore: MemoryStore cleared
16/06/20 11:05:58 INFO BlockManager: BlockManager stopped
16/06/20 11:05:58 INFO BlockManagerMaster: BlockManagerMaster stopped
16/06/20 11:05:58 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
16/06/20 11:05:58 INFO SparkContext: Successfully stopped SparkContext
16/06/20 11:05:58 INFO ShutdownHookManager: Shutdown hook called
16/06/20 11:05:58 INFO ShutdownHookManager: Deleting directory /private/var/folders/z_/thb8h2gd4hqdcz9z5r9xzl140000gn/T/spark-81bc4c8a-5f7f-45c0-97ab-a82b88619ee5

Process finished with exit code 1
```

===================================================================================================================================

The versions of the key dependencies in my pom.xml are as follows:

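I ran a few tests, keeping every other version unchanged: with hadoop.version set to 2.4.0, 2.4.1, 2.5.2, 2.6.1, or 2.6.4, the error above appears every time. I'm not sure whether this is a Hadoop bug. Someone suggested that recompiling Hadoop from source fixes it, but I haven't tried that.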

Solution:

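Changing hadoop.version to 2.2.0 fixes it, and 2.7.2 also worked when I tested it. My guess is that this is a Hadoop pitfall that had been fixed by 2.7.2. I haven't tried any other versions.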
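A minimal sketch of what the change might look like in pom.xml. It assumes the Hadoop dependency is declared as `org.apache.hadoop:hadoop-client` with its version driven by the `hadoop.version` property; the artifact name is an assumption, and the Spark and Scala entries of the original pom are omitted because their exact versions are not given here.

```xml
<properties>
    <!-- In the tests described above, 2.4.0, 2.4.1, 2.5.2, 2.6.1 and 2.6.4 all failed,
         while 2.2.0 and 2.7.2 worked; other versions were not tested. -->
    <hadoop.version>2.7.2</hadoop.version>
</properties>

<dependencies>
    <!-- Assumption: Hadoop is pulled in via hadoop-client; adjust to match your own pom. -->
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
</dependencies>
```

Since the job in the trace was launched from IntelliJ, you may need to reimport the Maven project after editing the property so the new Hadoop jars actually replace the old ones on the classpath; `mvn dependency:tree` can confirm which Hadoop version is resolved.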
