文末有福利领取哦~

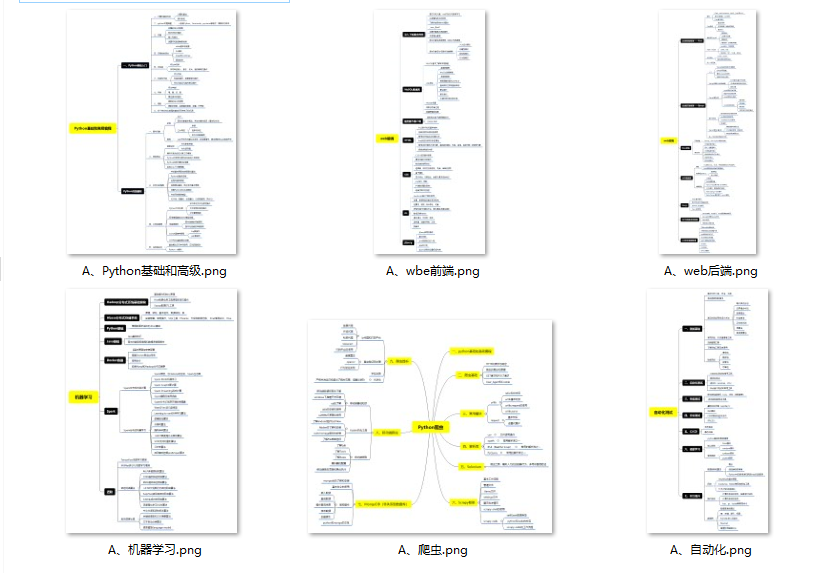

👉一、Python所有方向的学习路线

Python所有方向的技术点做的整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

👉二、Python必备开发工具

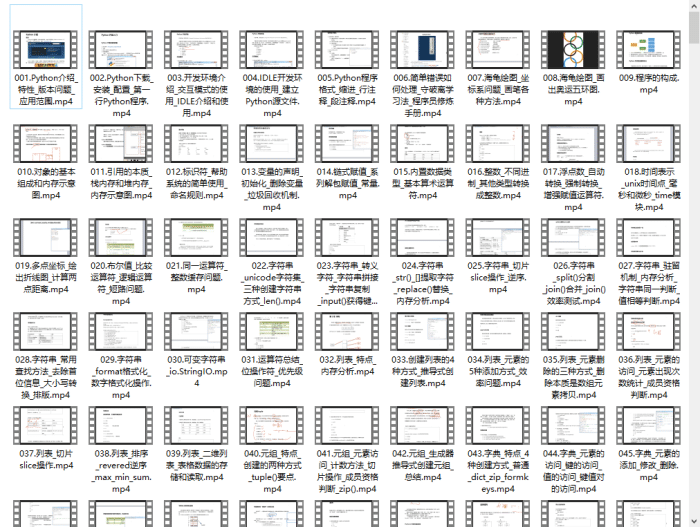

👉三、Python视频合集

观看零基础学习视频,看视频学习是最快捷也是最有效果的方式,跟着视频中老师的思路,从基础到深入,还是很容易入门的。

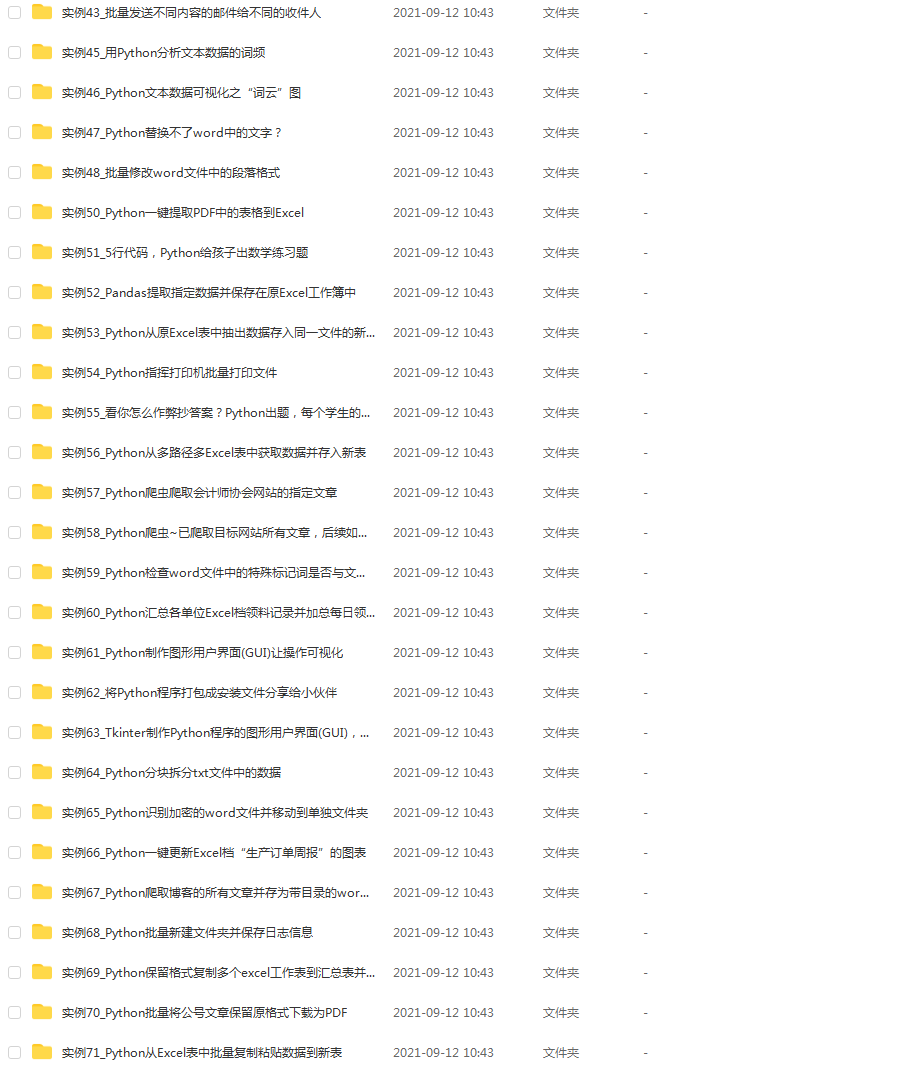

👉 四、实战案例

光学理论是没用的,要学会跟着一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。(文末领读者福利)

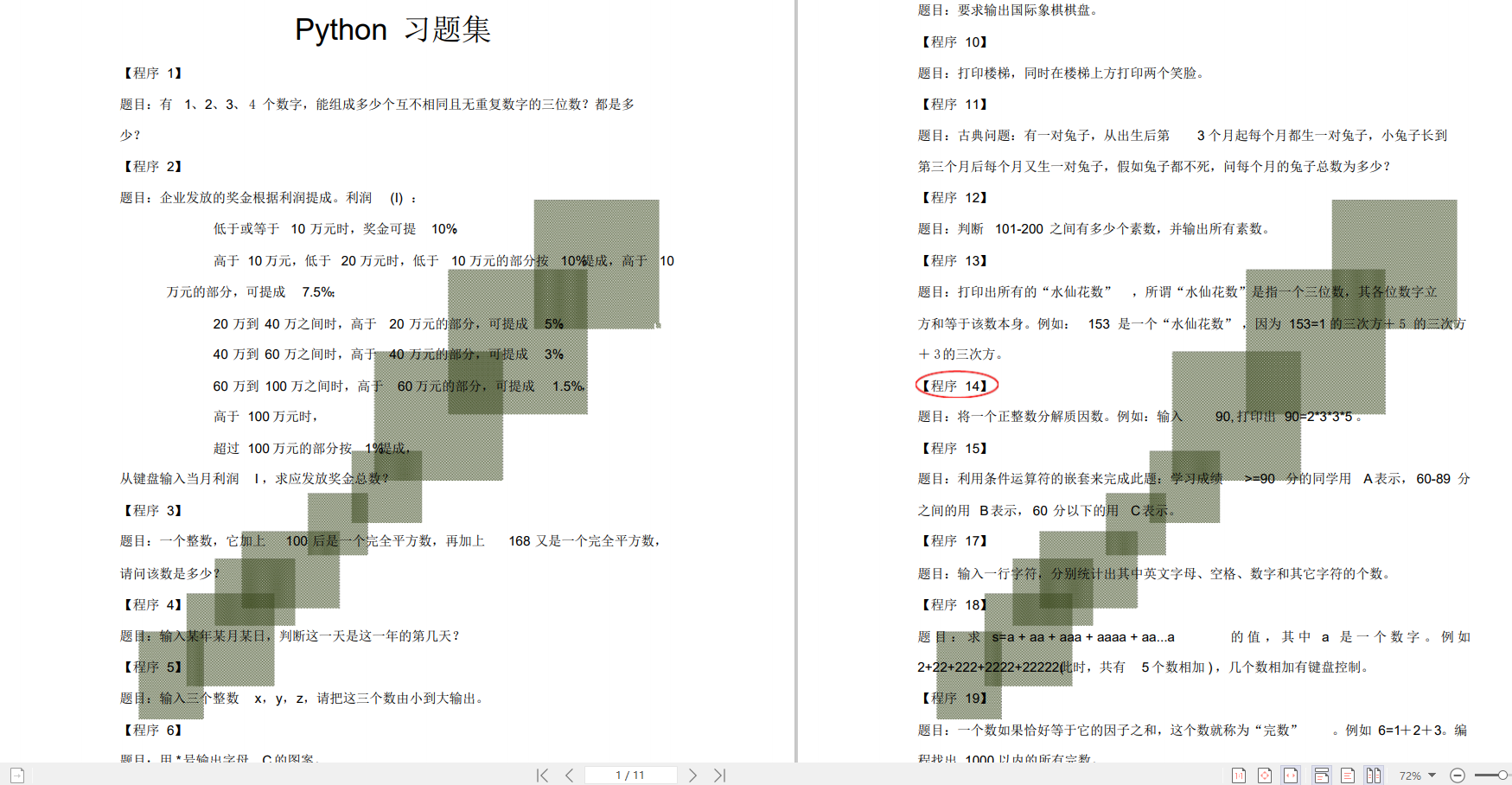

👉五、Python练习题

检查学习结果。

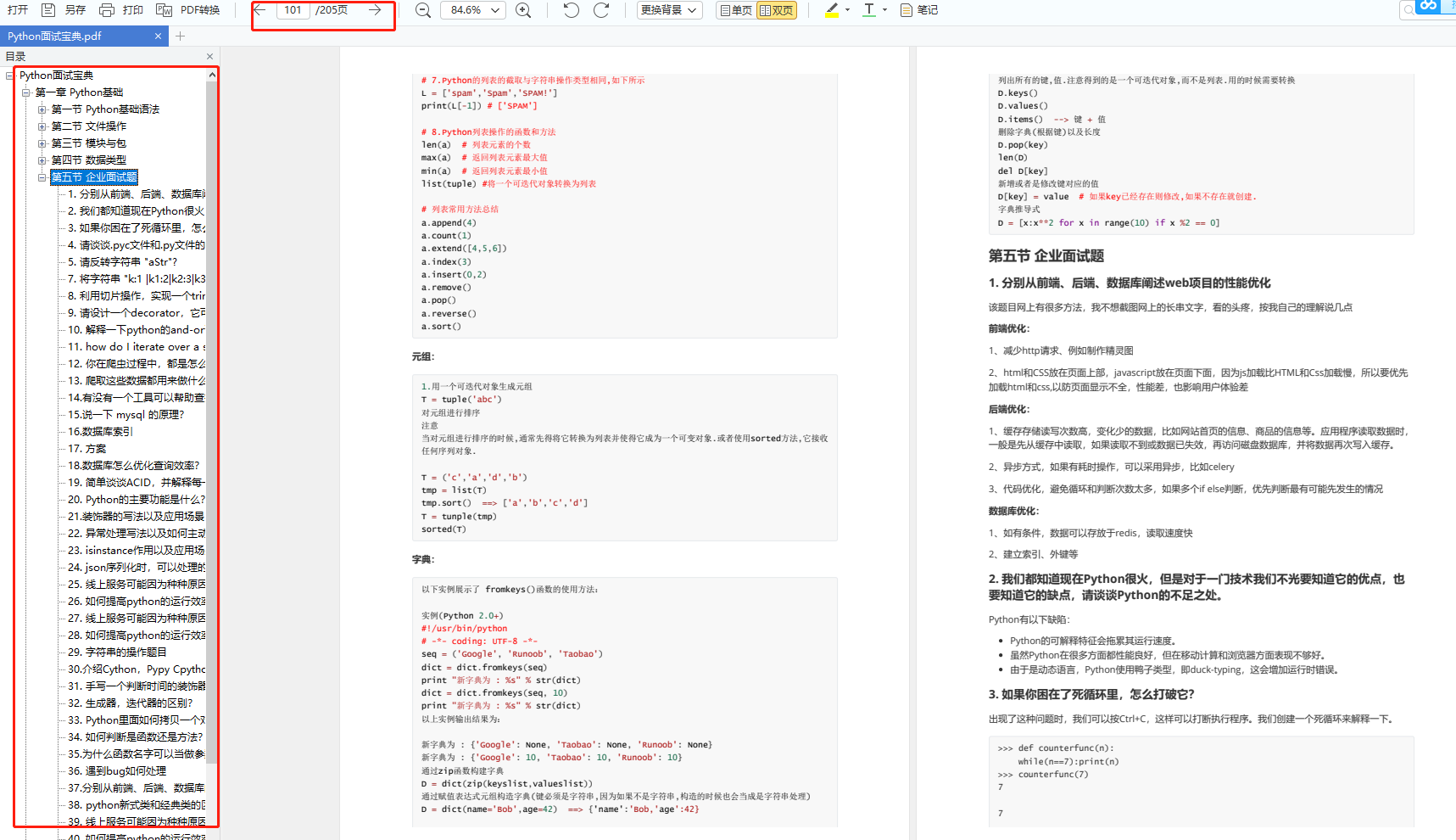

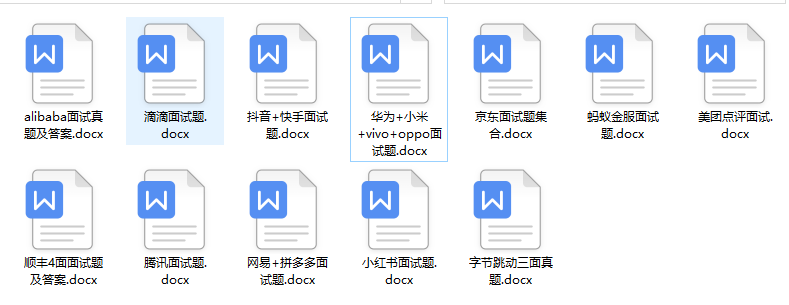

👉六、面试资料

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

👉因篇幅有限,仅展示部分资料,这份完整版的Python全套学习资料已经上传

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

filepath = os.path.join(dataset_directory, “images.tar.gz”)

download_url(

url=“https://www.robots.ox.ac.uk/~vgg/data/pets/data/images.tar.gz”, filepath=filepath,

)

extract_archive(filepath)

Dataset already exists on the disk. Skipping download.

filepath = os.path.join(dataset_directory, “annotations.tar.gz”)

download_url(

url=“https://www.robots.ox.ac.uk/~vgg/data/pets/data/annotations.tar.gz”, filepath=filepath,

)

extract_archive(filepath)

Dataset already exists on the disk. Skipping download.

切分训练集、验证集和测试集

=============

数据集中的某些文件已损坏,因此我们将仅使用OpenCV可以正确加载的那些图像文件。 我们将使用6000张图像进行训练,使用1374张图像进行验证,并使用10张图像进行测试。

root_directory = os.path.join(dataset_directory)

images_directory = os.path.join(root_directory, “images”)

masks_directory = os.path.join(root_directory, “annotations”, “trimaps”)

images_filenames = list(sorted(os.listdir(images_directory)))

correct_images_filenames = [i for i in images_filenames if cv2.imread(os.path.join(images_directory, i)) is not None]

random.seed(42)

random.shuffle(correct_images_filenames)

train_images_filenames = correct_images_filenames[:6000]

val_images_filenames = correct_images_filenames[6000:-10]

test_images_filenames = images_filenames[-10:]

print(len(train_images_filenames), len(val_images_filenames), len(test_images_filenames))

6000 1374 10

定义函数以预处理掩码

==========

数据集包含像素级三图分割。 对于每个图像,都有一个带掩码的关联PNG文件。 掩码的大小等于相关图像的大小。 遮罩图像中的每个像素可以采用以下三个值之一:1、2或3.1表示图像的该像素属于``宠物’‘类别,``2’‘属于背景类别,``3’'属于边界类别。 由于此示例演示了二进制分割的任务(即为每个像素分配两个类别之一),因此我们将对遮罩进行预处理,因此它将仅包含两个唯一值:如果像素是背景,则为0.0;如果像素是背景,则为1.0。 宠物或边界。

def preprocess_mask(mask):

mask = mask.astype(np.float32)

mask[mask == 2.0] = 0.0

mask[(mask == 1.0) | (mask == 3.0)] = 1.0

return mask

定义一个功能以可视化图像及其标签

================

让我们定义一个可视化函数,该函数将获取图像文件名的列表,带图像的目录的路径,带掩码的目录的路径以及带预测掩码的可选参数 (我们稍后将使用此参数来显示模型的预测)。

def display_image_grid(images_filenames, images_directory, masks_directory, predicted_masks=None):

cols = 3 if predicted_masks else 2

rows = len(images_filenames)

figure, ax = plt.subplots(nrows=rows, ncols=cols, figsize=(10, 24))

for i, image_filename in enumerate(images_filenames):

image = cv2.imread(os.path.join(images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

mask = cv2.imread(os.path.join(masks_directory, image_filename.replace(“.jpg”, “.png”)), cv2.IMREAD_UNCHANGED,)

mask = preprocess_mask(mask)

ax[i, 0].imshow(image)

ax[i, 1].imshow(mask, interpolation=“nearest”)

ax[i, 0].set_title(“Image”)

ax[i, 1].set_title(“Ground truth mask”)

ax[i, 0].set_axis_off()

ax[i, 1].set_axis_off()

if predicted_masks:

predicted_mask = predicted_masks[i]

ax[i, 2].imshow(predicted_mask, interpolation=“nearest”)

ax[i, 2].set_title(“Predicted mask”)

ax[i, 2].set_axis_off()

plt.tight_layout()

plt.show()

display_image_grid(test_images_filenames, images_directory, masks_directory)

训练和预测的图像大小

==========

通常,用于训练和推理的图像具有不同的高度和宽度以及不同的纵横比。 这个事实给深度学习管道带来了两个挑战:-PyTorch要求一批中的所有图像都具有相同的高度和宽度。 -如果神经网络不是完全卷积,则在训练和推理过程中,所有图像都必须使用相同的宽度和高度。 完全卷积的体系结构(例如UNet)可以处理任何大小的图像。

有三种常见的方法来应对这些挑战:

1.在训练过程中,将所有图像和掩码调整为固定大小(例如256x256像素)。模型在推理过程中预测具有固定大小的蒙版后,将蒙版调整为原始图像大小。这种方法很简单,但是有一些缺点:-预测的蒙版小于图像,并且蒙版可能会丢失一些上下文和原始图像的重要细节。 -如果数据集中的图像具有不同的宽高比,则此方法可能会出现问题。例如,假设您要调整大小为1024x512像素(因此长宽比为2:1的图像)到256x256像素(长宽比为1:1)的图像。在这种情况下,这种变换会使图像失真,也可能影响预测的质量。

2.如果使用全卷积神经网络,则可以使用图像裁剪训练模型,但可以使用原始图像进行推理。此选项通常在质量,培训速度和硬件要求之间提供最佳折衷。

3.请勿更改图像的大小,并使用源图像进行训练和推理。使用这种方法,您将不会丢失任何信息。但是,原始图像可能会很大,因此它们可能需要大量的GPU内存。同样,此方法需要更多的培训时间才能获得良好的效果。

某些体系结构(例如UNet)要求必须通过网络的下采样因子(通常为32)将图像的大小整除,因此您可能还需要使用边框填充图像。Albumentations 为这种情况提供了一种特殊的转化方式。

以下示例显示了不同类型的图像的外观。

example_image_filename = correct_images_filenames[0]

image = cv2.imread(os.path.join(images_directory, example_image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

resized_image = F.resize(image, height=256, width=256)

padded_image = F.pad(image, min_height=512, min_width=512)

padded_constant_image = F.pad(image, min_height=512, min_width=512, border_mode=cv2.BORDER_CONSTANT)

cropped_image = F.center_crop(image, crop_height=256, crop_width=256)

figure, ax = plt.subplots(nrows=1, ncols=5, figsize=(18, 10))

ax.ravel()[0].imshow(image)

ax.ravel()[0].set_title(“Original image”)

ax.ravel()[1].imshow(resized_image)

ax.ravel()[1].set_title(“Resized image”)

ax.ravel()[2].imshow(cropped_image)

ax.ravel()[2].set_title(“Cropped image”)

ax.ravel()[3].imshow(padded_image)

ax.ravel()[3].set_title(“Image padded with reflection”)

ax.ravel()[4].imshow(padded_constant_image)

ax.ravel()[4].set_title(“Image padded with constant padding”)

plt.tight_layout()

plt.show()

在本教程中,我们将探讨处理图像大小的所有三种方法。

方法1.将所有图像和蒙版调整为固定大小(例如256x256像素)。

=================================

定义一个PyTorch数据集类

接下来,我们定义一个PyTorch数据集。 如果您不熟悉PyTorch数据集,请参阅本教程-https://pytorch.org/tutorials/beginner/data_loading_tutorial.html。 __init__将收到一个可选的转换参数。 它是“白化”增强管道的转换功能。 然后在__getitem__中,Dataset类将使用该函数来扩展图像和遮罩并返回其扩展版本。

class OxfordPetDataset(Dataset):

def init(self, images_filenames, images_directory, masks_directory, transform=None):

self.images_filenames = images_filenames

self.images_directory = images_directory

self.masks_directory = masks_directory

self.transform = transform

def len(self):

return len(self.images_filenames)

def getitem(self, idx):

image_filename = self.images_filenames[idx]

image = cv2.imread(os.path.join(self.images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

mask = cv2.imread(

os.path.join(self.masks_directory, image_filename.replace(“.jpg”, “.png”)), cv2.IMREAD_UNCHANGED,

)

mask = preprocess_mask(mask)

if self.transform is not None:

transformed = self.transform(image=image, mask=mask)

image = transformed[“image”]

mask = transformed[“mask”]

return image, mask

接下来,我们为训练和验证数据集创建增强管道。 请注意,我们使用A.Resize(256,256)将输入图像和蒙版的大小调整为256x256像素。

train_transform = A.Compose(

[

A.Resize(256, 256),

A.ShiftScaleRotate(shift_limit=0.2, scale_limit=0.2, rotate_limit=30, p=0.5),

A.RGBShift(r_shift_limit=25, g_shift_limit=25, b_shift_limit=25, p=0.5),

A.RandomBrightnessContrast(brightness_limit=0.3, contrast_limit=0.3, p=0.5),

A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),

ToTensorV2(),

]

)

train_dataset = OxfordPetDataset(train_images_filenames, images_directory, masks_directory, transform=train_transform,)

val_transform = A.Compose(

[A.Resize(256, 256), A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)), ToTensorV2()]

)

val_dataset = OxfordPetDataset(val_images_filenames, images_directory, masks_directory, transform=val_transform,)

让我们定义一个函数,该函数获取数据集并可视化应用于相同图像和关联蒙版的不同增强。

def visualize_augmentations(dataset, idx=0, samples=5):

dataset = copy.deepcopy(dataset)

dataset.transform = A.Compose([t for t in dataset.transform if not isinstance(t, (A.Normalize, ToTensorV2))])

figure, ax = plt.subplots(nrows=samples, ncols=2, figsize=(10, 24))

for i in range(samples):

image, mask = dataset[idx]

ax[i, 0].imshow(image)

ax[i, 1].imshow(mask, interpolation=“nearest”)

ax[i, 0].set_title(“Augmented image”)

ax[i, 1].set_title(“Augmented mask”)

ax[i, 0].set_axis_off()

ax[i, 1].set_axis_off()

plt.tight_layout()

plt.show()

random.seed(42)

visualize_augmentations(train_dataset, idx=55)

定义训练辅助方法

MetricMonitor helps to track metrics such as accuracy or loss during training and validation.

class MetricMonitor:

def init(self, float_precision=3):

self.float_precision = float_precision

self.reset()

def reset(self):

self.metrics = defaultdict(lambda: {“val”: 0, “count”: 0, “avg”: 0})

def update(self, metric_name, val):

metric = self.metrics[metric_name]

metric[“val”] += val

metric[“count”] += 1

metric[“avg”] = metric[“val”] / metric[“count”]

def str(self):

return " | ".join(

[

“{metric_name}: {avg:.{float_precision}f}”.format(

metric_name=metric_name, avg=metric[“avg”], float_precision=self.float_precision

)

for (metric_name, metric) in self.metrics.items()

]

)

定义训练和验证方法

def train(train_loader, model, criterion, optimizer, epoch, params):

metric_monitor = MetricMonitor()

model.train()

stream = tqdm(train_loader)

for i, (images, target) in enumerate(stream, start=1):

images = images.to(params[“device”], non_blocking=True)

target = target.to(params[“device”], non_blocking=True)

output = model(images).squeeze(1)

loss = criterion(output, target)

metric_monitor.update(“Loss”, loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

stream.set_description(

“Epoch: {epoch}. Train. {metric_monitor}”.format(epoch=epoch, metric_monitor=metric_monitor)

)

def validate(val_loader, model, criterion, epoch, params):

metric_monitor = MetricMonitor()

model.eval()

stream = tqdm(val_loader)

with torch.no_grad():

for i, (images, target) in enumerate(stream, start=1):

images = images.to(params[“device”], non_blocking=True)

target = target.to(params[“device”], non_blocking=True)

output = model(images).squeeze(1)

loss = criterion(output, target)

metric_monitor.update(“Loss”, loss.item())

stream.set_description(

“Epoch: {epoch}. Validation. {metric_monitor}”.format(epoch=epoch, metric_monitor=metric_monitor)

)

def create_model(params):

model = getattr(ternausnet.models, params[“model”])(pretrained=True)

model = model.to(params[“device”])

return model

def train_and_validate(model, train_dataset, val_dataset, params):

train_loader = DataLoader(

train_dataset,

batch_size=params[“batch_size”],

shuffle=True,

num_workers=params[“num_workers”],

pin_memory=True,

)

val_loader = DataLoader(

val_dataset,

batch_size=params[“batch_size”],

shuffle=False,

num_workers=params[“num_workers”],

pin_memory=True,

)

criterion = nn.BCEWithLogitsLoss().to(params[“device”])

optimizer = torch.optim.Adam(model.parameters(), lr=params[“lr”])

for epoch in range(1, params[“epochs”] + 1):

train(train_loader, model, criterion, optimizer, epoch, params)

validate(val_loader, model, criterion, epoch, params)

return model

def predict(model, params, test_dataset, batch_size):

test_loader = DataLoader(

test_dataset, batch_size=batch_size, shuffle=False, num_workers=params[“num_workers”], pin_memory=True,

)

model.eval()

predictions = []

with torch.no_grad():

for images, (original_heights, original_widths) in test_loader:

images = images.to(params[“device”], non_blocking=True)

output = model(images)

probabilities = torch.sigmoid(output.squeeze(1))

predicted_masks = (probabilities >= 0.5).float() * 1

predicted_masks = predicted_masks.cpu().numpy()

for predicted_mask, original_height, original_width in zip(

predicted_masks, original_heights.numpy(), original_widths.numpy()

):

predictions.append((predicted_mask, original_height, original_width))

return predictions

定义训练参数

在这里,我们定义了一些训练参数,例如模型架构,学习率,批量大小,epoch等。

params = {

“model”: “UNet11”,

“device”: “cuda”,

“lr”: 0.001,

“batch_size”: 16,

“num_workers”: 4,

“epochs”: 10,

}

训练模型

model = create_model(params)

model = train_and_validate(model, train_dataset, val_dataset, params)

Epoch: 1. Train. Loss: 0.415: 100%|██████████| 375/375 [01:42<00:00, 3.66it/s]

Epoch: 1. Validation. Loss: 0.210: 100%|██████████| 86/86 [00:09<00:00, 9.55it/s]

Epoch: 2. Train. Loss: 0.257: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 2. Validation. Loss: 0.178: 100%|██████████| 86/86 [00:08<00:00, 10.62it/s]

Epoch: 3. Train. Loss: 0.221: 100%|██████████| 375/375 [01:39<00:00, 3.75it/s]

Epoch: 3. Validation. Loss: 0.168: 100%|██████████| 86/86 [00:08<00:00, 10.58it/s]

Epoch: 4. Train. Loss: 0.209: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 4. Validation. Loss: 0.156: 100%|██████████| 86/86 [00:08<00:00, 10.57it/s]

Epoch: 5. Train. Loss: 0.190: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 5. Validation. Loss: 0.149: 100%|██████████| 86/86 [00:08<00:00, 10.57it/s]

Epoch: 6. Train. Loss: 0.179: 100%|██████████| 375/375 [01:39<00:00, 3.75it/s]

Epoch: 6. Validation. Loss: 0.155: 100%|██████████| 86/86 [00:08<00:00, 10.55it/s]

Epoch: 7. Train. Loss: 0.175: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 7. Validation. Loss: 0.147: 100%|██████████| 86/86 [00:08<00:00, 10.59it/s]

Epoch: 8. Train. Loss: 0.167: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 8. Validation. Loss: 0.146: 100%|██████████| 86/86 [00:08<00:00, 10.61it/s]

Epoch: 9. Train. Loss: 0.165: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 9. Validation. Loss: 0.131: 100%|██████████| 86/86 [00:08<00:00, 10.56it/s]

Epoch: 10. Train. Loss: 0.156: 100%|██████████| 375/375 [01:40<00:00, 3.75it/s]

Epoch: 10. Validation. Loss: 0.140: 100%|██████████| 86/86 [00:08<00:00, 10.60it/s]

预测图像标签并可视化这些预测

现在我们有了训练好的模型,因此让我们尝试预测某些图像的蒙版。 请注意,__ getitem__方法不仅返回图像,还返回图像的原始高度和宽度。 我们将使用这些值将预测蒙版的大小从256x256像素调整为原始图像的大小。

class OxfordPetInferenceDataset(Dataset):

def init(self, images_filenames, images_directory, transform=None):

self.images_filenames = images_filenames

self.images_directory = images_directory

self.transform = transform

def len(self):

return len(self.images_filenames)

def getitem(self, idx):

image_filename = self.images_filenames[idx]

image = cv2.imread(os.path.join(self.images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

original_size = tuple(image.shape[:2])

if self.transform is not None:

transformed = self.transform(image=image)

image = transformed[“image”]

return image, original_size

test_transform = A.Compose(

[A.Resize(256, 256), A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)), ToTensorV2()]

)

test_dataset = OxfordPetInferenceDataset(test_images_filenames, images_directory, transform=test_transform,)

predictions = predict(model, params, test_dataset, batch_size=16)

接下来,我们将将256x256像素大小的预测蒙版调整为原始图像的大小。

predicted_masks = []

for predicted_256x256_mask, original_height, original_width in predictions:

full_sized_mask = F.resize(

predicted_256x256_mask, height=original_height, width=original_width, interpolation=cv2.INTER_NEAREST

)

predicted_masks.append(full_sized_mask)

display_image_grid(test_images_filenames, images_directory, masks_directory, predicted_masks=predicted_masks)

方法1的完整代码(亲测可运行)

===============

from collections import defaultdict

import copy

import random

import os

import shutil

from urllib.request import urlretrieve

import albumentations as A

import albumentations.augmentations.functional as F

from albumentations.pytorch import ToTensorV2

import cv2

import matplotlib.pyplot as plt

import numpy as np

import ternausnet.models

from tqdm import tqdm

import torch

import torch.backends.cudnn as cudnn

import torch.nn as nn

import torch.optim

from torch.utils.data import Dataset, DataLoader

cudnn.benchmark = True

class TqdmUpTo(tqdm):

def update_to(self, b=1, bsize=1, tsize=None):

if tsize is not None:

self.total = tsize

self.update(b * bsize - self.n)

def download_url(url, filepath):

directory = os.path.dirname(os.path.abspath(filepath))

os.makedirs(directory, exist_ok=True)

if os.path.exists(filepath):

print(“Dataset already exists on the disk. Skipping download.”)

return

with TqdmUpTo(unit=“B”, unit_scale=True, unit_divisor=1024, miniters=1, desc=os.path.basename(filepath)) as t:

urlretrieve(url, filename=filepath, reporthook=t.update_to, data=None)

t.total = t.n

def extract_archive(filepath):

extract_dir = os.path.dirname(os.path.abspath(filepath))

shutil.unpack_archive(filepath, extract_dir)

def preprocess_mask(mask):

mask = mask.astype(np.float32)

mask[mask == 2.0] = 0.0

mask[(mask == 1.0) | (mask == 3.0)] = 1.0

return mask

def display_image_grid(images_filenames, images_directory, masks_directory, predicted_masks=None):

cols = 3 if predicted_masks else 2

rows = len(images_filenames)

figure, ax = plt.subplots(nrows=rows, ncols=cols, figsize=(10, 24))

for i, image_filename in enumerate(images_filenames):

image = cv2.imread(os.path.join(images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

mask = cv2.imread(os.path.join(masks_directory, image_filename.replace(“.jpg”, “.png”)), cv2.IMREAD_UNCHANGED, )

mask = preprocess_mask(mask)

ax[i, 0].imshow(image)

ax[i, 1].imshow(mask, interpolation=“nearest”)

ax[i, 0].set_title(“Image”)

ax[i, 1].set_title(“Ground truth mask”)

ax[i, 0].set_axis_off()

ax[i, 1].set_axis_off()

if predicted_masks:

predicted_mask = predicted_masks[i]

ax[i, 2].imshow(predicted_mask, interpolation=“nearest”)

ax[i, 2].set_title(“Predicted mask”)

ax[i, 2].set_axis_off()

plt.tight_layout()

plt.show()

class OxfordPetDataset(Dataset):

def init(self, images_filenames, images_directory, masks_directory, transform=None):

self.images_filenames = images_filenames

self.images_directory = images_directory

self.masks_directory = masks_directory

self.transform = transform

def len(self):

return len(self.images_filenames)

def getitem(self, idx):

image_filename = self.images_filenames[idx]

image = cv2.imread(os.path.join(self.images_directory, image_filename))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

mask = cv2.imread(

os.path.join(self.masks_directory, image_filename.replace(“.jpg”, “.png”)), cv2.IMREAD_UNCHANGED,

)

mask = preprocess_mask(mask)

if self.transform is not None:

transformed = self.transform(image=image, mask=mask)

image = transformed[“image”]

mask = transformed[“mask”]

return image, mask

def visualize_augmentations(dataset, idx=0, samples=5):

dataset = copy.deepcopy(dataset)

dataset.transform = A.Compose([t for t in dataset.transform if not isinstance(t, (A.Normalize, ToTensorV2))])

figure, ax = plt.subplots(nrows=samples, ncols=2, figsize=(10, 24))

for i in range(samples):

image, mask = dataset[idx]

ax[i, 0].imshow(image)

ax[i, 1].imshow(mask, interpolation=“nearest”)

ax[i, 0].set_title(“Augmented image”)

ax[i, 1].set_title(“Augmented mask”)

ax[i, 0].set_axis_off()

ax[i, 1].set_axis_off()

plt.tight_layout()

plt.show()

class MetricMonitor:

def init(self, float_precision=3):

self.float_precision = float_precision

self.reset()

def reset(self):

self.metrics = defaultdict(lambda: {“val”: 0, “count”: 0, “avg”: 0})

def update(self, metric_name, val):

metric = self.metrics[metric_name]

metric[“val”] += val

metric[“count”] += 1

metric[“avg”] = metric[“val”] / metric[“count”]

def str(self):

return " | ".join(

[

“{metric_name}: {avg:.{float_precision}f}”.format(

metric_name=metric_name, avg=metric[“avg”], float_precision=self.float_precision

)

for (metric_name, metric) in self.metrics.items()

]

)

def train(train_loader, model, criterion, optimizer, epoch, params):

metric_monitor = MetricMonitor()

model.train()

stream = tqdm(train_loader)

for i, (images, target) in enumerate(stream, start=1):

images = images.to(params[“device”], non_blocking=True)

target = target.to(params[“device”], non_blocking=True)

output = model(images).squeeze(1)

loss = criterion(output, target)

metric_monitor.update(“Loss”, loss.item())

optimizer.zero_grad()

loss.backward()

optimizer.step()

stream.set_description(

“Epoch: {epoch}. Train. {metric_monitor}”.format(epoch=epoch, metric_monitor=metric_monitor)

)

def validate(val_loader, model, criterion, epoch, params):

metric_monitor = MetricMonitor()

model.eval()

stream = tqdm(val_loader)

with torch.no_grad():

for i, (images, target) in enumerate(stream, start=1):

images = images.to(params[“device”], non_blocking=True)

target = target.to(params[“device”], non_blocking=True)

output = model(images).squeeze(1)

loss = criterion(output, target)

metric_monitor.update(“Loss”, loss.item())

stream.set_description(

“Epoch: {epoch}. Validation. {metric_monitor}”.format(epoch=epoch, metric_monitor=metric_monitor)

)

def create_model(params):

model = getattr(ternausnet.models, params[“model”])(pretrained=True)

model = model.to(params[“device”])

return model

def train_and_validate(model, train_dataset, val_dataset, params):

train_loader = DataLoader(

train_dataset,

batch_size=params[“batch_size”],

shuffle=True,

num_workers=params[“num_workers”],

pin_memory=True,

)

val_loader = DataLoader(

val_dataset,

batch_size=params[“batch_size”],

shuffle=False,

num_workers=params[“num_workers”],

pin_memory=True,

)

criterion = nn.BCEWithLogitsLoss().to(params[“device”])

optimizer = torch.optim.Adam(model.parameters(), lr=params[“lr”])

for epoch in range(1, params[“epochs”] + 1):

train(train_loader, model, criterion, optimizer, epoch, params)

validate(val_loader, model, criterion, epoch, params)

return model

def predict(model, params, test_dataset, batch_size):

test_loader = DataLoader(

test_dataset, batch_size=batch_size, shuffle=False, num_workers=params[“num_workers”], pin_memory=True,

)

model.eval()

predictions = []

with torch.no_grad():

for images, (original_heights, original_widths) in test_loader:

images = images.to(params[“device”], non_blocking=True)

最后

不知道你们用的什么环境,我一般都是用的Python3.6环境和pycharm解释器,没有软件,或者没有资料,没人解答问题,都可以免费领取(包括今天的代码),过几天我还会做个视频教程出来,有需要也可以领取~

给大家准备的学习资料包括但不限于:

Python 环境、pycharm编辑器/永久激活/翻译插件

python 零基础视频教程

Python 界面开发实战教程

Python 爬虫实战教程

Python 数据分析实战教程

python 游戏开发实战教程

Python 电子书100本

Python 学习路线规划

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

2万+

2万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?