
Hi folks, V here. Today let's talk briefly about the issues you may hit when using Logstash to migrate index data from one ES cluster to another.

What is Logstash

https://www.elastic.co/guide/en/logstash/7.10/introduction.html

If you don't know what it is, have a look at the official docs.

Download Logstash

https://www.elastic.co/cn/downloads/past-releases#logstash

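Try to pick the same version as your ES cluster; with a mismatched version you may run into compatibility problems.

For example, our production ES is 7.10.0, so I picked Logstash 7.10.0.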
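If you manage several clusters, a tiny check like this can flag a version mismatch before you start. This is a minimal sketch: the helper names are hypothetical, and reporting a same-major-version pair separately is an assumption. The safest choice is still the identical version, as recommended above.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse a dotted version string such as '7.10.0' into (7, 10, 0)."""
    return tuple(int(part) for part in version.strip().split("."))


def compare_versions(es_version: str, logstash_version: str) -> str:
    """Classify how closely the Logstash version matches the ES version.

    Returns 'identical', 'same-major' or 'different-major'.
    Treating 'same-major' as lower risk is an assumption, not a guarantee.
    """
    es = parse_version(es_version)
    ls = parse_version(logstash_version)
    if es == ls:
        return "identical"
    if es[0] == ls[0]:
        return "same-major"
    return "different-major"


print(compare_versions("7.10.0", "7.10.0"))  # identical
print(compare_versions("7.10.0", "8.12.2"))  # different-major
```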

Running

Run it directly

```shell
bin/logstash -f config/es/xxx.conf --path.data=/opt/apps/logstash-7.10.0/datas/xxx -b 100
```

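Parameters

- `-f`: path to the config file
- `-b`: batch size, i.e. the maximum number of events each worker thread collects before running filters and outputs
- `-w`: number of pipeline worker threads; usually you don't need to set it, since it defaults to the number of CPU cores
- `--path.data`: a directory Logstash has write access to, used whenever it needs to store data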

For details on all parameters, see the docs:

https://www.elastic.co/guide/en/logstash/7.10/running-logstash-command-line.html
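The same command can be assembled programmatically, which helps when running several migration jobs side by side. Each Logstash instance needs its own `--path.data` directory, because Logstash refuses to start if another instance is already using the data directory. This is a minimal sketch: `build_logstash_argv` is a hypothetical helper, and the paths are just the example ones from above.

```python
import shlex
from typing import List, Optional


def build_logstash_argv(conf_path: str, data_dir: str, batch_size: int = 100,
                        workers: Optional[int] = None,
                        logstash_bin: str = "bin/logstash") -> List[str]:
    """Build the argument list for one migration job (hypothetical helper)."""
    argv = [logstash_bin, "-f", conf_path, f"--path.data={data_dir}",
            "-b", str(batch_size)]
    if workers is not None:
        # Only pass -w when overriding the default (number of CPU cores).
        argv += ["-w", str(workers)]
    return argv


argv = build_logstash_argv("config/es/xxx.conf",
                           "/opt/apps/logstash-7.10.0/datas/xxx")
print(shlex.join(argv))
# bin/logstash -f config/es/xxx.conf --path.data=/opt/apps/logstash-7.10.0/datas/xxx -b 100
```

Giving every job a distinct `data_dir` (for example, one per index) avoids the data-directory lock conflict when several jobs run at once.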

Run it in the background

```shell
nohup bin/logstash -f config/es/xxx.conf --path.data=/opt/apps/logstash-7.10.0/datas/xxx -b 100 > /opt/apps/log/xxx.log 2>&1 &
```

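`nohup` keeps Logstash running after you close the terminal. `> /opt/apps/log/xxx.log 2>&1` sends both stdout and stderr to the log file, and the trailing `&` puts the process in the background. If you want more detail on nohup, look it up.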
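If you start the job from a Python script rather than a shell, the sketch below is a rough analogue of `nohup ... > xxx.log 2>&1 &`. It uses a different mechanism from `nohup`: instead of making the process ignore SIGHUP, it starts the process in a new session (`start_new_session=True`, POSIX only), detached from the terminal. `launch_detached` is a hypothetical helper.

```python
import subprocess
from typing import List


def launch_detached(argv: List[str], log_path: str) -> subprocess.Popen:
    """Start argv in the background with stdout+stderr going to log_path.

    Rough analogue of `nohup cmd > log_path 2>&1 &` (hypothetical helper).
    """
    with open(log_path, "wb") as log:  # "wb" truncates, like `>`
        proc = subprocess.Popen(
            argv,
            stdout=log,
            stderr=subprocess.STDOUT,   # like 2>&1
            stdin=subprocess.DEVNULL,
            start_new_session=True,     # POSIX: setsid(), detach from the terminal
        )
    # The child keeps its own copy of the log file descriptor,
    # so closing ours here does not stop its output.
    return proc


# Usage (paths from the example above):
# proc = launch_detached(
#     ["bin/logstash", "-f", "config/es/xxx.conf",
#      "--path.data=/opt/apps/logstash-7.10.0/datas/xxx", "-b", "100"],
#     "/opt/apps/log/xxx.log",
# )
# print(proc.pid)
```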

Config file

Docs for the upstream (source) elasticsearch input plugin:

https://www.elastic.co/guide/en/logstash/7.10/plugins-inputs-elasticsearch.html

Docs for the downstream (target) elasticsearch output plugin:

https://www.elastic.co/guide/en/logstash/7.10/plugins-outputs-elasticsearch.html

After grinding through the docs, I had the config file written:

```conf
input {
  # upstream (source cluster)
  elasticsearch {
    hosts => "http://es1.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "10m"
    docinfo => true
  }
}

output {
  # downstream (target cluster)
  elasticsearch {
    hosts => "http://es2.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    document_id => "%{[@metadata][_id]}"
  }
}
```
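To make it clearer what `docinfo => true` and `document_id => "%{[@metadata][_id]}"` do, here is a rough Python model of what happens to each document. It is a simplification, not Logstash's actual code:

- The input copies the hit's `_index`, `_type` and `_id` (the plugin's default `docinfo_fields`) into `[@metadata]`.
- The output reuses the original `_id`.
- `@metadata` itself is never sent to Elasticsearch.

Keeping the source `_id` means that rerunning the job overwrites documents instead of creating duplicates. The sample hit's `_source` content is made up.

```python
import json
from typing import Any, Dict, List, Sequence

# Default docinfo_fields of the elasticsearch input plugin in 7.x.
DEFAULT_DOCINFO_FIELDS = ("_index", "_type", "_id")


def hit_to_event(hit: Dict[str, Any],
                 docinfo_fields: Sequence[str] = DEFAULT_DOCINFO_FIELDS) -> Dict[str, Any]:
    """Model of `docinfo => true`: copy hit metadata into [@metadata].

    Real Logstash events also get @timestamp and @version; omitted here.
    """
    event = dict(hit["_source"])
    event["@metadata"] = {f: hit[f] for f in docinfo_fields if f in hit}
    return event


def event_to_bulk_lines(event: Dict[str, Any], target_index: str) -> List[str]:
    """Model of the output: reuse the source _id, never send @metadata."""
    action = {"index": {"_index": target_index,
                        "_id": event["@metadata"]["_id"]}}
    body = {k: v for k, v in event.items() if k != "@metadata"}
    return [json.dumps(action), json.dumps(body)]


hit = {"_index": "xxx", "_type": "_doc", "_id": "ded5349e62e678cbf222560e5da90a47",
       "_source": {"status": 1}}
for line in event_to_bulk_lines(hit_to_event(hit), "xxx"):
    print(line)
```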

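Simple, right? Migrating data from one ES cluster to another isn't hard, but you'll still run into a few problems.

Problems encountered

Documents indexed with routing

If you just push the config above through as-is, you'll get warnings like these: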

```text
[2024-03-04T10:56:51,751][WARN ][logstash.outputs.elasticsearch][[main]>worker6][main][b7552c5d93f7de321e4e8f1e6da7bf8ec4696e8dff2bb087018235182d1f7fe2] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"ded5349e62e678cbf222560e5da90a47", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x5d3bdb61>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"ded5349e62e678cbf222560e5da90a47", "status"=>400, "error"=>{"type"=>"routing_missing_exception", "reason"=>"routing is required for [xxx]/[_doc]/[ded5349e62e678cbf222560e5da90a47]", "index_uuid"=>"_na_", "index"=>"xxx"}}}}
[2024-03-04T10:56:51,751][WARN ][logstash.outputs.elasticsearch][[main]>worker8][main][b7552c5d93f7de321e4e8f1e6da7bf8ec4696e8dff2bb087018235182d1f7fe2] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"1181a16445b0069dc824fdde48454b57", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x5a1ba4d6>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"1181a16445b0069dc824fdde48454b57", "status"=>400, "error"=>{"type"=>"routing_missing_exception", "reason"=>"routing is required for [xxx]/[_doc]/[1181a16445b0069dc824fdde48454b57]", "index_uuid"=>"_na_", "index"=>"xxx"}}}}
```

What's going on? The key part is:

```text
{"type"=>"routing_missing_exception", "reason"=>"routing is required for [xxx]/[_doc]/[ded5349e62e678cbf222560e5da90a47]", "index_uuid"=>"_na_", "index"=>"xxx"}
```

So the write requests didn't specify a routing value.
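When a migration produces thousands of these WARN lines, it helps to tally them by error type first. This small sketch assumes the log format shown above, with its Ruby-hash style `"error"=>{"type"=>"..."}`. The regex is tuned to these lines and may need tweaks for other Logstash versions; `count_index_errors` is a hypothetical helper.

```python
import re
from collections import Counter
from typing import Iterable

# Tuned to the WARN lines above: ..."error"=>{"type"=>"routing_missing_exception", ...
ERROR_TYPE_RE = re.compile(r'"error"=>\{"type"=>"([A-Za-z_]+)"')


def count_index_errors(lines: Iterable[str]) -> Counter:
    """Count failed index events per Elasticsearch error type."""
    counts: Counter = Counter()
    for line in lines:
        if "Could not index event to Elasticsearch" not in line:
            continue
        match = ERROR_TYPE_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Usage: tally a log file written by the nohup command.
# with open("/opt/apps/log/xxx.log", encoding="utf-8") as f:
#     print(count_index_errors(f).most_common())
```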

Let's look at the index info:

```json
{
  "xxx" : {
    "aliases" : { },
    "mappings" : {
      "_routing" : {
        "required" : true
      },
      "properties" : {
      }
    },
    "settings" : {
    }
  }
}
```
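Before migrating a batch of indices, you can check which ones require routing by looking at this same index info. This is a minimal sketch. It assumes you already have the index info (as returned by `GET /<index>`) parsed into a dict with the shape shown above; `routing_required` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def routing_required(index_info: Dict[str, Any], index: str) -> bool:
    """True if the index mapping declares `_routing: {required: true}`."""
    mappings = index_info.get(index, {}).get("mappings", {})
    return bool(mappings.get("_routing", {}).get("required", False))


# The index info shown above for index "xxx".
info = json.loads("""
{"xxx": {"aliases": {},
         "mappings": {"_routing": {"required": true}, "properties": {}},
         "settings": {}}}
""")
print(routing_required(info, "xxx"))
```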

That explains it: the index requires routing. After some edits, the config file looks like this:

```conf
input {
  elasticsearch {
    hosts => "http://es1.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "1m"
    docinfo => true
    # add _routing to the metadata copied from each hit
    docinfo_fields => ["_index", "_id", "_type", "_routing"]
  }
}

output {
  elasticsearch {
    hosts => "http://es2.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    document_id => "%{[@metadata][_id]}"
    # pass the source document's routing through
    routing => "%{[@metadata][_routing]}"
  }
}
```

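Now here's the catch. If you use this as a template for all your indices, then whenever an upstream document has no routing, Logstash can't resolve `%{[@metadata][_routing]}`. It sends the literal string `%{[@metadata][_routing]}` downstream as the routing value. Honestly, that drove me nuts; Logstash isn't smart about this at all. Can it be solved? Hang on, read to the end and you'll see.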
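The root cause is how Logstash interpolates `%{...}` references: if the field doesn't exist on the event, the reference is left in the string verbatim. The Python sketch below mimics that behaviour (bracketed references only). It also adds a guard that returns no routing when the source document had none.

In Logstash itself, the same idea could be expressed by wrapping two `elasticsearch` outputs in a conditional such as `if [@metadata][_routing] { ... } else { ... }`. That is just one possible approach. All function names here are hypothetical.

```python
import re
from typing import Any, Dict, Optional

# Bracketed field references such as %{[@metadata][_routing]}.
REF_RE = re.compile(r"%\{((?:\[[^\]]+\])+)\}")
KEY_RE = re.compile(r"\[([^\]]+)\]")


def get_field(event: Dict[str, Any], ref: str) -> Optional[Any]:
    """Resolve a reference like '[@metadata][_routing]'; None if absent."""
    node: Any = event
    for key in KEY_RE.findall(ref):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def sprintf(event: Dict[str, Any], template: str) -> str:
    """Mimic Logstash interpolation: unresolved references stay verbatim."""
    def substitute(match: "re.Match[str]") -> str:
        value = get_field(event, match.group(1))
        return match.group(0) if value is None else str(value)
    return REF_RE.sub(substitute, template)


def routing_for(event: Dict[str, Any]) -> Optional[str]:
    """Guard: only return a routing value if the source document had one."""
    value = get_field(event, "[@metadata][_routing]")
    return None if value in (None, "") else str(value)


with_routing = {"@metadata": {"_id": "1", "_routing": "u42"}}
without_routing = {"@metadata": {"_id": "2"}}
print(sprintf(with_routing, "%{[@metadata][_routing]}"))     # u42
print(sprintf(without_routing, "%{[@metadata][_routing]}"))  # %{[@metadata][_routing]}
print(routing_for(without_routing))                          # None
```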

Strict index mapping: @timestamp and @version can't be written

With that fixed, the job ran for a while and then hit another problem:

```text
[2024-03-04T11:43:48,372][WARN ][logstash.outputs.elasticsearch][[main]>worker0][main][23eda3c9518e4ba5a787adadf9714d5512c8ad9a9754020744b84ca81fe1bedc] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"110109711637125402", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x5e156236>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"110109711637125402", "status"=>400, "error"=>{"type"=>"strict_dynamic_mapping_exception", "reason"=>"mapping set to strict, dynamic introduction of [@timestamp] within [_doc] is not allowed"}}}}
[2024-03-04T11:43:48,372][WARN ][logstash.outputs.elasticsearch][[main]>worker0][main][23eda3c9518e4ba5a787adadf9714d5512c8ad9a9754020744b84ca81fe1bedc] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"110109711960916147", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x75333e01>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"110109711960916147", "status"=>400, "error"=>{"type"=>"strict_dynamic_mapping_exception", "reason"=>"mapping set to strict, dynamic introduction of [@timestamp] within [_doc] is not allowed"}}}}
[2024-03-04T11:43:48,372][WARN ][logstash.outputs.elasticsearch][[main]>worker0][main][23eda3c9518e4ba5a787adadf9714d5512c8ad9a9754020744b84ca81fe1bedc] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"110109712328692950", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x7405cd45>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"110109712328692950", "status"=>400, "error"=>{"type"=>"strict_dynamic_mapping_exception", "reason"=>"mapping set to strict, dynamic introduction of [@timestamp] within [_doc] is not allowed"}}}}
```

Let's look at the index structure:

```json
{
  "xxx" : {
    "aliases" : { },
    "mappings" : {
      "dynamic" : "strict",
      "properties" : {
      }
    },
    "settings" : {
      "index" : {
      }
    }
  }
}
```

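The index mapping is in strict mode (`"dynamic" : "strict"`), so any field not declared in the mapping is rejected. Logstash, however, adds `@timestamp` and `@version` to every event. So what now?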
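You can predict this kind of failure by comparing an event's top-level fields with the mapping. This sketch makes three assumptions:

- The index info has the shape shown above.
- Only top-level fields matter; nested objects and sub-object `dynamic` settings are ignored.
- The `order_id` field and its values are made up.

`strict_rejects` is a hypothetical name.

```python
from typing import Any, Dict, List


def strict_rejects(doc: Dict[str, Any], index_info: Dict[str, Any],
                   index: str) -> List[str]:
    """Top-level fields of doc that a `dynamic: strict` mapping would reject.

    Only checks top-level fields; nested objects are not inspected.
    """
    mappings = index_info.get(index, {}).get("mappings", {})
    if mappings.get("dynamic") != "strict":
        return []
    known = set(mappings.get("properties", {}))
    # @metadata is never sent to Elasticsearch, so it cannot cause a rejection.
    return sorted(k for k in doc if k != "@metadata" and k not in known)


info = {"xxx": {"mappings": {"dynamic": "strict",
                             "properties": {"order_id": {"type": "keyword"}}}}}
event = {"order_id": "110109711637125402",
         "@timestamp": "2024-03-04T11:43:48.372Z",
         "@version": "1",
         "@metadata": {"_id": "110109711637125402"}}
print(strict_rejects(event, info, "xxx"))  # ['@timestamp', '@version']
```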

Fortunately, Logstash has filters that can drop fields, so let's use one:

```conf
input {
  elasticsearch {
    hosts => "http://es1.es.com:80"
    index => "merchant_order_rel_pro_v2"
    user => "elastic"
    password => "XXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "1m"
    docinfo => true
  }
}

filter {
  mutate {
    # drop the extra fields added by Logstash
    remove_field => ["@version","@timestamp"]
  }
}

output {
  elasticsearch {
    hosts => "http://es2.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    document_id => "%{[@metadata][_id]}"
  }
}
```
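For reference, here is roughly what `mutate { remove_field => [...] }` does to an event, sketched in Python. It is an illustration, not the plugin's implementation. `remove_field` here is a hypothetical helper that accepts either a plain top-level name or a bracketed reference like `[a][b]`.

```python
import copy
import re
from typing import Any, Dict, Iterable

KEY_RE = re.compile(r"\[([^\]]+)\]")


def remove_field(event: Dict[str, Any], refs: Iterable[str]) -> Dict[str, Any]:
    """Return a copy of event with each referenced field removed.

    refs may be plain top-level names ("@version") or bracketed
    references ("[a][b]"); missing fields are silently skipped.
    """
    out = copy.deepcopy(event)
    for ref in refs:
        keys = KEY_RE.findall(ref) or [ref]
        parent: Any = out
        for key in keys[:-1]:  # walk down to the parent of the last key
            parent = parent.get(key) if isinstance(parent, dict) else None
        if isinstance(parent, dict):
            parent.pop(keys[-1], None)
    return out


event = {"order_id": "1", "@version": "1",
         "@timestamp": "2024-03-04T11:43:48.372Z", "@metadata": {"_id": "1"}}
print(remove_field(event, ["@version", "@timestamp"]))
# {'order_id': '1', '@metadata': {'_id': '1'}}
```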

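Rate-limiting Logstash

Sometimes writes go too fast and the downstream cluster can't keep up. At first I dealt with this by tweaking parameters, but every change meant rerunning the whole task, which was exhausting.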
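If you write your own copy script instead, a token bucket is one common way to cap the average number of documents per second while still allowing short bursts. This is not a Logstash feature. It is a hypothetical sketch for a custom migration script. `clock` and `sleep` are injectable so the logic can be tested without waiting. The batch size of 2000 in the usage comment just mirrors `size => 2000` from the configs above.

```python
import time
from typing import Callable


class TokenBucket:
    """Cap writes at `rate` docs/second on average, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.rate = float(rate)
        self.capacity = float(capacity)
        self.tokens = float(capacity)   # start full: first batch goes out at once
        self.clock = clock
        self.sleep = sleep
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def acquire(self, n: int) -> None:
        """Block until n tokens (e.g. one bulk batch of n docs) are available."""
        if n > self.capacity:
            raise ValueError("batch is larger than the bucket capacity")
        self._refill()
        while self.tokens < n:
            self.sleep((n - self.tokens) / self.rate)
            self._refill()
        self.tokens -= n


# Usage sketch (batches and send_bulk are hypothetical):
# bucket = TokenBucket(rate=1000, capacity=2000)
# for batch in batches:          # e.g. 2000 docs per batch
#     bucket.acquire(len(batch))
#     send_bulk(batch)
```

With `rate=1000` and batches of 2000 documents, each batch after the first waits about two seconds, which caps the sustained write rate regardless of how fast the source scroll delivers.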

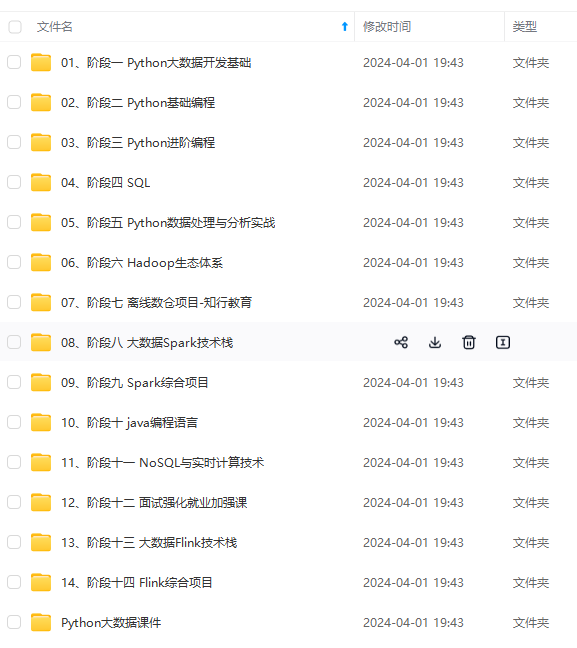

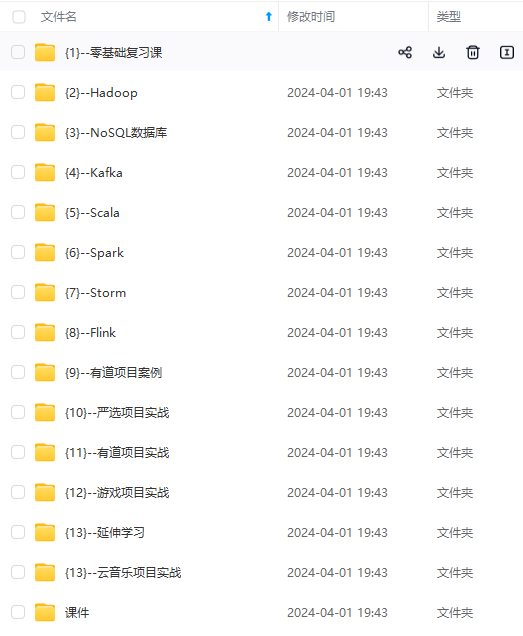

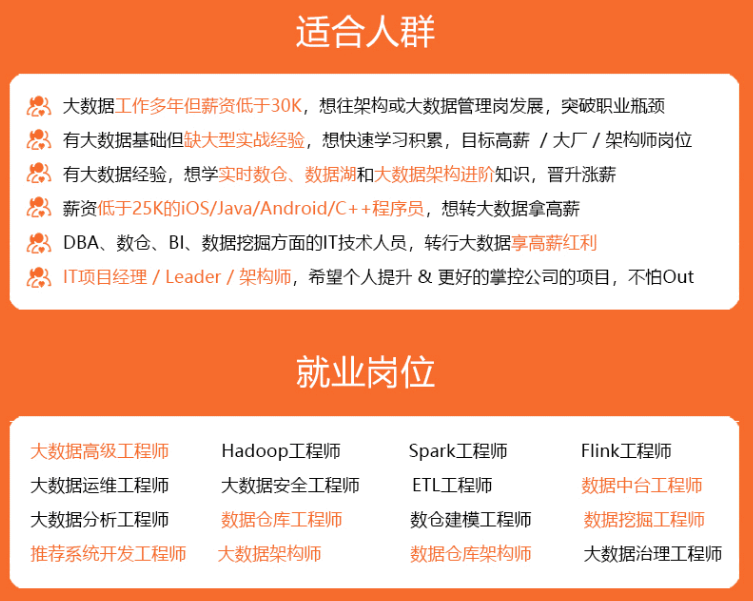

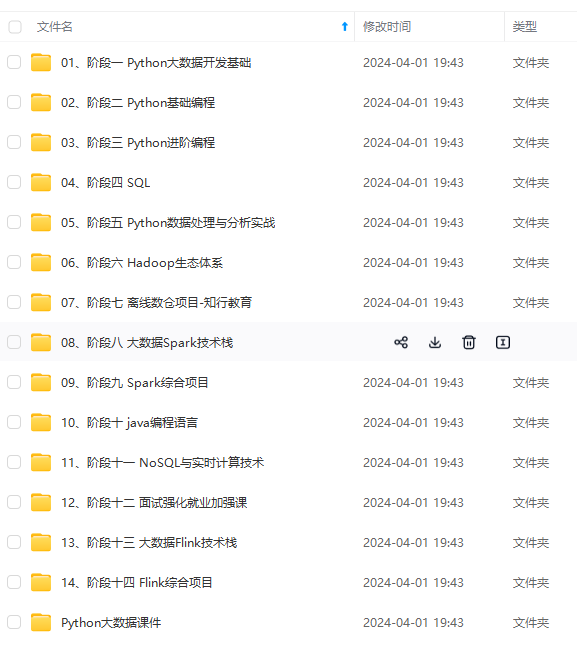

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

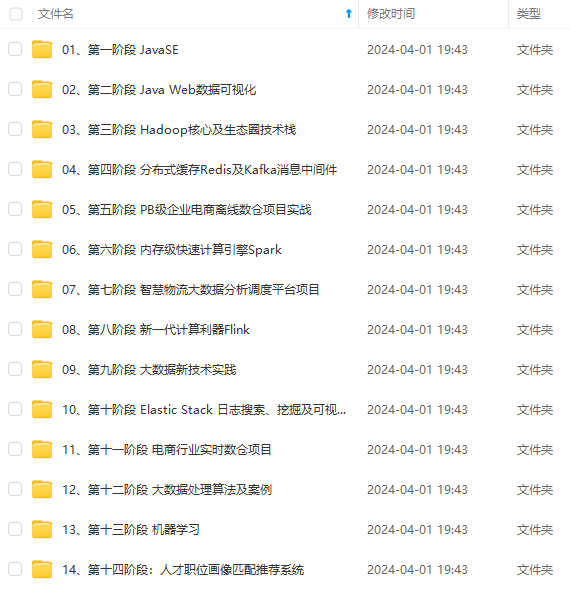

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

中…(img-RMgNNBaj-1715503458012)]

[外链图片转存中…(img-EqyVKXz5-1715503458013)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

2763

2763

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?