[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = Daemon.COM

dns_lookup_realm = false

dns_lookup_kdc = true

rdns = false

ticket_lifetime = 24h

forwardable = true

udp_preference_limit = 0

#default_tkt_enctypes = rc4-hmac aes256-cts aes128-cts

#default_tgs_enctypes = rc4-hmac aes256-cts aes128-cts

#permitted_enctypes = rc4-hmac aes256-cts aes128-cts

#default_ccache_name = KEYRING:persistent:%{uid}

[realms]

Daemon.COM = {

kdc = localhost1.Daemon.com:88

master_kdc = localhost1.Daemon.com:88

admin_server = localhost1.Daemon.com:749

default_domain = Daemon.com

pkinit_anchors = FILE:/var/lib/ipa-client/pki/kdc-ca-bundle.pem

pkinit_pool = FILE:/var/lib/ipa-client/pki/ca-bundle.pem

}

[domain_realm]

.Daemon.com = Daemon.COM

Daemon.com = Daemon.COM

localhost1.Daemon.com = Daemon.COM

[dbmodules]

Daemon.COM = {

db_library = ipadb.so

}

[plugins]

certauth = {

module = ipakdb:kdb/ipadb.so

enable_only = ipakdb

}

### Create the user

useradd hive

### Add environment variables

[starrocks@localhost1 conf]$ vi /u01/starrocks/be/conf/hadoop_env.sh

export HADOOP_USER_NAME=hive

[starrocks@localhost1 conf]$ vi /u01/starrocks/fe/conf/hadoop_env.sh

export HADOOP_USER_NAME=hive
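Instead of editing both files by hand in `vi`, the same change can be scripted. The following is a minimal sketch, not part of the original procedure: `ensure_line` is a hypothetical helper name, and `SR_HOME` is an assumed variable that defaults to the `/u01/starrocks` install path used in this article. The helper appends the line only when it is missing, so the script can be re-run safely.

```shell
#!/bin/sh
# Append a line to a file only when that exact line is not already there.
# ensure_line is a hypothetical helper, not a StarRocks tool.
ensure_line() {
  line=$1
  file=$2
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# SR_HOME is an assumption based on the paths shown in this article.
SR_HOME=${SR_HOME:-/u01/starrocks}
for role in be fe; do
  conf_dir="$SR_HOME/$role/conf"
  # Skip roles that are not deployed on this host.
  if [ -d "$conf_dir" ]; then
    ensure_line 'export HADOOP_USER_NAME=hive' "$conf_dir/hadoop_env.sh"
  fi
done
```

Run it on every FE and BE host as the user that owns the StarRocks installation.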

### Copy the cluster configuration files

Copy hdfs-site.xml, core-site.xml, and hive-site.xml from the HDFS cluster into both $BE\_HOME/conf and $FE\_HOME/conf.
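The copy step can be sketched as a small shell function. This is an illustration under assumptions: `copy_hadoop_conf` is a hypothetical helper name, and it expects the three files to have been gathered into a single source directory first (on many clusters `hive-site.xml` lives apart from the two Hadoop files). It stops at the first missing file so that a partial copy does not go unnoticed.

```shell
#!/bin/sh
# copy_hadoop_conf SRC_DIR DEST_DIR...
# Copies the three client configuration files from SRC_DIR into every
# DEST_DIR and stops at the first missing file or failed copy.
# Hypothetical helper for illustration only.
copy_hadoop_conf() {
  src=$1
  shift
  for dest in "$@"; do
    for f in hdfs-site.xml core-site.xml hive-site.xml; do
      if [ ! -f "$src/$f" ]; then
        echo "missing: $src/$f" >&2
        return 1
      fi
      cp "$src/$f" "$dest/" || return 1
    done
  done
}
```

A call would look like `copy_hadoop_conf /path/to/staging "$BE_HOME/conf" "$FE_HOME/conf"`, where `/path/to/staging` is a placeholder for wherever the files were collected.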

### Configure host mappings

[starrocks@localhost2 be]$ cat /etc/hosts

127.0.0.1 localhost1 localhost1.Daemon.com

127.0.0.2 localhost2 localhost2.Daemon.com

127.0.0.3 localhost3 localhost3.Daemon.com

127.0.0.10 localhost4.Daemon.com localhost4

127.0.0.11 localhost5.Daemon.com localhost5

127.0.0.12 localhost6.Daemon.com localhost6
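Kerberos is sensitive to host names, so it can help to confirm that every fully qualified name maps to the expected address on each node. The sketch below is an illustration only: `host_ip` is a hypothetical helper that reads a hosts-format file directly (defaulting to `/etc/hosts`) rather than going through the system resolver, so it does not reflect DNS answers.

```shell
#!/bin/sh
# host_ip NAME [HOSTS_FILE]
# Prints the address mapped to NAME in a hosts-format file and
# returns non-zero when NAME is not listed.
# Hypothetical helper for illustration only.
host_ip() {
  awk -v name="$1" '
    { sub(/#.*/, "") }   # drop trailing comments
    {
      for (i = 2; i <= NF; i++)
        if ($i == name) { print $1; found = 1; exit }
    }
    END { exit !found }
  ' "${2:-/etc/hosts}"
}
```

For example, `host_ip localhost1.Daemon.com` should print `127.0.0.1` with the mappings listed above.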

### Edit the StarRocks configuration files

In the $FE\_HOME/conf/fe.conf file of each FE and the $BE\_HOME/conf/be.conf file of each BE, add the following JAVA_OPTS setting:

[starrocks@localhost2 be]$ cat be/conf/be.conf

JAVA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf"

[starrocks@localhost2 be]$ cat fe/conf/fe.conf

JAVA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf"
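A quick check before restarting can catch two easy mistakes: the option missing from a file, and typographic (curly) quotes pasted from a web page in place of plain `"`. The following is a minimal sketch; `check_krb5_opt` is a hypothetical helper name, and the pattern assumes the option appears on the same line as the `JAVA_OPTS` assignment.

```shell
#!/bin/sh
# check_krb5_opt FILE
# Succeeds only if FILE sets JAVA_OPTS with -Djava.security.krb5.conf
# and that line contains no typographic (curly) quotes.
# Hypothetical helper for illustration only.
check_krb5_opt() {
  line=$(grep -E '^[[:space:]]*JAVA_OPTS[[:space:]]*=.*-Djava\.security\.krb5\.conf=' "$1") || {
    echo "$1: JAVA_OPTS with -Djava.security.krb5.conf not found" >&2
    return 1
  }
  if printf '%s\n' "$line" | grep -qF -e '“' -e '”'; then
    echo "$1: curly quotes in JAVA_OPTS, use plain double quotes" >&2
    return 1
  fi
}
```

For example, run `check_krb5_opt "$FE_HOME/conf/fe.conf"` on FE hosts and `check_krb5_opt "$BE_HOME/conf/be.conf"` on BE hosts.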

### Restart every node

/u01/starrocks/be/bin/stop_be.sh

/u01/starrocks/fe/bin/stop_fe.sh

/u01/starrocks/fe/bin/start_fe.sh --daemon

/u01/starrocks/be/bin/start_be.sh --daemon

## Verification

### Create the catalog

CREATE EXTERNAL CATALOG hive_catalog

PROPERTIES ("hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://127.0.0.1:9083",

"metastore_cache_refresh_interval_sec" = "30",

"type" = "hive",

"enable_metastore_cache" = "true"

)

mysql> show catalogs;

+------------------+----------+------------------------------------------------------------------+

| Catalog          | Type     | Comment                                                          |

+------------------+----------+------------------------------------------------------------------+

| default_catalog  | Internal | An internal catalog contains this cluster's self-managed tables. |

| hive_catalog     | hive     | NULL                                                             |

| hive_catalog_hms | hive     | NULL                                                             |

+------------------+----------+------------------------------------------------------------------+

### List the databases

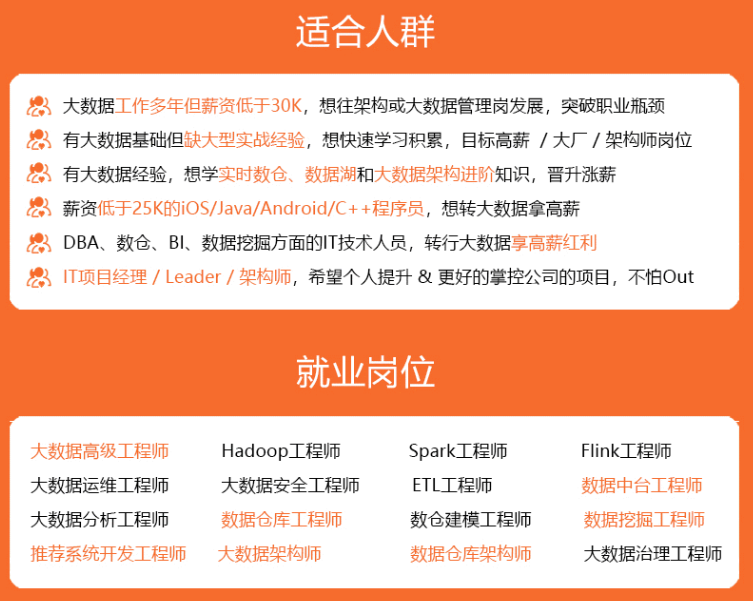

**自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。**

**深知大多数大数据工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!**

**因此收集整理了一份《2024年大数据全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。**

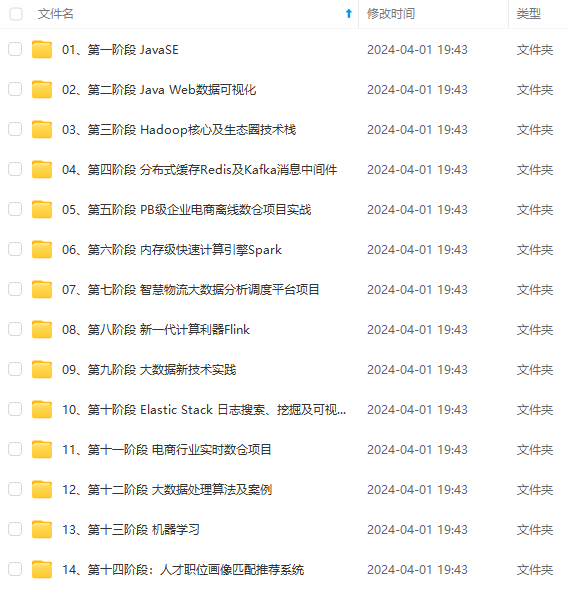

**既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上大数据开发知识点,真正体系化!**

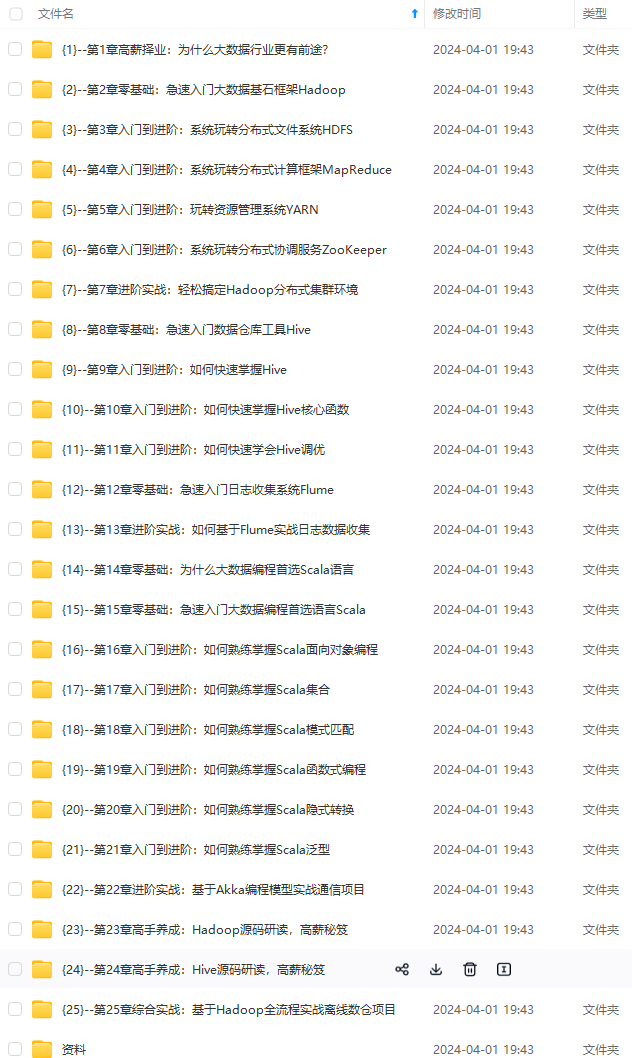

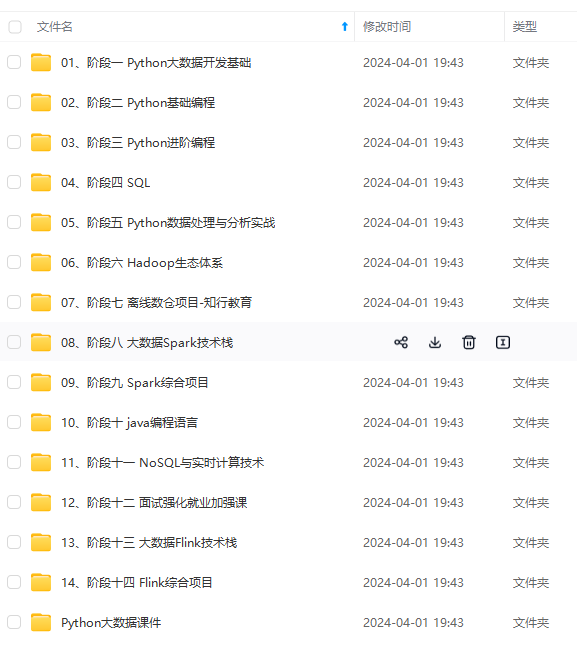

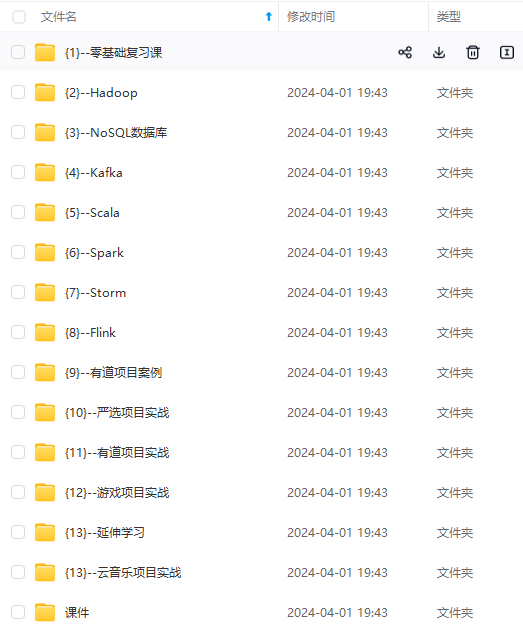

**由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新**

**如果你觉得这些内容对你有帮助,可以添加VX:vip204888 (备注大数据获取)**

3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上大数据开发知识点,真正体系化!**

**由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新**

**如果你觉得这些内容对你有帮助,可以添加VX:vip204888 (备注大数据获取)**

[外链图片转存中...(img-IOfFGzN2-1712863126787)]

3172

3172

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?