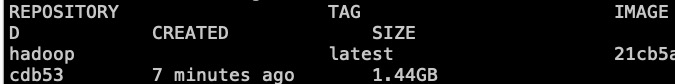

    docker commit hadoopimages hadoop
    # List the images
    docker images

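#### Create the bigdata network, shared by all the big data applications

**The 172.25.0.0/16 subnet is specified here. Make sure it does not conflict with any of your other subnets, to avoid unnecessary trouble.**

    docker network create --driver bridge --subnet 172.25.0.0/16 --gateway 172.25.0.1 bigdata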
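To make the "no conflicting subnets" advice concrete, here is a minimal sketch of an overlap check between two IPv4 CIDR blocks. The helper names (`ip_to_int`, `in_cidr`, `cidrs_overlap`) and the second subnet in the example are made up for illustration; only 172.25.0.0/16 comes from this post.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1   # unquoted on purpose: split the address on dots
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 lies inside CIDR block $2 (for example 172.25.0.0/16).
in_cidr() (
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# Two CIDR blocks overlap when the base address of one lies inside the other.
cidrs_overlap() {
  in_cidr "${1%/*}" "$2" || in_cidr "${2%/*}" "$1"
}

# Compare the bigdata subnet against a hypothetical existing network.
if cidrs_overlap 172.25.0.0/16 172.25.8.0/24; then
  echo "conflict"
else
  echo "no conflict"
fi
```

The subnets already in use can probably be listed with something like `docker network inspect -f '{{range .IPAM.Config}}{{.Subnet}}{{end}}' $(docker network ls -q)` and fed to `cidrs_overlap` one at a time.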

---

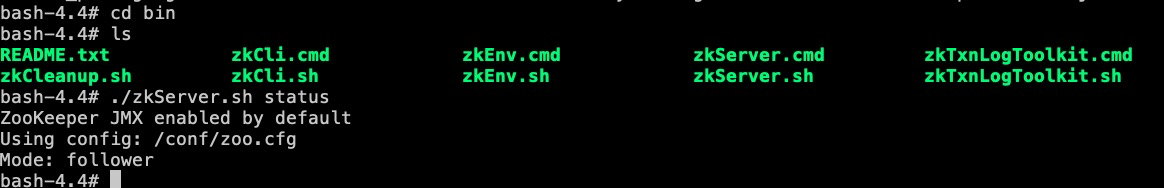

## Zookeeper setup

---

###### Pull the zookeeper image

    # Pick whichever image version suits you
    docker pull zookeeper:3.4.13

##### Create three zookeeper containers with docker-compose

    version: '2'
    services:
      zoo1:
        image: zookeeper:3.4.13 # image name
        restart: always # restart automatically on failure
        hostname: zoo1
        container_name: zoo1
        privileged: true
        ports: # port mappings
          - "2181:2181"
        volumes: # mounted data volumes
          - ./zoo1/data:/data
          - ./zoo1/datalog:/datalog
        environment:
          TZ: Asia/Shanghai
          ZOO_MY_ID: 1 # node ID
          ZOO_PORT: 2181 # zookeeper port
          ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888 # zookeeper node list
        networks:
          default:
            ipv4_address: 172.25.0.11
      zoo2:
        image: zookeeper:3.4.13
        restart: always
        hostname: zoo2
        container_name: zoo2
        privileged: true
        ports:
          - "2182:2181"
        volumes:
          - ./zoo2/data:/data
          - ./zoo2/datalog:/datalog
        environment:
          TZ: Asia/Shanghai
          ZOO_MY_ID: 2
          ZOO_PORT: 2181
          ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
        networks:
          default:
            ipv4_address: 172.25.0.12
      zoo3:
        image: zookeeper:3.4.13
        restart: always
        hostname: zoo3
        container_name: zoo3
        privileged: true
        ports:
          - "2183:2181"
        volumes:
          - ./zoo3/data:/data
          - ./zoo3/datalog:/datalog
        environment:
          TZ: Asia/Shanghai
          ZOO_MY_ID: 3
          ZOO_PORT: 2181
          ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
        networks:
          default:
            ipv4_address: 172.25.0.13
    networks:
      default:
        external:
          name: bigdata

    # Start the containers
    docker-compose up -d

    ➜ zookeeper docker-compose up -d
    Recreating 44dad6cddccd_zoo1 … done
    Recreating 9b0f2cfe666f_zoo3 … done
    Creating zoo2 … done
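Once the three containers are up, it is worth confirming that they actually formed an ensemble. The sketch below assumes `zkServer.sh` is on the `PATH` inside the official image and that its `status` output contains a `Mode:` line; `zk_mode` and `check_ensemble` are illustrative helper names, not part of any tool.

```shell
# Extract the value of the "Mode:" line (leader, follower or standalone)
# from `zkServer.sh status` output read on stdin.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Print the role of each node. Requires the zoo1..zoo3 containers to be running.
check_ensemble() {
  for node in zoo1 zoo2 zoo3; do
    mode=$(docker exec "$node" zkServer.sh status 2>/dev/null | zk_mode)
    echo "$node: ${mode:-unreachable}"
  done
}
```

Calling `check_ensemble` on the host should report one leader and two followers when the ensemble is healthy.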

---

## Kafka cluster setup

---

    # Pull the Kafka and kafka-manager images
    docker pull wurstmeister/kafka:2.12-2.3.1
    docker pull sheepkiller/kafka-manager

###### Edit the docker-compose.yml file

    version: '2'
    services:
      broker1:
        image: wurstmeister/kafka:2.12-2.3.1
        restart: always # restart automatically on failure
        hostname: broker1 # node hostname
        container_name: broker1 # container name
        privileged: true # grants the container extended privileges
        ports:
          - "9091:9092" # map container port 9092 to host port 9091
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_LISTENERS: PLAINTEXT://broker1:9092
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://broker1:9092
          KAFKA_ADVERTISED_HOST_NAME: broker1
          KAFKA_ADVERTISED_PORT: 9092
          # the chroot path is written once, after the last host
          KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181/kafka1
          JMX_PORT: 9988 # JMX port used by kafka-manager
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - ./broker1:/kafka/kafka-logs-broker1
        external_links: # link to containers defined outside this compose file
          - zoo1
          - zoo2
          - zoo3
        networks:
          default:
            ipv4_address: 172.25.0.14
      broker2:
        image: wurstmeister/kafka:2.12-2.3.1
        restart: always
        hostname: broker2
        container_name: broker2
        privileged: true
        ports:
          - "9092:9092"
        environment:
          KAFKA_BROKER_ID: 2
          KAFKA_LISTENERS: PLAINTEXT://broker2:9092
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://broker2:9092
          KAFKA_ADVERTISED_HOST_NAME: broker2
          KAFKA_ADVERTISED_PORT: 9092
          KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181/kafka1
          JMX_PORT: 9988
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - ./broker2:/kafka/kafka-logs-broker2
        external_links: # link to containers defined outside this compose file
          - zoo1
          - zoo2
          - zoo3
        networks:
          default:
            ipv4_address: 172.25.0.15
      broker3:
        image: wurstmeister/kafka:2.12-2.3.1
        restart: always
        hostname: broker3
        container_name: broker3
        privileged: true
        ports:
          - "9093:9092"
        environment:
          KAFKA_BROKER_ID: 3
          KAFKA_LISTENERS: PLAINTEXT://broker3:9092
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://broker3:9092
          KAFKA_ADVERTISED_HOST_NAME: broker3
          KAFKA_ADVERTISED_PORT: 9092
          KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181/kafka1
          JMX_PORT: 9988
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - ./broker3:/kafka/kafka-logs-broker3
        external_links: # link to containers defined outside this compose file
          - zoo1
          - zoo2
          - zoo3
        networks:
          default:
            ipv4_address: 172.25.0.16
      kafka-manager:
        image: sheepkiller/kafka-manager:latest
        restart: always
        container_name: kafka-manager
        hostname: kafka-manager
        ports:
          - "9000:9000"
        links: # link to containers created by this compose file
          - broker1
          - broker2
          - broker3
        external_links: # link to containers defined outside this compose file
          - zoo1
          - zoo2
          - zoo3
        environment:
          ZK_HOSTS: zoo1:2181,zoo2:2181,zoo3:2181/kafka1
          KAFKA_BROKERS: broker1:9092,broker2:9092,broker3:9092
          APPLICATION_SECRET: letmein
          KM_ARGS: -Djava.net.preferIPv4Stack=true
        networks:
          default:
            ipv4_address: 172.25.0.10
    networks:
      default:
        external: # use the existing network
          name: bigdata

#运行命令

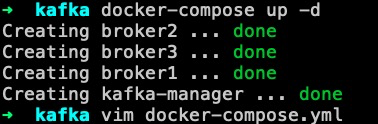

docker-compose up -d

\*\*看看本地端口9000也确实起来了
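Beyond checking the port, a quick smoke test is to create a topic replicated across all three brokers. This is only a sketch: it assumes `kafka-topics.sh` and `bash` are available inside the wurstmeister image, and `bootstrap_servers`, `smoke_test` and the topic name `smoke-test` are made up for illustration. `JMX_PORT` is unset inside the command because the CLI tools would otherwise try to bind the JMX port the broker already holds.

```shell
# Join host names into a comma-separated host:port list.
# $1 = port, remaining arguments = host names.
bootstrap_servers() (
  port=$1
  shift
  out=""
  for host in "$@"; do
    out="${out:+$out,}$host:$port"
  done
  echo "$out"
)

# Create and then describe a throwaway topic from inside broker1.
# Requires the broker containers to be running.
smoke_test() {
  servers=$(bootstrap_servers 9092 broker1 broker2 broker3)
  docker exec broker1 bash -c "unset JMX_PORT; \
    kafka-topics.sh --create --bootstrap-server $servers \
      --replication-factor 3 --partitions 3 --topic smoke-test && \
    kafka-topics.sh --describe --bootstrap-server $servers --topic smoke-test"
}
```

If `smoke_test` succeeds, the describe output should list a leader and three replicas for each partition.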

---

## Hadoop high-availability cluster setup

---

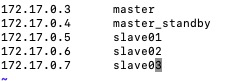

#### Create the cluster with docker-compose

    version: '2'
    services:
      master:
        image: hadoop:latest
        restart: always # restart automatically on failure
        hostname: master # node hostname
        container_name: master # container name
        privileged: true # grants the container extended privileges
        networks:
          default:
            ipv4_address: 172.25.0.3
      master_standby:
        image: hadoop:latest
        restart: always
        hostname: master_standby
        container_name: master_standby
        privileged: true
        networks:
          default:
            ipv4_address: 172.25.0.4
      slave01:
        image: hadoop:latest
        restart: always
        hostname: slave01
        container_name: slave01
        privileged: true
        networks:
          default:
            ipv4_address: 172.25.0.5
      slave02:
        image: hadoop:latest
        restart: always
        container_name: slave02
        hostname: slave02
        networks:
          default:
            ipv4_address: 172.25.0.6
      slave03:
        image: hadoop:latest
        restart: always
        container_name: slave03
        hostname: slave03
        networks:
          default:
            ipv4_address: 172.25.0.7
    # the static addresses above need the existing bigdata network
    networks:
      default:
        external:
          name: bigdata

#### Create via the command line

    # Create the master node
    docker run -tid --name master --privileged=true hadoop:latest /usr/sbin/init
    # Create the hot-standby master_standby node
    docker run -tid --name master_standby --privileged=true hadoop:latest /usr/sbin/init
    # Create three slaves
    docker run -tid --name slave01 --privileged=true hadoop:latest /usr/sbin/init
    docker run -tid --name slave02 --privileged=true hadoop:latest /usr/sbin/init
    docker run -tid --name slave03 --privileged=true hadoop:latest /usr/sbin/init
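The commands above start the containers on Docker's default bridge. If you want the command-line route to match the compose file (same `bigdata` network, same static addresses), a possible sketch is shown below. It only prints the commands so you can review them first; `run_cmd` is an illustrative helper, and adding `--hostname`, `--network` and `--ip` is my assumption about what the compose settings translate to.

```shell
# Print the docker run command for one node.
# $1 = container name (also used as hostname), $2 = static IP on bigdata.
run_cmd() {
  echo "docker run -tid --name $1 --hostname $1 --network bigdata --ip $2 --privileged=true hadoop:latest /usr/sbin/init"
}

# Node list with the addresses used in the compose file above.
while read -r name ip; do
  run_cmd "$name" "$ip"
done <<EOF
master 172.25.0.3
master_standby 172.25.0.4
slave01 172.25.0.5
slave02 172.25.0.6
slave03 172.25.0.7
EOF
```

Piping the output into `sh` would actually start the containers.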

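#### Configure passwordless login on every node

    ssh-keygen -t rsa
    # Keep pressing Enter until it finishes; the final result is shown in the figure below
    # Do this on every machine

###### Copy each node's public key into authorized\_keys on every machine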

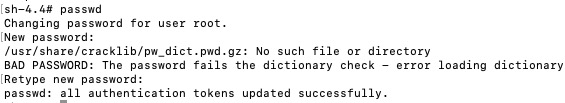

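**One small issue: first check whether passwd is installed. If it is not, run the following command:**

    yum install passwd
    # Then set a password
    passwd
    # Each machine's public key must be added to authorized_keys on itself and on every other machine,
    # which avoids unnecessary trouble when installing other components later
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys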
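Appending keys by hand on five machines gets tedious. One way to script it, assuming `ssh-copy-id` is installed in the image (it normally ships with the OpenSSH client package), that you log in as root, and that the hostnames already resolve, is sketched here. `copy_key_cmds` is a made-up helper that only prints the commands.

```shell
# Print one ssh-copy-id command per host given as an argument.
# ssh-copy-id appends the local public key to the remote authorized_keys.
copy_key_cmds() {
  for host in "$@"; do
    echo "ssh-copy-id -i ~/.ssh/id_rsa.pub root@$host"
  done
}

# Run this on every node so that each key reaches every machine.
copy_key_cmds master master_standby slave01 slave02 slave03
```

Each command prompts once for the password set with `passwd`; after that, `ssh slave01` from the same node should no longer ask for one.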

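###### Edit /etc/hosts

**Note: the underscore in master\_standby may not be allowed. On some machines the hostname is rejected as invalid when HDFS is formatted, so it is best to avoid special characters in hostnames.**

    # Copy /etc/hosts to every node
    scp /etc/hosts master_standby:/etc/
    scp /etc/hosts slave01:/etc/
    scp /etc/hosts slave02:/etc/
    scp /etc/hosts slave03:/etc/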
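For reference, the entries that need to end up in `/etc/hosts` can be generated rather than typed. The sketch below assumes the nodes use the static addresses from the compose file above; `hosts_entries` and `add_hosts` are illustrative helper names.

```shell
# Host entries matching the addresses in the compose file above.
hosts_entries() {
  cat <<'EOF'
172.25.0.3 master
172.25.0.4 master_standby
172.25.0.5 slave01
172.25.0.6 slave02
172.25.0.7 slave03
EOF
}

# Append each entry to the hosts file given as $1, skipping names
# that are already present so the function can be run repeatedly.
add_hosts() {
  hosts_entries | while read -r ip name; do
    grep -q "[[:space:]]$name\$" "$1" || echo "$ip $name" >> "$1"
  done
}
```

Running `add_hosts /etc/hosts` as root on the master, followed by the `scp` commands above, would distribute the same file to every node.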

#### Configure Hadoop

    # Extract the hadoop package
    tar -zxvf hadoop-2.8.5.tar.gz

###### Configure environment variables

    # Configure environment variables
    vim ~/.bashrc
    # Add the following lines

    export HADOOP_HOME=/usr/local/hadoop-2.8.5
    export CLASSPATH=.:$HADOOP_HOME/lib:$CLASSPATH
    export PATH=$PATH:$HADOOP_HOME/bin
    export PATH=$PATH:$HADOOP_HOME/sbin
    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export YARN_HOME=$HADOOP_HOME
    export HADOOP_ROOT_LOGGER=INFO,console
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

    # Copy this file to the other machines
    scp ~/.bashrc <hostname>:~/
    # Run the hadoop command to verify
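Before running `hadoop` itself, it can help to confirm that the variables actually made it into the shell on each node. A minimal sketch follows; `check_hadoop_env` is a made-up helper and the list of variables is taken from the exports above.

```shell
# Report every Hadoop variable that is unset or empty.
# Returns 0 when all of them are set, 1 otherwise.
check_hadoop_env() {
  missing=0
  for var in HADOOP_HOME HADOOP_MAPRED_HOME HADOOP_COMMON_HOME HADOOP_HDFS_HOME YARN_HOME; do
    eval "val=\${$var:-}"   # indirect lookup of the variable named in $var
    if [ -z "$val" ]; then
      echo "$var is not set"
      missing=1
    fi
  done
  return $missing
}
```

A typical check on a node would be `. ~/.bashrc && check_hadoop_env && hadoop version`.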

###### Configuration files

**hdfs-site.xml**

    …
    <property>
      <name>dfs.ha.fencing.methods</name>
      <value>sshfence</value>
    </property>
    <property>
      <name>dfs.ha.fencing.ssh.private-key-files</name>
      <value>/root/.ssh/id_rsa</value>
    </property>
