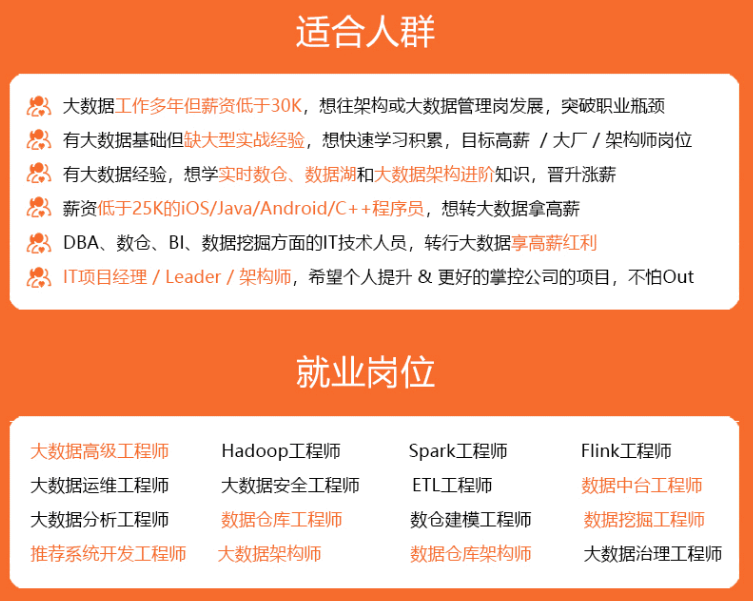

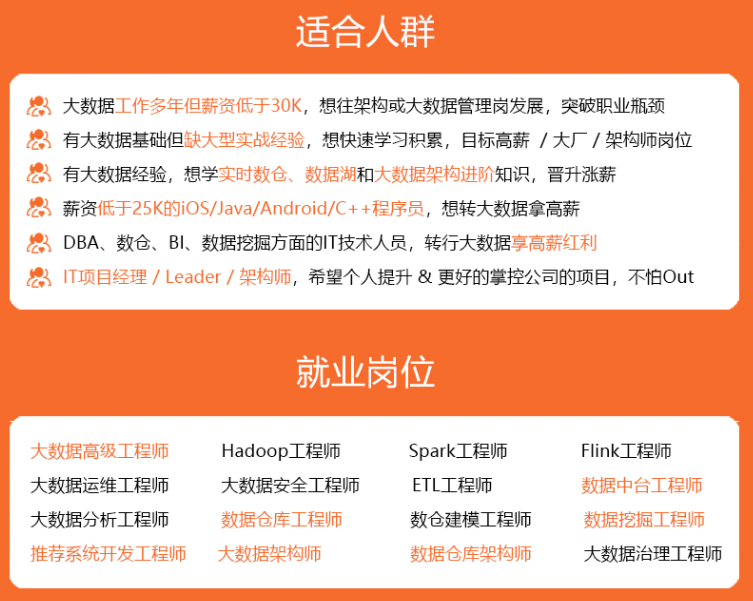

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

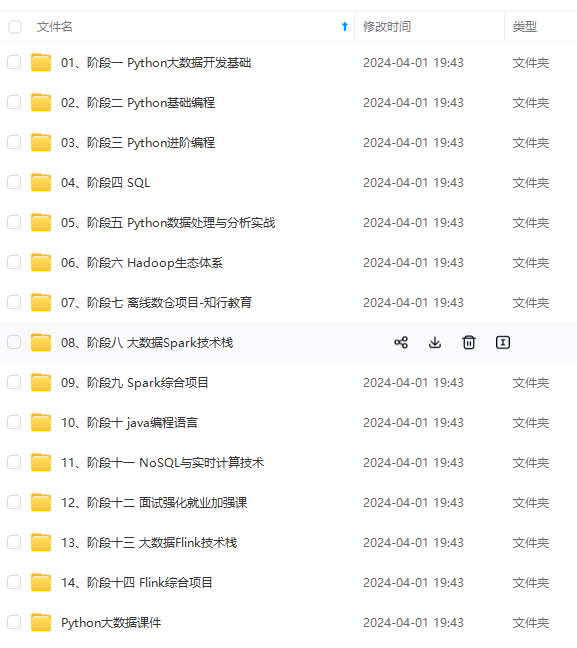

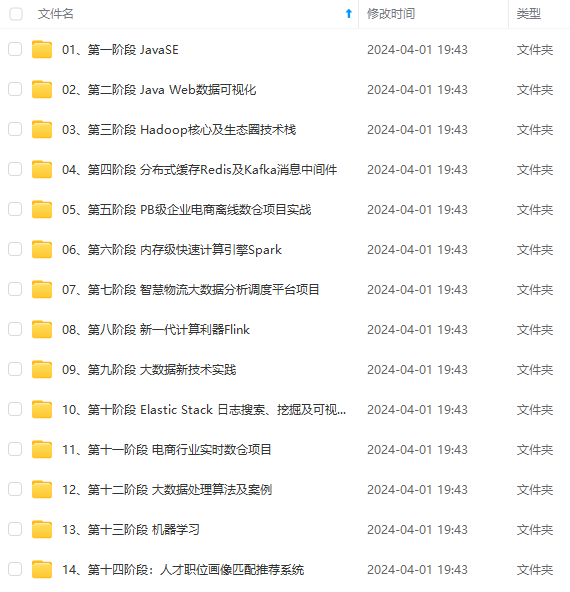

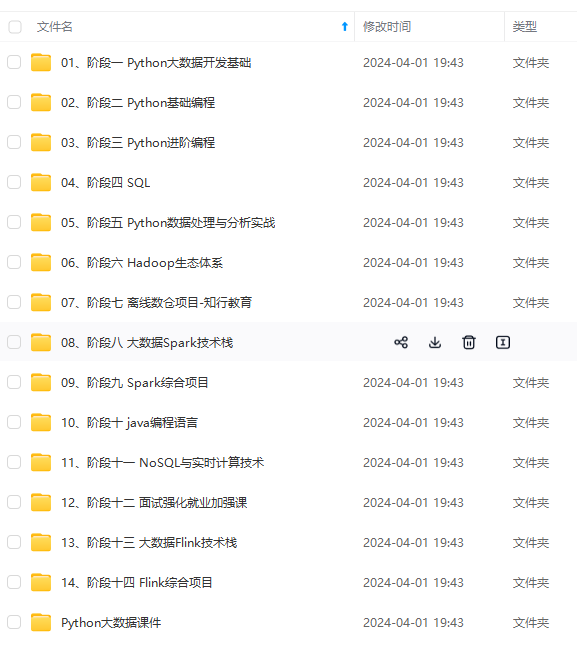

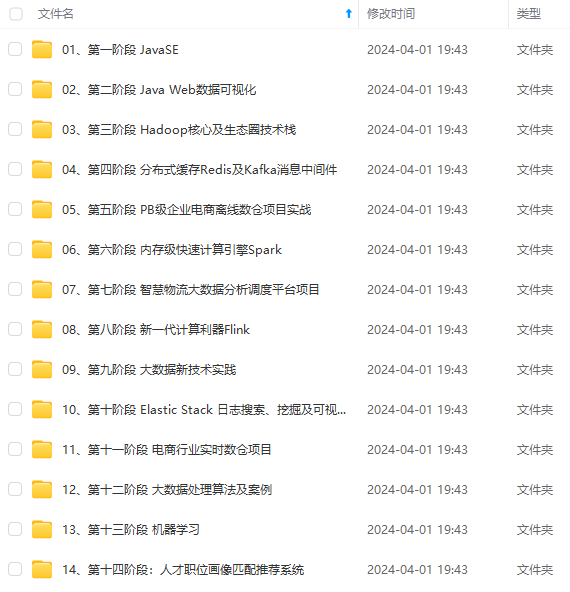

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

KNeighborsClassifier(algorithm=‘auto’, leaf_size=30, metric=‘minkowski’,

metric_params=None, n_jobs=None, n_neighbors=5, p=2,

weights=‘uniform’)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 翻转

encoder.inverse_transform(res)

array([‘setosa’, ‘virginica’, ‘setosa’, ‘setosa’, ‘versicolor’,

‘versicolor’, ‘setosa’, ‘virginica’, ‘versicolor’, ‘virginica’,

‘versicolor’, ‘virginica’, ‘virginica’, ‘virginica’, ‘versicolor’,

‘setosa’, ‘setosa’, ‘setosa’, ‘versicolor’, ‘setosa’, ‘virginica’,

‘setosa’, ‘virginica’, ‘versicolor’, ‘setosa’, ‘versicolor’,

‘setosa’, ‘setosa’, ‘versicolor’, ‘versicolor’], dtype=object)

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

* 存储数据

out = iris_x_test.copy()

out[“y”] = iris_y_test

out[“pre”] = res

out

| | petal\_length | petal\_width | y | pre |

| --- | --- | --- | --- | --- |

| 3 | 1.5 | 0.2 | 0 | 0 |

| 111 | 5.3 | 1.9 | 2 | 2 |

| 24 | 1.9 | 0.2 | 0 | 0 |

| 5 | 1.7 | 0.4 | 0 | 0 |

| 92 | 4.0 | 1.2 | 1 | 1 |

| 57 | 3.3 | 1.0 | 1 | 1 |

| 1 | 1.4 | 0.2 | 0 | 0 |

| 112 | 5.5 | 2.1 | 2 | 2 |

| 106 | 4.5 | 1.7 | 2 | 1 |

| 136 | 5.6 | 2.4 | 2 | 2 |

| 80 | 3.8 | 1.1 | 1 | 1 |

| 131 | 6.4 | 2.0 | 2 | 2 |

| 147 | 5.2 | 2.0 | 2 | 2 |

| 113 | 5.0 | 2.0 | 2 | 2 |

| 84 | 4.5 | 1.5 | 1 | 1 |

| 39 | 1.5 | 0.2 | 0 | 0 |

| 40 | 1.3 | 0.3 | 0 | 0 |

| 17 | 1.4 | 0.3 | 0 | 0 |

| 56 | 4.7 | 1.6 | 1 | 1 |

| 2 | 1.3 | 0.2 | 0 | 0 |

| 100 | 6.0 | 2.5 | 2 | 2 |

| 42 | 1.3 | 0.2 | 0 | 0 |

| 144 | 5.7 | 2.5 | 2 | 2 |

| 79 | 3.5 | 1.0 | 1 | 1 |

| 19 | 1.5 | 0.3 | 0 | 0 |

| 75 | 4.4 | 1.4 | 1 | 1 |

| 44 | 1.9 | 0.4 | 0 | 0 |

| 37 | 1.4 | 0.1 | 0 | 0 |

| 64 | 3.6 | 1.3 | 1 | 1 |

| 90 | 4.4 | 1.2 | 1 | 1 |

out.to_csv(“iris_predict.csv”)

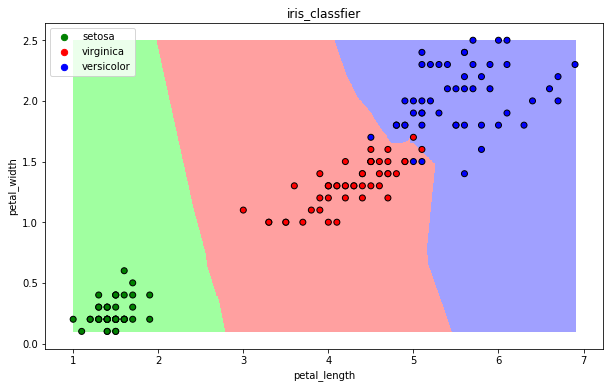

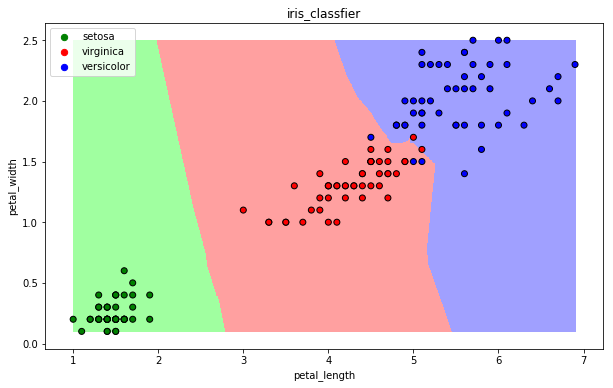

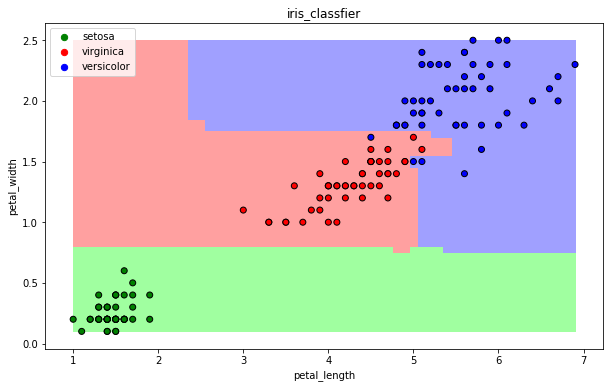

【3】可视化

import numpy as np

import matplotlib as mpl

import matplotlib.pyplot as plt

def draw(clf):

# 网格化

M, N = 500, 500

x1_min, x2_min = iris_simple[["petal\_length", "petal\_width"]].min(axis=0)

x1_max, x2_max = iris_simple[["petal\_length", "petal\_width"]].max(axis=0)

t1 = np.linspace(x1_min, x1_max, M)

t2 = np.linspace(x2_min, x2_max, N)

x1, x2 = np.meshgrid(t1, t2)

# 预测

x_show = np.stack((x1.flat, x2.flat), axis=1)

y_predict = clf.predict(x_show)

# 配色

cm_light = mpl.colors.ListedColormap(["#A0FFA0", "#FFA0A0", "#A0A0FF"])

cm_dark = mpl.colors.ListedColormap(["g", "r", "b"])

# 绘制预测区域图

plt.figure(figsize=(10, 6))

plt.pcolormesh(t1, t2, y_predict.reshape(x1.shape), cmap=cm_light)

# 绘制原始数据点

plt.scatter(iris_simple["petal\_length"], iris_simple["petal\_width"], label=None,

c=iris_simple["species"], cmap=cm_dark, marker='o', edgecolors='k')

plt.xlabel("petal\_length")

plt.ylabel("petal\_width")

# 绘制图例

color = ["g", "r", "b"]

species = ["setosa", "virginica", "versicolor"]

for i in range(3):

plt.scatter([], [], c=color[i], s=40, label=species[i]) # 利用空点绘制图例

plt.legend(loc="best")

plt.title('iris\_classfier')

draw(clf)

### 13.2 朴素贝叶斯算法

【1】基本思想

当X=(x1, x2)发生的时候,哪一个yk发生的概率最大

【2】sklearn实现

from sklearn.naive_bayes import GaussianNB

* 构建分类器对象

clf = GaussianNB()

clf

* 训练

clf.fit(iris_x_train, iris_y_train)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

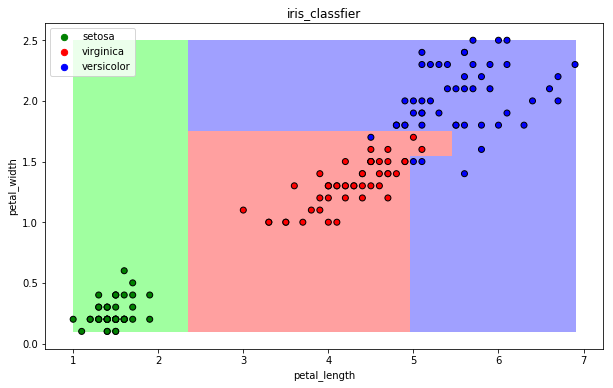

* 可视化

draw(clf)

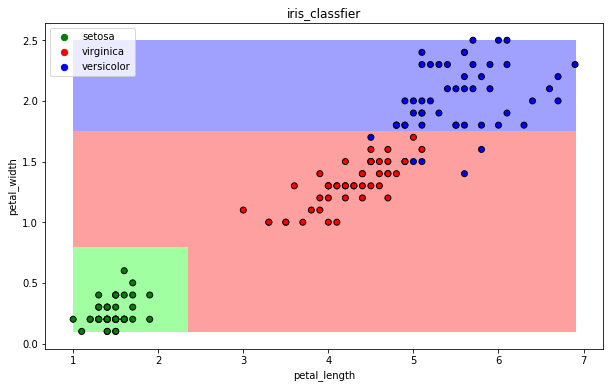

### 13.3 决策树算法

【1】基本思想

CART算法:每次通过一个特征,将数据尽可能的分为纯净的两类,递归的分下去

【2】sklearn实现

from sklearn.tree import DecisionTreeClassifier

* 构建分类器对象

clf = DecisionTreeClassifier()

clf

DecisionTreeClassifier(class_weight=None, criterion=‘gini’, max_depth=None,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=None, splitter=‘best’)

* 训练

clf.fit(iris_x_train, iris_y_train)

DecisionTreeClassifier(class_weight=None, criterion=‘gini’, max_depth=None,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False,

random_state=None, splitter=‘best’)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

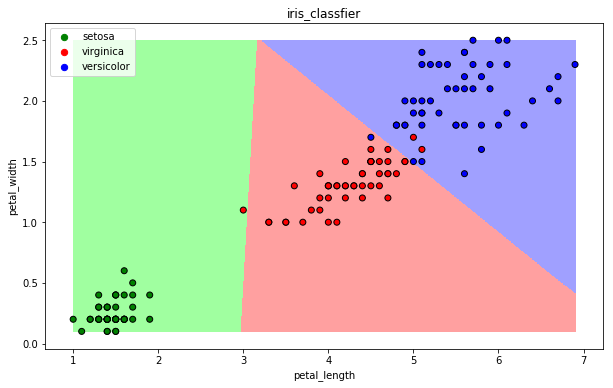

* 可视化

draw(clf)

### 13.4 逻辑回归算法

【1】基本思想

一种解释:

训练:通过一个映射方式,将特征X=(x1, x2) 映射成 P(y=ck), 求使得所有概率之积最大化的映射方式里的参数

预测:计算p(y=ck) 取概率最大的那个类别作为预测对象的分类

【2】sklearn实现

from sklearn.linear_model import LogisticRegression

* 构建分类器对象

clf = LogisticRegression(solver=‘saga’, max_iter=1000)

clf

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=1000,

multi_class=‘warn’, n_jobs=None, penalty=‘l2’,

random_state=None, solver=‘saga’, tol=0.0001, verbose=0,

warm_start=False)

* 训练

clf.fit(iris_x_train, iris_y_train)

C:\Users\ibm\Anaconda3\lib\site-packages\sklearn\linear_model\logistic.py:469: FutureWarning: Default multi_class will be changed to ‘auto’ in 0.22. Specify the multi_class option to silence this warning.

“this warning.”, FutureWarning)

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=1000,

multi_class=‘warn’, n_jobs=None, penalty=‘l2’,

random_state=None, solver=‘saga’, tol=0.0001, verbose=0,

warm_start=False)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

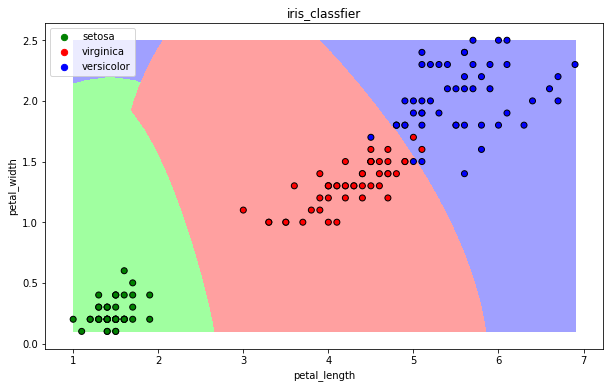

* 可视化

draw(clf)

### 13.5 支持向量机算法

【1】基本思想

以二分类为例,假设数据可用完全分开:

用一个超平面将两类数据完全分开,且最近点到平面的距离最大

【2】sklearn实现

from sklearn.svm import SVC

* 构建分类器对象

clf = SVC()

clf

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=‘ovr’, degree=3, gamma=‘auto_deprecated’,

kernel=‘rbf’, max_iter=-1, probability=False, random_state=None,

shrinking=True, tol=0.001, verbose=False)

* 训练

clf.fit(iris_x_train, iris_y_train)

C:\Users\ibm\Anaconda3\lib\site-packages\sklearn\svm\base.py:193: FutureWarning: The default value of gamma will change from ‘auto’ to ‘scale’ in version 0.22 to account better for unscaled features. Set gamma explicitly to ‘auto’ or ‘scale’ to avoid this warning.

“avoid this warning.”, FutureWarning)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=‘ovr’, degree=3, gamma=‘auto_deprecated’,

kernel=‘rbf’, max_iter=-1, probability=False, random_state=None,

shrinking=True, tol=0.001, verbose=False)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

* 可视化

draw(clf)

### 13.6 集成方法——随机森林

【1】基本思想

训练集m,有放回的随机抽取m个数据,构成一组,共抽取n组采样集

n组采样集训练得到n个弱分类器 弱分类器一般用决策树或神经网络

将n个弱分类器进行组合得到强分类器

【2】sklearn实现

from sklearn.ensemble import RandomForestClassifier

* 构建分类器对象

clf = RandomForestClassifier()

clf

RandomForestClassifier(bootstrap=True, class_weight=None, criterion=‘gini’,

max_depth=None, max_features=‘auto’, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=‘warn’,

n_jobs=None, oob_score=False, random_state=None,

verbose=0, warm_start=False)

* 训练

clf.fit(iris_x_train, iris_y_train)

C:\Users\ibm\Anaconda3\lib\site-packages\sklearn\ensemble\forest.py:245: FutureWarning: The default value of n_estimators will change from 10 in version 0.20 to 100 in 0.22.

“10 in version 0.20 to 100 in 0.22.”, FutureWarning)

RandomForestClassifier(bootstrap=True, class_weight=None, criterion=‘gini’,

max_depth=None, max_features=‘auto’, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=10,

n_jobs=None, oob_score=False, random_state=None,

verbose=0, warm_start=False)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

* 可视化

draw(clf)

### 13.7 集成方法——Adaboost

【1】基本思想

训练集m,用初始数据权重训练得到第一个弱分类器,根据误差率计算弱分类器系数,更新数据的权重

使用新的权重训练得到第二个弱分类器,以此类推

根据各自系数,将所有弱分类器加权求和获得强分类器

【2】sklearn实现

from sklearn.ensemble import AdaBoostClassifier

* 构建分类器对象

clf = AdaBoostClassifier()

clf

AdaBoostClassifier(algorithm=‘SAMME.R’, base_estimator=None, learning_rate=1.0,

n_estimators=50, random_state=None)

* 训练

clf.fit(iris_x_train, iris_y_train)

AdaBoostClassifier(algorithm=‘SAMME.R’, base_estimator=None, learning_rate=1.0,

n_estimators=50, random_state=None)

* 预测

res = clf.predict(iris_x_test)

print(res)

print(iris_y_test.values)

[0 2 0 0 1 1 0 2 1 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

[0 2 0 0 1 1 0 2 2 2 1 2 2 2 1 0 0 0 1 0 2 0 2 1 0 1 0 0 1 1]

* 评估

accuracy = clf.score(iris_x_test, iris_y_test)

print(“预测正确率:{:.0%}”.format(accuracy))

预测正确率:97%

* 可视化

draw(clf)

### 13.8 集成方法——梯度提升树GBDT

【1】基本思想

训练集m,获得第一个弱分类器,获得残差,然后不断地拟合残差

所有弱分类器相加得到强分类器

【2】sklearn实现

from sklearn.ensemble import GradientBoostingClassifier

* 构建分类器对象

clf = GradientBoostingClassifier()

clf

GradientBoostingClassifier(criterion=‘friedman_mse’, init=None,

learning_rate=0.1, loss=‘deviance’, max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=100,

n_iter_no_change=None, presort=‘auto’,

random_state=None, subsample=1.0, tol=0.0001,

validation_fraction=0.1, verbose=0,

warm_start=False)

* 训练

clf.fit(iris_x_train, iris_y_train)

GradientBoostingClassifier(criterion=‘friedman_mse’, init=None,

learning_rate=0.1, loss=‘deviance’, max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=100,

n_iter_no_change=None, presort=‘auto’,

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

clf.fit(iris_x_train, iris_y_train)

GradientBoostingClassifier(criterion=‘friedman_mse’, init=None,

learning_rate=0.1, loss=‘deviance’, max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=100,

n_iter_no_change=None, presort=‘auto’,

[外链图片转存中…(img-Xtx1jm4f-1715682583593)]

[外链图片转存中…(img-kqJxV0rK-1715682583594)]

[外链图片转存中…(img-gDYlcNLw-1715682583594)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

1496

1496

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?