既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上Go语言开发知识点,真正体系化!

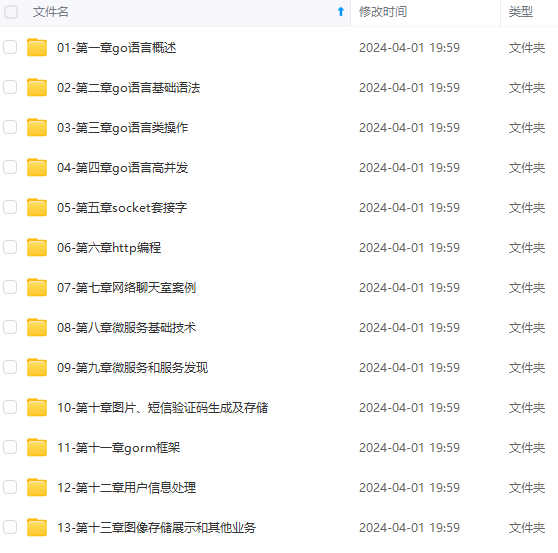

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

yum list docker-ce --showduplicates | sort -r

yum install docker-ce-20.10.\* docker-ce-cli-20.10.\* containerd.io -y

Configure the kernel modules required by containerd

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

Load the kernel modules

modprobe -- overlay

modprobe -- br_netfilter

Configure the kernel parameters

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

Apply the kernel parameters

sysctl --system
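Before moving on, it can help to confirm that the parameter file contains what you expect. The following is a minimal sketch of such a check; the function name `check_sysctl_file` is hypothetical, and it only inspects the text of the file, not the state of the running kernel.

```shell
#!/bin/sh
# Hypothetical helper: verify that a sysctl drop-in file sets the three
# parameters from the step above to 1. It reads the file only; it does not
# check the live kernel state (use `sysctl <key>` for that).
check_sysctl_file() {
  csf_file="$1"
  for csf_key in net.bridge.bridge-nf-call-iptables \
                 net.ipv4.ip_forward \
                 net.bridge.bridge-nf-call-ip6tables; do
    # Accept optional whitespace around "=".
    if ! grep -Eq "^[[:space:]]*${csf_key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$csf_file"; then
      echo "missing or not 1: ${csf_key}"
      return 1
    fi
  done
  echo "ok"
}
```

On a node it would be called as `check_sysctl_file /etc/sysctl.d/99-kubernetes-cri.conf`.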

Create the configuration file

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

Edit the configuration file

vim /etc/containerd/config.toml

Make the following changes:

Find containerd.runtimes.runc.options and set SystemdCgroup = true

Change every sandbox_image to registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
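If you would rather not edit the file by hand, the same two changes can be scripted. This is only a sketch: the function name `patch_containerd_config` is hypothetical, and it assumes the file came from `containerd config default`, where `SystemdCgroup` is written as `SystemdCgroup = false`.

```shell
#!/bin/sh
# Hypothetical helper: apply the two edits to a containerd config file
# without opening an editor. Assumes the file was produced by
# `containerd config default`, where SystemdCgroup defaults to false.
patch_containerd_config() {
  pcc_cfg="$1"
  pcc_image="registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"
  pcc_tmp="$(mktemp)" || return 1
  # '#' is the sed delimiter because the image name contains '/'.
  sed -e 's#SystemdCgroup = false#SystemdCgroup = true#' \
      -e "s#sandbox_image = \".*\"#sandbox_image = \"${pcc_image}\"#" \
      "$pcc_cfg" > "$pcc_tmp" || { rm -f "$pcc_tmp"; return 1; }
  # Overwrite in place so the original file's owner and mode are kept.
  cat "$pcc_tmp" > "$pcc_cfg"
  rm -f "$pcc_tmp"
}
```

On a node it would be called as `patch_containerd_config /etc/containerd/config.toml`. Review the result with `grep -n -E 'SystemdCgroup|sandbox_image' /etc/containerd/config.toml` before starting containerd.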

Start containerd and enable it at boot

systemctl daemon-reload

systemctl enable --now containerd

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

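A note on cgroup drivers: the difference between systemd and cgroupfs

So what is the difference between the systemd and cgroupfs drivers?

- The systemd cgroup driver relies on the cgroup management that systemd itself provides. With systemd as the cgroup driver, every cgroup operation must go through systemd's interfaces, and the cgroup files must not be modified by hand.

- The cgroupfs driver is more direct. To limit memory or set a CPU share, for example, you write the pid into the corresponding cgroup file, then write the resource limits into the matching memory cgroup and CPU cgroup files.

So systemd is the safer choice, because the cgroup files cannot be changed by hand, and it is the driver we recommend for managing cgroups.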
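To make the cgroupfs description concrete, the sketch below imitates those file writes. It is an illustration only: it writes to a scratch directory rather than the real /sys/fs/cgroup, so nothing is actually limited. The function name `cgroupfs_style_limit` is hypothetical, and the file names follow cgroup v1.

```shell
#!/bin/sh
# Illustration only: mimic what a cgroupfs-style driver does, using plain
# files under a scratch directory instead of the real /sys/fs/cgroup.
# File names follow cgroup v1 (cgroup.procs, memory.limit_in_bytes, cpu.shares).
cgroupfs_style_limit() {
  cg_root="$1"; cg_name="$2"; cg_pid="$3"; cg_mem="$4"; cg_shares="$5"
  mkdir -p "$cg_root/memory/$cg_name" "$cg_root/cpu/$cg_name" || return 1
  # 1. Put the process into the group by writing its pid.
  echo "$cg_pid" > "$cg_root/memory/$cg_name/cgroup.procs"
  echo "$cg_pid" > "$cg_root/cpu/$cg_name/cgroup.procs"
  # 2. Write the limits into the controller-specific files.
  echo "$cg_mem" > "$cg_root/memory/$cg_name/memory.limit_in_bytes"
  echo "$cg_shares" > "$cg_root/cpu/$cg_name/cpu.shares"
}

# Demo against a throwaway directory; nothing is actually limited.
demo_root="$(mktemp -d)"
cgroupfs_style_limit "$demo_root" demo "$$" 104857600 512
cat "$demo_root/memory/demo/memory.limit_in_bytes"
```

With the systemd driver these writes are not done directly; the runtime asks systemd to create and configure the group instead.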

Installing the K8s components

Download Kubernetes 1.23.4 https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.23.md#v1234

wget https://storage.googleapis.com/kubernetes-release/release/v1.23.4/kubernetes-server-linux-amd64.tar.gz

Download etcd 3.5.1 https://github.com/etcd-io/etcd/releases

wget https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz

Extract the Kubernetes components

tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

Extract the etcd components

tar -zxvf etcd-v3.5.1-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.5.1-linux-amd64/etcd{,ctl}

Send the components to the other nodes

MasterNodes='master2 master3'

for NODE in $MasterNodes; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

Download CNI https://github.com/containernetworking/plugins/releases/tag/v1.1.1

wget https://github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz
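The release tag appears twice in this download URL, once in the path and once in the file name, and the two must match. One way to keep them in sync is to build the URL from a single version string. This is a small sketch, and the function name `cni_plugins_url` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build the CNI plugins download URL from one version
# string, so the release tag and the file name cannot drift apart.
cni_plugins_url() {
  cni_version="$1"          # e.g. v1.1.1
  cni_arch="${2:-amd64}"    # defaults to amd64, as used in this guide
  echo "https://github.com/containernetworking/plugins/releases/download/${cni_version}/cni-plugins-linux-${cni_arch}-${cni_version}.tgz"
}

cni_plugins_url v1.1.1
```

It can then be used as `wget "$(cni_plugins_url v1.1.1)"`.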

Create the /opt/cni/bin directory on all nodes

mkdir -p /opt/cni/bin

Extract the CNI plugins and send them to all nodes

tar -zxf cni-plugins-linux-amd64-v1.1.1.tgz -C /opt/cni/bin

for NODE in $MasterNodes; do ssh $NODE 'mkdir -p /opt/cni/bin'; scp /opt/cni/bin/* $NODE:/opt/cni/bin/; done

Generating certificates

On master1, download the certificate generation tools https://github.com/cloudflare/cfssl

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64 -O /usr/local/bin/cfssl

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64 -O /usr/local/bin/cfssljson

chmod +x /usr/local/bin/*

Create the etcd certificate directory on all nodes

mkdir -p /etc/etcd/ssl

Create the Kubernetes directories on all nodes

mkdir -p /etc/kubernetes/pki

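Generating the etcd certificates

Generate the etcd certificates on master1

Create the configuration for a self-signed certificate authority (CA)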

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

Generate the CA certificate, private key, and CSR (certificate signing request) file

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

Issue the etcd certificate

cfssl gencert \
  -ca=/etc/etcd/ssl/etcd-ca.pem \
  -ca-key=/etc/etcd/ssl/etcd-ca-key.pem \
  -config=ca-config.json \
  -hostname=127.0.0.1,master1,master2,master3,192.168.56.101,192.168.56.102,192.168.56.103 \
  -profile=kubernetes \
  etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd
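The -hostname value is a single comma-separated list, and it has to name every host and IP that etcd will be reached by. If the node list is likely to change, it may be easier to assemble that list from separate arguments. This is a minimal sketch; `join_hosts` and `ETCD_HOSTS` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: join host names and IPs into the comma-separated
# list that the -hostname flag above expects.
join_hosts() {
  jh_out=""
  for jh_item in "$@"; do
    if [ -z "$jh_out" ]; then
      jh_out="$jh_item"
    else
      jh_out="$jh_out,$jh_item"
    fi
  done
  echo "$jh_out"
}

ETCD_HOSTS="$(join_hosts 127.0.0.1 master1 master2 master3 \
  192.168.56.101 192.168.56.102 192.168.56.103)"
echo "$ETCD_HOSTS"
```

The result would then be passed as `-hostname="$ETCD_HOSTS"`.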

Copy the etcd certificates to the other nodes

for NODE in $MasterNodes; do

ssh $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

Generating the Kubernetes certificates

cd /etc/kubernetes/pki

Generate the CA certificate

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

Generate the apiserver certificate

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

cat > apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

EOF

cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -hostname=10.96.0.1,192.168.56.88,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.56.101,192.168.56.102,192.168.56.103 \
  -profile=kubernetes \
  apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

Generate the apiserver aggregation (front-proxy) certificates

cat > front-proxy-ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "876000h"

}

}

EOF

cat > front-proxy-client-csr.json << EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

cfssl gencert \
  -ca=/etc/kubernetes/pki/front-proxy-ca.pem \
  -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

Generate the controller-manager certificate

cat > manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

Set a cluster entry

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.56.88:8443 \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Set a context entry

kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Set a user entry with set-credentials

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
  --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Use this context as the default

kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Generate the scheduler certificate

cat > scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

Set a cluster entry

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.56.88:8443 \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Set a context entry

kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Set a user entry with set-credentials

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/scheduler.pem \
  --client-key=/etc/kubernetes/pki/scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Use this context as the default

kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Generate the admin certificate

cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

Set a cluster entry

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://192.168.56.88:8443 \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

Set a context entry

kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

Set a user entry with set-credentials

kubectl config set-credentials kubernetes-admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

Use this context as the default

kubectl config use-context kubernetes-admin@kubernetes \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig
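The controller-manager, scheduler, and admin kubeconfig files are all built with the same four kubectl config subcommands; only the file name, user name, and certificate name change. The sketch below captures that pattern. The function name `print_kubeconfig_cmds` is hypothetical, and it deliberately prints the commands instead of running them so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the four kubectl commands that build
# one kubeconfig file, using the paths and VIP address from this guide.
#   $1 = kubeconfig file name stem   (e.g. scheduler)
#   $2 = user name                   (e.g. system:kube-scheduler)
#   $3 = certificate file name stem  (e.g. scheduler)
print_kubeconfig_cmds() {
  pk_kc="/etc/kubernetes/$1.kubeconfig"
  pk_pki="/etc/kubernetes/pki"
  echo "kubectl config set-cluster kubernetes --certificate-authority=$pk_pki/ca.pem --embed-certs=true --server=https://192.168.56.88:8443 --kubeconfig=$pk_kc"
  echo "kubectl config set-credentials $2 --client-certificate=$pk_pki/$3.pem --client-key=$pk_pki/$3-key.pem --embed-certs=true --kubeconfig=$pk_kc"
  echo "kubectl config set-context $2@kubernetes --cluster=kubernetes --user=$2 --kubeconfig=$pk_kc"
  echo "kubectl config use-context $2@kubernetes --kubeconfig=$pk_kc"
}

print_kubeconfig_cmds admin kubernetes-admin admin
```

After checking the output, it could be piped to `sh` to execute. The other two files would use `controller-manager system:kube-controller-manager controller-manager` and `scheduler system:kube-scheduler scheduler` as arguments.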

Generate the ServiceAccount key pair

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

Send them to the other nodes

for NODE in master2 master3; do

for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do

scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};

done;

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};

done;

done

Kubernetes component configuration

etcd configuration

master1

cat > /etc/etcd/etcd.config.yml << EOF

name: 'master1'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.56.101:2380'

listen-client-urls: 'https://192.168.56.101:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.56.101:2380'

advertise-client-urls: 'https://192.168.56.101:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'master1=https://192.168.56.101:2380,master2=https://192.168.56.102:2380,master3=https://192.168.56.103:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

peer-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

debug: false

log-package-levels:

log-output: default

force-new-cluster: false

EOF

master2

cat > /etc/etcd/etcd.config.yml << EOF

name: 'master2'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.56.102:2380'

listen-client-urls: 'https://192.168.56.102:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.56.102:2380'

advertise-client-urls: 'https://192.168.56.102:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'master1=https://192.168.56.101:2380,master2=https://192.168.56.102:2380,master3=https://192.168.56.103:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

peer-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

debug: false

log-package-levels:

log-output: default

force-new-cluster: false

EOF

master3

cat > /etc/etcd/etcd.config.yml << EOF

name: 'master3'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.56.103:2380'

listen-client-urls: 'https://192.168.56.103:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.56.103:2380'

advertise-client-urls: 'https://192.168.56.103:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'master1=https://192.168.56.101:2380,master2=https://192.168.56.102:2380,master3=https://192.168.56.103:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

peer-transport-security:
  ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true

debug: false

log-package-levels:

log-output: default

force-new-cluster: false

EOF
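The three etcd configuration files differ only in the member name and the node's own IP address. As a sketch of that pattern, the helper below prints just the node-specific keys for one member. The function name `etcd_node_settings` is hypothetical, and the shared keys would still need to be appended unchanged.

```shell
#!/bin/sh
# Hypothetical helper: print the node-specific etcd settings for one member.
# Only the keys that differ between master1, master2 and master3 are emitted.
etcd_node_settings() {
  en_name="$1"; en_ip="$2"
  cat <<EOF
name: '${en_name}'
listen-peer-urls: 'https://${en_ip}:2380'
listen-client-urls: 'https://${en_ip}:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${en_ip}:2380'
advertise-client-urls: 'https://${en_ip}:2379'
EOF
}

etcd_node_settings master2 192.168.56.102
```

Comparing this output with each node's /etc/etcd/etcd.config.yml is a quick way to catch an IP address copied from the wrong node.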

Create the service file

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

mkdir -p /etc/kubernetes/pki/etcd

ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

systemctl daemon-reload

systemctl enable --now etcd

export ETCDCTL_API=3

etcdctl --endpoints="192.168.56.101:2379,192.168.56.102:2379,192.168.56.103:2379" \
  --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem \
  --cert=/etc/kubernetes/pki/etcd/etcd.pem \
  --key=/etc/kubernetes/pki/etcd/etcd-key.pem \
  endpoint status --write-out=table

High-availability configuration

Install keepalived and haproxy on all nodes

yum install keepalived haproxy -y

haproxy configuration

cat > /etc/haproxy/haproxy.cfg << EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server master1 192.168.56.101:6443 check

server master2 192.168.56.102:6443 check

server master3 192.168.56.103:6443 check

EOF

keepalived configuration for master1. Note that the IP address and network interface differ on each node

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface enp0s8

mcast_src_ip 192.168.56.101

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.56.88

}

track_script {

chk_apiserver

}

}

EOF

master2

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface enp0s8

mcast_src_ip 192.168.56.102

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.56.88

}

track_script {

chk_apiserver

}

}

EOF

master3

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface enp0s8

mcast_src_ip 192.168.56.103

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.56.88

}

track_script {

chk_apiserver

}

}

EOF

Health check configuration

cat > /etc/keepalived/check_apiserver.sh << 'EOF'

#!/bin/bash

err=0

for k in $(seq 1 5)

do

check_code=$(pgrep kube-apiserver)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 5

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF
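When a script is written through a heredoc, quoting the delimiter decides whether `$var` and `$(...)` are expanded while the file is being written or kept literally for the script to evaluate later. A health check like this one relies on its own `$err` and `$(pgrep ...)` at run time, so the quoted form (`<< 'EOF'`) is the one that preserves them. The demonstration below shows the difference using scratch files only. The variable names are illustrative.

```shell
#!/bin/sh
# Demonstration: the same heredoc body written with an unquoted and a
# quoted delimiter. Uses scratch files, not /etc/keepalived.
hd_dir="$(mktemp -d)"
err=7   # a value in the *writing* shell, to make the expansion visible

cat > "$hd_dir/unquoted.sh" << EOF
echo "value: $err"
EOF

cat > "$hd_dir/quoted.sh" << 'EOF'
echo "value: $err"
EOF

cat "$hd_dir/unquoted.sh"   # $err was replaced while writing the file
cat "$hd_dir/quoted.sh"     # $err is kept for the script to evaluate later
```

A quick `cat /etc/keepalived/check_apiserver.sh` after writing the file shows whether the variables survived.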

chmod +x /etc/keepalived/check_apiserver.sh

systemctl enable --now haproxy

systemctl enable --now keepalived

Component configuration

Create the required directories

mkdir -p /etc/kubernetes/manifests/ \

/etc/systemd/system/kubelet.service.d \

/var/lib/kubelet \
