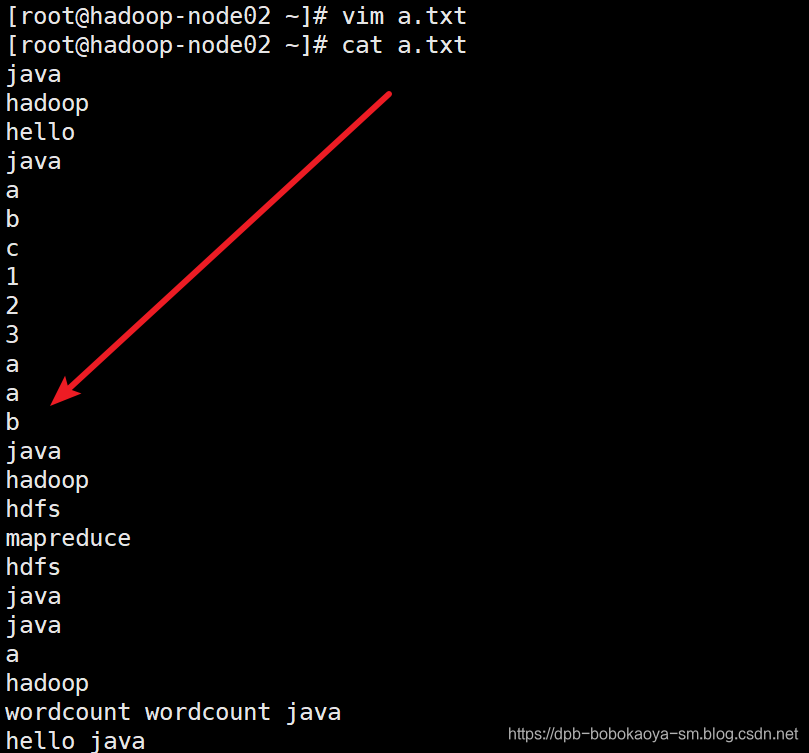

Run the wordcount example to count how many times each word appears in a file.
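For a small file, the expected result can be cross-checked locally before involving the cluster. The sketch below is not part of the Hadoop example: it assumes words are separated by whitespace and that results are sorted by word in byte order, and it uses a made-up sample file because the contents of `a.txt` are not shown in this article. The function name `wordcount_local` is hypothetical.

```shell
#!/bin/sh
# Hypothetical sample input; the real a.txt from this article is not shown.
sample=$(mktemp)
cat > "$sample" <<'EOF'
hello world
hello hadoop
hadoop yarn hdfs
EOF

# Split on whitespace, drop empty tokens, then count each distinct word.
# LC_ALL=C forces byte-order sorting so the result is stable across locales.
wordcount_local() {
    tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c |
        awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Each output line is "word<TAB>count".
result=$(wordcount_local "$sample")
printf '%s\n' "$result"
rm -f "$sample"
```

On a real input, the same pipeline can be pointed at the local copy of the file and compared with what the job writes to its output directory.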

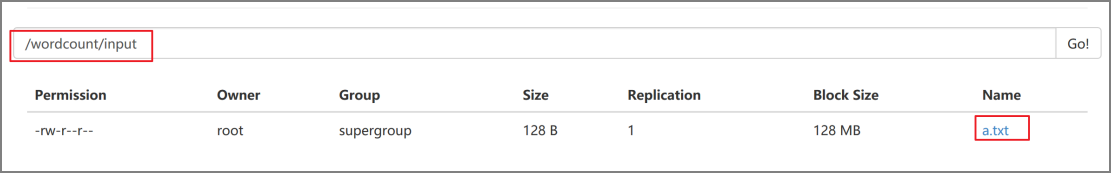

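Create a directory in HDFS to hold the files to be counted; this also creates the parent path under which the output will be written. Do not create /wordcount/output itself: the job creates it and fails if it already exists.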

hadoop fs -mkdir -p /wordcount/input

hadoop fs -put a.txt /wordcount/input/

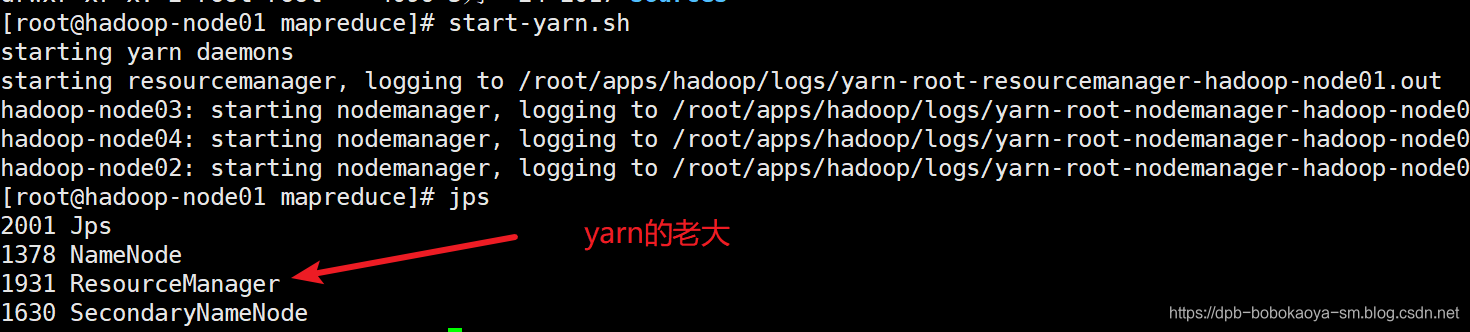

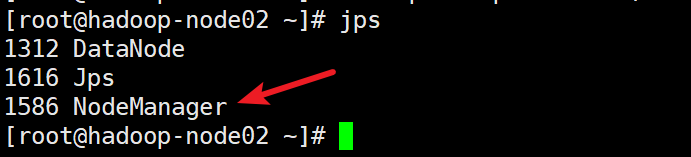

Distributed computation requires YARN, so start it first:

start-yarn.sh

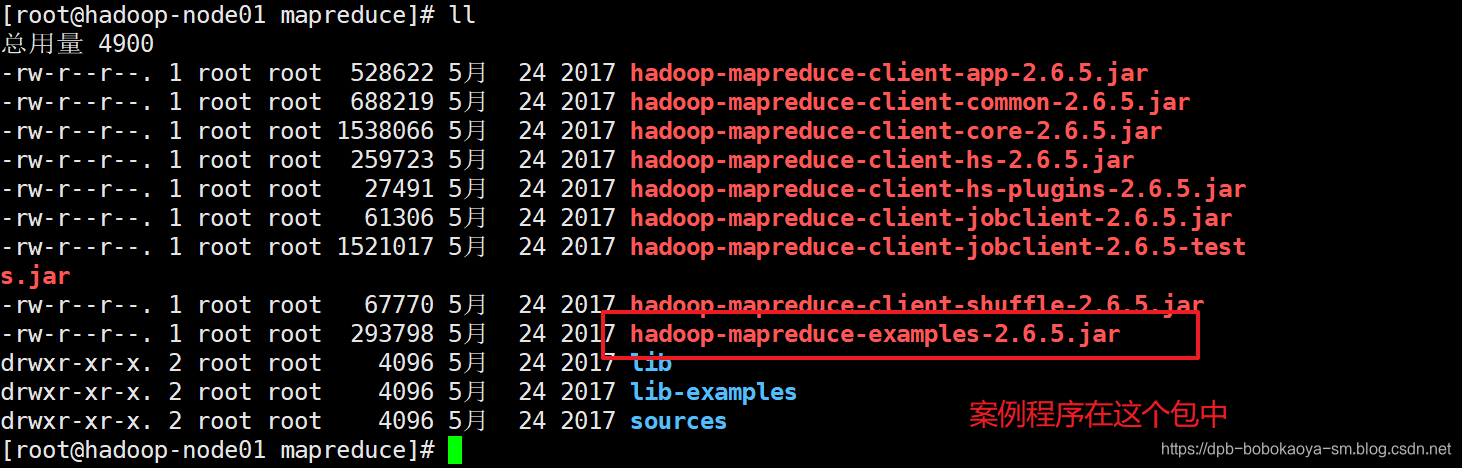

hadoop jar hadoop-mapreduce-examples-2.6.5.jar wordcount /wordcount/input/ /wordcount/output

Output:

[root@hadoop-node01 mapreduce]# hadoop jar hadoop-mapreduce-examples-2.6.5.jar wordcount /wordcount/input/ /wordcount/output

19/04/02 23:06:03 INFO client.RMProxy: Connecting to ResourceManager at hadoop-node01/192.168.88.61:8032

19/04/02 23:06:07 INFO input.FileInputFormat: Total input paths to process : 1

19/04/02 23:06:09 INFO mapreduce.JobSubmitter: number of splits:1

19/04/02 23:06:09 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1554217397936_0001

19/04/02 23:06:10 INFO impl.YarnClientImpl: Submitted application application_1554217397936_0001

19/04/02 23:06:11 INFO mapreduce.Job: The url to track the job: http://hadoop-node01:8088/proxy/application_1554217397936_0001/

19/04/02 23:06:11 INFO mapreduce.Job: Running job: job_1554217397936_0001

19/04/02 23:06:30 INFO mapreduce.Job: Job job_1554217397936_0001 running in uber mode : false

19/04/02 23:06:30 INFO mapreduce.Job: map 0% reduce 0%

19/04/02 23:06:46 INFO mapreduce.Job: map 100% reduce 0%

19/04/02 23:06:57 INFO mapreduce.Job: map 100% reduce 100%

19/04/02 23:06:58 INFO mapreduce.Job: Job job_1554217397936_0001 completed successfully

19/04/02 23:06:59 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=133

FILE: Number of bytes written=214969

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=240

HDFS: Number of bytes written=79

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=11386

Total time spent by all reduces in occupied slots (ms)=9511

Total time spent by all map tasks (ms)=11386

Total time spent by all reduce tasks (ms)=9511

Total vcore-milliseconds taken by all map tasks=11386

Total vcore-milliseconds taken by all reduce tasks=9511

Total megabyte-milliseconds taken by all map tasks=11659264

Total megabyte-milliseconds taken by all reduce tasks=9739264

Map-Reduce Framework

Map input records=24

Map output records=27

Map output bytes=236

Map output materialized bytes=133

Input split bytes=112

Combine input records=27

Combine output records=12

Reduce input groups=12

Reduce shuffle bytes=133

Reduce input records=12

Reduce output records=12

Spilled Records=24

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=338

CPU time spent (ms)=2600

Physical memory (bytes) snapshot=283582464

Virtual memory (bytes) snapshot=4125011968

Total committed heap usage (bytes)=137363456

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=128

File Output Format Counters

Bytes Written=79
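The Map-Reduce Framework counters above can be read stage by stage: 24 input lines produced 27 map output records (one per word), the combiner collapsed them to 12 records, and the reducer wrote 12 records, one per distinct word. The following is a rough local simulation of those stages, not Hadoop code. It assumes a single map task, as in the log, and uses a made-up two-line input; all variable names are hypothetical.

```shell
#!/bin/sh
# Hypothetical two-line input; the article's a.txt (24 lines, 27 words) is not shown.
input='a b a
c b a'

# Map stage: emit one "word<TAB>1" record per whitespace-separated token.
map_out=$(printf '%s\n' "$input" | tr -s '[:space:]' '\n' | grep -v '^$' |
    awk '{ print $1 "\t1" }')

# Combine stage: pre-aggregate inside the single map task,
# leaving one record per distinct word.
combine_out=$(printf '%s\n' "$map_out" |
    awk -F '\t' '{ n[$1] += $2 } END { for (w in n) print w "\t" n[w] }' |
    LC_ALL=C sort)

# Reduce stage: sum the combined records per word. With only one map task
# there is nothing left to merge, so the record count does not change.
reduce_out=$(printf '%s\n' "$combine_out" |
    awk -F '\t' '{ n[$1] += $2 } END { for (w in n) print w "\t" n[w] }' |
    LC_ALL=C sort)

# Count the records (lines) held in a variable.
count_lines() { printf '%s\n' "$1" | wc -l | tr -d ' '; }

map_input_records=$(count_lines "$input")
map_output_records=$(count_lines "$map_out")
combine_output_records=$(count_lines "$combine_out")
reduce_output_records=$(count_lines "$reduce_out")

echo "Map input records=$map_input_records"
echo "Map output records=$map_output_records"
echo "Combine output records=$combine_output_records"
echo "Reduce output records=$reduce_output_records"
```

With several map tasks, each would run its own combine step, so the combine output could exceed the number of distinct words and the reducer would do the final merge.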
