The big goal is to unify the abstractions of tables and streams in one common framework:

Combined, tables and streams cover the critical spectrum of business operations ranging from strategic decision making supported by historical data to near- and real-time data used in interactive analysis… We believe, based on our experience and nearly two decades of research on streaming SQL extensions, that using the same SQL semantics in a consistent manner is a productive and elegant way to unify these two modalities of data…

As the authors point out, there has been a lot of prior work in this space over many years, and the proposals presented in this paper draw on much of it. At the sharp end, they are based on lessons learned working on Apache Flink, Beam, and Calcite.

The thing that streaming adds to a traditional relational view is the concept of time. Note that a mutable database table, as perceived by a consumer across multiple queries, is already a time-varying relation (TVR). It’s just that for any one query the results always show the relation at a single point in time.

A time-varying relation is exactly what the name implies: a relation whose contents may vary over time… The key insight, stated but under-utilized in prior work, is that streams and tables are two representations for one semantic object.
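To make the "two representations, one object" idea concrete, here is a minimal Python sketch (my own illustration, not code from the paper). It models a TVR as a changelog of insertions and retractions, and recovers the table view by replaying that changelog up to a chosen processing time. The `Change` type, the `snapshot_at` helper, and the sample rows are all hypothetical.

```python
from typing import NamedTuple

class Change(NamedTuple):
    ptime: int    # processing time of the change (hypothetical integer ticks)
    row: tuple    # the affected row
    undo: bool    # True if this change retracts the row

def snapshot_at(changelog, ptime):
    """Table view of the TVR: replay every change up to `ptime`."""
    rows = []
    # sorted() is stable, so changes sharing a ptime keep their listed order.
    for change in sorted(changelog, key=lambda c: c.ptime):
        if change.ptime > ptime:
            break
        if change.undo:
            rows.remove(change.row)
        else:
            rows.append(change.row)
    return sorted(rows)

# Stream view of the same TVR: invented sample data.
changelog = [
    Change(1, ("item-a", 10), False),
    Change(2, ("item-b", 7), False),
    Change(3, ("item-a", 10), True),   # retract the old row ...
    Change(3, ("item-a", 12), False),  # ... and insert its replacement
]

print(snapshot_at(changelog, 2))  # the relation as of ptime 2
print(snapshot_at(changelog, 3))  # the relation as of ptime 3
```

The changelog is the stream rendering and each call to `snapshot_at` is a table rendering; neither holds information the other lacks.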

The TVR, by definition, supports the entire suite of relational operators, even in scenarios involving time-varying relational data. So the first part of the proposal is essentially a no-op! We want TVRs, and that’s what relations already are, so let’s just use them – and make it explicit that SQL operates over TVRs as we do so.

We do need some extensions to deal with the notion of event time though. In particular, we need to take care to separate the event time from the processing time (which could be some arbitrary time later). We also need to understand that events will not necessarily be presented for processing in event-time order.

We propose to support event time semantics via two concepts: explicit event timestamps and watermarks. Together, these allow correct event time calculation, such as grouping into intervals (or windows) of event time, to be effectively expressed and carried out without consuming unbounded resources.

The watermarking model used traces its lineage back to MillWheel, Google Cloud Dataflow, and from there to Beam and Flink. For each moment in processing time, the watermark specifies the event timestamp up to which the input is believed to be complete at that point in processing time.
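The paper leaves watermark generation to the system, so the following is only a sketch of one common heuristic, not the paper's method: assume events arrive at most `max_delay` time units out of order, and set the watermark to the largest event time seen so far minus that bound. The class name and parameter are hypothetical.

```python
class BoundedDelayWatermark:
    """Watermark = max event time observed so far - max_delay (an assumed heuristic)."""

    def __init__(self, max_delay):
        self.max_delay = max_delay
        self.max_event_time = None

    def observe(self, event_time):
        """Record one event's timestamp and return the updated watermark."""
        if self.max_event_time is None or event_time > self.max_event_time:
            self.max_event_time = event_time
        return self.current()

    def current(self):
        """Event time up to which the input is believed to be complete."""
        if self.max_event_time is None:
            return float("-inf")
        return self.max_event_time - self.max_delay

wm = BoundedDelayWatermark(max_delay=2)
print(wm.observe(10))  # first event
print(wm.observe(9))   # out-of-order event: the watermark does not move backwards
print(wm.observe(15))  # a newer event advances the watermark
```

Because the watermark tracks the running maximum, it is monotonic in processing time, which is what lets downstream operators treat "watermark has passed T" as a stable signal.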

The third piece of the puzzle is to provide some control over how relations are rendered and when rows are materialized. For example: should a query’s output change instantaneously to reflect any new input (normally overkill), or do we just want to see batched updates at the end of a window?

Example

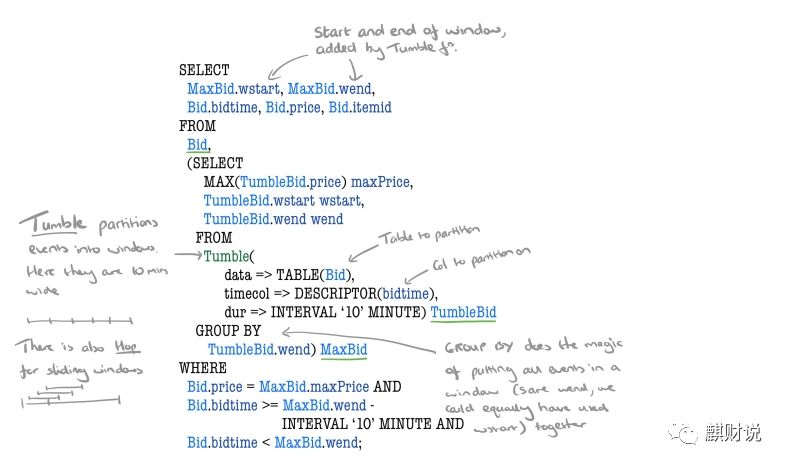

Query 7 from the NEXmark stream querying benchmark monitors the highest price items in an auction. Every ten minutes, it returns the highest bid and associated itemid for the most recent ten minutes.

Here’s what it looks like expressed using the proposed SQL extensions. Rather than give a lengthy prose description of what’s going on, I’ve chosen just to annotate the query itself. Hopefully that’s enough for you to get the gist…

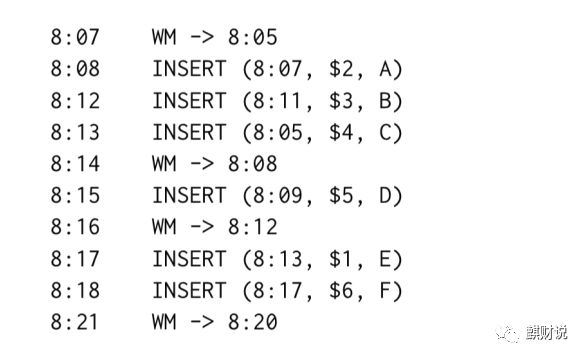

Given the following events

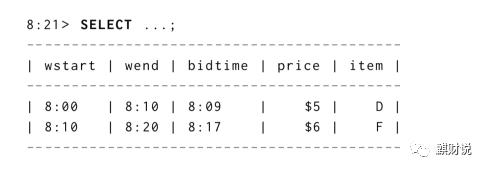

Then a query evaluated at 8:21 would yield the following TVR:

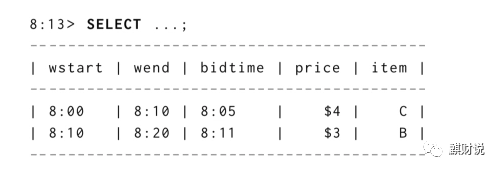

Whereas an evaluation at 8:13 would have looked different:

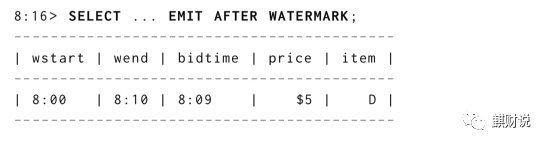

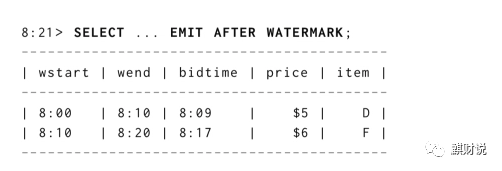

Note that as currently expressed, the query returns point-in-time results, but we can use the materialisation delay extensions to change that if we want to. For example, SELECT ... EMIT AFTER WATERMARK; will only emit rows once the watermark has passed the end of a window.

So at 8:16 we’d see

And at 8:21:

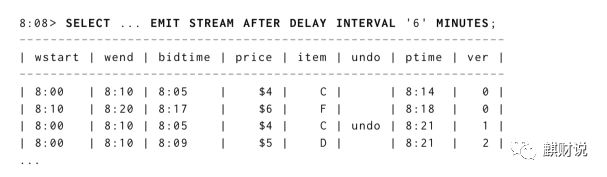

If we want to see rows for windows regardless of watermark, but only get periodic aggregated snapshots, we can use SELECT ... EMIT STREAM AFTER DELAY (STREAM here indicates we want streamed results too).
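To show how point-in-time results differ from EMIT AFTER WATERMARK, here is a small Python simulation of a Query 7 style "highest bid per ten-minute tumbling window". It is a sketch under my own assumptions: the input data is invented (it is not the dataset from the paper's figures), times are minutes past 8:00, watermark updates arrive as explicit input records, and a window counts as complete once its end is less than or equal to the watermark.

```python
def tumble_start(event_time, size):
    """Start of the tumbling window (aligned to time zero) containing event_time."""
    return event_time - event_time % size

def max_bid_per_window(inputs, at, size=10, after_watermark=False):
    """Evaluate the query using only input that has arrived by processing time `at`.

    inputs: list of (ptime, kind, payload) where kind is "bid" with payload
    (bidtime, price, item), or "wm" with payload = new watermark value.
    """
    best = {}                      # window start -> (price, item) of highest bid
    watermark = float("-inf")
    for ptime, kind, payload in inputs:
        if ptime > at:
            continue               # not yet arrived at evaluation time
        if kind == "wm":
            watermark = max(watermark, payload)
        else:
            bidtime, price, item = payload
            start = tumble_start(bidtime, size)
            if start not in best or price > best[start][0]:
                best[start] = (price, item)
    rows = []
    for start in sorted(best):
        end = start + size
        # Assumption: with AFTER WATERMARK, hold back windows that are not complete.
        if after_watermark and end > watermark:
            continue
        rows.append((start, end) + best[start])
    return rows

# Invented sample input, in minutes past 8:00.
inputs = [
    (8,  "bid", (7, 2, "A")),
    (12, "wm", 5),
    (13, "bid", (11, 3, "B")),
    (14, "bid", (5, 4, "C")),    # arrives late for window [0, 10)
    (16, "wm", 12),
    (18, "bid", (17, 6, "D")),
    (21, "wm", 21),
]

print(max_bid_per_window(inputs, at=13))                        # point-in-time
print(max_bid_per_window(inputs, at=16, after_watermark=True))  # complete windows only
print(max_bid_per_window(inputs, at=21, after_watermark=True))
```

In this invented data, the point-in-time evaluation at minute 13 reports a provisional winner for the first window that the late bid later overturns. The watermark-gated evaluation never shows that provisional row.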

SQL Extensions

Hopefully that’s given you a good flavour. As it stands, the proposal contains 7 extensions to standard SQL:

-

Watermarked event time column: an event time column in a relation is a distinguished column of type

TIMESTAMPwith an associated watermark. The watermark is maintained by the system. -

Grouping on event timestamps: when a GROUP BY clause groups on an event time column, only groups with a key less than the watermark for the column are included

-

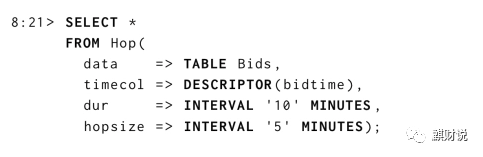

Event-time windowing functions: starting with

TumbleandHopwhich take a relation and event time column descriptor and return a relation with additional event-time columns as output. Tumble produces equally spaced disjoint windows, Hop produces equally sized sliding windows. -

Stream materialization: EMIT STREAM results in a time-varying relation representing changes to the classic result of the query. Additional columns indicate whether or not the row is a retraction of the previous row, the changelog processing time offset of the row, and a sequence number relative to other changes to the same event time grouping.

-

Materialization delay: when a query has an

EMIT AFTER WATERMARKmodifier, only complete rows from the results are materialized -

Periodic materialization: when a query has

EMIT AFTER DELAY drows are materialized with period d, instead of continuously. -

Combined materialization delay: when a query has

EMIT AFTER DELAY d AND AFTER WATERMARKrows are materialized with period d as well as when complete.

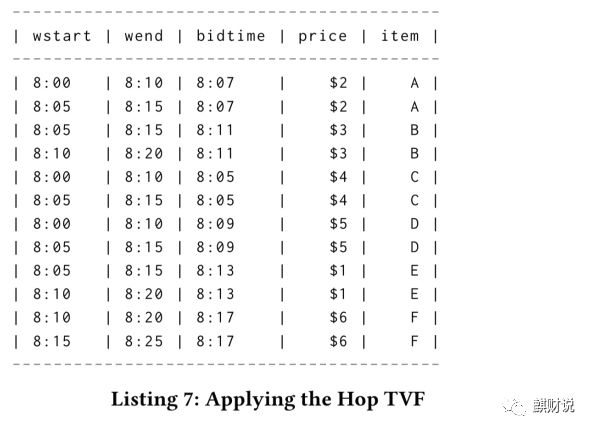

Hop example
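For intuition about the difference between the two windowing functions, here is a rough Python sketch of window assignment for a single event timestamp. It is a simplification under my own assumptions: the proposed Tumble and Hop operate on whole relations and append window columns, whereas these hypothetical helpers just return the `(start, end)` pairs for one timestamp, with windows aligned to time zero and treated as half-open intervals.

```python
def tumble(event_time, size):
    """The single disjoint window [start, start + size) containing event_time."""
    start = event_time - event_time % size
    return [(start, start + size)]

def hop(event_time, size, slide):
    """Every sliding window [start, start + size) containing event_time,
    where window starts are multiples of `slide`."""
    windows = []
    start = event_time - event_time % slide   # latest window start <= event_time
    while start > event_time - size:          # window still covers event_time
        windows.append((start, start + size))
        start -= slide
    return sorted(windows)

print(tumble(7, 10))   # one window per timestamp
print(hop(7, 10, 5))   # overlapping windows: size / slide of them
```

With a ten-unit window and a five-unit slide, each timestamp lands in two Hop windows, which is why a Hop-based aggregate sees each input row more than once.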
