Java GC Source Code Analysis (1)

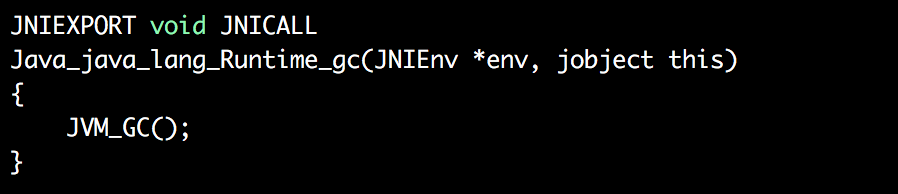

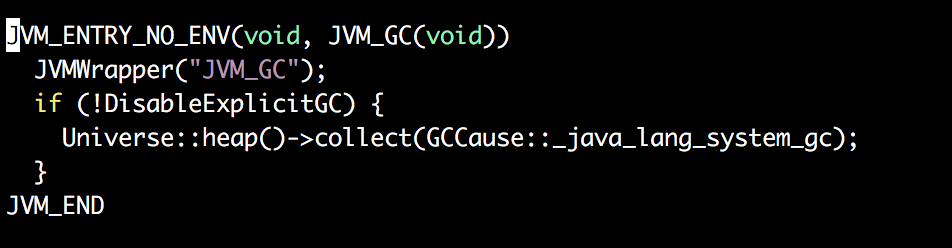

System.gc() entry point

hotspot/src/share/vm/memory/universe.cpp:

jint Universe::initialize_heap() {

if (UseParallelGC) {

#if INCLUDE_ALL_GCS

Universe::_collectedHeap = new ParallelScavengeHeap();

#else // INCLUDE_ALL_GCS

fatal("UseParallelGC not supported in this VM.");

#endif // INCLUDE_ALL_GCS

} else if (UseG1GC) {

#if INCLUDE_ALL_GCS

G1CollectorPolicy* g1p = new G1CollectorPolicy();

g1p->initialize_all();

G1CollectedHeap* g1h = new G1CollectedHeap(g1p);

Universe::_collectedHeap = g1h;

#else // INCLUDE_ALL_GCS

fatal("UseG1GC not supported in java kernel vm.");

#endif // INCLUDE_ALL_GCS

} else {

GenCollectorPolicy *gc_policy;

if (UseSerialGC) {

gc_policy = new MarkSweepPolicy();

} else if (UseConcMarkSweepGC) {

#if INCLUDE_ALL_GCS

if (UseAdaptiveSizePolicy) {

gc_policy = new ASConcurrentMarkSweepPolicy();

} else {

gc_policy = new ConcurrentMarkSweepPolicy();

}

#else // INCLUDE_ALL_GCS

fatal("UseConcMarkSweepGC not supported in this VM.");

#endif // INCLUDE_ALL_GCS

} else { // default old generation

gc_policy = new MarkSweepPolicy();

}

gc_policy->initialize_all();

Universe::_collectedHeap = new GenCollectedHeap(gc_policy);

}

jint status = Universe::heap()->initialize();

if (status != JNI_OK) {

return status;

}

- Initialization of the generational collected heap (GenCollectedHeap)
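Which heap class ends up being initialized depends on the order of the flag checks in Universe::initialize_heap() above. As a rough, self-contained sketch of that decision order: GcFlags, HeapChoice and choose_heap are hypothetical names introduced here for illustration, not HotSpot identifiers, and the strings merely echo the class names that appear in the excerpt.

```cpp
#include <string>

// Hypothetical stand-ins for the VM flags consulted by initialize_heap().
struct GcFlags {
    bool use_parallel_gc = false;
    bool use_g1_gc = false;
    bool use_serial_gc = false;
    bool use_conc_mark_sweep_gc = false;
    bool use_adaptive_size_policy = false;
};

// Hypothetical result type: the heap class and the policy class chosen.
struct HeapChoice {
    std::string heap;
    std::string policy;  // empty when the excerpt creates no policy object
};

// Mirrors the if / else-if chain of the excerpt: Parallel first, then G1,
// and everything else falls through to GenCollectedHeap with some policy.
HeapChoice choose_heap(const GcFlags& f) {
    HeapChoice choice;
    if (f.use_parallel_gc) {
        choice.heap = "ParallelScavengeHeap";
        return choice;
    }
    if (f.use_g1_gc) {
        choice.heap = "G1CollectedHeap";
        choice.policy = "G1CollectorPolicy";
        return choice;
    }
    choice.heap = "GenCollectedHeap";
    choice.policy = "MarkSweepPolicy";  // serial and the default branch
    if (!f.use_serial_gc && f.use_conc_mark_sweep_gc) {
        choice.policy = f.use_adaptive_size_policy
                            ? "ASConcurrentMarkSweepPolicy"
                            : "ConcurrentMarkSweepPolicy";
    }
    return choice;
}
```

With no flag set, the sketch falls through to GenCollectedHeap with MarkSweepPolicy, matching the "default old generation" branch of the excerpt. The INCLUDE_ALL_GCS guards and the fatal() calls are left out on purpose.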

jint GenCollectedHeap::initialize() {

CollectedHeap::pre_initialize();

int i;

_n_gens = gen_policy()->number_of_generations();

// While there are no constraints in the GC code that HeapWordSize

// be any particular value, there are multiple other areas in the

// system which believe this to be true (e.g. oop->object_size in some

// cases incorrectly returns the size in wordSize units rather than

// HeapWordSize).

guarantee(HeapWordSize == wordSize, "HeapWordSize must equal wordSize");

// The heap must be at least as aligned as generations.

size_t gen_alignment = Generation::GenGrain;

_gen_specs = gen_policy()->generations();

// Make sure the sizes are all aligned.

for (i = 0; i < _n_gens; i++) {

_gen_specs[i]->align(gen_alignment);

}

// Allocate space for the heap.

char* heap_address;

size_t total_reserved = 0;

int n_covered_regions = 0;

ReservedSpace heap_rs;

size_t heap_alignment = collector_policy()->heap_alignment();

heap_address = allocate(heap_alignment, &total_reserved,

&n_covered_regions, &heap_rs);

if (!heap_rs.is_reserved()) {

vm_shutdown_during_initialization(

"Could not reserve enough space for object heap");

return JNI_ENOMEM;

}

_reserved = MemRegion((HeapWord*)heap_rs.base(),

(HeapWord*)(heap_rs.base() + heap_rs.size()));

// It is important to do this in a way such that concurrent readers can't

// temporarily think somethings in the heap. (Seen this happen in asserts.)

_reserved.set_word_size(0);

_reserved.set_start((HeapWord*)heap_rs.base());

size_t actual_heap_size = heap_rs.size();

_reserved.set_end((HeapWord*)(heap_rs.base() + actual_heap_size));

_rem_set = collector_policy()->create_rem_set(_reserved, n_covered_regions);

set_barrier_set(rem_set()->bs());

_gch = this;

for (i = 0; i < _n_gens; i++) {

ReservedSpace this_rs = heap_rs.first_part(_gen_specs[i]->max_size(), false, false);

_gens[i] = _gen_specs[i]->init(this_rs, i, rem_set());

heap_rs = heap_rs.last_part(_gen_specs[i]->max_size());

}

clear_incremental_collection_failed();

#if INCLUDE_ALL_GCS

// If we are running CMS, create the collector responsible

// for collecting the CMS generations.

if (collector_policy()->is_concurrent_mark_sweep_policy()) {

bool success = create_cms_collector();

if (!success) return JNI_ENOMEM;

}

#endif // INCLUDE_ALL_GCS

return JNI_OK;

}
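The loop over _gens in initialize() carves one contiguous reservation into per-generation pieces: first_part() takes the front of what is left, and last_part() keeps the remainder for the next generation. A minimal sketch of that idea follows. Range, align_up and carve_generations are hypothetical names, not HotSpot types, and the sketch folds the earlier alignment loop into the carving step for brevity.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for ReservedSpace: the address range [base, base + size).
struct Range {
    std::size_t base;
    std::size_t size;
};

// Round size up to a multiple of alignment (alignment must be a power of two).
std::size_t align_up(std::size_t size, std::size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (size + alignment - 1) & ~(alignment - 1);
}

// Split one reserved range into consecutive per-generation ranges,
// youngest generation first, in the same order as the loop over _gens.
std::vector<Range> carve_generations(Range heap,
                                     const std::vector<std::size_t>& max_sizes,
                                     std::size_t alignment) {
    std::vector<Range> gens;
    for (std::size_t max_size : max_sizes) {
        std::size_t aligned = align_up(max_size, alignment);
        assert(aligned <= heap.size);  // the reservation must be large enough
        // Analogue of first_part(): the front of the remaining space.
        gens.push_back(Range{heap.base, aligned});
        // Analogue of last_part(): what is left for the older generations.
        heap = Range{heap.base + aligned, heap.size - aligned};
    }
    return gens;
}
```

Because every generation is cut from the same reservation, the generations end up adjacent in the address space, which is what lets a single remembered set cover the whole _reserved region.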

- Collection interface of the generational collected heap

void GenCollectedHeap::collect(GCCause::Cause cause) {

if (should_do_concurrent_full_gc(cause)) {

#if INCLUDE_ALL_GCS

// mostly concurrent full collection

collect_mostly_concurrent(cause);

#else // INCLUDE_ALL_GCS

ShouldNotReachHere();

#endif // INCLUDE_ALL_GCS

} else {

#ifdef ASSERT

if (cause == GCCause::_scavenge_alot) {

// minor collection only

collect(cause, 0);

} else {

// Stop-the-world full collection

collect(cause, n_gens() - 1);

}

#else

// Stop-the-world full collection

collect(cause, n_gens() - 1);

#endif

}

}

void GenCollectedHeap::collect(GCCause::Cause cause, int max_level) {

// The caller doesn't have the Heap_lock

assert(!Heap_lock->owned_by_self(), "this thread should not own the Heap_lock");

MutexLocker ml(Heap_lock);

collect_locked(cause, max_level);

}

void GenCollectedHeap::collect_locked(GCCause::Cause cause) {

// The caller has the Heap_lock

assert(Heap_lock->owned_by_self(), "this thread should own the Heap_lock");

collect_locked(cause, n_gens() - 1);

}

// this is the private collection interface

// The Heap_lock is expected to be held on entry.

void GenCollectedHeap::collect_locked(GCCause::Cause cause, int max_level) {

// Read the GC count while holding the Heap_lock

unsigned int gc_count_before = total_collections();

unsigned int full_gc_count_before = total_full_collections();

{

MutexUnlocker mu(Heap_lock); // give up heap lock, execute gets it back

VM_GenCollectFull op(gc_count_before, full_gc_count_before,

cause, max_level);

VMThread::execute(&op);

}

}

VM_GenCollectFull is a VM_Operation, and VM_Operation declares the following interface methods:

// Called by VM thread - does in turn invoke doit(). Do not override this

void evaluate();

// evaluate() is called by the VMThread and in turn calls doit().

// If the thread invoking VMThread::execute((VM_Operation*) is a JavaThread,

// doit_prologue() is called in that thread before transferring control to

// the VMThread.

// If doit_prologue() returns true the VM operation will proceed, and

// doit_epilogue() will be called by the JavaThread once the VM operation

// completes. If doit_prologue() returns false the VM operation is cancelled.

virtual void doit() = 0;

virtual bool doit_prologue() { return true; };

virtual void doit_epilogue() {}; // Note: Not called if mode is: _concurrent

- The generational garbage collection process
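The collection is submitted as a VM operation, so the prologue / doit / epilogue contract described in the comments above is worth seeing in miniature. The sketch below is a single-threaded model under stated assumptions: Operation, execute and CollectOp are hypothetical names, there is no real VM thread or safepoint, and the "cancel if the GC count already changed" check is only an illustration of why a prologue might return false.

```cpp
#include <string>
#include <vector>

// Hypothetical miniature of the VM_Operation contract; not HotSpot code.
class Operation {
public:
    virtual ~Operation() {}
    virtual bool doit_prologue() { return true; }  // runs in the requesting thread
    virtual void doit() = 0;                       // the actual work
    virtual void doit_epilogue() {}                // runs in the requesting thread
    void evaluate() { doit(); }                    // not meant to be overridden
};

// Stand-in for the execute step when the requester is a Java thread.
// Returns false if the prologue cancelled the operation.
bool execute(Operation& op) {
    if (!op.doit_prologue()) {
        return false;  // cancelled: neither doit() nor doit_epilogue() runs
    }
    op.evaluate();     // in the real VM this step runs on the VM thread
    op.doit_epilogue();
    return true;
}

// Example operation that cancels itself when a collection has already
// happened since the request was created (illustrative assumption).
class CollectOp : public Operation {
public:
    CollectOp(unsigned count_before, unsigned& current_count,
              std::vector<std::string>& trace)
        : count_before_(count_before), current_count_(current_count), trace_(trace) {}

    bool doit_prologue() override {
        trace_.push_back("prologue");
        return count_before_ == current_count_;
    }
    void doit() override {
        trace_.push_back("doit");
        ++current_count_;  // one more collection has completed
    }
    void doit_epilogue() override { trace_.push_back("epilogue"); }

private:
    unsigned count_before_;
    unsigned& current_count_;
    std::vector<std::string>& trace_;
};
```

Executing two CollectOp objects created with the same count_before shows the point of the prologue: the first one runs all three steps, while the second sees that the count has moved on and stops after its prologue.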

void VM_GenCollectFull::doit() {

SvcGCMarker sgcm(SvcGCMarker::FULL);

GenCollectedHeap* gch = GenCollectedHeap::heap();

GCCauseSetter gccs(gch, _gc_cause);

gch->do_full_collection(gch->must_clear_all_soft_refs(), _max_level);

}

do_full_collection() delegates the actual work to do_collection(). The collection process:

void GenCollectedHeap::do_collection(bool full,

bool clear_all_soft_refs,

size_t size,

bool is_tlab,

int max_level) {

bool prepared_for_verification = false;

ResourceMark rm;

DEBUG_ONLY(Thread* my_thread = Thread::current();)

assert(SafepointSynchronize::is_at_safepoint(), "should be at safepoint");

assert(my_thread->is_VM_thread() ||

my_thread->is_ConcurrentGC_thread(),

"incorrect thread type capability");

assert(Heap_lock->is_locked(),

"the requesting thread should have the Heap_lock");

guarantee(!is_gc_active(), "collection is not reentrant");

assert(max_level < n_gens(), "sanity check");

if (GC_locker::check_active_before_gc()) {

return; // GC is disabled (e.g. JNI GetXXXCritical operation)

}

const bool do_clear_all_soft_refs = clear_all_soft_refs ||

collector_policy()->should_clear_all_soft_refs();

ClearedAllSoftRefs casr(do_clear_all_soft_refs, collector_policy());

const size_t metadata_prev_used = MetaspaceAux::allocated_used_bytes();

print_heap_before_gc();

{

FlagSetting fl(_is_gc_active, true);

bool complete = full && (max_level == (n_gens()-1));

const char* gc_cause_prefix = complete ? "Full GC" : "GC";

gclog_or_tty->date_stamp(PrintGC && PrintGCDateStamps);

TraceCPUTime tcpu(PrintGCDetails, true, gclog_or_tty);

GCTraceTime t(GCCauseString(gc_cause_prefix, gc_cause()), PrintGCDetails, false, NULL);

gc_prologue(complete);

increment_total_collections(complete);

size_t gch_prev_used = used();

int starting_level = 0;

if (full) {

// Search for the oldest generation which will collect all younger

// generations, and start collection loop there.

for (int i = max_level; i >= 0; i--) {

if (_gens[i]->full_collects_younger_generations()) {

starting_level = i;

break;

}

}

}

bool must_restore_marks_for_biased_locking = false;

int max_level_collected = starting_level;

for (int i = starting_level; i <= max_level; i++) {

if (_gens[i]->should_collect(full, size, is_tlab)) {

if (i == n_gens() - 1) { // a major collection is to happen

if (!complete) {

// The full_collections increment was missed above.

increment_total_full_collections();

}

pre_full_gc_dump(NULL); // do any pre full gc dumps

}

// Timer for individual generations. Last argument is false: no CR

// FIXME: We should try to start the timing earlier to cover more of the GC pause

GCTraceTime t1(_gens[i]->short_name(), PrintGCDetails, false, NULL);

TraceCollectorStats tcs(_gens[i]->counters());

TraceMemoryManagerStats tmms(_gens[i]->kind(),gc_cause());

size_t prev_used = _gens[i]->used();

_gens[i]->stat_record()->invocations++;

_gens[i]->stat_record()->accumulated_time.start();

// Must be done anew before each collection because

// a previous collection will do mangling and will

// change top of some spaces.

record_gen_tops_before_GC();

if (PrintGC && Verbose) {

gclog_or_tty->print("level=%d invoke=%d size=" SIZE_FORMAT,

i,

_gens[i]->stat_record()->invocations,

size*HeapWordSize);

}

if (VerifyBeforeGC && i >= VerifyGCLevel &&

total_collections() >= VerifyGCStartAt) {

HandleMark hm; // Discard invalid handles created during verification

if (!prepared_for_verification) {

prepare_for_verify();

prepared_for_verification = true;

}

Universe::verify(" VerifyBeforeGC:");

}

COMPILER2_PRESENT(DerivedPointerTable::clear());

if (!must_restore_marks_for_biased_locking &&

_gens[i]->performs_in_place_marking()) {

// We perform this mark word preservation work lazily

// because it's only at this point that we know whether we

// absolutely have to do it; we want to avoid doing it for

// scavenge-only collections where it's unnecessary

must_restore_marks_for_biased_locking = true;

BiasedLocking::preserve_marks();

}

// Do collection work

{

// Note on ref discovery: For what appear to be historical reasons,

// GCH enables and disabled (by enqueing) refs discovery.

// In the future this should be moved into the generation's

// collect method so that ref discovery and enqueueing concerns

// are local to a generation. The collect method could return

// an appropriate indication in the case that notification on

// the ref lock was needed. This will make the treatment of

// weak refs more uniform (and indeed remove such concerns

// from GCH). XXX

HandleMark hm; // Discard invalid handles created during gc

save_marks(); // save marks for all gens

// We want to discover references, but not process them yet.

// This mode is disabled in process_discovered_references if the

// generation does some collection work, or in

// enqueue_discovered_references if the generation returns

// without doing any work.

ReferenceProcessor* rp = _gens[i]->ref_processor();

// If the discovery of ("weak") refs in this generation is

// atomic wrt other collectors in this configuration, we

// are guaranteed to have empty discovered ref lists.

if (rp->discovery_is_atomic()) {

rp->enable_discovery(true /*verify_disabled*/, true /*verify_no_refs*/);

rp->setup_policy(do_clear_all_soft_refs);

} else {

// collect() below will enable discovery as appropriate

}

_gens[i]->collect(full, do_clear_all_soft_refs, size, is_tlab);

if (!rp->enqueuing_is_done()) {

rp->enqueue_discovered_references();

} else {

rp->set_enqueuing_is_done(false);

}

rp->verify_no_references_recorded();

}

max_level_collected = i;

// Determine if allocation request was met.

if (size > 0) {

if (!is_tlab || _gens[i]->supports_tlab_allocation()) {

if (size*HeapWordSize <= _gens[i]->unsafe_max_alloc_nogc()) {

size = 0;

}

}

}

COMPILER2_PRESENT(DerivedPointerTable::update_pointers());

_gens[i]->stat_record()->accumulated_time.stop();

update_gc_stats(i, full);

if (VerifyAfterGC && i >= VerifyGCLevel &&

total_collections() >= VerifyGCStartAt) {

HandleMark hm; // Discard invalid handles created during verification

Universe::verify(" VerifyAfterGC:");

}

if (PrintGCDetails) {

gclog_or_tty->print(":");

_gens[i]->print_heap_change(prev_used);

}

}

}

// Update "complete" boolean wrt what actually transpired --

// for instance, a promotion failure could have led to

// a whole heap collection.

complete = complete || (max_level_collected == n_gens() - 1);

if (complete) { // We did a "major" collection

// FIXME: See comment at pre_full_gc_dump call

post_full_gc_dump(NULL); // do any post full gc dumps

}

if (PrintGCDetails) {

print_heap_change(gch_prev_used);

// Print metaspace info for full GC with PrintGCDetails flag.

if (complete) {

MetaspaceAux::print_metaspace_change(metadata_prev_used);

}

}

for (int j = max_level_collected; j >= 0; j -= 1) {

// Adjust generation sizes.

_gens[j]->compute_new_size();

}

if (complete) {

// Delete metaspaces for unloaded class loaders and clean up loader_data graph

ClassLoaderDataGraph::purge();

MetaspaceAux::verify_metrics();

// Resize the metaspace capacity after full collections

MetaspaceGC::compute_new_size();

update_full_collections_completed();

}

// Track memory usage and detect low memory after GC finishes

MemoryService::track_memory_usage();

gc_epilogue(complete);

if (must_restore_marks_for_biased_locking) {

BiasedLocking::restore_marks();

}

}

AdaptiveSizePolicy* sp = gen_policy()->size_policy();

AdaptiveSizePolicyOutput(sp, total_collections());

print_heap_after_gc();

#ifdef TRACESPINNING

ParallelTaskTerminator::print_termination_counts();

#endif

}
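Two loops in do_collection() decide which generations are actually collected: a downward search for the oldest generation that also collects all younger ones (only for a full collection), followed by an upward loop from that starting level to max_level. A simplified sketch of just that control flow, assuming each generation's answers can be reduced to two booleans; Gen and levels_to_collect are hypothetical names, not HotSpot identifiers.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a Generation, reduced to the two questions
// that do_collection() asks of it.
struct Gen {
    bool full_collects_younger;  // models full_collects_younger_generations()
    bool wants_collection;       // models should_collect(full, size, is_tlab)
};

// Returns the generation levels that would be collected, in order.
std::vector<int> levels_to_collect(const std::vector<Gen>& gens,
                                   bool full, int max_level) {
    int starting_level = 0;
    if (full) {
        // Search downward for the oldest generation that covers all
        // younger ones, and start the collection loop there.
        for (int i = max_level; i >= 0; i--) {
            if (gens[static_cast<std::size_t>(i)].full_collects_younger) {
                starting_level = i;
                break;
            }
        }
    }
    std::vector<int> collected;
    for (int i = starting_level; i <= max_level; i++) {
        if (gens[static_cast<std::size_t>(i)].wants_collection) {
            collected.push_back(i);
        }
    }
    return collected;
}
```

In a two-generation heap where the old generation reports that it collects the young one as well, a full collection in this sketch visits only level 1, while a non-full request with max_level 0 visits only level 0.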
