Introduction

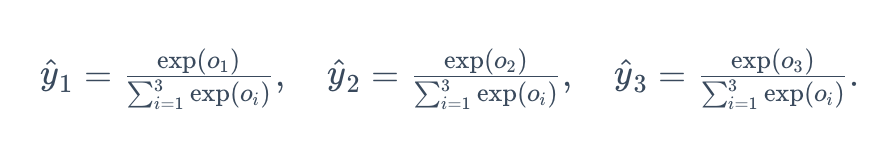

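Softmax regression is closely related to ordinary linear regression, because both compute an affine function of the input. Linear regression outputs a single continuous value. Softmax regression instead outputs one score per class and uses softmax to turn those scores into a probability distribution, which makes it suitable for multi-class classification.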

$$o^{(i)} = x^{(i)} W + b, \qquad \hat{y}^{(i)} = \mathrm{softmax}(o^{(i)}), \qquad \mathrm{softmax}(o)_j = \frac{\exp(o_j)}{\sum_k \exp(o_k)}$$
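Here is a hand-sized example of $o = xW + b$ followed by softmax for a single sample. It is written in plain Python rather than PyTorch. The values of `x`, `W` and `b` are made up for illustration, and the helper names `affine` and `softmax` are hypothetical. The affine step produces one score per class, and softmax rescales those scores into probabilities that sum to 1.

```python
import math

def affine(x, W, b):
    """Compute o = xW + b for one sample x (length d), W (d x q) and b (length q)."""
    return [sum(x[k] * W[k][j] for k in range(len(x))) + b[j] for j in range(len(b))]

def softmax(o):
    """Exponentiate each score and divide by the total, giving probabilities that sum to 1."""
    exps = [math.exp(v) for v in o]
    total = sum(exps)
    return [e / total for e in exps]

# Toy, hypothetical numbers: 2 input features, 3 classes
x = [1.0, 2.0]
W = [[0.1, 0.2, 0.3],
     [0.4, 0.5, 0.6]]
b = [0.0, 0.0, 0.0]

o = affine(x, W, b)   # approximately [0.9, 1.2, 1.5]
y_hat = softmax(o)    # the largest score gets the largest probability
print(o, y_hat, sum(y_hat))
```

Softmax preserves the ordering of the scores, so the predicted class (the argmax) is the same whether you look at `o` or at `y_hat`.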

Softmax regression from scratch

Compared with the book's original code, I made some changes to fit my own setup.

```python
import torch
import torchvision
import numpy as np
import d2l  # the book's helper package, copied locally and slightly modified

num_epochs, lr = 5, 0.1
batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

num_inputs = 784   # each 28x28 image is flattened into a 784-dim vector
num_outputs = 10   # 10 classes

# Parameters
W = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_outputs)), dtype=torch.float)  # random init from N(0, 0.01^2)
b = torch.zeros(num_outputs, dtype=torch.float)
W.requires_grad_(requires_grad=True)
b.requires_grad_(requires_grad=True)

# Model
def softmax(X):
    X_exp = X.exp()
    partition = X_exp.sum(dim=1, keepdim=True)  # keepdim keeps shape (batch, 1)
    return X_exp / partition  # broadcasting divides each row by its own sum

def net(X):
    return softmax(torch.mm(X.view((-1, num_inputs)), W) + b)

# Loss function
def cross_entropy(y_hat, y):  # y: shape (batch_size,), class indices
    p = y_hat.gather(1, y.view(-1, 1))  # p: shape (batch_size, 1), predicted probability of the true class
    return -torch.log(p)

# With one-hot labels (1 for the true class, 0 elsewhere), cross-entropy reduces to
# -log of the predicted probability of the true class. The dataset stores labels as
# class indices rather than one-hot vectors, so gather picks out that probability.

# Saved in the d2lzh_pytorch package for later use. This function is improved step
# by step; its full implementation is described in the "Image Augmentation" section.
def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():  # evaluation needs no gradients
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n

# Saved in the d2lzh package for later use
def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
              params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)  # shape (batch_size, num_outputs)
            l = loss(y_hat, y).sum()

            # Zero the gradients
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                d2l.sgd(params, lr, batch_size)
            else:
                optimizer.step()  # used in the "concise implementation of softmax regression" section

            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()  # training accuracy
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, batch_size, [W, b], lr)
```
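The `softmax` above calls `X.exp()` directly, so very large scores can overflow. In PyTorch this shows up as `inf` and then `nan` rather than as an exception. A common remedy, which is not part of the original code, is to subtract each row's maximum before exponentiating. Softmax gives the same result when the same constant is added to every score, so this change is safe. The plain-Python sketch below uses hypothetical function names and compares a naive version with the shifted one.

```python
import math

def softmax_naive(o):
    exps = [math.exp(v) for v in o]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_stable(o):
    # exp(o_j - c) / sum_k exp(o_k - c) == exp(o_j) / sum_k exp(o_k) for any c.
    # Choosing c = max(o) keeps every exponent <= 0, so math.exp cannot overflow.
    m = max(o)
    exps = [math.exp(v - m) for v in o]
    total = sum(exps)
    return [e / total for e in exps]

small = [1.0, 2.0, 3.0]
large = [1000.0, 1001.0, 1002.0]

# Both versions agree on ordinary inputs
print(softmax_naive(small))
print(softmax_stable(small))

# math.exp(1000.0) raises OverflowError in the naive version; the stable one is fine
try:
    softmax_naive(large)
except OverflowError:
    print("naive softmax overflowed")
print(softmax_stable(large))  # same probabilities as for `small`
```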

The y.gather(dim, index) function

An example:

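```python
import torch

a = torch.randint(0, 30, (2, 3, 5))
print(a)

index = torch.tensor([[[0, 1, 2, 0, 2],
                       [0, 0, 0, 0, 0],
                       [1, 1, 1, 1, 1]],
                      [[1, 2, 2, 2, 2],
                       [0, 0, 0, 0, 0],
                       [2, 2, 2, 2, 2]]], dtype=torch.long)
b = torch.gather(a, 1, index)
print(b)

# dim=1, so only the second coordinate is taken from index; the others are kept:
#     b[i][j][k] = a[i][index[i][j][k]][k]
# e.g. index[0][0][1] == 1, so b[0][0][1] takes the value of a[0][1][1]
# (only the dim coordinate changes).
```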
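Here is a plain-Python version of the same rule for 3-D nested lists, with a hypothetical helper name `gather_dim1`. The output has the shape of `index`, and each entry replaces only the dim-1 coordinate. The last lines show what `y_hat.gather(1, y.view(-1, 1))` in `cross_entropy` computes in the 2-D case: for each row, the entry in the label's column. The toy numbers are made up.

```python
def gather_dim1(a, index):
    """Mirror of torch.gather(a, 1, index) for 3-D nested lists:
    out[i][j][k] = a[i][index[i][j][k]][k]."""
    return [[[a[i][index[i][j][k]][k]
              for k in range(len(index[i][j]))]
             for j in range(len(index[i]))]
            for i in range(len(index))]

a = [[[0, 1, 2],
      [10, 11, 12]],
     [[20, 21, 22],
      [30, 31, 32]]]
index = [[[1, 0, 1]],
         [[0, 0, 1]]]
out = gather_dim1(a, index)
print(out)  # [[[10, 1, 12]], [[20, 21, 32]]]

# 2-D case used by cross_entropy: pick each row's probability at its label
y_hat = [[0.1, 0.3, 0.6],
         [0.3, 0.2, 0.5]]
y = [0, 2]
picked = [row[label] for row, label in zip(y_hat, y)]
print(picked)  # [0.1, 0.5]
```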

Reference: https://www.jianshu.com/p/5d1f8cd5fe31

Concise implementation of softmax regression

```python
import torch
from torch import nn
from torch.nn import init
import numpy as np
import d2l

batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
num_inputs = 784
num_outputs = 10

# Model
class LinearNet(nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super(LinearNet, self).__init__()
        self.linear = nn.Linear(num_inputs, num_outputs)

    def forward(self, x):  # x shape: (batch, 1, 28, 28)
        y = self.linear(x.view(x.shape[0], -1))  # flatten to (batch, 784); returns raw scores (logits)
        return y

net = LinearNet(num_inputs, num_outputs)

# Initialization
init.normal_(net.linear.weight, mean=0, std=0.01)
init.constant_(net.linear.bias, val=0)

# Loss function: applies log-softmax internally and averages over the batch by default
loss = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)

# Training
num_epochs = 5

def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():  # evaluation needs no gradients
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
              params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()  # nn.CrossEntropyLoss already returns the batch mean, so .sum() changes nothing

            # Zero the gradients
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                d2l.sgd(params, lr, batch_size)
            else:
                optimizer.step()  # used in the "concise implementation of softmax regression" section

            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()  # training accuracy
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        # Note: train_l_sum adds up batch means, so with this loss the printed value
        # is roughly the per-sample loss divided by batch_size.
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, optimizer)  # same train_ch3 as the previous section, also packaged in d2l
```
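`LinearNet` returns raw scores (logits) with no softmax. This works because `nn.CrossEntropyLoss` applies log-softmax internally, takes the negative log-likelihood of the target class, and by default averages over the batch. The plain-Python sketch below is not PyTorch code, and its helper names are hypothetical. It checks that this "logits + log-softmax" route gives the same number as the from-scratch route, which applies softmax first and then `-log` of the true-class probability.

```python
import math

def log_softmax(o):
    # log softmax(o)_j = o_j - logsumexp(o), computed with the max shift for stability
    m = max(o)
    lse = m + math.log(sum(math.exp(v - m) for v in o))
    return [v - lse for v in o]

def cross_entropy_from_logits(logits, labels):
    """Batch mean of -log_softmax(o)[y], like nn.CrossEntropyLoss's default reduction."""
    losses = [-log_softmax(o)[y] for o, y in zip(logits, labels)]
    return sum(losses) / len(losses)

def cross_entropy_from_probs(logits, labels):
    """From-scratch route: softmax first, then -log of the true-class probability."""
    losses = []
    for o, y in zip(logits, labels):
        exps = [math.exp(v) for v in o]
        losses.append(-math.log(exps[y] / sum(exps)))
    return sum(losses) / len(losses)

logits = [[2.0, 0.5, -1.0],
          [0.1, 0.2, 3.0]]
labels = [0, 2]
print(cross_entropy_from_logits(logits, labels))
print(cross_entropy_from_probs(logits, labels))  # same value up to rounding
```

Fusing log and softmax also avoids taking `log` of a probability that has underflowed to 0. That is the main reason to prefer feeding logits to the loss.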

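Multilayer perceptron

The models so far are single-layer neural networks. A multilayer neural network adds one or more hidden layers on top of a single-layer network.

As can be seen above, each extra layer adds another affine transformation to the stack. Each hidden layer must be followed by a nonlinear activation function, otherwise the stacked affine maps collapse into a single affine map.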
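Without an activation function, two affine layers are equivalent to one. $H = XW_1 + b_1$ followed by $O = HW_2 + b_2$ equals a single layer with $W = W_1W_2$ and $b = b_1W_2 + b_2$. The plain-Python sketch below uses hand-picked toy matrices and hypothetical helper names to check this. It then shows that putting ReLU between the layers breaks the equivalence.

```python
def matmul(A, B):
    """Multiply an (n x k) by a (k x m) matrix given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def add_bias(A, b):
    """Add bias vector b to every row of A (broadcasting)."""
    return [[v + b[j] for j, v in enumerate(row)] for row in A]

def relu(A):
    return [[max(v, 0.0) for v in row] for row in A]

# Toy, hypothetical values: 1 sample, 2 inputs, 2 hidden units, 1 output
X = [[1.0, -2.0]]
W1 = [[1.0, -1.0], [2.0, 1.0]]
b1 = [0.5, 0.0]
W2 = [[1.0], [1.0]]
b2 = [0.5]

# Two affine layers with no activation ...
two_layers = add_bias(matmul(add_bias(matmul(X, W1), b1), W2), b2)
# ... equal one affine layer with W = W1 W2 and b = b1 W2 + b2
W = matmul(W1, W2)
b = add_bias(matmul([b1], W2), b2)[0]
one_layer = add_bias(matmul(X, W), b)

# With ReLU between the layers the result differs (negative hidden values become 0)
with_relu = add_bias(matmul(relu(add_bias(matmul(X, W1), b1)), W2), b2)
print(two_layers, one_layer, with_relu)  # [[-5.0]] [[-5.0]] [[0.5]]
```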

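Multilayer perceptron from scratch

The multilayer perceptron grows out of the single-layer network, so much of the code is the same. The differences are the extra weights and biases for the hidden layer, the activation function, and a slightly changed network structure. We still use the same dataset (Fashion-MNIST).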

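```python
# Incomplete: only the parts that differ from softmax regression
import torch
import numpy as np

# Weights and biases for the hidden layer and the output layer
num_inputs, num_outputs, num_hiddens = 784, 10, 256
W1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)
b1 = torch.zeros(num_hiddens, dtype=torch.float)
W2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)
b2 = torch.zeros(num_outputs, dtype=torch.float)

params = [W1, b1, W2, b2]
for param in params:
    param.requires_grad_(requires_grad=True)

# Activation function: element-wise max(x, 0)
def relu(X):
    return torch.max(input=X, other=torch.tensor(0.0))

# Network
def net(X):
    X = X.view((-1, num_inputs))
    H = relu(torch.matmul(X, W1) + b1)  # hidden layer, shape (batch, num_hiddens)
    # Raw scores (logits), shape (batch, num_outputs). There is no softmax here, so
    # train with a loss that applies it internally, such as nn.CrossEntropyLoss.
    return torch.matmul(H, W2) + b2
```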
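The sketch below traces tensor shapes through `net(X)` for one batch, as a quick sanity check of the layer sizes. It assumes a batch of 256 Fashion-MNIST images of shape (1, 28, 28). The helpers `flatten_shape` and `matmul_shape` are hypothetical plain-Python stand-ins for the shape rules of `view((-1, num_inputs))` and 2-D `torch.matmul`.

```python
def flatten_shape(shape, num_inputs):
    """Shape after X.view((-1, num_inputs)); -1 is inferred from the element count."""
    total = 1
    for d in shape:
        total *= d
    assert total % num_inputs == 0, "element count must be divisible by num_inputs"
    return (total // num_inputs, num_inputs)

def matmul_shape(a, b):
    """Shape rule of 2-D matmul: (n, k) @ (k, m) -> (n, m)."""
    assert a[1] == b[0], "inner dimensions must match"
    return (a[0], b[1])

num_inputs, num_outputs, num_hiddens = 784, 10, 256
X_shape = (256, 1, 28, 28)                            # assumed batch of images
X_flat = flatten_shape(X_shape, num_inputs)           # (256, 784)
H = matmul_shape(X_flat, (num_inputs, num_hiddens))   # (256, 256); adding b1 and relu keep this shape
O = matmul_shape(H, (num_hiddens, num_outputs))       # (256, 10): one score per class
print(X_flat, H, O)
```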

Concise multilayer perceptron

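```python
# Incomplete: only the parts that differ
import torch
from torch import nn
import d2l

num_inputs, num_outputs, num_hiddens = 784, 10, 256

# The network; each nn.Linear creates and initializes its own parameters
net = nn.Sequential(
    d2l.FlattenLayer(),  # (batch, 1, 28, 28) -> (batch, 784); nn.Flatten() does the same in recent PyTorch
    nn.Linear(num_inputs, num_hiddens),
    nn.ReLU(),
    nn.Linear(num_hiddens, num_outputs),
)
```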
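The hidden layer makes the model much larger. `nn.Linear(n_in, n_out)` stores a weight of shape (n_out, n_in) and a bias of length n_out, while the flatten layer and `nn.ReLU` have no parameters. The sketch below counts parameters using a hypothetical helper `linear_params`. With the PyTorch model built, the same totals should come out of `sum(p.numel() for p in net.parameters())`.

```python
def linear_params(n_in, n_out):
    # weight (n_out x n_in) plus bias (n_out)
    return n_in * n_out + n_out

softmax_params = linear_params(784, 10)                          # LinearNet above
mlp_params = linear_params(784, 256) + linear_params(256, 10)    # the MLP
print(softmax_params, mlp_params)  # 7850 203530
```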
