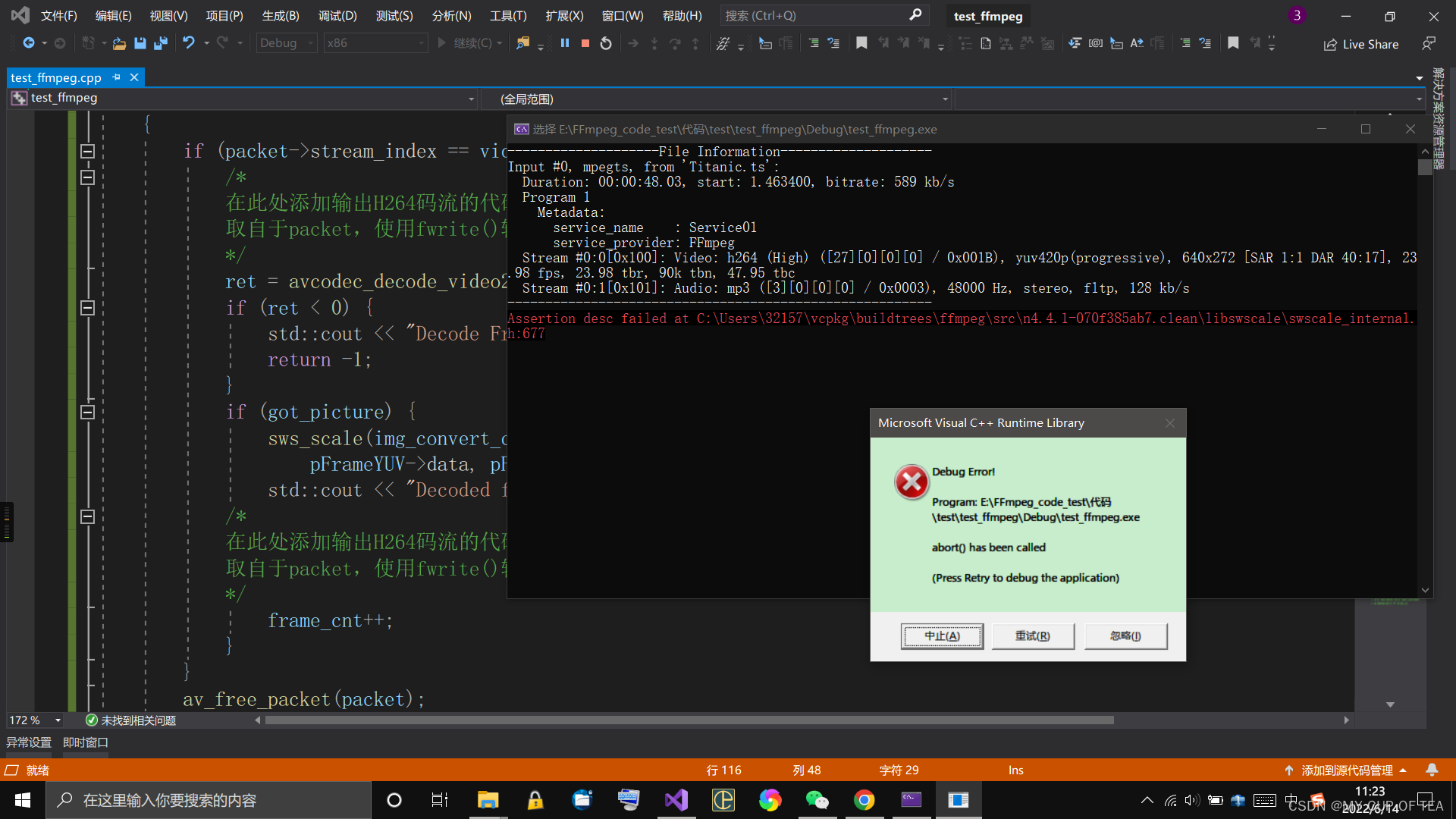

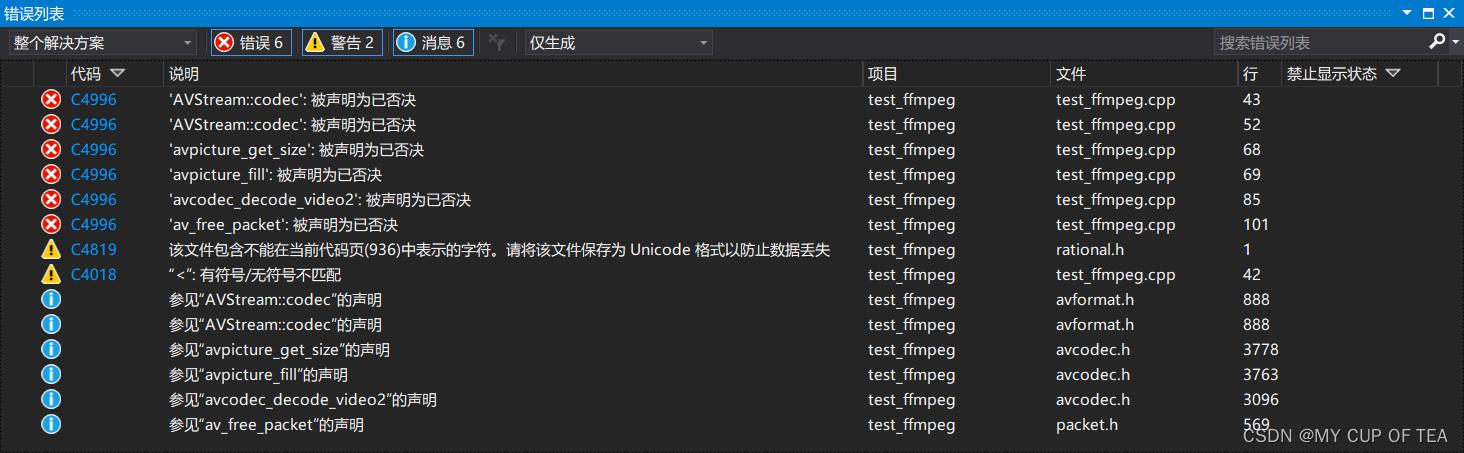

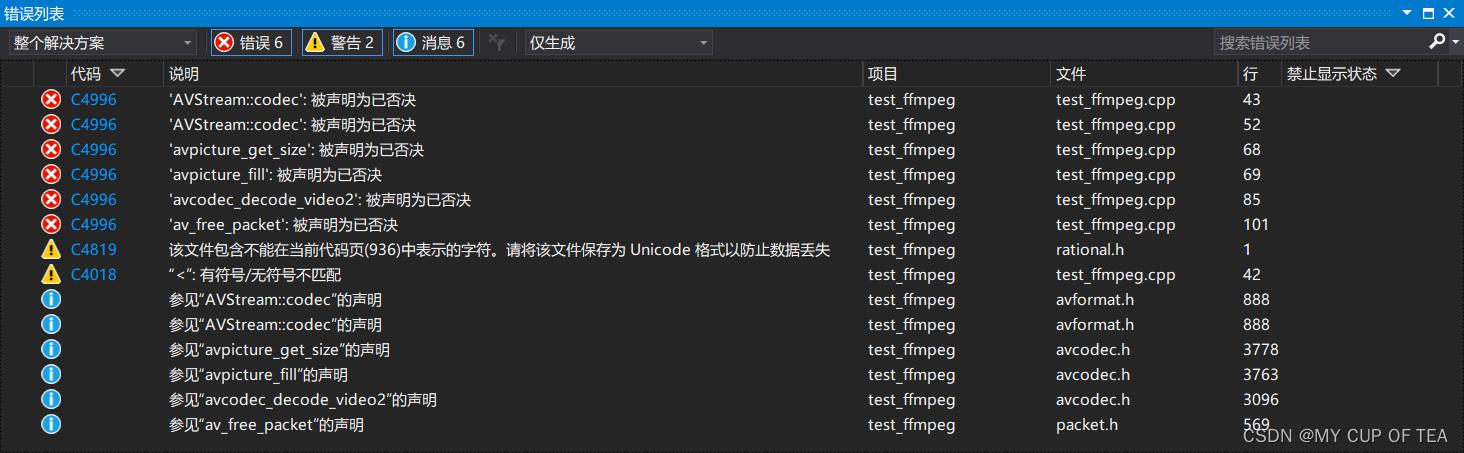

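Old code

- The old code uses many outdated APIs. Visual Studio reports every use of them as compiler warning (level 3) C4996, and with SDL checks (/sdl) enabled, as in Visual Studio's default project templates, that warning is treated as an error.

- In other words, "function was declared deprecated" is reported as C4996.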
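FFmpeg marks its old functions with its attribute_deprecated macro, which on MSVC expands to a deprecation attribute, so every call site produces C4996. As a minimal, FFmpeg-free sketch of that mechanism and of the usual migration pattern, the block below uses the standard C++14 `[[deprecated]]` attribute on two hypothetical functions (old_plane_size and new_plane_size are illustrative names, not FFmpeg APIs).

```cpp
#include <cassert>

// Hypothetical replacement API: byte size of a width x height plane with 1 byte per sample.
int new_plane_size(int width, int height) {
    assert(width >= 0 && height >= 0);
    return width * height;
}

// Hypothetical old API kept for compatibility. Any call to it makes MSVC emit
// C4996 ("was declared deprecated"); GCC/Clang emit -Wdeprecated-declarations.
[[deprecated("use new_plane_size() instead")]]
int old_plane_size(int width, int height) {
    return new_plane_size(width, height);  // same result, old name
}

// Migrated call site: it uses the replacement, so no deprecation warning is produced
// and no #pragma warning(disable: 4996) is needed.
int frame_luma_bytes(int width, int height) {
    return new_plane_size(width, height);
}
```

Calling old_plane_size() anywhere would bring the warning back; switching call sites to the replacement, as the rest of this article does for FFmpeg, removes it at the source instead of silencing it.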

```cpp
// test_ffmpeg.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
#define SDL_MAIN_HANDLED
#define __STDC_CONSTANT_MACROS
#pragma warning(disable: 4996)

#include <iostream>
#include <SDL2/SDL.h>

extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
}

int main(int argc,char* argv[])
{
    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext* pCodeCtx;
    AVCodec* pCodec;
    AVFrame* pFrame, *pFrameYUV;
    uint8_t* out_buffer;
    AVPacket* packet;
    //int y_size;
    int ret, got_picture;
    struct SwsContext* img_convert_ctx;
    // Path of the input file
    char filepath[] = "Titanic.ts";
    int frame_cnt;

    avformat_network_init();
    pFormatCtx = avformat_alloc_context();
    if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0) {
        std::cout << "Couldn't open input stream." << std::endl;
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        std::cout << "Couldn't find stream information." << std::endl;
        return -1;
    }
    videoindex = -1;
    for (i = 0;i < pFormatCtx->nb_streams;i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1) {
        std::cout << "Didn't find a video stream." << std::endl;
        return -1;
    }
    pCodeCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodeCtx->codec_id);
    if (pCodec == NULL) {
        std::cout << "Codec not found!" << std::endl;
        return -1;
    }
    if (avcodec_open2(pCodeCtx, pCodec, NULL) < 0) {
        std::cout << "Could not open codec!" << std::endl;
        return -1;
    }
    /*
    Add code here to print the video information,
    taken from pFormatCtx, using std::cout
    */
    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    out_buffer = (uint8_t*)av_malloc(avpicture_get_size(AV_PIX_FMT_YUV420P, pCodeCtx->width, pCodeCtx->height));
    avpicture_fill((AVPicture*)pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodeCtx->width, pCodeCtx->height);
    packet = (AVPacket*)av_malloc(sizeof(AVPacket));
    //Output Info
    std::cout << "--------------------File Information--------------------" << std::endl;
    av_dump_format(pFormatCtx, 0, filepath, 0);
    std::cout << "--------------------------------------------------------" << std::endl;
    img_convert_ctx = sws_getContext(pCodeCtx->width, pCodeCtx->height, pCodeCtx->sw_pix_fmt,
        pCodeCtx->width, pCodeCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
    frame_cnt = 0;
    while (av_read_frame(pFormatCtx,packet)>=0)
    {
        if (packet->stream_index == videoindex) {
            /*
            Add code here to write the H.264 bitstream,
            taken from packet, using fwrite()
            */
            ret = avcodec_decode_video2(pCodeCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                std::cout << "Decode Error!" << std::endl;
                return -1;
            }
            if (got_picture) {
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodeCtx->height,
                    pFrameYUV->data, pFrameYUV->linesize);
                std::cout << "Decoded frame index: " << frame_cnt << std::endl;
                /*
                Add code here to write the YUV data,
                taken from pFrameYUV, using fwrite()
                */
                frame_cnt++;
            }
        }
        av_free_packet(packet);
    }
    sws_freeContext(img_convert_ctx);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodeCtx);
    avformat_close_input(&pFormatCtx);
    return 0;
}

// Run program: Ctrl + F5 or Debug > "Start Without Debugging" menu
// Debug program: F5 or Debug > "Start Debugging" menu
// Tips for Getting Started:
//   1. Use the Solution Explorer window to add/manage files
//   2. Use the Team Explorer window to connect to source control
//   3. Use the Output window to see build output and other messages
//   4. Use the Error List window to view errors
//   5. Go to Project > Add New Item to create new code files, or Project > Add Existing Item to add existing code files to the project
//   6. In the future, to open this project again, go to File > Open > Project and select the .sln file
```

Corresponding changes

- Reference: the CSDN blog post "FFmpeg 被声明为已否决 deprecated" ("FFmpeg: declared deprecated") by Louis_815.

- It lists the replacement for each deprecated API; search it with Ctrl+F for the function name, and the explanations are in English.

- PIX_FMT_YUV420P -> AV_PIX_FMT_YUV420P

- 'AVStream::codec': was declared deprecated:

  - `if(pFormatCtx->streams[i]->codec->codec_type==AVMEDIA_TYPE_VIDEO){`
  - =>
  - `if(pFormatCtx->streams[i]->codecpar->codec_type==AVMEDIA_TYPE_VIDEO){`

- 'AVStream::codec': was declared deprecated:

  - `pCodecCtx = pFormatCtx->streams[videoindex]->codec;`
  - =>
  - `pCodecCtx = avcodec_alloc_context3(NULL);`
  - `avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[videoindex]->codecpar);`

- 'avpicture_get_size': was declared deprecated:

  - `avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height)`
  - =>
  - `#include "libavutil/imgutils.h"`
  - `av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1)`

- 'avpicture_fill': was declared deprecated:

  - `avpicture_fill((AVPicture *)pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);`
  - =>
  - `av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);`

- 'avcodec_decode_video2': was declared deprecated:

  - `ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet); //got_picture_ptr Zero if no frame could be decompressed`
  - =>
  - `ret = avcodec_send_packet(pCodecCtx, packet);`
  - `got_picture = avcodec_receive_frame(pCodecCtx, pFrame); //got_picture = 0 success, a frame was returned`
  - Note: got_picture now has the opposite meaning: 0 means a frame was returned.
  - Or, to receive every frame a packet produces (pkt is an AVPacket* and frame an AVFrame* allocated with av_frame_alloc()):

    ```cpp
    int ret = avcodec_send_packet(aCodecCtx, pkt);
    if (ret != 0)
    {
        printf("%s\n", "error");
        return;
    }
    while (avcodec_receive_frame(aCodecCtx, frame) == 0) {
        // Got one decoded audio or video frame
        // Process the decoded frame here
    }
    ```

- 'av_free_packet': was declared deprecated:

  - `av_free_packet(packet);`
  - =>
  - `av_packet_unref(packet);`

- Not used in this article's code:

  - 'avcodec_decode_audio4': was declared deprecated. It is replaced by the same avcodec_send_packet() / avcodec_receive_frame() pair:

    ```cpp
    int ret = avcodec_send_packet(aCodecCtx, pkt);
    if (ret != 0) { printf("%s\n", "error"); }
    while (avcodec_receive_frame(aCodecCtx, frame) == 0) {
        // Got one decoded audio or video frame
        // Process the decoded frame here
    }
    ```
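The contract behind the send/receive pair is easier to see without FFmpeg in the way. The sketch below is a toy model, not libavcodec code: ToyDecoder, Status, Counts, decode_all and the fixed reorder delay are all hypothetical stand-ins for avcodec_send_packet(), avcodec_receive_frame(), AVERROR(EAGAIN) and AVERROR_EOF. It shows the loop shape the FFmpeg documentation describes: after each packet, receive until "try again"; at the end, send one flush packet and receive until end-of-file.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical stand-ins for 0, AVERROR(EAGAIN) and AVERROR_EOF.
enum class Status { Ok, Again, Eof };

// Toy decoder (NOT FFmpeg code): it holds back `delay` frames before emitting any,
// roughly like a decoder that reorders B-frames.
class ToyDecoder {
public:
    explicit ToyDecoder(std::size_t delay) : delay_(delay) {}

    // packet == nullptr plays the role of the flush packet (avcodec_send_packet(ctx, NULL)).
    Status send_packet(const int* packet) {
        if (draining_) return Status::Eof;                 // nothing may follow the flush packet
        if (packet == nullptr) { draining_ = true; return Status::Ok; }
        if (queue_.size() > delay_) return Status::Again;  // caller must receive frames first
        queue_.push_back(*packet);                         // "decode" the packet into a frame
        return Status::Ok;
    }

    // Status::Ok (0 in FFmpeg) means a frame was returned: the opposite of got_picture.
    Status receive_frame(int* frame) {
        if (queue_.empty()) return draining_ ? Status::Eof : Status::Again;
        if (!draining_ && queue_.size() <= delay_) return Status::Again;  // still buffering
        *frame = queue_.front();
        queue_.pop_front();
        return Status::Ok;
    }

private:
    std::size_t delay_;
    bool draining_ = false;
    std::deque<int> queue_;
};

struct Counts { int main_loop; int drained; };

// Send each packet, then receive until Again; finally flush once and receive until Eof.
Counts decode_all(ToyDecoder& dec, int packet_count) {
    Counts c{0, 0};
    int frame = 0;
    for (int p = 0; p < packet_count; ++p) {
        if (dec.send_packet(&p) != Status::Ok) return c;  // error handling kept minimal
        while (dec.receive_frame(&frame) == Status::Ok) ++c.main_loop;
    }
    dec.send_packet(nullptr);                             // enter draining mode exactly once
    while (dec.receive_frame(&frame) == Status::Ok) ++c.drained;
    return c;
}
```

With a delay of 2 and 5 packets, 3 frames come out of the main loop and the last 2 appear only after the flush packet, which is why the decoder has to be drained at the end. Sending a second flush packet returns Status::Eof, mirroring AVERROR_EOF.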

Modified code

```cpp
// test_ffmpeg.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
#define SDL_MAIN_HANDLED
#define __STDC_CONSTANT_MACROS
#pragma warning(disable: 4819)

#include <iostream>
#include <SDL2/SDL.h>

extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h"
}

int main(int argc,char* argv[])
{
    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext* pCodeCtx;
    AVCodec* pCodec;
    AVFrame* pFrame, *pFrameYUV;
    uint8_t* out_buffer;
    AVPacket* packet;
    //int y_size;
    int ret, got_picture;
    struct SwsContext* img_convert_ctx;
    // Path of the input file
    char filepath[] = "Titanic.ts";
    int frame_cnt;

    avformat_network_init();
    pFormatCtx = avformat_alloc_context();
    if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0) {
        std::cout << "Couldn't open input stream." << std::endl;
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        std::cout << "Couldn't find stream information." << std::endl;
        return -1;
    }
    videoindex = -1;
    for (i = 0;i < pFormatCtx->nb_streams;i++) {
        if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1) {
        std::cout << "Didn't find a video stream." << std::endl;
        return -1;
    }
    pCodeCtx = avcodec_alloc_context3(NULL);
    avcodec_parameters_to_context(pCodeCtx, pFormatCtx->streams[videoindex]->codecpar);
    pCodec = avcodec_find_decoder(pCodeCtx->codec_id);
    if (pCodec == NULL) {
        std::cout << "Codec not found!" << std::endl;
        return -1;
    }
    if (avcodec_open2(pCodeCtx, pCodec, NULL) < 0) {
        std::cout << "Could not open codec!" << std::endl;
        return -1;
    }
    /*
    Add code here to print the video information,
    taken from pFormatCtx, using std::cout
    */
    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    out_buffer = (uint8_t*)av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodeCtx->width, pCodeCtx->height,1));
    av_image_fill_arrays(pFrameYUV->data,pFrameYUV->linesize,out_buffer,AV_PIX_FMT_YUV420P,pCodeCtx->width,pCodeCtx->height,1);
    packet = (AVPacket*)av_malloc(sizeof(AVPacket));
    //Output Info
    std::cout << "--------------------File Information--------------------" << std::endl;
    av_dump_format(pFormatCtx, 0, filepath, 0);
    std::cout << "--------------------------------------------------------" << std::endl;
    // Note: sw_pix_fmt is set by libavcodec only when the decoder calls get_format() during
    // decoding, so here it is most likely still AV_PIX_FMT_NONE, whereas pix_fmt was copied
    // from codecpar. Passing NONE to sws_getContext() is a plausible cause of the
    // "Assertion desc failed" error shown below.
    img_convert_ctx = sws_getContext(pCodeCtx->width, pCodeCtx->height, pCodeCtx->sw_pix_fmt,
        pCodeCtx->width, pCodeCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
    frame_cnt = 0;
    while (av_read_frame(pFormatCtx,packet)>=0)
    {
        if (packet->stream_index == videoindex) {
            /*
            Add code here to write the H.264 bitstream,
            taken from packet, using fwrite()
            */
            ret = avcodec_send_packet(pCodeCtx, packet);
            // Note: got_picture == 0 means success, a frame was returned
            got_picture = avcodec_receive_frame(pCodeCtx, pFrame);
            if (ret < 0) {
                std::cout << "Decode Error!" << std::endl;
                return -1;
            }
            // Note: this check is inverted; it is true when NO frame was returned
            // (e.g. AVERROR(EAGAIN)) and should read if (got_picture == 0)
            if (got_picture) {
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodeCtx->height,
                    pFrameYUV->data, pFrameYUV->linesize);
                std::cout << "Decoded frame index: " << frame_cnt << std::endl;
                /*
                Add code here to write the YUV data,
                taken from pFrameYUV, using fwrite()
                */
                frame_cnt++;
            }
        }
        av_packet_unref(packet);
    }
    sws_freeContext(img_convert_ctx);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodeCtx);
    avformat_close_input(&pFormatCtx);
    return 0;
}
```
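With align = 1, av_image_get_buffer_size() and av_image_fill_arrays() lay out an 8-bit YUV420P image as three tightly packed planes: a full-resolution Y plane followed by U and V planes at half the width and half the height, rounded up. The sketch below recomputes that layout by hand to show where the buffer size and the y_size / y_size / 4 plane sizes come from; yuv420p_layout and Yuv420pLayout are hypothetical names, not FFmpeg functions, and the sketch assumes align = 1 and YUV420P only.

```cpp
#include <cassert>
#include <cstddef>

// Byte layout of a tightly packed (align = 1) 8-bit YUV420P image.
struct Yuv420pLayout {
    std::size_t y_size;       // width * height
    std::size_t chroma_size;  // size of the U plane (the V plane is the same size)
    std::size_t u_offset;     // U starts right after Y
    std::size_t v_offset;     // V starts right after U
    std::size_t total;        // expected to match av_image_get_buffer_size(AV_PIX_FMT_YUV420P, w, h, 1)
};

Yuv420pLayout yuv420p_layout(int width, int height) {
    assert(width > 0 && height > 0);
    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t h = static_cast<std::size_t>(height);
    const std::size_t cw = (w + 1) / 2;  // chroma width, rounded up
    const std::size_t ch = (h + 1) / 2;  // chroma height, rounded up
    Yuv420pLayout layout{};
    layout.y_size = w * h;
    layout.chroma_size = cw * ch;
    layout.u_offset = layout.y_size;
    layout.v_offset = layout.u_offset + layout.chroma_size;
    layout.total = layout.v_offset + layout.chroma_size;
    return layout;
}
```

For 1920x1080 this gives 518400 bytes per chroma plane (exactly y_size / 4) and 3110400 bytes per frame. For an odd size such as 5x3 each chroma plane is 6 bytes, while 15 / 4 is 3, so the y_size / 4 shortcut only holds for even dimensions.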

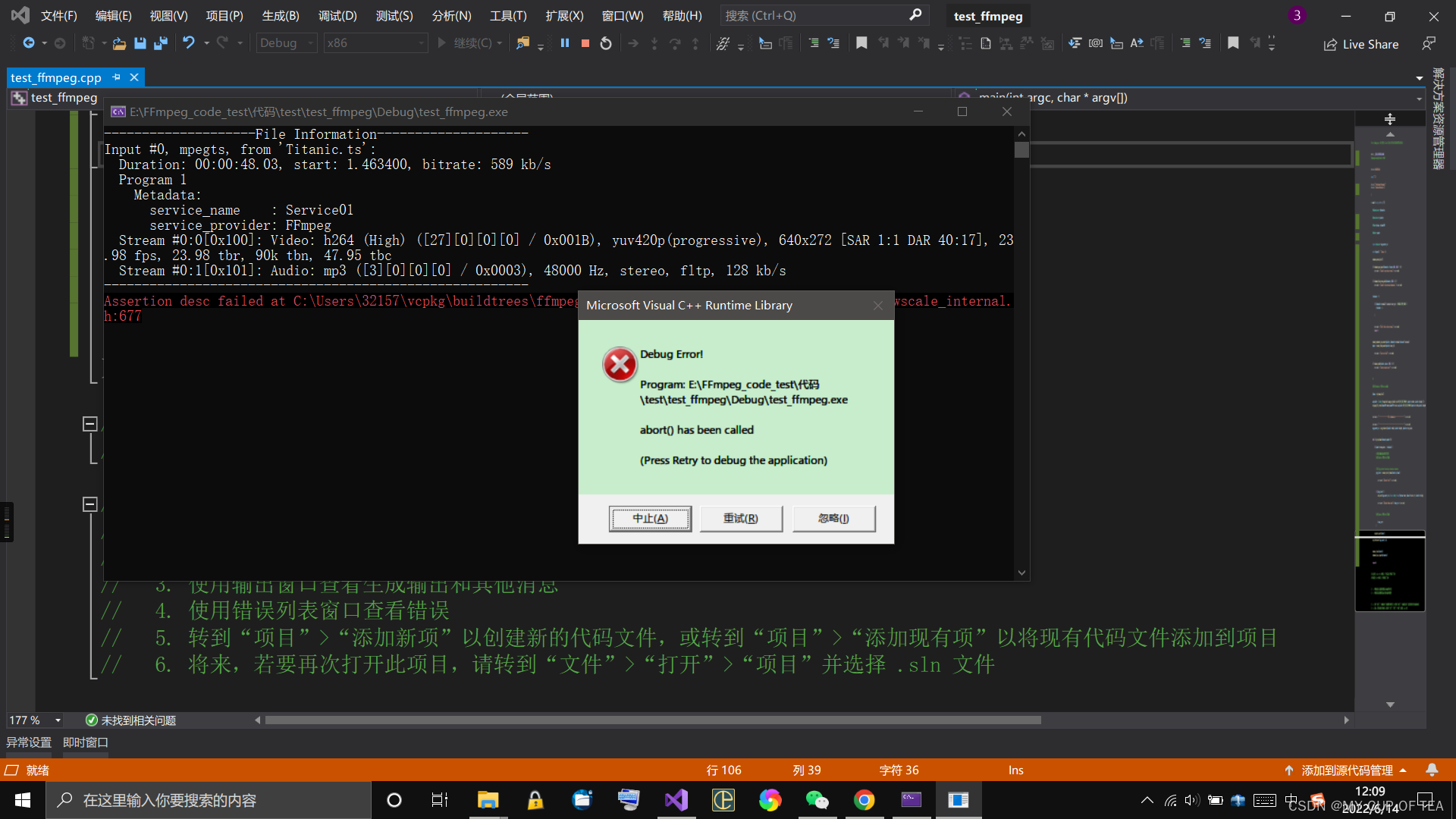

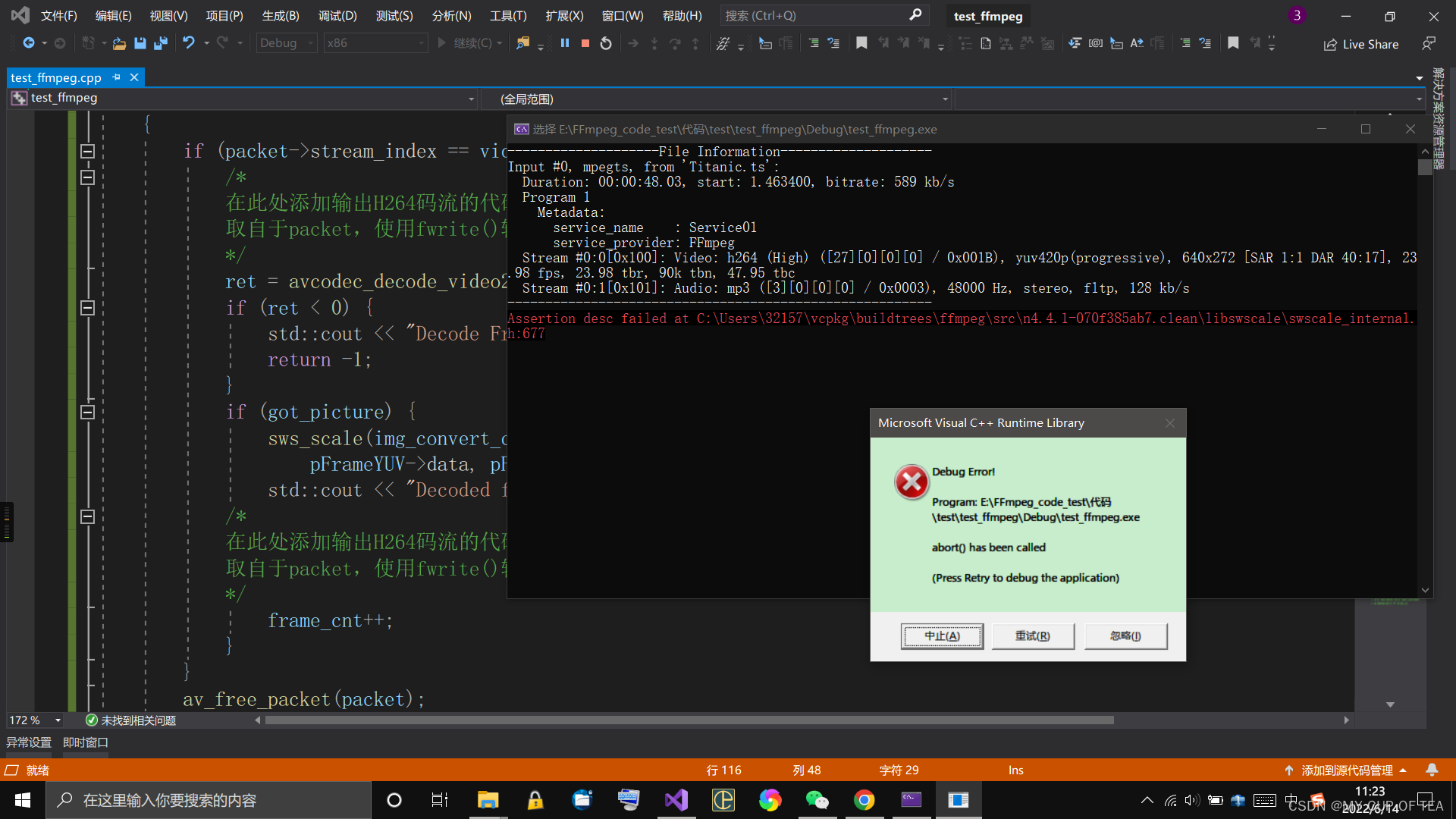

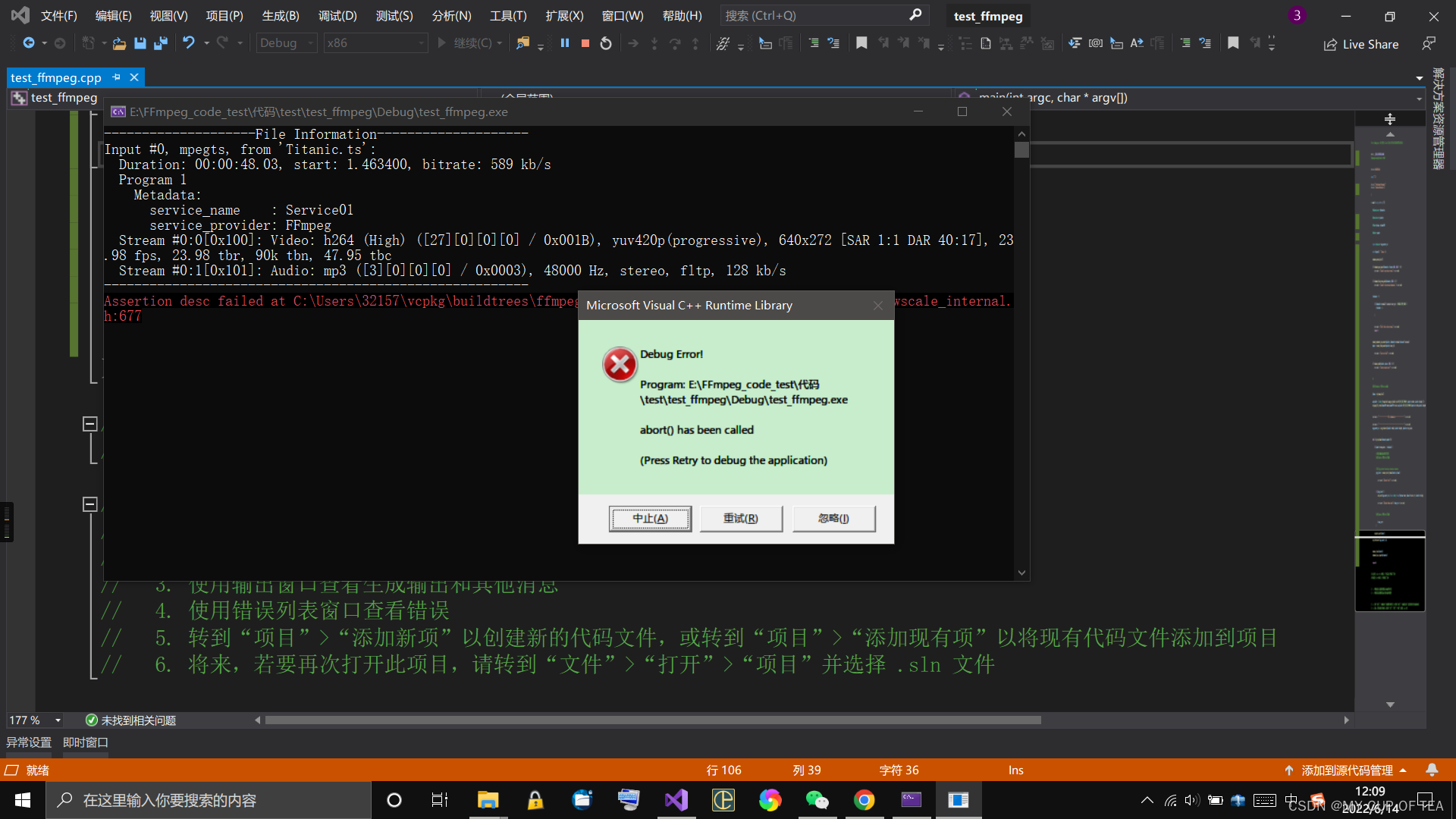

The problem is still not solved

- Still the same error:

- Assertion desc failed at C:\Users\32157\vcpkg\buildtrees\ffmpeg\src\n4.4.1-070f385ab7.clean\libswscale\swscale_internal.h:677

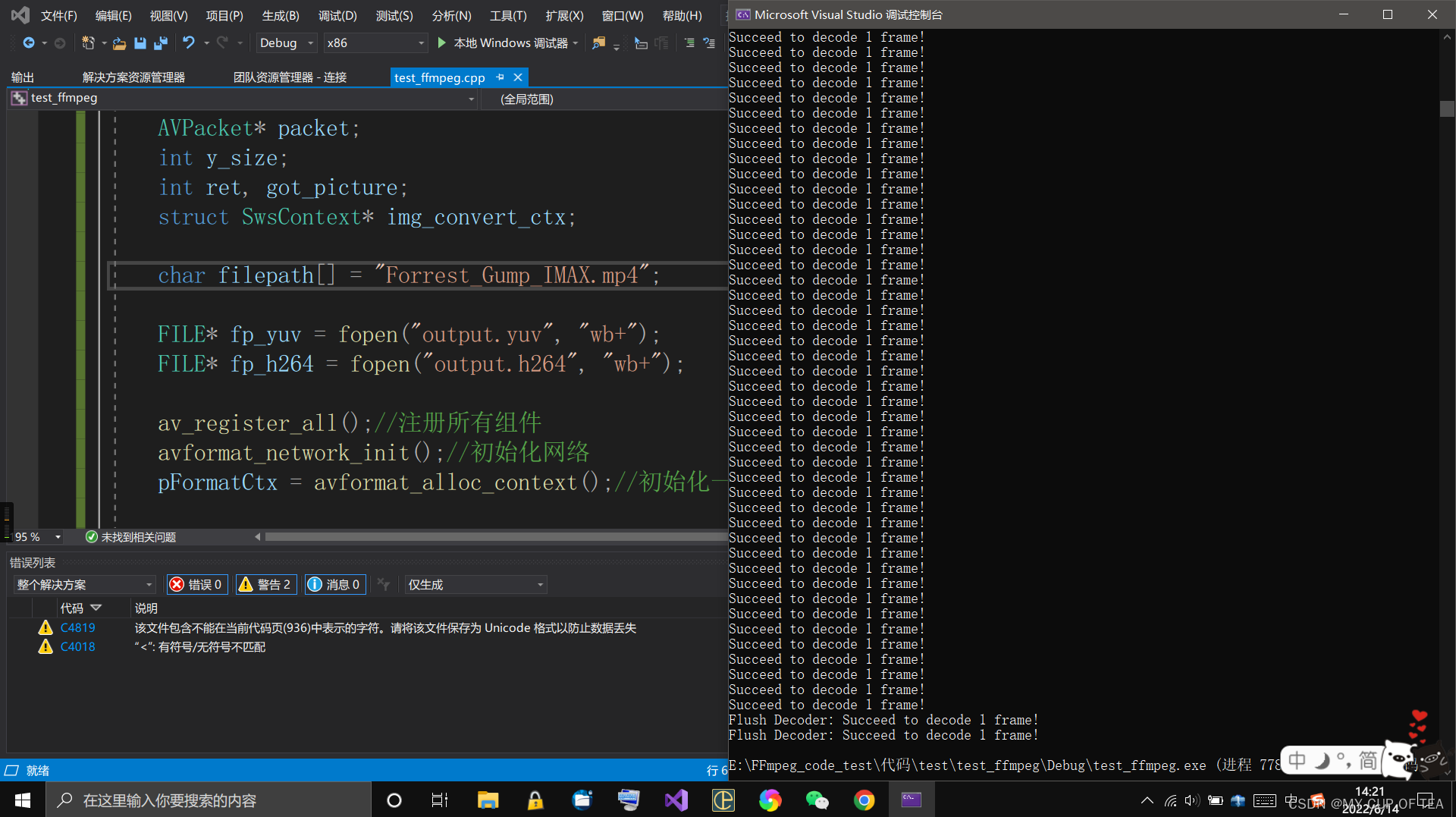

Modifying the code again

```cpp
// test_ffmpeg.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
/*
#define SDL_MAIN_HANDLED
#define __STDC_CONSTANT_MACROS
#pragma warning(disable: 4819)
#include <iostream>
#include <SDL2/SDL.h>
extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h"
}
*/
#pragma warning(disable: 4996)
#include <stdio.h>
#define __STDC_CONSTANT_MACROS

#ifdef _WIN32
//Windows
extern "C"
{
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
};
#else
//Linux...
#ifdef __cplusplus
extern "C"
{
#endif
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#ifdef __cplusplus
};
#endif
#endif

int main(int argc, char* argv[])
{
    AVFormatContext* pFormatCtx;
    int i, videoindex;
    AVCodecContext* pCodecCtx;
    AVCodec* pCodec;
    AVFrame* pFrame, * pFrameYUV;
    uint8_t* out_buffer;
    AVPacket* packet;
    int y_size;
    int ret, got_picture;
    struct SwsContext* img_convert_ctx;
    char filepath[] = "Forrest_Gump_IMAX.mp4";
    FILE* fp_yuv = fopen("output.yuv", "wb+");
    FILE* fp_h264 = fopen("output.h264", "wb+");

    av_register_all(); // register all components
    avformat_network_init(); // initialize networking
    pFormatCtx = avformat_alloc_context(); // allocate an AVFormatContext
    if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0) { // open the input video file
        printf("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) { // read the stream information
        printf("Couldn't find stream information.\n");
        return -1;
    }
    videoindex = -1;
    for (i = 0; i < pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    if (videoindex == -1) {
        printf("Didn't find a video stream.\n");
        return -1;
    }
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id); // find the decoder
    if (pCodec == NULL) {
        printf("Codec not found.\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) { // open the decoder
        printf("Could not open codec.\n");
        return -1;
    }
    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    out_buffer = (uint8_t*)av_malloc(avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height));
    avpicture_fill((AVPicture*)pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);
    packet = (AVPacket*)av_malloc(sizeof(AVPacket));
    //Output Info-----------------------------
    printf("--------------- File Information ----------------\n");
    av_dump_format(pFormatCtx, 0, filepath, 0);
    printf("-------------------------------------------------\n");
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
        pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
    while (av_read_frame(pFormatCtx, packet) >= 0) { // read one compressed packet
        if (packet->stream_index == videoindex) {
            fwrite(packet->data, 1, packet->size, fp_h264); // write the H.264 data to fp_h264
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet); // decode one compressed packet
            if (ret < 0) {
                printf("Decode Error.\n");
                return -1;
            }
            if (got_picture) {
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
                    pFrameYUV->data, pFrameYUV->linesize);
                y_size = pCodecCtx->width * pCodecCtx->height;
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv); //Y
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
                printf("Succeed to decode 1 frame!\n");
            }
        }
        av_free_packet(packet);
    }
    //flush decoder
    /* When the av_read_frame() loop exits, the decoder may still hold a few buffered frames,
       so they have to be output with a "flush_decoder" step.
       In short, flush_decoder keeps calling avcodec_decode_video2() to obtain AVFrames without
       passing the decoder any more packet data (after av_free_packet() the packet is empty). */
    while (1) {
        ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
        if (ret < 0)
            break;
        if (!got_picture)
            break;
        sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
            pFrameYUV->data, pFrameYUV->linesize);
        int y_size = pCodecCtx->width * pCodecCtx->height;
        fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv); //Y
        fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
        fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
        printf("Flush Decoder: Succeed to decode 1 frame!\n");
    }
    sws_freeContext(img_convert_ctx);
    // Close the files and free memory
    fclose(fp_yuv);
    fclose(fp_h264);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    return 0;
}
```
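The three fwrite() calls per frame write each plane in one piece, which is only correct because avpicture_fill() / av_image_fill_arrays(..., 1) give pFrameYUV a linesize equal to the plane width. If you ever write a frame whose rows are padded (for example pFrame straight from the decoder, whose linesize is often larger than its width), each row has to be written separately. A sketch of that, with append_plane as a hypothetical helper that writes into a std::vector instead of a FILE* so it can be checked:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Append one image plane row by row, skipping the padding at the end of each row.
// data/linesize correspond to AVFrame::data[i] / AVFrame::linesize[i];
// width is the visible row length in bytes, height the number of rows.
void append_plane(std::vector<std::uint8_t>& out, const std::uint8_t* data,
                  int linesize, int width, int height) {
    assert(linesize >= width && width >= 0 && height >= 0);
    for (int row = 0; row < height; ++row) {
        const std::uint8_t* src = data + static_cast<std::ptrdiff_t>(row) * linesize;
        out.insert(out.end(), src, src + width);  // with a FILE*: fwrite(src, 1, width, fp)
    }
}
```

For a YUV420P frame this would be called three times: once for Y with the full width and height, then for U and V with the rounded-up half width and half height.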

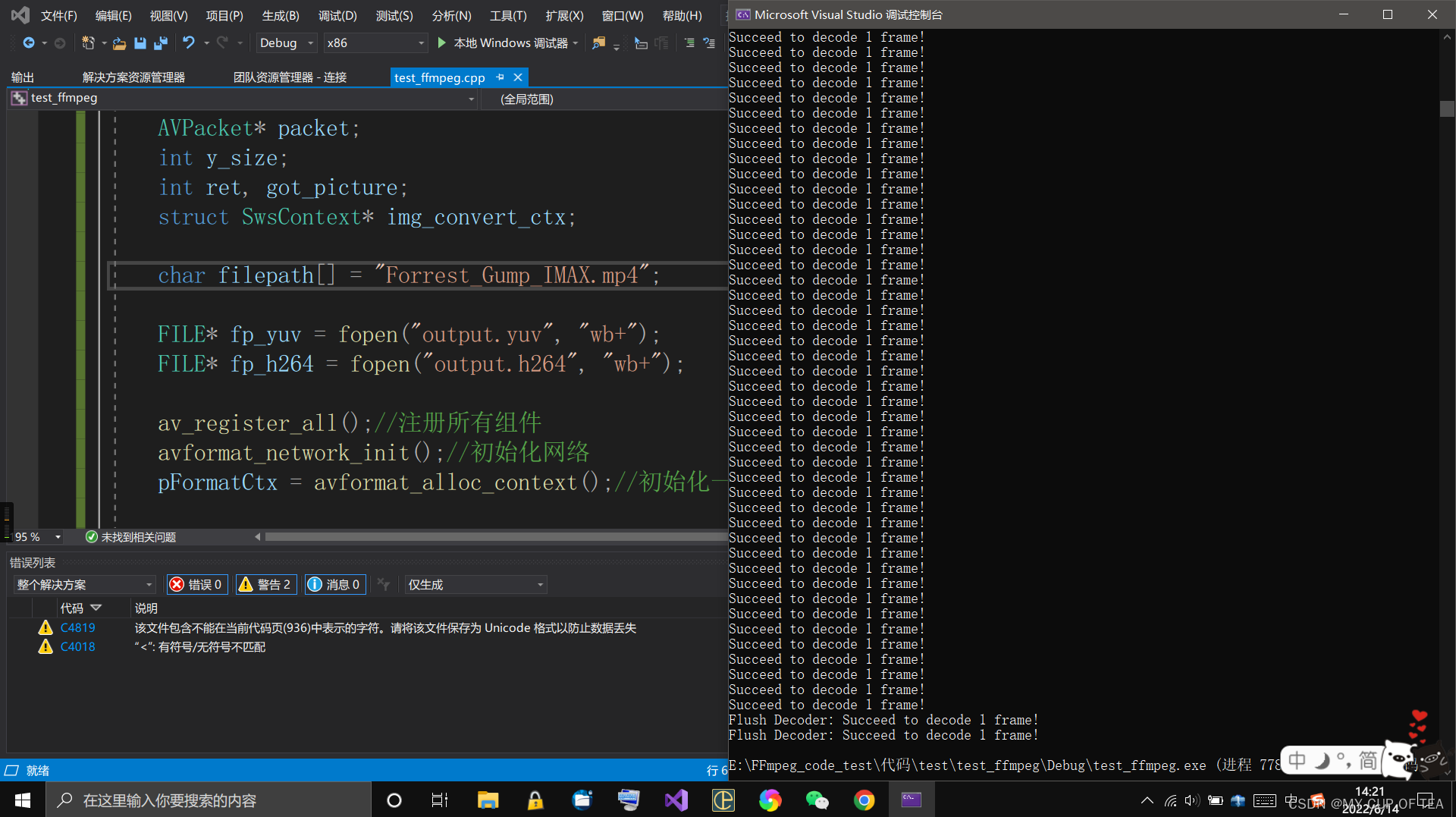

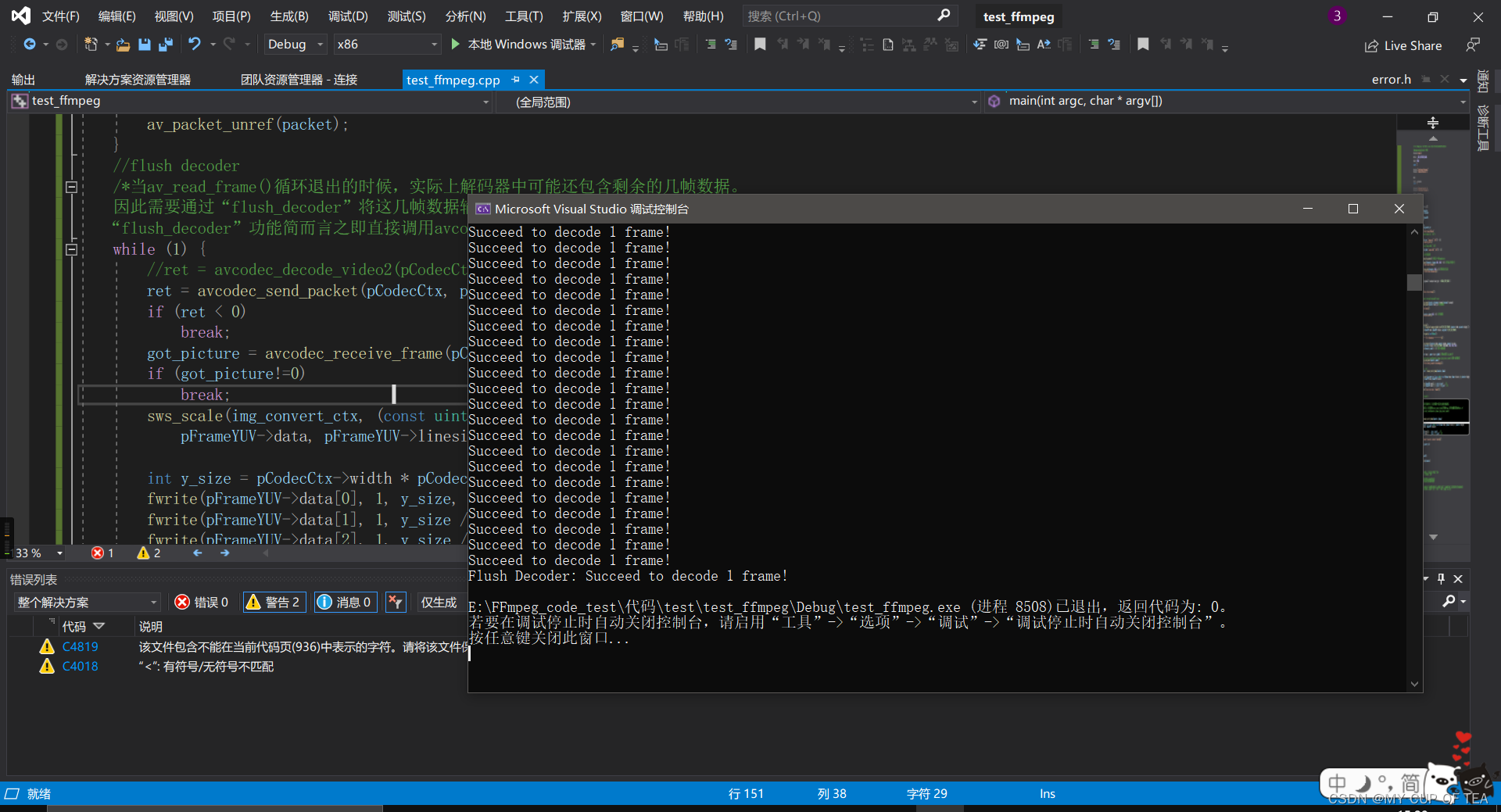

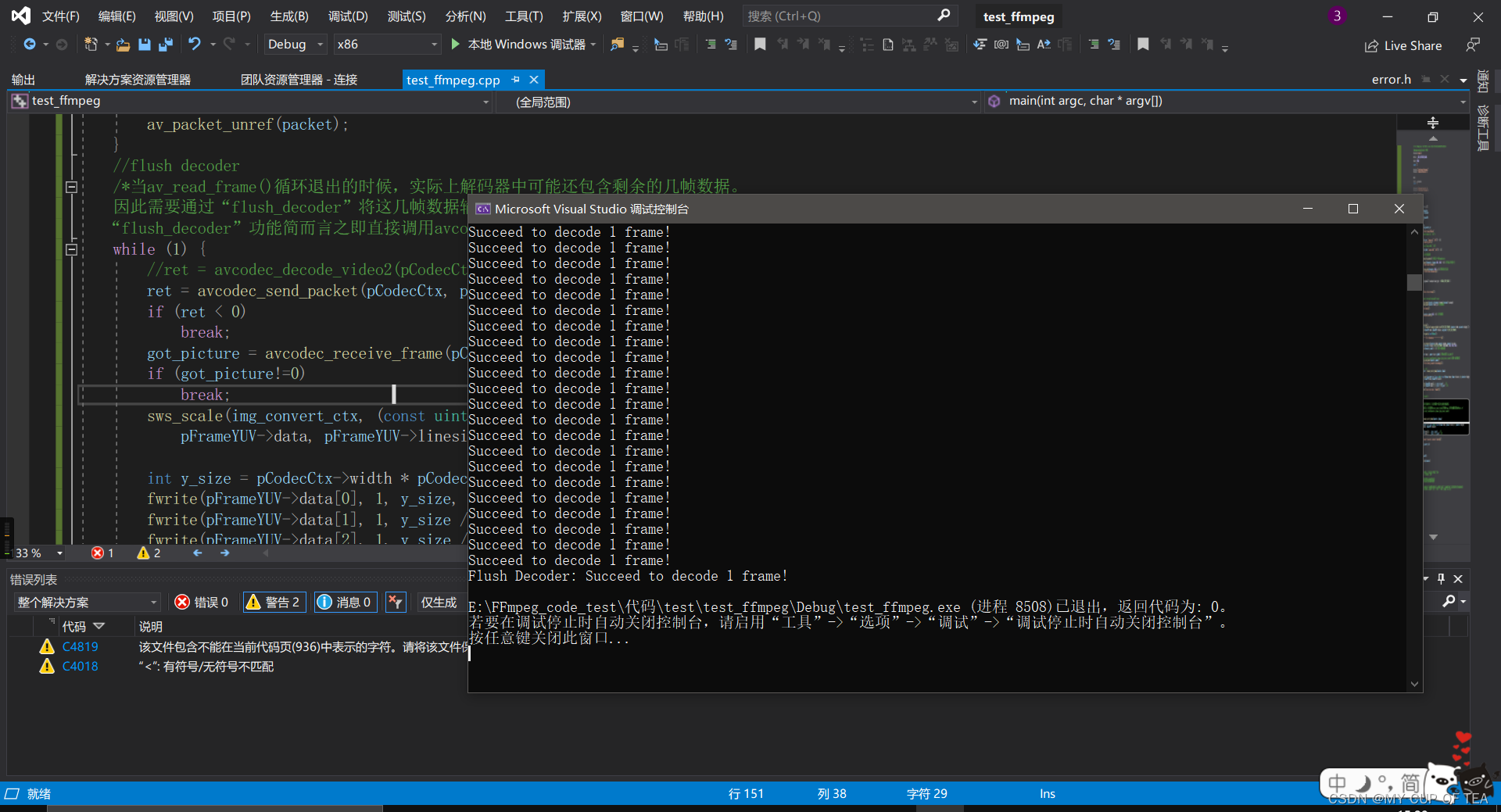

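Final version of the code

- Difference between fopen and fopen_s: Visual Studio flags fopen with C4996, so the output files are opened with fopen_s instead

- avcodec_send_packet and avcodec_receive_frame replace avcodec_decode_video2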
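fopen_s() is MSVC's implementation of the optional C11 Annex K bounds-checked interface; glibc does not provide it, so code that should also build under the #else (Linux) branches used here can pick the call at compile time. (Defining _CRT_SECURE_NO_WARNINGS is the other common way to silence this particular C4996.) A sketch, with open_for_write as a hypothetical helper name:

```cpp
#include <cassert>
#include <cstdio>

// Open `path` for binary read/write, truncating it; returns nullptr on failure.
// MSVC: fopen_s avoids warning C4996. Elsewhere: plain fopen, since fopen_s is usually unavailable.
FILE* open_for_write(const char* path) {
    assert(path != nullptr);
    FILE* fp = nullptr;
#ifdef _MSC_VER
    if (fopen_s(&fp, path, "wb+") != 0) fp = nullptr;
#else
    fp = std::fopen(path, "wb+");
#endif
    return fp;
}
```

The caller still has to check for nullptr and stop, rather than only printing a message and continuing to fwrite() to a null stream.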

```cpp
// test_ffmpeg.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
//#pragma warning(disable: 4996)
#include <stdio.h>
#define __STDC_CONSTANT_MACROS

#ifdef _WIN32
//Windows
extern "C"
{
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h"
};
#else
//Linux...
#ifdef __cplusplus
extern "C"
{
#endif
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include <libavutil/imgutils.h>
#ifdef __cplusplus
};
#endif
#include <cerrno>
#endif

int main(int argc, char* argv[])
{
    AVFormatContext* pFormatCtx;
    int i, videoindex;
    AVCodecContext* pCodecCtx;
    const AVCodec* pCodec; // const: avcodec_find_decoder() returns const AVCodec* in newer FFmpeg
    AVFrame* pFrame, * pFrameYUV;
    uint8_t* out_buffer;
    AVPacket* packet;
    int y_size;
    int ret;
    struct SwsContext* img_convert_ctx;
    char filepath[] = "Forrest_Gump_IMAX.mp4";

    //FILE* fp_yuv = fopen("output.yuv", "wb+");
    // fopen() triggers C4996 in Visual Studio; fopen_s() is the MSVC replacement
    // (glibc does not provide fopen_s, so a Linux build would need fopen() here)
    errno_t err;
    FILE* fp_yuv = NULL;
    if ((err = fopen_s(&fp_yuv, "output.yuv", "wb+")) != 0) {
        printf("Couldn't open fp_yuv.\n");
        return -1;
    }
    //FILE* fp_h264 = fopen("output.h264", "wb+");
    FILE* fp_h264 = NULL;
    if ((err = fopen_s(&fp_h264, "output.h264", "wb+")) != 0) {
        printf("Couldn't open fp_h264.\n");
        return -1;
    }

    //av_register_all(); // register all components (deprecated and no longer needed)
    avformat_network_init(); // initialize networking
    pFormatCtx = avformat_alloc_context(); // allocate an AVFormatContext
    if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0) { // open the input video file
        printf("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) { // read the stream information
        printf("Couldn't find stream information.\n");
        return -1;
    }
    videoindex = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    if (videoindex == -1) {
        printf("Didn't find a video stream.\n");
        return -1;
    }
    //pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodecCtx = avcodec_alloc_context3(NULL);
    if (pCodecCtx == NULL ||
        avcodec_parameters_to_context(pCodecCtx, pFormatCtx->streams[videoindex]->codecpar) < 0) {
        printf("Couldn't copy codec parameters.\n");
        return -1;
    }
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id); // find the decoder
    if (pCodec == NULL) {
        printf("Codec not found.\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) { // open the decoder
        printf("Could not open codec.\n");
        return -1;
    }
    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    out_buffer = (uint8_t*)av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1));
    //avpicture_fill((AVPicture*)pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P,
        pCodecCtx->width, pCodecCtx->height, 1);
    packet = av_packet_alloc(); // replaces av_malloc(sizeof(AVPacket)) and initializes the packet
    //Output Info-----------------------------
    printf("--------------- File Information ----------------\n");
    av_dump_format(pFormatCtx, 0, filepath, 0);
    printf("-------------------------------------------------\n");
    // pix_fmt (copied from codecpar) is already valid here, unlike sw_pix_fmt used in the earlier attempt
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
        pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
    y_size = pCodecCtx->width * pCodecCtx->height;

    while (av_read_frame(pFormatCtx, packet) >= 0) { // read one compressed packet
        if (packet->stream_index == videoindex) {
            // Write the H.264 data to fp_h264 (for MP4 input these packets are usually
            // length-prefixed rather than Annex B, so the raw file may not play as-is)
            fwrite(packet->data, 1, packet->size, fp_h264);
            //ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet); // decode one compressed packet
            ret = avcodec_send_packet(pCodecCtx, packet);
            if (ret < 0) {
                printf("Error sending a packet for decoding.\n");
                return -1;
            }
            // A packet can yield zero or more frames: receive until AVERROR(EAGAIN) (needs more input).
            // 0 means a frame was returned (the opposite of got_picture).
            while ((ret = avcodec_receive_frame(pCodecCtx, pFrame)) == 0) {
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
                    pFrameYUV->data, pFrameYUV->linesize);
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv); //Y
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
                printf("Succeed to decode 1 frame!\n");
            }
            if (ret != AVERROR(EAGAIN) && ret != AVERROR_EOF) {
                printf("Error during decoding.\n");
                return -1;
            }
        }
        //av_free_packet(packet);
        av_packet_unref(packet);
    }

    //flush decoder
    /* When the av_read_frame() loop exits, the decoder may still hold a few buffered frames,
       so they have to be output with a "flush_decoder" step.
       With the send/receive API: send a NULL packet once to enter draining mode, then call
       avcodec_receive_frame() until it returns AVERROR_EOF. Sending another flush packet
       would itself return AVERROR_EOF. */
    avcodec_send_packet(pCodecCtx, NULL);
    while (avcodec_receive_frame(pCodecCtx, pFrame) == 0) {
        sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height,
            pFrameYUV->data, pFrameYUV->linesize);
        fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv); //Y
        fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
        fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
        printf("Flush Decoder: Succeed to decode 1 frame!\n");
    }

    sws_freeContext(img_convert_ctx);
    // Close the files and free memory
    fclose(fp_yuv);
    fclose(fp_h264);
    av_packet_free(&packet);
    av_free(out_buffer);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_free_context(&pCodecCtx); // frees (and closes) the context from avcodec_alloc_context3()
    avformat_close_input(&pFormatCtx);
    return 0;
}
```
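One caveat about output.h264: for MP4 input such as Forrest_Gump_IMAX.mp4, the demuxer typically returns H.264 packets in the length-prefixed AVCC layout rather than the Annex B start-code layout that raw .h264 files use, so the file written by fwrite(packet->data, ...) may not play directly. The usual fix is FFmpeg's h264_mp4toannexb bitstream filter. Purely to illustrate the difference, the sketch below converts one packet by hand; avcc_to_annexb is a hypothetical helper, it assumes 4-byte length fields, and it does not emit the SPS/PPS stored in the stream's extradata, which a playable file also needs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert one AVCC packet (each NAL unit preceded by a 4-byte big-endian length)
// into Annex B (each NAL unit preceded by the start code 00 00 00 01).
// Returns false if the packet is truncated or has trailing bytes.
bool avcc_to_annexb(const std::uint8_t* data, std::size_t size, std::vector<std::uint8_t>& out) {
    static const std::uint8_t kStartCode[4] = {0, 0, 0, 1};
    std::size_t pos = 0;
    while (pos + 4 <= size) {
        const std::size_t nal_size = (std::size_t(data[pos]) << 24) | (std::size_t(data[pos + 1]) << 16) |
                                     (std::size_t(data[pos + 2]) << 8) | std::size_t(data[pos + 3]);
        pos += 4;
        if (nal_size > size - pos) return false;  // length field runs past the packet
        out.insert(out.end(), kStartCode, kStartCode + 4);
        out.insert(out.end(), data + pos, data + pos + nal_size);
        pos += nal_size;
    }
    return pos == size;  // leftover bytes mean a malformed (or non-AVCC) packet
}
```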
