These are study notes…

The tutorial video is linked here 🔗

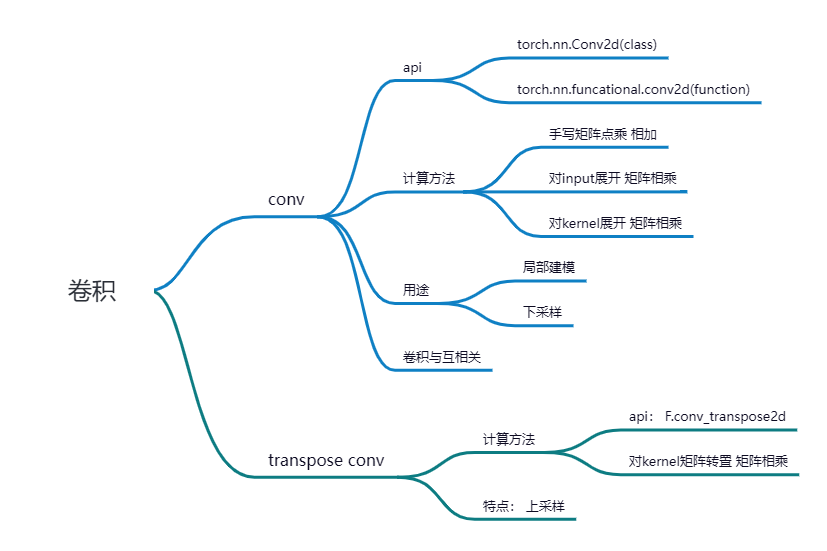

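Overview:

1-The conv2d API in PyTorch

PyTorch provides two conv2d APIs:

- torch.nn.Conv2d is a class. It must be instantiated before use, and it creates the weight and bias automatically;

- torch.nn.functional.conv2d is a function. It needs no instantiation, but the weight and bias must be passed in by hand;

- Note: under the hood, torch.nn.Conv2d calls torch.nn.functional.conv2d, and torch.nn.functional.conv2d itself is implemented in C++;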
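A minimal sketch of the relationship described above, assuming a small random input: a `torch.nn.Conv2d` layer and a direct `torch.nn.functional.conv2d` call that receives the layer's own `weight` and `bias` should give the same result. The names `layer`, `x`, `out_module` and `out_functional` are illustrative and do not come from the notes.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# The module creates and owns its parameters.
layer = nn.Conv2d(in_channels=3, out_channels=2, kernel_size=3, padding=1)
x = torch.randn(1, 3, 5, 5)

out_module = layer(x)
# The functional form takes the same parameters explicitly.
out_functional = F.conv2d(x, layer.weight, layer.bias, stride=1, padding=1)

same = torch.allclose(out_module, out_functional)
print(layer.weight.shape, layer.bias.shape, same)
```

The class is usually the convenient choice inside a model, while the function is handy when the weights come from somewhere else, as in the hand-written versions later in these notes.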

1.1 Usage

1.1.1 torch.nn.Conv2d

Signature and parameters:

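torch.nn.Conv2d(
    in_channels,
    out_channels,
    kernel_size,  # int or tuple
    stride=1,
    padding=0,  # int or tuple; the strings 'valid' / 'same' are also accepted
    dilation=1,  # spacing between kernel elements (dilated / atrous convolution)
    groups=1,  # splits the channels into groups (grouped convolution), which lowers the computation
    bias=True,  # whether to add a learnable bias
    padding_mode='zeros',
    device=None,
    dtype=None
)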
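To make the comments on `groups`, `dilation` and `padding` concrete, here is a small sketch that only inspects shapes. The layer sizes (4 input channels, 8 output channels, an 8x8 input) are arbitrary assumptions chosen for illustration, and `padding='same'` assumes a PyTorch version that accepts string padding.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 4, 8, 8)

plain = nn.Conv2d(4, 8, kernel_size=3)
grouped = nn.Conv2d(4, 8, kernel_size=3, groups=2)
dilated = nn.Conv2d(4, 8, kernel_size=3, dilation=2)
same_pad = nn.Conv2d(4, 8, kernel_size=3, padding='same')

# Weight layout is (out_channels, in_channels / groups, kernel_h, kernel_w).
plain_weight_shape = tuple(plain.weight.shape)
grouped_weight_shape = tuple(grouped.weight.shape)

# A 3x3 kernel with dilation=2 covers a 5x5 window, so the output shrinks more.
plain_out_shape = tuple(plain(x).shape)
dilated_out_shape = tuple(dilated(x).shape)
same_out_shape = tuple(same_pad(x).shape)

print(plain_weight_shape, grouped_weight_shape)
print(plain_out_shape, dilated_out_shape, same_out_shape)
```

With `groups=2` each filter sees only half of the input channels, which is where the reduction in parameters and computation comes from.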

Usage:

import torch
import torch.nn as nn
import torch.nn.functional as F

in_channels = 1
out_channels = 1
kernel_size = 3
bias = False
batch_size = 1
input_size = [batch_size, in_channels, 4, 4]

# A plain, ordinary convolution layer
conv_layer = torch.nn.Conv2d(
    in_channels,
    out_channels,
    kernel_size,
    bias=bias
)

# Generate a random input
input = torch.randn(input_size)
output = conv_layer(input)

# print(conv_layer.weight)
# print(conv_layer.weight.size())

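1.1.2 torch.nn.functional.conv2d

Signature and parameters:

torch.nn.functional.conv2d(
    input,
    weight,
    bias=None,
    stride=1,
    padding=0,
    dilation=1,
    groups=1
)

Usage:

# weight layout: out_channels x in_channels x kernel_h x kernel_w
filters = torch.randn(8, 4, 3, 3)
# input layout: batch_size x in_channels x height x width
inputs = torch.randn(1, 4, 5, 5)
F.conv2d(inputs, filters, padding=1)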
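The output shape of such a call can be predicted before running it. The sketch below uses the standard output-size formula for one spatial dimension; `conv2d_output_size` is a hypothetical helper written for this note and is not part of PyTorch.

```python
import torch
import torch.nn.functional as F

def conv2d_output_size(size, kernel, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension (hypothetical helper)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

filters = torch.randn(8, 4, 3, 3)
inputs = torch.randn(1, 4, 5, 5)

out = F.conv2d(inputs, filters, padding=1)
out_strided = F.conv2d(inputs, filters, padding=1, stride=2)

expected = conv2d_output_size(5, 3, padding=1)
expected_strided = conv2d_output_size(5, 3, stride=2, padding=1)
print(out.shape, expected)
print(out_strided.shape, expected_strided)
```

The number of output channels is simply the first dimension of the weight tensor, here 8.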

1.2 Source code

1.2.1 Source of torch.nn.Conv2d

# Inherits from the _ConvNd class
from .. import functional as F

class Conv2d(_ConvNd):
    def __init__(...)

    def _conv_forward(self, input: Tensor, weight: Tensor, bias: Optional[Tensor]):
        # Non-zero padding modes pad the input explicitly, then convolve with padding 0
        if self.padding_mode != 'zeros':
            return F.conv2d(F.pad(input, self._reversed_padding_repeated_twice, mode=self.padding_mode),
                            weight, bias, self.stride,
                            _pair(0), self.dilation, self.groups)
        # Delegate to the corresponding functional
        return F.conv2d(input, weight, bias, self.stride,
                        self.padding, self.dilation, self.groups)

    def forward(self, input: Tensor) -> Tensor:
        return self._conv_forward(input, self.weight, self.bias)
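The `padding_mode != 'zeros'` branch in the excerpt can be reproduced by hand. This is a sketch under the assumption that `padding=1` corresponds to padding one element on each of the four sides, written as `(1, 1, 1, 1)` for `F.pad`; the variable names are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

layer = nn.Conv2d(1, 1, kernel_size=3, padding=1, padding_mode='circular', bias=False)
x = torch.randn(1, 1, 4, 4)

out_module = layer(x)

# Pad explicitly as (left, right, top, bottom), then convolve with no padding.
x_padded = F.pad(x, (1, 1, 1, 1), mode='circular')
out_manual = F.conv2d(x_padded, layer.weight, None, stride=1, padding=0)

matches = torch.allclose(out_module, out_manual)
print(matches)
```

This mirrors the structure of `_conv_forward`: the special padding is applied first, and the functional call then receives a padding of zero.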

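1.2.2 Source of torch.nn.functional.conv2d

Where is the source code of torch.nn.functional.conv2d?

The functional code is implemented in C++. The entry point of the C++ conv2d code in PyTorch is here;

2-Implementing conv2d by hand in PyTorch

2.1 Implementing conv2d with matrix operations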

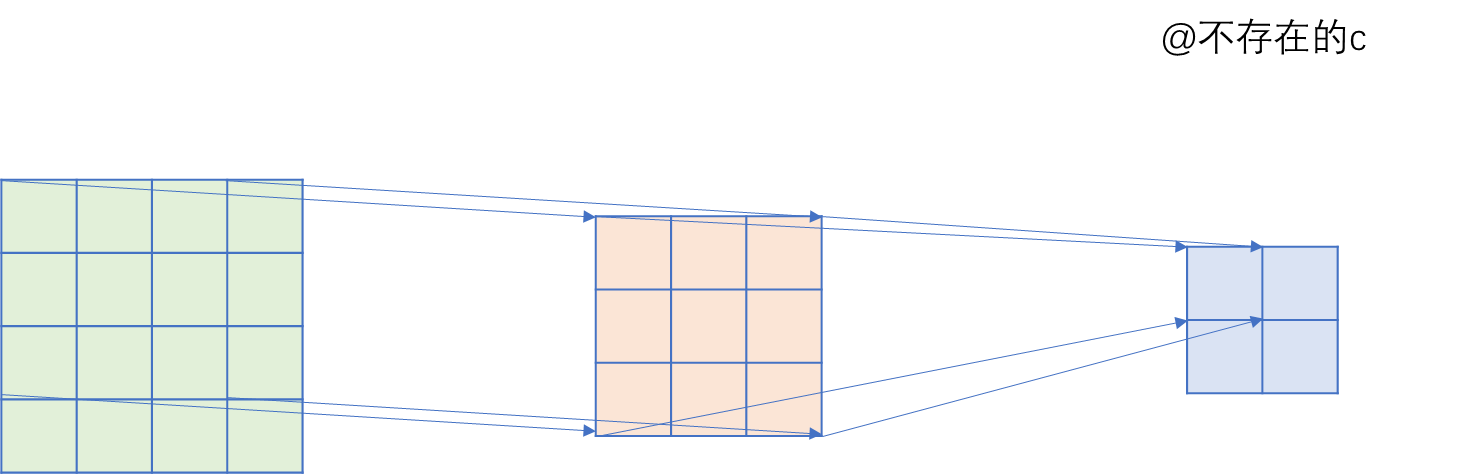

2.1.1 Ignoring the batch_size and channel dimensions

A manual implementation of the convolution in Figure 1:

# Implementation that ignores the batch_size and channels dimensions
import torch
import torch.nn as nn
import torch.nn.functional as F
import math

input = torch.randn(5, 5)
kernel = torch.randn(3, 3)
bias = torch.randn(1)

# 2D convolution implemented with matrix operations
def matrix_multiplication_for_conv2d(input, kernel, bias=0, stride=1, padding=0):
    if padding > 0:
        input = F.pad(input, (padding, padding, padding, padding))

    # Compute the output size (input_h and input_w already include the padding)
    input_h, input_w = input.shape
    kernel_h, kernel_w = kernel.shape
    output_h = (math.floor((input_h - kernel_h) / stride) + 1)
    output_w = (math.floor((input_w - kernel_w) / stride) + 1)

    # Initialize the output matrix
    output = torch.zeros(output_h, output_w)
    for i in range(0, input_h - kernel_h + 1, stride):
        for j in range(0, input_w - kernel_w + 1, stride):
            region = input[i:i + kernel_h, j:j + kernel_w]
            # Element-wise multiply, then sum
            output[i // stride, j // stride] = torch.sum(region * kernel) + bias
    return output

# Call the hand-written function
res = matrix_multiplication_for_conv2d(input, kernel, bias=bias, padding=1)
print(res)

# Verify against the result of the API
res2 = F.conv2d(
    input.reshape((1, 1, input.shape[0], input.shape[1])),
    kernel.reshape((1, 1, kernel.shape[0], kernel.shape[1])),
    padding=1,
    bias=bias
)
print(res2)

# True
print(torch.allclose(res, res2))

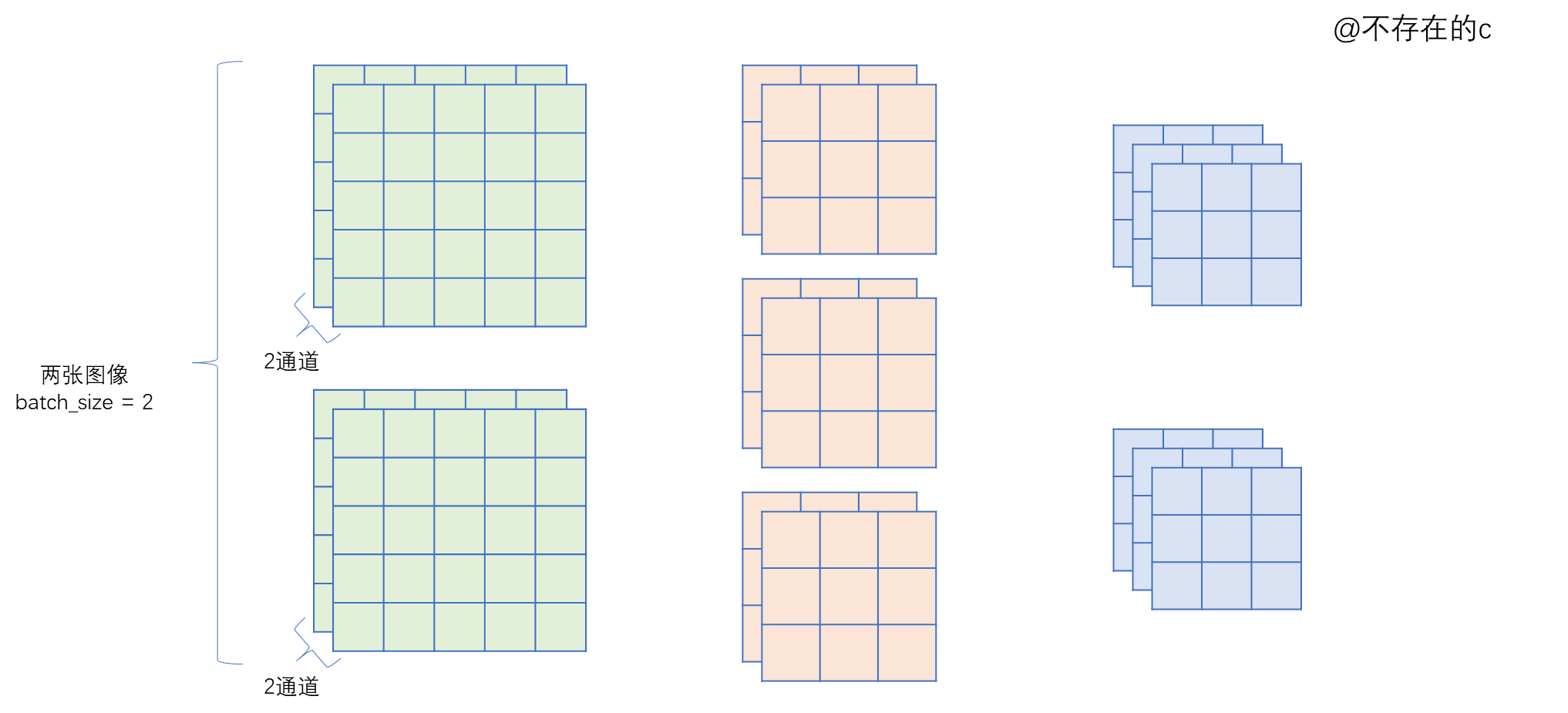

2.1.2 Handling the batch_size and channel dimensions

# Implementation that handles the batch_size and channels dimensions
import torch
import torch.nn as nn
import torch.nn.functional as F
import math

# bs * in_channel * in_h * in_w
input = torch.randn(2, 2, 5, 5)
# out_channel * in_channel * kernel_h * kernel_w
kernel = torch.randn(3, 2, 3, 3)
bias = torch.randn(3)

# 2D convolution implemented with matrix operations, handling batch_size and channels
# (bias defaults to None because it is indexed per output channel below)
def matrix_multiplication_for_conv2d(input, kernel, bias=None, stride=1, padding=0):
    if padding > 0:
        # Pad only the last two (spatial) dimensions
        input = F.pad(input, (padding, padding, padding, padding, 0, 0, 0, 0))

    # Compute the output size
    bs, in_channels, input_h, input_w = input.shape
    out_channel, in_channel, kernel_h, kernel_w = kernel.shape
    if bias is None:
        bias = torch.zeros(out_channel)
    output_h = (math.floor((input_h - kernel_h) / stride) + 1)
    output_w = (math.floor((input_w - kernel_w) / stride) + 1)

    # Initialize the output tensor
    output = torch.zeros(bs, out_channel, output_h, output_w)

    # Performance is not a concern here
    for ind in range(bs):
        for oc in range(out_channel):
            for ic in range(in_channel):
                for i in range(0, input_h - kernel_h + 1, stride):
                    for j in range(0, input_w - kernel_w + 1, stride):
                        region = input[ind, ic, i:i + kernel_h, j:j + kernel_w]
                        # Element-wise multiply, then sum and accumulate over input channels
                        output[ind, oc, i // stride, j // stride] += torch.sum(region * kernel[oc, ic])
            # Add the bias once per output channel
            output[ind, oc] += bias[oc]
    return output

res = matrix_multiplication_for_conv2d(input, kernel, bias=bias, padding=1, stride=2)
print(res)

# Verify against the result of the API
res2 = F.conv2d(
    input,
    kernel,
    padding=1,
    bias=bias,
    stride=2
)
print(res2)
print(torch.allclose(res, res2))

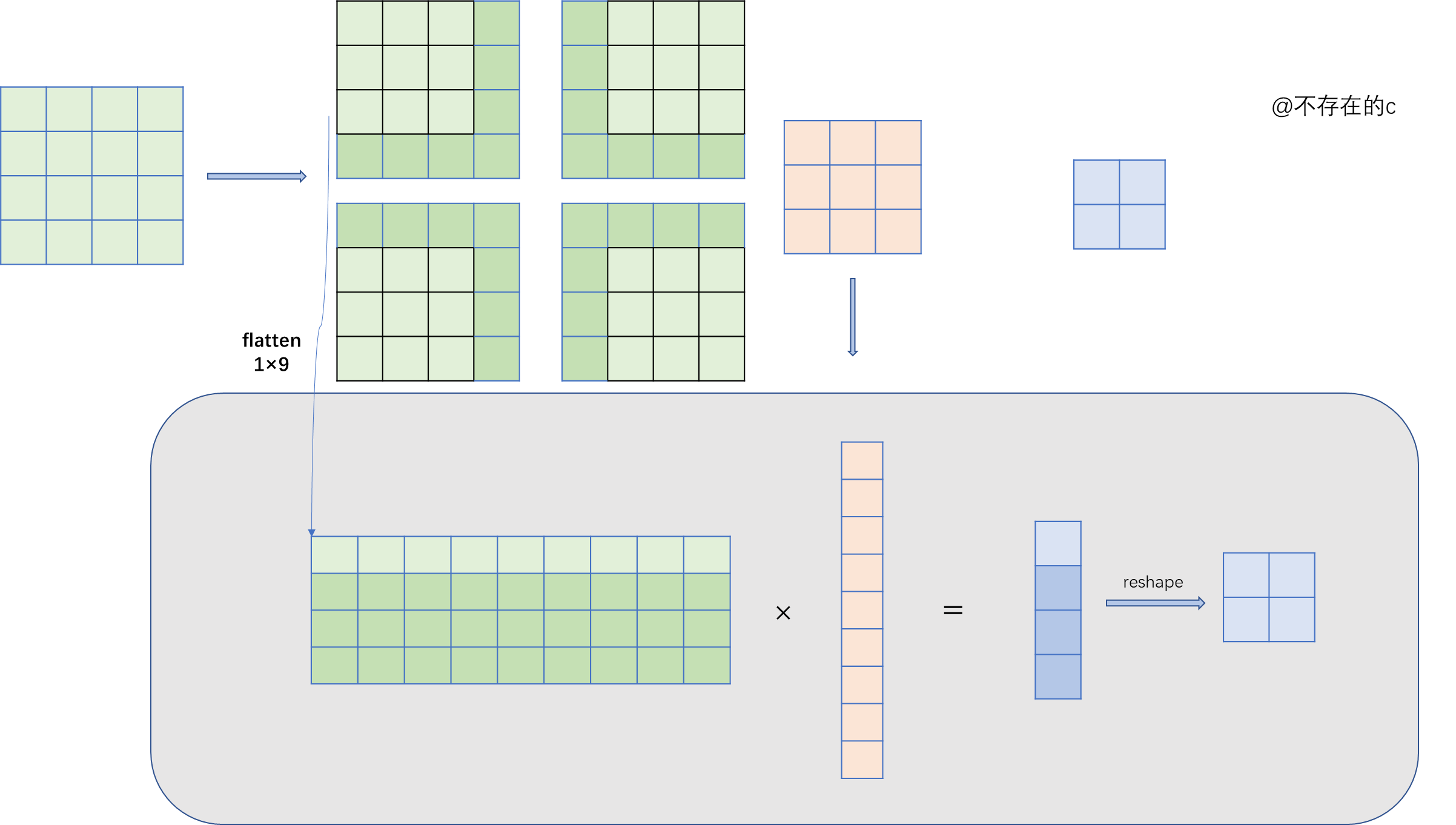

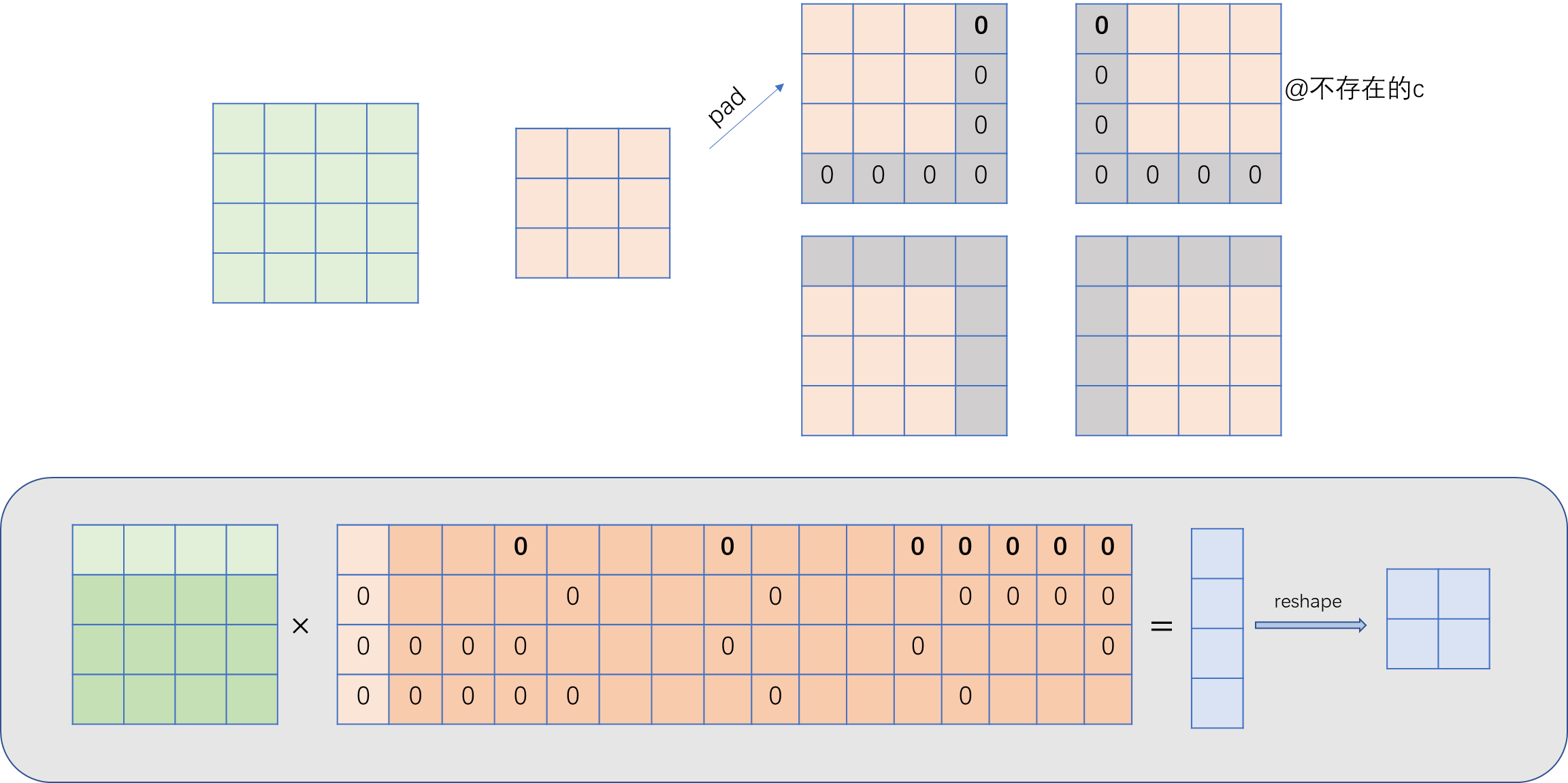

2.2 Implementing conv2d by unfolding the input into a matrix

import torch
import torch.nn as nn
import torch.nn.functional as F
import math

input = torch.randn(5, 5)
kernel = torch.randn(3, 3)
bias = torch.randn(1)

# 2D convolution implemented with matrix operations, flattened-input version
def matrix_multiplication_for_conv2d_flatten(input, kernel, bias=0, stride=1, padding=0):
    if padding > 0:
        input = F.pad(input, (padding, padding, padding, padding))

    # Compute the output size
    input_h, input_w = input.shape
    kernel_h, kernel_w = kernel.shape
    output_h = (math.floor((input_h - kernel_h) / stride) + 1)
    output_w = (math.floor((input_w - kernel_w) / stride) + 1)

    # Initialize the output matrix
    output = torch.zeros(output_h, output_w)
    # One row per output position, each row a flattened input region
    region_matrix = torch.zeros(output.numel(), kernel.numel())
    # The kernel as a column vector
    kernel_matrix = kernel.reshape((kernel.numel(), 1))

    row_index = 0
    for i in range(0, input_h - kernel_h + 1, stride):
        for j in range(0, input_w - kernel_w + 1, stride):
            region = input[i:i + kernel_h, j:j + kernel_w]
            # Flatten the region into a vector
            region_vector = torch.flatten(region)
            # Store it as the next row
            region_matrix[row_index] = region_vector
            row_index += 1

    # Matrix multiplication
    output_matrix = region_matrix @ kernel_matrix
    output = output_matrix.reshape((output_h, output_w)) + bias
    return output

# Call the hand-written function
res = matrix_multiplication_for_conv2d_flatten(input, kernel, bias=bias, padding=1, stride=2)
print(res)

# Verify against the result of the API
res2 = F.conv2d(
    input.reshape((1, 1, input.shape[0], input.shape[1])),
    kernel.reshape((1, 1, kernel.shape[0], kernel.shape[1])),
    padding=1,
    bias=bias,
    stride=2
).squeeze(0).squeeze(0)
print(res2)
print(torch.allclose(res, res2))
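The same flatten-the-regions idea can be extended to the batch and channel dimensions without Python loops. The sketch below is one possible way to do it, using `torch.nn.functional.unfold` to gather the regions; it is a swapped-in technique for illustration rather than the approach used in these notes, and the tensor sizes are arbitrary assumptions.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

x = torch.randn(2, 2, 5, 5)        # batch, in_channels, height, width
weight = torch.randn(3, 2, 3, 3)   # out_channels, in_channels, kernel_h, kernel_w
bias = torch.randn(3)
stride, padding = 2, 1

# Each column of `cols` is one flattened receptive field: (N, C*kh*kw, L).
cols = F.unfold(x, kernel_size=3, padding=padding, stride=stride)

# (out_channels, C*kh*kw) @ (N, C*kh*kw, L) -> (N, out_channels, L)
out = weight.reshape(3, -1) @ cols + bias.reshape(1, -1, 1)

# Fold the L output positions back into a 2D grid.
out_h = (5 + 2 * padding - 3) // stride + 1
out_w = (5 + 2 * padding - 3) // stride + 1
out = out.reshape(2, 3, out_h, out_w)

reference = F.conv2d(x, weight, bias, stride=stride, padding=padding)
matches = torch.allclose(out, reference, atol=1e-5)
print(out.shape, matches)
```

Here the regions are stored as columns rather than rows, so the flattened kernel multiplies from the left instead of the right.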

2.3 Implementing conv2d by unfolding the kernel into a matrix

# Implementation that ignores the batch_size and channels dimensions
import torch
import torch.nn as nn
import torch.nn.functional as F
import math

# Implement 2D convolution by unfolding the kernel, which also leads to transposed convolution
# Ignores the batch_size and channel dimensions
# padding = 0, stride = 1

# From the kernel and the input feature-map size, build a matrix whose rows are
# the zero-padded, flattened copies of the kernel
def get_kernel_matrix(kernel, input_size, stride=1):
    kernel_h, kernel_w = kernel.shape
    # Use the input_size argument rather than a global input tensor
    input_h, input_w = input_size
    # Number of output positions
    num_out_feat_map = ((input_h - kernel_h) // stride + 1) * ((input_w - kernel_w) // stride + 1)

    # Initialize the result matrix
    result = torch.zeros((num_out_feat_map, input_h * input_w))
    count = 0
    # Work out how much to pad on the left, right, top and bottom
    for i in range(0, input_h - kernel_h + 1, stride):
        for j in range(0, input_w - kernel_w + 1, stride):
            # Pad the kernel to the same size as the input feature map
            padded_kernel = F.pad(kernel, (j, input_w - kernel_w - j, i, input_h - kernel_h - i))
            # Flatten the padded kernel and store it in result
            result[count] = padded_kernel.flatten()
            count += 1
    return result

# Verify the 2D convolution
input = torch.randn(4, 4)
kernel = torch.randn(3, 3)

# Shape: 4 * 16
kernel_matrix = get_kernel_matrix(kernel, input.shape)

# Compute the convolution by matrix multiplication
mm_conv2d_output = kernel_matrix @ input.reshape((-1, 1))

# Compute it through the API
pytorch_output = F.conv2d(input.unsqueeze(0).unsqueeze(0), kernel.unsqueeze(0).unsqueeze(0))

# print(mm_conv2d_output)
# print(pytorch_output)

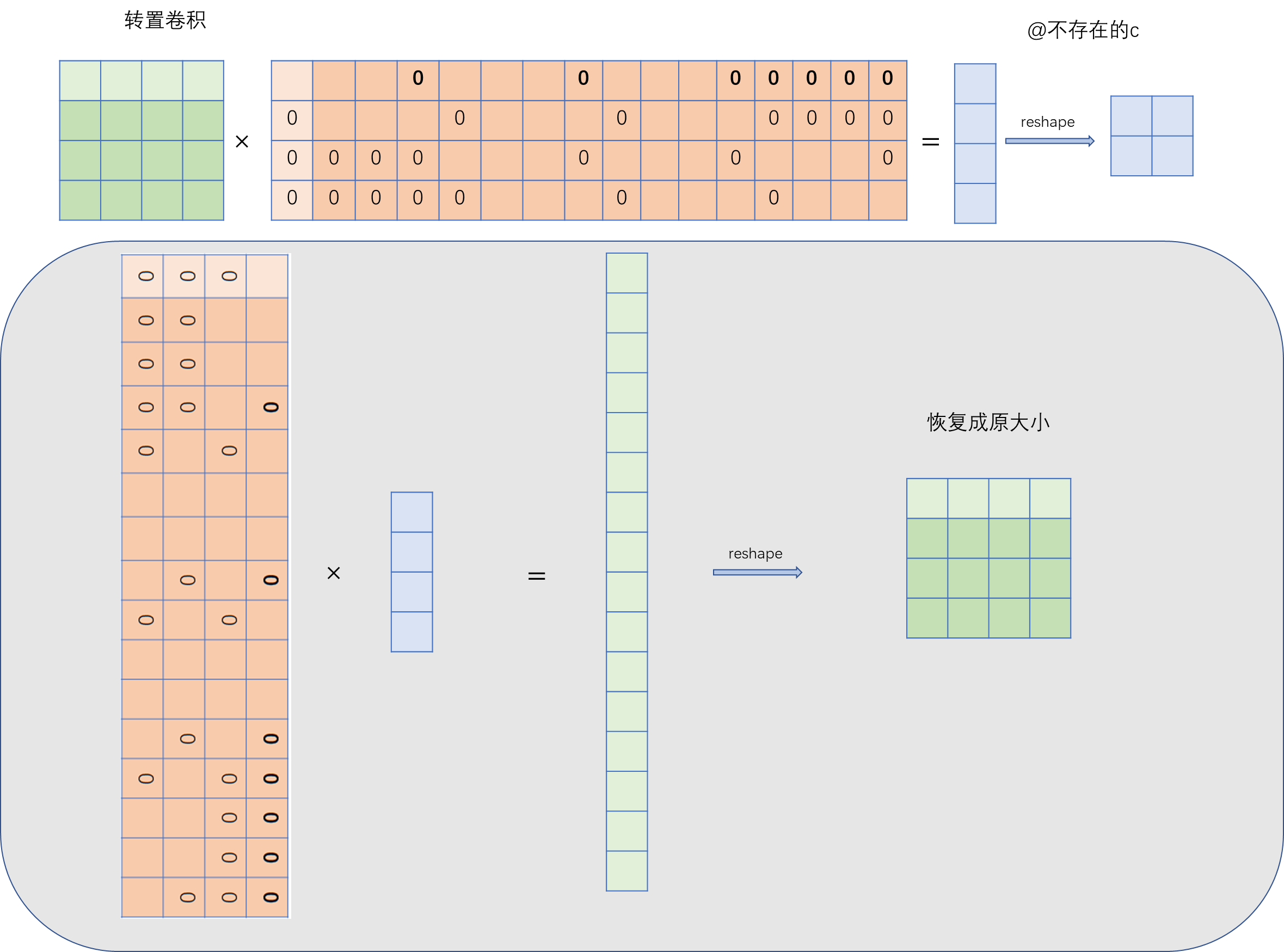

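3-Transposed convolution

Transposed convolution, also called "deconvolution" in some places, acts in deep learning as a reverse process of convolution: given the kernel size and the size of the convolution output, it restores the spatial size the image had before the convolution, but it does not restore the original values.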
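A small sketch of that distinction, assuming a random 4x4 input and a 3x3 kernel with stride 1 and no padding: the shape comes back, the values do not. The variable names are illustrative.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

x = torch.randn(1, 1, 4, 4)
kernel = torch.randn(1, 1, 3, 3)

y = F.conv2d(x, kernel)                 # 4x4 -> 2x2
x_back = F.conv_transpose2d(y, kernel)  # 2x2 -> 4x4

# With stride=1 and padding=0 the output size is (in - 1) * stride + kernel = 4.
shape_restored = x_back.shape == x.shape
values_restored = torch.allclose(x_back, x)
print(y.shape, x_back.shape, shape_restored, values_restored)
```

This is why the name "deconvolution" can be misleading: the operation undoes the change in size, not the computation itself.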

3.1 Calling the PyTorch API

pytorch_transposed_conv2d = F.conv_transpose2d(pytorch_output, kernel.unsqueeze(0).unsqueeze(0))

3.2 Manual implementation by transposing the kernel matrix

# Transposed convolution
# Implement transposed convolution with matrix multiplication
# 2*2 becomes 4*4 => an upsampling effect
mm_transposed_conv2d = kernel_matrix.transpose(-1, -2) @ mm_conv2d_output

# Transposed convolution in PyTorch
pytorch_transposed_conv2d = F.conv_transpose2d(pytorch_output, kernel.unsqueeze(0).unsqueeze(0))

print(mm_transposed_conv2d.reshape((4, 4)))
print(pytorch_transposed_conv2d)
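The two printed tensors can also be compared programmatically. The following is a self-contained sketch of that check, assuming stride 1 and no padding; `build_kernel_matrix` is a hypothetical compact helper that follows the same padding-and-flattening idea as the kernel matrix in section 2.3.

```python
import torch
import torch.nn.functional as F

def build_kernel_matrix(kernel, input_size):
    """Stack zero-padded, flattened copies of `kernel` (stride 1, no padding)."""
    kernel_h, kernel_w = kernel.shape
    input_h, input_w = input_size
    rows = []
    for i in range(input_h - kernel_h + 1):
        for j in range(input_w - kernel_w + 1):
            # Pad order is (left, right, top, bottom).
            padded = F.pad(kernel, (j, input_w - kernel_w - j, i, input_h - kernel_h - i))
            rows.append(padded.flatten())
    return torch.stack(rows)

torch.manual_seed(0)
x = torch.randn(4, 4)
kernel = torch.randn(3, 3)
km = build_kernel_matrix(kernel, x.shape)  # shape (4, 16)

# Forward convolution: K @ flatten(x)
conv_mm = (km @ x.reshape(-1, 1)).reshape(2, 2)
conv_api = F.conv2d(x[None, None], kernel[None, None])[0, 0]

# Transposed convolution: K^T @ flatten(y)
tconv_mm = (km.t() @ conv_mm.reshape(-1, 1)).reshape(4, 4)
tconv_api = F.conv_transpose2d(conv_api[None, None], kernel[None, None])[0, 0]

conv_ok = torch.allclose(conv_mm, conv_api, atol=1e-5)
tconv_ok = torch.allclose(tconv_mm, tconv_api, atol=1e-5)
print(conv_ok, tconv_ok)
```

Multiplying by the transpose of the same matrix is what gives transposed convolution its name.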

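This article covers the convolution operation in PyTorch in detail, including how to use the nn.Conv2d class and the nn.functional.conv2d function, together with an analysis of their source code. It also implements convolution and transposed convolution by hand with matrix multiplication to deepen understanding, and verifies the results.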
