I. Preface

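In today's system development, distributed microservice architectures are the norm, and in that setting generating and obtaining distributed IDs becomes a problem that cannot be avoided. Common strategies include database segment mode, UUID, Redis-based and ZooKeeper-based schemes, and the snowflake algorithm. Among these, snowflake is frequently recommended in technology selection because it is simple, self-contained, and easy to use.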

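The snowflake algorithm (SnowFlake) is a distributed ID generation algorithm open-sourced by Twitter. It generates increasing numeric IDs without depending on any third-party component. Its core idea is to use a 64-bit long as the globally unique ID.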

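Let's first look at how a snowflake ID is composed. IDs generated by the algorithm are always positive, so of the 64 bits, 1 bit is unused (the first bit is always 0). Of the rest, 41 bits hold a millisecond timestamp, 10 bits hold the worker machine ID, and 12 bits hold the sequence number.

- 1 bit, always 0. In binary, a leading 1 means the whole number is negative, and the generated distributed IDs are positive, so the first bit is fixed at 0.

- 41 bits for the millisecond timestamp: when the algorithm is initialized, a start time is specified. At run time, the difference between the machine time and that start time is stored in the 41 bits. 2^41 - 1 = 2199023255551 milliseconds, and 2199023255551 / 1000 / 60 / 60 / 24 / 365 ≈ 69.73 years, so the algorithm can be used for roughly 69 years from the configured start time.

- 10 bits for the worker machine ID: in a distributed architecture, the application is deployed on different machine nodes. To prevent different machines from generating the same ID, the algorithm introduces a machine ID. 2^10 supports 1024 machines.

- 12 bits for the sequence number: these 12 bits count the IDs generated within one millisecond. 2^12 supports 4096 IDs per node per millisecond.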

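When a request arrives, the snowflake algorithm builds a 64-bit long ID with bitwise operations. The first bit is set to 0. The next 41 bits hold the difference between the current timestamp and the configured start time. Then come 5 bits for the data center ID and 5 bits for the machine ID (together they form the 10-bit worker ID described above). Finally, the algorithm determines how many requests this machine in this data center has already served within the current millisecond and gives the request an incrementing sequence number as the last 12 bits. The result is a unique ID.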
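The steps above can be sketched in a few lines of Java. This is a minimal illustration, not Twitter's original implementation: the class name `SimpleSnowflake`, the epoch constant, and the choice to throw when the clock moves backwards are all assumptions made for the example.

```java
// Minimal snowflake sketch: 1 sign bit | 41 bits time | 5 bits data center | 5 bits machine | 12 bits sequence.
final class SimpleSnowflake {
    // Hypothetical custom start time: 2024-01-01T00:00:00Z in epoch milliseconds.
    static final long EPOCH_MS = 1704067200000L;
    static final int DATACENTER_BITS = 5;
    static final int MACHINE_BITS = 5;
    static final int SEQUENCE_BITS = 12;
    static final long MAX_SEQUENCE = (1L << SEQUENCE_BITS) - 1;
    static final int MACHINE_SHIFT = SEQUENCE_BITS;
    static final int DATACENTER_SHIFT = SEQUENCE_BITS + MACHINE_BITS;
    static final int TIME_SHIFT = DATACENTER_SHIFT + DATACENTER_BITS;

    private final long datacenterId;
    private final long machineId;
    private long lastTimestamp = -1L;
    private long sequence = 0L;

    SimpleSnowflake(long datacenterId, long machineId) {
        if (datacenterId < 0 || datacenterId >= (1L << DATACENTER_BITS)
                || machineId < 0 || machineId >= (1L << MACHINE_BITS)) {
            throw new IllegalArgumentException("datacenterId and machineId must be in [0, 31]");
        }
        this.datacenterId = datacenterId;
        this.machineId = machineId;
    }

    synchronized long nextId() {
        long now = System.currentTimeMillis();
        if (now < lastTimestamp) {
            throw new IllegalStateException("Clock moved backwards by " + (lastTimestamp - now) + " ms");
        }
        if (now == lastTimestamp) {
            // Same millisecond: bump the sequence; on overflow wait for the next millisecond.
            sequence = (sequence + 1) & MAX_SEQUENCE;
            if (sequence == 0) {
                while (now <= lastTimestamp) {
                    now = System.currentTimeMillis();
                }
            }
        } else {
            sequence = 0L;
        }
        lastTimestamp = now;
        return ((now - EPOCH_MS) << TIME_SHIFT)
                | (datacenterId << DATACENTER_SHIFT)
                | (machineId << MACHINE_SHIFT)
                | sequence;
    }

    static long datacenterOf(long id) { return (id >>> DATACENTER_SHIFT) & ((1L << DATACENTER_BITS) - 1); }
    static long machineOf(long id) { return (id >>> MACHINE_SHIFT) & ((1L << MACHINE_BITS) - 1); }
    static long sequenceOf(long id) { return id & MAX_SEQUENCE; }

    public static void main(String[] args) {
        SimpleSnowflake generator = new SimpleSnowflake(1, 2);
        for (int i = 0; i < 3; i++) {
            long id = generator.nextId();
            System.out.println(id + " sequence=" + sequenceOf(id));
        }
    }
}
```

Because the timestamp occupies the highest bits, IDs from one node sort by creation time, and IDs created within the same millisecond differ only in the trailing sequence.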

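Some people say the increasing pattern of snowflake IDs is not obvious. It is easier to see among IDs generated within the same millisecond, where only the trailing sequence number changes.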

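Others ask why the algorithm needs a configurable start time. Couldn't it just use the system time directly? As described above, the timestamp field covers only about 69 years. Counted from the Unix epoch, 1970 + 69 = 2039, so the algorithm would run out of timestamps in 2039. Setting the start time close to the system's launch date makes the full 69 years available.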
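The effect of the start time can be checked with a small calculation. The sketch below is only an illustration using `java.time`; the class name `SnowflakeLifetime` and the second epoch date are assumptions chosen for the example.

```java
import java.time.Instant;
import java.time.ZoneOffset;

final class SnowflakeLifetime {
    /** Last instant that still fits into the given number of millisecond timestamp bits. */
    static Instant expiry(Instant epoch, int timeBits) {
        long maxDeltaMs = (1L << timeBits) - 1;
        return epoch.plusMillis(maxDeltaMs);
    }

    /** Calendar year (UTC) in which the timestamp field overflows. */
    static int expiryYear(String epochIso, int timeBits) {
        return expiry(Instant.parse(epochIso), timeBits).atZone(ZoneOffset.UTC).getYear();
    }

    public static void main(String[] args) {
        // Unix epoch as the start time versus a start time close to the launch date.
        System.out.println(expiryYear("1970-01-01T00:00:00Z", 41));
        System.out.println(expiryYear("2024-02-05T00:00:00Z", 41));
    }
}
```

With the same 41 bits, moving the start time forward moves the expiry date forward by the same amount.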

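So far we have covered the advantages of the snowflake algorithm, but is it free of problems? It is not, and the biggest problem is clock rollback. The algorithm depends on the machine's clock to generate IDs. Suppose some IDs were generated yesterday, and today the machine clock is set back to yesterday. At some moment the millisecond value stored in the 41 bits will equal a value that was already used, so duplicate IDs can be generated. Is there a solution? Yes: use Baidu's UidGenerator.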
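To make the rollback problem concrete, here is a hedged sketch of a guard that a plain snowflake generator could put in front of its clock. It is not how UidGenerator works internally; the class `RollbackGuard`, the injectable clock, and the tolerance parameter are assumptions for illustration.

```java
import java.util.function.LongSupplier;

final class RollbackGuard {
    private final LongSupplier clock;   // injectable so the behaviour can be tested
    private final long toleranceMs;     // small rollbacks up to this size are absorbed
    private long lastTimestamp = -1L;

    RollbackGuard(LongSupplier clock, long toleranceMs) {
        this.clock = clock;
        this.toleranceMs = toleranceMs;
    }

    /** Returns a timestamp that is never smaller than one returned before. */
    synchronized long nextTimestamp() {
        long now = clock.getAsLong();
        if (now < lastTimestamp) {
            long drift = lastTimestamp - now;
            if (drift > toleranceMs) {
                throw new IllegalStateException("Clock moved backwards by " + drift + " ms");
            }
            // Small rollback: keep using the last timestamp; the caller must keep
            // incrementing its sequence so that IDs stay unique.
            now = lastTimestamp;
        }
        lastTimestamp = now;
        return now;
    }

    public static void main(String[] args) {
        RollbackGuard guard = new RollbackGuard(System::currentTimeMillis, 5);
        System.out.println(guard.nextTimestamp());
    }
}
```

A guard like this only refuses or delays generation; it cannot make IDs available while the clock is far behind, which is one reason to look at a different design.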

II. Baidu UidGenerator

1. Introduction

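Baidu UidGenerator is built on the ideas of the snowflake algorithm. Unlike the original algorithm, it lets you customize the number of bits for each component (timestamp, worker machine ID (workId), and sequence number), and the worker machine ID is produced by a user-defined strategy. uid-generator ships two implementations: DefaultUidGenerator and CachedUidGenerator. For both performance and clock-rollback reasons, CachedUidGenerator is usually the one chosen.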
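What a configurable bit layout means can be sketched as follows. This is not the library's own `BitsAllocator`; the class `BitLayout` is hypothetical, and the sketch assumes, as the project README describes, that the time field counts seconds rather than milliseconds.

```java
final class BitLayout {
    final int timeBits;
    final int workerBits;
    final int seqBits;

    BitLayout(int timeBits, int workerBits, int seqBits) {
        // One sign bit plus the three fields must fill a 64-bit long exactly.
        if (1 + timeBits + workerBits + seqBits != 64) {
            throw new IllegalArgumentException("sign bit + time + worker + sequence bits must equal 64");
        }
        this.timeBits = timeBits;
        this.workerBits = workerBits;
        this.seqBits = seqBits;
    }

    long maxDeltaSeconds() { return (1L << timeBits) - 1; }
    long maxWorkerId() { return (1L << workerBits) - 1; }
    long maxSequence() { return (1L << seqBits) - 1; }

    /** Packs the three fields into one ID: time in the high bits, sequence in the low bits. */
    long compose(long deltaSeconds, long workerId, long sequence) {
        return (deltaSeconds << (workerBits + seqBits)) | (workerId << seqBits) | sequence;
    }

    /** Approximate lifetime in 365-day years when the time field counts seconds. */
    double lifetimeYears() { return maxDeltaSeconds() / (365.0 * 24 * 3600); }

    public static void main(String[] args) {
        BitLayout layout = new BitLayout(28, 22, 13);
        System.out.println("max workerId = " + layout.maxWorkerId());
        System.out.println("lifetime (years) = " + layout.lifetimeYears());
    }
}
```

Shifting bits between the fields trades lifetime against the number of worker IDs and the number of IDs per time unit.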

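For more details, see: https://github.com/baidu/uid-generator/blob/master/README.zh_cn.md

Here are some screenshots from the official documentation:

2. Integrating UidGenerator with Spring Boot

Let's first try the native UidGenerator to get a feel for how convenient it is.

1. Add the UidGenerator dependencies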

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>com.xfvape.uid</groupId>

<artifactId>uid-generator</artifactId>

<version>0.0.4-RELEASE</version>

<!-- resolve dependency conflicts -->

<exclusions>

<exclusion>

<artifactId>mybatis</artifactId>

<groupId>org.mybatis</groupId>

</exclusion>

<exclusion>

<artifactId>mybatis-spring</artifactId>

<groupId>org.mybatis</groupId>

</exclusion>

</exclusions>

</dependency>

<!-- the native UidGenerator requires a database -->

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.3.0</version>

</dependency>

<dependency>

<groupId>com.mysql</groupId>

<artifactId>mysql-connector-j</artifactId>

<version>8.2.0</version>

</dependency>

</dependencies>

2. Run the initialization SQL

The native UidGenerator relies on the database's auto-increment ID to prevent duplicate workIDs.

DROP TABLE IF EXISTS WORKER_NODE;

CREATE TABLE WORKER_NODE

(

ID BIGINT NOT NULL AUTO_INCREMENT COMMENT 'auto increment id',

HOST_NAME VARCHAR(64) NOT NULL COMMENT 'host name',

PORT VARCHAR(64) NOT NULL COMMENT 'port',

TYPE INT NOT NULL COMMENT 'node type: ACTUAL or CONTAINER',

LAUNCH_DATE DATE NOT NULL COMMENT 'launch date',

MODIFIED TIMESTAMP NOT NULL COMMENT 'modified time',

CREATED TIMESTAMP NOT NULL COMMENT 'created time',

PRIMARY KEY(ID)

)

COMMENT='DB WorkerID Assigner for UID Generator',ENGINE = INNODB;
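The idea behind this table can be simulated without a database. In the sketch below an `AtomicLong` stands in for the auto-increment `ID` column; the class `DisposableWorkerIds` is hypothetical and only illustrates that every application start consumes one new workID, so the number of worker bits bounds the total number of starts.

```java
import java.util.concurrent.atomic.AtomicLong;

final class DisposableWorkerIds {
    private final AtomicLong autoIncrement = new AtomicLong(0); // stands in for WORKER_NODE.ID
    private final long maxWorkerId;

    DisposableWorkerIds(int workerBits) {
        this.maxWorkerId = (1L << workerBits) - 1;
    }

    /** Called once per application start: takes the next ID and never gives it back. */
    long assignOnStartup() {
        long id = autoIncrement.incrementAndGet();
        if (id > maxWorkerId) {
            throw new IllegalStateException("worker IDs exhausted: " + id + " > " + maxWorkerId);
        }
        return id;
    }

    public static void main(String[] args) {
        DisposableWorkerIds table = new DisposableWorkerIds(21);
        System.out.println("first start  -> workId " + table.assignOnStartup());
        System.out.println("second start -> workId " + table.assignOnStartup());
    }
}
```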

3. Declare the UidGeneratorConfig configuration class

import com.xfvape.uid.impl.CachedUidGenerator;
import com.xfvape.uid.worker.DisposableWorkerIdAssigner;
import com.xfvape.uid.worker.WorkerIdAssigner;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
@MapperScan(basePackages = "com.xfvape.uid.worker.dao")
public class UidGeneratorConfig {

    @Bean
    public CachedUidGenerator cachedUidGenerator(WorkerIdAssigner disposableWorkerIdAssigner) {
        CachedUidGenerator cachedUidGenerator = new CachedUidGenerator();
        cachedUidGenerator.setWorkerIdAssigner(disposableWorkerIdAssigner);
        // Number of timestamp bits (UidGenerator counts time in seconds)
        cachedUidGenerator.setTimeBits(29);
        // Number of worker machine ID bits
        cachedUidGenerator.setWorkerBits(21);
        // Number of sequence bits (IDs per second)
        cachedUidGenerator.setSeqBits(13);
        // Start time; 29 bits of seconds last for about 17 years from this date
        cachedUidGenerator.setEpochStr("2024-02-05");
        return cachedUidGenerator;
    }

    @Bean
    public DisposableWorkerIdAssigner disposableWorkerIdAssigner() {
        return new DisposableWorkerIdAssigner();
    }
}

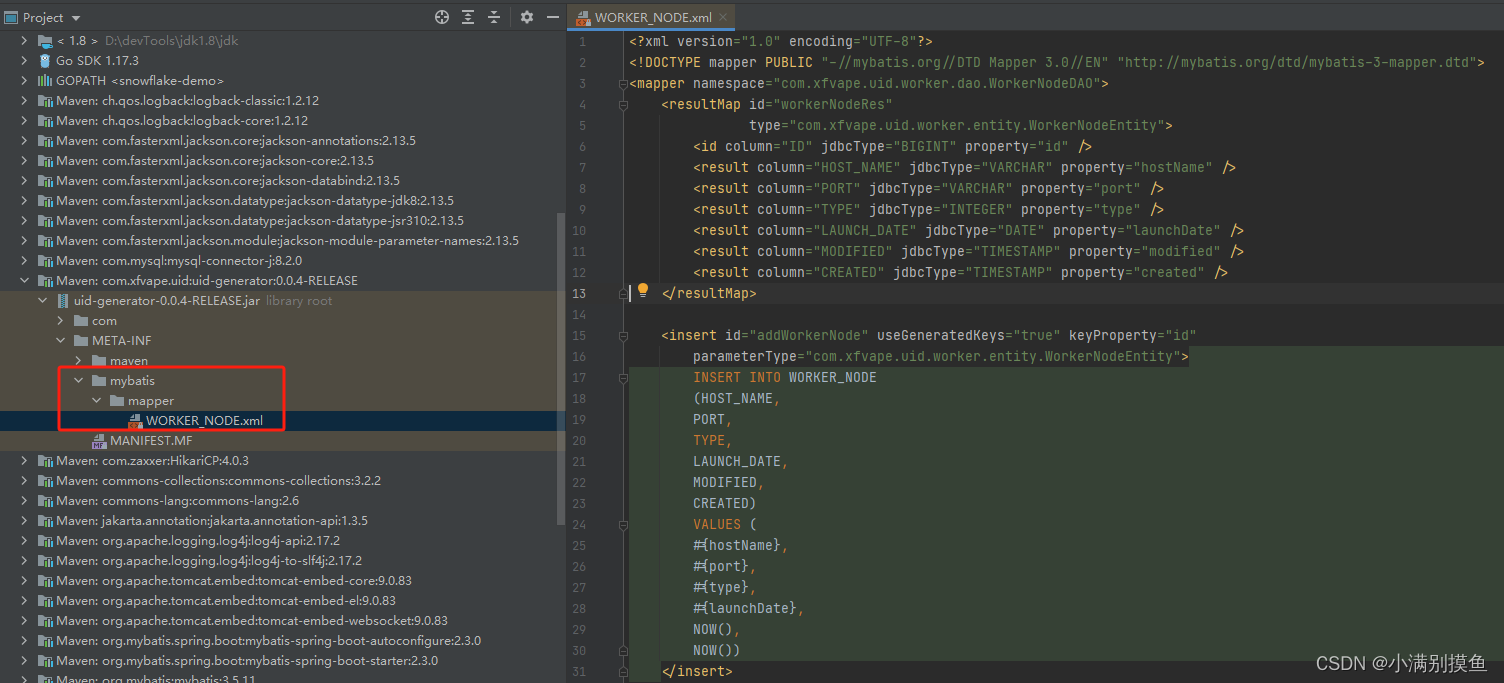

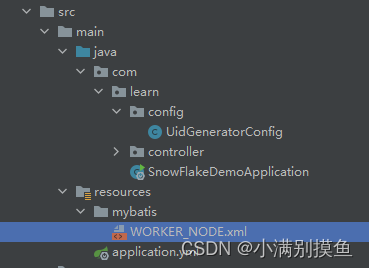

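4. Copy WORKER_NODE.xml into the project

WORKER_NODE.xml is in the META-INF directory of the uid-generator dependency:

Copy it into the project's resources/mybatis directory:

5. Configure the database connection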

server:
  port: 7090

spring:
  datasource:
    url: jdbc:mysql://127.0.0.1:3306/uid
    username: root
    password: root

mybatis:
  mapper-locations: classpath*:/mybatis/*.xml

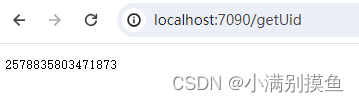

6. Run and test

- Test code:

@RestController
public class HelloController {

    @Autowired
    private CachedUidGenerator cachedUidGenerator;

    @GetMapping("getUid")
    public long hello() {
        return cachedUidGenerator.getUID();
    }
}

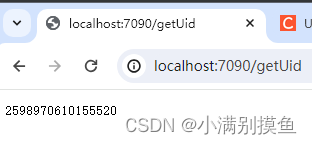

测试结果:

-

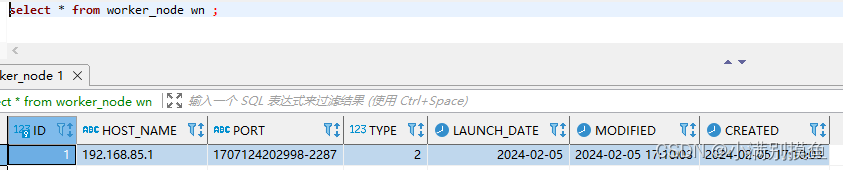

数据库:

至此,springboot集成UidGenerator生成ID就完成了。

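III. Customizing and enhancing workID acquisition in UidGenerator

You may have noticed a problem: the native UidGenerator depends heavily on a database. That guarantees workIDs are never duplicated, but it reduces flexibility; after all, many projects do not use a database. UidGenerator does support custom workID acquisition logic, so below we wrap UidGenerator in a custom starter that supports three ways of obtaining the workID: database, Redis, and a locally configured workID.

1. Create a Spring Boot project and import the dependencies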

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.18</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.jhx</groupId>

<artifactId>jhx-snowflake-spring-boot-starter</artifactId>

<version>1.0.0-SNAPSHOT</version>

<name>jhx-snowflake-spring-boot-starter</name>

<description>jhx-snowflake-spring-boot-starter</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<!-- uid-generator dependency -->

<dependency>

<groupId>com.xfvape.uid</groupId>

<artifactId>uid-generator</artifactId>

<version>0.0.4-RELEASE</version>

<!-- avoid jar conflicts -->

<exclusions>

<exclusion>

<artifactId>mybatis-spring</artifactId>

<groupId>org.mybatis</groupId>

</exclusion>

<exclusion>

<artifactId>mybatis</artifactId>

<groupId>org.mybatis</groupId>

</exclusion>

<exclusion>

<artifactId>spring-jdbc</artifactId>

<groupId>org.springframework</groupId>

</exclusion>

</exclusions>

</dependency>

<!-- support for the native database mode -->

<dependency>

<groupId>org.mybatis</groupId>

<artifactId>mybatis-spring</artifactId>

<version>2.1.0</version>

<scope>provided</scope>

</dependency>

<!-- Redis support -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<scope>provided</scope>

</dependency>

<!-- generates configuration metadata for property hints -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

</dependency>

<!-- utilities -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</dependency>

</dependencies>

</project>

2. Define the properties class

Since we are wrapping everything in a custom starter, configuration properties are essential, so we need a class to receive them:

package com.jhx.snowflake.support.property;

import com.xfvape.uid.BitsAllocator;
import com.xfvape.uid.buffer.RingBuffer;
import lombok.Data;
import lombok.Getter;
import lombok.Setter;
import org.springframework.beans.factory.InitializingBean;
import org.springframework.boot.context.properties.ConfigurationProperties;

/**
 * Configuration properties
 */
@ConfigurationProperties(prefix = JhxSnowFlakeProperties.KEY_PREFIX)
@Data
public class JhxSnowFlakeProperties implements InitializingBean {

    /** Property prefix */
    public static final String KEY_PREFIX = "jhx.sf";

    /** Whether the snowflake generator is enabled */
    private boolean enabled = true;

    /** Number of timestamp bits */
    private int timeBits = 28;

    /** Number of worker machine ID bits */
    private int workerBits = 22;

    /** Maximum worker machine ID, derived from the bit allocation */
    private long maxWorkId;

    /** Number of sequence bits */
    private int seqBits = 13;

    /** Default start time */
    private String epochStr = "2024-02-05";

    /** RingBuffer expansion power: the buffer size is shifted left by this value (3 means 8 times larger) */
    private int boostPower = 3;

    /** Percentage threshold: when the UIDs cached in the ring fall below it, new UIDs are generated to refill the ring */
    private int paddingFactor = RingBuffer.DEFAULT_PADDING_PERCENT;

    /** Interval of the background thread that periodically refills UIDs; 0 leaves it disabled */
    private Long scheduleInterval = 0L;

    /** Settings for obtaining the workID via Redis */
    private Redis redis = new Redis();

    /** Settings for a locally configured workID */
    private Local local = new Local();

    /** Settings for obtaining the workID via the database */
    private DB db = new DB();

    @Override
    public void afterPropertiesSet() throws Exception {
        // Fill in the maximum worker machine ID
        maxWorkId = new BitsAllocator(timeBits, workerBits, seqBits).getMaxWorkerId();
    }

    @Getter
    @Setter
    public static class Local {

        /** Whether the locally configured workID is used */
        private boolean enable = false;

        /** Local workID */
        private int workerId;
    }

    @Getter
    @Setter
    public static class Redis {

        /** Whether the workID is obtained via Redis */
        private boolean enable = false;

        /** Interval, in seconds, at which the application refreshes its workID timestamp in Redis */
        private int renewalTime = 30;

        /** Wait time, in seconds, between attempts to reclaim a workID from Redis */
        private int recoverWaiteTime = 6;

        /** Maximum number of attempts to reclaim a workID from Redis */
        private int maxRecoverTimes = 5;

        /** Whether workIDs that are no longer in use are reclaimed from Redis */
        private boolean enableRecoverWorkId = true;
    }

    @Getter
    @Setter
    public static class DB {

        /** Whether the workID is obtained via the database */
        private boolean enable = false;
    }
}

3. Customize CachedUidGenerator

In real projects CachedUidGenerator may need custom logic, so we can define our own CachedUidGenerator as well:

package com.jhx.snowflake.support.generator;

import com.xfvape.uid.impl.CachedUidGenerator;

public class JhxCachedUidGenerator extends CachedUidGenerator {

    /**
     * Returns the UID in hexadecimal format.
     *
     * @return the UID as a hexadecimal string, left-padded with zeros to 16 characters (64 bits)
     */
    public String getUIDToHex() {
        return String.format("%016x", getUID());
    }
}
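Fixed-width hexadecimal formatting can be tried out without the library. The helper below is a hypothetical stand-alone sketch; `HexUid` is not part of uid-generator, and the width of 16 characters simply corresponds to the 64 bits of a long.

```java
final class HexUid {
    /** Formats a 64-bit ID as 16 lowercase hexadecimal characters, zero-padded on the left. */
    static String toFixedHex(long uid) {
        return String.format("%016x", uid);
    }

    public static void main(String[] args) {
        System.out.println(toFixedHex(255L));
        System.out.println(toFixedHex(1L << 35));
    }
}
```

A fixed width keeps the string form sortable in the same order as the numeric IDs.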

4. Define the local workID logic

Setting and obtaining a local workID is simple: it is read directly from the configuration file:

package com.jhx.snowflake.support.assigner;

import com.xfvape.uid.worker.WorkerIdAssigner;
import lombok.Data;
import lombok.extern.slf4j.Slf4j;

@Data
@Slf4j
public class JhxLocalWorkerIdAssigner implements WorkerIdAssigner {

    /** workID read from the configuration file */
    private long workId;

    /** Largest workID allowed by the configured worker bits */
    private long maxWorkId;

    @Override
    public long assignWorkerId() {
        if (workId < 0 || workId > maxWorkId) {
            log.error("workId is invalid, workId: {}, maxWorkId: {}", workId, maxWorkId);
            throw new IllegalArgumentException("workId is invalid!");
        }
        return workId;
    }
}

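5. Define the Redis-based workID logic

Obtaining the workID from Redis is more involved. After a machine obtains a workID from Redis, it should renew it with a heartbeat so that the workID stays validly occupied. In a cloud-native deployment, pods may also restart because of failures; if that happens too often, all workIDs may end up occupied. In that case workIDs must be reclaimed so that stale entries do not hold them forever.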

package com.jhx.snowflake.support.assigner;

import com.jhx.snowflake.support.property.JhxSnowFlakeProperties;
import com.xfvape.uid.worker.WorkerIdAssigner;
import lombok.Data;
import lombok.SneakyThrows;
import lombok.extern.slf4j.Slf4j;
import org.apache.commons.lang3.StringUtils;
import org.apache.commons.lang3.concurrent.BasicThreadFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.core.ZSetOperations;

import javax.annotation.PreDestroy;
import java.util.Set;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

@Data
@Slf4j
public class JhxRedisWorkerIdAssigner implements WorkerIdAssigner {

    public final static String WORKER_ID_REDIS_PREFIX = "JhxFlakeWorkerId";

    private RedisTemplate<String, String> redisTemplate;

    private String appName;

    /** workID held by this application instance */
    private Long currentWorkId;

    public JhxSnowFlakeProperties jhxSnowFlakeProperties;

    private String redisCacheName;

    /** Heartbeat scheduler, kept so that it can be stopped on shutdown */
    private ScheduledExecutorService renewalExecutor;

    public JhxRedisWorkerIdAssigner(JhxSnowFlakeProperties jhxSnowFlakeProperties, RedisTemplate<String, String> redisTemplate) {
        this.jhxSnowFlakeProperties = jhxSnowFlakeProperties;
        this.redisTemplate = redisTemplate;
    }

    /**
     * Key of the sorted set that stores workIDs (members) and their last renewal time (scores).
     * All instances of one application share the key, so workIDs are unique across hosts.
     */
    private String getRedisCacheName() {
        if (StringUtils.isBlank(this.redisCacheName)) {
            this.redisCacheName = WORKER_ID_REDIS_PREFIX + ":" + appName;
        }
        return this.redisCacheName;
    }

    @Override
    public long assignWorkerId() {
        ZSetOperations<String, String> zSet = redisTemplate.opsForZSet();
        // Start probing at the current set size; lower IDs are normally taken already
        Long size = zSet.zCard(this.getRedisCacheName());
        long candidate = size == null ? 0 : size;
        long workId = -1;
        while (candidate <= jhxSnowFlakeProperties.getMaxWorkId()) {
            // add() returns true only when the member did not exist yet, so it doubles as an atomic claim
            if (Boolean.TRUE.equals(zSet.add(this.getRedisCacheName(), String.valueOf(candidate), System.currentTimeMillis()))) {
                workId = candidate;
                break;
            }
            ++candidate;
        }
        // Every ID is taken: try to take over one whose owner has stopped renewing it
        if (workId < 0 && jhxSnowFlakeProperties.getRedis().isEnableRecoverWorkId()) {
            workId = recoverWorkId();
        }
        if (workId < 0) {
            log.error("no workId available, workId: {}", workId);
            throw new IllegalArgumentException("no workId available!");
        }
        this.currentWorkId = workId;
        log.info("current workId:{} ", currentWorkId);
        renewalWorkId();
        return workId;
    }

    /**
     * Reclaims a workID whose owner has stopped renewing it.
     *
     * @return the reclaimed workID, or -1 if none could be reclaimed
     */
    @SneakyThrows
    public long recoverWorkId() {
        ZSetOperations<String, String> zSet = redisTemplate.opsForZSet();
        // renewalTime is in seconds, scores are in milliseconds; an entry is stale after two missed renewals
        long staleAfterMillis = TimeUnit.SECONDS.toMillis(jhxSnowFlakeProperties.getRedis().getRenewalTime()) * 2;
        int remainingAttempts = jhxSnowFlakeProperties.getRedis().getMaxRecoverTimes();
        while (remainingAttempts > 0) {
            // The lowest score belongs to the entry that was renewed longest ago
            Set<ZSetOperations.TypedTuple<String>> oldest = zSet.rangeWithScores(this.getRedisCacheName(), 0, 0);
            if (oldest == null || oldest.isEmpty()) {
                // The set is empty, so workID 0 is free
                if (Boolean.TRUE.equals(zSet.add(this.getRedisCacheName(), "0", System.currentTimeMillis()))) {
                    return 0;
                }
            } else {
                for (ZSetOperations.TypedTuple<String> tuple : oldest) {
                    Double score = tuple.getScore();
                    String value = tuple.getValue();
                    if (score == null || value == null || System.currentTimeMillis() - score <= staleAfterMillis) {
                        continue;
                    }
                    // remove() succeeds for exactly one caller, which then registers the ID as its own
                    Long removed = zSet.remove(this.getRedisCacheName(), value);
                    if (removed != null && removed > 0
                            && Boolean.TRUE.equals(zSet.add(this.getRedisCacheName(), value, System.currentTimeMillis()))) {
                        return Long.parseLong(value);
                    }
                }
            }
            TimeUnit.SECONDS.sleep(jhxSnowFlakeProperties.getRedis().getRecoverWaiteTime()); // wait before retrying
            --remainingAttempts;
        }
        return -1;
    }

    /**
     * Periodically refreshes this instance's entry in Redis so that its workID is not reclaimed
     */
    private void renewalWorkId() {
        renewalExecutor = new ScheduledThreadPoolExecutor(1,
                new BasicThreadFactory.Builder().namingPattern("jhx-snowFlake-renewal-workId-%d").daemon(true).build());
        renewalExecutor.scheduleAtFixedRate(() -> {
            try {
                redisTemplate.opsForZSet().add(this.getRedisCacheName(), String.valueOf(currentWorkId), System.currentTimeMillis());
                log.info("jhx snowFlake key : {},workId:{} renewal.", this.getRedisCacheName(), currentWorkId);
            } catch (Exception e) {
                // Swallow the error so that one failed heartbeat does not cancel the schedule
                log.warn("renew workId {} failed", currentWorkId, e);
            }
        }, 0, jhxSnowFlakeProperties.getRedis().getRenewalTime(), TimeUnit.SECONDS);
    }

    /**
     * Removes the workID held in Redis when the application shuts down
     */
    @PreDestroy
    public void doDestroy() {
        if (renewalExecutor != null) {
            renewalExecutor.shutdownNow();
        }
        if (currentWorkId != null && currentWorkId >= 0 && jhxSnowFlakeProperties.getRedis().isEnable()) {
            try {
                redisTemplate.opsForZSet().remove(this.getRedisCacheName(), String.valueOf(currentWorkId));
            } catch (Exception e) {
                log.debug("delete workerId from redis failed", e);
            }
        }
    }
}
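The lease idea behind the Redis assigner (claim an ID, renew it with a heartbeat, reclaim it once it goes stale) can be tried out without Redis. The following is an in-memory sketch under stated assumptions: `WorkerLeaseRegistry` is a hypothetical class, a plain map stands in for the sorted set, and time is passed in explicitly so the behaviour is deterministic.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.OptionalLong;

final class WorkerLeaseRegistry {
    private final Map<Long, Long> lastRenewalMs = new HashMap<>(); // workId -> last heartbeat
    private final long maxWorkerId;
    private final long staleAfterMs;

    WorkerLeaseRegistry(long maxWorkerId, long staleAfterMs) {
        this.maxWorkerId = maxWorkerId;
        this.staleAfterMs = staleAfterMs;
    }

    /** Claims a free ID, or reclaims the stalest one if its owner stopped renewing it. */
    synchronized OptionalLong acquire(long nowMs) {
        for (long id = 0; id <= maxWorkerId; id++) {
            if (!lastRenewalMs.containsKey(id)) {
                lastRenewalMs.put(id, nowMs);
                return OptionalLong.of(id);
            }
        }
        Map.Entry<Long, Long> oldest = null;
        for (Map.Entry<Long, Long> entry : lastRenewalMs.entrySet()) {
            if (oldest == null || entry.getValue() < oldest.getValue()) {
                oldest = entry;
            }
        }
        if (oldest != null && nowMs - oldest.getValue() > staleAfterMs) {
            oldest.setValue(nowMs);
            return OptionalLong.of(oldest.getKey());
        }
        return OptionalLong.empty();
    }

    /** Heartbeat: refreshes the lease of an ID that is currently held. */
    synchronized void renew(long workerId, long nowMs) {
        lastRenewalMs.computeIfPresent(workerId, (id, previous) -> nowMs);
    }

    /** Graceful shutdown: gives the ID back immediately. */
    synchronized void release(long workerId) {
        lastRenewalMs.remove(workerId);
    }

    public static void main(String[] args) {
        WorkerLeaseRegistry registry = new WorkerLeaseRegistry(1, 1000);
        System.out.println(registry.acquire(0));     // first instance
        System.out.println(registry.acquire(0));     // second instance
        System.out.println(registry.acquire(500));   // no free ID and nothing stale yet
    }
}
```

In a real deployment the claim and the reclaim must be atomic on the shared store, which is why the choice of Redis commands matters more than the bookkeeping shown here.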

6. Define the auto-configuration for the DB mode

When the DB mode is selected in the configuration file, the DB-related beans are registered:

package com.jhx.snowflake.boot;

import com.xfvape.uid.worker.DisposableWorkerIdAssigner;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

* Auto-configuration for obtaining the workID in the native DB mode

*/

@ConditionalOnProperty(name = "jhx.sf.db.enable", havingValue = "true")

@Configuration

@MapperScan(basePackages = "com.xfvape.uid.worker.dao")

public class DBWorkIdAssignerConfig {

@Bean

public DisposableWorkerIdAssigner disposableWorkerIdAssigner() {

return new DisposableWorkerIdAssigner();

}

}

Because the DB mode needs to scan the mapper file, we set a default mapper location to make things easier for users:

package com.jhx.snowflake.boot;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.StringUtils;

import org.springframework.boot.context.event.ApplicationEnvironmentPreparedEvent;

import org.springframework.context.ApplicationListener;

@Slf4j

public class DBWorkIdApplicationListener implements ApplicationListener<ApplicationEnvironmentPreparedEvent> {

@Override

public void onApplicationEvent(ApplicationEnvironmentPreparedEvent event) {

if ("true".equals(event.getEnvironment().getProperty("jhx.sf.db.enable"))) {

String property = event.getEnvironment().getProperty("mybatis.mapper-locations");

property = StringUtils.isBlank(property) ? "classpath*:/mybatis/uid/*.xml" : property + ",classpath*:/mybatis/uid/*.xml";

System.setProperty("mybatis.mapper-locations", property);

log.info("mybatis.mapper-locations set {}", property);

}

}

}
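The string handling in the listener above can be isolated into a small pure function, which makes it easy to test. This is a hypothetical sketch: `MapperLocations` is not part of the starter, and skipping the append when the location is already present is an extra assumption beyond the original logic.

```java
final class MapperLocations {
    static final String UID_MAPPERS = "classpath*:/mybatis/uid/*.xml";

    /** Appends the starter's mapper location to whatever the user configured. */
    static String merge(String existing) {
        if (existing == null || existing.trim().isEmpty()) {
            return UID_MAPPERS;
        }
        for (String part : existing.split(",")) {
            if (part.trim().equals(UID_MAPPERS)) {
                return existing; // already present, nothing to add
            }
        }
        return existing + "," + UID_MAPPERS;
    }

    public static void main(String[] args) {
        System.out.println(merge(null));
        System.out.println(merge("classpath*:/mybatis/*.xml"));
    }
}
```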

7. Define the auto-configuration for the Redis mode

When the Redis mode is selected in the configuration file, the Redis-related beans are registered:

package com.jhx.snowflake.boot;

import com.jhx.snowflake.support.assigner.JhxRedisWorkerIdAssigner;

import com.jhx.snowflake.support.property.JhxSnowFlakeProperties;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.redis.connection.RedisConnectionFactory;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.data.redis.serializer.RedisSerializer;

import org.springframework.data.redis.serializer.StringRedisSerializer;

/**

* Auto-configuration for obtaining the workID via Redis

*/

@Configuration

@ConditionalOnProperty(name = "jhx.sf.redis.enable", havingValue = "true")

@EnableConfigurationProperties(JhxSnowFlakeProperties.class)

public class RedisWorkIdAssignerConfig {

@Value("${spring.application.name:jhxSnowFlakeApp}")

private String appName;

@Bean

public JhxRedisWorkerIdAssigner jhxRedisWorkerIdAssigner(JhxSnowFlakeProperties jhxSnowFlakeProperties, @Qualifier("jhxSnowFlakeRedisTemplate") RedisTemplate<String, String> redisTemplate) {

JhxRedisWorkerIdAssigner jhxRedisWorkerIdAssigner = new JhxRedisWorkerIdAssigner(jhxSnowFlakeProperties, redisTemplate);

jhxRedisWorkerIdAssigner.setAppName(appName);

return jhxRedisWorkerIdAssigner;

}

@Bean("jhxSnowFlakeRedisTemplate")

public RedisTemplate<String, String> jhxSnowFlakeRedisTemplate(@Autowired(required = false) RedisConnectionFactory factory) {

RedisTemplate<String, String> redisTemplate = new RedisTemplate<>();

RedisSerializer<String> stringRedisSerializer = new StringRedisSerializer();

redisTemplate.setConnectionFactory(factory);

redisTemplate.setKeySerializer(stringRedisSerializer);

redisTemplate.setValueSerializer(stringRedisSerializer);

return redisTemplate;

}

}

8. Auto-configure the custom CachedUidGenerator

In the end, projects simply inject the custom CachedUidGenerator from the Spring container, so it needs to be auto-configured:

package com.jhx.snowflake.boot;

import com.jhx.snowflake.support.assigner.JhxLocalWorkerIdAssigner;
import com.jhx.snowflake.support.assigner.JhxRedisWorkerIdAssigner;
import com.jhx.snowflake.support.generator.JhxCachedUidGenerator;
import com.jhx.snowflake.support.property.JhxSnowFlakeProperties;
import com.xfvape.uid.buffer.RejectedPutBufferHandler;
import com.xfvape.uid.buffer.RejectedTakeBufferHandler;
import com.xfvape.uid.worker.DisposableWorkerIdAssigner;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;
import org.springframework.boot.context.properties.EnableConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
@EnableConfigurationProperties(JhxSnowFlakeProperties.class)
@ConditionalOnProperty(name = "jhx.sf.enabled", havingValue = "true", matchIfMissing = true)
public class JhxSnowFlakeAutoConfiguration {

    @Autowired(required = false)
    private RejectedPutBufferHandler rejectedPutBufferHandler;

    @Autowired(required = false)
    private RejectedTakeBufferHandler rejectedTakeBufferHandler;

    /** DB mode */
    @Autowired(required = false)
    private DisposableWorkerIdAssigner disposableWorkerIdAssigner;

    /** Redis mode */
    @Autowired(required = false)
    private JhxRedisWorkerIdAssigner jhxRedisWorkerIdAssigner;

    @Bean
    public JhxCachedUidGenerator jhxCachedUidGenerator(JhxSnowFlakeProperties jhxSnowFlakeProperties) {
        JhxCachedUidGenerator jhxCachedUidGenerator = new JhxCachedUidGenerator();
        jhxCachedUidGenerator.setBoostPower(jhxSnowFlakeProperties.getBoostPower());
        jhxCachedUidGenerator.setPaddingFactor(jhxSnowFlakeProperties.getPaddingFactor());
        if (jhxSnowFlakeProperties.getScheduleInterval() > 0) {
            jhxCachedUidGenerator.setScheduleInterval(jhxSnowFlakeProperties.getScheduleInterval());
        }
        if (rejectedPutBufferHandler != null) {
            jhxCachedUidGenerator.setRejectedPutBufferHandler(rejectedPutBufferHandler);
        }
        if (rejectedTakeBufferHandler != null) {
            jhxCachedUidGenerator.setRejectedTakeBufferHandler(rejectedTakeBufferHandler);
        }
        jhxCachedUidGenerator.setTimeBits(jhxSnowFlakeProperties.getTimeBits());
        jhxCachedUidGenerator.setWorkerBits(jhxSnowFlakeProperties.getWorkerBits());
        jhxCachedUidGenerator.setSeqBits(jhxSnowFlakeProperties.getSeqBits());
        jhxCachedUidGenerator.setEpochStr(jhxSnowFlakeProperties.getEpochStr());
        // The DB mode takes priority, then the local mode, then Redis
        if (jhxSnowFlakeProperties.getDb().isEnable()) {
            jhxCachedUidGenerator.setWorkerIdAssigner(disposableWorkerIdAssigner);
        } else if (jhxSnowFlakeProperties.getLocal().isEnable()) {
            jhxCachedUidGenerator.setWorkerIdAssigner(jhxLocalWorkerIdAssigner(jhxSnowFlakeProperties));
        } else if (jhxSnowFlakeProperties.getRedis().isEnable()) {
            jhxCachedUidGenerator.setWorkerIdAssigner(jhxRedisWorkerIdAssigner);
        } else {
            throw new IllegalArgumentException("No workID mode is enabled: set jhx.sf.db.enable, jhx.sf.local.enable or jhx.sf.redis.enable to true");
        }
        return jhxCachedUidGenerator;
    }

    /**
     * Local mode: the workID comes from the configuration file
     *
     * @param jhxSnowFlakeProperties the starter's configuration properties
     * @return the assigner that returns the configured workID
     */
    @Bean
    @ConditionalOnProperty(value = "jhx.sf.local.enable", havingValue = "true")
    public JhxLocalWorkerIdAssigner jhxLocalWorkerIdAssigner(JhxSnowFlakeProperties jhxSnowFlakeProperties) {
        JhxLocalWorkerIdAssigner jhxLocalWorkerIdAssigner = new JhxLocalWorkerIdAssigner();
        jhxLocalWorkerIdAssigner.setWorkId(jhxSnowFlakeProperties.getLocal().getWorkerId());
        jhxLocalWorkerIdAssigner.setMaxWorkId(jhxSnowFlakeProperties.getMaxWorkId());
        return jhxLocalWorkerIdAssigner;
    }
}
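The priority among the three modes (DB first, then local, then Redis) is easy to get wrong when several flags are enabled at once, so it can help to express it as a pure function. The sketch below is hypothetical; `WorkIdMode` is not part of the starter and only mirrors the if/else chain.

```java
final class WorkIdMode {
    enum Mode { DB, LOCAL, REDIS }

    /** Picks the workID mode with the priority DB > LOCAL > REDIS. */
    static Mode choose(boolean dbEnabled, boolean localEnabled, boolean redisEnabled) {
        if (dbEnabled) {
            return Mode.DB;
        }
        if (localEnabled) {
            return Mode.LOCAL;
        }
        if (redisEnabled) {
            return Mode.REDIS;
        }
        throw new IllegalArgumentException("no workID mode enabled");
    }

    public static void main(String[] args) {
        System.out.println(choose(false, true, true));
    }
}
```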

9. Register the auto-configuration in spring.factories

org.springframework.context.ApplicationListener=com.jhx.snowflake.boot.DBWorkIdApplicationListener

org.springframework.boot.autoconfigure.EnableAutoConfiguration=\

com.jhx.snowflake.boot.DBWorkIdAssignerConfig,com.jhx.snowflake.boot.JhxSnowFlakeAutoConfiguration,com.jhx.snowflake.boot.RedisWorkIdAssignerConfig

10. Final project structure

IV. Testing the enhanced UidGenerator

1. Testing the native DB mode

- Dependencies:

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>com.jhx</groupId>
        <artifactId>jhx-snowflake-spring-boot-starter</artifactId>
        <version>1.0.0-SNAPSHOT</version>
    </dependency>
    <!-- the native UidGenerator requires a database -->
    <dependency>
        <groupId>org.mybatis.spring.boot</groupId>
        <artifactId>mybatis-spring-boot-starter</artifactId>
        <version>2.3.0</version>
    </dependency>
    <dependency>
        <groupId>com.mysql</groupId>
        <artifactId>mysql-connector-j</artifactId>
        <version>8.2.0</version>
    </dependency>
</dependencies>

- Test code:

@RestController
public class HelloController {

    @Autowired
    private JhxCachedUidGenerator cachedUidGenerator;

    @GetMapping("getUid")
    public long hello() {
        return cachedUidGenerator.getUID();
    }
}

- Configuration file:

server:
  port: 7090

spring:
  datasource:
    url: jdbc:mysql://127.0.0.1:3306/uid
    username: root
    password: root

jhx:
  sf:
    enabled: true
    db:
      enable: true

- Test result:

2. Testing the local workID mode

- Dependencies:

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>com.jhx</groupId>
        <artifactId>jhx-snowflake-spring-boot-starter</artifactId>
        <version>1.0.0-SNAPSHOT</version>
    </dependency>
</dependencies>

- Test code:

@RestController
public class HelloController {

    @Autowired
    private JhxCachedUidGenerator cachedUidGenerator;

    @GetMapping("getUid")
    public long hello() {
        return cachedUidGenerator.getUID();
    }
}

- Configuration file:

server:
  port: 7090

jhx:
  sf:
    enabled: true
    local:
      enable: true
      worker-id: 0

- Test result:

3. redis方式测试

-

导包

<dependencies> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-web</artifactId> </dependency> <dependency> <groupId>com.jhx</groupId> <artifactId>jhx-snowflake-spring-boot-starter</artifactId> <version>1.0.0-SNAPSHOT</version> </dependency> <!-- redis相关依赖--> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-data-redis</artifactId> </dependency> </dependencies> -

测试代码

package com.learn.controller; import com.jhx.snowflake.support.generator.JhxCachedUidGenerator; import com.xfvape.uid.impl.CachedUidGenerator; import org.springframework.beans.factory.annotation.Autowired; import org.springframework.web.bind.annotation.GetMapping; import org.springframework.web.bind.annotation.RestController; @RestController public class HelloController { @Autowired private JhxCachedUidGenerator cachedUidGenerator; @GetMapping("getUid") public long hello() { return cachedUidGenerator.getUID(); } } -

配置文件

server: port: 7090 jhx: sf: enabled: true redis: enable: true spring: redis: host: 127.0.0.1 port: 6379 -

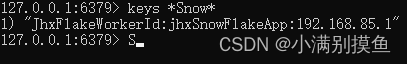

测试结果

-

Redis查询key

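V. Summary

That is how a custom starter can wrap UidGenerator to support obtaining the workID via the native DB mode, a locally configured value, or Redis. If you are interested, give it a try yourself.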
