Template Matching Method

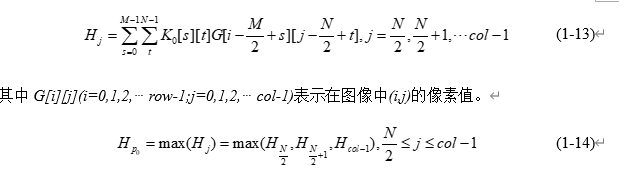

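The direction template method, proposed by Hu Bin et al., detects the center of a structured-light stripe using templates of variable direction. It is an improvement on the gray-level centroid method [5]. When line structured light is projected onto a rough object surface, the stripe shifts and deforms. Where accuracy requirements are modest, these shifts can be approximated by four directions: horizontal, vertical, tilted 45° to the left and tilted 45° to the right. One template is built for each of these four shift modes (the four direction templates are given in Eqs. 1-8, 1-9, 1-10, 1-11 and 1-2). The pixel blocks in each row of the stripe cross-section are convolved with each of the four templates, and the center of the pixel block with the largest response is taken as the stripe center point for that row. Let the image have row rows and col columns, and let the template slide along a row i of the image. With $I(i,j)$ the gray level and $K_0$ a $(2r+1)\times(2r+1)$ template indexed from $-r$ to $r$, the response of $K_0$ at row $i$, column $j$ is:

$$H_{p0}(i,j)=\sum_{m=-r}^{r}\sum_{n=-r}^{r} I(i+m,\,j+n)\,K_0(m,n)$$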
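A minimal NumPy sketch of the row-wise procedure just described. It assumes one-pixel-wide 13×13 line templates listed in the same order as the code's T1 to T4 (vertical, horizontal, main diagonal, anti-diagonal). The helper names `direction_templates`, `response` and `row_center` are hypothetical. The sketch slides every template along row i and returns the column and template index of the largest response $H_p$.

```python
import numpy as np


def direction_templates(size=13):
    """Four line templates: K0 vertical, K1 horizontal, K2 main diagonal (\\), K3 anti-diagonal (/)."""
    r = size // 2
    k0 = np.zeros((size, size))
    k0[:, r] = 1
    k1 = np.zeros((size, size))
    k1[r, :] = 1
    k2 = np.eye(size)
    k3 = np.fliplr(np.eye(size))
    return [k0, k1, k2, k3]


def response(gray, i, j, kernel):
    """H = sum of the element-wise product of the kernel and the patch centred at (i, j)."""
    r = kernel.shape[0] // 2
    patch = gray[i - r:i + r + 1, j - r:j + r + 1]
    return float(np.sum(patch * kernel))


def row_center(gray, i, templates):
    """Slide every template along row i; return (column j, template index p) of the maximum response."""
    r = templates[0].shape[0] // 2
    best_h, best_j, best_p = -1.0, None, None
    for j in range(r, gray.shape[1] - r):   # only columns with a full window
        for p, kernel in enumerate(templates):
            h = response(gray, i, j, kernel)
            if h > best_h:
                best_h, best_j, best_p = h, j, p
    return best_j, best_p
```

For a vertical stripe whose brightest column is 17, scanning row 20 picks column 17 and template 0 (vertical). Note that a stripe lying along the scanned row gives the same response at every column, so the row-wise scan suits stripes that cross the rows.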

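Templates $K_1$, $K_2$ and $K_3$ likewise give responses $H_{p1}$, $H_{p2}$ and $H_{p3}$. If $H_p=\max\{H_{p0},H_{p1},H_{p2},H_{p3}\}$, the laser stripe center on row $i$ is the point $P$ at which this maximum response occurs. The direction template method suppresses white-noise interference when extracting the stripe centerline, and to some extent it can also be used to repair and join broken stripes. However, it is limited by its small set of template directions: on a real object with a complex surface texture, a stripe may be displaced in many directions, producing height offsets that four templates cannot follow. For measurements that demand higher accuracy, four direction templates no longer meet the requirement, yet adding more templates further increases computation and run time, which reduces processing efficiency.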
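To make the accuracy/cost trade-off concrete, the sketch below generates n one-pixel line templates evenly spaced over 0° to 180°. The helpers `line_template` and `template_bank` are hypothetical and are not part of the method as described above. The angle convention is an assumption: degrees counter-clockwise from the image x axis, with rows growing downward. With n = 4 the bank reproduces the four templates used in the code below.

```python
import numpy as np


def line_template(theta_deg, size=13):
    """Rasterise a one-pixel line through the template centre at angle theta (degrees)."""
    r = size // 2
    kernel = np.zeros((size, size), dtype=np.int32)
    c, s = np.cos(np.deg2rad(theta_deg)), np.sin(np.deg2rad(theta_deg))
    for t in range(-r, r + 1):
        if abs(c) >= abs(s):      # closer to horizontal: exactly one pixel per column
            dx, dy = t, t * s / c
        else:                     # closer to vertical: exactly one pixel per row
            dx, dy = t * c / s, t
        # Rows grow downward, so a positive dy moves up in the image
        kernel[r - int(round(dy)), r + int(round(dx))] = 1
    return kernel


def template_bank(n, size=13):
    """n line templates at 0, 180/n, 2*180/n, ... degrees."""
    return [line_template(180.0 * k / n, size) for k in range(n)]
```

Each template contains `size` ones, so evaluating all responses at one pixel costs n × size multiply-adds: moving from 4 to 8 directions with 13-pixel lines doubles the work from 52 to 104 per pixel.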

Python code

```python
# Direction template method
# author luoye 2022/1/21
# Author's note: direction template matching did not work well.

import os
import time

import cv2
import numpy as np
import tqdm
from scipy import ndimage
from skimage import morphology


def imgConvolve(image, kernel):
    '''
    :param image: image matrix (2-D)
    :param kernel: filter window (odd height and width)
    :return: filtered matrix of the same size (zero padding)
    '''
    img_h, img_w = image.shape[:2]
    kernel_h, kernel_w = kernel.shape[:2]
    # Padding
    padding_h = (kernel_h - 1) // 2
    padding_w = (kernel_w - 1) // 2
    # Allocate the padded image and copy the input into its centre
    img_padding = np.zeros((img_h + 2 * padding_h, img_w + 2 * padding_w))
    img_padding[padding_h:padding_h + img_h, padding_w:padding_w + img_w] = image
    # Slide the window; the kernel is not flipped, so this is a correlation,
    # which equals convolution for symmetric kernels
    image_convolve = np.zeros(image.shape)
    for i in range(padding_h, padding_h + img_h):
        for j in range(padding_w, padding_w + img_w):
            window = img_padding[i - padding_h:i + padding_h + 1, j - padding_w:j + padding_w + 1]
            image_convolve[i - padding_h][j - padding_w] = int(np.sum(window * kernel))
    return image_convolve


def imgGaussian(sigma):
    '''
    :param sigma: standard deviation σ (integer)
    :return: normalised (2σ+1)×(2σ+1) Gaussian filter kernel
    '''
    ax = np.arange(-sigma, sigma + 1)
    x, y = np.meshgrid(ax, ax, indexing='ij')
    gaussian_mat = np.exp(-0.5 * (x ** 2 + y ** 2) / sigma ** 2)
    # Normalise so that filtering preserves brightness; the raw kernel sums to
    # far more than 1 and overflows 8-bit images
    return gaussian_mat / gaussian_mat.sum()


def imgAverageFilter(image, kernel):
    '''
    :param image: image matrix
    :param kernel: filter window
    :return: mean-filtered matrix
    '''
    return imgConvolve(image, kernel) * (1.0 / kernel.size)


# 13×13 direction templates (one-pixel-wide lines through the centre):
# T1 vertical, T2 horizontal, T3 main diagonal (\), T4 anti-diagonal (/)
TSIZE = 13
T1 = np.zeros((TSIZE, TSIZE), dtype=np.int32)
T1[:, TSIZE // 2] = 1
T2 = np.zeros((TSIZE, TSIZE), dtype=np.int32)
T2[TSIZE // 2, :] = 1
T3 = np.eye(TSIZE, dtype=np.int32)
T4 = np.fliplr(T3).copy()
TEMPLATES = [T1, T2, T3, T4]
# Sampling step (d_row, d_col) across the stripe for each template: a vertical
# stripe (T1) is sampled along its row, a horizontal one (T2) along its column,
# and a diagonal stripe along the opposite diagonal
CROSS_STEP = [(0, 1), (1, 0), (-1, 1), (1, 1)]


def centroid_across(gray, u, v, step, half=5):
    '''
    Gray-level centroid of the 2*half+1 pixels centred at (u, v) along step.
    :return: (x, y) = (column, row) in sub-pixel units, or None if all samples are 0
    '''
    ks = np.arange(-half, half + 1)
    rows = u + ks * step[0]
    cols = v + ks * step[1]
    weights = gray[rows, cols].astype(np.float64)
    total = weights.sum()
    if total == 0:
        return None
    return float(weights @ cols / total), float(weights @ rows / total)


def DTM(img, thresh=40):
    '''
    :param img: BGR image as returned by cv2.imread
    :param thresh: gray levels below this value are treated as noise
    :return: (copy of img with centre points drawn in red, binary centre-line image)
    '''
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Suppress background noise; keep the thresholded gray image for the centroid step
    gray[gray < thresh] = 0
    image0 = gray.copy()

    # Gaussian smoothing in floating point (avoids uint8 overflow), then log compression
    blurred = ndimage.convolve(gray.astype(np.float32), imgGaussian(5), mode='nearest')
    logged = np.log1p(blurred)
    logged = np.uint8(255 * logged / max(float(logged.max()), 1e-6))

    # Otsu binarisation, dilation and closing to fill small gaps in the stripe
    _, binary = cv2.threshold(logged, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
    binary = cv2.dilate(binary, np.ones((3, 3), np.uint8), iterations=1)
    kernel1 = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))
    binary = cv2.morphologyEx(binary, cv2.MORPH_CLOSE, kernel1)

    # Thin the stripe to a one-pixel skeleton and keep its gray levels
    skeleton = morphology.skeletonize(binary > 0)
    image1 = image0 * skeleton

    # Responses H1..H4 of the four templates, each on a window centred at (u, v)
    responses = np.stack([
        ndimage.correlate(image1.astype(np.float64), t.astype(np.float64), mode='constant')
        for t in TEMPLATES
    ])

    row, col = image1.shape
    r = TSIZE // 2
    CenterPoint = []
    for u, v in zip(*np.nonzero(image1)):
        # Skip skeleton pixels too close to the border for a full window
        if not (r <= u < row - r and r <= v < col - r):
            continue
        h = int(np.argmax(responses[:, u, v]))   # best-matching direction
        c = centroid_across(image0, u, v, CROSS_STEP[h])
        if c is not None:
            CenterPoint.append((int(round(c[0])), int(round(c[1]))))

    result = img.copy()
    newimage = np.zeros_like(gray)
    for x, y in CenterPoint:
        result[y, x] = (0, 0, 255)   # red in BGR
        newimage[y, x] = 255
    return result, newimage


if __name__ == "__main__":
    image_path = "./Jay/images/"
    save_path = "./Jay/dmt/"
    os.makedirs(save_path, exist_ok=True)

    sum_time = 0.0
    processed = 0
    for name in tqdm.tqdm(os.listdir(image_path)):
        image = cv2.imread(os.path.join(image_path, name))
        if image is None:   # skip files OpenCV cannot read
            continue
        start_time = time.time()
        image_c, line = DTM(image, thresh=40)
        sum_time += time.time() - start_time
        processed += 1
        cv2.imwrite(os.path.join(save_path, name), image_c)
        cv2.imwrite(os.path.join(save_path, os.path.splitext(name)[0] + "_line.png"), line)

    average_time = sum_time / max(processed, 1)
    print("Average one image time: ", average_time)
```
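The code above refines each skeleton point with the gray-level centroid method. It weights the coordinates of a short line of pixels through the point by their gray levels. Sampled perpendicular to the stripe, this yields a sub-pixel center. The minimal NumPy sketch below isolates that step and checks it on a synthetic Gaussian stripe profile. The name `gray_centroid`, the (row, column) step convention and the 11-sample window (`half=5`) are assumptions for illustration.

```python
import numpy as np


def gray_centroid(gray, u, v, du, dv, half=5):
    """Intensity-weighted centroid of gray[u + k*du, v + k*dv] for k = -half..half.

    (du, dv) is the sampling step in (row, column); it should point across the stripe.
    Returns (row, column) in sub-pixel units, or None if every sample is zero.
    """
    ks = np.arange(-half, half + 1)
    rows, cols = u + ks * du, v + ks * dv
    w = gray[rows, cols].astype(np.float64)
    total = w.sum()
    if total == 0:
        return None
    return float(w @ rows / total), float(w @ cols / total)
```

On a vertical stripe whose true center lies at column 20.3, sampling row 10 around column 20 returns a column close to 20.3, whereas the template center alone could only report the integer column 20.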
