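I wrote this document while working through the installation, and I tested every step myself.

Environment: CentOS 7 + three virtual machines + JDK 8 (1.8.0_161) + MySQL 5.7.38 + Hadoop 3.2.2 + Hive 3.1.2 + Sqoop 1.4.7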

I. Installing the JDK on Linux

1. Original plan: use the bundled JDK

1) Check whether a JDK is present

[root@master ~]# java -version

openjdk version "1.8.0_262"

OpenJDK Runtime Environment (build 1.8.0_262-b10)

OpenJDK 64-Bit Server VM (build 25.262-b10, mixed mode)

2) Find where java is located

[root@master ~]# echo $JAVA_HOME

[root@master ~]# which java

/usr/bin/java

3) Check where the java symlink points

[root@master ~]# ls -l /usr/bin/java

lrwxrwxrwx. 1 root root 22 Jun 3 02:18 /usr/bin/java -> /etc/alternatives/java

4) Follow the symlink to find the JDK installation directory

[root@master ~]# ls -l /etc/alternatives/java

lrwxrwxrwx. 1 root root 71 Jun 3 02:18 /etc/alternatives/java -> /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64/jre/bin/java

[root@master ~]#
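
Steps 2) to 4) follow the symlink chain by hand. As a minimal sketch, the same lookup can be done in one go with `readlink -f`. The function names below are hypothetical, and the suffix stripping assumes the JDK 8 directory layout shown above (`.../jre/bin/java` or `.../bin/java`).

```shell
# Hypothetical helper (not part of the original steps): turn the real path of
# a java binary into the JDK home directory by stripping the known suffixes.
java_home_from_binary() {
    bin_path="$1"
    home="${bin_path%/bin/java}"   # drop the trailing /bin/java
    home="${home%/jre}"            # drop a trailing /jre, if present (JDK 8 layout)
    printf '%s\n' "$home"
}

# Resolve every symlink behind the java found on PATH, then derive the home.
detect_java_home() {
    java_home_from_binary "$(readlink -f "$(command -v java)")"
}
```

Running `detect_java_home` on a machine with java on the PATH prints a candidate value for JAVA_HOME; it is worth comparing it with the directory found manually before using it.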

5) Configure the environment variables

[root@master ~]# vim /etc/profile

Add:

# JDK

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64

export PATH=$JAVA_HOME/bin/:$PATH

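export CLASSPATH=.:$JAVA_HOME/lib

Later I read that OpenJDK differs from the downloaded Oracle JDK in license and features. I did not verify this myself, but to avoid odd errors later on, I removed OpenJDK and installed the downloaded JDK instead.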

2. Uninstall and reinstall the JDK

1) Check whether a JDK is present

[root@master ~]# java -version

openjdk version "1.8.0_262"

OpenJDK Runtime Environment (build 1.8.0_262-b10)

OpenJDK 64-Bit Server VM (build 25.262-b10, mixed mode)

2) List the installed Java packages

[root@master ~]# rpm -qa | grep java

tzdata-java-2020a-1.el7.noarch

libvirt-java-devel-0.4.9-4.el7.noarch

java-1.6.0-openjdk-1.6.0.41-1.13.13.1.el7_3.x86_64

java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64

python-javapackages-3.4.1-11.el7.noarch

java-1.8.0-openjdk-devel-1.8.0.262.b10-1.el7.x86_64

java-1.7.0-openjdk-headless-1.7.0.261-2.6.22.2.el7_8.x86_64

libvirt-java-0.4.9-4.el7.noarch

javassist-3.16.1-10.el7.noarch

javamail-1.4.6-8.el7.noarch

java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64

java-1.7.0-openjdk-devel-1.7.0.261-2.6.22.2.el7_8.x86_64

java-1.8.0-openjdk-headless-1.8.0.262.b10-1.el7.x86_64

java-1.6.0-openjdk-devel-1.6.0.41-1.13.13.1.el7_3.x86_64

javapackages-tools-3.4.1-11.el7.noarch

3) Uninstall OpenJDK

[root@master ~]# yum remove '*openjdk*'

4) Verify the removal

[root@master ~]# rpm -qa | grep java

tzdata-java-2020a-1.el7.noarch

python-javapackages-3.4.1-11.el7.noarch

javapackages-tools-3.4.1-11.el7.noarch

# or

[root@master ~]# java -version

-bash: /usr/bin/java: No such file or directory

5) Download the JDK

6) Install the JDK

[root@master jdk]# tar -zxvf jdk-8u161-linux-x64.tar.gz # extract the archive with tar

[root@master jdk]# vim /etc/profile

Add:

# JDK

export JAVA_HOME=/opt/jdk/jdk1.8.0_161

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH

export JAVA_PATH=${JAVA_HOME}/bin:${JRE_HOME}/bin

export PATH=$PATH:${JAVA_PATH}

[root@master jdk]# source /etc/profile

[root@master jdk]# java -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)
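
This guide edits /etc/profile by hand several times, and repeating a step can leave duplicate export lines behind. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper name, not something the original steps use.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so the step can be repeated safely.
append_once() {
    file="$1"
    line="$2"
    # -x: match the whole line, -F: treat the text literally, not as a regex
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example usage (commented out because it modifies a system file):
# append_once /etc/profile 'export JAVA_HOME=/opt/jdk/jdk1.8.0_161'
```

Lines that reference other variables should be passed in single quotes, as in the example, so that they land in the file unexpanded.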

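Note: if java -version still reports the old version after reinstalling the JDK on Linux, /usr/bin/java is most likely still pointing at the old binary. Check it and re-create the symlink: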

[root@master ~]# java -version

java version "1.8.0_131"

Java(TM) SE Runtime Environment (build 1.8.0_131-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)

[root@master ~]# vim /etc/profile

[root@master ~]# which java

/usr/bin/java

[root@master ~]# which javac

/usr/bin/javac

[root@master ~]# rm -rf /usr/bin/java # (removing javac as well is optional)

[root@master ~]# ln -s /opt/jdk/jdk1.8.0_161/bin/java /usr/bin/java # (linking javac as well is optional)

[root@master ~]# java -version

java version "1.8.0_161"

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)

[root@master ~]#

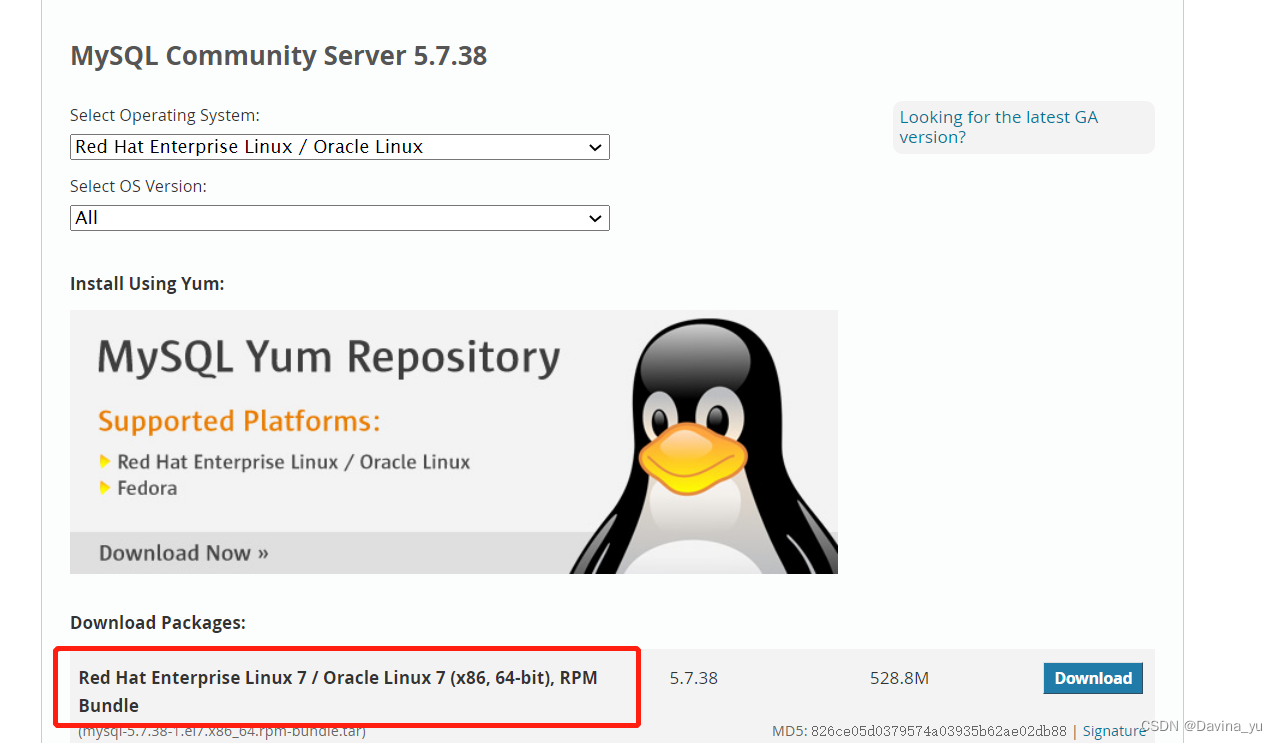

II. Installing MySQL on Linux from the RPM bundle

1. Upload and extract the archive

[root@master mysql]# pwd

/opt/mysql

[root@master mysql]# tar -xvf mysql-5.7.38-1.el7.x86_64.rpm-bundle.tar

mysql-community-client-5.7.38-1.el7.x86_64.rpm

mysql-community-common-5.7.38-1.el7.x86_64.rpm

mysql-community-devel-5.7.38-1.el7.x86_64.rpm

mysql-community-embedded-5.7.38-1.el7.x86_64.rpm

mysql-community-embedded-compat-5.7.38-1.el7.x86_64.rpm

mysql-community-embedded-devel-5.7.38-1.el7.x86_64.rpm

mysql-community-libs-5.7.38-1.el7.x86_64.rpm

mysql-community-libs-compat-5.7.38-1.el7.x86_64.rpm

mysql-community-server-5.7.38-1.el7.x86_64.rpm

mysql-community-test-5.7.38-1.el7.x86_64.rpm

2. Check the environment and remove any existing MySQL/MariaDB

[root@master mysql]# rpm -qa | grep -i mysql

[root@master mysql]# rpm -qa | grep mariadb

mariadb-devel-5.5.68-1.el7.x86_64

mariadb-libs-5.5.68-1.el7.x86_64

[root@master mysql]# rpm -e mariadb-libs-5.5.68-1.el7.x86_64 --nodeps

[root@master mysql]# rpm -e mariadb-devel-5.5.68-1.el7.x86_64 --nodeps

[root@master mysql]# rpm -qa | grep mariadb

[root@master mysql]#

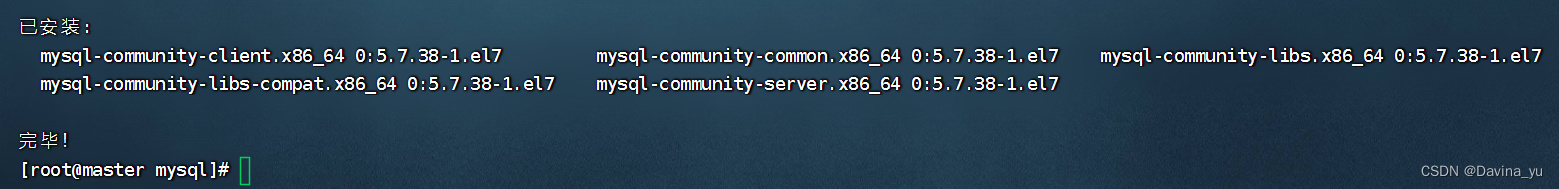

3. Install the MySQL packages

[root@master mysql]# yum install -y mysql-community-{server,client,common,libs}-*

4. Start MySQL

[root@master mysql]# systemctl start mysqld

[root@master mysql]# systemctl status mysqld

● mysqld.service - MySQL Server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2022-07-11 13:44:11 CST; 7s ago

Docs: man:mysqld(8)

http://dev.mysql.com/doc/refman/en/using-systemd.html

Process: 65940 ExecStart=/usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid $MYSQLD_OPTS (code=exited, status=0/SUCCESS)

Process: 65715 ExecStartPre=/usr/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)

Main PID: 65942 (mysqld)

Tasks: 27

CGroup: /system.slice/mysqld.service

└─65942 /usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid

Jul 11 13:44:06 master systemd[1]: Starting MySQL Server...

Jul 11 13:44:11 master systemd[1]: Started MySQL Server.

[root@master mysql]#

5. Get the temporary password

[root@master mysql]# grep password /var/log/mysqld.log

2022-07-11T05:44:08.653957Z 1 [Note] A temporary password is generated for root@localhost: qR(s.(ck9tt<
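
When scripting the installation, the password can be cut out of that log line instead of being copied by eye. The sketch below assumes the log line format shown above; `extract_temp_password` is a hypothetical helper name.

```shell
# Hypothetical helper: read mysqld log text on stdin and print the most
# recent temporary root password (the text after the fixed log message).
extract_temp_password() {
    sed -n 's/^.*A temporary password is generated for root@localhost: //p' | tail -n 1
}

# Example usage:
# extract_temp_password < /var/log/mysqld.log
```

`tail -n 1` keeps only the last match, which matters if the data directory was initialized more than once and the log holds several such lines.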

6. Log in to MySQL with the temporary password

[root@master mysql]# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.38

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

7. Change the login password

mysql> alter user 'root'@'localhost' identified by 'QWE@123asd';

Query OK, 0 rows affected (0.00 sec)

8. Verify

mysql> show databases; # list the databases

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

mysql>

[1]+ Stopped mysql -uroot -p

[root@master mysql]# mysql -uroot -pQWE@123asd # log in again

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 4

Server version: 5.7.38 MySQL Community Server (GPL)

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

III. Hadoop cluster

1. Disable the firewall

[root@master opt]# systemctl status firewalld

[root@master opt]# systemctl stop firewalld

[root@master opt]# systemctl disable firewalld

[root@master opt]# systemctl status firewalld

2. Configure passwordless SSH login

[root@master ~]# cd /root/.ssh/ # enter the /root/.ssh directory

[root@master .ssh]# ll

total 12

-rw-------. 1 root root 601 Jul 11 16:20 authorized_keys

-rw-------. 1 root root 668 Jul 11 16:19 id_dsa

-rw-r--r--. 1 root root 601 Jul 11 16:19 id_dsa.pub

[root@master .ssh]# rm -rf *

[root@master .ssh]# ll

total 0

# Generate master's key pair (private key and public key)

[root@master .ssh]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:r6RfYMt+wBjjpCZjdYfez5HCw1UaVpl2R2a4/RY4ybE root@master

The key's randomart image is:

+---[RSA 2048]----+

| . |

| +.. |

| . o +. =o |

| . * * . .E...|

| . * XSo . . o|

| + o *o=+o o|

| . + . =+=oo . |

| .+=o= |

| ..++ |

+----[SHA256]-----+

[root@master .ssh]# ll # id_rsa is the private key, id_rsa.pub is the public key

total 8

-rw-------. 1 root root 1679 Jul 12 08:53 id_rsa

-rw-r--r--. 1 root root 393 Jul 12 08:53 id_rsa.pub

[root@master .ssh]# cat id_rsa.pub >authorized_keys # write the public key (id_rsa.pub) into the authorization file authorized_keys

[root@master .ssh]# ll

total 12

-rw-r--r--. 1 root root 393 Jul 12 08:54 authorized_keys

-rw-------. 1 root root 1679 Jul 12 08:53 id_rsa

-rw-r--r--. 1 root root 393 Jul 12 08:53 id_rsa.pub

[root@master .ssh]# scp authorized_keys root@node1:/root/.ssh/ # copy master's authorized_keys to node1 (this overwrites any authorized_keys already there; it does not append)

The authenticity of host 'node1 (192.168.1.110)' can't be established.

ECDSA key fingerprint is SHA256:WKi3J1gASDIQ1Prg0wwwrlM7U41mcd83ZqpB1F1lo.

ECDSA key fingerprint is MD5:d0:14:2f:be:38:e9:28:85:c3:2d:6d:85:79:f7:32:6c.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1,192.168.1.110' (ECDSA) to the list of known hosts.

root@node1's password:

authorized_keys 100% 393 330.2KB/s 00:00

[root@master .ssh]# scp authorized_keys root@node2:/root/.ssh/

The authenticity of host 'node2 (192.168.1.120)' can't be established.

ECDSA key fingerprint is SHA256:WKi3J1gZASDIQ1Prg0wwwrlM7U41mcd83ZqpB1F1lo.

ECDSA key fingerprint is MD5:d0:14:2f:be:38:e9:21:85:c1:2d:6d:85:79:f7:32:6c.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2,192.168.1.120' (ECDSA) to the list of known hosts.

root@node2's password:

authorized_keys

[root@node1 .ssh]# cd /root/.ssh/

[root@node1 .ssh]# ll

total 4

-rw-r--r--. 1 root root 393 Jul 12 08:56 authorized_keys

[root@node2 .ssh]# cd /root/.ssh/

[root@node2 .ssh]# ll

total 4

-rw-r--r--. 1 root root 393 Jul 12 08:56 authorized_keys

# Test it

[root@master .ssh]# cd /opt/

[root@master opt]# ll

total 8

drwxr-xr-x. 2 root root 4096 Jul 11 13:23 mysql

drwxr-xr-x. 2 root root 6 Oct 31 2018 rh

-rw-r--r--. 1 root root 2 Jul 11 17:35 test

[root@master opt]# scp /opt/test root@node1:/opt/

test 100% 2 1.3KB/s 00:00

[root@master opt]# scp /opt/test root@node2:/opt/

test
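
The scp test above succeeds silently only when key-based login works. A minimal sketch of a more explicit check is shown here: `BatchMode=yes` makes ssh fail instead of prompting for a password. The function name and the `SSH_BIN` override are hypothetical conveniences, and the host names are the ones used in this guide.

```shell
# Allow the ssh binary to be swapped out (useful for dry runs); defaults to ssh.
SSH_BIN="${SSH_BIN:-ssh}"

# Hypothetical helper: report, per host, whether root can log in without a password.
check_passwordless() {
    for host in "$@"; do
        # BatchMode=yes disables password prompts, so a missing key is a hard failure.
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
        fi
    done
}

# Example usage:
# check_passwordless node1 node2
```

As a side note, `ssh-copy-id root@node1` is the usual tool for installing a public key on a remote host; unlike the scp approach it appends to the remote authorized_keys instead of replacing it.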

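3. Time synchronization

1) Set the time zone (optional)

# Nothing to change if the machine is already in UTC+8

[root@master opt]# date -R

Mon, 11 Jul 2022 16:27:56 +0800 # if the offset is not +0800, change it as follows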

[root@master opt]# ls -al /etc/localtime

[root@master opt]# timedatectl set-timezone Asia/Shanghai # switch to UTC+8

[root@master opt]# timedatectl

[root@master opt]# ls -al /etc/localtime

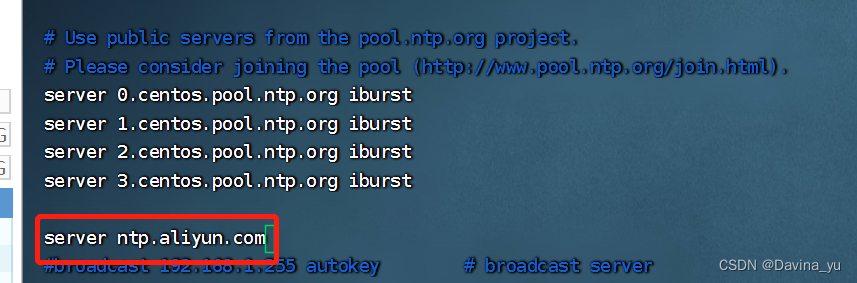

2) Synchronize the clock (optional)

[root@master opt]# yum -y install ntp

[root@master opt]# vim /etc/ntp.conf

Add:

server ntp.aliyun.com

[root@master opt]# cd /etc/

[root@master etc]# systemctl restart ntpd.service # restart the service

[root@master etc]# systemctl status ntpd.service # check the status

[root@master etc]# ntpq -p

[root@master opt]# date

[root@master opt]# systemctl enable ntpd # start ntpd at boot

[root@master opt]# systemctl is-enabled ntpd # confirm that start-at-boot is enabled

enabled

4. Install Hadoop

1) Extract

# Create the Hadoop directory

[root@master opt]# mkdir hadoop

[root@master opt]# cd hadoop

# Upload the package

[root@master hadoop]# ll

-rw-r--r--. 1 root root 395448622 Jul 12 09:30 hadoop-3.2.2.tar.gz

[root@master hadoop]# tar -zxvf hadoop-3.2.2.tar.gz

[root@master hadoop]# ll

total 386184

drwxr-xr-x. 9 fan fan 149 Jan 3 2021 hadoop-3.2.2

-rw-r--r--. 1 root root 395448622 Jul 12 09:30 hadoop-3.2.2.tar.gz

[root@master hadoop]# cd hadoop-3.2.2/

[root@master hadoop-3.2.2]#

2) Configure the environment variables

[root@master hadoop-3.2.2]# pwd

/opt/hadoop/hadoop-3.2.2

[root@master hadoop-3.2.2]# vim /etc/profile

Add:

# HADOOP

export HADOOP_HOME=/opt/hadoop/hadoop-3.2.2

export PATH=${PATH}:${HADOOP_HOME}/bin

export PATH=${PATH}:${HADOOP_HOME}/sbin

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

[root@master hadoop-3.2.2]#

[root@master hadoop-3.2.2]# source /etc/profile

3) Edit the configuration files

# Create the Hadoop DFS data directory

[root@master hadoop]# mkdir -p /opt/hadoop/data/dfs/data

# Create the Hadoop DFS name directory

[root@master hadoop]# mkdir -p /opt/hadoop/data/dfs/name

# Create the Hadoop temporary data directory

[root@master hadoop]# mkdir -p /opt/hadoop/data/temp

[root@master hadoop-3.2.2]# cd /opt/hadoop/hadoop-3.2.2/etc/hadoop/

1. hadoop-env.sh

[root@master hadoop]# vim hadoop-env.sh

Add:

export JAVA_HOME=/opt/jdk/jdk1.8.0_161/

export HADOOP_CONF_DIR=/opt/hadoop/hadoop-3.2.2/etc/hadoop

2. core-site.xml

[root@master hadoop]# vim core-site.xml

Add:

<configuration>

<!-- Address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9001</value>

</property>

<!-- Directory for the files Hadoop generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/data/temp</value>

</property>

<!-- Enable the trash (both intervals are in minutes) -->

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

<property>

<name>fs.trash.checkpoint.interval</name>

<value>120</value>

</property>

</configuration>

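3. hdfs-site.xml

[root@master hadoop]# vim hdfs-site.xml

Add:

<configuration>

<!-- Replication factor. Note: this cluster has only two DataNodes (node1 and node2), so with a value of 3 blocks stay under-replicated; 2 would match the cluster -->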

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Address and port of the HDFS web UI -->

<property>

<name>dfs.namenode.http-address</name>

<value>0.0.0.0:50070</value>

</property>

<!-- NameNode metadata (name) directory -->

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoop/data/dfs/name</value>

</property>

<!-- DataNode block (data) directory -->

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop/data/dfs/data</value>

</property>

<!-- HTTP address of the SecondaryNameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9002</value>

</property>

</configuration>
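
A mistyped property name in these files is silently ignored, so it helps to read a value back after editing. The sketch below is a small awk-based lookup that assumes the one-tag-per-line layout used in this guide (it is not a real XML parser); `get_property` is a hypothetical helper name.

```shell
# Hypothetical helper: print the <value> that follows <name>KEY</name>
# in a Hadoop-style *-site.xml file. Usage: get_property FILE KEY
get_property() {
    awk -v key="$2" '
        index($0, "<name>" key "</name>") { found = 1 }   # literal match, dots are not wildcards
        found && /<value>/ {
            sub(/.*<value>/, "")      # strip everything up to the opening tag
            sub(/<\/value>.*/, "")    # strip the closing tag and anything after it
            print
            exit
        }
    ' "$1"
}

# Example usage:
# get_property /opt/hadoop/hadoop-3.2.2/etc/hadoop/hdfs-site.xml dfs.replication
```

Once Hadoop is on the PATH, `hdfs getconf -confKey dfs.replication` gives the value Hadoop itself resolves, which is the more authoritative check.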

4. mapred-site.xml

[root@master hadoop]# vim mapred-site.xml

Add:

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

5. yarn-site.xml

[root@master hadoop]# vim yarn-site.xml

Add:

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- How reducers fetch their data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Host of the YARN ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<!-- Total physical memory YARN may use on this node; the default is 8192 (MB) -->

<!-- Note: if the node has less than 8 GB of memory, lower this value -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>6144</value>

</property>

<!-- Minimum memory a single container request can ask for; the default is 1024 MB -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<!-- Maximum memory a single container request can ask for; the default is 8192 MB -->

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>6144</value>

</property>

</configuration>
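
The three memory settings above constrain each other: the maximum allocation should not exceed what a node offers, and the node memory divided by the minimum allocation gives a rough upper bound on containers per node. A small arithmetic sketch follows (it ignores vcores and other limits; both function names are hypothetical).

```shell
# Rough upper bound on containers per node: node memory / minimum allocation.
max_containers() {
    node_mb="$1"
    min_alloc_mb="$2"
    echo $(( node_mb / min_alloc_mb ))
}

# Succeeds (exit status 0) when the largest allowed request still fits on one node.
max_alloc_fits() {
    node_mb="$1"
    max_alloc_mb="$2"
    [ "$max_alloc_mb" -le "$node_mb" ]
}

# With the values from yarn-site.xml above:
max_containers 6144 1024    # prints 6
```

With 6144 MB per node and a 6144 MB maximum allocation, a single request may occupy a whole node, which is consistent but leaves no room for anything else on that node while it runs.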

6. workers

[root@master hadoop]# vim workers

Add:

node1

node2

7. yarn-env.sh and mapred-env.sh

[root@master hadoop]# vim yarn-env.sh

Add:

export JAVA_HOME=/opt/jdk/jdk1.8.0_161

[root@master hadoop]# vim mapred-env.sh

Add:

export JAVA_HOME=/opt/jdk/jdk1.8.0_161

5. Distribute Hadoop to the other nodes

[root@master opt]# scp -r hadoop/ root@node1:/opt/

[root@master opt]# scp -r hadoop/ root@node2:/opt/

[root@master opt]# scp -r /etc/profile root@node1:/etc/

profile 100% 2136 1.7MB/s 00:00

[root@master opt]# scp -r /etc/profile root@node2:/etc/

profile 100% 2136 795.6KB/s 00:00

[root@master opt]#

[root@node1 opt]# vim /etc/profile

[root@node1 opt]# source /etc/profile

[root@node2 opt]# vim /etc/profile

[root@node2 opt]# source /etc/profile
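
The same copy is repeated for each worker, so it lends itself to a loop. The sketch below is one way to write it, with a dry-run switch for previewing the commands; `NODES`, `DRY_RUN`, and `distribute` are hypothetical names, and the host list matches the workers file in this guide.

```shell
# Worker hosts, as listed in the workers file above.
NODES="node1 node2"

# Hypothetical helper: copy SRC to DEST on every node.
# If DRY_RUN is set to a non-empty value, the scp commands are printed instead of run.
distribute() {
    src="$1"
    dest="$2"
    for node in $NODES; do
        ${DRY_RUN:+echo} scp -r "$src" "root@$node:$dest"
    done
}

# Example usage:
# DRY_RUN=1 distribute /opt/hadoop /opt/     # preview only
# distribute /opt/hadoop /opt/               # actually copy
# distribute /etc/profile /etc/
```

Previewing with `DRY_RUN=1` first is a cheap safeguard, because copying /etc/profile overwrites the file on the target node.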

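6. Start Hadoop

1) Format the NameNode

# Note: only needed the first time

[root@master hadoop-3.2.2]# hdfs namenode -format

Note: to format again, first stop the cluster and delete the old data and log directories on every node. With the configuration above, the data lives under /opt/hadoop/data (hadoop.tmp.dir plus the DFS name and data directories) rather than under $HADOOP_HOME/tmp:

rm -rf /opt/hadoop/data/temp/* /opt/hadoop/data/dfs/name/* /opt/hadoop/data/dfs/data/*

rm -rf $HADOOP_HOME/logs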

2) Start

[root@master /]# start-all.sh

3) Stop

[root@master /]# stop-all.sh

4) Verify

Check the Hadoop version

[root@master /]# hadoop version

Hadoop 3.2.2

Source code repository Unknown -r 7a3bc90b05f25555555576d74264906f0f7a932

Compiled by hexiaoqiao on 2021-01-03T09:26Z

Compiled with protoc 2.5.0

From source with checksum 5a8f564f43334b27f6a4446f56

This command was run using /opt/hadoop/hadoop-3.2.2/share/hadoop/common/hadoop-common-3.2.2.jar

[root@master /]#

Processes on each node

[root@master /]# jps

71698 Jps

14804 ResourceManager

13704 NameNode

14298 SecondaryNameNode

[root@node1 /]# jps

45810 DataNode

46439 NodeManager

49870 Jps

[root@node2 /]# jps

11795 Jps

7368 DataNode

7998 NodeManager
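
Reading the jps listings by eye works for three nodes, but the check is easy to automate. The sketch below compares jps output against the daemons expected on a node; `missing_daemons` is a hypothetical helper name, and the expected daemon lists are taken from the listings above.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
    jps_output="$(cat)"
    for name in "$@"; do
        # jps prints "<pid> <name>"; compare the second column exactly.
        printf '%s\n' "$jps_output" \
            | awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }' \
            || echo "$name"
    done
}

# Example usage:
# jps | missing_daemons NameNode SecondaryNameNode ResourceManager    # on master
# ssh root@node1 jps | missing_daemons DataNode NodeManager           # on a worker
```

Empty output means every expected daemon is running. Over ssh, `jps` must be on the PATH of the non-interactive remote shell, which is an assumption about the environment.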

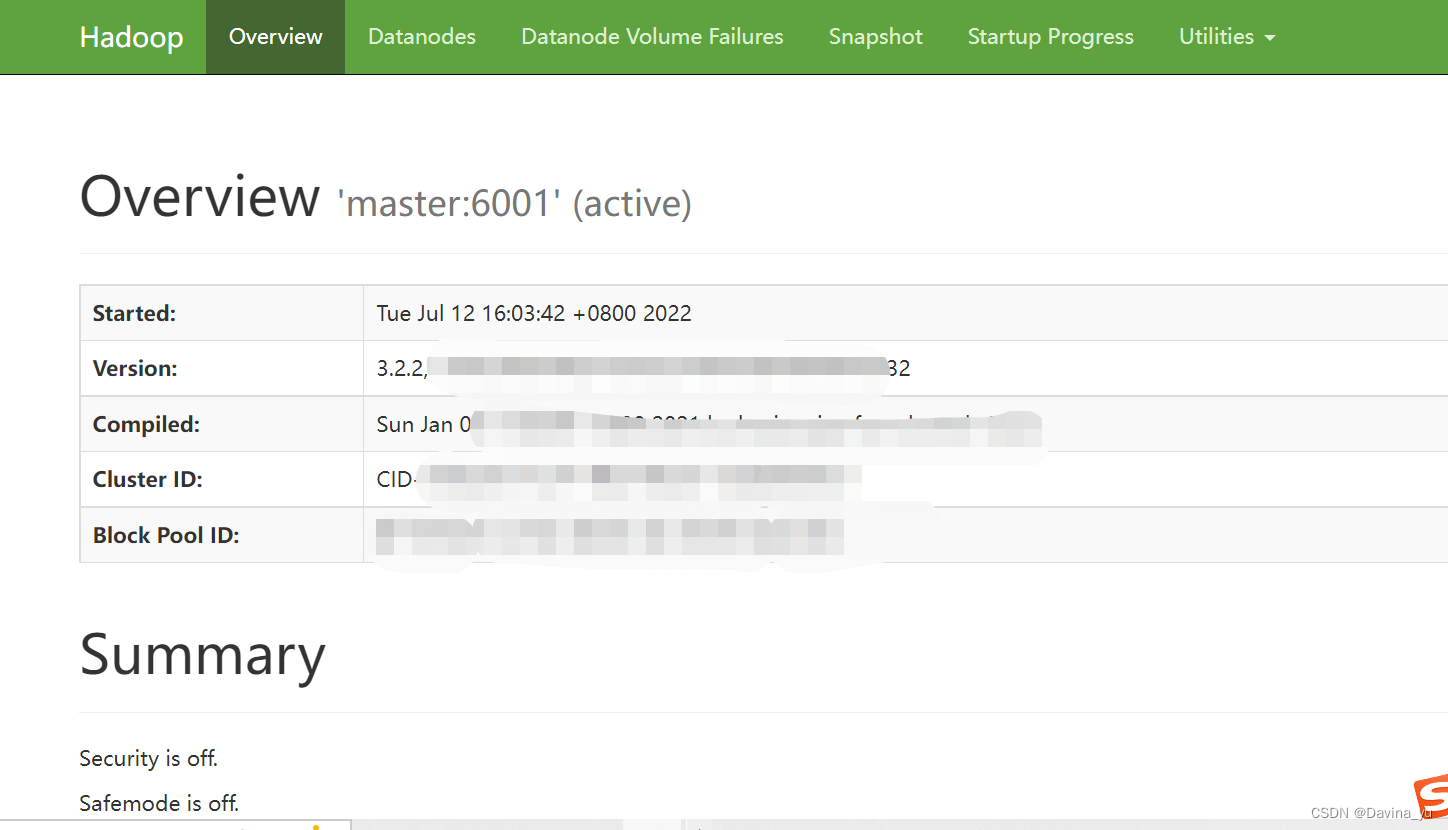

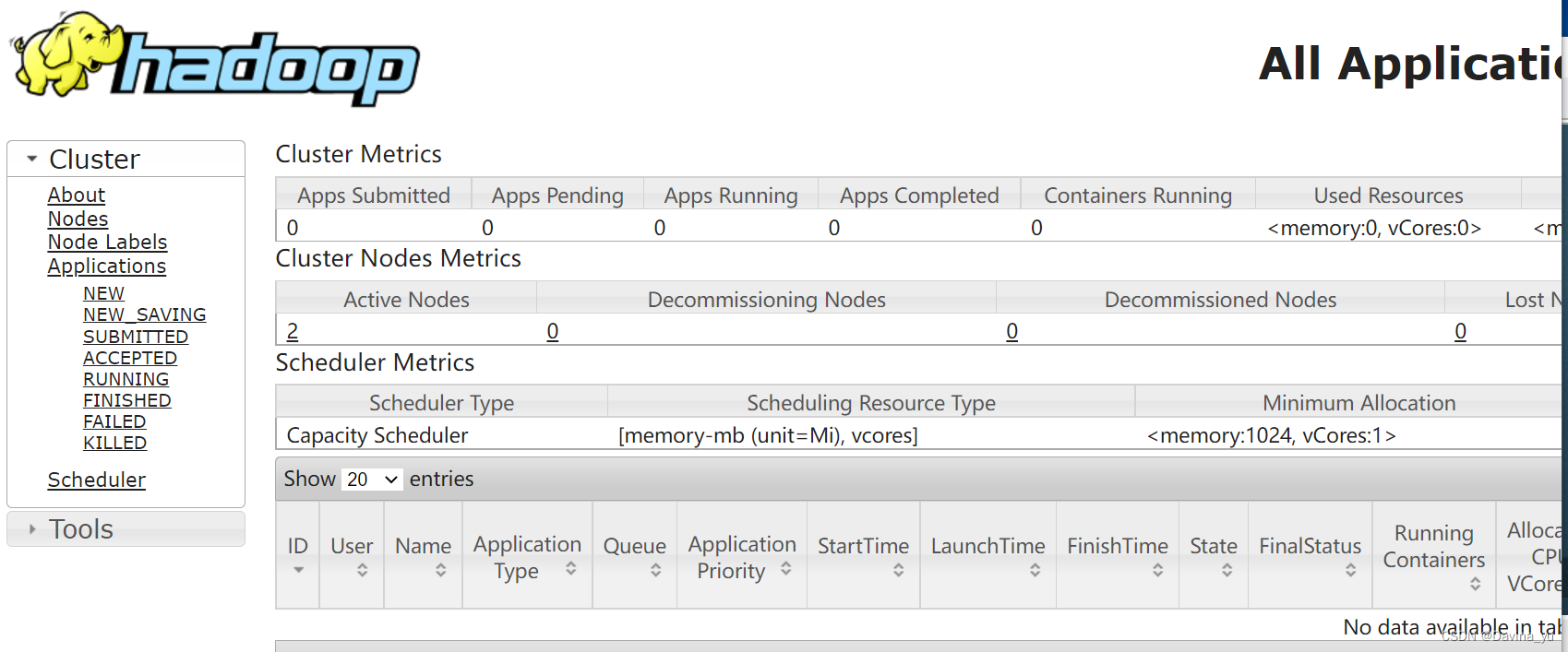

Web UIs

1. HDFS

http://192.168.1.100:50070/

2. YARN

http://192.168.1.100:8088/

IV. Setting up Hive

1. Upload the installation package

[root@master opt]# mkdir hive

[root@master opt]# cd hive

[root@master hive]# tar -zxvf apache-hive-3.1.2-bin.tar.gz

[root@master hive]# mv apache-hive-3.1.2-bin hive-3.1

2. Configure the environment variables

[root@master hive-3.1]# vim /etc/profile

Add:

# HIVE

export HIVE_HOME=/opt/hive/hive-3.1

export PATH=$PATH:$HIVE_HOME/bin

[root@master hive-3.1]# source /etc/profile

3. Configuration files

[root@master hive-3.1]# cd /opt/hive/hive-3.1/conf/

[root@master conf]# cp hive-env.sh.template hive-env.sh

[root@master conf]# cp hive-default.xml.template hive-site.xml

[root@master conf]# cp hive-log4j2.properties.template hive-log4j2.properties

[root@master conf]# cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties

1) hive-env.sh

[root@master conf]# vim hive-env.sh

Add:

# Hadoop installation path

export HADOOP_HOME=/opt/hadoop/hadoop-3.2.2

# Hive configuration directory

export HIVE_CONF_DIR=/opt/hive/hive-3.1/conf

# Hive jar directory

export HIVE_AUX_JARS_PATH=/opt/hive/hive-3.1/lib

# JDK

export JAVA_HOME=/opt/jdk/jdk1.8.0_161

2) hive-site.xml

# Create three directories in HDFS

[root@master conf]# hadoop fs -mkdir -p /opt/hive/hive-3.1/warehouse

[root@master conf]# hadoop fs -mkdir -p /opt/hive/hive-3.1/tmp

[root@master conf]# hadoop fs -mkdir -p /opt/hive/hive-3.1/log

[root@master conf]#

# Change the directory permissions

[root@master conf]# hadoop fs -chmod -R 777 /opt/hive/hive-3.1/warehouse

[root@master conf]# hadoop fs -chmod -R 777 /opt/hive/hive-3.1/tmp

[root@master conf]# hadoop fs -chmod -R 777 /opt/hive/hive-3.1/log

[root@master conf]#

# List the directories in HDFS

[root@master conf]# hadoop fs -ls /opt/hive/hive-3.1/

Found 3 items

drwxrwxrwx - root supergroup 0 2022-07-13 09:06 /opt/hive/hive-3.1/log

drwxrwxrwx - root supergroup 0 2022-07-13 09:06 /opt/hive/hive-3.1/tmp

drwxrwxrwx - root supergroup 0 2022-07-13 09:06 /opt/hive/hive-3.1/warehouse

[root@master conf]#

[root@master conf]# vim hive-site.xml

Replace every ${system:java.io.tmpdir} in the file with the tmp directory created above, /opt/hive/hive-3.1/tmp

Replace every ${system:user.name} with the user name: root
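
The two placeholders occur many times in hive-site.xml, so replacing them by hand in vim is error-prone. A minimal sed sketch of the same substitution is shown below; `fix_hive_placeholders` is a hypothetical helper name, and the replacement values are the ones stated above.

```shell
# Hypothetical helper: read hive-site.xml content on stdin and write it to
# stdout with both placeholders replaced by fixed values.
fix_hive_placeholders() {
    # '#' is used as the sed delimiter because the replacement contains slashes.
    sed -e 's#\${system:java\.io\.tmpdir}#/opt/hive/hive-3.1/tmp#g' \
        -e 's#\${system:user\.name}#root#g'
}

# Example usage (keeps a backup of the original file):
# cp hive-site.xml hive-site.xml.bak
# fix_hive_placeholders < hive-site.xml.bak > hive-site.xml
```

Afterwards, `grep -c 'system:' hive-site.xml` printing 0 is a quick sign that no placeholder was missed.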

Then change the following properties to:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.1.100:3306/hive?createDatabaseIfNotExist=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Test@123</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/opt/hive/hive-3.1/log</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/opt/hive/hive-3.1/tmp</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/opt/hive/hive-3.1/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/hive/hive-3.1/tmp</value>

</property>

<property>

<name>hive.txn.xlock.iow</name>

<value>true</value>

<!--

<description>

Ensures commands with OVERWRITE (such as INSERT OVERWRITE) acquire Exclusive locks for transactional tables. This ensures that inserts (w/o overwrite) running concurrently

are not hidden by the INSERT OVERWRITE.

</description>

-->

</property>

3) Switch Hive to the newer guava jar that ships with Hadoop

[root@master lib]# rm -rf /opt/hive/hive-3.1/lib/guava-19.0.jar

[root@master lib]# cp /opt/hadoop/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar /opt/hive/hive-3.1/lib/
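
Hive 3.1.2 bundles guava 19.0 while Hadoop 3.2.2 bundles guava 27.0-jre, and that mismatch is the reason for the swap above. Before and after the swap, the jar in each lib directory can be listed with a small helper; `guava_jars` is a hypothetical name, and the paths in the usage example are the ones from this guide.

```shell
# Hypothetical helper: print the file names of all guava jars directly
# inside the given directory, one per line, sorted.
guava_jars() {
    find "$1" -maxdepth 1 -name 'guava-*.jar' -exec basename {} \; | sort
}

# Example usage:
# guava_jars /opt/hive/hive-3.1/lib
# guava_jars /opt/hadoop/hadoop-3.2.2/share/hadoop/common/lib
```

After the swap, both commands are expected to list the same single jar; two different guava versions in Hive's lib directory would be a sign that the old jar was not removed.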

4) Configure MySQL

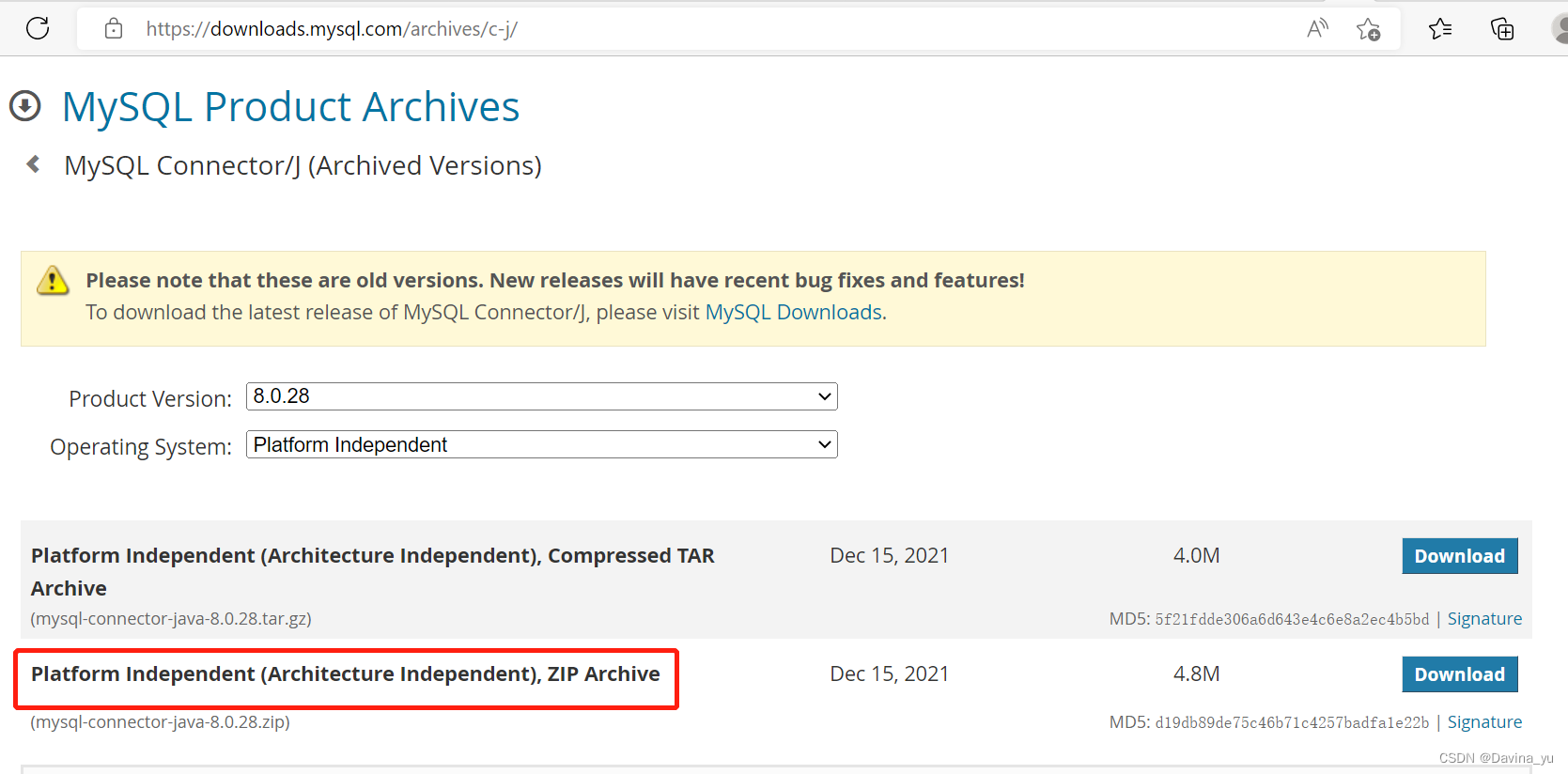

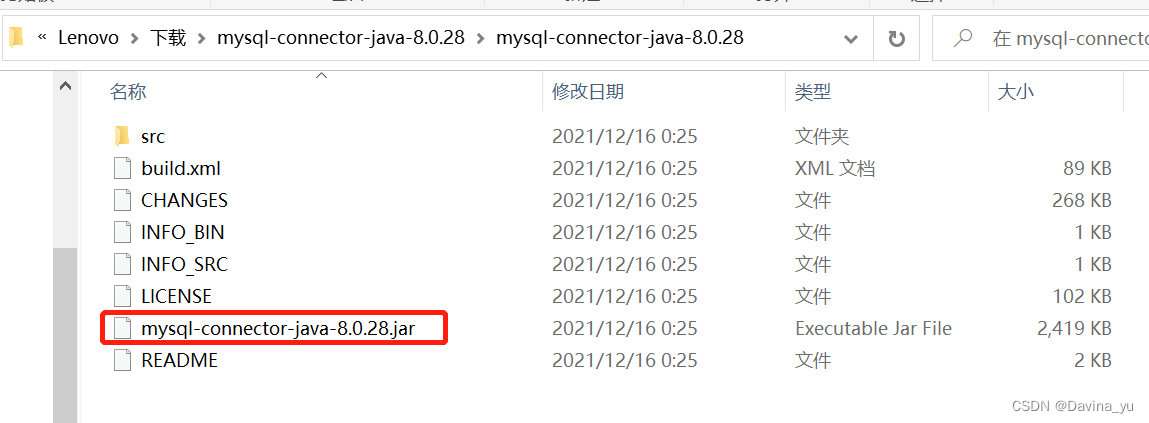

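1. Download the MySQL JDBC driver package

Extract it, then upload the driver jar

[root@master lib]# cd /opt/hive/hive-3.1/lib/

Upload the jar into this directory

2. Create the Hive database and privileges in MySQL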

[root@master lib]# mysql -uroot -pTest@123

mysql> create database hive;

Query OK, 1 row affected (0.00 sec)

mysql> CREATE USER 'hive'@'%' IDENTIFIED BY 'Test_hive@123';

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON hive.* TO 'hive'@'%' IDENTIFIED BY 'Test_hive@123';

Query OK, 0 rows affected, 1 warning (0.02 sec)

# Grant the root user all privileges on the hive database

mysql> GRANT ALL PRIVILEGES ON hive.* TO 'root'@'%' IDENTIFIED BY 'Test@123';

Query OK, 0 rows affected, 1 warning (0.00 sec)

# Reload the privilege tables

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

5) core-site.xml in Hadoop (optional step)

[root@master lib]# cd /opt/hadoop/hadoop-3.2.2/etc/hadoop/

[root@master hadoop]# vim core-site.xml

Add:

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>root</value>

<description>Allow the superuser root to impersonate any member of the group root</description>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

<description>The superuser root can connect from any host to impersonate a user</description>

</property>

Then restart Hadoop

[root@master hadoop]# stop-all.sh

[root@master hadoop]# start-all.sh

4. Initialize the metastore schema

[root@master conf]# cd /opt/hive/hive-3.1/

[root@master hive-3.1]# schematool -initSchema -dbType mysql

Initialization script completed

schemaTool completed

Check that the Hive metastore database now contains tables:

[root@master hive-3.1]# mysql -uroot -pTest@123

mysql> use hive;

Database changed

mysql> show tables;

74 rows in set (0.00 sec)

5. Start the Hive CLI

[root@master conf]# hive

hive> show databases;

OK

default

Time taken: 0.342 seconds, Fetched: 1 row(s)

hive>

6. Exit Hive

quit;

or

exit;

(Ctrl+Z only suspends the hive process in the shell; it does not exit it.)

V. Setting up Sqoop

1. Extract

[root@master /]# cd /opt/

[root@master opt]# mkdir sqoop

[root@master opt]# cd sqoop/

[root@master sqoop]# ll

total 0

[root@master sqoop]# ll

total 17536

-rw-r--r--. 1 root root 17953604 Jul 14 09:35 sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@master sqoop]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@master sqoop]# ll

total 17540

drwxr-xr-x. 9 fan fan 4096 Dec 19 2017 sqoop-1.4.7.bin__hadoop-2.6.0

-rw-r--r--. 1 root root 17953604 Jul 14 09:35 sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@master sqoop]# mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop-1.4.7

[root@master sqoop]# rm -rf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@master sqoop]# ll

total 4

drwxr-xr-x. 9 fan fan 4096 Dec 19 2017 sqoop-1.4.7

2. Configure sqoop-env.sh

[root@master sqoop]# cd sqoop-1.4.7/conf/

[root@master conf]# ll

total 28

-rw-rw-r--. 1 fan fan 3895 Dec 19 2017 oraoop-site-template.xml

-rw-rw-r--. 1 fan fan 1404 Dec 19 2017 sqoop-env-template.cmd

-rwxr-xr-x. 1 fan fan 1345 Dec 19 2017 sqoop-env-template.sh

-rw-rw-r--. 1 fan fan 6044 Dec 19 2017 sqoop-site-template.xml

-rw-rw-r--. 1 fan fan 6044 Dec 19 2017 sqoop-site.xml

[root@master conf]# cp sqoop-env-template.sh sqoop-env.sh

[root@master conf]# ll

total 32

-rw-rw-r--. 1 fan fan 3895 Dec 19 2017 oraoop-site-template.xml

-rwxr-xr-x. 1 root root 1345 Jul 14 09:37 sqoop-env.sh

-rw-rw-r--. 1 fan fan 1404 Dec 19 2017 sqoop-env-template.cmd

-rwxr-xr-x. 1 fan fan 1345 Dec 19 2017 sqoop-env-template.sh

-rw-rw-r--. 1 fan fan 6044 Dec 19 2017 sqoop-site-template.xml

-rw-rw-r--. 1 fan fan 6044 Dec 19 2017 sqoop-site.xml

[root@master conf]# vim sqoop-env.sh

Add:

export HADOOP_COMMON_HOME=/opt/hadoop/hadoop-3.2.2

export HADOOP_MAPRED_HOME=/opt/hadoop/hadoop-3.2.2

export HIVE_HOME=/opt/hive/hive-3.1

export HIVE_CONF_DIR=/opt/hive/hive-3.1/conf

3. Add the JDBC driver

[root@master conf]# cp /opt/hive/hive-3.1/lib/mysql-connector-java-8.0.28.jar /opt/sqoop/sqoop-1.4.7/lib/

[root@master conf]# cd /opt/sqoop/sqoop-1.4.7/lib/

[root@master lib]# ll

4. Configure the environment variables

[root@master lib]# vim /etc/profile

Add:

#SQOOP

export SQOOP_HOME=/opt/sqoop/sqoop-1.4.7

export PATH=$PATH:$SQOOP_HOME/bin

[root@master lib]# source /etc/profile

5. Test Sqoop

[root@master lib]# sqoop list-databases --connect jdbc:mysql://192.168.1.100:3306/ --username root --password Test@123

....

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

information_schema

hive
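
Passing `--password` on the command line leaves the password in the shell history and the process list. Sqoop 1.4.x also accepts `--password-file` (and `-P` for an interactive prompt). The wrapper below is only a sketch: the function name, the `SQOOP_BIN` override, and the password file path are hypothetical, and the `file://` prefix assumes the password file sits on the local filesystem rather than in HDFS.

```shell
# Allow the sqoop binary to be swapped out (useful for dry runs); defaults to sqoop.
SQOOP_BIN="${SQOOP_BIN:-sqoop}"

# Hypothetical wrapper: list databases on a MySQL host, reading the password
# from a file instead of the command line. Usage: list_databases HOST USER PWFILE
list_databases() {
    host="$1"
    user="$2"
    pwfile="$3"
    "$SQOOP_BIN" list-databases \
        --connect "jdbc:mysql://$host:3306/" \
        --username "$user" \
        --password-file "$pwfile"
}

# Example usage (hypothetical password file, created without a trailing newline):
# printf '%s' 'your-password' > /root/.sqoop-mysql.pw && chmod 400 /root/.sqoop-mysql.pw
# list_databases 192.168.1.100 root file:///root/.sqoop-mysql.pw
```

The file is written with `printf '%s'` rather than `echo` because a trailing newline would, as far as I know, be treated as part of the password.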

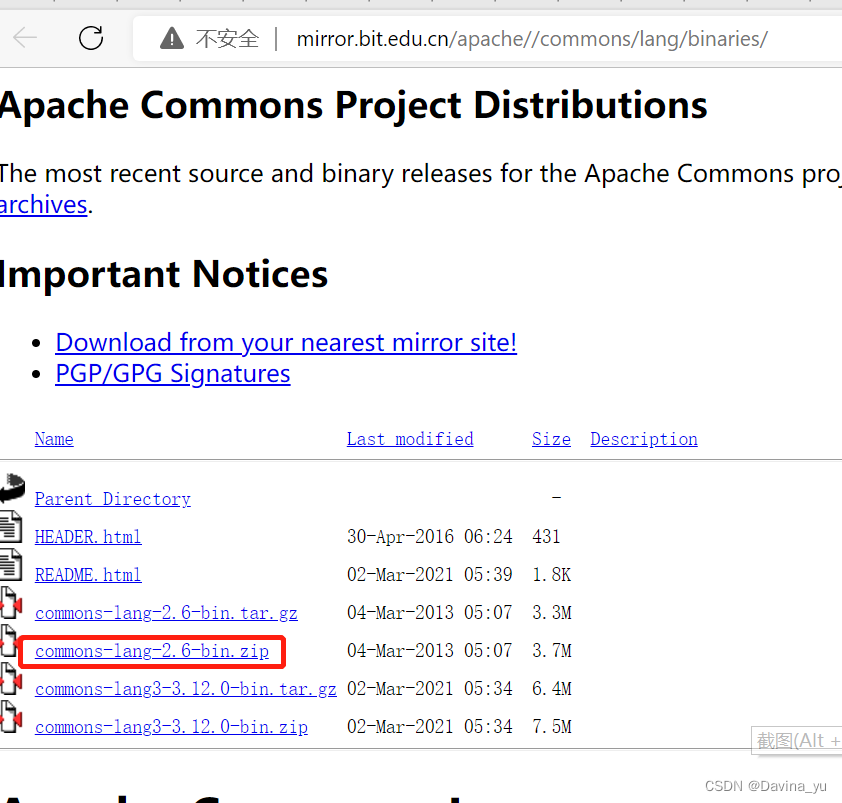

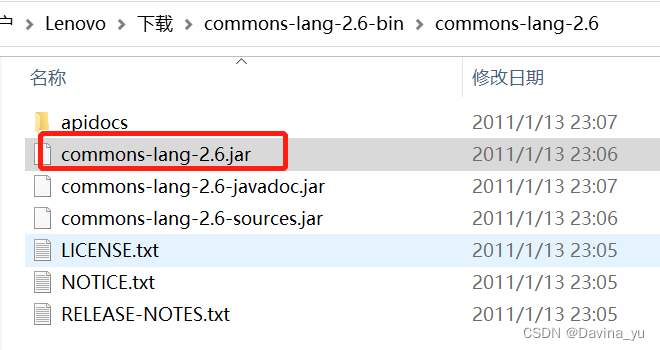

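6. Error

Fix: download the commons-lang package, extract it locally, and upload the jar inside it to the Sqoop lib directory, then run the command again.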

[root@master lib]# cd /opt/sqoop/sqoop-1.4.7/lib/

[root@master lib]# ll

[root@master lib]# sqoop list-databases --connect jdbc:mysql://192.168.1.100:3306/ --username root --password Test@123

....

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

information_schema

hive
