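JD Cloud Kubernetes Clusters

JD Cloud Kubernetes integrates JD Cloud's virtualization, storage, and networking capabilities to provide high-performance, scalable management of containerized applications. It simplifies cluster setup and scaling so that users can focus on developing and managing their containerized applications.

Users can create a secure, highly available Kubernetes cluster on JD Cloud. JD Cloud fully manages the Kubernetes service and is responsible for the cluster's stability and reliability, so users can conveniently manage containerized applications with Kubernetes on JD Cloud.

JD Cloud Kubernetes clusters: https://www.jdcloud.com/cn/products/jcs-for-kubernetes

To create a Kubernetes cluster, see: https://docs.jdcloud.com/cn/jcs-for-kubernetes/create-to-cluster

Once the cluster has been created, configure the kubectl client to connect to it. See: https://docs.jdcloud.com/cn/jcs-for-kubernetes/connect-to-cluster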

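Ingress Edge Routing

Although the Pods and Services deployed in a Kubernetes cluster each have their own IP, those IPs cannot be reached from outside the cluster. Previously a service could be exposed by listening on a NodePort, but that approach is inflexible and is not recommended for production.

Ingress is an API resource object in a k8s cluster. Together with an Ingress controller it plays the role of an edge router, and it can also be thought of as the cluster's firewall or gateway. We can define custom routing rules to forward, manage, and expose services (groups of Pods). This is very flexible and is the recommended approach for production.

This article uses Helm to deploy an Ingress controller (Traefik, in this case) on JD Cloud.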

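Installing Helm

Introduction to Helm

Helm can be understood as a package manager for Kubernetes. It makes it easy to find, share, and use applications built for Kubernetes.

Deploying containerized applications on Kubernetes can be challenging, and Helm is a client tool designed to simplify installing and deploying them.

Helm helps developers define, install, and upgrade containerized applications on Kubernetes, and it can also be used to share them.

Kubeapps Hub provides common applications such as Redis, MySQL, and Jenkins. With helm, a single command is enough to deploy one of them to your own Kubernetes cluster.

Kubeapps Hub: https://hub.kubeapps.com/

In Helm v2, the version used in this article, the architecture consists of the Helm client, the Tiller server, and Chart repositories. Tiller is deployed inside Kubernetes; the Helm client fetches Chart packages from a Chart repository and installs them into the Kubernetes cluster.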

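Helm is a tool for managing Kubernetes packages, and it provides the following capabilities:

- Create new charts

- Package charts into tgz files

- Interact with chart repositories

- Install and uninstall Kubernetes applications

- Manage the lifecycle of charts installed with Helm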
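
As a rough illustration, each capability in the list maps to a Helm v2 subcommand. The sketch below only defines a function and runs nothing on its own. The chart name `mychart`, the release name `myrelease`, and the repository alias `mirror` are made-up names for illustration, and the repository URL is the mirror used later in this article.

```shell
# Sketch: one Helm v2 command per capability listed above.
# "mychart", "myrelease" and "mirror" are hypothetical names.
helm_capabilities_demo() {
  # Create a new chart skeleton in ./mychart
  helm create mychart
  # Package the chart directory into a versioned .tgz archive
  helm package mychart
  # Interact with a chart repository: register it under a local alias
  helm repo add mirror https://burdenbear.github.io/kube-charts-mirror/
  # Install the chart as a named release (Helm v2 uses --name)
  helm install ./mychart --name myrelease
  # Lifecycle: upgrade the release, then roll back to revision 1
  helm upgrade myrelease ./mychart
  helm rollback myrelease 1
  # Uninstall the release and free its name
  helm delete myrelease --purge
}
```

Calling `helm_capabilities_demo` against a test cluster walks through the whole cycle. In practice the individual commands are usually run one at a time.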

Deploying Helm

Helm Client

Before installing the Helm client, make sure a working Kubernetes cluster is available and kubectl is installed.

Download a suitable Helm release from https://github.com/kubernetes/helm/releases:

# wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

# tar -xvf helm-v2.11.0-linux-amd64.tar.gz

# cp linux-amd64/helm /usr/local/bin/

Verify (Tiller has not been deployed yet):

# helm version

Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

Error: could not find tiller

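Deploying Helm Tiller

Neither the official Helm charts repository nor the Tiller image is directly reachable from mainland China. Within China they can be set up as follows.

Tiller Image

Use Docker Hub's automated build feature to copy the Tiller image from gcr.io to Docker Hub.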

Tiller Dockerfile:

https://github.com/bingli7/autobuildindockerhub/blob/master/tiller/Dockerfile

Once the build succeeds, the Tiller image can be pulled directly from Docker Hub:

docker.io/bingli7/tiller:v2.11.0

Helm Charts Repository

The official Helm charts repository is not directly reachable from mainland China, so use a personal mirror hosted on GitHub:

https://github.com/BurdenBear/kube-charts-mirror

Deploying Tiller

With a reachable Tiller image and Charts repository, Tiller can now be initialized:

# helm init --tiller-image docker.io/bingli7/tiller:v2.11.0 --stable-repo-url https://burdenbear.github.io/kube-charts-mirror/

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://burdenbear.github.io/kube-charts-mirror/

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

Here helm init is given the Tiller image (--tiller-image) and the charts repository (--stable-repo-url) explicitly.

By default, Tiller is installed in the kube-system namespace of the Kubernetes cluster:

# kubectl get pod -n kube-system | grep tiller

tiller-deploy-5f8b54666f-n62jz 1/1 Running 0 1m

Create the serviceaccount and clusterrolebinding:

(https://github.com/helm/helm/issues/3055)

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'
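
The commands above patch Tiller after it has already been installed. Helm v2's `helm init` also accepts a `--service-account` flag, so one possible alternative is to create the account and binding first and pass the account at init time. The sketch below shows that ordering, plus a small check of which service account the Tiller Deployment ends up using. The function names are made up for illustration, and the image and repository values are the ones used earlier in this article.

```shell
# Sketch: create the RBAC objects first, then let helm init reference them.
setup_tiller_rbac() {
  kubectl create serviceaccount --namespace kube-system tiller
  kubectl create clusterrolebinding tiller-cluster-rule \
    --clusterrole=cluster-admin --serviceaccount=kube-system:tiller
  helm init --service-account tiller \
    --tiller-image docker.io/bingli7/tiller:v2.11.0 \
    --stable-repo-url https://burdenbear.github.io/kube-charts-mirror/
}

# Print the service account the tiller-deploy Deployment runs under.
tiller_service_account() {
  kubectl get deploy tiller-deploy --namespace kube-system \
    -o jsonpath='{.spec.template.spec.serviceAccountName}'
}
```

If `tiller_service_account` prints `tiller`, the Deployment is running under the new account. Note that binding `cluster-admin` is convenient for a walkthrough but grants Tiller full control of the cluster.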

Deployment complete:

# helm version

Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

Search for a package with helm:

# helm search traefik

NAME CHART VERSION APP VERSION DESCRIPTION

stable/traefik 1.54.0 1.7.4 A Traefik based Kubernetes ingress controller with Let's ...

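Helm has been deployed successfully. Happy Helming!

Deploying Traefik Ingress

What Is Ingress?

In Kubernetes, the IP addresses of Services and Pods are usable only inside the cluster network and are invisible to applications outside the cluster. To let external applications reach services inside the cluster, Kubernetes offers Services of type NodePort and LoadBalancer, as well as Ingress.

In essence, Ingress uses an HTTP proxy server to forward external HTTP requests to backend services inside the cluster. With Ingress, an external application accesses services inside the cluster as shown below:

Ingress is the collection of routing rules for requests entering the cluster.

Ingress can give a Service an externally reachable URL, load balancing, SSL termination, HTTP routing, and so on. To put these Ingress rules into effect, the cluster administrator needs to deploy an Ingress controller, which watches for changes to Ingresses and Services, configures load balancing according to the rules, and provides the access entry point.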
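
To make the idea of a routing rule concrete, here is a minimal sketch of an Ingress manifest, written out by a small shell function. It uses the `extensions/v1beta1` API that appears later in this article, which newer Kubernetes releases have replaced with `networking.k8s.io/v1` and a different backend schema. The host `demo.example.com`, the names `demo-ingress` and `demo-service`, and the function name are made up for illustration.

```shell
# Sketch: write a minimal host-based Ingress rule to the given file.
# "demo.example.com", "demo-ingress" and "demo-service" are hypothetical.
write_demo_ingress() {
  cat > "$1" <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: demo-ingress
  namespace: default
  annotations:
    # Ask the Traefik controller (rather than another one) to handle this rule
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: demo.example.com
    http:
      paths:
      - path: /
        backend:
          serviceName: demo-service
          servicePort: 80
EOF
}
```

A typical use would be `write_demo_ingress demo-ingress.yaml` followed by `kubectl apply -f demo-ingress.yaml`. After that, the Ingress controller routes requests whose Host header is `demo.example.com` to port 80 of `demo-service`.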

What Is Traefik?

Traefik has 19K stars on GitHub:

Traefik is a modern HTTP reverse proxy and load balancer designed for deploying microservices.

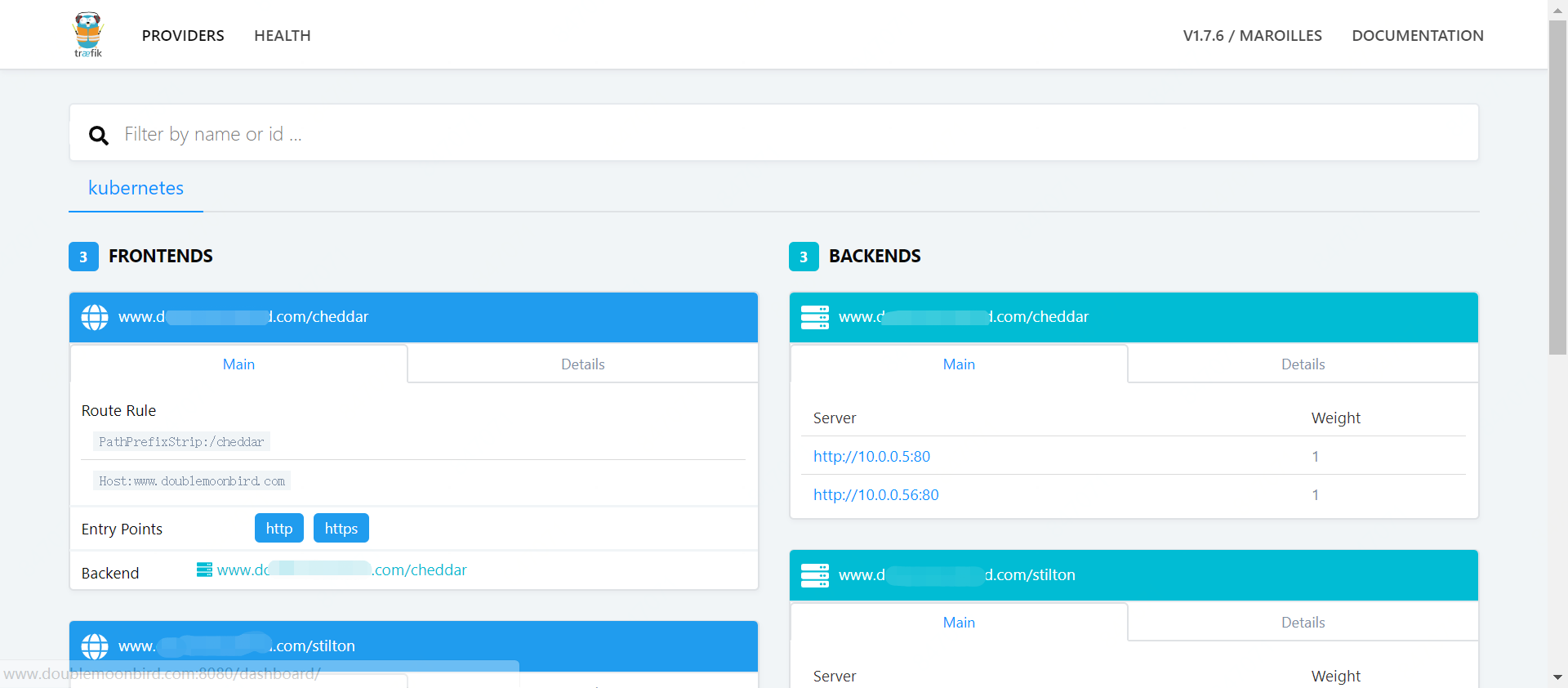

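Traefik is a lightweight HTTP reverse proxy and load balancer written in Go. Although it is a newcomer compared with Nginx, it embraces Kubernetes natively and talks directly to the cluster's API server, so it reacts very quickly. It also provides a friendly dashboard and monitoring UI, where you can view the routing configuration Traefik generates from Ingresses as well as some performance metrics, such as total response time, average response time, and the total count of each response status code.

In addition, Traefik supports a rich set of annotations for configuring many useful features, such as automatic circuit breaking, load-balancing strategies, blacklists, and whitelists. All of this makes Traefik a very handy tool for microservices.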

Traefik User Guide for Kubernetes:

Installing Directly from the traefik Chart

# helm search traefik

NAME CHART VERSION APP VERSION DESCRIPTION

stable/traefik 1.54.0 1.7.4 A Traefik based Kubernetes ingress controller with Let's ...

# helm install stable/traefik --version 1.54.0 --namespace traefik

NAME: vested-deer

LAST DEPLOYED: Fri Dec 7 13:27:33 2018

NAMESPACE: traefik

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/Deployment

NAME AGE

vested-deer-traefik 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

vested-deer-traefik-66cc9759bd-62d8q 0/1 ContainerCreating 0 0s

==> v1/ConfigMap

NAME AGE

vested-deer-traefik 0s

==> v1/Service

vested-deer-traefik 0s

NOTES:

1. Get Traefik's load balancer IP/hostname:

NOTE: It may take a few minutes for this to become available.

You can watch the status by running:

$ kubectl get svc vested-deer-traefik --namespace traefik -w

Once 'EXTERNAL-IP' is no longer '<pending>':

$ kubectl describe svc vested-deer-traefik --namespace traefik | grep Ingress | awk '{print $3}'

2. Configure DNS records corresponding to Kubernetes ingress resources to point to the load balancer IP/hostname found in step 1
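
The NOTES printed by the chart suggest watching the service until EXTERNAL-IP is no longer pending. For scripting, a small polling loop around `kubectl get svc -o jsonpath` can do the same thing. The sketch below assumes the load balancer reports an IP address rather than a hostname. The function name, the 5-second interval, and the default of 60 attempts are arbitrary choices for illustration.

```shell
# Sketch: poll a Service until its load balancer IP is assigned, then print it.
# Usage: wait_for_external_ip <service> <namespace> [max_attempts]
wait_for_external_ip() {
  svc="$1"
  ns="$2"
  attempts="${3:-60}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # jsonpath prints nothing (or fails) while the IP is still pending
    ip="$(kubectl get svc "$svc" --namespace "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)" || ip=""
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep 5
    i=$((i + 1))
  done
  echo "timed out waiting for an external IP on $svc" >&2
  return 1
}
```

For the release above, this could be called as `wait_for_external_ip vested-deer-traefik traefik`.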

After it has started normally:

$ kubectl get all -n traefik

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/dinky-gnat-traefik 1 1 1 1 1d

NAME DESIRED CURRENT READY AGE

rs/dinky-gnat-traefik-56db8dbb7f 1 1 1 1d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/dinky-gnat-traefik 1 1 1 1 1d

NAME DESIRED CURRENT READY AGE

rs/dinky-gnat-traefik-56db8dbb7f 1 1 1 1d

NAME READY STATUS RESTARTS AGE

po/dinky-gnat-traefik-56db8dbb7f-m52mz 1/1 Running 0 1d

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/dinky-gnat-traefik LoadBalancer 10.0.62.154 114.67.89.178 80:30459/TCP,443:32439/TCP 1d

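Notice that there is no dashboard.

It is better to download the chart locally and then modify the yaml files as needed.

Downloading the Traefik Chart

Get Traefik through Helm: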

$ helm search traefik

NAME CHART VERSION APP VERSION DESCRIPTION

stable/traefik 1.55.0 1.7.4 A Traefik based Kubernetes ingress controller with Let's ...

Download the package locally:

$ helm fetch stable/traefik

$ ls

traefik-1.55.0.tgz

What gets downloaded is a compressed package archive that contains all of the YAML files.

Extract it:

$ tar -xvf traefik-1.55.0.tgz

List all the files in the package:

$ tree traefik

traefik

├── Chart.yaml

├── README.md

├── templates

│ ├── acme-pvc.yaml

│ ├── configmap.yaml

│ ├── dashboard-ingress.yaml

│ ├── dashboard-service.yaml

│ ├── default-cert-secret.yaml

│ ├── deployment.yaml

│ ├── dns-provider-secret.yaml

│ ├── _helpers.tpl

│ ├── NOTES.txt

│ ├── poddisruptionbudget.yaml

│ ├── rbac.yaml

│ ├── service.yaml

│ └── storeconfig-job.yaml

└── values.yaml

1 directory, 16 files

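Note:

Helm charts can also be browsed on GitHub, for example the Traefik chart:

https://github.com/helm/charts/tree/master/stable/traefik (the page also includes an overview and installation steps)

Modifying the Definition Files

Edit values.yaml to enable the dashboard: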

dashboard:
  enabled: true
  domain: traefik.example.com

Delete the previous deployment:

$ helm list

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

dinky-gnat 1 Fri Dec 7 18:25:44 2018 DEPLOYED traefik-1.55.0 1.7.4 traefik

$ helm delete dinky-gnat

release "dinky-gnat" deleted

$ kubectl get all -n traefik

No resources found.

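No name was specified in the previous step, so the automatically generated name was dinky-gnat.

Redeploy (run from inside the extracted traefik chart directory):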

$ helm install . --name traefik --namespace traefik

NAME: traefik

LAST DEPLOYED: Sun Dec 9 00:02:20 2018

NAMESPACE: traefik

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME AGE

traefik 0s

==> v1/Service

traefik-dashboard 0s

traefik 0s

==> v1beta1/Deployment

traefik 0s

==> v1beta1/Ingress

traefik-dashboard 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

traefik-c495994c7-5v6pj 0/1 ContainerCreating 0 0s

NOTES:

1. Get Traefik's load balancer IP/hostname:

NOTE: It may take a few minutes for this to become available.

You can watch the status by running:

$ kubectl get svc traefik --namespace traefik -w

Once 'EXTERNAL-IP' is no longer '<pending>':

$ kubectl describe svc traefik --namespace traefik | grep Ingress | awk '{print $3}'

2. Configure DNS records corresponding to Kubernetes ingress resources to point to the load balancer IP/hostname found in step 1

You can see that the traefik dashboard has been deployed.

$ kubectl get all -n traefik

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/traefik 1 1 1 1 2m

NAME DESIRED CURRENT READY AGE

rs/traefik-c495994c7 1 1 1 2m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/traefik 1 1 1 1 2m

NAME DESIRED CURRENT READY AGE

rs/traefik-c495994c7 1 1 1 2m

NAME READY STATUS RESTARTS AGE

po/traefik-c495994c7-5v6pj 1/1 Running 0 2m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/traefik LoadBalancer 10.0.57.215 114.67.90.230 80:30954/TCP,443:31037/TCP 2m

svc/traefik-dashboard ClusterIP 10.0.63.18 <none> 80/TCP 2m

The Traefik pod logs contain errors, caused by insufficient permissions:

$ kubectl logs traefik-c495994c7-5v6pj -n traefik

E1208 16:57:39.036588 1 reflector.go:205] github.com/containous/traefik/vendor/k8s.io/client-go/informers/factory.go:86: Failed to list *v1.Service: services is forbidden: User "system:serviceaccount:traefik:default" cannot list services at the cluster scope

E1208 16:57:39.037329 1 reflector.go:205] github.com/containous/traefik/vendor/k8s.io/client-go/informers/factory.go:86: Failed to list *v1.Endpoints: endpoints is forbidden: User "system:serviceaccount:traefik:default" cannot list endpoints at the cluster scope

E1208 16:57:39.038327 1 reflector.go:205] github.com/containous/traefik/vendor/k8s.io/client-go/informers/factory.go:86: Failed to list *v1beta1.Ingress: ingresses.extensions is forbidden: User "system:serviceaccount:traefik:default" cannot list ingresses.extensions at the cluster scope
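
The same permission gap can be confirmed without reading pod logs by asking the API server directly with `kubectl auth can-i` and impersonating the service account named in the errors. The sketch below checks the three resources from the log lines. The function name is made up for illustration, and the caller needs impersonation rights, which a cluster admin normally has.

```shell
# Sketch: ask whether a service account may list the resources Traefik watches.
# Usage: check_traefik_permissions <namespace> <serviceaccount>
check_traefik_permissions() {
  subject="system:serviceaccount:$1:$2"
  for resource in services endpoints ingresses.extensions; do
    printf '%s: ' "$resource"
    # can-i prints yes/no; it may exit non-zero on "no", so keep looping
    kubectl auth can-i list "$resource" --all-namespaces --as "$subject" || true
  done
}
```

Running `check_traefik_permissions traefik default` before RBAC is enabled would be expected to answer `no` for each resource, matching the errors above.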

Enable RBAC:

$ vi values.yaml

rbac:
  enabled: true
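
Instead of editing values.yaml, the same settings can be supplied on the command line with `--set`, which leaves the chart files untouched. The sketch below wraps the install command used in this article with the three values changed so far. The function name is made up for illustration, and it assumes the current directory is the extracted traefik chart and that any earlier release named traefik has already been purged.

```shell
# Sketch: install the local chart with dashboard and RBAC enabled via --set.
# Assumes the working directory is the extracted traefik chart.
install_traefik_with_overrides() {
  helm install . --name traefik --namespace traefik \
    --set dashboard.enabled=true \
    --set dashboard.domain=traefik.example.com \
    --set rbac.enabled=true
}
```

Values given with `--set` take precedence over those in values.yaml, so this approach is convenient for experiments, while an edited values file is easier to keep under version control.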

Delete the previous release:

$ helm delete traefik --purge

release "traefik" deleted

Redeploy:

$ helm install . --name traefik --namespace traefik

NAME: traefik

LAST DEPLOYED: Sun Dec 9 01:01:27 2018

NAMESPACE: traefik

STATUS: DEPLOYED

RESOURCES:

==> v1/Service

NAME AGE

traefik-dashboard 1s

traefik 1s

==> v1beta1/Deployment

traefik 1s

==> v1beta1/Ingress

traefik-dashboard 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

traefik-54bb9fbf87-8cgwg 0/1 ContainerCreating 0 1s

==> v1/ConfigMap

NAME AGE

traefik 1s

==> v1/ServiceAccount

traefik 1s

==> v1/ClusterRole

traefik 1s

==> v1/ClusterRoleBinding

traefik 1s

NOTES:

1. Get Traefik's load balancer IP/hostname:

NOTE: It may take a few minutes for this to become available.

You can watch the status by running:

$ kubectl get svc traefik --namespace traefik -w

Once 'EXTERNAL-IP' is no longer '<pending>':

$ kubectl describe svc traefik --namespace traefik | grep Ingress | awk '{print $3}'

2. Configure DNS records corresponding to Kubernetes ingress resources to point to the load balancer IP/hostname found in step 1

The ClusterRole, ClusterRoleBinding, and ServiceAccount have all been created successfully.

Check the Pod logs; startup succeeded:

$ kubectl logs po/traefik-54bb9fbf87-8cgwg -n traefik

{"level":"info","msg":"Using TOML configuration file /config/traefik.toml","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Traefik version v1.7.4 built on 2018-10-30_10:44:30AM","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"\nStats collection is disabled.\nHelp us improve Traefik by turning this feature on :)\nMore details on: https://docs.traefik.io/basics/#collected-data\n","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Preparing server http \u0026{Address::80 TLS:\u003cnil\u003e Redirect:\u003cnil\u003e Auth:\u003cnil\u003e WhitelistSourceRange:[] WhiteList:\u003cnil\u003e Compress:true ProxyProtocol:\u003cnil\u003e ForwardedHeaders:0xc00048f860} with readTimeout=0s writeTimeout=0s idleTimeout=3m0s","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Preparing server httpn \u0026{Address::8880 TLS:\u003cnil\u003e Redirect:\u003cnil\u003e Auth:\u003cnil\u003e WhitelistSourceRange:[] WhiteList:\u003cnil\u003e Compress:true ProxyProtocol:\u003cnil\u003e ForwardedHeaders:0xc00048f880} with readTimeout=0s writeTimeout=0s idleTimeout=3m0s","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Preparing server traefik \u0026{Address::8080 TLS:\u003cnil\u003e Redirect:\u003cnil\u003e Auth:\u003cnil\u003e WhitelistSourceRange:[] WhiteList:\u003cnil\u003e Compress:false ProxyProtocol:\u003cnil\u003e ForwardedHeaders:0xc00048f8a0} with readTimeout=0s writeTimeout=0s idleTimeout=3m0s","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Starting provider configuration.ProviderAggregator {}","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Starting server on :80","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Starting server on :8880","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Starting server on :8080","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Starting provider *kubernetes.Provider {\"Watch\":true,\"Filename\":\"\",\"Constraints\":[],\"Trace\":false,\"TemplateVersion\":0,\"DebugLogGeneratedTemplate\":false,\"Endpoint\":\"\",\"Token\":\"\",\"CertAuthFilePath\":\"\",\"DisablePassHostHeaders\":false,\"EnablePassTLSCert\":false,\"Namespaces\":null,\"LabelSelector\":\"\",\"IngressClass\":\"\",\"IngressEndpoint\":null}","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"ingress label selector is: \"\"","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Creating in-cluster Provider client","time":"2018-12-08T17:01:28Z"}

{"level":"info","msg":"Server configuration reloaded on :80","time":"2018-12-08T17:01:29Z"}

{"level":"info","msg":"Server configuration reloaded on :8880","time":"2018-12-08T17:01:29Z"}

{"level":"info","msg":"Server configuration reloaded on :8080","time":"2018-12-08T17:01:29Z"}

{"level":"info","msg":"Server configuration reloaded on :80","time":"2018-12-08T17:01:44Z"}

{"level":"info","msg":"Server configuration reloaded on :8880","time":"2018-12-08T17:01:44Z"}

{"level":"info","msg":"Server configuration reloaded on :8080","time":"2018-12-08T17:01:44Z"}

After a successful deployment, traefik has in fact already added an ingress rule for its dashboard:

$ kubectl get ingress -n traefik

NAME                HOSTS                 ADDRESS   PORTS   AGE
traefik-dashboard   traefik.example.com             80      3m

The rule is "host: traefik.example.com", meaning that it matches requests whose Host header is "traefik.example.com":

$ kubectl get ingress/traefik-dashboard -n traefik -o yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  creationTimestamp: 2018-12-08T17:01:27Z
  generation: 1
  labels:
    app: traefik
    chart: traefik-1.55.0
    heritage: Tiller
    release: traefik
  name: traefik-dashboard
  namespace: traefik
  resourceVersion: "141453"
  selfLink: /apis/extensions/v1beta1/namespaces/traefik/ingresses/traefik-dashboard
  uid: e6f0caf2-fb0a-11e8-b7e3-fa163e934135
spec:
  rules:
  - host: traefik.example.com
    http:
      paths:
      - backend:
          serviceName: traefik-dashboard
          servicePort: 80
status:
  loadBalancer: {}

Look up Traefik's external IP, which is 114.67.90.234:

$ kubectl get svc -n traefik

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

traefik LoadBalancer 10.0.62.185 114.67.90.234 80:31639/TCP,443:30379/TCP 9m

traefik-dashboard ClusterIP 10.0.60.84 <none> 80/TCP 9m

Configure the local hosts file, adding an entry for the traefik dashboard:

114.67.90.234 traefik.example.com

Open traefik.example.com in a browser.
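
The host-based rule can also be exercised from the command line without touching the hosts file, by requesting the external IP directly and setting the Host header by hand. The sketch below prints only the HTTP status code. The function name is made up for illustration, and the exact status returned depends on the Traefik and dashboard configuration, so no particular code is assumed here.

```shell
# Sketch: request Traefik by IP while sending the Host header the rule expects.
# Usage: check_ingress_host <external-ip> <hostname>
check_ingress_host() {
  curl --silent --show-error --output /dev/null \
    --write-out '%{http_code}\n' \
    --header "Host: $2" "http://$1/"
}
```

For this deployment the call would be `check_ingress_host 114.67.90.234 traefik.example.com`. Traefik normally answers 404 when no rule matches the Host header, so any other status suggests the request reached the dashboard rule.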

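Note: JD Cloud blocks access through domain names that have not completed ICP filing!

Exposing the Traefik Dashboard on a Public IP

Create a LoadBalancer service so that the dashboard can be reached from the public network: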

$ kubectl get svc -n traefik

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

traefik LoadBalancer 10.0.62.185 114.67.90.234 80:31639/TCP,443:30379/TCP 52m

traefik-dashboard ClusterIP 10.0.60.84 <none> 80/TCP 52m

$ kubectl get deployment -n traefik

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

traefik 1 1 1 1 52m

$ kubectl expose deployment/traefik -n traefik --port 8080 --target-port 8080 --type LoadBalancer --name traefik-dashboard-external

service "traefik-dashboard-external" exposed

The load balancer was created successfully:

$ kubectl get svc/traefik-dashboard-external -n traefik

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

traefik-dashboard-external LoadBalancer 10.0.59.85 114.67.90.235 8080:31369/TCP 36s

Access the load balancer at IP:8080:

114.67.90.235:8080

For the meaning of each configuration parameter in the Helm chart, see:

https://github.com/helm/charts/tree/master/stable/traefik#configuration
