2 Python k-nearest neighbors algorithm

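For readability, here is the complete code first, so you get an overall picture. The sections below then explain it step by step.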

```python
# -*- coding: utf-8 -*-
"""
Created on Sat Sep 17 15:31:01 2016

@author: 打江南走过一阵
"""
from numpy import *
import operator
from os import listdir


def createDataSet():
    group = array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 0.1]])
    labels = ['A', 'A', 'B', 'B']
    return group, labels


def classify0(inX, dataSet, labels, k):
    dataSetSize = dataSet.shape[0]
    diffMat = tile(inX, (dataSetSize, 1)) - dataSet
    sqDiffMat = diffMat**2
    sqDistances = sqDiffMat.sum(axis=1)
    distances = sqDistances**0.5
    sortedDistIndicies = distances.argsort()
    classCount = {}
    for i in range(k):
        voteIlabel = labels[sortedDistIndicies[i]]
        classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
    sortedClassCount = sorted(classCount.items(),
                              key=operator.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]


def file2matrix(filename):
    with open(filename) as fr:
        lines = fr.readlines()              # read the file once
    numberOfLines = len(lines)              # get the number of lines in the file
    returnMat = zeros((numberOfLines, 3))   # prepare matrix to return
    classLabelVector = []                   # prepare labels return
    index = 0
    for line in lines:
        line = line.strip()
        listFromLine = line.split('\t')
        returnMat[index, :] = [float(x) for x in listFromLine[0:3]]
        classLabelVector.append(int(listFromLine[-1]))
        index += 1
    return returnMat, classLabelVector


def autoNorm(dataSet):
    minVals = dataSet.min(0)
    maxVals = dataSet.max(0)
    ranges = maxVals - minVals
    m = dataSet.shape[0]
    normDataSet = dataSet - tile(minVals, (m, 1))
    normDataSet = normDataSet / tile(ranges, (m, 1))  # element wise divide
    return normDataSet, ranges, minVals


def datingClassTest():
    hoRatio = 0.10  # hold out 10%
    datingDataMat, datingLabels = file2matrix('datingTestSet2.txt')  # load data set from file
    normMat, ranges, minVals = autoNorm(datingDataMat)
    m = normMat.shape[0]
    numTestVecs = int(m * hoRatio)
    errorCount = 0.0
    for i in range(numTestVecs):
        classifierResult = classify0(normMat[i, :], normMat[numTestVecs:m, :],
                                     datingLabels[numTestVecs:m], 3)
        print("the classifier came back with: %d, the real answer is: %d"
              % (classifierResult, datingLabels[i]))
        if classifierResult != datingLabels[i]:
            errorCount += 1.0
    print("the total error rate is: %f" % (errorCount / float(numTestVecs)))
    print(errorCount)


# Enter a person's information to get a prediction of how much you will like them.
# input() (raw_input() in Python 2) reads a line typed by the user and returns it as a string.
def classifyPerson():
    resultList = ['not at all', 'in small doses', 'in large doses']
    percentTats = float(input("percentage of time spent playing video games?"))
    ffMiles = float(input("frequent flier miles earned per year?"))
    iceCream = float(input("liters of ice cream consumed per year?"))
    datingDataMat, datingLabels = file2matrix('datingTestSet2.txt')
    normMat, ranges, minVals = autoNorm(datingDataMat)
    inArr = array([ffMiles, percentTats, iceCream])
    classifierResult = classify0((inArr - minVals) / ranges, normMat, datingLabels, 3)
    print('You will probably like this person:', resultList[classifierResult - 1])


def img2vector(filename):
    returnVect = zeros((1, 1024))
    with open(filename) as fr:
        for i in range(32):
            lineStr = fr.readline()
            for j in range(32):
                returnVect[0, 32 * i + j] = int(lineStr[j])
    return returnVect


def handwritingClassTest():
    hwLabels = []
    trainingFileList = listdir('trainingDigits')  # load the training set
    m = len(trainingFileList)
    trainingMat = zeros((m, 1024))
    for i in range(m):
        fileNameStr = trainingFileList[i]
        fileStr = fileNameStr.split('.')[0]  # take off .txt
        classNumStr = int(fileStr.split('_')[0])
        hwLabels.append(classNumStr)
        trainingMat[i, :] = img2vector('trainingDigits/%s' % fileNameStr)
    testFileList = listdir('testDigits')  # iterate through the test set
    errorCount = 0.0
    mTest = len(testFileList)
    for i in range(mTest):
        fileNameStr = testFileList[i]
        fileStr = fileNameStr.split('.')[0]  # take off .txt
        classNumStr = int(fileStr.split('_')[0])
        vectorUnderTest = img2vector('testDigits/%s' % fileNameStr)
        classifierResult = classify0(vectorUnderTest, trainingMat, hwLabels, 3)
        print("the classifier came back with: %d, the real answer is: %d"
              % (classifierResult, classNumStr))
        if classifierResult != classNumStr:
            errorCount += 1.0
    print("\nthe total number of errors is: %d" % errorCount)
    print("\nthe total error rate is: %f" % (errorCount / float(mTest)))
```

2.1 A brief description of the algorithm

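The algorithm works by measuring the distances between feature vectors. Once those distances are known, new data can be classified.

It is called kNN for short.

Given: a training set and the label of every training sample.

Then: compare the new data point with every sample in the training set and find the k most similar (nearest) samples. Assign the new point the class that occurs most often among those k neighbors.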
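The steps just described can also be written without numpy. The sketch below uses plain Python, and the function name knn_classify is hypothetical. It mirrors classify0, but counts the votes with collections.Counter. With the four points from createDataSet, the query [0, 0] has B, B and A as its three nearest neighbors, so B wins the vote.

```python
import math
from collections import Counter


def knn_classify(x, points, labels, k):
    """Return the majority label among the k training points nearest to x."""
    # Euclidean distance from x to every training point
    dists = [math.sqrt(sum((a - b) ** 2 for a, b in zip(x, p))) for p in points]
    # Indices of the training points, nearest first
    order = sorted(range(len(points)), key=lambda i: dists[i])
    # Count the labels of the k nearest points and return the most common one
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


group = [[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]]
labels = ['A', 'A', 'B', 'B']
print(knn_classify([0, 0], group, labels, 3))  # B
```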

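```python
from numpy import *  # scientific computing package
import operator      # standard-library operator module


# Create the data set
def createDataSet():
    group = array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 0.1]])
    labels = ['A', 'A', 'B', 'B']
    return group, labels


# Core of the algorithm
# inX: the input vector to classify
# dataSet: the training samples, one per row
# labels: the label vector, one label per training sample
# k: the number of nearest neighbors that vote
def classify0(inX, dataSet, labels, k):
    # Distance calculation
    # .shape[0] is the length of the first dimension: the number of rows, i.e. training samples
    dataSetSize = dataSet.shape[0]
    # tile (a numpy function) stacks dataSetSize copies of inX (4 in this example),
    # so diffMat holds the difference between the target and each training sample
    diffMat = tile(inX, (dataSetSize, 1)) - dataSet
    sqDiffMat = diffMat**2  # square every element
    # axis=1 sums along each row (one result per row); axis=0 would sum down each column
    sqDistances = sqDiffMat.sum(axis=1)
    distances = sqDistances**0.5  # square root gives the Euclidean distance
    sortedDistIndicies = distances.argsort()  # indices that sort the distances in ascending order
    # Let the k nearest points vote
    classCount = {}
    for i in range(k):
        voteIlabel = labels[sortedDistIndicies[i]]
        classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
    # Sort by vote count, largest first
    sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]
```

Further explanation of the functions: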

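.shape gives the length of each dimension of an array. As everywhere in Python, dimensions are indexed from 0, so .shape[0] is the number of rows.

tile is a numpy function that repeats an array. In the code above, tile(inX,(dataSetSize,1)) repeats inX dataSetSize times along the rows and only once along the columns, so the columns are not repeated.

.sum is numpy's method for summing the elements of an array. With axis=1 it sums across the columns of each row: it adds up the elements of each row and returns one value per row.

.argsort is numpy's sorting function. It returns the indices that would sort the array in ascending order, not the sorted values themselves.

classCount = {} creates a dictionary. The dictionary method get(key, 0) returns the current value stored for key, or 0 if the key is not present yet, so classCount works as a counter of votes per label.

sorted is a built-in function; run help(sorted) to see how it is used. The itemgetter function in the operator module does what its name suggests: it fetches the element at a given index. Here itemgetter(1) picks the vote count out of each (label, count) pair.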
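The short numpy session below runs each of these functions on the createDataSet points, with the query inX = [0, 0]. It also shows that plain broadcasting (inX - dataSet) gives the same differences as the explicit tile. That equivalence is standard numpy behavior; it is not something the original code relies on.

```python
import operator
import numpy as np

inX = np.array([0.0, 0.0])
dataSet = np.array([[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
labels = ['A', 'A', 'B', 'B']

print(dataSet.shape)                         # (4, 2): 4 rows (samples), 2 columns (features)

tiled = np.tile(inX, (dataSet.shape[0], 1))  # inX repeated on 4 rows, columns not repeated
print(tiled.shape)                           # (4, 2)

sq = (tiled - dataSet) ** 2
print(sq.sum(axis=1))                        # one sum per row: squared distance to each sample
print(sq.sum(axis=0))                        # one sum per column, for comparison

order = (sq.sum(axis=1) ** 0.5).argsort()
print(order)                                 # [2 3 1 0]: indices, nearest sample first

classCount = {}
for i in order[:3]:                          # k = 3
    classCount[labels[i]] = classCount.get(labels[i], 0) + 1  # absent key counts as 0
ranked = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
print(ranked)                                # [('B', 2), ('A', 1)]

# Broadcasting gives the same differences without an explicit tile
print(np.array_equal(tiled - dataSet, inX - dataSet))  # True
```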

2.2 Example: improving matches on a dating site

2.2.1 Prepare the data: parse data from a text file

```python
def file2matrix(filename):
    with open(filename) as fr:
        lines = fr.readlines()              # read the file once
    numberOfLines = len(lines)              # get the number of lines in the file
    returnMat = zeros((numberOfLines, 3))   # prepare matrix to return
    classLabelVector = []                   # prepare labels return
    index = 0
    for line in lines:
        line = line.strip()
        listFromLine = line.split('\t')
        returnMat[index, :] = [float(x) for x in listFromLine[0:3]]  # first three columns are features
        classLabelVector.append(int(listFromLine[-1]))                # last column is the label
        index += 1
    return returnMat, classLabelVector
```

```python
>>> import importlib
>>> importlib.reload(kNN)
>>> datingDataMat, datingLabels = kNN.file2matrix('datingTestSet2.txt')
```

Use datingTestSet2.txt here, not datingTestSet.txt as printed in the book. datingTestSet.txt stores the class labels as text instead of integers, so int() fails on it.

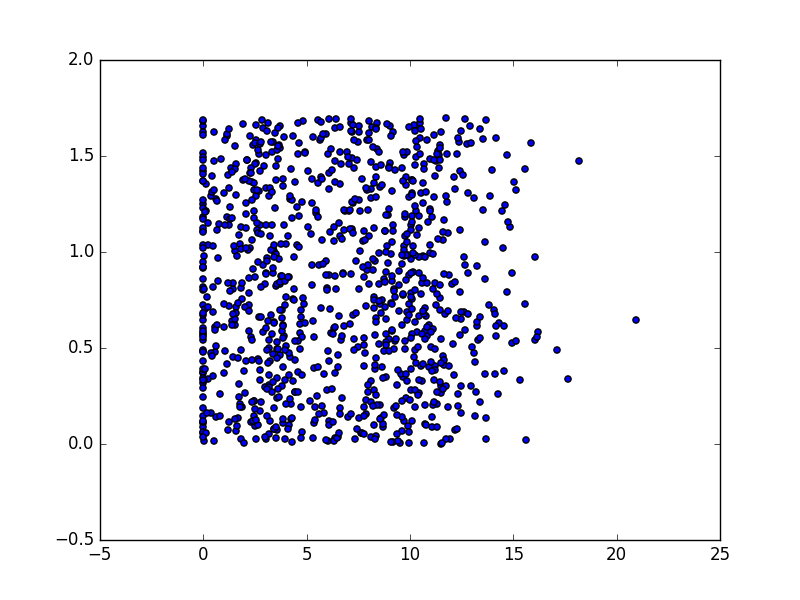
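file2matrix expects each line to hold three tab-separated numeric features followed by an integer label. The sketch below parses that layout from any file-like object, so it can be tried without datingTestSet2.txt. The function name parse_dating_lines and the sample lines are hypothetical, not taken from the real data set. Unlike file2matrix, it skips blank lines, which would otherwise make the number parsing fail.

```python
import io


def parse_dating_lines(f, num_features=3):
    """Parse lines of `num_features` tab-separated numbers followed by an integer label."""
    features, labels = [], []
    for line in f:
        line = line.strip()
        if not line:                      # skip blank lines instead of failing on them
            continue
        fields = line.split('\t')
        features.append([float(v) for v in fields[:num_features]])
        labels.append(int(fields[-1]))    # the last column is the class label
    return features, labels


# Made-up lines in the same layout as datingTestSet2.txt (one blank line in between)
sample = io.StringIO("1000\t2.5\t0.5\t3\n\n500\t7.0\t1.5\t2\n")
features, labels = parse_dating_lines(sample)
print(features)  # [[1000.0, 2.5, 0.5], [500.0, 7.0, 1.5]]
print(labels)    # [3, 2]
```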

2.2.2 Analyze the data: create scatter plots with Matplotlib

```python
import matplotlib.pyplot as plt

fig = plt.figure()
ax = fig.add_subplot(111)
ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2])
plt.show()
```

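Note: in the author's environment, plt.show() did not display the figure at first. The author reports that it appeared after adding the two lines below, which should run before matplotlib.pyplot is imported. Be aware that 'Agg' is a non-interactive backend that only renders to files. With it, the figure is normally saved with plt.savefig() rather than shown in a window. For an on-screen window, an interactive backend such as 'TkAgg' is the usual choice.

```python
import matplotlib as mpl
mpl.use('Agg')
```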

Figure 2-3 Scatter plot of the dating data without class labels

Use color and marker size to show the class of each data point. The code is as follows:

```python
>>> from numpy import array
>>> ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2], 15.0*array(datingLabels), 15.0*array(datingLabels))
```

Figure 2-4 Scatter plot of the dating data with class labels

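Figures 2-3 and 2-4 plot the second and third columns of datingDataMat. Figure 2-4 makes it easier to tell which class each point belongs to, but it is still hard to draw any firm conclusions from it.

To address this, Figure 2-5 plots the first and second columns of datingDataMat. These columns clearly separate the data into three regions, one per class.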

```python
ax.scatter(datingDataMat[:, 0], datingDataMat[:, 1], 15.0*array(datingLabels), 15.0*array(datingLabels))
```

Figure 2-5 Scatter plot of the dating data with class labels

2.2.3 Prepare the data: normalization

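Features with large values would otherwise dominate the distance calculation and drown out features with small values. To prevent this, the data must be normalized before distances are computed.

Each value is rescaled into the range 0 to 1 with: newValue = (oldValue - min) / (max - min)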
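Here is a worked example of the formula in plain Python, applied to one hypothetical column of frequent flier miles. A new input must be scaled with the minimum and range of the training data, which is what classifyPerson does with minVals and ranges. The helper name min_max_normalize is hypothetical. It assumes max > min, since a constant column would cause a division by zero.

```python
def min_max_normalize(column):
    """Rescale values to [0, 1]: newValue = (oldValue - min) / (max - min).

    Assumes max > min; a constant column would divide by zero.
    """
    lo, hi = min(column), max(column)
    rng = hi - lo
    return [(v - lo) / rng for v in column], lo, rng


miles = [0.0, 20000.0, 40000.0]        # hypothetical frequent-flier-miles column
scaled, lo, rng = min_max_normalize(miles)
print(scaled)                          # [0.0, 0.5, 1.0]

# A new input is scaled with the TRAINING min and range, as classifyPerson does
print((30000.0 - lo) / rng)            # 0.75
```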

```python
def autoNorm(dataSet):
    minVals = dataSet.min(0)   # minimum of each column
    maxVals = dataSet.max(0)   # maximum of each column
    ranges = maxVals - minVals
    m = dataSet.shape[0]
    normDataSet = dataSet - tile(minVals, (m, 1))
    normDataSet = normDataSet / tile(ranges, (m, 1))  # element wise divide
    return normDataSet, ranges, minVals
```

After reloading kNN.py, running the normalization function gives the following result:

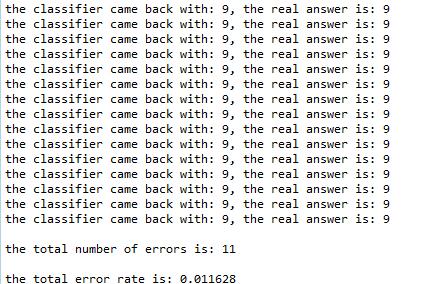

2.2.4 Test the algorithm: verify the classifier as a complete program

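Define a counter variable and increment it by 1 each time the classifier misclassifies a data point. When the program finishes, dividing the counter by the number of test data points gives the error rate.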

```python
def datingClassTest():
    hoRatio = 0.10  # hold out 10%
    datingDataMat, datingLabels = file2matrix('datingTestSet2.txt')  # load data set from file
    normMat, ranges, minVals = autoNorm(datingDataMat)
    m = normMat.shape[0]
    numTestVecs = int(m * hoRatio)
    errorCount = 0.0
    for i in range(numTestVecs):
        classifierResult = classify0(normMat[i, :], normMat[numTestVecs:m, :],
                                     datingLabels[numTestVecs:m], 3)
        print("the classifier came back with: %d, the real answer is: %d"
              % (classifierResult, datingLabels[i]))
        if classifierResult != datingLabels[i]:
            errorCount += 1.0
    print("the total error rate is: %f" % (errorCount / float(numTestVecs)))
    print(errorCount)
```

Reload kNN and enter:

```python
>>> kNN.datingClassTest()
```

The output shows an error rate of 5%.
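The hold-out scheme in datingClassTest can be sketched independently of the dating data: the first part of the rows is used for testing and the rest as training data. Everything in this sketch is hypothetical, including holdout_error_rate, the 1-nearest-neighbor stand-in nearest_label, and the ten one-dimensional samples. Sample 1 is deliberately given a noisy label. Taking the first rows as the test set is only sound if the file is not ordered by class; otherwise the rows should be shuffled first.

```python
def nearest_label(x, train_x, train_y):
    """Hypothetical 1-nearest-neighbor stand-in for classify0 (squared Euclidean distance)."""
    best = min(range(len(train_x)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(x, train_x[i])))
    return train_y[best]


def holdout_error_rate(samples, labels, classify, ratio=0.10):
    """Test on the first `ratio` of the rows, train on the rest, return the error rate."""
    num_test = int(len(samples) * ratio)
    train_x, train_y = samples[num_test:], labels[num_test:]
    errors = 0
    for x, y in zip(samples[:num_test], labels[:num_test]):
        if classify(x, train_x, train_y) != y:
            errors += 1  # count every misclassified test point
    return errors / num_test


samples = [[float(i)] for i in range(10)]
labels = [1, 2, 1, 1, 1, 2, 2, 2, 2, 2]  # sample 1 carries a deliberately noisy label
print(holdout_error_rate(samples, labels, nearest_label, ratio=0.2))  # 0.5
```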

2.2.5 Use the algorithm: build a complete working system

```python
# Enter a person's information to get a prediction of how much you will like them.
# input() (raw_input() in Python 2) reads a line typed by the user and returns it as a string.
def classifyPerson():
    resultList = ['not at all', 'in small doses', 'in large doses']
    percentTats = float(input("percentage of time spent playing video games?"))
    ffMiles = float(input("frequent flier miles earned per year?"))
    iceCream = float(input("liters of ice cream consumed per year?"))
    datingDataMat, datingLabels = file2matrix('datingTestSet2.txt')
    normMat, ranges, minVals = autoNorm(datingDataMat)
    inArr = array([ffMiles, percentTats, iceCream])
    classifierResult = classify0((inArr - minVals) / ranges, normMat, datingLabels, 3)
    print('You will probably like this person:', resultList[classifierResult - 1])
```

The classifier is now complete. Users can enter their own data and get a prediction of how much they will like the person.
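classifyPerson reads its inputs with input(), which makes it awkward to test. Below is a minimal sketch of the same steps with the three values passed as arguments instead. The function name classify_person and the six training rows and their labels are hypothetical. The sketch shows two things. First, the query is normalized with the training minimum and range. Second, its features are listed in the same column order as the data file (miles, game time, ice cream), just as inArr is built in classifyPerson.

```python
import math
from collections import Counter

RESULT_LIST = ['not at all', 'in small doses', 'in large doses']


def classify_person(ff_miles, percent_games, ice_cream, data, labels, k=3):
    """Same steps as classifyPerson, but the three values are arguments, not input()."""
    cols = list(zip(*data))
    mins = [min(c) for c in cols]
    ranges = [max(c) - min(c) for c in cols]

    def norm(row):
        # Scale each feature with the TRAINING min and range
        return [(v - lo) / r for v, lo, r in zip(row, mins, ranges)]

    # Same column order as the data file: miles, game time, ice cream
    query = norm([ff_miles, percent_games, ice_cream])
    train = [norm(row) for row in data]
    dist = [math.sqrt(sum((a - b) ** 2 for a, b in zip(query, t))) for t in train]
    nearest = sorted(range(len(train)), key=lambda i: dist[i])[:k]
    label = Counter(labels[i] for i in nearest).most_common(1)[0][0]
    return RESULT_LIST[label - 1]  # labels are 1, 2, 3


# Hypothetical training rows: [miles, % time gaming, liters of ice cream]
data = [[1000, 1.0, 0.2], [2000, 2.0, 0.3],
        [20000, 10.0, 1.0], [22000, 11.0, 1.1],
        [40000, 5.0, 0.5], [42000, 6.0, 0.6]]
labels = [1, 1, 2, 2, 3, 3]
print(classify_person(21000, 10.5, 1.05, data, labels))  # in small doses
```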

2.3 Example: a handwriting recognition system

```python
# Convert an image into a test vector
def img2vector(filename):
    returnVect = zeros((1, 1024))
    with open(filename) as fr:
        for i in range(32):
            lineStr = fr.readline()
            for j in range(32):
                returnVect[0, 32 * i + j] = int(lineStr[j])
    return returnVect
```
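img2vector flattens a 32x32 grid of '0'/'1' characters row by row, so pixel (i, j) lands at position 32*i + j. The sketch below applies the same indexing to a hypothetical 4x4 grid, read from an io.StringIO instead of a file. The function name text_image_to_vector is also hypothetical.

```python
import io


def text_image_to_vector(f, size=32):
    """Flatten a size x size grid of '0'/'1' characters into one list, row by row."""
    vec = [0] * (size * size)
    for i in range(size):
        line = f.readline()
        for j in range(size):
            vec[size * i + j] = int(line[j])  # pixel (i, j) goes to position size*i + j
    return vec


grid = io.StringIO("0110\n1001\n1001\n0110\n")  # hypothetical 4x4 "image"
vec = text_image_to_vector(grid, size=4)
print(vec)  # [0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0]
```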

```python
# Put `from os import listdir` at the top of the file

# Test code
def handwritingClassTest():
    hwLabels = []
    trainingFileList = listdir('trainingDigits')  # load the training set
    m = len(trainingFileList)
    trainingMat = zeros((m, 1024))
    for i in range(m):
        fileNameStr = trainingFileList[i]
        fileStr = fileNameStr.split('.')[0]  # take off .txt
        classNumStr = int(fileStr.split('_')[0])
        hwLabels.append(classNumStr)
        trainingMat[i, :] = img2vector('trainingDigits/%s' % fileNameStr)
    testFileList = listdir('testDigits')  # iterate through the test set
    errorCount = 0.0
    mTest = len(testFileList)
    for i in range(mTest):
        fileNameStr = testFileList[i]
        fileStr = fileNameStr.split('.')[0]  # take off .txt
        classNumStr = int(fileStr.split('_')[0])
        vectorUnderTest = img2vector('testDigits/%s' % fileNameStr)
        classifierResult = classify0(vectorUnderTest, trainingMat, hwLabels, 3)
        print("the classifier came back with: %d, the real answer is: %d"
              % (classifierResult, classNumStr))
        if classifierResult != classNumStr:
            errorCount += 1.0
    print("\nthe total number of errors is: %d" % errorCount)
    print("\nthe total error rate is: %f" % (errorCount / float(mTest)))
```

```python
>>> kNN.handwritingClassTest()
```

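Running it prints one line per test file, comparing the prediction with the true digit. It then prints the total number of errors and the error rate.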
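handwritingClassTest takes each sample's true digit from its file name. It drops the extension and keeps the part before the underscore, so it assumes names of the form <digit>_<index>.txt. The helper label_from_filename below is a hypothetical extraction of that step.

```python
def label_from_filename(name):
    """Return the digit encoded in a name like '9_45.txt' (the part before '_')."""
    stem = name.split('.')[0]        # drop the .txt extension
    return int(stem.split('_')[0])   # the part before '_' is the class label


print(label_from_filename('9_45.txt'))  # 9
```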
