I. Inspecting and cleaning the data on Linux

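Format of the Sogou data:

access time\tuser ID\t[query]\trank of the URL in the returned results\tsequence number of the user's click\tURL the user clicked

The user ID is assigned automatically from the cookie the browser sends when it visits the search engine, so different queries entered during the same browser session share the same user ID.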
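To make the record layout concrete, here is a minimal Java sketch that splits one raw line into the six fields listed above. The class name `SogouRecord`, its field names, and the sample line in `main` are hypothetical and are not part of the original project; the sketch also assumes the query is always wrapped in square brackets, as the format line suggests.

```java
// Hypothetical helper for illustration only; not part of the original code.
class SogouRecord {
    final String time, userId, query, url;
    final int rank, clickOrder;

    SogouRecord(String time, String userId, String query,
                int rank, int clickOrder, String url) {
        this.time = time;
        this.userId = userId;
        this.query = query;
        this.rank = rank;
        this.clickOrder = clickOrder;
        this.url = url;
    }

    /** Parses one raw line; returns null unless it has exactly six tab-separated fields. */
    static SogouRecord parse(String line) {
        String[] f = line.split("\t", -1); // -1 keeps empty trailing fields
        if (f.length != 6) return null;
        String query = f[2];
        // Strip the surrounding [ ] if present (assumed from the format description).
        if (query.length() >= 2 && query.startsWith("[") && query.endsWith("]")) {
            query = query.substring(1, query.length() - 1);
        }
        try {
            return new SogouRecord(f[0], f[1], query,
                    Integer.parseInt(f[3].trim()), Integer.parseInt(f[4].trim()), f[5]);
        } catch (NumberFormatException e) {
            return null; // rank or click order was not a number
        }
    }

    public static void main(String[] args) {
        // Made-up sample line, not taken from the real data set.
        SogouRecord r = parse("20111230000005\tuser1\t[hadoop]\t1\t2\thttp://example.com/");
        System.out.println(r.query + " rank=" + r.rank + " order=" + r.clickOrder);
    }
}
```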

1. View the data

[HADOOP@hdp-node-01 ~]$ cd ~

[HADOOP@hdp-node-01 ~]$ less sogou.10k.utf8

2. Count the total number of lines

[HADOOP@hdp-node-01 ~]$ wc -l sogou.500w.utf8

5000000 sogou.500w.utf8

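3. Add year, month, day and hour fields at the front of every line

Bash script (timeextend.sh):

#!/bin/bash

# $1: input file, $2: output file
INFILE=$1

OUTFILE=$2

# Split the leading yyyymmddhh into four tab-separated fields and keep the original timestamp after them
sed 's/^\(....\)\(..\)\(..\)\(..\)/\1\t\2\t\3\t\4\t\1\2\3\4/' "$INFILE" > "$OUTFILE"

Run it: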

[HADOOP@hdp-node-01 ~]$ bash timeextend.sh sogou.500w.utf8 sogou.500w.utf8.addedfields

[HADOOP@hdp-node-01 ~]$ less sogou.500w.utf8.addedfields
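For readers who prefer to see the transformation outside sed, the following Java sketch applies what is intended to be the same rewrite to a single line. The class and method names are hypothetical. Unlike the sed pattern, which matches any ten leading characters, this version assumes the timestamp starts with ten digits and leaves other lines unchanged.

```java
// Hypothetical illustration of the sed rewrite above; not part of the original project.
class TimeExtend {
    /** Prepends year, month, day and hour (tab-separated) and keeps the original timestamp. */
    static String extend(String line) {
        // Groups: $1 = yyyy, $2 = MM, $3 = dd, $4 = HH
        return line.replaceFirst("^(\\d{4})(\\d{2})(\\d{2})(\\d{2})",
                "$1\t$2\t$3\t$4\t$1$2$3$4");
    }

    public static void main(String[] args) {
        // Made-up sample line.
        System.out.println(extend("20111230000005\tuser1\t[hadoop]"));
    }
}
```

After this step every record has ten tab-separated fields, which is why the Java code later reads the hour from index 3 and the keyword from index 6.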

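4. Filter the data: look for lines whose ID or keyword is empty

Run:

[HADOOP@hdp-node-01 ~]$ grep "	[[:space:]]*	" sogou.500w.utf8

[HADOOP@hdp-node-01 ~]$

The characters on either side of [[:space:]]* are literal tabs, typed with Ctrl+V followed by Tab.

The command prints nothing, so no line has an empty ID or keyword field.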
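The same check can be written as a small Java predicate. This is only a sketch under the assumption that it runs on the raw file, where the user ID is field 1 and the query is field 2 (zero-based); the class and method names are made up for the example.

```java
// Hypothetical illustration of the emptiness check; not part of the original project.
class EmptyFieldFilter {
    /** True when the line is too short or its user ID or query field is blank. */
    static boolean hasEmptyIdOrKeyword(String line) {
        String[] f = line.split("\t", -1); // -1 keeps empty fields
        if (f.length < 3) return true;
        return f[1].trim().isEmpty() || f[2].trim().isEmpty();
    }

    public static void main(String[] args) {
        // Made-up sample lines.
        System.out.println(hasEmptyIdOrKeyword("20111230000005\t\t[hadoop]\t1\t1\turl"));
        System.out.println(hasEmptyIdOrKeyword("20111230000005\tuser1\t[hadoop]\t1\t1\turl"));
    }
}
```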

5. Upload the files to HDFS

Run:

[HADOOP@hdp-node-01 ~]$ hdfs dfs -mkdir /sogou/

[HADOOP@hdp-node-01 ~]$ hdfs dfs -put ~/sogou.500w.utf8.addedfields /sogou/

[HADOOP@hdp-node-01 ~]$ hdfs dfs -mkdir /sogou/ext/

[HADOOP@hdp-node-01 ~]$ hdfs dfs -mv /sogou/sogou.500w.utf8.addedfields /sogou/ext/

[HADOOP@hdp-node-01 ~]$ hdfs dfs -mkdir /sogou/ori/

[HADOOP@hdp-node-01 ~]$ hdfs dfs -put sogou.500w.utf8 /sogou/ori/

II. K-means on MapReduce

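1. Introduction

This part implements a clustering algorithm from data mining with MapReduce. I chose k-means: each keyword is turned into numbers by feature hashing, the hour, page rank (the URL's rank in the results) and click order fields are appended as three extra dimensions, and k-means is run on the resulting vectors.

Most keywords contain only a small fraction of the words in the vocabulary, so a word vector consists largely of zeros; in other words, it is sparse. Because the feature dimension equals the vocabulary size, the dimension can become enormous and has to be reduced. We use the most common dimensionality-reduction method for text, the hashing trick (feature hashing).

Feature hashing works as follows. First fix the dimension of the feature vector (100) and initialize it to all zeros. Then hash every character or letter of the keyword to an integer in [0, 99], one for each of the 100 dimensions, and add 5 to that dimension each time the character occurs (5 was chosen with the magnitudes of the hour, page rank and click order fields in mind). Finally append the three existing numeric dimensions, which gives a 103-dimensional feature vector.

This approach has a problem: two different original features can hash to the same position, so their counts accumulate and the feature value suddenly becomes large. To address this we use a variant, the signed hash trick: before adding 5, each character is hashed a second time to -1 or 1, and that sign multiplied by 5 is accumulated into the feature vector.

The distributed k-means runs in three steps, each of which is one MapReduce job. Step one is preparation: process the text and compute the feature vectors by hashing. Step two iteratively computes the new mean vectors (centers). Step three decides, for every record, which cluster it belongs to based on the final center vectors, and writes the result. The input and output formats of steps two and three are shown in the tables below: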
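A minimal sketch of the plain (unsigned) hashing step described above, assuming the character's code point modulo 100 is used as the hash, as in the project's `Prep` class. The class name `FeatureHash` and the constant `WEIGHT` are names invented for this example.

```java
// Hypothetical illustration of unsigned feature hashing; not part of the original project.
class FeatureHash {
    static final int DIM = 100;       // number of hashed dimensions
    static final double WEIGHT = 5.0; // amount added per character occurrence

    /** Maps a keyword to a DIM-dimensional vector by hashing each character. */
    static double[] hash(String keyword) {
        double[] vec = new double[DIM]; // starts as all zeros
        for (char c : keyword.toCharArray()) {
            vec[c % DIM] += WEIGHT;     // bucket index in [0, DIM - 1]
        }
        return vec;
    }

    public static void main(String[] args) {
        // 'a' is code point 97, so bucket 97 receives the weight.
        System.out.println(hash("a")[97]);
    }
}
```

Two different characters whose code points differ by a multiple of 100 land in the same bucket and their weights add up, which is the collision problem discussed next.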
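The signed variant can be sketched as follows. One detail is an assumption of this example rather than the post's code: the sign here is derived from `(c / DIM) % 2` instead of `c % 2`. With an even `DIM` such as 100, `c % 2` is fully determined by `c % DIM`, so characters that collide in a bucket would always share the same sign and could never cancel. The class and method names are hypothetical.

```java
// Hypothetical illustration of the signed hash trick; not part of the original project.
class SignedFeatureHash {
    static final int DIM = 100;
    static final double WEIGHT = 5.0;

    /** Bucket index in [0, DIM - 1]. */
    static int index(char c) {
        return c % DIM;
    }

    /** Second hash: +1 or -1, chosen so that it is not determined by the bucket index. */
    static int sign(char c) {
        return ((c / DIM) % 2 == 0) ? 1 : -1;
    }

    static double[] hash(String keyword) {
        double[] vec = new double[DIM];
        for (char c : keyword.toCharArray()) {
            vec[index(c)] += sign(c) * WEIGHT;
        }
        return vec;
    }

    public static void main(String[] args) {
        // 'a' (97) and '\u00C5' (197) share bucket 97 but get opposite signs.
        System.out.println(hash("a\u00C5")[97]);
    }
}
```

With opposite signs, colliding characters tend to cancel instead of piling up, so a collision no longer inflates a single dimension.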

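Step two (KmeansMapper, KmeansReducer):

| Class | Direction | Key | Value |
|---|---|---|---|
| Mapper | Input | One line of data (Text) | Feature vector (ArrayWritable) |
| Mapper | Output | Center index | Feature vector |
| Reducer | Input | Center index | All feature vectors assigned to that center |
| Reducer | Output | Center index | New center vector |

Step three (LastKmeansMapper):

| Class | Direction | Key | Value |
|---|---|---|---|
| Mapper | Input | One line of data | Feature vector |
| Mapper | Output | One line of data | Center index |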

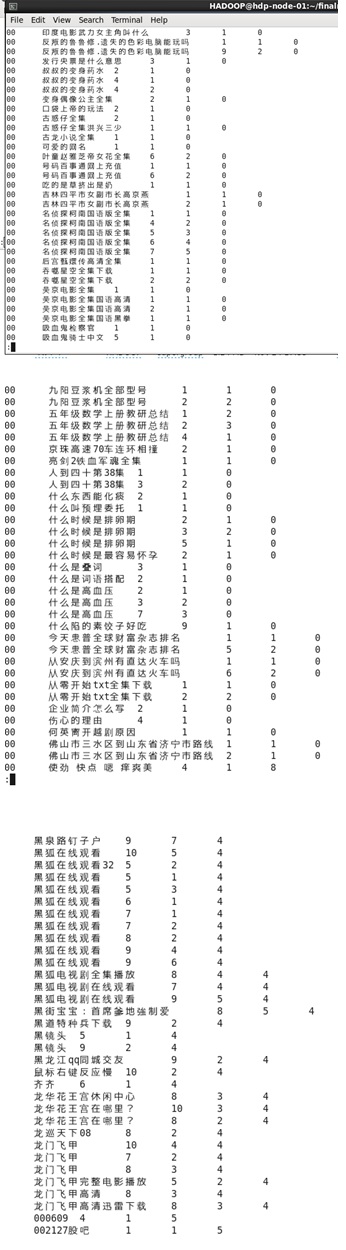
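Before looking at the MapReduce version, it may help to see one k-means iteration in plain Java. This is an in-memory sketch, not the project's code: the assignment loop plays the role of the step-two mapper and the averaging loop plays the role of the reducer. The class name `KMeansStep` and its methods are invented for the example, and keeping the old center for an empty cluster is a choice made here, not something the original code does.

```java
// Hypothetical in-memory illustration of one k-means iteration; not part of the original project.
class KMeansStep {
    /** Index of the center closest to x, using squared Euclidean distance. */
    static int nearest(double[] x, double[][] centers) {
        int best = -1;
        double bestDist = Double.MAX_VALUE;
        for (int c = 0; c < centers.length; c++) {
            double d = 0;
            for (int j = 0; j < x.length; j++) {
                double diff = x[j] - centers[c][j];
                d += diff * diff;
            }
            if (d < bestDist) {
                bestDist = d;
                best = c;
            }
        }
        return best;
    }

    /** Assigns every point to its nearest center and returns the new centers (cluster means). */
    static double[][] iterate(double[][] points, double[][] centers) {
        int k = centers.length, dim = centers[0].length;
        double[][] sums = new double[k][dim];
        int[] counts = new int[k];
        for (double[] p : points) {              // "map": emit (center index, vector)
            int c = nearest(p, centers);
            counts[c]++;
            for (int j = 0; j < dim; j++) sums[c][j] += p[j];
        }
        double[][] next = new double[k][];
        for (int c = 0; c < k; c++) {            // "reduce": average per center
            if (counts[c] == 0) {                // empty cluster: keep the old center
                next[c] = centers[c].clone();
                continue;
            }
            next[c] = new double[dim];
            for (int j = 0; j < dim; j++) next[c][j] = sums[c][j] / counts[c];
        }
        return next;
    }

    public static void main(String[] args) {
        double[][] pts = {{0, 0}, {0, 2}, {10, 10}, {10, 12}};
        double[][] next = iterate(pts, new double[][]{{0, 0}, {10, 10}});
        System.out.println(java.util.Arrays.deepToString(next));
    }
}
```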

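2. Viewing the results

My machine is underpowered, so I ran the job on the 10k-row sample with at most 10 iterations; it took a little over half an hour.

[HADOOP@hdp-node-01 finalresult]$ hdfs dfs -get /sogoumr35/result/part-m-00000 ./

[HADOOP@hdp-node-01 finalresult]$ less part-m-00000

Select specific columns (hour, keyword, rank, click order, cluster index) and sort by cluster index:

[HADOOP@hdp-node-01 finalresult]$ cut -f4,7,8,9,11 part-m-00000 | sort -n -k5 > sortedresult

[HADOOP@hdp-node-01 finalresult]$ less sortedresult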
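A quick sanity check on a clustering result is to count how many records fall into each cluster. The sketch below assumes only what the result format states: each line ends with a tab followed by the center index. The class name `ClusterSizes` and the sample lines are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration for inspecting the result file; not part of the original project.
class ClusterSizes {
    /** Counts records per cluster, reading the cluster index from the last tab-separated field. */
    static Map<Integer, Integer> count(List<String> lines) {
        Map<Integer, Integer> sizes = new TreeMap<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) continue;                        // no cluster column
            try {
                int id = Integer.parseInt(line.substring(tab + 1).trim());
                sizes.merge(id, 1, Integer::sum);
            } catch (NumberFormatException e) {
                // last field is not a number: skip the line
            }
        }
        return sizes;
    }

    public static void main(String[] args) {
        // Made-up lines standing in for rows of sortedresult.
        List<String> lines = Arrays.asList("00\tq1\t1\t1\t0", "01\tq2\t2\t1\t3", "02\tq3\t1\t2\t0");
        System.out.println(count(lines));
    }
}
```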

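3. Code

There are seven classes in total: KmeansRunner, DoubleArrayWritable, Prep, KmeansMapper, KmeansReducer, LastKmeansMapper and KmeansUtil. KmeansRunner runs the jobs in order. DoubleArrayWritable is a custom ArrayWritable used to serialize feature vectors. Prep is the mapper for step one, KmeansMapper and KmeansReducer handle step two, LastKmeansMapper handles step three, and KmeansUtil holds shared helper methods.

The input data has already had the year, month, day and hour fields added by the script from section I.3.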

KmeansRunner.java

package Kmeans;

import java.io.BufferedReader;

import java.io.BufferedWriter;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.OutputStreamWriter;

import java.net.URI;

import java.util.HashMap;

import java.util.Map;

import java.util.TreeMap;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.ArrayWritable;

import org.apache.hadoop.io.DoubleWritable;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.SequenceFile;

import org.apache.hadoop.io.SequenceFile.Reader;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

public class KmeansRunner{

//maximum number of iterations

public static final int MAX_ITER = 10;

//run number used during testing (part of every output path)

public static final int RUN_NUM = 35;

//number of clusters (centers)

public static final int CLUSTER_NUM = 10;

public static void main(String[] args) throws Exception{

//Step 1: compute the keyword feature vector for every record

Configuration conf = new Configuration();

Job prepjob = Job.getInstance(conf);

prepjob.setJarByClass(KmeansRunner.class);

prepjob.setMapperClass(Prep.class);

prepjob.setNumReduceTasks(0);

prepjob.setOutputKeyClass(Text.class);

prepjob.setOutputValueClass(DoubleArrayWritable.class);

prepjob.setOutputFormatClass(SequenceFileOutputFormat.class);

FileInputFormat.setInputPaths(prepjob, new Path(args[0]));

FileOutputFormat.setOutputPath(prepjob,new Path("/sogoumr"+RUN_NUM+"/preped/"));

prepjob.waitForCompletion(true);

//create the initial centers from the feature vectors of the first 10 records

initCenters();

//Step 2: iterate

int iteration = 0 ;

while(iteration < MAX_ITER) {

conf = new Configuration();

//set the path of the current center vectors

if(iteration != 0) {

conf.set("CPATH", "/sogoumr"+RUN_NUM+"/clustering/depth_"

+ (iteration - 1) + "/part-r-00000");

}

else {

conf.set("CPATH", "/sogoumr"+RUN_NUM+"/clusterMean0/000");

}

conf.set("num.iteration", iteration + "");

Job iterJob = Job.getInstance(conf);

iterJob.setJobName("KMeans Clustering " + iteration);

iterJob.setMapperClass(KmeansMapper.class);

iterJob.setReducerClass(KmeansReducer.class);

iterJob.setJarByClass(KmeansRunner.class);

iterJob.setInputFormatClass(SequenceFileInputFormat.class);

iterJob.setOutputFormatClass(SequenceFileOutputFormat.class);

iterJob.setMapOutputKeyClass(IntWritable.class);

iterJob.setMapOutputValueClass(DoubleArrayWritable.class);

iterJob.setOutputKeyClass(IntWritable.class);

iterJob.setOutputValueClass(DoubleArrayWritable.class);

FileInputFormat.setInputPaths(iterJob, new Path("/sogoumr"+RUN_NUM+"/preped/"));

FileOutputFormat.setOutputPath(iterJob, new Path("/sogoumr"+RUN_NUM+"/clustering/depth_" + iteration + "/"));

iterJob.waitForCompletion(true);

//check whether the centers changed; stop early if they did not

Path oldCenPath = new Path(conf.get("CPATH"));

Path newCenPath = new Path("/sogoumr"+RUN_NUM+"/clustering/depth_" + iteration + "/part-r-00000");

if(isSequenceFileEqual(oldCenPath, newCenPath))

break;

iteration++;

}

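//if the loop ran to MAX_ITER without an early break, step back to the last iteration that actually ran;

//after an early break, iteration already points at the last completed job

if(iteration == MAX_ITER) iteration--;

//Step 3 (final step): assign every record using the final centers; output format: (original record text\tcenter index)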

Configuration conf2 = new Configuration();

conf2.set("num.iteration",iteration+"");

Job lastJob = Job.getInstance(conf2);

lastJob.setJarByClass(KmeansRunner.class);

lastJob.setMapperClass(LastKmeansMapper.class);

lastJob.setNumReduceTasks(0);

lastJob.setInputFormatClass(SequenceFileInputFormat.class);

lastJob.setOutputKeyClass(Text.class);

lastJob.setOutputValueClass(IntWritable.class);

FileInputFormat.setInputPaths(lastJob, new Path("/sogoumr"+RUN_NUM+"/preped/"));

FileOutputFormat.setOutputPath(lastJob,new Path("/sogoumr"+RUN_NUM+"/result/"));

lastJob.waitForCompletion(true);

System.exit(0);

}

@SuppressWarnings("deprecation")

private static void initCenters() throws IOException {

//set up the reader for the prepared data

String uri="/sogoumr"+RUN_NUM+"/preped/part-m-00000";

Configuration conf=new Configuration();

FileSystem fs =FileSystem.get(URI.create(uri),conf);

Path path=new Path(uri);

SequenceFile.Reader reader=null;

reader=new SequenceFile.Reader(fs,path,conf);

Text key = new Text();

DoubleArrayWritable value = new DoubleArrayWritable();

//set up the SequenceFile writer for the initial centers

String uri2="/sogoumr"+RUN_NUM+"/clusterMean0/000";

Configuration conf2=new Configuration();

FileSystem fs2=FileSystem.get(URI.create(uri2),conf2);

Path path2=new Path(uri2);

IntWritable key2=new IntWritable();

SequenceFile.Writer writer=null;

writer=SequenceFile.createWriter(fs2,conf2,path2,key2.getClass(),value.getClass()

,SequenceFile.CompressionType.NONE);

//also write the centers as plain text to 001

String uri3="/sogoumr"+RUN_NUM+"/clusterMean0/001";

Configuration conf3=new Configuration();

FileSystem fs3=FileSystem.get(URI.create(uri3),conf3);

FSDataOutputStream fout = fs3.create(new Path(uri3));

BufferedWriter out = new BufferedWriter(new OutputStreamWriter(fout, "UTF-8"));

for(int i=0;i<CLUSTER_NUM;i++) {

//read the next record

reader.next(key, value);

key2.set(i);

//write it to the SequenceFile

writer.append(key2,value);

//write it to the text file as well, for easy inspection

Double[] doubleArr = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(doubleArr);

KmeansUtil.doubleArrayWritableToDoubleArr(value,doubleArr);

out.write(i+"\t{");

for(int j=0;j<doubleArr.length;j++) {

out.write(doubleArr[j]+",");

}

out.write("}");

out.newLine();

out.flush();

}

IOUtils.closeStream(reader);

writer.close();

out.close();

}

private static boolean isSequenceFileEqual(Path path1 , Path path2) throws IOException {

Map<Integer , DoubleArrayWritable> cen1 = new HashMap<Integer , DoubleArrayWritable>();

Map<Integer , DoubleArrayWritable> cen2 = new HashMap<Integer , DoubleArrayWritable>();

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(conf);

@SuppressWarnings("deprecation")

SequenceFile.Reader reader1 = new Reader(fs, path1 , conf);

@SuppressWarnings("deprecation")

//the second reader must open path2; opening path1 twice would compare a file with itself

SequenceFile.Reader reader2 = new Reader(fs, path2 , conf);

IntWritable key = new IntWritable();

//allocate a fresh value per record: reusing one object would leave every map entry pointing at the last record read

DoubleArrayWritable value = new DoubleArrayWritable();

while(reader1.next(key, value)) {

cen1.put(key.get(), value);

value = new DoubleArrayWritable();

}

reader1.close();

while(reader2.next(key, value)) {

cen2.put(key.get(), value);

value = new DoubleArrayWritable();

}

reader2.close();

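for(Integer i:cen1.keySet()) {

//a center missing from the new file (a cluster that received no points) counts as a change

if(!cen2.containsKey(i) || !isDoubleArrayEqual(cen1.get(i),cen2.get(i))){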

return false;

}

}

return true;

}

private static boolean isDoubleArrayEqual(DoubleArrayWritable doubleArrayWritable1,

DoubleArrayWritable doubleArrayWritable2) {

Double[] doubleArr1 = new Double[Prep.DIM+3];

Double[] doubleArr2 = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(doubleArr1);

KmeansUtil.initDoubleArr(doubleArr2);

KmeansUtil.doubleArrayWritableToDoubleArr(doubleArrayWritable1, doubleArr1);

KmeansUtil.doubleArrayWritableToDoubleArr(doubleArrayWritable2, doubleArr2);

for(int i=0;i<doubleArr1.length;i++) {

//the arrays differ as soon as one component is NOT equal

if(!doubleArr1[i].equals(doubleArr2[i])) {

return false;

}

}

return true;

}

}
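The runner stops early only when the old and new centers are exactly equal. With floating-point means, a common alternative is to stop when no component moves by more than a small tolerance. The sketch below shows that idea on plain arrays; the class name `Convergence` and the tolerance value used in `main` are assumptions of this example, not part of the original code.

```java
// Hypothetical illustration of a tolerance-based convergence test; not part of the original project.
class Convergence {
    /** True when both center sets have the same shape and no component differs by more than eps. */
    static boolean converged(double[][] oldCenters, double[][] newCenters, double eps) {
        if (oldCenters.length != newCenters.length) return false;
        for (int c = 0; c < oldCenters.length; c++) {
            if (oldCenters[c].length != newCenters[c].length) return false;
            for (int j = 0; j < oldCenters[c].length; j++) {
                if (Math.abs(oldCenters[c][j] - newCenters[c][j]) > eps) return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        double[][] a = {{1.0, 2.0}};
        double[][] b = {{1.0000001, 2.0}};
        System.out.println(converged(a, b, 1e-6));
    }
}
```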

DoubleArrayWritable.java

package Kmeans;

import org.apache.hadoop.io.ArrayWritable;

import org.apache.hadoop.io.DoubleWritable;

public class DoubleArrayWritable extends ArrayWritable{

public DoubleArrayWritable() {

super(DoubleWritable.class);

}

}

Prep.java

package Kmeans;

import java.io.IOException;

import org.apache.hadoop.io.ArrayWritable;

import org.apache.hadoop.io.DoubleWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Mapper.Context;

public class Prep extends Mapper<LongWritable,Text,Text,DoubleArrayWritable> {

public static final int DIM = 100;

@Override

protected void map(LongWritable key,Text value,Context context) throws IOException,InterruptedException{

String line = value.toString();

String[] fields = line.split("\t");

if(fields.length<10)return;

String keyword = fields[6];

DoubleArrayWritable vec2 = new DoubleArrayWritable();

DoubleWritable[] vec1 = new DoubleWritable[DIM+3];

double vec0[] = new double[DIM+3];

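//feature hashing: reduce the keyword to DIM (100) dimensions

for(char c:keyword.toCharArray()) {

int cInt = c;

int h1 = cInt % DIM;

//sign hash, -1 or +1 (cInt % 2 - 1 would give -1 or 0 and silently drop odd code points)

int h2 = (cInt % 2 == 0) ? -1 : 1 ;

vec0[h1] += h2 * 5 ;

}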

//hour field

vec0[DIM] = Double.parseDouble(fields[3]);

//page rank field (rank of the URL in the results)

vec0[DIM+1] = Double.parseDouble(fields[7]);

//click order field

vec0[DIM+2] = Double.parseDouble(fields[8]);

for(int i=0;i<DIM+3;i++) {

vec1[i] = new DoubleWritable(vec0[i]);

}

vec2.set(vec1);

context.write(value , vec2);

}

}

KmeansMapper.java

package Kmeans;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.util.HashMap;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.ArrayWritable;

import org.apache.hadoop.io.DoubleWritable;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.SequenceFile;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Mapper.Context;

public class KmeansMapper extends Mapper<Text,DoubleArrayWritable,IntWritable,DoubleArrayWritable>{

private static Map< Integer , Double[] > centers = new HashMap<Integer,Double[]>();

/**

* Load the center vectors from HDFS into memory

*/

@Override

protected void setup(Context context) throws IOException,InterruptedException {

Configuration conf = context.getConfiguration();

Path cPath = new Path(conf.get("CPATH"));

FileSystem fs = FileSystem.get(conf);

@SuppressWarnings("deprecation")

SequenceFile.Reader reader = new SequenceFile.Reader(fs, cPath, conf);

IntWritable key = new IntWritable();

DoubleArrayWritable value = new DoubleArrayWritable();

while(reader.next(key, value)) {

Double[] doubleArr = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(doubleArr);

KmeansUtil.doubleArrayWritableToDoubleArr(value,doubleArr);

centers.put(key.get() , doubleArr);

}

reader.close();

}

@Override

protected void map(Text key,DoubleArrayWritable value,Context context) throws IOException,InterruptedException{

Double[] xVec = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(xVec);

KmeansUtil.doubleArrayWritableToDoubleArr(value,xVec);

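//find the nearest center

double minDis =Double.MAX_VALUE;

int minIndex = -1;

for(int j=0;j<KmeansRunner.CLUSTER_NUM;j++) {

Double[] cVec = centers.get(j);

//a cluster that received no points in the previous iteration has no center entry

if(cVec == null) continue;

double dis = KmeansUtil.calDistance(xVec,cVec);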

if(dis < minDis) {

minDis=dis;

minIndex=j;

}

}

context.write(new IntWritable(minIndex), value);

}

}

KmeansReducer.java

package Kmeans;

import java.io.BufferedWriter;

import java.io.IOException;

import java.io.OutputStreamWriter;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.ArrayWritable;

import org.apache.hadoop.io.DoubleWritable;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.Reducer.Context;

public class KmeansReducer extends Reducer<IntWritable,DoubleArrayWritable,IntWritable,DoubleArrayWritable>{

private static int iteration;

/**

* Load the iteration number

*/

@Override

protected void setup(Context context) throws IOException, InterruptedException {

Configuration conf = context.getConfiguration();

String iter = conf.get("num.iteration");

iteration = Integer.parseInt(iter);

}

@Override

protected void reduce(IntWritable key,Iterable<DoubleArrayWritable> values,Context context) throws IOException,InterruptedException{

//compute the new mean (center)

Double[] newCenter = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(newCenter);

int cnt=0;

for(DoubleArrayWritable value:values) {

Double[] vec = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(vec);

KmeansUtil.doubleArrayWritableToDoubleArr(value,vec);

addvec(newCenter,vec);

cnt++;

}

calMean(newCenter,cnt);

context.write(key, KmeansUtil.doubleArrayToWritable(newCenter));

//also write the center to a text file for easy inspection

String uri="/sogoumr"+KmeansRunner.RUN_NUM+"/clustering/depth_" + iteration + "/centertext" + key.get();

Configuration conf=new Configuration();

FileSystem fs=FileSystem.get(URI.create(uri),conf);

FSDataOutputStream fout = fs.create(new Path(uri));

BufferedWriter out = new BufferedWriter(new OutputStreamWriter(fout, "UTF-8"));

out.write(key.get()+"\t{");

for(int j=0;j<newCenter.length;j++) {

out.write(newCenter[j]+",");

}

out.write("}");

out.newLine();

out.flush();

out.close();

}

private void calMean(Double[] vec, int n) {

for(int i=0;i<Prep.DIM+3;i++) {

vec[i]/=n;

}

}

private void addvec(Double[] vec1, Double[] vec2) {

for(int i=0;i<Prep.DIM+3;i++) {

vec1[i]+=vec2[i];

}

}

}
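The reducer above receives every feature vector of a cluster and averages them. Because a mean can be rebuilt from partial sums and counts, the same result can be obtained by merging partial aggregates, which is the idea behind adding a combiner to a job like this one. The original job does not configure a combiner; the sketch below only illustrates the arithmetic, and the class name `PartialSum` is invented for the example.

```java
// Hypothetical illustration of mergeable partial sums; not part of the original project.
class PartialSum {
    final double[] sum; // component-wise sum of the vectors seen so far
    long count;         // number of vectors seen so far

    PartialSum(int dim) {
        this.sum = new double[dim];
    }

    /** Adds one vector to this aggregate. */
    void add(double[] v) {
        for (int i = 0; i < sum.length; i++) sum[i] += v[i];
        count++;
    }

    /** Folds another aggregate (for example from another map task) into this one. */
    void merge(PartialSum other) {
        for (int i = 0; i < sum.length; i++) sum[i] += other.sum[i];
        count += other.count;
    }

    /** Mean of all vectors seen; only meaningful when count > 0. */
    double[] mean() {
        double[] m = new double[sum.length];
        for (int i = 0; i < sum.length; i++) m[i] = sum[i] / count;
        return m;
    }

    public static void main(String[] args) {
        PartialSum a = new PartialSum(2);
        a.add(new double[]{1, 2});
        a.add(new double[]{3, 4});
        PartialSum b = new PartialSum(2);
        b.add(new double[]{5, 6});
        a.merge(b);
        System.out.println(java.util.Arrays.toString(a.mean()));
    }
}
```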

LastKmeansMapper.java

package Kmeans;

import java.io.IOException;

import java.util.HashMap;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.SequenceFile;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Mapper.Context;

public class LastKmeansMapper extends Mapper<Text,DoubleArrayWritable,Text,IntWritable>{

private static int iteration;

private static Map< Integer , Double[] > centers = new HashMap<Integer,Double[]>();

/**

* Load the iteration number and the center vectors from HDFS into memory

*/

@Override

protected void setup(Context context) throws IOException,InterruptedException {

Configuration conf = context.getConfiguration();

String iter = conf.get("num.iteration");

iteration = Integer.parseInt(iter);

Path centerPath = new Path("/sogoumr" + KmeansRunner.RUN_NUM + "/clustering/depth_" + iteration + "/part-r-00000");

FileSystem fs = FileSystem.get(conf);

@SuppressWarnings("deprecation")

SequenceFile.Reader reader = new SequenceFile.Reader(fs, centerPath, conf);

IntWritable key = new IntWritable();

DoubleArrayWritable value = new DoubleArrayWritable();

while(reader.next(key, value)) {

Double[] doubleArr = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(doubleArr);

KmeansUtil.doubleArrayWritableToDoubleArr(value,doubleArr);

centers.put(key.get() , doubleArr);

}

reader.close();

}

@Override

protected void map(Text key,DoubleArrayWritable value,Context context) throws IOException,InterruptedException{

Double[] xVec = new Double[Prep.DIM+3];

KmeansUtil.initDoubleArr(xVec);

KmeansUtil.doubleArrayWritableToDoubleArr(value,xVec);

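//find the nearest center

double minDis =Double.MAX_VALUE;

int minIndex = -1;

for(int j=0;j<KmeansRunner.CLUSTER_NUM;j++) {

Double[] cVec = centers.get(j);

//a cluster that received no points in the last iteration has no center entry

if(cVec == null) continue;

double dis = KmeansUtil.calDistance(xVec,cVec);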

if(dis < minDis) {

minDis=dis;

minIndex=j;

}

}

context.write(key , new IntWritable(minIndex));

}

}

KmeansUtil.java

package Kmeans;

import org.apache.hadoop.io.DoubleWritable;

import org.apache.hadoop.io.Writable;

public class KmeansUtil {

public static void doubleArrayWritableToDoubleArr(DoubleArrayWritable doubleArrayWritable, Double[] doubleArr) {

Writable[] writables = doubleArrayWritable.get();

int i=0;

for(Writable writable:writables) {

DoubleWritable doubleWritable = (DoubleWritable) writable ;

doubleArr[i]=doubleWritable.get();

i++;

}

}

public static void initDoubleArr(Double[] doubleArr) {

for(int i=0;i<doubleArr.length;i++) {

doubleArr[i]=0.0;

}

}

public static double calDistance(Double[] vec1, Double[] vec2) {

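double dis =0;

for(int i=0;i<Prep.DIM+3;i++) {

//accumulate the squared differences

dis+=Math.pow(vec1[i]-vec2[i], 2.0);

}

//Euclidean distance; the square root could be skipped, since only the ordering of distances matters when picking the nearest center

dis=Math.sqrt(dis);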

return dis;

}

public static DoubleArrayWritable doubleArrayToWritable(Double[] doubleArray) {

DoubleWritable[] doubleWritableArr = new DoubleWritable[doubleArray.length];

int i=0;

for(double x:doubleArray) {

DoubleWritable doubleWritable = new DoubleWritable(x);

doubleWritableArr[i]=doubleWritable;

i++;

}

DoubleArrayWritable doubleArrayWritable = new DoubleArrayWritable();

doubleArrayWritable.set(doubleWritableArr);

return doubleArrayWritable;

}

}
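As a side note on `calDistance`: the square root is monotonic, so the squared distance selects the same nearest center as the Euclidean distance. The sketch below shows both on primitive `double[]` arrays, which also avoids the boxing cost of `Double[]`. The class name `Distance` is invented for the example.

```java
// Hypothetical illustration of squared vs. Euclidean distance; not part of the original project.
class Distance {
    /** Sum of squared component differences; enough for nearest-center comparisons. */
    static double squared(double[] a, double[] b) {
        double d = 0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            d += diff * diff;
        }
        return d;
    }

    /** Euclidean distance, for when the actual length is needed. */
    static double euclidean(double[] a, double[] b) {
        return Math.sqrt(squared(a, b));
    }

    public static void main(String[] args) {
        double[] origin = {0, 0};
        double[] p = {3, 4};
        System.out.println(squared(origin, p) + " " + euclidean(origin, p));
    }
}
```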

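This post describes an implementation of k-means clustering on Hadoop: the search-log data is preprocessed, keywords are converted to numeric vectors by feature hashing in MapReduce, and extra dimensions such as the hour and page rank fields are added for the cluster analysis.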
