Reviewing the old to learn the new: a second look at these two Kafka parameters

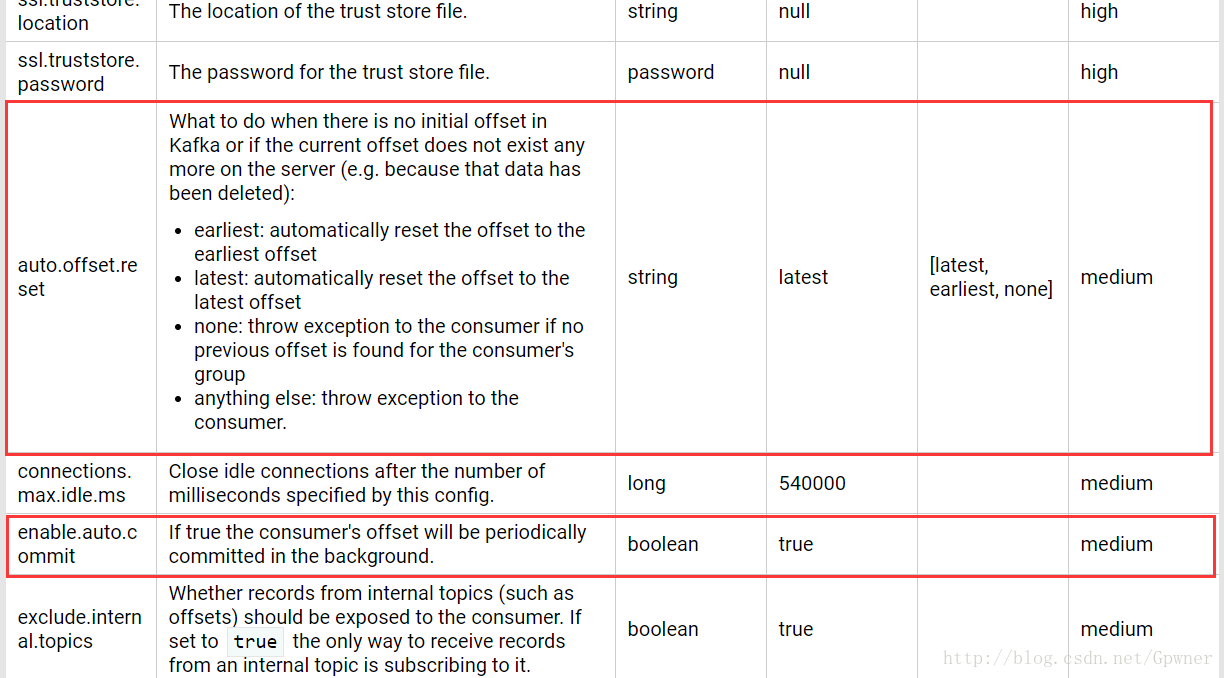

Both parameters are described on the official site, but working through them yourself is the best way to really understand them.

https://kafka.apache.org/0102/documentation.html

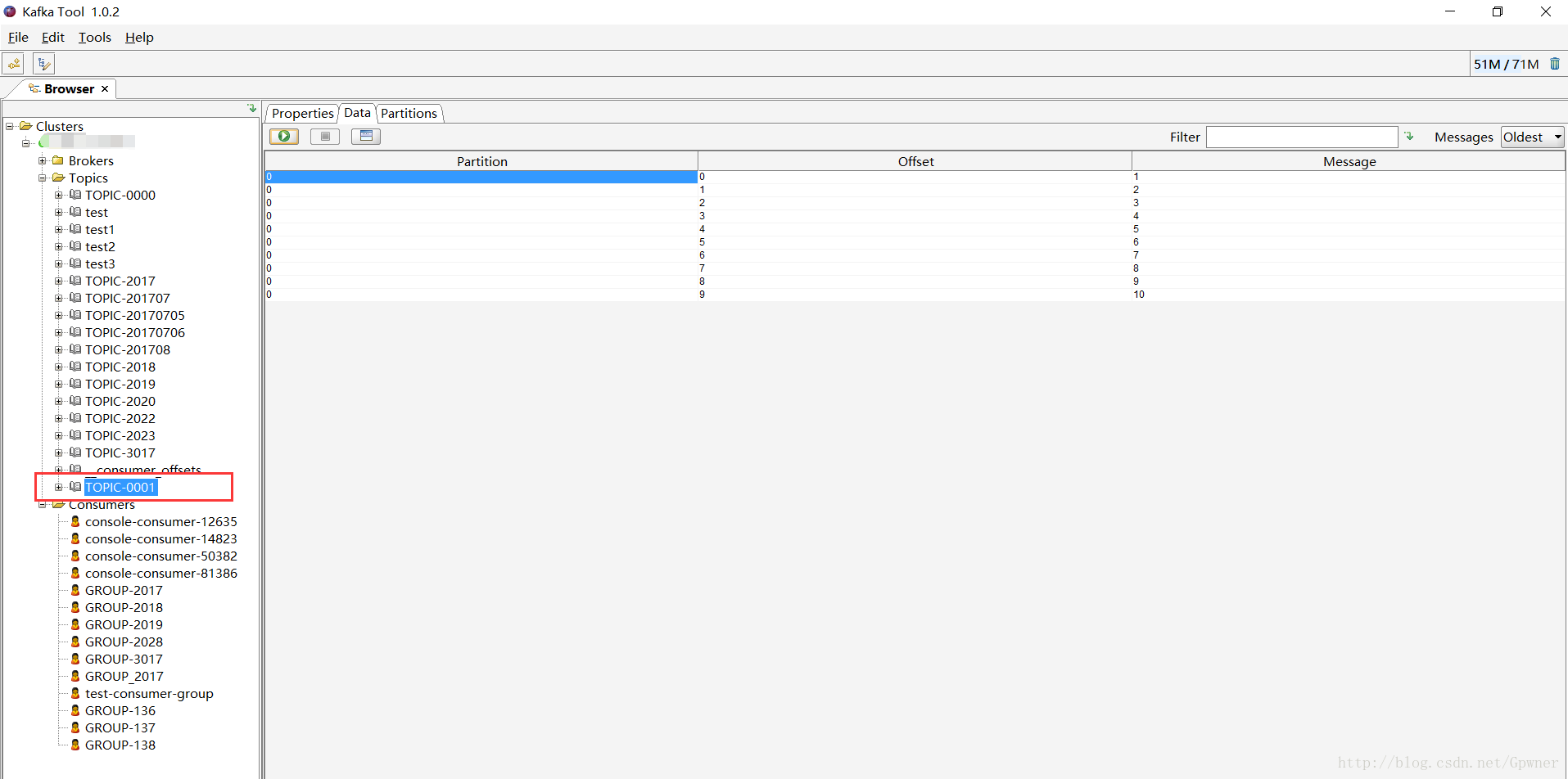

1. Kafka Tool

First, a useful tool: http://www.kafkatool.com/

It gives a very intuitive view of Kafka from every angle.

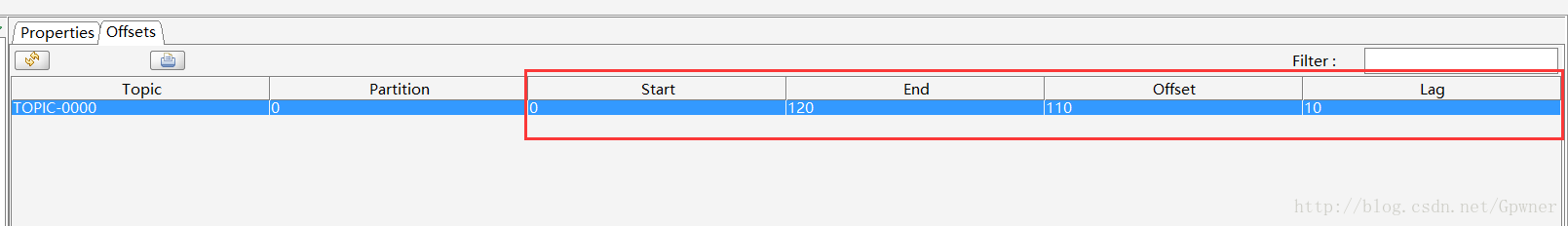

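It also shows each consumer's consumed offset:

start: the topic's starting offset

end: the topic's maximum offset

Offset: the offset the current consumer has consumed up to

Lag: the messages the consumer has not consumed yet

2. Producer and consumer code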

```java
package pwner;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

/**
 * @author
 * @version 2017/7/5.22:12
 */
public class Producer {

    // Both serializers are StringSerializer, so key and value are typed as String.
    private final KafkaProducer<String, String> producer;
    private final String topic;

    public Producer(String topic) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "172.17.11.85:9092,172.17.11.86:9092,172.17.11.87:9092");
        props.put("client.id", "DemoProducer");
        props.put("batch.size", 16384);       // 16 KB (the value is in bytes, not 16 MB)
        props.put("linger.ms", 10);           // wait up to 10 ms for a batch to fill
        props.put("buffer.memory", 33554432); // 32 MB
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producer = new KafkaProducer<>(props);
        this.topic = topic;
    }

    // Sends ten records with a null key and the values "1".."10".
    public void producerMsg() {
        int events = 10;
        for (long nEvents = 0; nEvents < events; nEvents++) {
            try {
                producer.send(new ProducerRecord<>(topic, String.valueOf(nEvents + 1)));
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

    // close() flushes any records still sitting in the buffer before returning;
    // a fixed Thread.sleep(20) is not guaranteed to be long enough for that.
    public void close() {
        producer.close();
    }

    public static void main(String[] args) {
        Producer producer = new Producer("TOPIC-0002");
        producer.producerMsg();
        producer.close();
    }
}
```
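The original comment labelled `batch.size` as "16M", but both `batch.size` and `buffer.memory` are given in bytes. As a quick sanity check, the plain-Java arithmetic below (a hypothetical helper class named `ProducerSizes`, not part of Kafka) converts the two values from the config above into readable units.

```java
// Hypothetical helper (not part of Kafka): converts the byte values used in
// the producer config above into human-readable units.
final class ProducerSizes {
    static final int BATCH_SIZE = 16384;          // batch.size, in bytes
    static final long BUFFER_MEMORY = 33554432L;  // buffer.memory, in bytes

    static double toKiB(long bytes) { return bytes / 1024.0; }
    static double toMiB(long bytes) { return bytes / (1024.0 * 1024.0); }

    public static void main(String[] args) {
        System.out.println("batch.size    = " + toKiB(BATCH_SIZE) + " KiB");
        System.out.println("buffer.memory = " + toMiB(BUFFER_MEMORY) + " MiB");
        // Rough upper bound on how many full batches the buffer can hold
        // (ignores per-batch bookkeeping overhead).
        System.out.println("full batches  = " + BUFFER_MEMORY / BATCH_SIZE);
    }
}
```

So each batch is 16 KiB, and the 32 MiB buffer can hold at most about 2048 full batches awaiting transmission.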

```java
package pwner;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.util.Collections;
import java.util.Properties;

/**
 * @author
 * @version 2017/7/5.22:18
 */
public class Consumer {

    // Keys and values both use StringDeserializer, so both type parameters are
    // String (the original declared the key type as Integer).
    private final KafkaConsumer<String, String> consumer;
    private final String topic;

    public Consumer(String topic) {
        Properties props = new Properties();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "172.17.11.85:9092,172.17.11.86:9092,172.17.11.87:9092");
        // The original also set "zookeeper.connect" (with a typo in the first port);
        // the Java KafkaConsumer talks to the brokers directly and ignores it.
        props.put(ConsumerConfig.GROUP_ID_CONFIG, "GROUP-2333");
        props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        props.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "1000"); // only used when auto-commit is on
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");   // latest, earliest
        props.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "30000");
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        consumer = new KafkaConsumer<>(props);
        this.topic = topic;
    }

    public void consumerMsg() {
        try {
            consumer.subscribe(Collections.singletonList(this.topic));
            while (true) {
                // poll(long) is the 0.10.x signature; on 2.0+ clients use poll(Duration.ofMillis(1000)).
                ConsumerRecords<String, String> records = consumer.poll(1000);
                for (ConsumerRecord<String, String> record : records) {
                    System.out.println("Received message: (" + record.key() + ", " + record.value()
                            + ") at partition " + record.partition() + " offset " + record.offset());
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            consumer.close();
        }
    }

    public static void main(String[] args) {
        Consumer consumer = new Consumer("TOPIC-0002");
        consumer.consumerMsg();
    }
}
```

3. enable.auto.commit

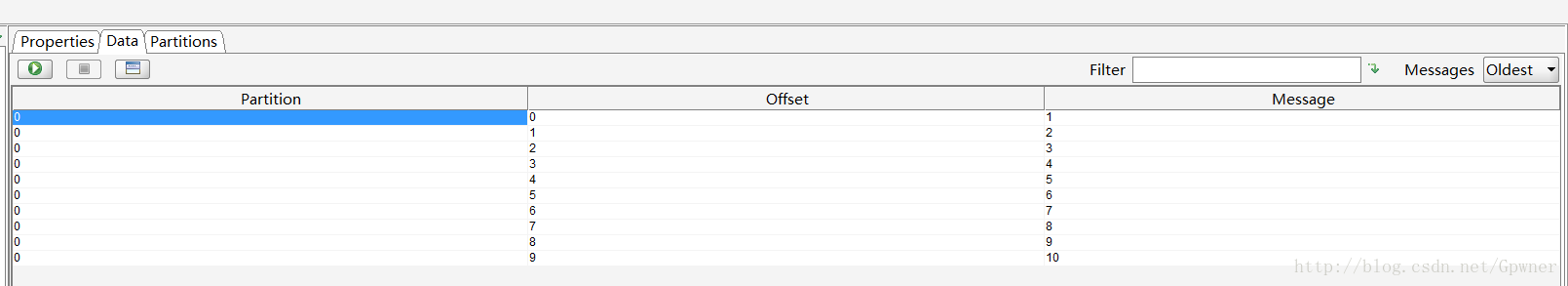

The producer sends ten messages to the topic (no consumer has ever consumed from it before).

Current configuration (ENABLE_AUTO_COMMIT_CONFIG=false):

```java
props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
```

Start the consumer:

```
Received message: (null, 1) at partition 0 offset 0
Received message: (null, 2) at partition 0 offset 1
Received message: (null, 3) at partition 0 offset 2
Received message: (null, 4) at partition 0 offset 3
Received message: (null, 5) at partition 0 offset 4
Received message: (null, 6) at partition 0 offset 5
Received message: (null, 7) at partition 0 offset 6
Received message: (null, 8) at partition 0 offset 7
Received message: (null, 9) at partition 0 offset 8
Received message: (null, 10) at partition 0 offset 9
```

The consumer receives the messages.

Next, stop the consumer, produce another ten messages with the configuration unchanged, and start the consumer again:

```
Received message: (null, 1) at partition 0 offset 0
Received message: (null, 2) at partition 0 offset 1
Received message: (null, 3) at partition 0 offset 2
Received message: (null, 4) at partition 0 offset 3
Received message: (null, 5) at partition 0 offset 4
Received message: (null, 6) at partition 0 offset 5
Received message: (null, 7) at partition 0 offset 6
Received message: (null, 8) at partition 0 offset 7
Received message: (null, 9) at partition 0 offset 8
Received message: (null, 10) at partition 0 offset 9
Received message: (null, 1) at partition 0 offset 10
Received message: (null, 2) at partition 0 offset 11
Received message: (null, 3) at partition 0 offset 12
Received message: (null, 4) at partition 0 offset 13
Received message: (null, 5) at partition 0 offset 14
Received message: (null, 6) at partition 0 offset 15
Received message: (null, 7) at partition 0 offset 16
Received message: (null, 8) at partition 0 offset 17
Received message: (null, 9) at partition 0 offset 18
Received message: (null, 10) at partition 0 offset 19
```

As you can see, the same consumer once again starts reading from the head of the log (offset=0).

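No matter how many times you restart the consumer, it always starts from the offset that was committed before ENABLE_AUTO_COMMIT_CONFIG was set to false. (In this experiment nothing had ever been committed, so auto.offset.reset=earliest sends it back to offset 0.)

In other words, with this setting the consumer does not automatically commit its offset after consuming messages; you have to commit it yourself (for example with commitSync()). If you never do, the group's committed offset never changes.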
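To make the restart behaviour concrete, here is a toy model in plain Java (the class `CommitDemo` and its methods are hypothetical, not the Kafka client API). It keeps one committed offset per group.id for a single partition whose log currently ends at offset 20, and it collapses "commit periodically while running" into a single commit at the end of each run, which is a simplification of how auto-commit really behaves.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model (not the Kafka client API): a single-partition log plus a
// per-group committed-offset store.
final class CommitDemo {
    private final long logEndOffset;                        // next offset to be written
    private final Map<String, Long> committed = new HashMap<>(); // group.id -> committed offset

    CommitDemo(long logEndOffset) { this.logEndOffset = logEndOffset; }

    // One "run" of a consumer: start from the committed offset (or 0, which is
    // what auto.offset.reset=earliest gives when nothing was committed), read
    // to the end of the log, and commit only if commitAfterRun is true.
    // Returns the first offset this run reads.
    long run(String groupId, boolean commitAfterRun) {
        long start = committed.getOrDefault(groupId, 0L);
        if (commitAfterRun) {
            committed.put(groupId, logEndOffset); // commit the next offset to read
        }
        return start;
    }

    public static void main(String[] args) {
        CommitDemo log = new CommitDemo(20);
        System.out.println(log.run("GROUP-2333", false)); // never commits
        System.out.println(log.run("GROUP-2333", false)); // restart: same start again
        System.out.println(log.run("GROUP-2333", true));  // reads from 0, commits 20
        System.out.println(log.run("GROUP-2333", true));  // restart: resumes at 20
    }
}
```

The first three runs all start at 0; only after a commit has actually happened does the fourth run resume at 20, which matches what the experiment shows.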

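Message loss on the consumer side is straightforward: if the offset is committed before the message has been fully processed, data can be lost. Because the Kafka consumer commits offsets automatically by default (enable.auto.commit=true), you must make sure messages have been processed successfully before the background commit happens. Heavyweight processing logic is therefore not recommended; if processing takes a long time, it is better to move that logic to a separate thread.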
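The ordering problem can be illustrated with a small simulation in plain Java (a hypothetical `CommitOrderDemo`, not Kafka code). A consumer handles offsets 0 to 4 of one partition, crashes while handling offset 2, restarts, and resumes from its committed offset. The only difference between the two runs is whether the offset is committed before or after the record is processed.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model (not the Kafka API) of commit-before-processing versus
// process-before-commit when the consumer crashes mid-stream.
final class CommitOrderDemo {
    static List<Long> processedOffsets(boolean commitBeforeProcessing, long crashAt, long end) {
        List<Long> processed = new ArrayList<>();
        long committed = 0;
        // First run: the consumer crashes while handling crashAt.
        for (long offset = committed; offset < end; offset++) {
            if (commitBeforeProcessing) {
                committed = offset + 1;   // marked done before the work happens
            }
            if (offset == crashAt) {
                break;                    // crash: this record is never processed
            }
            processed.add(offset);
            if (!commitBeforeProcessing) {
                committed = offset + 1;   // marked done only after the work
            }
        }
        // Second run after the restart: resume from the committed offset.
        for (long offset = committed; offset < end; offset++) {
            processed.add(offset);
        }
        return processed;
    }

    public static void main(String[] args) {
        System.out.println("commit before processing: " + processedOffsets(true, 2, 5));
        System.out.println("commit after processing:  " + processedOffsets(false, 2, 5));
    }
}
```

Committing first skips offset 2 entirely (the record is lost), while committing after processing reprocesses it. Here each record is committed individually, so nothing is duplicated; when commits are batched, as with auto-commit, processing before committing can instead redeliver records after a crash.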

Now set ENABLE_AUTO_COMMIT_CONFIG=true.

Start the consumer again; the console prints:

```
Received message: (null, 1) at partition 0 offset 0
Received message: (null, 2) at partition 0 offset 1
Received message: (null, 3) at partition 0 offset 2
Received message: (null, 4) at partition 0 offset 3
Received message: (null, 5) at partition 0 offset 4
Received message: (null, 6) at partition 0 offset 5
Received message: (null, 7) at partition 0 offset 6
Received message: (null, 8) at partition 0 offset 7
Received message: (null, 9) at partition 0 offset 8
Received message: (null, 10) at partition 0 offset 9
Received message: (null, 1) at partition 0 offset 10
Received message: (null, 2) at partition 0 offset 11
Received message: (null, 3) at partition 0 offset 12
Received message: (null, 4) at partition 0 offset 13
Received message: (null, 5) at partition 0 offset 14
Received message: (null, 6) at partition 0 offset 15
Received message: (null, 7) at partition 0 offset 16
Received message: (null, 8) at partition 0 offset 17
Received message: (null, 9) at partition 0 offset 18
Received message: (null, 10) at partition 0 offset 19
```

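This happens because, before the flag was switched to true, the group had no committed offset and therefore started at offset=0, while the topic's highest offset was 19, so all of those messages were consumed once more. If you now restart the consumer process, there is nothing new to consume: the highest offset is 19 and the last offset the consumer consumed is also 19 (the offset actually committed is 20, i.e. the next one to read).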
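The relationship between "last consumed offset 19", the committed offset, and the lag column can be checked with a few lines of arithmetic. The sketch below is plain Java with a hypothetical class `LagDemo`. It assumes that Kafka Tool's Lag is computed as log-end offset minus committed offset, which is the usual definition, and it uses the documented Kafka convention that the committed offset is the last processed offset plus one.

```java
// Worked arithmetic (plain Java, no Kafka dependency) for the situation above:
// offsets 0..19 exist and the consumer has processed up to offset 19.
final class LagDemo {
    // Kafka commits the *next* offset to read, i.e. last processed + 1.
    static long committedAfter(long lastProcessedOffset) { return lastProcessedOffset + 1; }

    // The log-end offset is the offset the next produced record will get,
    // i.e. the highest existing offset + 1.
    static long logEndOffset(long highestOffset) { return highestOffset + 1; }

    // Lag: records that exist but that the group has not committed past yet.
    static long lag(long logEndOffset, long committedOffset) { return logEndOffset - committedOffset; }

    public static void main(String[] args) {
        long committed = committedAfter(19);
        long logEnd = logEndOffset(19);
        System.out.println("committed=" + committed + " logEnd=" + logEnd + " lag=" + lag(logEnd, committed));
        // After ten more records (offsets 20..29) are produced:
        System.out.println("lag after 10 more records = " + lag(logEndOffset(29), committed));
    }
}
```

With offsets 0 to 19 consumed, the committed offset and the log-end offset are both 20, so the lag is 0; producing ten more records raises the lag to 10 until the consumer catches up.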

Produce another ten messages and start the consumer (consumer one) again; it consumes from offset=20 to offset=29:

```
Received message: (null, 1) at partition 0 offset 20
Received message: (null, 2) at partition 0 offset 21
Received message: (null, 3) at partition 0 offset 22
Received message: (null, 4) at partition 0 offset 23
Received message: (null, 5) at partition 0 offset 24
Received message: (null, 6) at partition 0 offset 25
Received message: (null, 7) at partition 0 offset 26
Received message: (null, 8) at partition 0 offset 27
Received message: (null, 9) at partition 0 offset 28
Received message: (null, 10) at partition 0 offset 29
```

4. auto.offset.reset

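```java
props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // latest, earliest
```

Whether auto.offset.reset is set to earliest or latest, the consumer above simply continues according to enable.auto.commit: with enable.auto.commit=true, the same consumer does not re-consume messages it has already consumed after a restart; with enable.auto.commit=false (and no manual commit), it consumes the same messages again after every restart.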

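So what is auto.offset.reset actually for?

It turns out it only matters for a new consumer, meaning a consumer group that has no committed offset for the partition yet (for example, change the GROUP_ID of the consumer above). It also applies when a group's committed offset no longer exists in the log.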
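The rule can be summarised as a small decision function. The sketch below is a simplified model in plain Java (the class `StartOffset` is hypothetical, not the client's internal code): a committed offset always wins, and only when the group has none does the reset policy decide. It ignores the out-of-range case mentioned above and assumes a single partition whose log runs from offset 0 to log-end offset 40, as it does after consumer two has read offsets 0 to 39.

```java
import java.util.Optional;

// Simplified model (not the Kafka client's code) of how a consumer picks its
// starting offset for one partition.
final class StartOffset {
    enum Reset { EARLIEST, LATEST, NONE }

    static long resolve(Optional<Long> committed, Reset policy, long logStart, long logEnd) {
        if (committed.isPresent()) {
            return committed.get();          // existing group: resume where it left off
        }
        switch (policy) {
            case EARLIEST: return logStart;  // oldest offset still in the log
            case LATEST:   return logEnd;    // only records produced from now on
            default: throw new IllegalStateException(
                    "no committed offset and auto.offset.reset=none");
        }
    }

    public static void main(String[] args) {
        System.out.println(resolve(Optional.of(40L), Reset.EARLIEST, 0, 40)); // existing group
        System.out.println(resolve(Optional.empty(), Reset.EARLIEST, 0, 40)); // new group, earliest
        System.out.println(resolve(Optional.empty(), Reset.LATEST, 0, 40));   // new group, latest
    }
}
```

An existing group resumes at 40 regardless of the policy. A new group starts at 0 with earliest and at 40 with latest, and with none the real client raises an error instead of guessing.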

With auto.offset.reset=earliest, the new consumer (consumer two) consumes the topic's messages from the very beginning:

```
Received message: (null, 1) at partition 0 offset 0
Received message: (null, 2) at partition 0 offset 1
Received message: (null, 3) at partition 0 offset 2
Received message: (null, 4) at partition 0 offset 3
Received message: (null, 5) at partition 0 offset 4
Received message: (null, 6) at partition 0 offset 5
Received message: (null, 7) at partition 0 offset 6
Received message: (null, 8) at partition 0 offset 7
Received message: (null, 9) at partition 0 offset 8
Received message: (null, 10) at partition 0 offset 9
Received message: (null, 1) at partition 0 offset 10
Received message: (null, 2) at partition 0 offset 11
Received message: (null, 3) at partition 0 offset 12
Received message: (null, 4) at partition 0 offset 13
Received message: (null, 5) at partition 0 offset 14
Received message: (null, 6) at partition 0 offset 15
Received message: (null, 7) at partition 0 offset 16
Received message: (null, 8) at partition 0 offset 17
Received message: (null, 9) at partition 0 offset 18
Received message: (null, 10) at partition 0 offset 19
Received message: (null, 1) at partition 0 offset 20
Received message: (null, 2) at partition 0 offset 21
Received message: (null, 3) at partition 0 offset 22
Received message: (null, 4) at partition 0 offset 23
Received message: (null, 5) at partition 0 offset 24
Received message: (null, 6) at partition 0 offset 25
Received message: (null, 7) at partition 0 offset 26
Received message: (null, 8) at partition 0 offset 27
Received message: (null, 9) at partition 0 offset 28
Received message: (null, 10) at partition 0 offset 29
Received message: (null, 1) at partition 0 offset 30
Received message: (null, 2) at partition 0 offset 31
Received message: (null, 3) at partition 0 offset 32
Received message: (null, 4) at partition 0 offset 33
Received message: (null, 5) at partition 0 offset 34
Received message: (null, 6) at partition 0 offset 35
Received message: (null, 7) at partition 0 offset 36
Received message: (null, 8) at partition 0 offset 37
Received message: (null, 9) at partition 0 offset 38
Received message: (null, 10) at partition 0 offset 39
```

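With auto.offset.reset=latest, a new consumer starts at the end of the log, i.e. the partition's log-end offset at the moment its position is reset (here offset=40). This is not "where the other consumers stopped", although in this experiment the two happen to coincide.

That is, with ten more messages produced and another new consumer (consumer three) started, it consumes from offset=40. For it to receive offsets 40 to 49, consumer three must already have its position fixed at 40 when those messages are written; a latest consumer that first starts after they are in the log would begin at offset 50 and see none of them.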

```
Received message: (null, 1) at partition 0 offset 40
Received message: (null, 2) at partition 0 offset 41
Received message: (null, 3) at partition 0 offset 42
Received message: (null, 4) at partition 0 offset 43
Received message: (null, 5) at partition 0 offset 44
Received message: (null, 6) at partition 0 offset 45
Received message: (null, 7) at partition 0 offset 46
Received message: (null, 8) at partition 0 offset 47
Received message: (null, 9) at partition 0 offset 48
Received message: (null, 10) at partition 0 offset 49
```
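Because latest pins a new group to the log-end offset at the time its position is first reset, the order of "start consumer three" and "produce ten messages" decides what it sees. The toy timeline below (plain Java, hypothetical class `LatestTiming`, not Kafka code) models just that one decision, with the log ending at offset 40 before the batch of ten is produced.

```java
import java.util.Arrays;

// Toy timeline (not Kafka code) for a brand-new group with
// auto.offset.reset=latest on a single partition.
final class LatestTiming {
    // Returns the offsets the new consumer receives, given the log-end offset
    // before the batch, the batch size, and whether the consumer already had
    // its position fixed when the batch was produced.
    static long[] received(long logEndBefore, int batch, boolean startedBeforeProducing) {
        long logEndAfter = logEndBefore + batch;
        long position = startedBeforeProducing ? logEndBefore : logEndAfter;
        long[] out = new long[(int) (logEndAfter - position)];
        for (int i = 0; i < out.length; i++) {
            out[i] = position + i;
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(received(40, 10, true)));  // started first
        System.out.println(Arrays.toString(received(40, 10, false))); // started afterwards
    }
}
```

Started first, the consumer receives offsets 40 to 49, matching the output above; started afterwards, it receives nothing until new messages arrive at offset 50 and beyond.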
