Design of the Loss Functions

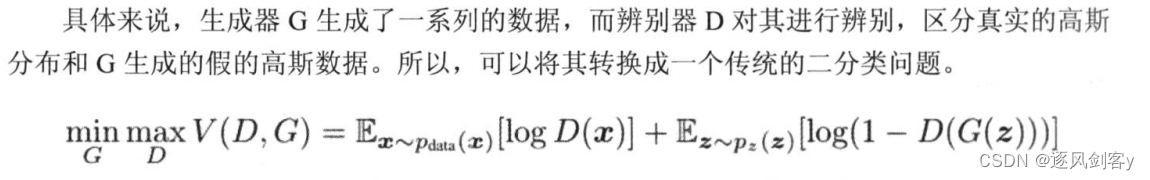
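Written out, the two losses coded in this section are the standard GAN discriminator loss and the non-saturating generator loss. The notation is an assumption added for illustration and is not taken from the figure: $x$ is a real sample, $z$ is the generator's input noise, $G$ is the generator and $D$ is the discriminator, so that `real_output` is $D(x)$ and `fake_output` is $D(G(z))$.

```latex
\begin{aligned}
L_D &= \mathbb{E}\big[-\log D(x) - \log\big(1 - D(G(z))\big)\big] \\
L_G &= \mathbb{E}\big[-\log D(G(z))\big]
\end{aligned}
```

In the code, `tf.reduce_mean` plays the role of the expectation by averaging over the batch.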

d_loss = tf.reduce_mean(-tf.math.log(real_output) - tf.math.log(1 - fake_output))
g_loss = tf.reduce_mean(-tf.math.log(fake_output))

Design of the Generator and Discriminator

class Generator(tf.keras.Model):
    def __init__(self, length, name="generate", **kwargs):
        super(Generator, self).__init__(name=name, **kwargs)
        self.length = length
        # Two fully connected layers of width `length` with a softplus in between
        self.fc1 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="g_fc1")
        self.softplus = layers.Activation('softplus', name="g_softplus")
        self.fc2 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="g_fc2")

    def call(self, inputs, training=None, mask=None):
        x = self.fc1(inputs)
        x = self.softplus(x)
        x = self.fc2(x)
        return x

class Discriminator(tf.keras.Model):
    def __init__(self, length, name="discriminate", **kwargs):
        super(Discriminator, self).__init__(name=name, **kwargs)
        self.length = length
        # Three fully connected layers, each followed by tanh; the last has a single unit
        self.fc1 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="d_fc1")
        self.tanh1 = layers.Activation('tanh', name="d_tanh1")
        self.fc2 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="d_fc2")
        self.tanh2 = layers.Activation('tanh', name="d_tanh2")
        self.fc3 = layers.Dense(1, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="d_fc3")
        self.tanh3 = layers.Activation('tanh', name="d_tanh3")
        self.sigmoid = layers.Activation('sigmoid', name="d_sigmoid")

    def call(self, inputs, training=None, mask=None):
        x = self.fc1(inputs)
        x = self.tanh1(x)
        x = self.fc2(x)
        x = self.tanh2(x)
        x = self.fc3(x)
        # tanh bounds the value to (-1, 1) before the sigmoid turns it into a probability
        x = self.tanh3(x)
        x = self.sigmoid(x)
        return x

generator = Generator(10)
discriminator = Discriminator(10)

# Define optimizers
generator_optimizer = tf.keras.optimizers.Adam(learning_rate=0.0003)
discriminator_optimizer = tf.keras.optimizers.Adam(learning_rate=0.0005)
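One consequence of the discriminator design above is worth spelling out: because `tanh3` is applied before `sigmoid`, the discriminator's output cannot leave the interval from sigmoid(-1) to sigmoid(1), roughly 0.27 to 0.73. The sketch below checks this with the standard library only and evaluates the two loss expressions at those bounds. The helper names (`sigmoid`, `d_loss`, `g_loss`, `d_low`, `d_high`) are illustrative and operate on plain floats rather than tensors.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, the same mapping as the 'sigmoid' activation."""
    return 1.0 / (1.0 + math.exp(-x))


# tanh squashes the output of d_fc3 into (-1, 1), so the sigmoid that
# follows can only produce values between sigmoid(-1) and sigmoid(1).
d_low = sigmoid(-1.0)
d_high = sigmoid(1.0)


def d_loss(real_output: float, fake_output: float) -> float:
    """Per-sample discriminator loss, same expression as in the loss section."""
    return -math.log(real_output) - math.log(1.0 - fake_output)


def g_loss(fake_output: float) -> float:
    """Per-sample generator loss, same expression as in the loss section."""
    return -math.log(fake_output)


# Smallest loss each network can reach given the bounded output.
min_d_loss = d_loss(d_high, d_low)
min_g_loss = g_loss(d_high)

# Loss values when the discriminator outputs 0.5 for every input.
balanced_d_loss = d_loss(0.5, 0.5)
balanced_g_loss = g_loss(0.5)

print(f"discriminator output range: ({d_low:.3f}, {d_high:.3f})")
print(f"minimum d_loss: {min_d_loss:.3f}, balanced d_loss: {balanced_d_loss:.3f}")
print(f"minimum g_loss: {min_g_loss:.3f}, balanced g_loss: {balanced_g_loss:.3f}")
```

The bounded output means the logarithms in the losses never receive 0, so both losses stay finite. It also means neither loss can approach 0, which is worth keeping in mind when reading the training curves.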

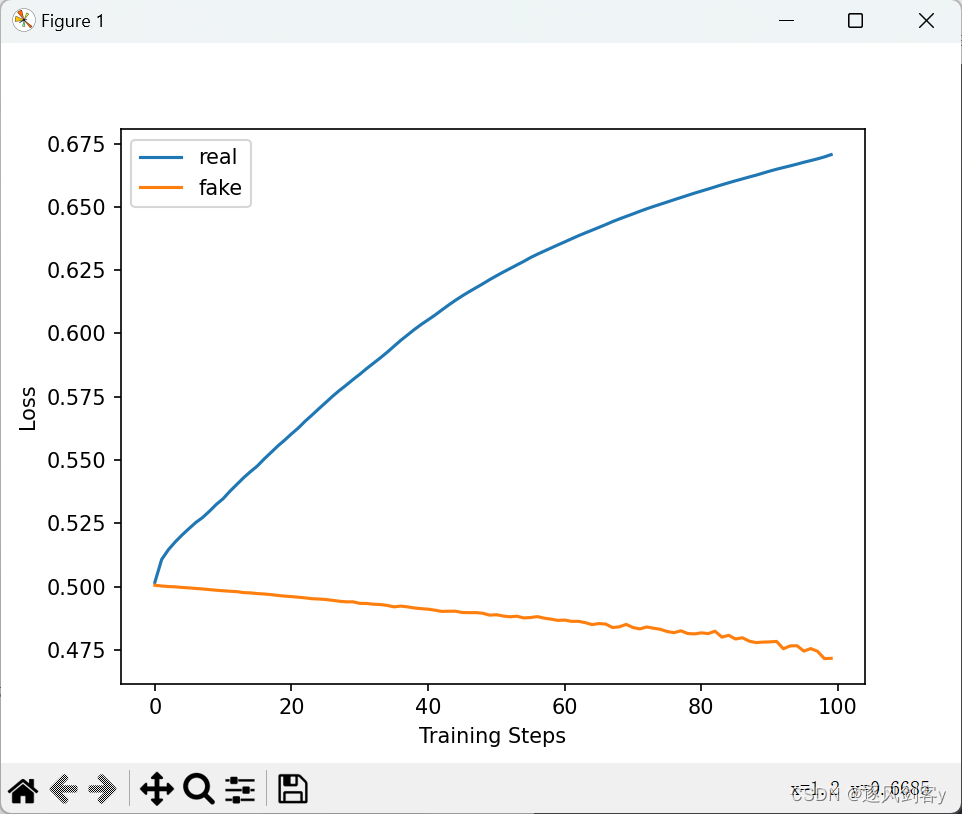

First iteration

After 1000 epochs:

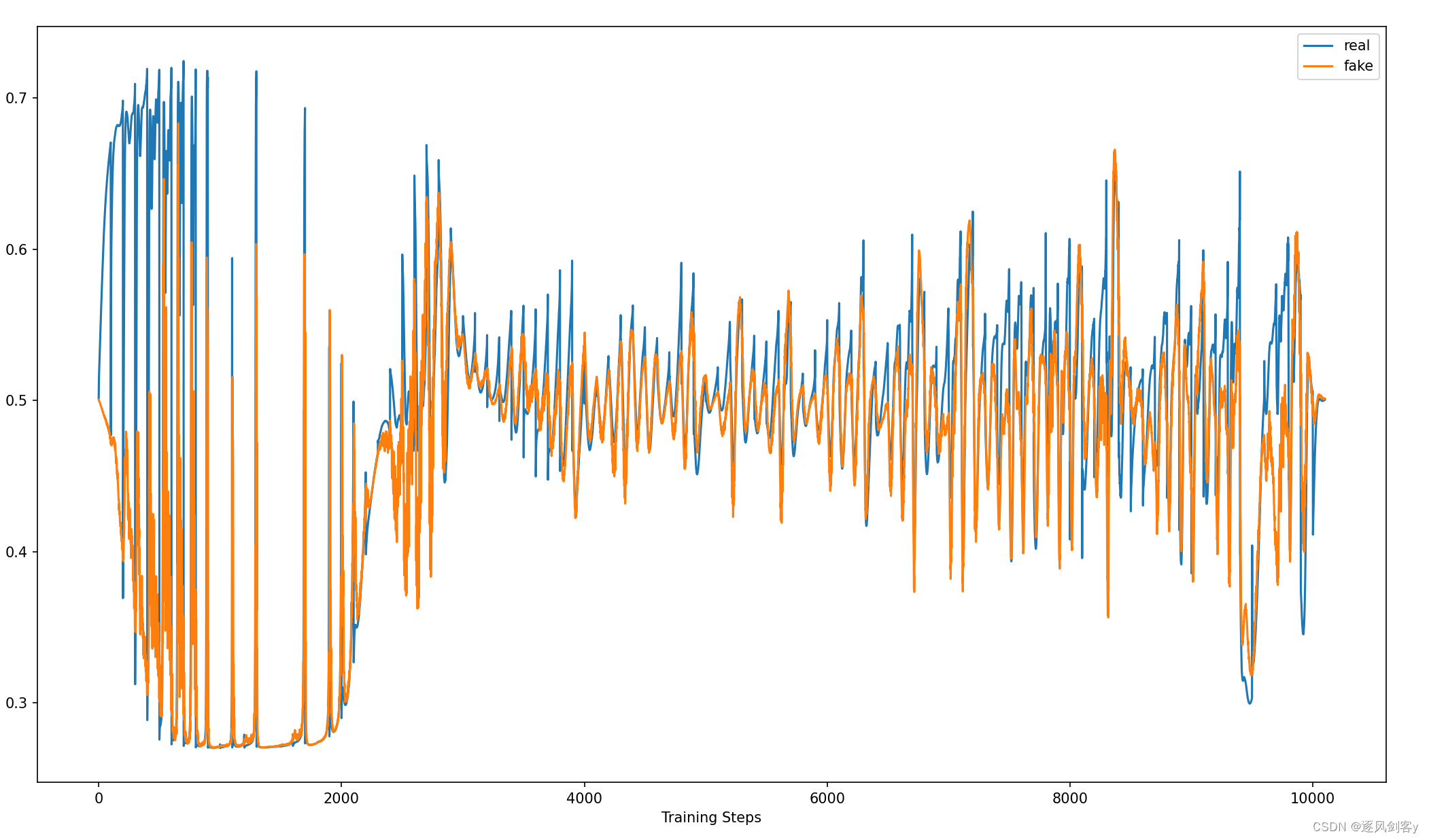

Full Code

import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

import matplotlib
matplotlib.use('TkAgg')  # Use the TkAgg backend (replace with an appropriate backend)
from matplotlib import pyplot as plt

size = 1
length = 1000
logdir_path = "./simple_norm_gan_ckpt/"
Epoch = 1000

# Set random seed for reproducibility
tf.random.set_seed(42)
np.random.seed(42)

# Discriminator outputs recorded at every training step, used for plotting
real = []
fake = []

def sample_data(size=size, length=length):
    # Real data: `size` rows of `length` sorted samples from N(4, 1.5)
    data = []
    for _ in range(size):
        data.append(np.sort(np.random.normal(4, 1.5, length)))
    return np.array(data).astype(np.float32)


def random_data(size=size, length=length):
    # Generator input: `size` rows of `length` uniform samples from [0, 1)
    data = []
    for _ in range(size):
        data.append(np.random.random(length))
    return np.array(data).astype(np.float32)

class Generator(tf.keras.Model):
    def __init__(self, length, name="generate", **kwargs):
        super(Generator, self).__init__(name=name, **kwargs)
        self.length = length
        # Two fully connected layers of width `length` with a softplus in between
        self.fc1 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="g_fc1")
        self.softplus = layers.Activation('softplus', name="g_softplus")
        self.fc2 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="g_fc2")

    def call(self, inputs, training=None, mask=None):
        x = self.fc1(inputs)
        x = self.softplus(x)
        x = self.fc2(x)
        return x

class Discriminator(tf.keras.Model):
    def __init__(self, length, name="discriminate", **kwargs):
        super(Discriminator, self).__init__(name=name, **kwargs)
        self.length = length
        # Three fully connected layers, each followed by tanh; the last has a single unit
        self.fc1 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="d_fc1")
        self.tanh1 = layers.Activation('tanh', name="d_tanh1")
        self.fc2 = layers.Dense(length, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="d_fc2")
        self.tanh2 = layers.Activation('tanh', name="d_tanh2")
        self.fc3 = layers.Dense(1, kernel_initializer=tf.initializers.TruncatedNormal(0.0, 0.1),
                                kernel_regularizer=tf.keras.regularizers.L1L2(l2=0.0001), activation=None, name="d_fc3")
        self.tanh3 = layers.Activation('tanh', name="d_tanh3")
        self.sigmoid = layers.Activation('sigmoid', name="d_sigmoid")

    def call(self, inputs, training=None, mask=None):
        x = self.fc1(inputs)
        x = self.tanh1(x)
        x = self.fc2(x)
        x = self.tanh2(x)
        x = self.fc3(x)
        # tanh bounds the value to (-1, 1) before the sigmoid turns it into a probability
        x = self.tanh3(x)
        x = self.sigmoid(x)
        return x

generator = Generator(10)
discriminator = Discriminator(10)

# Define optimizers
generator_optimizer = tf.keras.optimizers.Adam(learning_rate=0.0003)
discriminator_optimizer = tf.keras.optimizers.Adam(learning_rate=0.0005)

# Training step
def train_step(images, noise):
    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        generated_images = generator(noise, training=True)
        real_output = discriminator(images, training=True)
        fake_output = discriminator(generated_images, training=True)
        # Record the discriminator outputs so they can be plotted later
        real.append(real_output)
        fake.append(fake_output)
        d_loss = tf.reduce_mean(-tf.math.log(real_output) - tf.math.log(1 - fake_output))
        g_loss = tf.reduce_mean(-tf.math.log(fake_output))

    # Each network is updated only with the gradients of its own loss
    gradients_of_generator = gen_tape.gradient(g_loss, generator.trainable_variables)
    gradients_of_discriminator = disc_tape.gradient(d_loss, discriminator.trainable_variables)
    generator_optimizer.apply_gradients(zip(gradients_of_generator, generator.trainable_variables))
    discriminator_optimizer.apply_gradients(zip(gradients_of_discriminator, discriminator.trainable_variables))

def train():
    sample_dat = sample_data()
    random_dat = random_data()
    # Split each (1, 1000) array into 100 chunks of shape (1, 10)
    packed_data_random = [random_dat[:, i:i+10] for i in range(0, random_dat.shape[1], 10)]
    packed_data_sample = [sample_dat[:, i:i+10] for i in range(0, sample_dat.shape[1], 10)]

    for epoch in range(0, Epoch):
        for i in range(0, min(len(packed_data_sample), len(packed_data_random))):
            image = packed_data_sample[i]
            noise = packed_data_random[i]
            image = tf.reshape(image, [1, -1])
            noise = tf.reshape(noise, [1, -1])
            train_step(image, noise)

        # Every 100 epochs, plot the discriminator outputs recorded so far
        if epoch % 100 == 0:
            plt.plot(np.arange(len(np.squeeze(real))), np.squeeze(real), label='real')
            plt.plot(np.arange(len(np.squeeze(fake))), np.squeeze(fake), label='fake')
            plt.xlabel('Training Steps')
            plt.ylabel('Discriminator output')  # real/fake hold discriminator outputs, not loss values
            plt.legend()
            plt.show()

train()

# plt.plot(np.arange(len(real)), real, label='real')
# plt.plot(np.arange(len(fake)), fake, label='fake')
# plt.xlabel('Training Steps')
# plt.ylabel('Loss')
# plt.legend()
# plt.show()

# Suppose your_data is one-dimensional data
# your_data = tf.constant([1.0,1.0,1.0]) # replace with your actual data
#
# # Reshape the 1-D data into 2-D data of shape (1, length)
# input_data = tf.reshape(your_data, [1, -1])
# # Pass it through the fully connected layers
# output = generator(input_data)
# real_output = discriminator(input_data, training=True)
#
# print(tf.shape(generator(output)),output)
#
# print(tf.shape(real_output),real_output)

# sample_dat = sample_data()
# random_dat = random_data()
# print(np.shape(random_dat))
# split_arrays = [random_dat[:, i:i+10] for i in range(0, random_dat.shape[1], 10)]
# packed_data_sample = [sample_dat[i:i + 10] for i in range(0, len(sample_dat), 10)]
# noise = split_arrays[1]
# print(np.shape(noise))
# noise = tf.reshape(noise, [1, -1])
# print(generator(noise))
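The way `train()` feeds data to the networks can be illustrated without TensorFlow. The sketch below is an illustration under stated assumptions, not the author's code: it uses the standard library's `random` module as a stand-in for `sample_data()` and `random_data()`, and the helper `chunk` is a hypothetical name. It shows that the 1000 sorted samples are cut into 100 windows of 10 values, and that window k of the real data is always paired with window k of the noise.

```python
import random

random.seed(42)
length, width = 1000, 10

# Stand-ins for sample_data() and random_data(): sorted draws from
# N(4, 1.5) and unsorted uniform draws from [0, 1).
real_samples = sorted(random.gauss(4, 1.5) for _ in range(length))
noise_samples = [random.random() for _ in range(length)]


def chunk(values, width):
    """Cut a sequence into consecutive windows of `width` items."""
    return [values[i:i + width] for i in range(0, len(values), width)]


real_chunks = chunk(real_samples, width)
noise_chunks = chunk(noise_samples, width)

# Because the real samples are sorted before chunking, every window covers
# a narrow slice of the distribution and the windows never overlap.
ordered = all(
    real_chunks[k][-1] <= real_chunks[k + 1][0]
    for k in range(len(real_chunks) - 1)
)

# One epoch of train() visits the pairs in this fixed order.
pairs = list(zip(real_chunks, noise_chunks))
print(len(pairs), len(pairs[0][0]), ordered)
```

Since each real window is a sorted slice rather than a random draw, the discriminator sees the lowest values first and the highest values last within every epoch.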
